A Multi-Perspective 3D Reconstruction Method with Single Perspective Instantaneous Target Attitude Estimation

Abstract

1. Introduction

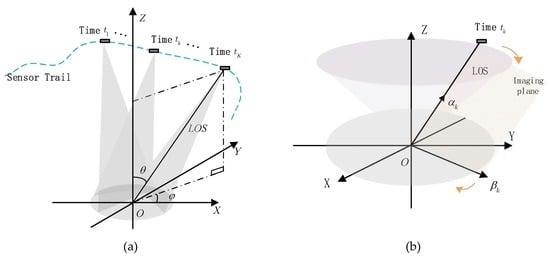

2. Signal Model

3. Theory and Method

3.1. Traditional 3D Geometry Reconstruction Based on Factorization Method
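
In its classic Tomasi-Kanade form, the factorization method is a rank-3 singular value decomposition of a measurement matrix built from scatterer positions tracked across an image sequence. The following Python/NumPy sketch illustrates only that classic step, under assumptions of our own. Each of the F images contributes two rows (for ISAR, taken here as range and cross-range coordinates) for P already-associated scatterers. The function name `factorize` is hypothetical. The metric upgrade that resolves the remaining affine ambiguity, and any cross-range scaling, are omitted. It is not the authors' implementation.

```python
import numpy as np


def factorize(W):
    """Rank-3 factorization of a 2F x P measurement matrix (Tomasi-Kanade style).

    Rows 2f and 2f+1 hold the two image coordinates (assumed here: range and
    cross-range) of the P associated scatterers in image f.
    Returns motion M (2F x 3) and shape S (3 x P), defined only up to an
    invertible 3 x 3 (affine) ambiguity because no metric upgrade is applied.
    """
    # Subtract each row's mean: removes translation so the matrix has rank <= 3
    W_centered = W - W.mean(axis=1, keepdims=True)
    U, s, Vt = np.linalg.svd(W_centered, full_matrices=False)
    # Keep the three dominant singular components and split sqrt(s) evenly
    root = np.sqrt(s[:3])
    M = U[:, :3] * root          # scales column j of U by root[j]
    S = root[:, None] * Vt[:3]   # scales row j of Vt by root[j]
    return M, S


# Synthetic check: a random rigid 3D shape seen under F random orientations
rng = np.random.default_rng(0)
F, P = 8, 20
S_true = rng.standard_normal((3, P))
W = np.vstack([np.linalg.qr(rng.standard_normal((3, 3)))[0][:2] @ S_true
               for _ in range(F)])
M, S = factorize(W)
residual = np.abs(M @ S - (W - W.mean(axis=1, keepdims=True))).max()
print(f"max reconstruction residual: {residual:.2e}")
```

A residual at round-off level confirms that the centred measurement matrix has rank 3. Recovering a Euclidean (rather than affine) shape would additionally require orthonormality constraints on the rows of M.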

3.2. Analysis and Estimation of 3D Reconstruction Attitude

3.3. Joint Multi-Perspective 3D Reconstruction

3.3.1. Target Attitude Relationship

3.3.2. Point Cloud Fusion
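
Suppose the attitude estimated for each perspective yields a rotation relating that perspective's reconstruction to a common target frame. Under that assumption, point cloud fusion reduces to rotating every cloud into the common frame and merging the results. The following Python/NumPy sketch shows only this generic step. Several choices are our assumptions rather than the procedure of this paper: the helper `fuse_point_clouds`, the rotation convention x_k = R_k x, the omission of translation (clouds are taken as already centred on the same reference point), and the grid-based removal of duplicate scatterers.

```python
import numpy as np


def fuse_point_clouds(clouds, rotations, tol=1e-3):
    """Merge per-perspective point clouds into one frame (hypothetical helper).

    clouds:    list of (N_k, 3) arrays, each expressed in perspective k's frame.
    rotations: list of (3, 3) rotation matrices with the assumed convention
               x_k = R_k @ x, i.e. R_k maps common-frame points into frame k.
    tol:       grid size used to drop points that coincide after alignment.
    """
    # Row-vector form of x = R_k.T @ x_k is x_row = x_k_row @ R_k
    aligned = [np.asarray(pts) @ R for pts, R in zip(clouds, rotations)]
    merged = np.vstack(aligned)
    # Snap to a tol-sized grid and keep the first point in each occupied cell
    keys = np.round(merged / tol).astype(np.int64)
    _, first = np.unique(keys, axis=0, return_index=True)
    return merged[np.sort(first)]


# Example: two perspectives that share scatterers 4-6 of a 10-point target
rng = np.random.default_rng(1)
X = rng.standard_normal((10, 3))
R1 = np.eye(3)
R2 = np.array([[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]])  # 90 deg about z
cloud1 = X[:7] @ R1.T   # rows are (R1 @ x).T
cloud2 = X[4:] @ R2.T
fused = fuse_point_clouds([cloud1, cloud2], [R1, R2])
print(fused.shape)
```

Grid snapping is the simplest duplicate test. Two copies of the same scatterer that straddle a cell boundary would survive, so a nearest-neighbour distance check is a more robust alternative when reconstructions are noisy.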

3.4. Algorithm Summary

4. Simulations

4.1. Single Perspective Attitude Estimation

4.2. Multi-Perspective 3D Imaging Results

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Xu, D.; Xing, M.; Xia, X.-G.; Sun, G.-C.; Fu, J.; Su, T. A Multi-Perspective 3D Reconstruction Method with Single Perspective Instantaneous Target Attitude Estimation. Remote Sens. 2019, 11, 1277. https://doi.org/10.3390/rs11111277