Abstract

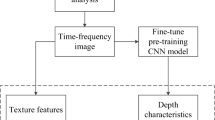

The ability to manipulate objects on the basis of a vision system is essential to robotic applications, and such a system must be both accurate and robust. However, most existing image-based vision systems are limited in their applications because they are sensitive to the external environment, interference, and lighting conditions. In this context, this paper presents a novel and robust data-driven vision system for recognizing different types of objects in various external situations. The system consists of three parts: an integrated module based on the X2 ultra-wideband (UWB) radar, a deep learning (DL) module, and a robot controller. Specifically, the X2 UWB radar module (1) captures and processes the radar echo signals of different objects in various environments and (2) accurately extracts Range-Doppler power spectrograms from the processed signals. The DL module takes these spectrograms as input and classifies the objects. The robot controller then controls the behavior of the robot based on the classification results. The experimental results show that the proposed vision system reliably recognizes six types of objects under four kinds of external environment and lighting conditions with three deep learning network models. Moreover, a comparison of the experimental data shows that the proposed system outperforms the image-based system under the same poor conditions, and that ResNet is the best-performing model for the proposed system in all conditions.
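The abstract does not say how the Range-Doppler power spectrograms are computed, so the following is only a generic sketch of one common approach, not the authors' method. It assumes that each radar frame already holds one complex sample per range bin, and that a Doppler spectrum is obtained by a discrete Fourier transform along slow time (across frames) for each range bin. The function names `dft` and `range_doppler_power_db`, the mean-removal clutter step, and the `floor_db` parameter are all hypothetical choices made for illustration.

```python
import cmath
import math


def dft(samples):
    """Naive discrete Fourier transform (fine for small illustrative inputs)."""
    n = len(samples)
    return [
        sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(samples))
        for k in range(n)
    ]


def range_doppler_power_db(frames, floor_db=-120.0):
    """Return a [range_bin][doppler_bin] power map in dB.

    frames: list of radar frames (slow time); each frame is a list with
    one sample per range bin. This layout is an assumption of the sketch.
    """
    n_frames = len(frames)
    n_bins = len(frames[0])
    split = (n_frames + 1) // 2  # start of the negative-frequency half
    power_map = []
    for r in range(n_bins):
        slow_time = [frames[t][r] for t in range(n_frames)]
        # Subtract the mean to suppress static (zero-Doppler) clutter.
        mean = sum(slow_time) / n_frames
        spectrum = dft([s - mean for s in slow_time])
        # Reorder so that zero Doppler sits in the middle of the axis.
        shifted = spectrum[split:] + spectrum[:split]
        row = []
        for c in shifted:
            p = abs(c) ** 2 / n_frames
            row.append(max(floor_db, 10 * math.log10(p)) if p > 0 else floor_db)
        power_map.append(row)
    return power_map


# Synthetic data: 8 frames, 3 range bins. Bins 0 and 2 hold static clutter;
# bin 1 holds a target whose phase advances 2 cycles over the 8 frames.
n_frames = 8
frames = [
    [1.0, cmath.exp(2j * math.pi * 2 * t / n_frames), 1.0]
    for t in range(n_frames)
]
rd_map = range_doppler_power_db(frames)
peak_bin = rd_map[1].index(max(rd_map[1]))
print("Doppler peak index for range bin 1:", peak_bin)
```

In a pipeline like the one described, a map of this kind would be rendered as an image and passed to the classifier. A real implementation would normally use an FFT library and a window function rather than the naive transform used here.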

Acknowledgements

The work presented in this paper was supported by the National Great Science Specific Project (Grant No. 2015ZX03002008) and the National Natural Science Foundation of China (Grant No. NSFC-61471067).

About this article

Cite this article

Wen, Z., Liu, D., Liu, X. et al. Deep learning based smart radar vision system for object recognition. J Ambient Intell Human Comput 10, 829–839 (2019). https://doi.org/10.1007/s12652-018-0853-9

DOI: https://doi.org/10.1007/s12652-018-0853-9