Abstract

Purpose

We propose a formal framework for the modeling and segmentation of minimally invasive surgical tasks using a unified set of motion primitives (MPs) to enable more objective labeling and the aggregation of different datasets.

Methods

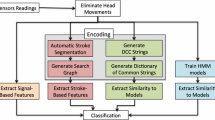

We model dry-lab surgical tasks as finite state machines that represent how executing MPs, the basic surgical actions, changes the surgical context, which characterizes the physical interactions among tools and objects in the surgical environment. We develop methods for labeling surgical context from video data and for automatically translating context into MP labels. We then use our framework to create the COntext and Motion Primitive Aggregate Surgical Set (COMPASS), which includes six dry-lab surgical tasks from three publicly available datasets (JIGSAWS, DESK, and ROSMA), with kinematic and video data as well as context and MP labels.
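The finite-state-machine view described above can be illustrated with a minimal Python sketch. It assumes a simplified, hypothetical context of four fields (the object each tool holds and the object each tool touches, with "" meaning nothing) and a hypothetical MP vocabulary of Grasp, Release, Touch, and Untouch written as Verb(Hand, Object); the actual COMPASS context encoding and MP set are defined in the full paper and may differ. `apply_mp` plays the role of the FSM transition function, and `context_to_mps` inverts it, translating the change between two consecutive context labels into MP labels.

```python
from typing import NamedTuple


# Hypothetical context encoding: per tool, the object held and the object
# touched ("" = nothing). The real COMPASS encoding may differ.
class Context(NamedTuple):
    left_held: str
    left_contact: str
    right_held: str
    right_contact: str


class MP(NamedTuple):
    verb: str  # assumed verb set: Grasp, Release, Touch, Untouch
    hand: str  # "L" or "R"
    obj: str

    def __str__(self) -> str:
        return f"{self.verb}({self.hand}, {self.obj})"


# For each verb: which context attribute it changes and whether it sets the object.
_EFFECTS = {
    "Grasp": ("held", True),
    "Release": ("held", False),
    "Touch": ("contact", True),
    "Untouch": ("contact", False),
}


def apply_mp(ctx: Context, mp: MP) -> Context:
    """FSM transition: executing an MP yields the next context."""
    kind, sets_obj = _EFFECTS[mp.verb]
    side = "left" if mp.hand == "L" else "right"
    return ctx._replace(**{f"{side}_{kind}": mp.obj if sets_obj else ""})


def context_to_mps(prev: Context, curr: Context) -> list[MP]:
    """Translate a change between two context labels into MPs."""
    mps = []
    for hand, side in (("L", "left"), ("R", "right")):
        for kind, on_verb, off_verb in (("held", "Grasp", "Release"),
                                        ("contact", "Touch", "Untouch")):
            before = getattr(prev, f"{side}_{kind}")
            after = getattr(curr, f"{side}_{kind}")
            if before == after:
                continue
            if before:  # the previous object is no longer held/touched
                mps.append(MP(off_verb, hand, before))
            if after:   # a new object is now held/touched
                mps.append(MP(on_verb, hand, after))
    return mps


def transcript(contexts: list[Context]) -> list[str]:
    """Turn a sequence of context labels into an MP transcript."""
    labels = []
    for prev, curr in zip(contexts, contexts[1:]):
        labels.extend(str(mp) for mp in context_to_mps(prev, curr))
    return labels


frames = [
    Context("", "", "", ""),
    Context("", "", "", "needle"),        # right tool touches the needle
    Context("", "", "needle", "needle"),  # right tool grasps the needle
    Context("", "", "", "needle"),        # right tool releases the needle
    Context("", "", "", ""),              # right tool moves away
]
print(transcript(frames))
# ['Touch(R, needle)', 'Grasp(R, needle)', 'Release(R, needle)', 'Untouch(R, needle)']
```

Applying the MPs returned by `context_to_mps` with `apply_mp` reproduces the next context, so the two functions stay consistent. When several fields change in one step, the sketch emits MPs in a fixed left-then-right, held-then-contact order, which is a simplification.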

Results

Our context labeling method achieves near-perfect agreement between consensus labels from crowdsourcing and those from expert surgeons. Segmenting tasks into MPs yields the COMPASS dataset, which nearly triples the amount of data available for modeling and analysis and enables the generation of separate transcripts for the left and right tools.
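The abstract does not name the agreement statistic behind "near-perfect agreement". As an assumption, the sketch below uses Cohen's kappa, a chance-corrected measure for two raters, to compare frame-level context labels from a crowd consensus and an expert consensus; kappa values from 0.81 to 1.00 are conventionally described as "almost perfect" on the Landis and Koch scale. The label strings are toy values, not COMPASS data.

```python
from collections import Counter


def cohens_kappa(a: list[str], b: list[str]) -> float:
    """Chance-corrected agreement between two raters' per-frame labels."""
    if not a or len(a) != len(b):
        raise ValueError("label sequences must be non-empty and equally long")
    n = len(a)
    # Observed agreement: fraction of frames with identical labels.
    p_o = sum(x == y for x, y in zip(a, b)) / n
    # Expected agreement if each rater labeled independently at their own rates.
    count_a, count_b = Counter(a), Counter(b)
    p_e = sum(count_a[k] * count_b[k] for k in count_a) / (n * n)
    if p_e == 1.0:  # both raters used one identical label everywhere
        return 1.0
    return (p_o - p_e) / (1 - p_e)


# Toy per-frame context labels (hypothetical, not COMPASS data).
crowd = ["00000", "00000", "00020", "00020", "02020", "02020"]
expert = ["00000", "00000", "00020", "02020", "02020", "02020"]
print(round(cohens_kappa(crowd, expert), 2))  # 0.75
```

Here the raters agree on 5 of 6 frames (0.83 observed agreement), but chance alone would produce 0.33, so kappa is lower than raw agreement. With more than two raters or missing labels, a statistic such as Krippendorff's alpha would be a more suitable choice.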

Conclusion

The proposed framework results in high-quality labeling of surgical data based on context and fine-grained MPs. Modeling surgical tasks with MPs enables the aggregation of different datasets and the separate analysis of left and right hands for bimanual coordination assessment. Our formal framework and aggregate dataset can support the development of explainable and multi-granularity models for improved surgical process analysis, skill assessment, error detection, and autonomy.
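Assuming, as the separate left and right transcripts suggest, that each MP label names the tool that performs it, one MP transcript can be split into per-hand transcripts for bimanual analysis. The sketch below uses a hypothetical segment format (inclusive start and end frames plus a label of the form Verb(Hand, Object) with Hand in {L, R}) and computes one illustrative bimanual feature, the number of frames in which both tools execute an MP at once; neither the format nor the feature is taken from the paper.

```python
import re
from typing import NamedTuple

# Hypothetical MP label format: Verb(Hand, Object...), Hand in {L, R}.
MP_LABEL = re.compile(r"^(\w+)\((L|R),\s*([^)]+)\)$")


class Segment(NamedTuple):
    start: int  # first frame, inclusive
    end: int    # last frame, inclusive
    label: str


def split_by_hand(transcript: list[Segment]) -> dict[str, list[Segment]]:
    """Separate one MP transcript into left- and right-tool transcripts."""
    tracks: dict[str, list[Segment]] = {"L": [], "R": []}
    for seg in transcript:
        match = MP_LABEL.match(seg.label)
        if match is None:
            raise ValueError(f"unrecognized MP label: {seg.label!r}")
        tracks[match.group(2)].append(seg)
    return tracks


def frame_set(segments: list[Segment]) -> set[int]:
    """All frames covered by the given segments."""
    return {f for s in segments for f in range(s.start, s.end + 1)}


def concurrent_frames(tracks: dict[str, list[Segment]]) -> int:
    """Illustrative bimanual feature: frames where both tools execute an MP."""
    return len(frame_set(tracks["L"]) & frame_set(tracks["R"]))


transcript = [
    Segment(0, 10, "Touch(R, Needle)"),
    Segment(5, 12, "Grasp(L, Thread)"),
    Segment(11, 20, "Grasp(R, Needle)"),
]
tracks = split_by_hand(transcript)
print([s.label for s in tracks["L"]])  # ['Grasp(L, Thread)']
print(concurrent_frames(tracks))       # 8
```

Because the split relies only on the hand argument of each label, the same function would apply unchanged to transcripts from any source dataset that uses a shared MP vocabulary.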

Acknowledgements

This work was supported in part by the National Science Foundation grants DGE-1842490, DGE-1829004, and CNS-2146295 and by the Engineering-in-Medicine center at the University of Virginia. We thank the volunteer labelers, Keshara Weerasinghe, Hamid Roodabeh, Dr. Schenkman, Dr. Cantrell, and Dr. Chen.

Ethics declarations

Competing interests

The authors declare that they have no conflict of interest.

Ethics approval and informed consent

This article does not contain any studies involving human participants performed by any of the authors.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Hutchinson, K., Reyes, I., Li, Z. et al. COMPASS: a formal framework and aggregate dataset for generalized surgical procedure modeling. Int J CARS 18, 2143–2154 (2023). https://doi.org/10.1007/s11548-023-02922-1

DOI: https://doi.org/10.1007/s11548-023-02922-1