Abstract

Voting among different agents is a powerful tool in problem solving, and it has been widely applied to improve performance in finding the correct answer to complex problems. We present a novel benefit of voting that has not been observed before: we can use the voting patterns to assess the performance of a team and predict its final outcome. This prediction can be executed at any moment during problem solving and is completely domain independent. Hence, it can be used to identify when a team is failing, allowing an operator to take remedial actions (such as changing team members, changing the voting rule, or increasing the allocation of resources). We present three main theoretical results: (1) we give a theoretical explanation of why our prediction method works; (2) contrary to what would be expected from a simpler explanation using classical voting models, we show that we can make accurate predictions irrespective of the strength (i.e., performance) of the teams and that, in fact, the prediction can work better for diverse teams composed of different agents than for uniform teams made of copies of the best agent; (3) we show that the quality of our prediction increases with the size of the action space. We perform extensive experimentation in two different domains: Computer Go and Ensemble Learning. In Computer Go, we obtain high-quality predictions about the final outcome of games. We analyze the prediction accuracy for three teams with different levels of diversity and strength, and show that the prediction works significantly better for a diverse team. Additionally, we show that our method still works well when trained with games against one adversary but tested with games against another, showing the generality of the learned functions. Moreover, we evaluate four different board sizes and experimentally confirm better predictions on larger boards. We analyze in detail the learned prediction functions and how they change according to each team and action space size. To show that our method is domain independent, we also present results in Ensemble Learning, where we make online predictions about the performance of a team of classifiers while they vote to classify sets of items. We study a set of classical classification algorithms from machine learning on a dataset of hand-written digits, and we are able to make high-quality predictions about the final performance of two different teams. Since our approach is domain independent, it can be easily applied to a variety of other domains.

1 Introduction

It is well known that aggregating the opinions of different agents can lead to a significant performance improvement when solving complex problems. In particular, voting has been extensively used to improve performance in machine learning [66], crowdsourcing [4, 55], and even board games [57, 64]. Additionally, it is an aggregation technique that does not depend on any domain, which makes it well suited to wide application. However, a team of voting agents will not always be successful in problem solving. It is fundamental, therefore, to be able to quickly assess the performance of teams, so that a system operator can take actions to recover the situation in time. Moreover, complex problems are generally characterized by a large action space, and hence methods that work well in such situations are of particular interest.

Current works in the multi-agent systems literature focus on identifying faulty or erroneous behavior [13, 44, 48, 74], or verifying correctness of systems [24]. Such approaches are able to identify if a system is not operating correctly, but provide no help if a correct system of agents is failing to solve a complex problem. Other works focus on team analysis. Raines et al. [68] present a method to automatically analyze the performance of a team. The method, however, only works offline and needs domain knowledge. Other methods for team analysis are heavily tailored for robot-soccer [69] and focus on identifying opponent tactics [60].

In fact, many works in robotics propose monitoring a team by detecting differences in the internal state of the agents (or disagreements), mostly caused by malfunction of the sensors/actuators [39–42]. In a system of voting agents, however, disagreements are inherent in the coordination process and do not necessarily mean that an erroneous situation has occurred due to such malfunction. Additionally, research in social choice is mostly focused on studying the guarantees of finding the optimal choice given a noise model for the agents and a voting rule [14, 19, 49], but provides no help in assessing the performance of a team of voting agents.

There are also many recent works presenting methods to analyze and/or make predictions about human teams playing sports games. Such works use an enormous amount of data to make predictions about many popular sports, such as American football [34, 67], soccer [10, 52] and basketball [51, 53]. Clearly, however, these works are not applicable to analyzing the performance of a team of voting agents.

Hence, in this paper, we show a novel method to predict the final performance (success or failure) of a team of voting agents, without using any domain knowledge. Therefore, our method can be easily applied in a great variety of scenarios. Moreover, our approach can be quickly applied online at any step of the problem-solving process, allowing a system operator to identify when the team is failing. This can be useful in many applications. For example, consider a complex problem being solved on a cluster of computers. It is undesirable to allocate more resources than necessary, but if we notice that a team is failing in problem solving, we might wish to increase the allocation of resources. Or consider a team playing together a game against an opponent (such as board games, or poker). Different teams might play better against different opponents. Hence, if we notice that a team is predicted to perform poorly, we could dynamically change it. Under time constraints, however, such prediction must be done quickly.

Although related, note that the contribution of this paper is not in using a team of experts to solve a problem or make predictions [15] or aggregating multiple classifiers through voting as in ensemble systems [66]. Our objective is, given a team of voting agents, to make a prediction about such a team, in order to estimate whether they will be able to solve a certain problem or not.

Our approach is based on a prediction model derived from a graphical representation of the problem-solving process, where the final outcome is modeled as a random variable that is influenced by the subsets of agents that agreed together over the actions taken at each step towards solving the problem. Hence, our representation depends uniquely on the coordination method, and has no dependency on the domain. We explain theoretically why we can make accurate predictions, and we also show the conditions under which we can use a reduced (and scalable) representation. Moreover, our theoretical development allows us to anticipate situations that would not be foreseen by a simple application of classical voting theories. For example, our model indicates that the accuracy can be better for diverse teams composed of different agents than for uniform teams, and that we can make equally accurate predictions for teams that have significant differences in playing strength (which is later confirmed in our experiments). We also study the impact of increasing the action space in the quality of our predictions, and show that we can make better predictions in problems with large action spaces.

We present experimental results in two different domains: Computer Go and Ensemble Learning. In the Computer Go domain, we predict the performance of three different teams of voting agents: a diverse, a uniform, and an intermediate team (with respect to diversity); in four different board sizes, and against two different adversaries. We study the predictions at every turn of the games, and compare with an analysis performed by using an in-depth search. We are able to achieve an accuracy of 71 % for a diverse team in \(9\times 9\) Go, and of 81 % when we increase the action space size to \(21\times 21\) Go. For a uniform team, we obtain 62 % accuracy in \(9\times 9\), and 75 % accuracy in \(21\times 21\) Go. We also show that we are still able to make high-quality predictions when training our prediction functions in games against one adversary, but testing them in games against another adversary, demonstrating their generality.

We evaluate different classification thresholds using Receiver Operating Characteristic (ROC) curves, and compare the performance for different teams and board sizes according to the area under the curves (AUC). We experimentally show in such analysis that: (1) we can effectively make high-quality predictions for all teams and board sizes; (2) the quality of our predictions is better for the diverse and intermediate teams than uniform (irrespective of their strength), across all thresholds; (3) the quality of the predictions increases as the board size grows. Moreover, the impact of increasing the action space on the prediction quality occurs earlier in the game for the diverse team than for the uniform team. Finally, we study the learned prediction functions, and how they change across different teams and different board sizes. Our analysis shows that the functions are not only highly non-trivial, but in fact even open new questions for further study.

In the Ensemble Learning domain, we predict the performance of classifiers that vote to assign labels to sets of items. We use scikit-learn’s digits dataset [65], and teams vote to correctly identify hand-written digits. We are also able to obtain high-quality online predictions about the final performance of two different teams of classifiers, showing the applicability of our approach to different domains.

2 Related work

The research outlined in this article is related to several key areas of related work in multi-agent systems and machine learning, including voting, team performance assessment, (human) sports analytics, agent verification, robotics, multi-agent learning, multiple expert systems and ensemble systems.

We first discuss research in voting. Voting is a technique that can be applied in many different domains, such as crowdsourcing [4, 55], board games [56, 57, 64], machine learning [66], and forecasting systems [37]. Voting is very popular since it is highly parallelizable, easy to implement, and provides theoretical guarantees. It is extensively studied in social choice. Normally, it is presented under two different perspectives: as a way to aggregate different opinions, where different voting rules are analyzed to verify if they satisfy a set of axioms that are considered to be important to achieve fairness [63]; or as a way to discover an optimal choice, where different voting rules are analyzed to verify if they converge to always picking the action that has the highest probability of being correct [14, 19, 49].

Since the classical works of Bartholdi et al. [7, 8], many works in social choice also study the computational complexity of computing the winner in elections [2, 3], and/or of manipulating the outcome of an election, in general by disguising an agent’s true preference [18, 23, 79]. There are also works studying the aggregation of partial [77, 78] or non-linear rankings (such as pair-wise comparisons among alternatives) [25], since it could be costly/impossible to request agents for a full linear ranking over all possible actions. Some very recent works in social choice also analyze probabilistic voting rules, where the agents’ votes affect a probability distribution over outcomes [11, 16].

In this work we present a novel perspective to social choice, as instead of studying the computational complexity of manipulation, or the complexity of calculating the winner and the theoretical guarantees of different voting rules, we show here that we can use the voting patterns as a way to assess the performance of a team. Such a “side-effect” of voting has not been observed before, and was never explored in social choice theory and/or applications.

Concerning team assessment, the traditional methods rely heavily on tailoring for specific domains. Raines et al. [68] present a method to build automated assistants for post-hoc, offline team analysis, but domain knowledge is necessary for such assistants. Other methods for team analysis are heavily tailored for robot-soccer, such as Ramos and Ayanegui [69], who present a method to identify the tactical formation of soccer teams (number of defenders, midfielders, and forwards). Mirchevska et al. [60] present a domain independent approach, but they are still focused on identifying opponent tactics, not on assessing the current performance of a team.

Team assessment is also closely related to team formation. Team formation is classically studied as the problem of selecting the team with maximum expected utility for a given task, considering a model of the capabilities of each agent [31, 62]. Under such framework we could directly compute the expected utility of a team for a certain task. However, for many domains we do not have a model of the capabilities of the agents, and we would have to rely on observing the team to estimate its performance, as we propose in this work.

There is also a body of work that focuses on analyzing (and predicting the performance of) human teams playing sports games. For example, Quenzel and Shea [67] learn a prediction model for tied NFL American football games, where they use logistic regression to predict the final winner. They study the coefficients of the regression model to determine which factors affect the final outcome with statistical significance. Heiny and Blevins [34] use discriminant analysis to predict which strategy an American football team will adopt during the game. In soccer, Bialkowski et al. [10] analyze data from games to automatically identify the roles of each player, and Lucey et al. [52] also use logistic regression to predict the likelihood of a shot scoring a goal. We can also find examples in basketball. Maheswaran et al. [53] use a logistic regression model to predict which team will be able to capture the ball in a rebound, while Lucey et al. [51] study which factors are important when predicting whether a team will be able to perform an open three-point shot or not.

In the multi-agent systems community, we can see many recent works that study how to identify agents that present faulty behavior [44, 48, 74]. Other works focus on verifying correct agent implementation [24] or monitoring the violation of norms in an agent system [13]. Some works go beyond the agent-level and verify if the system as a whole conforms to a certain specification [46], or verify properties of an agent system [36]. However, a team can still have a poor performance and fail in solving a problem, even when the individual agents are correctly implemented, no agent presents faulty behavior, and the system as a whole conforms to all specifications.

Sometimes even correct agents might fail to solve a task, especially embodied agents (robots) that could suffer sensing or actuating problems. Kaminka and Tambe [42] present a method to detect clear failures in an agent team by social comparison (i.e., each agent compares its state with its peers). Such an approach is fundamentally different than this work, as we are detecting a tendency towards failure for a team of voting agents (caused, for example, by simple lack of ability, or processing power, to solve the problem), not a clearly problematic situation that could be caused by imprecision/failure of the sensors or actuators of an agent/robot. Later, Kaminka [41], and Kalech and Kaminka [39, 40] study the detection of failures by identifying disagreement among the agents. In our case, however, disagreements are inherent in the voting process. They are easy to detect but they do not necessarily mean that a team is immediately failing, or that an agent presents faulty behavior/perception of the current state.

This work is also related to multi-agent learning [80], but normally multi-agent learning methods are focused on learning how agents should perform, not on team assessment. An interesting approach has recently been presented in Torrey and Taylor [76], where they have studied how to teach an agent to behave in a way that will make it achieve a high utility. Besides teaching agents, it should also be possible to teach agent teams. During the process of teaching, it is fundamental to identify when the system is leading towards failure. Hence, our approach could be integrated within a team teaching framework.

Furthermore, the techniques of making predictions by choosing among multiple experts’ advice [15], or combining multiple predictions using voting in ensemble systems [66], are also related to this work. Combining multiple forecasters or classifiers has been a very active research area. For example, Sylvester and Chawla [73] use a Genetic Algorithm to learn an optimal set of weights when combining multiple classifiers, Chiu and Webb [17] use voting to predict the future actions of an agent, and AL-Malaise et al. [1] use ensembles to predict the performance of a student. Our work, however, is fundamentally different, as we present a technique to predict the final performance of a team of voting agents. Hence, our contribution is not in using a team of agents to make predictions about someone else’s performance. We predict the future performance of our own agent team with a domain independent approach, and we do not use ensembles to perform such prediction. We train a single predictor function and run it a single time for each world state where we wish to perform a prediction.

Being able to analyze the performance of a team is, however, also important in the study of ensemble systems. Team formation is also necessary for ensembles, as we should pick the classifiers that lead to the best performance [28]. Hence, the technique introduced in this paper can also be applied to make predictions about whether a given ensemble will be successful or not in solving a certain classification task.

Finally, it has recently been shown that diverse teams of voting agents are able to outperform uniform teams composed of copies of the best agent [38, 56, 57]. Marcolino et al. [56] show necessary conditions for a diverse team to overcome a uniform team, Marcolino et al. [57] study how the performance of these teams changes as the action space increases, and Jiang et al. [38] analyze asymptotic guarantees for such teams under classical social choice models, besides studying the performance of ranked voting rules. The importance of having diverse teams has also been explored in the social sciences literature, for example in the works of Hong and Page [35], and LiCalzi and Surucu [47], who study models where agents are able to know the utility of the solutions, and the team can simply pick the best solution found by one of its members. None of these previous works, however, dealt with learning a prediction model to estimate online whether a certain team will win or lose a given game. Additionally, we present here an extra benefit of having diverse teams: we show that we can make better predictions of the final performance for diverse teams than for uniform teams. We will, however, build on the model of Marcolino et al. [57] when we show that the prediction quality of our technique increases with the action space size.

3 Prediction method

We start by presenting our prediction method, and in Sect. 4 we will explain why the method works. We consider scenarios where agents vote at every step (i.e., world state) of a complex problem, in order to take common decisions at every step towards problem-solving. Formally, let \(\mathbf {T}\) be a set of agents \(t_i,\,\mathbf {A}\) be a set of actions \(a_j\) and \(\mathbf {S}\) be a set of world states \(s_k\). The agents must vote for an action at each world state, and the team takes the action decided by the plurality voting rule, that picks the action that received the highest number of votes (we assume ties are broken randomly). The team obtains a final reward r upon completing all world states. In this paper, we assume two possible final rewards: “success” (1) or “failure” (0).

We define the prediction problem as follows: without using any knowledge of the domain, identify the final reward that will be received by a team. This prediction must be executable at any world state, allowing a system operator to take remedial procedures in time.

We now explain our algorithm. The main idea is to learn a prediction function, given the frequencies of agreements of all possible agent subsets over the chosen actions. In order to learn such function, we need to define a feature vector to represent each problem solving instance (for example, a game). Our feature vector records the frequency that each subset of agents was the one that determined the action taken by the team (i.e., the subset whose action was selected as the action of the team by the plurality voting rule). When learning the prediction function we calculate the feature vector considering the whole history of the problem solving process (for example, from the first to the last turn of a game). When using the learned prediction function to actually perform a prediction, we can simply compute the feature vector considering all history from the first world state to the current one (for example, from the first turn of a game up to the current turn), and use it as input to our learned function. Note that our feature vector does not hold any domain information, and uses solely the voting patterns to represent the problem solving instances.

Formally, let \(\mathscr {P}(\mathbf {T}) = \{\mathbf {T_1}, \mathbf {T_2}, \ldots \}\) be the power set of the set of agents, \(a_i\) be the action chosen in world state \(s_j\) and \(\mathbf {H_j} \subseteq \mathbf {T}\) be the subset of agents that agreed on \(a_i\) in that world state. Consider the feature vector \(\mathbf {x} = (x_1, x_2, \ldots )\) computed at world state \(s_j\), where each dimension (feature) has a one-to-one mapping with \(\mathscr {P}(\mathbf {T})\). We define \(x_i\) as the proportion of times that the chosen action was agreed upon by the subset of agents \(\mathbf {T_i}\). That is,

\[x_i = \frac{\sum _{s_k \in \mathbf {S_j}} \mathbb {I}(\mathbf {H_k} = \mathbf {T_i})}{|\mathbf {S_j}|},\]

where \(\mathbb {I}\) is the indicator function and \(\mathbf {S_j} \subseteq \mathbf {S}\) is the set of world states from \(s_1\) to the current world state \(s_j\).
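As a minimal sketch of this definition, the following Python function computes the full feature vector from the history of winning subsets. The names `all_subsets`, `full_feature_vector` and `history` are illustrative assumptions, not part of the original method description, and the empty subset is left out because its feature is always zero.

```python
from fractions import Fraction
from itertools import combinations


def all_subsets(agents):
    """Return every non-empty subset of `agents` as a frozenset, smallest first."""
    agents = sorted(agents)
    return [frozenset(c) for r in range(1, len(agents) + 1)
            for c in combinations(agents, r)]


def full_feature_vector(agents, winning_subsets):
    """Map each subset T_i to x_i: the fraction of world states seen so far in
    which exactly T_i voted for the action taken by the team.
    Requires at least one world state in `winning_subsets`."""
    total = len(winning_subsets)
    counts = {subset: 0 for subset in all_subsets(agents)}
    for h in winning_subsets:
        counts[frozenset(h)] += 1
    return {subset: Fraction(n, total) for subset, n in counts.items()}


# Hypothetical history H_1, H_2, H_3 for a team of three agents.
history = [{"t1", "t2"}, {"t1", "t2"}, {"t2", "t3"}]
x = full_feature_vector(["t1", "t2", "t3"], history)
```

Passing only a prefix of `history` gives the feature vector at an earlier world state, which is how the same function would serve both training (full history) and online prediction (history so far).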

Hence, given a set \(\mathbf {\mathbf {X}}\) such that for each feature vector \(\mathbf {\mathbf {x_t}} \in \mathbf {\mathbf {X}}\) we have the associated reward \(r_t\), we can estimate a function, \(\hat{f}\), that returns an estimated reward between 0 and 1 given an input \(\mathbf {\mathbf {x}}\). We classify estimated rewards above a certain threshold \(\vartheta \) (for example, 0.5) as “success”, and below it as “failure”.

In order to learn the classification model, the features are computed at the final world state. That is, the feature vector will record the frequency that each possible subset of agents won the vote, calculated from the first world state to the last one. For each feature vector, we have (for learning) the associated reward: “success” (1) or “failure” (0), according to the final outcome of the problem solving process. In order to execute the prediction, the features are computed at the current world state (i.e., all history of the current problem solving process from the first world state to the current one).

We use classification by logistic regression, which models \(\hat{f}\) as

\[\hat{f}(\mathbf {x}) = \frac{1}{1 + e^{-(\alpha + \varvec{\beta }^T \mathbf {x})}},\]

where \(\alpha \) and \(\varvec{\beta }\) are parameters that will be learned given \(\mathbf {X}\) and the associated rewards. While training, we eliminate two of the features. The feature corresponding to the subset \(\emptyset \) is dropped because an action is chosen only if at least one of the agents voted for it. Also, since the rest of the features sum up to 1, and are hence linearly dependent, we also drop the feature corresponding to all agents agreeing on the chosen action.
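The following sketch shows how a learned logistic model could be evaluated on a feature vector and thresholded into “success” or “failure”. It covers only the evaluation step; training is omitted. The parameter values are hypothetical, and treating an estimate exactly equal to the threshold as “failure” is an assumption of this sketch.

```python
import math


def predict_reward(x, alpha, beta):
    """Logistic model: estimated reward in (0, 1) for feature vector x."""
    z = alpha + sum(b * xi for b, xi in zip(beta, x))
    return 1.0 / (1.0 + math.exp(-z))


def classify(x, alpha, beta, threshold=0.5):
    """Label the estimate 'success' if it is above the threshold, else 'failure'."""
    return "success" if predict_reward(x, alpha, beta) > threshold else "failure"


# Hypothetical parameters for illustration only; real values come from training.
alpha = -1.0
beta = [3.0, 0.5]

label_high = classify([1.0, 0.0], alpha, beta)  # subset 1 always wins the vote
label_low = classify([0.0, 0.0], alpha, beta)   # neither retained subset ever wins
```

Varying `threshold` trades off false alarms against missed failures, which is the quantity explored by a ROC analysis.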

We also study a variant of this prediction method, where we use only information about the number of agents that agreed upon the chosen action, but not which agents exactly were involved in the agreement. For that variant, we consider a reduced feature vector \(\mathbf {y} = (y_1, y_2, \ldots )\), where we define \(y_i\) to be the proportion of times that the chosen action was agreed upon by any subset of i agents:

\[y_i = \frac{\sum _{s_k \in \mathbf {S_j}} \mathbb {I}(|\mathbf {H_k}| = i)}{|\mathbf {S_j}|},\]

where \(\mathbb {I}\) is the indicator function and \(\mathbf {S_j} \subseteq \mathbf {S}\) is the set of world states from \(s_1\) to the current world state \(s_j\). We compare the two approaches in Sect. 5.
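Because each winning subset has exactly one size, the reduced vector can be obtained from the full one by summing the features of all subsets with the same number of agents. The sketch below illustrates this relation; `reduce_features` and the sample values are hypothetical.

```python
from fractions import Fraction


def reduce_features(x):
    """Collapse a full feature vector (subset -> frequency) into the reduced
    one (number of agreeing agents -> frequency)."""
    y = {}
    for subset, freq in x.items():
        y[len(subset)] = y.get(len(subset), 0) + freq
    return y


# Hypothetical full feature vector for a three-agent team (other subsets are zero).
x = {
    frozenset({"t1", "t2"}): Fraction(2, 3),
    frozenset({"t2", "t3"}): Fraction(1, 3),
    frozenset({"t1"}): Fraction(0),
}
y = reduce_features(x)
```

The reduced vector has one entry per team size instead of one per subset, so it grows linearly rather than exponentially with the number of agents.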

3.1 Example of features

We give a simple example to illustrate our proposed feature vectors. Consider a team of three agents: \(t_1,\,t_2,\,t_3\). Let’s assume three possible actions: \(a_1,\,a_2,\,a_3\). Consider that, in three iterations of the problem solving process, the voting profiles were as shown in Table 1, where we show which action each agent voted for at each iteration. Based on plurality voting rule, the action chosen for the respective iterations would be \(a_1,\,a_2\), and \(a_2\).

We can see an example of how the full feature vector will be defined at each iteration in Table 2, where each column represents a possible subset of the set of agents, and we mark the frequency that each subset agreed on the chosen action. Note that the frequency of the subsets \(\{t_1\},\,\{t_2\}\) and \(\{t_3\}\) remains 0 in this example. This happens because we only count the subset where all agents involved in the agreement are present. If there was a situation where, for example, agent \(t_1\) votes for \(a_1\), agent \(t_2\) votes for \(a_2\) and agent \(t_3\) votes for \(a_3\), then we would select one of these agents by random tie breaking. After that, we would increase the frequency of the corresponding subset containing only the agent that was chosen (i.e., either \(\{t_1\},\,\{t_2\}\) or \(\{t_3\}\)).

If the problem has only three iterations in total, we would use the feature vector at the last iteration and the corresponding result (“success”—1, or “failure”—0), while learning the function \(\hat{f}\) (that is, the feature vectors at Iteration 1 and 2 would be ignored). If, however, we already learned a function \(\hat{f}\), then we could use the feature vector at Iteration 1, 2 or 3 as input to \(\hat{f}\) to execute a prediction. Note that at Iteration 1 and 2 the output (i.e., the prediction) of \(\hat{f}\) will be exactly the same, as the feature vector did not change. At Iteration 3, however, we may have a different output/prediction.

In Table 3, we show an example of the reduced feature vector, where the column headings define the number of agents involved in an agreement over the chosen action. We consider here the same voting profiles as before, shown in Table 1. Note that the reduced representation is much more compact, but we have no way to represent the change in which specific agents were involved in the agreement, from Iteration 2 to Iteration 3. In this case, therefore, we would always have the same prediction after Iteration 1, Iteration 2 and also Iteration 3.
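The example can be traced in code. The voting profiles below are hypothetical: they are chosen only to be consistent with the outcomes described in the text (chosen actions \(a_1,\,a_2,\,a_2\), and a change of agreeing agents from Iteration 2 to Iteration 3) and are not necessarily those of Table 1. Subsets with zero frequency are simply left out of the dictionaries, and random tie breaking is not modeled.

```python
from collections import Counter
from fractions import Fraction

# Hypothetical voting profiles (agent -> action), one dict per iteration.
profiles = [
    {"t1": "a1", "t2": "a1", "t3": "a2"},
    {"t1": "a2", "t2": "a2", "t3": "a1"},
    {"t1": "a1", "t2": "a2", "t3": "a2"},
]


def winning_subset(profile):
    """Return (chosen action, agents who voted for it) under plurality voting.
    Assumes a unique winner; ties raise an error in this sketch."""
    tally = Counter(profile.values())
    action, votes = tally.most_common(1)[0]
    if list(tally.values()).count(votes) > 1:
        raise ValueError("tie: random tie breaking is not modeled in this sketch")
    return action, frozenset(agent for agent, a in profile.items() if a == action)


def features_after(k):
    """Full and reduced feature vectors computed from iterations 1..k."""
    winners = [winning_subset(p)[1] for p in profiles[:k]]
    full = {h: Fraction(n, k) for h, n in Counter(winners).items()}
    sizes = Counter(len(h) for h in winners)
    reduced = {m: Fraction(n, k) for m, n in sizes.items()}
    return full, reduced


for k in (1, 2, 3):
    print(k, features_after(k))
```

With these profiles the full vector is identical after Iterations 1 and 2 and changes at Iteration 3, while the reduced vector stays the same throughout, matching the behavior described above.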

4 Theory

We consider here the view of social choice as a way to estimate a “truth”, or the correct (i.e., best) action to perform in a given world state. Hence, we can model each agent as a probability distribution function (pdf): that is, given the correct outcome, each agent will have a certain probability of voting for the best action, and a certain probability of voting for some incorrect action. These pdfs are not necessarily the same across different world states [56]. Hence, given the voting profile in a certain world state, there will be a probability p of picking the correct choice (for example, by the plurality voting rule).

We will start by developing, in Sect. 4.1, a simple explanation of why we can use the voting patterns to predict success or failure of a team of voting agents. Such explanation gives an intuitive idea of why the method works, and it can be immediately derived from the classical voting models. However, it fails to explain some of the results in Sect. 5, and it needs the assumption that plurality is an optimal voting rule. Hence, it is not enough for a deeper understanding of our prediction methodology. Therefore, we will later present, in Sect. 4.2, our main theoretical model, which provides a better understanding of our results. In particular, based on classical models we would expect to make better predictions for teams that have a greater performance (i.e., likelihood of being correct). Our theory and experiments will show, however, that we can actually make better predictions for teams that are more diverse, even if they have a worse performance. Moreover, we will also be able to build on our model, in Sect. 4.3, to study the effect of increasing the action space on the prediction quality.

4.1 Classical voting model

We start with a simple example to show that we can use the outcome of plurality voting to predict success. Consider a scenario with two agents and two possible actions, a correct and an incorrect one. We assume, for this example, that agents have a probability of 0.6 of voting for the correct action and 0.4 of making a mistake.

If both agents vote for the same action, they are either both correct or both wrong. Hence, the probability of the team being correct is given by \(0.6^2 / (0.6^2 + 0.4^2) \approx 0.69\). Therefore, if the agents agree, the team is more likely correct than wrong. If they vote for different actions, however, one will be correct and the other one wrong. Given that profile, and assuming that we break ties randomly, the team will have a 0.5 probability of being correct. Hence, the team has a higher probability of making a correct choice when the agents agree than when they disagree (\(0.69 > 0.5\)). Therefore, if across multiple iterations these agents agree often, the team has a higher probability of being correct across these iterations, and we can predict that the team is going to be successful. If they disagree often, then the probability of being correct across the iterations is lower, and we can predict that the team will not be successful.
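The arithmetic of this two-agent example can be checked with a few lines of Python. The function name is illustrative; the model is the one stated above (two independent agents, two actions, each agent correct with probability p).

```python
def p_correct_given_agreement(p):
    """P(team correct | both agents vote for the same action), for two actions
    and two independent agents that are each correct with probability p."""
    return p**2 / (p**2 + (1 - p)**2)


# When the agents disagree, one is right and one is wrong, and the random
# tie break picks the correct action half of the time.
P_CORRECT_GIVEN_DISAGREEMENT = 0.5

p_agree = p_correct_given_agreement(0.6)
print(round(p_agree, 2), P_CORRECT_GIVEN_DISAGREEMENT)
```

For any p above 0.5 the agreement case yields a probability above 0.5, so the gap between the two cases is not specific to the value 0.6.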

More generally than the previous example, we can consider all cases where plurality is the optimal voting rule. In social choice, optimal voting rules are often studied as maximum likelihood estimators (MLE) of the correct choice [19]. That is, each agent is modeled as having a noisy perception of the truth (or correct outcome). Hence, the correct outcome influences how each agent is going to vote, as shown in the model in Fig. 1. For example, consider a certain agent t that has a probability 0.6 of voting for the best action, and let’s say we are in a certain situation where action \(a^*\) is the best action. In this situation agent t will have a probability of 0.6 of voting for \(a^*\).

Therefore, given a voting profile and a noise model (the probability of voting for each action, given the correct outcome) of each agent, we can estimate the likelihood of each action being the best by a simple (albeit computationally expensive) probabilistic inference. Any voting rule is going to be optimal if it corresponds to always picking the action that has the maximum likelihood of being correct (i.e., the action with maximum likelihood of being the best action), according to the assumed noise model of the agents. That is, the output of an optimal voting rule always corresponds to the output of actually computing, by the probabilistic inference method mentioned above, which action has the highest likelihood of being the best one.

If plurality is assumed to be an optimal voting rule, then the action voted by the largest number of agents has the highest probability of being the optimal action. We can expect, therefore, that the higher the number of agents that vote for an action, the higher the probability that the action is the optimal one. Hence, given two different voting profiles with a different number of agreeing agents, we expect that the team has a higher probability of being correct (and, therefore, of being successful) in the voting profile where a larger number of agents agree on the chosen action.

We formalize this idea in the following proposition, under the classical assumptions of voting models. Specifically, we assume that the agents have a higher probability of voting for the best action than for any other action (which makes plurality an MLE voting rule [49]), that the priors over all actions are uniform, and that all agents are identical and independent.

Proposition 1

The probability that a team is correct increases with the number of agreeing agents m in a voting profile, if plurality is MLE.

Proof

Let \(a^*\) be the best action (whose identity we do not know) and \(\mathbf V = v_1, v_2 \ldots v_n\) be the votes of n agents. The probability of any action a being the best action, given the votes of the agents (i.e., \(P(a = a^* | v_1, v_2 \ldots v_n)\)), is governed by the following relation:
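By Bayes' rule, and in the notation of this proof, the relation can be sketched as:

\[
P(a = a^* \mid v_1, v_2 \ldots v_n) \propto P(v_1, v_2 \ldots v_n \mid a = a^*)\, P(a = a^*)
\]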

Let’s consider two voting profiles \(\mathbf V _1, \mathbf V _2\), where in one a higher number of agents agree on the chosen action than in the other (i.e., \(m_\mathbf{V _1} > m_\mathbf{V _2}\)). Let \(w_1\) be the action with the highest number of votes in \(\mathbf V _1\), and \(w_2\) the one in \(\mathbf V _2\).

Without loss of generality (since the order does not matter), let’s reorder the voting profiles \(\mathbf V _1\) and \(\mathbf V _2\), such that all votes for \(w_1\) are in the beginning of \(\mathbf V _1\) and all votes for \(w_2\) are in the beginning of \(\mathbf V _2\). Now, let \(\mathbf V ^x_1\) and \(\mathbf V ^x_2\) be the voting profiles considering only the first x agents (after reordering).

We have that \(P(\mathbf V ^{m_\mathbf{V _2}}_1| w_1 = a^*) = P(\mathbf V ^{m_\mathbf{V _2}}_2| w_2 = a^*)\), since up to the first \(m_\mathbf{V _2}\) agents for both voting profiles we are considering the case where all agents voted for \(a^*\).

Now, let’s consider the agents from \(m_\mathbf{V _2} + 1\) to \(m_\mathbf{V _1}\). In \(\mathbf V _1\), the voted action of all agents (still \(w_1\)) is wired to \(a^*\) (by the conditional probability). However, in \(\mathbf V _2\), the voted action \(a \ne w_2\) is not wired to \(a^*\) in the conditional probability anymore. As each agent is more likely to vote for \(a^*\) than for any other action, from \(m_\mathbf{V _2} + 1\) to \(m_\mathbf{V _1}\), the events in \(\mathbf V _1\) (an agent voting for \(a^*\)) have a higher probability than the events in \(\mathbf V _2\) (an agent voting for an action \(a \ne a^*\)). Hence, \(P(\mathbf V ^{m_\mathbf{V _1}}_1| w_1 = a^*) > P(\mathbf V ^{m_\mathbf{V _1}}_2| w_2 = a^*)\).

Now let’s consider the votes after \(m_\mathbf{V _1}\). In \(\mathbf V _1\) there are no more votes for \(w_1\), and in \(\mathbf V _2\) there are no more votes for \(w_2\). Hence, all the subsequent votes are not wired to any ranking (as we only wire \(w_1 = a^*\) and \(w_2 = a^*\) in the conditional probabilities). Therefore, each vote can be assigned to any ranking position that is not the first. Since the agents are independent, any sequence of votes will thus be equally likely. Hence, \(P(\mathbf V _1| w_1 = a^*) > P(\mathbf V _2| w_2 = a^*)\).

Since we assume uniform priors, it follows that:
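A sketch of the resulting comparison, treating the normalizing term of the posterior as common to both profiles (an assumption of this sketch), is:

\[
P(w_1 = a^* \mid \mathbf V _1) > P(w_2 = a^* \mid \mathbf V _2)
\]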

Therefore, the team is more likely correct in profiles where a higher number of agents agree. \(\square \)

Hence, if across multiple voting iterations, a higher number of agents agree often, we can predict that the team is going to be successful. If they disagree a lot, we can expect that they are wrong in most of the voting iterations, and we can predict that the team is going to fail.

In the next observation we show that we can increase the prediction accuracy by knowing not only how many agents agreed, but also which specific agents were involved in the agreement. Basically, we show that the probability of a team being correct depends on the agents involved in the agreement. Therefore, if we know that the best agents are involved in an agreement, we can be more certain of a team’s success. This observation motivates the use of the full feature vector, instead of the reduced one.

Observation 1

Given two profiles \(\mathbf V _1, \mathbf V _2\) with the same number of agreeing agents m, the probability that a team is correct is not necessarily equal for the two profiles.

We can easily show this with an example (that is, we only need one example where the probability is not equal to show that it will not always be equal). Consider a problem with two actions. Consider a team of three agents, where \(t_1\) and \(t_2\) have a probability of 0.8 of being correct, while \(t_3\) has a probability of 0.6 of being correct. As the probability of picking the correct action is the highest for all agents, the action chosen by the majority of the agents has the highest probability of being correct (that is, we are still covering a case where plurality is MLE).

However, when only \(t_1\) and \(t_2\) agree, the probability that the team is correct is given by: \(0.8^2\times 0.4{/}(0.8^2\times 0.4 + 0.2^2\times 0.6) = 0.91\). When only \(t_2\) and \(t_3\) agree, the probability that the team is correct is given by: \(0.8\times 0.6\times 0.2{/}(0.8\times 0.6\times 0.2 + 0.2\times 0.4\times 0.8) = 0.60\). Hence, the probability that the team is correct is higher when \(t_1\) and \(t_2\) agree than when \(t_2\) and \(t_3\) agree.
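The posteriors in this example can be checked numerically. The following minimal Python sketch assumes two actions, uniform priors and independent agents; the function name `posterior_correct` and the zero-based agent indices are illustrative choices, not part of the model.

```python
from math import prod


def posterior_correct(accuracies, agreeing):
    """P(chosen action is correct | who voted for it), for a two-action problem.

    accuracies[i] is the probability that agent i votes for the correct action.
    `agreeing` holds the indices of the agents that voted for the chosen action;
    every other agent voted for the remaining action.
    """
    # Chosen action is correct: agreeing agents are right, the others are wrong.
    right = prod(p if i in agreeing else 1 - p for i, p in enumerate(accuracies))
    # Chosen action is wrong: agreeing agents are wrong, the others are right.
    wrong = prod(1 - p if i in agreeing else p for i, p in enumerate(accuracies))
    return right / (right + wrong)


team = [0.8, 0.8, 0.6]                         # t1, t2, t3
p_t1_t2 = posterior_correct(team, {0, 1})      # only t1 and t2 agree
p_t2_t3 = posterior_correct(team, {1, 2})      # only t2 and t3 agree
p_pair = posterior_correct([0.6, 0.6], {0, 1})  # two agents of accuracy 0.6 agreeing
```

The last call corresponds to the earlier two-agent example, where agreement gives a probability of about 0.69 of being correct.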

However, based solely on the classical voting models, one would expect that given two different teams, the predictions would be more accurate for the one that has greater performance (i.e., likelihood of being correct), as we formalize in the following proposition. Therefore, this model fails to explain our experimental results (as we will show later). We will use the term strength to refer to a team’s performance.

Proposition 2

Under the classical voting models, given two different teams, one can expect to make better predictions for the strongest one.

Proof Sketch Under the classical voting models, assuming the agents have a noise model such that plurality is an MLE, the better team will have a greater probability of being correct, given a voting profile where m agents agree, than a worse team with the same number m of agreeing agents.

Hence, the probability of the best team being correct will be closer to 1 in comparison with the probability of the worse team being correct. The closer the probability of success is to 1, the easier it is to make predictions. Consider a Bernoulli trial with probability of success \(p \approx 1\). In the learning phase, we will see many successes accordingly. In the testing phase, we will predict the majority of the two for every trial, and we will go wrong only with probability \(|1-p| \approx 0\).

Of course, we could also have an extremely weak team that is wrong most of the time. For such a team, it would also be easy to predict that the probability of success is close to 0. Notice, however, that we are assuming here the classical voting models, where plurality is an MLE. In such models, the agents must play “reasonably well”: classically they are assumed either to have a probability of being correct greater than 0.5, or to have the best action as the most likely vote in their pdf [49]. Otherwise, plurality is not going to be an MLE. \(\square \)

Consider, however, that the strongest team is composed of copies of the best agent (which would often be the case, under the classical assumptions). In fact, such agents will not necessarily have noise models (pdfs) where the best action has the highest probability in all world states. In some world states, a suboptimal action could have the highest probability, making the agents agree on the same mistakes [38, 56]. Therefore, when plurality is not actually an MLE in all world states, Proposition 1 will not hold in the world states where this happens. Hence, we will predict that the team made a correct choice when the team was actually wrong, which reduces our accuracy. We give more details in the next section.

4.2 Main theoretical model

We now present our main theory, that holds irrespective of plurality being an optimal voting rule (MLE) or not. Again, we consider agents voting across multiple world states. We assume that all iterations equally influence the final outcome, and that they are all independent.

Let the final reward of the team be defined by a random variable W, and let the number of world states be S. We model the problem solving process by the graphical model in Fig. 2, where \(\mathbf {H}_j\) represents the subset of agents that agreed on the chosen action at world state \(s_j\). That is, we assume that the subset of agents that decided the action taken by the team at each world state of the problem solving process will determine whether the team will be successful or not in the end. A specific problem (for example, Go games where the next state will depend on the action taken in the current one) would call for more complex models to be completely represented. Our model is a simplification of the problem solving process, abstracting away the details of specific problems.

For any subset \(\mathbf {H}\), let \(P(\mathbf {H})\) be the probability that the chosen action was correct given the subset of agreeing agents. If the correct action is \(a^*,\,P(\mathbf {H})\) is equivalent to:

where \(\mathbf {H}\) is the subset of agents which voted for the action taken by the team.

Note that \(P(\mathbf {H})\) depends on both the team and the world state. However, we marginalize the probabilities to produce a value that is an average over all world states. We consider that, for a team to be successful, there is a threshold \(\delta \) such that:
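A form of this condition consistent with the exponent \(1/S\) and the product of probabilities discussed below is the following sketch, which we take to be Eq. 2 (the strict inequality is an assumption):

\[
\Big( \prod _{j=1}^{S} P(\mathbf {H}_j) \Big)^{1/S} > \delta
\]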

We use the exponent 1/S in order to maintain a uniform scale across all problems. Each problem might have a different number of world states; and for one with many world states, it is likely that the incurred product of probabilities is sufficiently low to fail the above test, independent of the actual subsets of agents that agreed upon the chosen actions. However, the final reward is not dependent on the number of world states.

We can show, then, that we can use a linear classification model (such as logistic regression) that is equivalent to Eq. 2, to predict the final reward of a team.

Theorem 1

Given the model in Eq. 2, the final outcome of a team can be predicted by a linear model over agreement frequencies.

Proof

Taking the \(\log \) of both sides of Eq. 2, we have:
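Assuming Eq. 2 compares the geometric mean of the \(P(\mathbf {H}_j)\) with \(\delta \), the logarithm turns the product into an average of logs, sketched as:

\[
\frac{1}{S} \sum _{j=1}^{S} \log P(\mathbf {H}_j) > \log \delta
\]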

The sum over the steps (world states) of the problem-solving process can be transformed to a sum over all possible subsets of agents that can be encountered, \(\mathscr {P}\):
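Grouping identical subsets, and writing \(n_{\mathbf {H}}\) for the count defined immediately below, a sketch of the resulting inequality (which we take to be Eq. 3) is:

\[
\sum _{\mathbf {H} \in \mathscr {P}} \frac{n_{\mathbf {H}}}{S} \log P(\mathbf {H}) > \log \delta
\]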

where \(n_{\mathbf {H}}\) is the number of times the subset of agreeing agents \(\mathbf {H}\) was encountered during problem solving. Hence, \(\frac{n_{\mathbf {H}}}{S}\) is the frequency of seeing the subset \(\mathbf {H}\), which we will denote by \(f_{\mathbf {H}}\).

Recall that \(\mathbf {T}\) is the set of all agents. Hence, \(f_{\mathbf {T}}\) (which is the frequency of all agents agreeing on the same action), is equal to \(1 - \sum _{\mathbf {H} \in \mathscr {P} \setminus \{\mathbf {T}\}} f_{\mathbf {H}}\). Also, note that \(n_\emptyset = 0\), since at least one agent must pick the chosen action. Equation 3 can, hence, be rewritten as:
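Assuming Eq. 3 is the frequency-weighted sum of \(\log P(\mathbf {H})\) compared with \(\log \delta \), substituting the expression for \(f_{\mathbf {T}}\) gives the following sketch:

\[
\sum _{\mathbf {H} \in \mathscr {P} \setminus \{\mathbf {T}\}} f_{\mathbf {H}} \log P(\mathbf {H}) + \Big( 1 - \sum _{\mathbf {H} \in \mathscr {P} \setminus \{\mathbf {T}\}} f_{\mathbf {H}} \Big) \log P(\mathbf {T}) > \log \delta
\]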

Hence, our final model will be:
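Collecting the \(\log P(\mathbf {T})\) terms gives a form consistent with the constant and the coefficients named in the next paragraph (a sketch, under the assumption that success means the geometric mean of the \(P(\mathbf {H}_j)\) exceeds \(\delta \)):

\[
\sum _{\mathbf {H} \in \mathscr {P} \setminus \{\mathbf {T}\}} f_{\mathbf {H}} \log \Big( \frac{P(\mathbf {H})}{P(\mathbf {T})} \Big) > \log \Big( \frac{\delta }{P(\mathbf {T})} \Big)
\]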

Note that \(\log \big (\frac{\delta }{P(\mathbf {T})}\big )\) and the “coefficients” \(\log \big (\frac{P(\mathbf {H})}{P(\mathbf {T})}\big )\) are all constants with respect to a given team, as we have discussed earlier. Considering the set of all \(f_{\mathbf {H}}\) (for each possible subset of agreeing agents \(\mathbf {H}\)) to be the characteristic features of a single problem, the coefficients can now be learned from training data that contains many problems represented using these features. Further, the outcome of a team can be estimated through a linear model. \(\square \)
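The equivalence used in this proof can be checked numerically. The minimal Python sketch below assumes that success is defined by the geometric mean of the \(P(\mathbf {H}_j)\) exceeding \(\delta \); the probabilities in `P`, the threshold values and all function names are hypothetical. In practice the coefficients are not known and would be learned from training data (for example, by logistic regression) rather than computed from known probabilities.

```python
from collections import Counter
from math import log, prod


def agreement_frequencies(history):
    """Map each agreeing subset H to f_H = n_H / S over the S world states."""
    total = len(history)
    return {subset: n / total for subset, n in Counter(history).items()}


def geometric_mean_rule(history, P, delta):
    """Success test on the raw sequence: geometric mean of P(H_j) above delta."""
    return prod(P[subset] for subset in history) ** (1 / len(history)) > delta


def linear_rule(history, P, delta, team):
    """Same test as a linear function of the agreement frequencies f_H."""
    freqs = agreement_frequencies(history)
    score = sum(
        f * log(P[subset] / P[team])
        for subset, f in freqs.items()
        if subset != team  # f_T is eliminated via f_T = 1 - sum of the others
    )
    return score > log(delta / P[team])


team = frozenset({0, 1, 2})
pair = frozenset({0, 1})
single = frozenset({2})
P = {team: 0.9, pair: 0.7, single: 0.3}  # hypothetical P(H) values
history = [team, pair, team, single]      # agreeing subset at each world state

results = {
    delta: (geometric_mean_rule(history, P, delta), linear_rule(history, P, delta, team))
    for delta in (0.6, 0.7)
}
```

Both rules give the same decision for each threshold, which is the content of the theorem: the features are the frequencies \(f_{\mathbf {H}}\), and the decision boundary is linear in them.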

The number of constants, however, grows exponentially with the size of the team. Therefore, in the following corollary, we show that (under some conditions) we can approximate the prediction well with a reduced feature vector that grows linearly. In order to distinguish between the possible subsets, we will denote by \(\mathbf {H}^i\) a certain subset \(\in \mathscr {P}\), and by \(|\mathbf {H}^i|\) the size of that subset (i.e., the number of agents that agree on the chosen action).

Corollary 1

If \(P(\mathbf {H}^i) \approx P(\mathbf {H}^j)\forall \mathbf {H}^i, \mathbf {H}^j\) such that \(|\mathbf {H}^i| = |\mathbf {H}^j|\), we can approximate the prediction with a reduced feature vector, that grows linearly with the number of agents. Furthermore, in a uniform team the reduced representation is equal to the full representation.

Proof

By the assumption of the corollary, there is a \(P_{\mathbf {H}'^n}\), defined as \(P_{\mathbf {H}'^n} \approx P(\mathbf {H}^j)\), \(\forall \mathbf {H}^j\) such that \(|\mathbf {H}^j| = n\). Let \(f_n = \sum f_{\mathbf {H}^j}\), over all \(|\mathbf {H}^j| = n\). Also, let \(\mathbf {N'}\) be the set of all integers \(0< x < N\), where N is the number of agents. We thus have that:
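A sketch of this approximation (which we take to be Eq. 5), assuming the full model is a sum of \(f_{\mathbf {H}} \log (P(\mathbf {H})/P(\mathbf {T}))\) over the subsets other than \(\mathbf {T}\), is:

\[
\sum _{\mathbf {H} \in \mathscr {P} \setminus \{\mathbf {T}\}} f_{\mathbf {H}} \log \Big( \frac{P(\mathbf {H})}{P(\mathbf {T})} \Big) \approx \sum _{n \in \mathbf {N'}} f_n \log \Big( \frac{P_{\mathbf {H}'^n}}{P(\mathbf {T})} \Big)
\]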

As \(P_{\mathbf {H}'^n}\) depends only on the number of agents, we have that such representation grows linearly with the size of the team.

Moreover, note that for a team made of copies of the same agent, we have that \(P_{\mathbf {H}'^n} = P(\mathbf {H}^j),\,\forall \mathbf {H}^j\) such that \(|\mathbf {H}^j| = n\). Hence, the left hand side of Eq. 5 is going to be equal to the right hand side. \(\square \)
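The reduced feature vector can be computed directly from the sizes of the agreeing subsets. The following Python sketch is illustrative only; the function name `reduced_features` and the toy history are assumptions.

```python
from collections import Counter


def reduced_features(history, team_size):
    """Return [f_1, ..., f_{N-1}]: frequency of each agreement size below N.

    history holds the agreeing subset at each world state; only its size is used,
    so the vector has N - 1 entries instead of one entry per subset.
    """
    total = len(history)
    sizes = Counter(len(subset) for subset in history)
    return [sizes.get(n, 0) / total for n in range(1, team_size)]


history = [
    frozenset({0, 1, 2}),  # all agents agree
    frozenset({0, 1}),
    frozenset({0, 1, 2}),
    frozenset({2}),
]
features = reduced_features(history, team_size=3)
```

As in the full representation, the frequency of complete agreement is left out because it is determined by the remaining frequencies.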

Also, notice that our model does not need any assumptions about plurality being an optimal voting rule (MLE). In fact, there are no assumptions about the voting rule at all. Hence, Proposition 1, Observation 1 and Proposition 2 do not apply, and we can still make accurate predictions irrespective of the performance of a team.

We can also note that the accuracy of the predictions is not going to be the same across different teams, and we may actually be able to have better predictions for a lower performing team. The learned constants \(\hat{c}_{\mathbf {H}} \approx \log \left( \frac{P(\mathbf {H})}{P(\mathbf {T})}\right) \) represent the marginal probabilities across all world states. However, Marcolino et al. [56] and Jiang et al. [38] show that the agent with the highest marginal probability of voting for the correct choice will not necessarily have the highest probability of being correct at all world states. In such world states, the agents tend to agree over the incorrect action that has the highest probability, which will cause problems in our accuracy. Hence, in the following observation, we show that it is possible to make better predictions for a diverse team that has a lower playing performance than a uniform team made of copies of the best agent.

Observation 2

There exists one diverse and one uniform team (made of copies of the best agent), such that the diverse team leads to better prediction accuracy, even though it has a worse playing performance.

We can easily show this with an example. Let’s consider the teams shown in Table 4, where each agent has a probability 1 of voting for the action shown in the cell, for each world state. We consider that the team will be successful when picking \(a_1\), and will fail otherwise. We assume that one problem solving instance consists of a single world state, which may be either State 1, State 2 or State 3, each with a 1/3 probability. We also consider that the teams play by using plurality voting.

Let’s first discuss the uniform team [Table 4(a)]. Given enough training samples, the best predictor that can be created for such a team is to predict success when all four agents agree. However, such a predictor will be correct only in 2/3 of the problem instances, as it will fail in problems consisting of State 3. We can also notice that the uniform team will be successful in 2/3 of the problem instances.

Let’s consider now the diverse team in Table 4(b). With enough training samples, the best predictor that can be learned is to predict success when three agents agree, and failure otherwise. This predictor will be correct in all problem instances, even though the team itself will only be successful in 1/3 of the problem instances.

Although we only give an existence result here, in Sect. 5 we experimentally show a statistically significantly higher accuracy for the predictions for a diverse team than for a uniform team, even though they have similar strength (i.e., performance in terms of winning rates). We are able to achieve a better prediction for diverse teams both at the end of the problem-solving process and while doing online predictions at any world state.

4.3 Action space size

We present now our study concerning the quality of the predictions over large action space sizes. In order to perform this analysis, we assume the spreading tail (ST) agent model, presented in Marcolino et al. [57]. The ST agent model was developed to study how teams of voting agents change in performance as the size of the action space increases. The basic assumption is that the pdf of each member of the team has a non-zero probability over an increasingly larger number of suboptimal actions as the action space grows, while the probability of voting for the optimal action remains unchanged. Marcolino et al. [57] perform an experimental validation of this model in the Computer Go domain.

For completeness, we briefly summarize here the formal definition of the ST agent model, and we refer the reader to Marcolino et al. [57] for a more detailed description. Let \(\mathbf {D_m}\) be the set of suboptimal actions (\(a_j, j \ne 0\)) assigned with a nonzero probability in the pdf of an agent i, and \(d_m = |\mathbf {D_m}|\). The ST model assumes that there is a bound in the ratio of the suboptimal action with highest probability and the one with lowest nonzero probability, i.e., let \(p_{i,min} = min_{j \in \mathbf {D_m}} p_{i,j}\) and \(p_{i,max} = max_{j \in \mathbf {D_m}} p_{i,j}\); there is a constant \(\zeta \) such that \(p_{i,max} \le \zeta p_{i,min} \forall \) agents i. ST agents are agents whose \(d_m\) is non-decreasing on m and \(d_m \rightarrow \infty \) as \(m \rightarrow \infty \). Marcolino et al. [57] consider that there is a constant \(\epsilon > 0\), such that for all ST agents \(i,\,\forall m,\,p_{i,0} \ge \epsilon \). They also assume that \(p_{i,0}\) does not change with m.

Let the size of the action space \(|\mathbf {A}| = \rho \), and \(p_{i,j}\) be the probability that agent i votes for action with rank j. Marcolino et al. [57] show that when \(\rho \rightarrow \infty \), the probability that a team of n ST agents will play the optimal action converges to:
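A form consistent with the term-by-term description that follows, assuming that ties among n distinct votes are broken uniformly at random (so that a lone vote for the optimal action loses with probability \((n-1)/n\)), can be sketched as Eq. 6:

\[
1 - \prod _{i=1}^{n} (1 - p_{i,0}) - \sum _{i=1}^{n} \Big( p_{i,0}\, \frac{n-1}{n} \prod _{j=1, j \ne i}^{n} (1 - p_{j,0}) \Big)
\]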

that is, the probability of two or more agents agreeing over suboptimal actions converges to zero, and the agents can only agree over the optimal choice (note that a suboptimal action can still be taken as we may have situations where no agent agrees). Hence, Eq. 6 calculates the total probability minus the cases where the best action is not chosen: the second term covers the case where all agents vote for a suboptimal action and the third term covers the case where one agent votes for the optimal action and all other agents vote for suboptimal actions.

Before proceeding to our study, we make a few definitions and then two weak assumptions. We now consider any action space size. Let \(\alpha \) be the probability of a team taking the optimal action when all agents disagree. Since we can only take the optimal action if one agent votes for that action, \(\alpha \) is a function of the probability of each agent voting for the optimal action. That is, we may have voting profiles where all agents disagree and no agent voted for the optimal action, or we may have voting profiles where all agents disagree, but there is one agent that voted for the optimal action (and, hence, we may still take the optimal action due to random tie breaking).

Let \(\beta \) be the probability of a team taking the optimal action when there is some agreement on the voting profile. \(\beta \) may be different according to each voting profile, but we assume that we always have that \(\beta < 1\) if \(\rho < \infty \), and \(\beta = 1\) if \(\rho \rightarrow \infty \), according to the ST agent model. That is, if two or more agents agree, there is always some probability \(q > 0\) that they are agreeing over a suboptimal action, and \(q \rightarrow 0\) as \(\rho \rightarrow \infty \).

We will make the following weak assumptions: (1) If there is no agreement, the team is more likely to take a suboptimal action than an optimal action. I.e., \(\alpha < 1 - \alpha \); (2) If there is agreement, there is at least one voting profile where the team is more likely to take an optimal action than a suboptimal action. That is, there is at least one \(\beta \) such that \(\beta > 1 - \beta \).

Assumption (1) is weak, since \(\alpha < 1/n\) (as we break ties randomly and there may be cases where no agent votes for the optimal action). Clearly \(1/n < 1 - 1/n\) for \(n > 2\). Assumption (2) is also weak, because if we are given a team that is always more likely to take suboptimal actions than an optimal action for any voting profile, then a trivial predictor that always outputs “failure” would be optimal (and, hence, we would not need a prediction at all). Therefore, assumptions (1) and (2) are satisfied for all situations of interest. We present now our result:

Theorem 2

Let \(\mathbf {T}\) be a set of ST agents. The quality of our prediction about the performance of \(\mathbf {T}\) is the highest as \(\rho \rightarrow \infty \).

Proof

Let’s fix the problem to predicting performance at one world state. Hence, as we consider a single decision, there is a single \(\mathbf {H}^i\) such that \(f_{\mathbf {H}^i} = 1\), and \(f_{\mathbf {H}^j} = 0\forall j \ne i\). In order to simplify the notation, we denote by \(\mathscr {H}\) the subset \(\mathbf {H}^i\) corresponding to \(f_{\mathbf {H^i}}=1\). We also consider the performance of the team as “success” on that fixed world state if they take the optimal action, and as “failure” otherwise.

Let a voting event be the process of querying the agents for the vote, obtaining the voting profile and the corresponding final decision. Hence, it has a unique correct label (“success” or “failure”). A voting event \(\xi \) will be mapped to a point \(\chi \) in the feature space, according to the subset of agents that agreed on the chosen action. Multiple voting events, however, will be mapped to the same point \(\chi \) (as exactly the same subset can agree in different situations, sometimes they may be agreeing over the optimal action, and sometimes they may be agreeing over suboptimal actions). Hence, given a point \(\chi \), there is a certain probability that the team was successful, and a certain probability that the team failed. Therefore, by assigning a label to that point, our predictor will also be correct with a certain probability. With enough data, the predictor will output the more likely of the two events. That is, if given a profile, the team has a probability p of taking the optimal action, and \(p > 1-p\), the predictor will output “success”, and it will be correct with probability p. Correspondingly, if \(1 - p > p\), the predictor will output “failure”, and it will be correct with probability \(1 - p\). Hence, the probability of the prediction being correct will be \(\max (p,1-p)\).

We first study the probability of making a correct prediction across the whole feature space, for different action space sizes, and after that we will focus on what happens with the specific voting events as the action space changes.

Let us start by considering the case when \(\rho \rightarrow \infty \). By Eq. 6, we know that every time two or more agents agree on the same action, that action will be the optimal one. Note that this is a very clear division of the feature space, as for every single point where \(|\mathscr {H}| \ge 2\) the team will be successful with probability 1. Therefore, on this subspace we can make perfect predictions.

The only points in the feature space where a team may still take a suboptimal action are the ones where a single agent agrees on the chosen action, i.e., \(|\mathscr {H}| = 1\). Hence, for such points we will make a correct prediction with probability \(\max (\alpha ,1-\alpha )\).

Let’s now consider cases with a smaller action space size (i.e., \(\rho < \infty \)). Let’s first consider the subspace \(|\mathscr {H}| \ge 2\). Before, our predictor was correct with probability 1. Now, given a voting event where there is an agreement, there will be a probability \(\beta < 1\) of the team taking the optimal action. Hence, the predictor will be correct with probability \(\max (\beta ,1 - \beta )\), but \(\max (\beta , 1 - \beta ) < 1\).

Let’s consider now the subspace \(|\mathscr {H}| = 1\). Here the quality of the prediction depends on \(\alpha \), which is a function of the probability of each agent playing the best action. On the ST agent model, however, the probability of one agent voting for the best action is independent of \(\rho \) [57]. Hence, \(\alpha \) does not depend on the action space size, and for these cases the quality of our prediction will be the same as before. Therefore, for all points in the feature space, the probability of making a correct prediction is either the same or worse when \(\rho < \infty \) than when \(\rho \rightarrow \infty \).

However, that does not complete the proof yet, because a voting event \(\xi \) may map to a different point \(\chi \) when the action space changes. For instance, the number of agents that agree over a suboptimal action may surpass the number of agents that agree on the optimal action as the action space changes from \(\rho \rightarrow \infty \) to \(\rho < \infty \). Therefore, we need to show that our prediction will be strictly better when \(\rho \rightarrow \infty \) irrespective of such mapping.

Hence, let us now study the voting events. As the number of actions decreases, a certain voting event \(\xi \) when \(\rho \rightarrow \infty \) will map to a voting event \(\xi '\) when \(\rho < \infty \) (where \(\xi \) may or may not be equal to \(\xi '\)). Let \(\chi \) and \(\chi '\) be the corresponding points in the feature space for \(\xi \) and \(\xi '\). Also, let \(\mathscr {H}\) and \(\mathscr {H'}\) be the respective subsets of agreeing agents. Let’s consider now the four possible cases:

(1) \(|\mathscr {H}| = |\mathscr {H'}| = 1\). For such events, the performance of the predictor will remain the same, that is, for both cases we will make a correct prediction with probability \(\max (\alpha ,1-\alpha )\). Note that this case will not happen for all events, as \(p_{i,0} \not \rightarrow 0\) when \(\rho \rightarrow \infty \), hence there will be at least one event where \(|\mathscr {H}| \ge 2\).

(2) \(|\mathscr {H}| \ge 2,\,|\mathscr {H'}| \ge 2\). For such events the performance of the predictor will be higher when \(\rho \rightarrow \infty \), as we can make a correct prediction for a point \(\chi \) with probability 1, while for a point \(\chi '\) with probability \(\max (\beta , 1 - \beta ) < 1\).

(3) \(|\mathscr {H}| \ge 2,\,|\mathscr {H'}| = 1\). This case will not happen under the ST agent model. If there was a certain subset \(\mathbf {H}\) of agreeing agents when \(\rho \rightarrow \infty \), when we decrease the number of actions the new subset of agreeing agents \(\mathbf {H'}\) will either have the same size or will be larger. This follows from the fact that we may have a larger subset agreeing over some suboptimal action when the action space decreases, but the original subset that voted for the optimal action will not change.

(4) \(|\mathscr {H}| = 1,\,|\mathscr {H'}| \ge 2\). We know that in this case \(\xi '\) is an event where the team fails (otherwise the same subset would also have agreed when \(\rho \rightarrow \infty \)). Hence, for \(1 - \alpha > \alpha \) [weak assumption (1)], we make a correct prediction for such case when \(\rho \rightarrow \infty \). When \(\rho < \infty \), we make a correct prediction if \(1 - \beta > \beta \). \(\beta \), however, depends on the voting profile of the event \(\xi '\). By weak assumption (2), there will be at least one event where the team is more likely to be correct than wrong (that is, \(\beta > 1 - \beta \)). Hence, there will be at least one event where our predictor changes from making a correct prediction (when \(\rho \rightarrow \infty \)) to making an incorrect prediction (when \(\rho < \infty \)).

Hence, for all voting events, the probability of making a correct prediction will either be the same or worse when \(\rho < \infty \) than when \(\rho \rightarrow \infty \), and there will be at least one voting event where it will be worse, completing the proof. Hence, \(\rho \rightarrow \infty \) is strictly the best case for our prediction. As we assume that all world states are independent, if \(\rho \rightarrow \infty \) is the best case for a single world state, it will also be the best case for a set of world states. \(\square \)

5 Results

5.1 Computer Go

We first test our prediction method in the Computer Go domain. We use four different Go programs: Fuego 1.1 [26], Gnu Go 3.8 [27], Pachi 9.01 [9], MoGo 4 [30], and two (weaker) variants of Fuego (Fuego\(\varDelta \) and Fuego\(\varTheta \)), for a total of six different, publicly available agents. Fuego is the strongest agent among all of them [56]. The description of Fuego\(\varDelta \) and Fuego\(\varTheta \) is available in Marcolino et al. [57].

We study three different teams: Diverse, composed of one copy of each agent (Footnote 1); Uniform, composed of six copies of the original Fuego (initialized with different random seeds, as in Soejima et al. [71]); Intermediate, composed of six random parametrized versions of Fuego (from Jiang et al. [38]). In all teams, the agents vote together, playing as white, in a series of Go games against the original Fuego playing as black. We study four different board sizes for diverse and uniform: \(9\times 9\), \(13\times 13\), \(17\times 17\) and \(21\times 21\). For intermediate, we study only \(9\times 9\), since the random parametrizations of Fuego do not work on larger boards. In Go a player is allowed to place a move at any empty intersection of the board, so the largest number of possible actions at each board size is, respectively: 81, 169, 289, 441. Computation for the work described in this paper was supported by the University of Southern California’s Center for High-Performance Computing (http://hpcc.usc.edu). We ran the Go games in HP DL165 machines, each with 12 2.33 GHz cores, and 48GB of RAM.

In order to evaluate our predictions, we use a dataset of 1000 games for each team and board size combination (for a total of 9000 games, all played from the beginning). For all results, we used repeated random sub-sampling validation. We randomly assign 20% of the games to the testing set (and the rest to the training set), keeping approximately the same ratio of outcomes as in the original distribution. The whole process is repeated 100 times. Hence, in all graphs we show the average results, and the error bars show the 99% confidence interval (\(p = 0.01\)), according to a t test. If the error bars cannot be seen at a certain point in a graph, it is because they are smaller than the symbol used to mark that point in the graph. Moreover, when we say that a certain result is significantly better than another, we mean statistically significantly better, according to a t test where \(p < 0.01\), unless we explicitly give a p value.
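The validation procedure can be sketched in Python as follows. This is a minimal illustration using only the standard library, not the code used in the experiments; the function names, the `evaluate` callback and the toy labels (600 wins and 400 losses) are assumptions, and the confidence interval computation is omitted.

```python
import random


def stratified_split(labels, test_fraction, rng):
    """Return (train_idx, test_idx), drawing test_fraction of each class at random."""
    by_class = {}
    for idx, label in enumerate(labels):
        by_class.setdefault(label, []).append(idx)
    train, test = [], []
    for indices in by_class.values():
        shuffled = indices[:]
        rng.shuffle(shuffled)
        k = round(len(shuffled) * test_fraction)
        test.extend(shuffled[:k])
        train.extend(shuffled[k:])
    return train, test


def repeated_subsampling(labels, evaluate, repeats=100, test_fraction=0.2, seed=0):
    """Average evaluate(train_idx, test_idx) over independent random splits."""
    rng = random.Random(seed)
    scores = []
    for _ in range(repeats):
        train, test = stratified_split(labels, test_fraction, rng)
        scores.append(evaluate(train, test))
    return sum(scores) / len(scores), scores


labels = [1] * 600 + [0] * 400  # hypothetical outcomes: 1 = win, 0 = loss


def majority_baseline(train, test):
    """Toy evaluator: always predict the majority outcome of the training set."""
    wins = sum(labels[i] for i in train)
    guess = 1 if wins * 2 >= len(train) else 0
    return sum(labels[i] == guess for i in test) / len(test)


mean_accuracy, scores = repeated_subsampling(labels, majority_baseline)
```

Any predictor can be plugged in through `evaluate`; the majority baseline is used here only because its accuracy on a stratified split is known in advance.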

First, we show the winning rates of the teams in Fig. 3. This result does not yet evaluate the quality of our prediction; it is merely background information that we will use when analyzing our prediction results later. On \(9\times 9\) Go, uniform is better than diverse with statistical significance (\(p = 0.014\)), and both teams are clearly significantly better than intermediate (\(p < 2.2\times 10^{-16}\)). On \(13\times 13\) and \(17\times 17\) Go, the difference between diverse and uniform is not statistically significant (\(p = 0.9619\) and \(p = 0.5377\), respectively). On \(21\times 21\) Go, the diverse team is significantly better than uniform (\(p = 0.03897\)).

In order to verify our online predictions, we used the evaluation of the original Fuego, but we give it a time limit \(50\times \) longer (i.e., it runs for 500 s per evaluation) (Footnote 2). We will refer to this version as “Baseline”. We then use the Baseline’s evaluation of a given board state to estimate its probability of victory, allowing a comparison with our approach. Considering that an evaluation above 0.5 is “success” and below is “failure”, we compare our predictions with the ones given by the Baseline’s evaluation, at each turn of the games.

We use this method because the likelihood of victory changes dynamically during a game. That is, a team could be in a winning position at a certain stage, after making several good moves, but suddenly change to a losing position after committing a mistake. Similarly, a team could be in a losing position after several good moves from the opponent, but suddenly change to a winning position after the opponent makes a mistake. Therefore, simply comparing with the final outcome of the game would not be a good evaluation. However, for the interested reader, we show in the “Appendix” how the evaluation looks when comparing with the final outcome of the game, and we note here that our prediction quality is still high under that alternative evaluation method.

Since the games have different lengths, we divide all games into 20 stages, and show the average evaluation of each stage, in order to be able to compare the evaluation across all games uniformly. Therefore, a stage is defined as a small set of turns (on average, \(1.35 \pm 0.32\) turns in \(9\times 9\); \(2.76 \pm 0.53\) in \(13\times 13\); \(4.70 \pm 0.79\) in \(17\times 17\); \(7.85 \pm 0.87\) in \(21\times 21\)). For all games, we also skip the first 4 moves, since our baseline returns corrupted information at the beginning of the games.
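The division into stages can be sketched as follows. This is only one possible way to implement it: we assume here that the per-turn values remaining after the skipped moves are cut into 20 contiguous chunks of near-equal size. The exact boundaries are not specified above, and the function names are hypothetical.

```python
def split_into_stages(values, n_stages=20, skip=4):
    """Split per-turn values into n_stages contiguous chunks.

    The first `skip` entries are dropped. Chunk boundaries use integer
    division, so chunk sizes differ by at most one (an assumption of this
    sketch).
    """
    usable = values[skip:]
    n = len(usable)
    stages = []
    for s in range(n_stages):
        start = s * n // n_stages
        end = (s + 1) * n // n_stages
        stages.append(usable[start:end])
    return stages


def stage_averages(values, n_stages=20, skip=4):
    """Average each stage; a stage with no turns yields None."""
    return [
        sum(chunk) / len(chunk) if chunk else None
        for chunk in split_into_stages(values, n_stages, skip)
    ]
```

With this scheme, every game contributes exactly 20 stage values regardless of its length, which is what allows games of different lengths to be compared stage by stage.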

We will present our results in five different sections. First, we will show the analysis for a fixed threshold (that is, the value \(\vartheta \) above which the output of our prediction function \(\hat{f}\) will be considered “success”). Second, we will analyze different thresholds using receiver operating characteristic (ROC) curves, and the area under such curves (AUC). Third, we will present the results for different board sizes. Fourth, we will study the performance of the reduced feature vector. Finally, we will show results when playing against a different adversary.

5.1.1 Single threshold analysis

We start by showing our results for a fixed threshold, since it gives a more intuitive understanding of the quality of our prediction technique. Hence, in these results we will consider that when our prediction function \(\hat{f}\) returns a value above 0.5 for a certain game, it will be classified as “success”, and when the value is below 0.5, it will be classified as “failure”. In this section we also restrict ourselves to the \(9\times 9\) Go case.

We will evaluate our prediction results according to five different metrics: Accuracy, Failure Precision, Success Precision, Failure Recall, Success Recall. Accuracy is defined as the sum of the true positives and true negatives, divided by the total number of tests (i.e., true positives, true negatives, false positives, false negatives). Hence, it gives an overall view of the quality of the classification. Precision gives the percentage of data points classified with a certain label (“success” or “failure”) that are correctly labeled. Recall denotes the percentage of data points that truly pertain to a certain label, that are correctly classified.
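For concreteness, the five metrics can be computed from the confusion counts as in the following sketch, where “success” is treated as the positive label. The function name and the dictionary keys are our own choices for illustration.

```python
def prediction_metrics(predicted, actual):
    """Compute the five metrics; inputs are sequences of booleans (True = success)."""
    pairs = list(zip(predicted, actual))
    tp = sum(1 for p, a in pairs if p and a)          # success predicted, success
    tn = sum(1 for p, a in pairs if not p and not a)  # failure predicted, failure
    fp = sum(1 for p, a in pairs if p and not a)      # success predicted, failure
    fn = sum(1 for p, a in pairs if not p and a)      # failure predicted, success

    def ratio(num, den):
        # Undefined when no item falls in the denominator's category.
        return num / den if den else float("nan")

    return {
        "accuracy": ratio(tp + tn, tp + tn + fp + fn),
        "success_precision": ratio(tp, tp + fp),
        "success_recall": ratio(tp, tp + fn),
        "failure_precision": ratio(tn, tn + fn),
        "failure_recall": ratio(tn, tn + fp),
    }
```

Note that failure precision and failure recall are simply the precision and recall obtained when “failure” is taken as the label of interest.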

We show the results in Fig. 4. As we can see, we were able to obtain a high accuracy very quickly, already crossing the 0.5 line in the 2nd stage for all teams. In fact, the accuracy is significantly higher than the 0.5 mark for all teams after the 2nd stage (and for diverse and intermediate since the 1st stage).

From around the middle of the games (stage 10), the accuracy for diverse and uniform already gets close to 60% (with intermediate only close behind). Although we can see some small drops (which could be explained by sudden changes in the game), overall the accuracy increases with the game stage number, as expected. Moreover, for most of the stages, the accuracy is higher for diverse than for uniform. The prediction for diverse is significantly better than for uniform in 90% of the stages. It is also interesting to note that the prediction for intermediate is significantly better than for uniform in 60% of the stages, even though intermediate is a significantly weaker team. In fact, we can see that in the last stage, the accuracy, the failure precision and the failure recall are significantly better for intermediate than for the other teams.

5.1.2 Multiple threshold analysis

We now measure our results under a variety of thresholds (that is, the value \(\vartheta \) above which the output of our prediction function \(\hat{f}\) will be considered “success”). In order to perform this study, we use receiver operating characteristic (ROC) curves. An ROC curve shows the true positive and the false positive rates of a binary classifier at different thresholds. The true positive rate is the number of true positives divided by the sum of true positives and false negatives (i.e., the total number of items of a given label). That is, the true positive rate shows the percentage of “success” cases that are correctly labeled as such. The false positive rate, on the other hand, is the number of false positives divided by the sum of false positives and true negatives (i.e., the total number of items that are not of a given label). That is, the false positive rate shows the percentage of “failure” cases that are wrongly classified as “success”.
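The construction of an ROC curve from the definitions above can be sketched as follows. This is an illustrative implementation only. The function name is hypothetical, and we use each distinct score as a candidate threshold with a “greater than or equal” comparison, which yields the same set of points as placing a strict threshold just below each score.

```python
def roc_points(scores, labels):
    """Return the (false positive rate, true positive rate) points of an ROC curve.

    scores: predicted values, where higher means more likely "success".
    labels: booleans, True for a true "success" case.
    Assumes both labels occur at least once.
    """
    positives = sum(1 for y in labels if y)
    negatives = len(labels) - positives
    points = [(0.0, 0.0)]  # threshold above every score: nothing labeled "success"
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y)
        fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and not y)
        points.append((fp / negatives, tp / positives))
    return points
```

Lowering the threshold can only add items to the “success” class, so both rates are non-decreasing along the returned list, ending at the point (1, 1).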

Hence, ROC curves allow us to better understand the trade-off between the true positives and the false positives of a given classifier. By varying the threshold, we can increase the number of items that receive a given label, thereby increasing the number of items of such label that are correctly classified, but at the cost of also increasing the number of items that incorrectly receive the same label.

The ideal classifier is the one at the upper left corner of the ROC graph. This point (true positive rate \(=\) 1, false positive rate \(=\) 0) indicates that every item classified with a certain label truly pertains to such label, and every item that truly pertains to such label receives the correct classification.

In this paper, we do not aim only at studying the performance of a single classifier, but rather at comparing the performance of our prediction technique in a variety of situations, changing the team and the action space size (i.e., the board size). Hence, we also study the area under the ROC curve (AUC), as a way to synthesize the quality information from the curve into a single number. The higher the AUC, the better the prediction quality of our technique in a given situation. That is, a completely random classifier would have an AUC of 0.5, and as the ROC curve moves towards the top-left corner of the graph, the AUC gets closer and closer to 1.0.

In fact, the AUC metric has been shown to be equal to the probability of, given a pair of items with different labels (one “success” case and one “failure” case, in our situation), correctly considering the item that was truly a “success” as more likely to be a “success” case than the other item. It has also been shown to be related to other important statistical metrics, such as the Wilcoxon test of ranks, the Mann–Whitney U and the Gini coefficient [32, 33, 59].
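The two views of the AUC mentioned above (area under the curve, and probability of correctly ranking a “success”/“failure” pair) can be checked against each other with a short sketch. The function names are hypothetical, and counting tied scores as half a correct ranking is the usual convention, which we assume here.

```python
def auc_pairwise(scores, labels):
    """AUC as the fraction of (success, failure) pairs that are ranked correctly."""
    pos = [s for s, y in zip(scores, labels) if y]
    neg = [s for s, y in zip(scores, labels) if not y]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half (assumed convention)
    return wins / (len(pos) * len(neg))


def auc_trapezoid(points):
    """Area under an ROC curve given as (false positive rate, true positive rate) points."""
    pts = sorted(points)
    return sum(
        (x1 - x0) * (y0 + y1) / 2.0
        for (x0, y0), (x1, y1) in zip(pts, pts[1:])
    )
```

For example, with scores 0.9, 0.8, 0.7, 0.6 and true labels success, failure, success, failure, three of the four success/failure pairs are ranked correctly, and both functions return 0.75 (the second when given the corresponding ROC points).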

We start by studying multiple thresholds in a fixed board size (\(9\times 9\)), and in the next section we study the effect of increasing the action space. Hence, in Fig. 5 we show the ROC curves for all teams in the \(9\times 9\) Go games, for 4 different stages of the game. Although at the beginning of the game (stage 5) it is hard to distinguish the results for the different teams, we can note that from the middle of the games to the end (stages 15 and 20), there is a clear distinction between the prediction quality for diverse and intermediate, when compared with the one for uniform, across many different thresholds.

As mentioned, to formally compare these results across all stages we use the AUC metric. Hence, in Fig. 6, we show the AUC for the three different teams in \(9\times 9\) Go. As we can see, the three teams have similar AUCs up to stage 10, but from that stage on we obtain better AUCs for both diverse and intermediate, significantly outperforming the AUC for uniform in all of these later stages. We also find that, considering all stages, we have a significantly better AUC for diverse (than for uniform) in 85% of the cases, and for intermediate in 55% of the cases. Curiously, we can also note that even though intermediate is the weakest team, we can obtain for it the (significantly) best AUC in the last stage of the games, surpassing the AUC found for the other teams. Overall, these results show that our hypothesis that we can make better predictions for teams that have higher diversity holds irrespective of the threshold used for classification. In the next section, we study the effect of increasing the action space size.

5.1.3 Action space size

Let us start by showing, in Fig. 7, ROC curves for diverse and uniform under different board sizes. It is harder to distinguish the curves on stages 5 and 10, but we can notice that the curve for \(21\times 21\) tends to dominate the others on stages 15 and 20. Again, to better study these results, we look at how the AUC changes for different teams and board sizes.

In Fig. 8 we can see the AUC results. For the diverse team (Fig. 8a), we start observing the effect of increasing the action space after stage 5, when the curves for \(17\times 17\) and \(21\times 21\) tend to dominate the other curves. In fact, the AUC for \(17\times 17\) is significantly better than for smaller boards in 60% of the stages, and in 80% of the stages after stage 5. Moreover, after stage 5 no smaller board is significantly better than \(17\times 17\). Concerning \(21\times 21\), we can see that from stage 14, its curve completely dominates all the other curves. In all stages from 14 to 20 the result for \(21\times 21\) is significantly better than for all smaller boards. Hence, we can note that the effect of increasing the action space seems to depend on the stage of the game, and it gets more evident as the stage number increases.

Concerning the uniform team (Fig. 8b), up to \(17\times 17\) we cannot observe a positive impact of the action space size on the prediction quality; but for \(21\times 21\) there is clearly an improvement from the middle game when compared with smaller boards. In all 8 stages from stage 13 to stage 20, the result for \(21\times 21\) is significantly better than for the other board sizes.

In terms of percentage of stages where the result for \(21\times 21\) is significantly better than for \(9\times 9\), we find that it is 40 % for the uniform team, while it is 85 % for the diverse team. Hence, the impact of increasing the action space occurs for diverse earlier in the game, and over a larger number of stages.

Now, in order to compare the performance for diverse and uniform under different board sizes, we show the AUCs in Fig. 9 organized by the size of the board (Fig. 6 is repeated here in Fig. 9a to make it easier to observe the difference between board sizes). It is interesting to observe that the quality of the predictions for diverse is better than for uniform, irrespective of the size of the action space. Moreover, while for \(9\times 9\) and \(13\times 13\) the prediction for diverse is consistently significantly better than for uniform only after around stage 10, we can notice that for \(17\times 17\) and \(21\times 21\), the prediction for diverse is always significantly better than for uniform, irrespective of the stage (except for stage 1 in \(17\times 17\)). In fact, we can also show that the difference between the teams is greater on larger boards. In Fig. 10 we can see the difference between diverse and uniform, in terms of area under the AUC graph, and also in terms of percentage of stages where diverse is significantly better than uniform, for \(9\times 9\) and \(21\times 21\). The difference between the areas in \(9\times 9\) and \(21\times 21\) is statistically significant, with \(p = 0.0003337\).

We study again the accuracy, precision and recall in \(21\times 21\), as the results in these metrics may be more intuitive to understand for most readers (although limited to a fixed threshold, in this case 0.5). We show these results in Fig. 11, where for comparison we also plot the results for \(9\times 9\). For the diverse team, the accuracy in \(21\times 21\) is consistently significantly better than in \(9\times 9\), in all stages from stage 12. We also have a significantly better accuracy in the first 5 stages. Concerning uniform, the accuracy for \(21\times 21\) is only consistently better from stage 15 (and also in stage 1). Hence, again, we can notice a better prediction quality on larger boards, and also a higher impact of increasing the action space size on diverse than on uniform (in terms of number of stages, and also how early the performance improves).

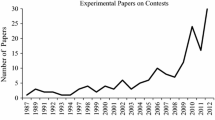

5.1.4 Reduced feature vector