Abstract

This paper introduces a novel approach to evaluate performance in the executive functioning skills of bilingual and monolingual children. This approach targets method- and analysis-specific issues in the field, which has reached an impasse (Antoniou et al., 2021). This study moves beyond the traditional approach towards bilingualism by using an array of executive functioning tasks and frontier methodologies, which allow us to jointly consider multiple tasks and metrics in a new measure; technical efficiency (TE). We use a data envelopment analysis technique to estimate TE for a sample of 32 Greek–English bilingual and 38 Greek monolingual children. In a second stage, we compare the TE of the groups using an ANCOVA, a bootstrap regression, and a k-means nearest-neighbour technique, while controlling for a range of background variables. Results show that bilinguals have superior TE compared to their monolingual counterparts, being around 6.5% more efficient. Robustness tests reveal that TE yields similar results to the more complex conventional MANCOVA analyses, while utilising information in a more efficient way. By using the TE approach on a relevant existing dataset, we further highlight TE’s advantages compared to conventional analyses; not only does TE use a single measure, instead of two principal components, but it also allows more group observations as it accounts for differences between the groups by construction.

Similar content being viewed by others

Introduction

A large strand of the empirical research on bilingualism focuses on the comparative performance of bilingual and monolingual populations with regards to executive function.Footnote 1 On the one hand, a number of studies suggest that bilinguals outperform monolinguals on executive function tasks, in a so-called “bilingual advantage” (Bialystok, 2001; Bialystok et al., 2004, 2006; Bialystok & Martin, 2004; Calvo & Bialystok, 2014; Emmorey et al., 2008). On the other hand, there is increasing evidence that the “bilingual advantage” may not be as universal as originally suggested. In particular, the bilingual advantage may be confined within particular age ranges, such as preschool children or older adults (Bialystok, 2017; Hilchey & Klein, 2011), or specific subcategories of executive function; thus prohibiting generalisations (Bialystok et al., 2009).

This lack of consensus in the literature may be attributed to several factors, broadly grouped into two categories; method-specific and analysis-specific. Method-specific differences comprise the particulars of executive function tasks, such as the administered task, and whether the investigated quantity is the accuracy and/or the reaction time. Some of the executive function tasks that have been used include the dimensional change card sort (DCCS) task (Zelazo et al., 1996), the Children’s Embedded Figures Task (Bialystok & Shapero, 2005), the flanker task (Calvo & Bialystok, 2014; de Abreu et al., 2012; Kapa & Colombo, 2013; Yang et al., 2011), the attentional network task (ANT) (Antón et al., 2014; Poarch & van Hell, 2012; Yang et al., 2011), the Simon task (Poarch & van Hell, 2012), the Stroop task (Antón et al., 2014; Poulin-Dubois et al., 2011) and the Multilocation task (Poulin-Dubois et al., 2011). The majority of studies report a single test, while Poulin-Dubois et al. (2011) is one of the few that report five, which, not surprisingly, lead to different conclusions. Analysis-specific differences comprise variations in the data cleaning, and subsequent analyses; most notably controlling for participant-specific characteristics. As most studies in this field feature small samples, certain limitations are, perhaps, unavoidable. For example, controlling for (or matching on) children’s grade (or age) and socio-economic status (SES), might exclude performance differences attributed to vocabulary and grammar skill differences in both languages, to name but a few. The need to control for an extensive array of indicators has been highlighted in Paap and Greenberg (2013) within this context, and within Stuart (2010) in a broader sense.Footnote 2

In this paper, we aim to address both method-specific and analysis-specific issues, by presenting a novel approach that relies on the frontier methodology that measures the relative efficiency of a decision-making unit (DMU) compared to the best practice, in what is termed as technical efficiency. This is a flexible methodology; due to it being a non-parametric, linear programming technique, it does not rely on distributional assumptions and is not computationally intensive. We apply this methodology in the context of executive function performance evaluation of Greek–English bilingual and Greek monolingual children, while using an extended array of executive function tasks and metrics that are in line with the related literature in this field.

Bilingualism and executive control: mechanisms and challenges

The executive function system is a domain-general cognitive system, vital for the flexibility and regulation of cognition and goal-directed behaviour (Best & Miller, 2010). It is referred to as the most crucial cognitive achievement in early childhood (Abutalebi & Green, 2007; Bialystok et al., 2009; Del Maschio et al., 2018). Children gradually master the ability to control attention, inhibit distraction, monitor sets of stimuli, expand working memory, and shift between tasks. The bilingual advantage refers to the superior performance of bilinguals in tasks that seem to require executive processing, which is the ability to monitor goal-setting cues, to switch attention to goal-relevant sources of information, and to inhibit those that are irrelevant or competing (Bialystok & Martin, 2004; Costa et al., 2008; Emmorey et al., 2008). These advantages in executive functions of bilinguals are thought to be linked to the need for this population to manage multiple languages and continuously monitor the appropriate language for each communicative situation (Bialystok, 2009). More specifically, bilinguals need to select the right language for each circumstance, attend to cues in order to select the right language, select the suitable lexicon and at the same time suppress the interference of the other language/s generating general executive function advantages (Bialystok, 2017; Green, 1998). This consistent exposure to a context where higher-level cognitive function is constantly required may contribute to advanced cognitive performance. High-level cognition is theorized to be required, such as working memory to maintain and manipulate information and inhibitory control to block or ignore competing information internally or from the environment (e.g., irrelevant words). This high-level cognition has been purported to contribute to across-the-board cognitive performance gains, dubbed as “bilingual advantage”.

Many studies have focused on childhood bilingualism and executive control, documenting that bilingual children outperform their monolingual cohorts on executive functioning tasks (Adesope et al., 2010; Bialystok, 2017), including selective attention (Bialystok, 2001), cognitive flexibility (Poulin-Dubois et al., 2011) and working memory (Morales et al., 2013). However, several other studies have not detected a bilingual effect on the executive function domain (Antón et al., 2014; Gathercole et al., 2014; Valian, 2015).

Paap (2018) and Paap et al. (2014) highlight a number of reasons that may be driving the results towards a “bilingual advantage”. Small samples might be one of the caveats, as studies with larger sample sizes tend to report no significant differences between bilinguals/monolinguals (Paap et al., 2015). Several studies featuring large datasets (Antón et al., 2014; Duñabeitia et al., 2014; Gathercole et al., 2014; Paap et al., 2014, 2017; Paap & Greenberg, 2013) reject the existence of a bilingual advantage.Footnote 3 In addition, a series of meta-analyses suggest that the bilingual advantage is either of very small magnitude (De Bruin et al., 2015; Grundy & Timmer, 2017) or non-existent (Donnelly, 2016; Lehtonen et al., 2018). Population-specific differences including variations in the bilingualism definition (Namazi & Thordardottir, 2010), differences/similarities in the languages the bilinguals manage (Bialystok, 2017; Yang et al., 2011), the switching intensity and/or frequency between the two languages (Baddeley, 2003) and cultural differences (Paap, 2018) may also affect the results.

Often the statistical analysis employs AN(C)OVA designs (Calvo & Bialystok, 2014; Poulin-Dubois et al., 2011), while regression techniques (Cox et al., 2016; Crivello et al., 2016), and propensity score matching (Tare & Linck, 2011) approaches tend to be limited. Over-reliance on ANCOVA and similar techniques is not a panacea, and underlying assumptions need to be checked thoroughly. In particular, Paap (2018) critiques how the correlation between the treatment variable and the control variables can be responsible for the appearance of a spurious bilingual advantage. For example, participation in team sports and musical dexterities have been linked to superior executive function (Paap et al., 2018; Paap & Greenberg, 2013; Valian, 2015).Footnote 4 Team sports performance is positively correlated with executive function; the relationship being more pronounced for professional sports at high levels of competition (Paap et al., 2018; Vestberg et al., 2012). Valian (2015) observed that in studies with bilingual and monolingual children, the participants might get different amounts of exercise or might have experienced some other beneficial experience (e.g., musical training) influencing their executive functioning skills.

Inappropriate controlling strategies may also play a role in whether a bilingual advantage is detected. While it is common practice to match on age and SES, less-clear guidance exists for non-verbal intellectual ability and/or language skills. As non-verbal intellectual ability is correlated with particular aspects of executive function (e.g., working memory) (Friedman et al., 2006), matching groups on non-verbal intellectual ability may mitigate the bilingual advantage (Lehtonen et al., 2018). Bilingual language skills may be inferior to monolinguals (Calvo & Bialystok, 2014; Lehtonen et al., 2018); hence both appropriately assessing language skills to ensure a level playing field and matching are imperative (Bialystok et al., 2008).

Differences in the particulars of executive function tasks, such as the administered task and subsequent modifications, whether quantity of interest is the accuracy and/or the response time, may also be affecting the results. Miyake et al. (2000) classify executive function into updating, switching, and inhibition subcategories using latent factor analysis. Subsequent research attempts to proxy these subcategories using certain measures (e.g., antisaccade tasks for inhibition). As highlighted in Paap and Greenberg (2013), studies often use a single task for each executive function component, while De Bruin et al. (2015) find that studies in support of a bilingual advantage tend to report fewer tasks. Proxying for any of the subcategories of executive function relies on the implicit assumption that all proxies for, say, inhibitory control would: i) lead to the same conclusion; ii) be correlated with each other. Failure to observe both conditions suggests that no compelling evidence with regards to the bilinguals’ performance may be reached, as argued in Paap and Greenberg (2013). As such, puzzling results may be reached with a subset of measures suggesting a bilingual advantage, while others not concurring with these (Poulin-Dubois et al., 2011; Tao et al., 2011). This has been identified as the “task impurity problem” where accurate measurement of particular domains of executive function suffers from the fact that the multitude of measures do not tap into the same cognitive processes, besides reported reliability and validity concerns (Lehtonen et al., 2018; Paap & Sawi, 2016). For inhibition alone, a variety of tasks have been used including the antisaccade task (Paap et al., 2014; Paap & Greenberg, 2013), flanker task (Calvo & Bialystok, 2014; de Abreu et al., 2012; Kapa & Colombo, 2013; Paap et al., 2014; Von Bastian et al., 2016; Yang et al., 2011), Simon task (Antoniou et al., 2016; Gathercole et al., 2014; Paap et al., 2014; Paap & Greenberg, 2013; Poarch & van Hell, 2012; Von Bastian et al., 2016), Stroop (Calvo & Bialystok, 2014; Duñabeitia et al., 2014; Poulin-Dubois et al., 2011; Von Bastian et al., 2016), ANT (Antón et al., 2014; Paap & Greenberg, 2013; Poarch & van Hell, 2012; Yang et al., 2011). The present study aims to address these issues by using a comprehensive approach utilising information from multiple subcategories of executive function as per the Miyake et al. (2000) classification.

The current study

In this paper, we present a novel methodology that accounts for the extended array of executive function tasks and metrics. Our method relies on the frontier methodology that measures the relative efficiency of a decision-making unit (DMU) compared to the best practice, in what is termed as technical efficiency. The technique is well-established in the areas of banking, economics, finance, transportation and management (Berger & Humphrey, 1997; Berger & Mester, 1997; Chen et al., 2015). Chen et al. (2015) verify that efficiency analysis is scarce in the management literature, even though its applicability is justified on a number of occasions. Within the fields of linguistics and psychology, efficiency applications are non-existent. We could not find any study using the frontier methodology in any of the highest-ranked journals (Cognition, Psychological Research, Psychonomic Bulletin & Review, Journal of Memory and Language, Psychological Methods, Psychometrika, Psychological Science, The British Journal of Mathematical and Statistical Psychology, Current Directions in Psychological Science) despite the fact that issues faced by researchers in these areas are not markedly different from the areas where the efficiency methodology has been successfully used.Footnote 5

Technical efficiency allows the researcher to jointly examine multiple executive function tasks, while taking into consideration both the accuracy and the response time of the participant in each task. As such technical efficiency may be viewed as a special case of principal component analysis (PCA) technique, however, the two techniques are markedly different. Like PCA, technical efficiency can handle a large number of executive function tasks (identified as “outputs”). Conversely to PCA, technical efficiency accommodates, by construction, factors that may affect the performance in executive function tasks, such as age and non-verbal intellectual ability to name but a few (identified as “inputs”). As technical efficiency is a single variable it dispenses with the need of PCA to interpret the retained factors. Due to its non-parametric nature, it does not impose any distributional assumptions on the data, while as it does not rely on correlations between variables, it can accommodate cases where executive function tasks show low correlation (see Paap and Greenberg (2013) and references therein). For these advantages, we opt for a frontier approach in this paper.

We contribute to the literature in four distinct ways. First, we introduce technical efficiency methodology and highlight the similarities and advantages of the technique to alternative ones that are popular in this field. We provide an application of this technique to an unstudied dataset on the executive functions of Greek–English bilingual and Greek monolingual young children. In addition, we employ an alternative dataset which we analyse with our technical efficiency approach. Second, we contribute to the monolingual/bilingual literature by comparing the executive function scores of bilingual and Greek monolingual young children. Our executive function tests span attention, working memory, and inhibition; hence allowing us to consider multiple aspects of the executive function from 70 participants. Third, we augment the technical efficiency analysis with a second-stage analysis that controls for differences in terms of age, non-verbal intellectual ability, grammar skill, expressive vocabulary skill, receptive vocabulary skill, SES, and language use. A bootstrap regression is used to mitigate any small sample bias, while an ANCOVA and a k-means nearest-neighbour approach are used as robustness. Fourth, we analyse our bilingual/monolingual dataset using conventional ANCOVA/MANCOVA techniques using the same control variables comparing the results to the technical efficiency analysis.

Efficiency studies across disciplines

Assessing the performance of organisations such as firms, financial institutions, educational institutions, and hospitals is of interest to investors, regulators, policy-makers, and consumers. Perhaps the simplest performance ratio is in the form of Ouput/Input. The manager of an electricity power plant may get a rough estimate of the performance by assessing output level produced (e.g., electricity in MWh), given a level of input (e.g., barrels of Oil) (Kumbhakar & Tsionas, 2011). This performance ratio combines two important concepts; first that higher values of output are more desirable; second, there is a cost element that needs to be minimised. The owner of a dairy farm may also be interested in benchmarking the performance of his/her firm in a similar manner. The output in this case may be viewed as the milk (in litres) produced by the cows, while the inputs may relate to the number of cows used, the size of the land, the labour quantity and the quality of the feeds (Alvarez & Arias, 2004). With such information, the manager could benchmark the operations against the competition and/or against time and find areas for improvement. For example, Johnes et al. (2014) argue that Islamic banks have lower technical efficiency than commercial banks due to the formers’ business model restrictions that prohibit the issuance of loans to certain types of businesses.

In the above examples, we shall refer to the business entity as a decision-making unit (DMU). The DMU is a flexible definition allowing the generalisation of the technique across a wide range of applications (see Table 1). In general, the DMU may be viewed as a “black-box” entity that transforms inputs into outputs. The term “decision” implies a mental process; in fact, it could be argued that the manager in the above examples would have some control over the production process and/or the output-input mix. However, this does not need to be the case as the DMU could be a jet engine (Bulla et al., 2000).

Up to this point, we have referred to “performance” without giving an appropriate definition. In fact, this is a known issue in certain disciplines as the “true” firm performance is latent, with individual measures (i.e., proxies) not being comprehensive indicators. In the management literature, the creation of competitive advantage of a firm against its competitors is important, as it could enhance a firm’s performance (Douglas & Judge Jr, 2001). On this occasion, performance per se would relate to profitability; yet other aspects, such as the firm value may also have been relevant. In the banking literature, capitalisation, profitability, stability, and liquidity could fall under the umbrella term of performance; yet multiple indicators exist to separately quantify each of these concepts. Drivers of each of these indicators are not necessarily the same. Ultimately, one may be interested in a holistic performance of a bank. Therefore, the challenge lies in combining all the information from a set of indicators to arrive at a meaningful conclusion, which should be generalizable and replicable. Hence, the need for an approach that could capture multiple aspects of the complex organisational structure and present a single, straightforward indicator to the interested parties is apparent.

We assume that each participant is the DMU, with outputs comprising i) paying attention; ii) organisation; iii) maintaining focus; iv) self-monitoring (Diamond, 2013). These skills may be mapped against the three distinct and interrelated processes, namely working memory, inhibition, and switching identified in Miyake et al. (2000). Inputs to the DMU are non-verbal intellectual ability, grammar skill, expressive vocabulary skill, and receptive vocabulary skill.

Method

Participants

Our sample comprises 32 bilingual (mean age = 9 years and 1month, SD = 2 years and 2 months, 18 females and 14 males) and 38 monolingual (mean age = 9 years and 9 months, SD = 1 year and 8 months, 22 females and 16 males) children; a total of 70Footnote 6. The bilingual children are competent in both Greek and English languages to varying degrees. The bilingual children were recruited if at least one of their parents spoke the Greek language with them. The mean age of acquisition is 7 months (SD = 1 year and 2 months) for Greek and 2 years and 6 months (SD = 2 years and 9 months) for English. We have excluded any trilingual participants.Footnote 7 Children were included in the study if their non-verbal intelligence score was not under 80. In this case, all children had scores over 80. Based on parental and teacher reports, the children did not have any hearing, behavioural, emotional, or mental impairment. More information is included in Table 4 and “Descriptive statistics” section below.

Bilingual Greek–English children were recruited from a Greek supplementary school in the north-west of England. The school offered a Greek-speaking supplementary program for 2.5 to 3.5 hours a week to enhance the reading and writing of the Greek language. This program is supplementary to the mainstream English school that these children attend. Eight of the bilingual children were born in Greece and lived in the UK for more than 2 years at the time of the study. The Greek monolingual control group consisted of children born and based in Greece.

Ethical approval was granted by the College of Arts and Humanities Research Ethics Committee at Bangor University. Information sheets were sent to the head teachers of schools and to the parents and informed consent was obtained before the collection of data. Teachers, parents, and children were provided enough time to express any questions about the nature of the study. Parents and children were informed that they could withdraw at any time, and they were debriefed after the study.

Materials

Parental questionnaire

The children’s language experience was assessed through the Language and Social Background Questionnaire for Children (LSBQ) (Luk & Bialystok, 2013). The LSBQ was forward and backward translated in Greek and it was completed by at least one of the parents/guardians in their preferred language (Greek or English). It consisted of information about the child’s age, grade, date of birth, country of birth, age of onset of all the languages, knowledge of playing a musical instrument, and length of exposure to different educational mediums. The questionnaire also included information about the parents’ language backgrounds. SES was measured as the mean of the highest attained educational level of both parents rated on an eight-point scale. Parental education is the most commonly used index of SES background, is highly predictive of other SES indicators (e.g., income, occupation), and is a better predictor of cognitive performance than other SES indicators (Calvo & Bialystok, 2014). The child’s understanding and speaking in all of their languages was rated on a five-point scale ranging from Poor to Excellent. Language use with parents, siblings, grandparents, neighbours, friends, and caregivers in various situations was measured on a seven-point scale ranging from 1 (only English) to 7 (only Greek/or other language).

Non-verbal intelligence

Non-verbal intelligence was assessed using the Kaufman Brief Intelligence Test, Second Edition (KBIT-2) (Kaufman, 2004). It consists of 46 items including a series of abstract images, such as designs and symbols, and visual stimuli, such as pictures of people and objects. Participants were required to understand the relationships among the presented stimuli and complete visual analogies by indicating the relationship between the images by either pointing to the answer or saying its letter. All items include an option of at least five answers thus reducing the chance of guessing. The Matrices non-verbal subtest was individually administered and scored according to the KBIT-2 manual, and percentages for the Matrices scores were obtained for participants.

Language measures

English language measures

The British Picture Vocabulary Scale, Third Edition - BPVS3 (Dunn & Dunn, 2009) was used to assess the receptive vocabulary of the bilingual and monolingual children in the English language. It is an individually administered, standardised test of Standard English receptive vocabulary for children ranging from 3 years to 16 years and 11 months. In this task, children are asked to select, out of four coloured items in a 2 by 2 matrix, the picture that best corresponds to an English word read out by the researcher. The assessment consists of 14 sets of 12 words of increasing difficulty (e.g., ball, island, fictional). The administration is discontinued when a minimum of eight errors is produced in a single set.

The Clinical Evaluation of Language Fundamentals, Fourth UK Edition - CELF-4UK (Semel et al., 2006) is an individually administered standardised language measure, which is used for the comprehensive assessment of a student’s language skills by combining core subtests with supplementary subtests. The expressive vocabulary subtest was used here to assess the participants’ expressive vocabulary in the English language. This measure is designed for children and adolescents ranging from 5 to 16 years of age. Expressive vocabulary was screened through the Expressive Vocabulary subtest for children. Children were asked to look at a picture and name what they see or what is happening in each picture (e.g., a picture of a girl drawing, the child should give the targeted response ‘colouring’ or ‘drawing’ to score 2 points or the response ‘doing homework’ to score 1 point). The administration is discontinued after seven consecutive zero scores.

The Test for Reception of Grammar, Version 2 - TROG-2 (Bishop, 2003) was used to assess receptive grammar. It is an individually administered standardised test for children and adults and it comprises 80 items of increasing difficulty with four picture choices. Children are asked to select the item that corresponds to the target sentence read out by the researcher. For each grammatical element, there is a block of four target sentences. A block is failed unless all four items of each block are established by the child. The sentences include simple vocabulary of nouns, verbs, and adjectives. If a child fails five consecutive blocks the administration is terminated.

Greek language measures

A standard Modern Greek version of the Peabody Picture Vocabulary Task-PPVT (Dunn, 1981) was adapted and used based on the Greek adaptation by Simos et al. (2011). The children clicked on the image, out of four possible choices, that best corresponded to the target word they heard, such as nouns, verbs, or adjectives. There were 173 items of increasing difficulty. If eight incorrect responses were provided to ten consecutive items, then the task was stopped. The answers were scored as correct (1) or incorrect (0).

The Picture Word Finding Test-PWFT (Vogindroukas et al., 2009) is an individually administered standardised measure used to assess standard Modern Greek expressive vocabulary. It is a tool norm-referenced for Greek adapted from the English Word Finding Vocabulary Test - 4th Edition (Renfrew, 1995). The children are presented with 50 black-and-white images consisting of nouns in developmental order. The words included originate from objects, categories of objects, television programs and fairy-tales very familiar to children. A score sheet is used to record the responses provided during testing which are later scored as correct (1) or incorrect (0). The children are asked to name the objects they saw and when they are ready, they move to the following one. The assessment is discontinued after five consecutive wrong replies.

The Developmental Verbal Intelligence Quotient-DVIQ (Stavrakaki & Tsimpli, 2000) was used to assess Greek receptive grammar. It consisted of five subtests used to measure children’s language abilities in expressive vocabulary, understanding metalinguistic concepts, comprehension and production of morphosyntax, and sentence repetition. This was an assessment that measured language development in standard Modern Greek, and it was administered individually. For this study, only the subtest measuring comprehension of morphosyntax was used for both Greek monolingual and Greek–English bilingual children. Each child was given a booklet with 31 pages, each including three images. The researcher read out a sentence and each child was asked to point to the picture that best represented the situation in the sentence. For example, the sentence might have been “μην καπνίζετε” (do not smoke) and the correct answer depicted a “No Smoking” sign. An answer sheet was used to record the child’s answers (as A, B, or C) during testing which were later scored as correct (1) or incorrect (0).

For each of the background language measures, we define percentage scores as the number of correct responses/number of correct and incorrect responses. Bilinguals were assessed on each of these background measures using one test in each language. Percentages were used in order to create a comparable scale for all tests, which allows us to produce a composite measure.

Executive function tasks

In this section, we present the administration details for the five executive function tasks that span attention, working memory, inhibition, and shifting. All cognitive tasks were administered on a laptop using the experimental software E-Prime 2.0 (Schneider et al., 2002). E-Prime 2.0 is behavioural experiment software that provides an environment for computerised experiment design and data collection with millisecond precision timing ensuring accuracy of data. We discuss each of these tasks in turn below.

Attention task

The Attentional Network Task (ANT) (Fan et al., 2005) was designed to evaluate three different attentional networks: i) alerting; ii) orienting, and iii) executive control (Posner & Petersen, 1990). Participants are asked to indicate the direction (left or right) that the target stimulus (a fish appearing at the centre of the screen) points to. Distance between the participant’s head and the centre of the screen was approximately 50 cm. The child’s task was to press either the right or left key button on the mouse (with the right or left index finger) corresponding to the direction in which the middle fish is swimming. The child was presented with a training block of 16 trials and 128 trials distributed in four experimental blocks. There were breaks in between. During both the training and experimental blocks, auditory feedback was provided to the child.

Working memory tasks

The first task was a Counting recall task, which was an adaptation of the Automated Working Memory Assessment (Alloway, 2007). The children were presented on the laptop screen a varying number, from four to seven, of red circles and blue triangles on the screen. The children should remember the number of red circles in each image. The images presented begin from one and reach seven. Each experimental block, consisting of one to seven images, consists of four trials. If the child failed to correctly recall three trials in a block, the task stopped.

The second task was a Backward digit span task (BDST) and it was adapted from Huizinga et al. (2006). The children began with two training trials in order to understand the task and type the reverse order of the numbers presented. For example, if a child hears the number 7 and 4 they should type 4 and 7. The sequence begins with four trials of two numbers reaching gradually eight numbers. Similarly to the above task, if the child failed to correctly recall three trials in a block the task stopped.

Both tasks were administered in the preferred language of the child. In all cases the preferred language was English for the bilingual children.

Inhibition task

The Nonverbal Stroop task was adapted from Lukács et al. (2016) and consisted of stimuli of arrows pointing upwards, downwards, left and right. Three experimental blocks of 60 trials each were presented to the children. The aim was to select the direction that the arrows indicated regardless of their position on the screen. The children used the arrow buttons on the laptop’s keyboard. The first was the control block and arrows were presented in the middle of the screen (Stroop base). In the second block, which was the congruent block, the direction of the arrows matched their position on the screen (e.g., an arrow indicating upwards was presented at the top of the screen) (Stroop congruent). Finally, the third experimental block was the incongruent block. Here the direction of the arrows was the opposite compared to their position on the screen (e.g., an arrow indicating upwards was presented at the bottom of the screen) (Stroop incongruent). During the administration of the task, the second and the third blocks are randomly mixed to enhance the conflict effect.

For accuracy measures, the number of correct answers for the incongruent items was subtracted from the number of correct answers for the congruent items. The difference in reaction times for congruent and incongruent trials represents the inhibition cost.

Shifting task

All children were also administered one shifting task, the Colour-shape task. This task included three blocks each, where children were presented with two shapes (triangle, circle) coloured either red or blue. The same buttons, one for the left hand and on for the right, corresponded to one of the choices (circle–triangle, red–blue). In the first two experimental blocks, the children’s task was to recognise the shape of the stimulus and ignore their colour or the reverse. The stimuli were presented in the top half and bottom half of the screen, respectively. In the third block, they were required to alternate between colour and shape depending on their location on the screen. Cues directing the participant to the relevant dimension are presented simultaneously with the stimuli on all trials, in all blocks. The first two blocks contained 32 trials each, while the third block contained 64. The number of shifting and non-shifting sequences within the third block was balanced. The difference in reaction times for the first two (non-shifting) and the third (shifting) block represents the shifting cost.

Procedures

A pilot study with four children was conducted before the actual data collection. As a result of the pilot study, the choice of the above fixed order of tasks was such so the children did not feel tired or uninterested. After the end of each session, the researcher thanked the child for their participation. All children participated enthusiastically.

The children were tested individually in a quiet school classroom setting, during one session in Greek and one session in English that lasted 40 min on average. The second session was conducted no more than 1 month’s time after the first one. The researcher informed the children that they would play some games. Parents were administered the questionnaire (LSBQ) and returned it to the researcher, or the classroom teacher, or the school’s head teacher.

The first session was the Greek session for the bilingual participants. Each child completed the tasks in the following fixed order: i) Greek adapted PPVT, ii) ANT, iii) PWFT, iv) Colour shape task, v) Nonverbal Stroop task, and vi) DVIQ. The second session was the English session for the bilingual participants. Each child completed the tasks in the following fixed order: i) KBIT-2, ii) BDST, iii) BPVS, iv) counting recall task, v) CELF-4, and vi) TROG-2.

The Greek monolingual children completed the tasks in the following fixed order: i) Greek adapted PPVT, ii) ANT, iii) PWFT, iv) Colour shape task, v) Nonverbal Stroop task, vi) DVIQ, vi) KBIT-2, vii) BDST, viii) Counting recall task.

Technical efficiency

In this section, we introduce the concept of technical efficiency, which may be viewed as a special case of a performance ratio. We use a random sample from our dataset and assume that each participant is a decision-making unit (DMU) that produces two outputs from two inputs. The outputs are the accuracy scores on two executive function tasks; the BDST and the Counting recall. The inputs are a measure of the non-verbal intellectual ability (KBIT-2) and a measure of the grammar skill (DVIQ). Ultimately, we are interested in comparing the performance of the DMUs. We illustrate three cases; case A considers one Output and one Input; case B uses two Outputs and one Input; case C uses two Outputs and two Inputs.

Table 2, Panel A, presents the output and input values for each of the ten participants of the random sample. Panel B calculates an array of performance measures associated with each of the three cases outlined above.

In case A, the ratio BDST / KBIT-2 may be viewed as a performance measure where higher values denote a participant with a superior performance; i.e., a higher accuracy score in the BDST measure, using a lower KBIT-2 score. Participant F has the highest value (1.278), hence may be viewed as the one with the best performance, or the most efficient. That is, s/he is producing the highest BDST accuracy score by using the lowest KBIT-2 score. A graphical representation of the ten participants is given in Fig. 1a. The line that connects the axis origin (black line) to point D (the left-most in the graph) is the efficient frontier and envelops all the other points. By contrast, a regression line (orange line) goes through the middle of these points; a direct consequence of the estimation technique used. As such, while the regression line considers the “average” as the benchmark unit, by allowing some to over-perform and others to under-perform, the frontier analysts consider the efficient (i.e., best-practice) unit as the benchmark; thus letting all others to under-perform.

Efficient frontiers. Notes. The figure shows the efficient frontier (solid black line) in the case of 1 output / 1 input (Case A), and 2 outputs / 1 input (Case B). The orange line represents the best-fit line from a regression model. The outputs are the accuracy scores in two executive function scores, BDST (Case A and B) and Count recall (Case B). The input is the non-verbal intellectual ability as proxied by the KBIT-2 score (Case A and B). The ten participants labelled A-J are a random sample from our dataset

In case B, the ratios BDST / KBIT-2 and Counting recall / KBIT-2 are defined. Points F, D, and E are of special attention as they are the furthest away from the axis origin, hence they represent the best-performers (i.e., efficient ones). The participants represented by these three points represent efficient combinations in the sense that they produce the maximum output for a given level of input. Contrary to case A, the efficient frontier here is a piecewise linear frontier that is made up of the efficient DMUs and envelops all the inefficient combinations. For example, point I lies inside the frontier and has an efficiency score of Oy/Oy’, which means that there is a margin of improvement in the performance of participant I by Oy’-Oy (i.e., the distance between point I and the efficient frontier).

Case C would require the ratios BDST / KBIT-2, Counting recall / KBIT-2, BDST / KBIT-2 and Counting recall / DVIQ to be computed. However, in this case visual representation would have to be multidimensional. A particular challenge that was made apparent in case B is that the points (F, D, E) are all efficient but have a different output/input mix. For example, point F is superior in terms of BDST, while point E in terms of Counting recall. The fact that the output/input mix would vary among DMUs becomes more apparent as outputs and inputs considered increase. Consequently, it is difficult to identify the participant with the overall best performance, unless we assign some “desirability” on the outputs (and similarly the inputs). For example, this could take the form of a higher accuracy in the BDST having a higher value than in the Counting recall.

To address the issue, Charnes et al. (1978) introduced the concept of technical efficiency in the form of a linear optimisation model – the CCR model. The novelty lies in the use of weighted outputs and weighted inputs to form a performance measure, known as technical efficiency. Technical efficiency may be viewed as a ratio where, on the nominator (denominator) each output (input) is assigned a weight. The weight, which lies between 0 and 1, is universal for all the DMUs, and could be viewed as a measure of the relative desirability of the outputs and inputs.

A linear optimisation technique that maximises the overall technical efficiency of the system is used to estimate the weights (Charnes et al., 1978). Hence, the weights, and consequently any ranking of outputs and inputs that is implied, is determined from the data themselves without any a priori information or assumptions.

Mathematically, and starting from the case of two outputs and two inputs (i.e., Case C), the technical efficiency ratio for a single DMU is given as:

where y1 and y2 being the BDST and Counting recall accuracy scores (Outputs); x1 and x2 being the KBIT-2 and DVIQ scores (inputs); u1, u2, v1 and v2 are output and input weights, respectively.

We can generalise this to the case of R outputs and M inputs as follows:

Here we also add the subscript j which denotes the DMU with j = 1, 2, …, N as well as the tilde on top of the weights to denote that these are estimated through linear optimisation. Note that as the weights are common across all DMUs, they do not carry the j subscript.

The linear optimisation works by maximising the sum of TEj across all DMUs subject to the TEj being bounded between 0 and 1 (where 1 is assigned to the efficient DMUs) for each DMU, and to the weights being non-negative.Footnote 8 Mathematically:

Data transformations

In our case, each child produces certain outputs while receiving certain inputs. We consider the output to be the executive function score, which may be viewed as a proxy for brain performance.

As per the Miyake et al. (2000) classification, three distinct and interrelated components of executive function are defined. These relate to an individual’s ability to switch between various tasks (switching/shifting), the ability to maintain and process information in mind (working memory), and the ability to suppress irrelevant information at any given moment (inhibition). Performance in each of these categories is assessed via the following tasks: i) BDST, ii) Counting recall, iii) Colour shape, iv) Non-verbal Stroop (Stroop), v) ANT. All of these tasks and their administration procedure have been explained in an earlier section.

In each task we record: i) the accuracy (ACC); ii) the response time (RT) of the child, which form our two outputs. The accuracy for each task and each child is calculated as the average accuracy over the respective number of trials that each task consists of, and ranges theoretically between 0 and 1. For tasks that have congruent and incongruent trials, we use the average accuracy. Empirically, extreme points are not observed, thereby the tasks are appropriate for the children’s age. The higher the accuracy the better the performance of the child.

The response time is measured in milliseconds and is only considered for the correct answers to test questions. The lower the response time, the faster the response is given. Consistent with the literature, we exclude any response time that is below 200 ms (Antoniou et al., 2016). We also carry out an outlier treatment in line with Purić et al. (2017), where we trim response times that lie outside of a 3 standard deviations bound.Footnote 9 As the two output variables are inversely coded, we consider the inverse of response time and dub this as response speed (1/RT).Footnote 10 Hence, the two outputs in our case are: i) accuracy (y1); ii) response speed (y2). The inputs are as follows: i) non-verbal intellectual ability (x1); ii) grammar skill (x2); iii) expressive vocabulary skill (x3); iv) receptive vocabulary skill (x4); v) age (x5).

The grammar, expressive vocabulary, and receptive vocabulary skills of monolingual children are assessed in Greek using the DVIQ, the PWFT, and the Greek receptive vocabulary test, respectively. The grammar, expressive vocabulary, and receptive vocabulary skills of bilingual participants are assessed in Greek using the same measures as with the monolinguals and in English using the equivalent English tests, namely TROG-2, CELF-4 and BPVS, respectively. With regards to the intellectual ability, we used the Matrices subtest, which is the non-verbal component of the KBIT-2. Table 3 presents information about the mapping of the tasks for each group of participants.

To arrive at comparable estimates of grammar, expressive vocabulary, and receptive vocabulary skills, we standardise the scores of the monolinguals and bilinguals. As the bilinguals have two measures for each skill, one in Greek and another in English, we follow three strategies to arrive at a composite measure of the respective skill. In the most naïve and easiest-to-implement strategy, we assume that all bilinguals are balanced between English and Greek, hence their composite score would be a simple weighted average of the respective tasks, and this represents Composite Score 1 (CS1). As the balanced bilingual assumption may be strong, we introduce a second, more realistic composite score (CS2) that assumes that bilinguals may be more competent in a particular language. Hence, under CS2, the composite measure is a weighted average of the individual tasks, with the weights calculated from the relative performance of the participants in the Greek and English versions of the test. Composite Score 3 (CS3) is similar to CS2 with the only difference being that the relative weights are derived from the parental questionnaire; hence the relative competency level is self-declared. In the following analysis, we present the results based on CS2, and we compare with the results of CS1 in the robustness section.Footnote 11

Similar to regression models, a DEA analysis needs to be “well specified” in the sense that relevant variables should be included in the specification. In case of regression, a minimum number of observations is required for estimation; statistical inference (e.g., hypothesis testing) requires additional observations and/or bootstrap techniques for small samples. Due to the DEA’s non-parametric nature, minimum sample size has no formal statistical basis. However, DEA’s discriminatory power depends on the relative numbers of inputs, outputs, and DMUs in the sample. As a rule of thumb, the number of DMUs should be at least 2–3 times higher than the inputs and outputs combined (Banker et al., 1989; Golany & Roll, 1989). In our case, the number of DMUs is at least seven times higher than the combined inputs and outputs.

Second-stage analysis

The technical efficiency estimate from the previous step may be used as the dependent variable in subsequent analysis. We investigate differences in the technical efficiency of monolingual and bilingual children in a second-stage analysis. We use three estimation methods: i) an ANCOVA, which is widely used in the literature; ii) a regression with bootstrap corrected standard errors that corrects for potential small sample bias (Cameron & Trivendi, 2005); and iii) a k-means nearest-neighbour matching technique. We opt for the k-means nearest neighbour as it is a non-linear, non-parametric technique that matches observations with similar characteristics. The advantage of k-means nearest-neighbour matching is that it does not rely on a formal model (like propensity score does); thus, being more flexible. Like the propensity score approach, it can match observations on both categorical and continuous variables. However, when matching on continuous variables, a bias-corrected nearest-neighbour matching estimator is necessary (Abadie & Imbens, 2006, 2011). More information is provided in Technical Appendix 2.

We allow for three formulations in each estimation method, hereafter referred to as Specifications A to C, respectively. These specifications are progressively less restrictive as they allow for decreasing similarities between the participants. In particular, specification A controls for differences with respect to non-verbal intellectual ability, grammar skill, expressive vocabulary skill, receptive vocabulary skill and age. Specification B further adds SES to specification A, while specification C further adds language use to specification B.

Results

Descriptive statistics

Table 4 and presents key descriptive statistics for the variables utilised in the analysis. The mean, standard deviation, and median for the bilinguals and monolinguals is reported alongside an ANOVA between-group test. Lack of statistical significance in the F-statistic suggests no group differences between the bilinguals and monolinguals.Footnote 12

A first inspection of the executive function accuracy and response times scores (also see Fig. 2) does not suggest any between-group differences, with the exception of the accuracy score in the BDST task (F(1, 68) = 4.47, p < .05). A comparison of non-verbal intellectual ability and age between the two groups does not suggest any significant difference (F(1, 68) = 0.05, ns) and (F(1, 68) = 1.81, p > .10). A comparison of the Greek versions of the grammar (F(1, 68) = 11.96, p < .001), vocabulary (F(1, 68) = 44.16, p < .001) and language (F(1, 68) = 70.84, p < .001) tasks suggests significant between-group differences (also see Fig. 3), which is consistent with the findings of Bialystok and Craik (2010).

The bilinguals have significantly higher SES compared to the monolingual peers (F(1, 68) = 21.76, p < .001), higher English proficiency score (F(1, 68) = 68.24, p < .001) and lower Greek proficiency score (F(1, 68) = 17.39, p < .001). Proficiency in other languages is comparable in both groups (F(1, 68) = 0.20, ns).Footnote 13 The two groups show a significant difference in terms of Greek language use, with the monolinguals using the Greek language significantly more (F(1, 68) = 129.80, p < .001) compared to the bilinguals, as perhaps expected. The proportion of participants that play a musical instrument is comparable between the two groups (F(1, 68) = 2.47, p > .10).Footnote 14 Years in Greek school is significantly higher for the monolinguals (F(1, 68) = 153.54, p < .001) as they have always been studying in a Greek school in Greece. The majority of the bilingual cohort (25 out of 32 participants) never attended a Greek school in Greece, while the remaining seven attended one for a period of 1–4 years.Footnote 15 Instead, the bilinguals attended a supplementary (Greek) school in England, which is additional to their formal English education.Footnote 16 So the overall exposure to Greek education is comparable between the two groups. The variable Total Greek Education shows the total exposure of a participant to the Greek educational system, whether formal in Greece, or informal (i.e., supplementary) in the UK. A between-groups test reveals only mild difference (F(1, 68) = 4.49, p < .05) in favour of the Greek monolinguals.

The correlations between the accuracy scores, response times as well as age, SES, non-verbal intellectual ability, grammar, expressive vocabulary, and receptive vocabulary score are reported in Appendix Table 11. Positive and significant coefficients between all the accuracy scores of the tasks are evidenced. This suggests a similarity in the performance of the participants across the tasks. The fact that inhibition tasks are positively correlated is in line with the Paap and Greenberg (2013) suggestions. In particular, we find significant correlation between Stroop and Colour shape tasks in terms of accuracy scores (r = 0.45, p < .01) and of response times (r = 0.57, p < .01). However, we also document significant and positive correlations between working memory and inhibition tasks. For example, BDST and Stroop (r = 0.57, p < .01) that provides empirical support to the fact that the underlying cognitive processes may be interrelated or that the proxies used may not tap solely on these processes (task impurity problem). Negative and significant correlations between accuracy and RT scores as perhaps expected, for example within the Stroop task we observe a negative and significant correlation (r = – 0.41, p < .01). All other variables have the expected relationship with accuracy and RT scores of the tasks, with the exception of SES that does not exhibit any significant relationship. For example, higher IQ is positively correlated with accuracy scores and negatively correlated with RTs.Footnote 17

Efficiency estimates

Table 5 presents the technical efficiency estimates of bilinguals and monolinguals. Under panel A, we report technical efficiency estimates when using each executive function task’s accuracy and response speed as outputs. In panel B, we combine the information from multiple executive function tasks in two variants, namely the “accuracy” and the “response speed”. The former uses the accuracy scores of all five tasks, while the latter uses their respective response speed. The “All” variant includes both the accuracy and the response speed from all five executive function tasks. The choice of inputs is always the same, which are the non-verbal intellectual ability, grammar skill, expressive vocabulary skill, receptive vocabulary skill and age.Footnote 18 A battery of statistical tests is performed for the between-group differences. The estimated Cronbach’s alpha (a = 0.93) indicates strong reliability of the technical efficiency variables.

A cursory inspection of panel A results suggests that bilinguals exhibit higher technical efficiency by 16.32% on average compared to their monolingual counterparts. Depending on the executive function task, the gain ranges between 15.75% (Counting recall) (F(1, 68) = 14.78, p < .001) and 20.19% (Colour shape) (F(1, 68) = 16.78, p < .001). For example, the average technical efficiency of bilinguals for the BDST executive function task is at 0.836 against the 0.756 of the monolingual cohort. This suggests that the bilinguals are about 10.25% better at utilising their available inputs than the monolinguals. Panel B results corroborate our previous findings, with bilinguals being around 12.85% more efficient than the monolinguals based on the “All” variant and the effect is significant (F(1, 68) = 21.94, p < .001). An investigation of the “accuracy” and “response speed” variants suggests that the higher efficiency scores of the bilinguals are mainly driven by their relatively faster responses compared to the monolingual group.

Second-stage analysis

Table 6 presents the results of the second-stage analyses. Panel A controls for age, non-verbal intellectual ability, grammar skill, expressive vocabulary skill, and receptive vocabulary skill. Panel B further controls for SES. Panel C further controls for language use. The “Margin” column reports the estimated marginal effect of the between-group differences, where a positive value indicates that the bilinguals exhibit superior technical efficiency compared to their monolingual peers.Footnote 19 The main finding is that after controlling for an extended array of controls, the superior technical efficiency of bilinguals found in “Comparison to an alternative dataset - The Antoniou et al. (2016) dataset” section persist.

The results of the “All” variants are particularly interesting as these combine the information from all five executive function tasks. The marginal effect across all estimation methods and specifications is positive and statistically significant (\( {\hat{\beta}}_{II}=0.054,p<.10 \)). This suggests that the bilinguals exhibit, on average, between 5.4% and 10.3% superior technical efficiency compared to their monolingual peers.

A comparison of panel A and panel C finds the former with more statistically significant coefficients. However, once we add all the covariates certain marginal effects drop from statistical significance at conventional levels. This is particularly the case for the individual executive function tasks. In particular, technical efficiency based on the BDST executive function task is statistically significant at Panel A \( \left({\hat{\beta}}_{II}=0.056,p<.05\right) \) but not at panel C \( \left({\hat{\beta}}_{II}=0.043,\mathrm{ns}\right) \), which highlights the importance of SES and language use in isolating the bilingual effect. This is in line with the comments in Paap (2018) about improper controlling of factors may reveal a bilingualism advantage.

Robustness tests

Comparison with conventional designs

In this section, we compare the insights from the technical efficiency analysis presented in the main part of the paper to an ANCOVA analysis that is commonly used in similar studies. Table 7 reports the descriptive statistics (mean and standard deviation) of the accuracy scores and response times for the five executive function tasks, including several derived measures such as: i) the absolute difference between the incongruent and congruent trials (Difference); ii) a simple average performance measure of the congruent and incongruent trials (Average); iii) the Local Shifting Cost (LSC) and Global Shifting Cost (GSC); iv) the Inhibition effect. For all executive function measures, a series of between-groups ANCOVA analyses are performed with age, non-verbal intellectual ability, grammar skill, expressive vocabulary skill, receptive vocabulary skill, SES, and language use as covariates.

The results of this analysis suggest that there are no significant performance differences between bilingual and monolinguals. For example, and pertaining to the working memory, no conclusive significant difference is found with bilinguals performing better in the Count recall task (F(1, 61) = 4.32, p < .05), but worse in the BDST (F(1, 61) = 6.50, p < .05) compared to their monolingual counterparts. However, a drawback of an ANCOVA analysis is apparent in this case as it is not able to account for the multiple executive function tasks (and their metrics) that are available. As an alternative, we use a MANCOVA analysis that allows for multiple dependent variables at the same time, thereby allowing for more efficient use of the breadth of the administered executive function tests. We use the same control variables as in the ANCOVA case. With regards to the choice of the dependent variables, we present a list of several models, labelled I–X, in Table 8, each using different metrics of each executive function score. In the MANCOVA models presented, we include at least one dependent variable from each of the three categories of executive function, namely working memory, switching and inhibition. These results are reported in Table 8.

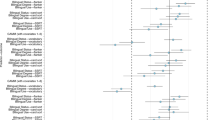

Overall, the MANCOVA results suggest that there are significant differences between the two groups. For example, under Model I, the between-group tests suggest significant differences in the executive function of the two groups (F(1, 61) = 2.34, p < .10; Wilk’s Λ = 0.830). Model X is of particular interest by featuring as dependent variables the same measures used in the technical efficiency analysis as outputs, while the controls variables correspond to the inputs. This model suggests of significant between-group differences in executive function (F(1, 63) = 2.20, p < .05; Wilk’s Λ = 0.710). Hence, the qualitative conclusion obtained using the technical efficiency approach is verified by a MANCOVA analysis. A drawback of the MANCOVA compared to technical efficiency is that subsequent analysis in the former case is more complex, as between-group marginal effects are unique in each dependent variable.

Comparison to an alternative dataset - the Antoniou et al. (2016) dataset

The effect of bilectalism and multilingualism on executive control is examined in Antoniou et al. (2016). In this section, we revisit the Antoniou et al. (2016) dataset, and apply the technical efficiency approach in answering the same questions. The use of the particular dataset is motivated from the conceptual closeness of the investigated topic – i.e., executive function in bilingual/bilectal populations, as well as the number of administered executive function tasks coupled with the identified need to arrive to a comprehensive measure that summarises all information. In particular, the authors administer six executive function tasks on a sample of bilectal, multilingual, and monolingual children. Subsequently, they use a principal component analysis (PCA) technique to produce two composite measures, which they identify as representative of working memory and inhibition. The executive function tasks in Antoniou et al. (2016) are the following: i) Backward digit span (BDST); ii) Corsi blocks forward (Corsi forward); iii) Corsi blocks backward (Corsi backward); iv) Soccer task (Stroop); v) Simon task; vi) Colour shape. For these tasks, the dataset provides either the number of correct trials (BDST, Corsi) or accuracy scores and response times (Soccer, Simon, Colour shape).

We apply the technical efficiency methodology as described in the main paper with the outputs being the percentage scores of each of the three working memory tasks (BDST, Corsi forward, Corsi backward), and the accuracy scores of the Soccer, Simon, and Colour shape tasks. Our choice of inputs is similarly motivated to our main analysis but also takes into account the availability of the data. In particular, we use three inputs: i) non-verbal intellectual ability (IQ), ii) general language ability, iii) vocabulary skill (PPVT), iv) age. We conduct our analyses on three samples, labelled S1-S3. The first (S1) compares bilectal and monolingual children, while the second (S2) compares bilectal, multilingual, and monolingual children. These two use the exact sample specifications of Antoniou et al. (2016) for a direct comparison.

In particular, there are 17 bilectal participants (M = 7.6 years of age; SD = 0.9 years) that are speakers of Cypriot Greek and Standard Modern Greek, while the 25 monolingual participants (M = 7.4 years of age; SD = 0.9 years) only speak Standard Modern Greek under S1. The background analysis in Antoniou et al. (2016) suggests that these two groups do not differ in age, gender, or language comprehension, however the bilectals exhibit significantly lower expressive and receptive vocabulary scores. Under S2, there are 44 bilectal participants (M = 7.6 years of age; SD = 0.9 years), 26 multilingual participants (M = 7.6 years of age; SD = 0.9 years) and 25 monolingual participants (M = 7.4 years of age; SD = 0.9 years). The background analysis in Antoniou et al. (2016) suggests that these three groups do not exhibit significant differences in age, gender, or language comprehension, however there are significant differences in terms of SES and IQ. Our third (S3) analysis compares bilectal, multilingual, and monolingual children and this time we use all the participants that are available in the Antoniou et al. (2016) dataset. Under S3, there are 64 bilectal participants (M = 7.8 years of age; SD = 1.59 years), 47 multilingual participants (M = 7.8 years of age; SD = 1.8 years) and 25 monolingual participants (M = 7.6 years of age; SD = 0.9 years). These three groups do not exhibit statistically significant differences with respect to age (F(2, 133) = 0.550, p > .10), gender (F(2, 133) = 0.370, p > .10), and IQ (F(2, 130) = 2.270, p > .10). There are significant differences in terms of SES (F(2, 130) = 10.43, p < .01) and general language ability (F(2, 133) = 6.830, p < .01).

Table 9, panels A–C present the results of this analyses of S1–S3, respectively. In each group, we report the mean and standard deviation of the technical efficiency as well as the working memory and inhibition composite measures of Antoniou et al. (2016) for comparison purposes. An ANCOVA between-groups analysis is reported with age, IQ, general language ability, and SES as control variables, in line with those used in Antoniou et al. (2016).

For S1, the technical efficiency analysis shows the bilectals to be about 3.2 percentage points more efficient than their monolingual counterparts with the difference between the groups being significant (F(1,36) = 4.53, p < .05). Antoniou et al. (2016) use a 2x2 mixed ANCOVA design for the working memory and inhibition components and find the bilectals to outperform the monolinguals.

The technical efficiency analysis on S2 uncovers significant differences between the groups (F(2,88) = 7.01, p < .01). Specifically, the multilinguals are the most efficient group with an average efficiency score of 0.979, followed by the bilectals at 0.95 and the monolinguals at 0.89. The difference between multilinguals and monolinguals is significant (F(1,45) = 10.21, p < .01), while a similar conclusion is reached for bilectals and monolinguals (F(1,63) = 8.91, p < .01). No significant difference is found between the bilectals and the multilinguals (F(1,64) = 1.40, p > .10). Antoniou et al. (2016) use a 2x3 mixed ANCOVA design and find that bilectals and multilinguals significantly outperform the monolingual group in terms of executive function. However, no significant difference between the bilectals and multilinguals is observed.

The technical efficiency analysis on S3 shows a similar conclusion to S2 with both multilinguals and bilectals being more efficient than their monolingual counterparts. The between-groups ANCOVA analysis suggests that the difference is statistically significant (F(2, 129) = 6.10, p < .01). A 2x3 (working memory versus inhibition by group: multilinguals versus bilectals versus monolinguals) ANCOVA, in the spirit of Antoniou et al. (2016), does not suggest any significant difference in the three groups (F(2, 121) = 1.145, p > .10).

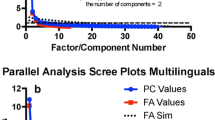

Overall, we confirm the results of the Antoniou et al. (2016) using our technical efficiency approach and offer some more insights in terms of the main advantages of a technical efficiency approach. Compared to the PCA, technical efficiency provides a single ratio, which ranges by construction between 0 and unity, and has a clear interpretation. By contrast, the PCA requires a degree of subjectivity in terms of the number of retained components (or factors), with the Kaiser’s criterion being one of the many used in such analysis (Antoniou et al., 2016). An inherent difficulty in the PCA related to the interpretation of the factors. Another advantage of technical efficiency is that by construction it accounts for differences between the groups in the form of inputs. For example, the PCA analysis is followed by an ANCOVA that accounts for certain differences between the two groups in several metrics. By contrast, several of these metrics may be used as inputs in the technical efficiency analysis. As a consequence, simple unconditional t tests on the technical efficiency estimates have certain merit. An inspection of the t statistics reported in Table 9 yields the same qualitative conclusion as the more complex ANCOVA setup.Footnote 20

The balanced bilinguals assumption

In the main analysis when creating the grammar, receptive, and expressive vocabulary scores for the bilinguals we used what we termed as composite score 2 (see “Second stage analysis” section for more details). Here we compare to the naïve and restrictive strategy where the main assumption is that bilinguals are balanced between the two languages, namely Greek and English. As a consequence, the composite grammar skill score would be a simple average of the respective grammar skill tasks for Greek and English languages (CS1). Admittedly this may seem a strong assumption particularly in cases where some participants may have had limited exposure to the new language. However, as this strategy is less computationally demanding, there is a certain merit in examining the impact of the results from adopting it.

Table 10 replicates Table 5 with the only difference being that bilinguals are now assumed to be balanced using the composite score we explain above. A cursory inspection of the results suggests that the qualitative nature of the story holds, with bilinguals having higher technical efficiency than their monolingual counterparts. However, this gap in efficiency appears less pronounced compared to our main analysis. In particular, for the overall results, bilinguals are now about 11.6% more efficient. Hence, the assumption of balanced bilingualism in this instance reduces the efficiency advantage of the bilinguals by approximately 10% compared to the main analysis (see Table 5). Individual executive function tasks show higher variability. For instance, the bilinguals in the BDST task show a 17.7% lower gain in their efficiency scores to the monolinguals compared to the results of Table 5. Overall, the implicit assumption of balanced bilinguals that appears in the calculation of the composite scores has an important effect.

Conclusions

In this paper, we introduce a novel approach to evaluate performance in the executive functioning skills of bilingual and monolingual children. This approach is based on the frontier methodology that measures the relative efficiency of a decision-making unit (DMU) compared to the best practice, in what is termed as technical efficiency. Technical efficiency may be viewed as a composite performance indicator, which combines information from multiple indicators, represented by inputs and outputs, over a set of decision-making units (DMUs). Technical efficiency estimates are obtained via DEA and are used to benchmark the DMUs, with the efficient DMUs described as “best-practice”. Hence, it is particularly useful in performance evaluation situations where there are several alternative metrics. It is worth pointing out that an efficient DMU has the best composite performance (i.e., is technically efficient) using all the available information reflected in inputs and outputs. By contrast, the complex nature of executive function may be insufficiently captured by analysing single metrics in isolation; often leading to mixed conclusions. An alternative approach might be to construct a weighted average of several metrics. However, an issue here is that an assumption on the weighting scheme would be needed. An additional challenge is when different measurement units are present across the metrics. By contrast, DEA optimally selects the weights thereby letting the data speak for themselves, while it can handle a variety of data subject to only two restrictions. First, DEA applications require that the factors only appear either as input or output. While this is clearly visible in the case of raw data, ratios may be more challenging if for example inputs and outputs share a common denominator. Subject to the above rule, DEA can accommodate both raw data and ratios in inputs/outputs (Cook et al., 2014; Cooper et al., 2000; Dyson et al., 2001). Second, all outputs need to be quantities where “more-the-better” is applicable; the converse is true for the inputs. In our research, the executive function tests’ accuracy and response time is an example where a transformation is required to ensure this condition is met. Technical efficiency brings several important benefits to the discipline. Most importantly, it can take into account multiple tasks and multiple metrics, which define the outputs. By construction, it accounts for differences with respect to key covariates, dubbed as inputs. Being a non-parametric, linear programming technique means that it is flexible, does not rely on distributional assumptions, and is not computationally intensive.

We demonstrate the application of the frontier methodology in the context of bilingualism, by focusing on executive function tasks in 32 Greek–English bilingual children that are compared against 38 Greek monolingual children. Using the accuracy and response times of five executive function tasks spanning working memory, inhibition, and shifting, we find the bilingual cohort to be around 6.5% more efficient compared to the Greek monolinguals, which is a statistically significant difference. This suggests that the bilinguals outperform their monolingual counterparts in terms of executive function, after controlling for differences in terms of age, non-verbal intellectual ability, grammar skill, expressive vocabulary skill, receptive vocabulary skill, SES, and language use. The results are robust to a number of alternative specifications of technical efficiency (e.g., using only the accuracy metric), alternative specification of control variables (e.g., with/-out SES, language use), estimation techniques (e.g., ANCOVA, bootstrap regression, k-means nearest neighbours). To identify the benefits of technical efficiency analysis, we subject our dataset to a conventional ANCOVA / MANCOVA series of analyses. The ANCOVA suggests no distinct evidence of a bilingual superior performance, across a wide range of metrics that are in line with the recent literature. However, the MANCOVA approach owing to its multivariate nature, is able to pick up differences between the two groups. In particular, the MANCOVA and the technical efficiency with the same dependent variables are able to provide similar results; thus, highlighting the merits of technical efficiency. We also apply the technical efficiency approach to an alternative, yet related, dataset sourced from Antoniou et al. (2016). Using our technical efficiency approach we are able to replicate the qualitative conclusions of the Antoniou et al. (2016), which uses principal component analysis. We also comment on the advantages of technical efficiency relatively to principal component analysis; namely the more intuitive nature of the efficiency score, and the fact that it controls by construction for several differences between the two groups. Future research may incorporate technical efficiency analysis along the lines outlined here, expand into more tasks that would cover additional aspects of executive function.

Data availability

The data and code generated during the current study are available at: https://bangoroffice365-my.sharepoint.com/:f:/g/personal/elp4ae_bangor_ac_uk/ErsUByMXntFBobeDz60SVpQB1kZBqVzcjLyRb-2ggf6UTw?e=VoYPJ0

Notes

The comparison of bilinguals and monolinguals is not exhausted within the executive function literature, see for example Hartsuiker et al. (2004) for a comparison in terms of lexical and syntactic information and Bialystok et al. (2015) for an investigation of how bilingualism affects particular aspects of the languages used.

In other contexts, and within the standard econometrics literature, this would amount to omitted variables bias (Greene, 2003).

No clear definition on the number of participants of a large sample size exists; however all cited studies feature at least 230 participants, while Paap et al. (2014) suggest that participants in each group should be at least 180.

Paap and Greenberg (2013) identify a causality issue in this case, where it may be argued that players excel in sports because they exhibit higher executive function. Arguably, this may have been attributed to their growing up in a bilingual environment, and therefore some bilingual advantage been bestowed upon them. However, empirically testing this appears challenging.

This is not a unique problem in this research strand. In finance, a survey of 374 studies finds that a total of 56 different measures have been used to proxy firm performance (Chen et al., 2015).

Our sample size, a total of 70 children, is impacted by the fact that five executive function tasks are administered to all participants. As such, the sample size is larger than the study of Poulin-Dubois et al. (2011) who also administer multiple executive function tasks, but is smaller than the study of Duñabeitia et al. (2014) who administer a single executive function task on about 500 children. We use regression with bootstraped standard errors to correct for any small sample bias.

A few participants from either group have limited knowledge of other languages. This information is revealed to us via the parental questionnaire. The level of knowledge in these other languages is significantly inferior to the main languages under examination (i.e., English and Greek), with participants only knowing a handful of words. The level of knowledge between the Bilinguals and the Greek Monolinguals shows no significant difference between them, which suggests no potential heterogeneity induced by these participants in the analysis. As a robustness check, we exclude these participants from the analysis, and the results remain qualitatively similar.

We implement the DEA optimisation in LIMDEP. Other packages that have been used are Stata, R, Matlab as well as several dedicated software for DEA estimation (e.g, DEAP Frontier).

We also apply an alternative outlier treatment whereby we winsorise at the 1st and 99th percentiles of the response times within each executive function test. Using these response times does not challenge our results. For more detailed insights on data outlier treatments in the context of bilingualism, we direct you to Zhou and Krott (2016).

The transformation is inspired by Bayesian analysis where the inverse of standard deviation (dubbed as precision) is typically used. Other ways are also available. One would be to take the inverse of accuracy for output 1 instead. A more challenging approach would entail classifying response time as a “bad output” in line with the studies of Fukuyama and Matousek (2011). The approach of multiplying the response times by -1 (see Antoniou et al., 2016) does not work with efficiency analysis as the inputs and outputs need to be positive.

A robustness check with CS3 instead of CS2 is also performed with the results being qualitatively similar; hence this is not reported for brevity.