Abstract

Recent research has suggested that our memory systems are especially tuned to process information according to its survival relevance, and that inducing problems of “ancestral priorities” faced by our ancestors should lead to optimal recall performance (Nairne & Pandeirada, Cognitive Psychology, 2010). The present study investigated the specificity of this idea by comparing an ancestor-consistent scenario and a modern survival scenario that involved threats that were encountered by human ancestors (e.g., predators) or threats from fictitious creatures (i.e., zombies). Participants read one of four survival scenarios in which the environment and the explicit threat were either consistent or inconsistent with ancestrally based problems (i.e., grasslands–predators, grasslands–zombies, city–attackers, city–zombies), or they rated words for pleasantness. After rating words based on their survival relevance (or pleasantness), the participants performed a free recall task. All survival scenarios led to better recall than did pleasantness ratings, but recall was greater when zombies were the threat, as compared to predators or attackers. Recall did not differ for the modern (i.e., city) and ancestral (i.e., grasslands) scenarios. These recall differences persisted when valence and arousal ratings for the scenarios were statistically controlled as well. These data challenge the specificity of ancestral priorities in survival-processing advantages in memory.

The theory of evolution (Darwin, 1859) arguably represents the most influential scientific idea to date. Recently, memory researchers have attempted to relate this grand theory to the workings of our memory systems, in hopes of illuminating the ever-elusive (and therefore rarely addressed) issue of memory functionality (i.e., why did our memory systems evolve?). This represents an important endeavor because a comprehensive account of human memory hinges on explaining both the proximal mechanisms related to certain memory phenomena and the reasons why these mechanisms developed in the first place (see Anderson & Schooler, 2000).

Taking a functionalist approach, Nairne and colleagues (Nairne & Pandeirada, 2008, 2010; Nairne, Pandeirada, & Thompson, 2008; Nairne, Thompson, & Pandeirada, 2007) have shown that survival processing enhances memory performance as measured by recall, a finding that has been replicated by others (Kang, McDermott, & Cohen, 2008; Weinstein, Bugg, & Roediger, 2008; but see Butler, Kang, & Roediger, 2009, for an exception). In these incidental learning experiments, participants rated words on the basis of their relevance to certain scenarios (e.g., survival, burglary, moving) and then performed a memory test in which the rated words were recalled. Across a variety of comparable scenarios and other “deep” processing conditions, survival processing consistently led to better long-term retention than did other conditions, suggesting that our memory systems may have evolved to help us remember fitness-relevant information (see Note 1). Nairne and Pandeirada (2010) capture this general idea in their “ancestral priorities” framework.

Critically, and relevant to the present study, it has been argued that inducing the problems specifically faced by our ancestors should lead to better recall than inducing more modern problems (Weinstein et al., 2008). That is, because it is commonly believed that the majority of our cognitive development occurred during the Pleistocene era (approximately from 1.8 million to 10,000 years ago), our memory processes should be tuned to the problems faced by our ancestors during that time (e.g., avoiding predators, finding food). Indeed, Weinstein et al. presented compelling survival-processing data showing that evading predators on the grasslands (ancestor environment) led to better recall than did evading attackers in a city (modern environment). They concluded that “our memory has adapted very well to self-preservation in the type of setting in which we might have found ourselves until very recently (i.e., the grasslands), but has not yet evolved to function optimally in more modern contexts (i.e., the city)” (p. 918). This specific hypothesis, which we will call the ancestral environment hypothesis, is the primary focus of the present study, as we sought to investigate the specificity of the ancestral priorities framework (Nairne & Pandeirada, 2010).

The claim that our memory systems should be sensitive to ancestral priorities is consistent with a more general idea put forth by evolutionary theory—that nature usually sculpts specific rather than general adaptations (see Cosmides & Tooby, 1994; Futuyma & Moreno, 1988). The proposal is that for any given adaptation, a specific problem originally drove it into existence. Stated more succinctly, specific adaptations reflect specific problems. As a result, it might be expected that processing items in terms of their survival utility on the grassland leads to better retention than does processing these same items with respect to a more modern environment, because the grasslands are believed to represent the environment in which our cognitive systems were sculpted during the Pleistocene era.

The present study investigated this idea further by comparing the typical ancestral priority scenario and a modern survival scenario, with scenarios that involved threats from fictitious creatures (i.e., zombies). According to the ancestral environment hypothesis, those survival scenarios linked to our ancestral past should elicit greater recall than either of the modern survival scenarios and than those scenarios that have never been faced by our species (i.e., evading zombies). However, if recall does not differ for ancestral and modern survival scenarios, or for zombies and other threats, the specificity of ancestral priorities in survival-processing advantages in memory would be challenged. We also collected ratings of arousal and valence for these scenarios in order to examine whether any recall differences might be related to emotional processing elicited by the scenarios, an idea that others have suggested (Kang et al., 2008; Nairne et al., 2007; Weinstein et al., 2008) but that has previously remained untested.

Method

Participants and apparatus

Two hundred Colorado State University undergraduates (141 female, 59 male) took part in this study and received course credit for their participation. Their average age was 19.04 years. Stimuli were presented on PCs programmed with E-Prime software.

Materials and design

The stimuli included 34 concrete nouns taken from Nairne et al. (2007). Four of these were used as practice items; thus, the studied list included 30 items. A between-subjects design was used in which participants were randomly assigned to one of the five rating scenarios (see the scenario instructions provided below; n = 40 in each group).
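The between-subjects assignment described above can be sketched as follows. This is only an illustration, assuming a shuffled list of condition slots; the source does not say how randomization was implemented, and the function name, seed, and hyphenated condition labels are hypothetical.

```python
import random

# The five rating scenarios named in the design (labels are illustrative).
CONDITIONS = [
    "grasslands-predators",
    "grasslands-zombies",
    "city-attackers",
    "city-zombies",
    "pleasantness",
]


def assign_conditions(n_per_group=40, seed=None):
    """Return a shuffled list of condition labels, one per participant.

    Each condition appears exactly n_per_group times, so group sizes are
    equal while the order of assignment is random.
    """
    rng = random.Random(seed)  # a local generator keeps the sketch reproducible
    slots = [condition for condition in CONDITIONS for _ in range(n_per_group)]
    rng.shuffle(slots)
    return slots


# Hypothetical seed; participant i receives assignment[i].
assignment = assign_conditions(n_per_group=40, seed=2011)
```

Shuffling a fixed pool of slots, rather than drawing a condition independently for each participant, is what guarantees n = 40 in every group.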

Procedure

Participants were first informed that they would be rating words on the basis of relevance to a certain scenario. They were then given one of five rating scenarios with the following instructions:

For the grasslands–predators scenario, the instructions were as follows:

In this task we would like you to imagine that you are stranded in the grasslands of a foreign land, without any basic survival materials. Over the next few months, you’ll need to find steady supplies of food and water and protect yourself from predators. We are going to show you a list of words, and we would like you to rate how relevant each of these words would be for you in this survival situation. Some of the words may be relevant and others may not—it’s up to you to decide.

This scenario was identical in wording to the survival scenario used by Nairne et al. (2007).

For the grasslands–zombies scenario, the word predators in the scenario was replaced with the word zombies. For the city–attackers scenario, the words grasslands and predators were replaced with city and attackers, respectively (this scenario was identical in wording to the city survival scenario used by Weinstein et al., 2008). The city–zombies scenario was identical to the city–attackers scenario, except that the word attackers was replaced with zombies.

For the pleasantness ratings, participants were instructed as follows: “In this task, we are going to show you a list of words, and we would like you to rate the pleasantness of each word. Some of the words may be pleasant and others may not—it’s up to you to decide.” This was identical in wording to the instructions used by Nairne et al. (2007).

Words were presented in the center of the screen for 5 s each, and the order of their presentation was randomized for each participant. Except for the pleasantness ratings, participants were asked to rate each word on a five-point scale, where 1 = totally irrelevant and 5 = totally relevant. For the pleasantness ratings, the scale was anchored with 1 = extremely unpleasant and 5 = extremely pleasant. The rating scale appeared just below each item, and participants responded by pressing a key on the number pad. Participants were asked to respond within the 5 s the word was presented. There was no mention of a later memory test.

After the last word was rated, participants completed a brief demographic questionnaire that asked for information regarding age, sex, education, and other personal characteristics. This lasted approximately 2 min. The instructions for the recall task were then given. Participants were asked to recall on a response sheet, in any order, the words they had rated earlier. A period of 10 min was allotted for this recall task. Finally, participants (except for those in the pleasantness condition) were again presented with the scenario on which their ratings had been based. Below the scenario were two 9-point scales on which participants were asked to make two final ratings, one of arousal and the other of valence (the order was counterbalanced). For the arousal scale, 1 = excited and 9 = calm; for the valence scale, 1 = happy and 9 = sad. (These scales were modeled after the Self Assessment Manikin; Lang, Bradley, & Cuthbert, 1999.) Participants were instructed to “Please circle the number that best describes the way you would feel if you were actually in the present scenario.” The experiment concluded after these ratings were made.

Results

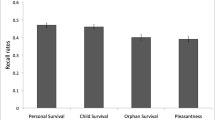

To test for recall differences among the scenarios, a 2 (threat type: zombies vs. predators/attackers) × 2 (environment: grasslands vs. city) ANOVA was conducted (see Fig. 1). The main effect of threat type was reliable, F(1, 156) = 13.23, MSE = 0.13, ηp² = .08, indicating that those scenarios with zombies as the threat elicited higher recall than those with predators/attackers (see Note 2). Follow-up t tests confirmed this observation. The grasslands–zombies scenario was associated with higher recall than the grasslands–predators scenario, t(78) = 2.38, p < .05, Cohen’s d = 0.54. Likewise, the city–zombies scenario showed higher recall than the city–attackers scenario, t(78) = 2.76, p < .05, Cohen’s d = 0.63. Turning to the environments in the scenarios, no main effect of environment was found, nor was the Threat Type × Environment interaction reliable (Fs < 1). Finally, all survival scenarios elicited higher recall than the pleasantness control condition (all Fs > 6), replicating previous survival-relevant processing advantages.
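The effect sizes reported for the recall analysis can be recovered from the test statistics alone. The sketch below assumes two standard conversions: partial eta squared from an F ratio and its degrees of freedom, and Cohen's d from an independent-samples t with equal group sizes, using the common approximation d = 2t/√df. The source does not state which formulas were used, so this is a plausible reconstruction rather than the authors' procedure; the function names are illustrative.

```python
import math


def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F ratio and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


def cohens_d_from_t(t_value, df):
    """Approximate Cohen's d for two equal-sized independent groups: 2t / sqrt(df)."""
    return 2.0 * t_value / math.sqrt(df)


# Values taken from the recall analysis reported above.
eta_threat = partial_eta_squared(13.23, 1, 156)  # main effect of threat type
d_grasslands = cohens_d_from_t(2.38, 78)         # grasslands: zombies vs. predators
d_city = cohens_d_from_t(2.76, 78)               # city: zombies vs. attackers

print(round(eta_threat, 2), round(d_grasslands, 2), round(d_city, 2))
```

Rounded to two decimals, these conversions give .08, 0.54, and 0.63, matching the reported values.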

To investigate the effects of arousal and valence on recall, we first conducted 2 (threat type: zombies vs. predators/attackers) × 2 (environment: grasslands vs. city) ANOVAs on both of these variables. These data can be found in Table 1. Turning first to arousal, a main effect of threat type was found, F(1, 156) = 12.02, MSE = 30.63, ηp² = .07, indicating that those scenarios with the threat of zombies were more arousing than those with the threats of predators/attackers. Follow-up t tests indicated that the grasslands–zombies scenario was associated with higher arousal than the grasslands–predators scenario, t(78) = 2.11, p < .05, and the city–zombies scenario showed higher arousal than the city–attackers scenario, t(78) = 2.77, p < .05. Neither the main effect of environment nor the Threat Type × Environment interaction was reliable (Fs < 1). Turning next to valence, a main effect of threat type was found, such that those scenarios with zombies as the threat were rated as more negative (i.e., sad), F(1, 156) = 6.48, MSE = 18.23, ηp² = .04. Follow-up t tests showed that both the grasslands–zombies and city–zombies scenarios were associated with higher valence than the city–attackers scenario, t(78) = 2.84, p < .05, and t(78) = 2.38, p < .05, respectively. Neither the main effect of environment nor the Environment × Threat Type interaction was reliable (Fs < 1).

To determine whether these differences in arousal and valence can account for the recall differences among the scenarios, we conducted a 2 (threat type: zombies vs. predators/attackers) × 2 (environment: grasslands vs. city) ANCOVA including arousal and valence as covariates. The main effect of threat type was still found, F(1, 154) = 14.54, MSE = 0.13, ηp² = .09. Also consistent with our earlier recall analyses, neither the main effect of environment nor the Threat Type × Environment interaction was reliable. Thus, although the zombie scenarios were more arousing and more negative, these differences do not account for the recall differences between scenarios.

To rule out possible congruency effects (see Craik & Tulving, 1975) and study time explanations for our recall findings, we checked for differences in average ratings and their corresponding response times across scenarios (these are presented in Table 1). This was done by conducting 2 (threat type: zombies vs. predators/attackers) × 2 (environment: grasslands vs. city) ANOVAs on both average ratings and rating response times. For the ratings, there was no main effect of threat type (F < 1), no main effect of environment, F(1, 156) = 1.73, MSE = 0.44, ηp² = .01, and no interaction, F(1, 156) = 1.85, MSE = 0.48, ηp² = .01. For response times, there was no main effect of threat type (F < 1); however, the main effect of environment was reliable, F(1, 156) = 4.94, MSE = 725,623, ηp² = .03, indicating that those in the grasslands scenarios took longer to rate the words than did those in the city scenarios. However, because there were no recall differences between these scenarios, we did not explore this further.

Discussion

The present experiment sought to further investigate the recent empirical finding that survival processing leads to recall advantages, a finding that fits nicely within an evolutionary framework. More specifically, we tested a hypothesis derived from Weinstein et al. (2008) that we call the ancestral environment hypothesis, which states that processing information in the context of scenarios faced by our ancestors should lead to better recall, as compared to more modern scenarios or scenarios never faced by our ancestors. The data did not support this hypothesis. Although all survival scenarios led to better recall than did a control condition (pleasantness ratings), those scenarios that included zombies as the explicit threat elicited higher recall than did scenarios including more realistic threats. Recall differences could not be explained by differences in emotional processing, because statistically controlling for arousal and valence ratings did not eliminate recall differences.

It is noteworthy that we were unable to replicate the recall differences between the grasslands–predators and city–attackers scenarios that were previously found by Weinstein et al. (2008) and Nairne and Pandeirada (2010). However, their experimental designs were somewhat different from that of the present experiment, which involved a pure between-subjects manipulation (i.e., participants in each group rated words in the context of a single scenario). For example, Nairne and Pandeirada (2010, Exp. 4) used a pure within-subjects design; both Weinstein et al. and Nairne and Pandeirada (2010, Exp. 1) had participants rate items in terms of either modern (i.e., city) or ancestral (i.e., grasslands) scenarios, and for each group, half of the items were also rated with respect to a moving scenario. Thus, the present experiment is the first to compare these scenarios using a pure between-subjects design. Furthermore, using the weighted effect size of the previously reported studies comparing the grasslands and city scenarios (d = 0.51), we had ample power in the present study to detect this difference (~.87). Finally, in a follow-up experiment, we replicated our null grasslands–city comparison, showing that correct recall associated with the grasslands–predators condition (M = .49, SD = .14) did not differ from that in the city–attackers condition (M = .55, SD = .12), t(58) = –1.69, p = .10, Cohen’s d = –0.44 (see Note 3). In fact, the direction of this effect is the opposite of that predicted by the ancestral environment hypothesis. Thus, for reasons to be determined, the previously reported findings of differences between the city and grasslands scenarios might be limited to within-subjects designs. We should note again, however, that all of the survival scenarios in the present study led to greater recall than did pleasantness ratings, even with a pure between-subjects design, so this limitation would not likely apply to fitness-relevant processing advantages more generally.
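As a rough check on the follow-up comparison, Cohen's d and the independent-samples t can be approximated from the reported summary statistics. The sketch below assumes the pooled-standard-deviation formulas and n = 30 per condition (as given in the Notes). Because the published means and standard deviations are rounded to two decimals, the result should only be expected to land near the reported t(58) = –1.69 and d = –0.44, not to reproduce them exactly. The function names are illustrative.

```python
import math


def pooled_sd(sd1, n1, sd2, n2):
    """Pooled standard deviation of two independent groups."""
    return math.sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2))


def d_and_t_from_summary(m1, sd1, n1, m2, sd2, n2):
    """Cohen's d and Student's t computed from group means, SDs, and sizes."""
    sp = pooled_sd(sd1, n1, sd2, n2)
    d = (m1 - m2) / sp
    t = d / math.sqrt(1.0 / n1 + 1.0 / n2)
    return d, t


# Grasslands-predators vs. city-attackers, using the rounded values reported above.
d, t = d_and_t_from_summary(0.49, 0.14, 30, 0.55, 0.12, 30)
print(round(d, 2), round(t, 2))
```

With these rounded inputs the sketch yields values slightly larger in magnitude than those reported, a gap that rounding of the means alone could produce. The sign is negative in both cases, consistent with numerically higher recall in the city–attackers condition.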

Before speculating on the proximal mechanisms that may have operated in this study, it is important to note that we were able to rule out several simple explanations for our findings. First, it was not the case that the zombie scenarios elicited higher relevance ratings than the others, ruling out possible congruency effects (see Craik & Tulving, 1975). Second, we were able to check for possible between-condition study time differences by comparing rating response times across the scenarios. Again, no discernible pattern was found in this analysis that would be consistent with a study time explanation of the recall data. Finally, every participant, no matter the condition, rated the same words; thus, stimulus effects can also be dismissed.

Some have speculated that survival scenarios may elicit higher emotional arousal that, in turn, may lead to higher recall (Kang et al., 2008; Nairne et al., 2007; Weinstein et al., 2008). We investigated this possibility by obtaining arousal and valence ratings for each scenario. Although the arousal and valence ratings mirrored the recall data (i.e., the zombie scenarios were more arousing than the others), these ratings could not account for the recall differences between scenarios when added as covariates. It should be noted, however, that this analysis considered only the overall ratings of arousal and valence for the scenarios; thus, it is still possible that arousal and valence had an effect at the item level that was not captured in these analyses. Nevertheless, Kang et al. found that scenarios equated on arousal and valence still showed survival-processing advantages in recall, so the available data do not support emotional processing as the mechanism responsible for survival-processing advantages (at least at a general level; see Nairne & Pandeirada, 2010, for a similar finding). This raises the question of which mechanism was responsible.

Perhaps survival scenarios that included zombies led to the activation of “death and disgust systems,” making this threat more salient (see Note 4). Indeed, zombies are likely to evoke more specific imagery than does a predator or attacker, which may be more effective in inducing survival-relevant processing. One could also argue that zombies might be more familiar to participants as a result of being popularized in film and other media recently, and this might have led to more elaborate encoding of studied items. Although this is possible, previous research has shown that the survival-processing advantage is found even though modern scenarios are rated as more familiar than survival scenarios (see Nairne & Pandeirada, 2010, Exp. 4). Regardless of potential differences in the familiarity of the scenarios or on other dimensions, it is difficult to reconcile the data we report with the idea that processing associated with our ancestral environments leads to better recall.

As previously mentioned, the present data challenge the specificity of ancestral priorities in survival-processing advantages in memory (Nairne & Pandeirada, 2010; Weinstein et al., 2008). The fact that the zombie scenarios led to higher recall than did the ancestral scenario casts doubt on the notion that the most effective survival processing is specific to ancestral environments. However, it may not be the environment per se that is important, but rather the processing engendered by the scenarios. That is, both the ancestor-consistent and ancestor-inconsistent scenarios may activate similar self-preservation and/or predator avoidance systems, thus leading to the mnemonic benefit of survival processing. However, inherent in this view is the assumption that resemblance to ancestral environments should elicit comparable levels of survival processing, a claim that is difficult to test empirically because of the inherent difficulty in determining which scenarios—real or fictitious—would most effectively activate survival-based processing. Moreover, one may wonder whether memory retention itself is the best marker to use to test the general ancestral priorities idea. Regardless, our data simply suggest that ancestral environments have no specific advantage with respect to encouraging survival-relevant memory benefits. This is not to say, however, that ancestral priorities did not shape our memory systems and that this hypothesis is wrong; rather, it suggests that using scenarios related to ancestral environments may not be an ideal way to test this idea.

Taken together, our data perhaps speak to the larger issue concerning the generality of survival processing. After all, our species is seemingly adept at surviving in all sorts of environments, and at solving myriad novel problems, not just those that confronted us in the environments in which our cognitive systems were sculpted. In other words, our species’ ability to spread both quickly and broadly may provide evidence, albeit indirect, that survival processing is context independent, not optimally designed for any one environment in particular (see Stringer & McKie, 1996). Indeed, just as environments are dynamic, so seems our ability to process fitness-relevant information.

Notes

1. It should be noted that the proximal mechanism(s) responsible for this memory advantage are still unknown and are not always a primary focus in this line of research. Nevertheless, dimensions such as distinctiveness, emotionality, and novelty might play a role. Furthermore, while this experimental paradigm instructs subjects to imagine themselves in various environments, the degree to which subjects can construct these imagined environments is unclear.

2. For purposes of analysis, predators/attackers were combined and compared to zombies to assess differences associated with threat type. One may conceptualize this comparison as realistic versus unrealistic threats.

3. Sixty participants (n = 30 in each condition) took part in this group-administered experiment. The design and procedure were identical to those of the main experiment, with a few exceptions, and only the grasslands–predators and city–attackers scenarios were used. Words were presented on a screen in the front of a room, and participants rated items on a sheet of paper, rather than using a computer keyboard. Additionally, following Nairne and Pandeirada (2010, Exp. 4), follow-up ratings were given for interestingness of scenario, ease of image creation, arousal, and familiarity. Neither the relevance ratings nor any of the follow-up ratings differed between groups, Fs < 1.

4. We thank a reviewer for suggesting this possibility.

References

Anderson, J. R., & Schooler, L. J. (2000). The adaptive nature of memory. In E. Tulving & F. I. M. Craik (Eds.), The Oxford handbook of memory (pp. 557–570). New York: Oxford University Press.

Butler, A. C., Kang, S. H. K., & Roediger, H. L., III. (2009). Congruity effects between materials and processing tasks in the survival processing paradigm. Journal of Experimental Psychology: Learning, Memory, and Cognition, 35, 1477–1486.

Cosmides, L., & Tooby, J. (1994). Origins of domain-specificity: The evolution of functional organization. In L. Hirschfeld & S. Gelman (Eds.), Mapping the mind: Domain-specificity in cognition and culture (pp. 85–116). New York: Cambridge University Press.

Craik, F. I. M., & Tulving, E. (1975). Depth of processing and the retention of words in episodic memory. Journal of Experimental Psychology: General, 104, 268–294.

Darwin, C. (1859). On the origin of species. London: John Murray.

Futuyma, D. J., & Moreno, G. (1988). The evolution of ecological specialization. Annual Review of Ecology and Systematics, 19, 207–233.

Kang, S. H. K., McDermott, K. B., & Cohen, S. M. (2008). The mnemonic advantage of processing fitness-relevant information. Memory & Cognition, 36, 1151–1156.

Lang, P. J., Bradley, M. M., & Cuthbert, B. N. (1999). International affective picture system (IAPS): Technical manual and affective ratings. Gainesville, FL: University of Florida, Center for Research in Psychophysiology.

Nairne, J. S., & Pandeirada, J. N. S. (2008). Adaptive memory: Is survival processing special? Journal of Memory and Language, 59, 377–385.

Nairne, J. S., & Pandeirada, J. N. S. (2010). Adaptive memory: Ancestral priorities and the mnemonic value of survival processing. Cognitive Psychology, 61, 1–22.

Nairne, J. S., Pandeirada, J. N. S., & Thompson, S. R. (2008). Adaptive memory: The comparative value of survival processing. Psychological Science, 19, 176–180.

Nairne, J. S., Thompson, S. R., & Pandeirada, J. N. S. (2007). Adaptive memory: Survival processing enhances retention. Journal of Experimental Psychology: Learning, Memory, and Cognition, 33, 263–273.

Stringer, C., & McKie, R. (1996). African exodus: The origins of modern humanity. New York: Holt.

Weinstein, Y., Bugg, J. M., & Roediger, H. L., III. (2008). Can the survival recall advantage be explained by basic memory processes? Memory & Cognition, 36, 913–919.

Author Note

We thank Melanie Soderstrom for her helpful comments throughout this project. We also thank Chelsea Crouch, Timothy Pallaoro, and Emily Phenicie for their efforts in data collection.

Soderstrom, N.C., McCabe, D.P. Are survival processing memory advantages based on ancestral priorities? Psychon Bull Rev 18, 564–569 (2011). https://doi.org/10.3758/s13423-011-0060-6
