Abstract

In this report, we examine whether and how altered aspects of perception and attention near the hands affect one’s learning of to-be-remembered visual material. We employed the contextual cuing paradigm of visual learning in two experiments. Participants searched for a target embedded within images of fractals and other complex geometrical patterns while either holding their hands near to or far from the stimuli. When visual features and structural patterns remained constant across to-be-learned images (Exp. 1), no difference emerged between hand postures in the observed rates of learning. However, when to-be-learned scenes maintained structural pattern information but changed in color (Exp. 2), participants exhibited substantially slower rates of learning when holding their hands near the material. This finding shows that learning near the hands is impaired in situations in which common information must be abstracted from visually unique images, suggesting a bias toward detail-oriented processing near the hands.


Perception, attentional control, and semantic processing are remarkably influenced by what we do with our hands (e.g., Bekkering & Neggers, 2002; Davoli & Abrams, 2009; Davoli, Du, Montana, Garverick, & Abrams, 2010; Fagioli, Hommel, & Schubotz, 2007; Vishton et al., 2007). One factor that seems to be of particular importance is the proximity of the hands to the objects being viewed. For example, in peripersonal space, items near the hands receive perceptual and attentional priority relative to other objects in the environment (Abrams, Davoli, Du, Knapp, & Paull, 2008; Cosman & Vecera, 2010; Reed, Grubb, & Steele, 2006; Tseng & Bridgeman, 2011). These enhancements are not attributable to incidental correlates of hand proximity such as hand visibility, response location, or gross postural differences, nor do they emerge in the presence of an arbitrary (i.e., nonhand) visual anchor (Abrams et al., 2008; Davoli & Abrams, 2009; Davoli et al., 2010; Reed et al., 2006; Tseng & Bridgeman, 2011). Instead, the enhanced processing near the hands has been theorized to be cognitively adaptive, given that the hands afford direct interaction with nearby items and that the uses and consequences of those objects can be determined by relatively minor differences in visual detail (Abrams et al., 2008; Graziano & Cooke, 2006).

In this report, we consider whether and how the altered aspects of perception and attention near the hands affect one’s learning of to-be-remembered visual material. The quality of learning and memory is inextricably linked to the quality of perceptual and attentional processing. Hence, the known effects of hand position on these components of experience should have direct consequences for learning and long-term memory, although such consequences have yet to be investigated or described. In addition to theoretical ramifications for conceptions of human learning, this question has practical significance, given that we now regularly consume information both near to (e.g., via a hard copy of text or a handheld electronic device) and far from (e.g., via a desktop computer or a television) the hands.

How might hand position influence learning? One possibility is that enhanced perception (Cosman & Vecera, 2010), prolonged processing (Abrams et al., 2008), and improved working memory (Tseng & Bridgeman, 2011) for objects near the hands will be universally beneficial for learning, because these factors lend themselves to deeper processing, and thus stronger, more complete long-term memories (e.g., Craik & Lockhart, 1972; Craik & Tulving, 1975). We tested this possibility in Experiment 1 and, previewing our results, found no evidence to support it: All else being equal, placing the hands near to (or far from) visual material does not affect learning. That is not to say, however, that hand posture does not affect learning and memory under specific conditions. Instead of postulating a universal benefit of placing to-be-learned material near the hands, it may be more appropriate to hypothesize that the effects of hand position will depend on the learning context. Because processing enhancements near the hands are thought to promote successful interactions with objects in peripersonal space (e.g., Abrams et al., 2008; Graziano & Cooke, 2006; Reed et al., 2006), these enhancements might produce a processing bias toward item-specific detail. Indeed, decisions about whether and how to interact with a nearby object are often based on detailed, rather than categorical, discriminations (e.g., eating the ripe apple but discarding the rotten one), whereas such scrutiny is not necessary for distant objects. If this account is correct, placing the hands near objects might result in a parallel bias in both learning and memory. We tested this hypothesis by creating a situation in which learning depended on the abstraction of information common to many different stimuli. If hand proximity biases processing toward item detail, this abstraction should be difficult, and as a result, learning should be impaired. The results of Experiment 2 supported this possibility.

The experiments below employed a well-established visual learning paradigm known as contextual cuing, which has by now been applied to a variety of visual environments (e.g., Brockmole & Henderson, 2006; Chun & Jiang, 1998, 1999; Goujon, Didierjean, & Marmèche, 2007). In the typical version of this paradigm, participants search for a known target embedded in a visual display. Some of the search displays are repeated several times throughout the course of the experiment, and this repetition affords observers the opportunity to learn these configurations. If learning is successful, search becomes progressively more efficient (i.e., the contextual cuing effect). The rate at which this learning takes place can therefore be tracked by observing the rate of change in response times as a function of the number of times a scene has been repeated.

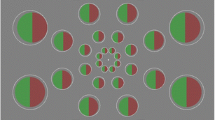

An advantage of using visual displays to assess learning is that visual stimuli can be composed of both item-specific (i.e., detailed) and item-general (i.e., categorical) information. Consider, for example, Fig. 1, which illustrates how stimulus-specific features (in this case, color) can be altered while preserving stimulus-general features (in this case, spatial structure) to produce distinct variations of the same structural theme. Past research has shown that both types of information are used by observers to generate contextual cuing effects (e.g., Ehinger & Brockmole, 2008; Jiang & Song, 2005), but, on the basis of the notion that hand proximity might bias an observer to process an object’s detail, our goal in this study was to determine whether the relative priority given to stimulus-specific features and stimulus-general patterns is altered by hand position.

Fig. 1 Representative stimuli from Experiments 1 (panels 1A and 2A) and 2 (all panels A through D). Panels A–D of each example reflect how featural information was altered while maintaining pattern information. Panels 1E and 2E of the examples illustrate details from within the highlighted portions of panels 1D and 2D, respectively. In both experiments, observers searched for and identified the letter embedded within each image (circled in white in panels 1E and 2E).

In two experiments, we engaged observers in a visual search task in which they were to identify single letters embedded within images of fractals and other complex geometrical patterns. In both experiments, we manipulated the proximity of the observers’ hands to the displays while keeping the head-to-display distance constant. Experiment 1 followed typical contextual cuing procedures, in that some images were consistently repeated throughout the experiment (see Fig. 2a). Hence, the observer could rely on any of the visual information available in the images to guide their search, and this all-else-being-equal situation allowed us to test whether merely placing the hands nearer to (or farther from) visual material affects learning. In Experiment 2, we repeated stimuli that differed only in their color content (see Fig. 2b). Hence, any learning of image–target covariance would need to be driven by the item-general pattern information common across images that each varied in their item-specific color composition (cf. Ehinger & Brockmole, 2008). If hand proximity biases processing toward item-specific detail, in this case color, it should be more difficult to appreciate the repetition of structural form when the hands are near the display than when they are farther away. As a result, closer hand proximity should result in diminished or absent learning.

Fig. 2 A representative sequence of trials for the first three blocks of Experiments 1 (panel a) and 2 (panel b). In actuality, there were 16 trials per block (8 novel and 8 repeated images); only the first and the last 3 trials per block are illustrated in this example. Repeated images in Experiment 1 retained their exact appearance across blocks, whereas repeated images in Experiment 2 changed color but maintained their structural pattern across blocks. Novel images were never repeated within an experiment.

Experiment 1

Experiment 1 considered whether hand position exerts a general effect on contextual cuing. In this experiment, eight images were each periodically repeated 16 times amid images that were presented only once. Participants either held their hands near to (i.e., alongside) the visual display or in their laps. If the perceptual and attentional enhancements associated with hand-nearness generally enhance learning, stronger and more beneficial contextual cuing effects should be observed in the hands-near condition.

Method

Participants

A group of 44 experimentally naïve University of Notre Dame undergraduates participated in exchange for course credit.

Stimuli and apparatus

The stimuli consisted of 136 full-color images of fractals and other abstract geometrical patterns (see Fig. 1, panels 1A and 2A). Within each image, a single gray “T” or “L” was presented in 9-point Arial font (see Fig. 1, panels 1E and 2E). Across scenes, these letters were located at eight possible and equally occurring (x, y) coordinates. Eight of the images were arbitrarily selected to be repeated multiple times throughout the experiment (repeated images). The remaining 128 images were shown once (novel images). The same repeated and novel images were used for all participants. Stimuli were presented on a 17-in. CRT display. Participants were seated approximately 40 cm from the display. Responses were made by pressing one of two response buttons. In separate conditions (described below), these buttons were affixed to either the sides of the monitor or to a lightweight board that participants supported on their laps.

Design and procedure

Participants completed 256 experimental trials that were divided into 16 blocks of 16 trials each (refer to Fig. 2a). Each block contained two trial types: 8 repeated trials, one for each of the eight repeated images, and 8 novel trials. Across presentations of a repeated image, the target’s location was held constant, but its identity was randomly determined. Hand position constituted a between-subjects factor. Half of the participants were randomly assigned to place their hands on the sides of the monitor while resting their elbows comfortably on a cushion (hands-near posture), and the other half placed their hands in their laps (hands-far posture). Regardless of hand position, participants conducted a visual search through the scenes for the target letter. They were instructed to indicate its identity as quickly and as accurately as possible by pressing the left button for “T” and the right button for “L.” Trials were terminated if a response was not made within 20 s.
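The block structure described above can be sketched in a few lines of Python. This is only an illustration of the design as stated (16 blocks, each with the 8 repeated images plus 8 previously unseen novel images, fixed target location and random target identity for repeated images). The image names, the location indices 0 to 7, the random draw of locations for novel images, and the function name `build_schedule` are hypothetical and are not taken from the original materials.

```python
import random

N_BLOCKS = 16
N_REPEATED = 8
N_NOVEL_PER_BLOCK = 8


def build_schedule(seed=0):
    """Return a list of 256 trial dicts following the Experiment 1 design."""
    rng = random.Random(seed)
    repeated = [f"repeated_{i}" for i in range(N_REPEATED)]
    novel = [f"novel_{i}" for i in range(N_BLOCKS * N_NOVEL_PER_BLOCK)]
    rng.shuffle(novel)

    # Assumption: each repeated image owns one of eight location indices.
    fixed_location = {img: i for i, img in enumerate(repeated)}

    schedule = []
    for block in range(1, N_BLOCKS + 1):
        start = (block - 1) * N_NOVEL_PER_BLOCK
        block_novel = novel[start:start + N_NOVEL_PER_BLOCK]
        trials = [(img, "repeated") for img in repeated]
        trials += [(img, "novel") for img in block_novel]
        rng.shuffle(trials)  # random trial order within the block
        for img, trial_type in trials:
            if trial_type == "repeated":
                location = fixed_location[img]  # constant across blocks
            else:
                # Simplification: drawn at random rather than balanced.
                location = rng.randrange(8)
            schedule.append({
                "block": block,
                "image": img,
                "type": trial_type,
                "location": location,
                "target": rng.choice("TL"),  # identity varies randomly
            })
    return schedule
```

Calling `build_schedule()` yields 256 trials in which every repeated image occurs once per block and no novel image occurs twice.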

Results and discussion

Trials on which a response was incorrect or missing were discarded (5.4%). For analysis, the 16 blocks were collapsed into four epochs. Mean response times and standard deviations are presented in Table 1. To contrast performance on novel and repeated trials, we calculated a cuing effect for each hand posture (cf. Brockmole, Hambrick, Windisch, & Henderson, 2008). This metric was a proportional measure in which the difference in search times between the novel and repeated trials was divided by the search time observed for novel trials. So, for example, a cuing effect of .33 indicated that search times for repeated trials were one-third faster than those for novel trials (see Footnote 1). Cuing scores were entered into a 2 (posture: hands near, hands far) × 4 (epoch: 1–4) mixed factors ANOVA. The results are illustrated in Fig. 3a.
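As a minimal sketch of the proportional cuing measure described above, the following Python computes (novel − repeated) / novel for each epoch. It assumes that epochs are formed from four consecutive blocks each, which the text implies but does not state explicitly. The function names and the response times in the usage note are hypothetical and are not data from the study.

```python
from collections import defaultdict


def cuing_effect(novel_rt, repeated_rt):
    """Proportional cuing effect: RT savings relative to novel trials."""
    return (novel_rt - repeated_rt) / novel_rt


def epoch_of(block, blocks_per_epoch=4):
    """Map a 1-based block number (1-16) to a 1-based epoch (1-4)."""
    return (block - 1) // blocks_per_epoch + 1


def cuing_by_epoch(trials):
    """trials: iterable of (block, trial_type, rt_ms) for correct trials."""
    rts = defaultdict(list)
    for block, trial_type, rt_ms in trials:
        rts[(epoch_of(block), trial_type)].append(rt_ms)

    effects = {}
    for epoch in sorted({e for e, _ in rts}):
        novel = rts[(epoch, "novel")]
        repeated = rts[(epoch, "repeated")]
        effects[epoch] = cuing_effect(
            sum(novel) / len(novel), sum(repeated) / len(repeated)
        )
    return effects
```

For instance, with made-up mean search times of 3,000 ms on novel trials and 2,000 ms on repeated trials, `cuing_effect(3000, 2000)` gives one-third, matching the .33 example in the text.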

The magnitude of cuing scores increased with epoch, F(3, 126) = 41.70, p < .001, ηp² = .50, indicating the presence of learning: The more times that participants saw a scene, the faster they were to search through it. No main effect of hand posture was observed, F < 1, indicating that it was not simply easier to respond in one posture than in the other, a finding that replicates prior work (e.g., Abrams et al., 2008; Davoli et al., 2010). Importantly, hand posture did not interact with epoch, F < 1, and tests of the simple effects revealed no significant differences between hand postures at any epoch, all ts < 1.68, ps > .10, indicating that neither the rate nor the magnitude of learning was affected by the nearness of the hands to the visual display. Thus, the enhanced perceptual, attentional, and short-term-memory benefits associated with items near the hands (Abrams et al., 2008; Cosman & Vecera, 2010; Reed et al., 2006; Tseng & Bridgeman, 2011) do not necessarily affect learning and memory.

Experiment 2

In Experiment 2, we considered the possibility that the relationship between hand position and learning depends on the nature of the to-be-learned information. Specifically, we tested whether hand nearness biases observers to process item-specific detail (in this case, color) at the expense of commonalities that exist across visual displays (in this case, structural pattern).

Method

Participants

A new set of 44 University of Notre Dame undergraduates participated in exchange for course credit.

Stimuli and apparatus

The novel stimuli used in Experiment 1 were also used in Experiment 2. Sixteen versions of each of the eight repeated images were created that differed only in their color components through a series of pixelwise manipulations in CIE L*a*b* color space (see Fig. 1 for examples). CIE L*a*b* color space represents any color independently of luminance along two color-opponent axes: green to red (a*) and blue to yellow (b*). Seven transformed pictures were produced using axis swap (e.g., changing yellow to red) and axis invert (e.g., changing yellow to blue) operations (see Oliva & Schyns, 2000). These seven altered versions and the original version of the scene were then further transformed by rotating the color axes 45° in L*a*b* color space (see Ehinger & Brockmole, 2008), yielding the remaining eight versions. The apparatus was unchanged from that of Experiment 1.
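The color manipulation can be illustrated on a single pixel's chromatic coordinates. The sketch below is one plausible reading of the procedure, not the authors' code: it assumes that the original plus seven transformed versions correspond to the eight combinations of swapping the a* and b* axes and inverting each axis, and that the other eight versions are these same eight rotated by 45° in the a*b* plane. L* is left untouched, and the conversion between RGB and L*a*b* that a full image pipeline would need is omitted. The function names are hypothetical.

```python
import math
from itertools import product


def rotate(a, b, degrees):
    """Rotate a chromatic coordinate (a*, b*) about the origin."""
    t = math.radians(degrees)
    return (a * math.cos(t) - b * math.sin(t),
            a * math.sin(t) + b * math.cos(t))


def sixteen_variants(a, b):
    """Return 16 (a*, b*) pairs; the first is the unchanged original."""
    base = []
    # Assumed enumeration: optional axis swap, then optional inversion
    # of each axis, giving 2 x 2 x 2 = 8 versions including the original.
    for do_swap, sign_a, sign_b in product((False, True), (1, -1), (1, -1)):
        x, y = (b, a) if do_swap else (a, b)
        base.append((sign_a * x, sign_b * y))
    # Rotating each of the eight versions by 45 degrees gives eight more.
    rotated = [rotate(x, y, 45) for x, y in base]
    return base + rotated
```

Under these assumptions every variant keeps the same chroma (distance from the neutral axis) as the original pixel, so only hue changes; for example, `sixteen_variants(20.0, 50.0)` returns 16 distinct hue-shifted coordinates.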

Design and procedure

All details of the Method were identical to those in Experiment 1, except that each of the 16 possible versions of each repeated image (as opposed to 16 identical repetitions of each repeated image, as in Exp. 1) was shown once, in random order, across the 16 blocks (see Fig. 2b).

Results and discussion

The data exclusions (5.2% of trials) and analyses were the same as those used in Experiment 1. Mean response times and standard deviations are presented in Table 1. Cuing scores were entered into a 2 (posture: hands near, hands far) × 4 (epoch: 1–4) mixed factors ANOVA. The results are shown in Fig. 3b (see Footnote 2).

The magnitude of cuing scores increased with epoch, F(3, 126) = 16.81, p < .001, ηp² = .29, indicating the standard contextual cuing effect. Again, no main effect of hand posture was observed, F < 1. However, unlike in Experiment 1, hand posture did interact with epoch, F(3, 126) = 3.00, p = .03, ηp² = .07. The rate of learning was shallower when the hands were held alongside the display as opposed to in the lap. Whereas performance did not differ between the hands-near and hands-far conditions in Epochs 1 and 2, clear advantages for the hands-far group were observed in Epochs 3 and 4. Though hand posture did not affect learning when repeated scenes remained identical across repetitions (Exp. 1), hand posture did influence learning when contexts varied in color, despite having identical pattern structure (Exp. 2). Indeed, we confirmed this contrast by observing a three-way interaction within a 2 (experiment) × 2 (hand position) × 4 (epoch) ANOVA, F(3, 252) = 3.00, p = .03, ηp² = .03. These results indicate that observers are more detail oriented near the hands, which hinders them from recognizing the repetition of structural patterns across images that differ in the surface feature of color.
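As a quick consistency check on the reported statistics, partial eta squared can be recovered from an F ratio and its degrees of freedom using the standard identity ηp² = (F × df_effect) / (F × df_effect + df_error). The sketch below applies this identity to the values reported for both experiments. The helper names are hypothetical, and the error degrees of freedom are computed under the assumption of a standard mixed design in which the within-subjects error term has (N − groups) × (levels − 1) degrees of freedom.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared implied by an F ratio and its dfs."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


def within_error_df(n_participants, n_groups, n_levels):
    """Error df for within-subjects effects in a mixed ANOVA."""
    return (n_participants - n_groups) * (n_levels - 1)


# 44 participants in 2 posture groups, 4 epochs -> 126 error df.
df_single = within_error_df(44, 2, 4)
# 88 participants across 2 experiments x 2 postures -> 252 error df.
df_combined = within_error_df(88, 4, 4)

for label, f_value, df_error in [
    ("Exp. 1 epoch", 41.70, df_single),
    ("Exp. 2 epoch", 16.81, df_single),
    ("Exp. 2 posture x epoch", 3.00, df_single),
    ("Three-way interaction", 3.00, df_combined),
]:
    eta = partial_eta_squared(f_value, 3, df_error)
    print(f"{label}: {eta:.2f}")
```

The recomputed effect sizes round to the values reported in the text (.50, .29, .07, and .03).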

General discussion

This report has demonstrated that visual learning is affected by the proximity with which the hands are held to the to-be-learned material in peripersonal space, and it is the first to show how the perceptual and attentional consequences associated with hand posture can influence higher-order cognitive functions such as learning and memory. Despite the enhanced perception (Cosman & Vecera, 2010), prolonged processing (Abrams et al., 2008), attentional prioritization (Reed et al., 2006), and improved visual short-term memory (Tseng & Bridgeman, 2011) afforded to objects near the hands, we did not find a corresponding advantage in learning in a contextual cuing paradigm that tapped visual long-term memory. This is perhaps surprising, since enriched processing can be reasonably expected to produce more thorough encoding of attended material, which should then manifest through better learning. Instead, we observed that, at best, hand proximity bears no relation to visual learning and visual memory (Exp. 1), and at worst, learning near the hands is impaired in situations in which observers must encode and represent information common to many visual displays that are otherwise unique (Exp. 2). This suggests that processing near the hands is biased toward item-specific detail, a finding that meshes well with the proposal that perceptual and attentional enhancements near the hands are also item-specific (Abrams et al., 2008; Davoli et al., 2010; Graziano & Cooke, 2006).

Given the putative role that enriched processing near the hands plays in facilitating successful interaction with nearby objects, it is possible that attention and perception near the hands may be enhanced at the expense of higher-order cognitive tasks such as learning and memory (see Footnote 3). This would be consistent with the notion of two largely dissociable cortical streams of visual processing (cf. Goodale & Milner, 1992; Ungerleider & Mishkin, 1982), one primarily for visuospatial operations (e.g., where an object resides in space or how it may be acted upon) and one primarily for memory-based operations (e.g., what an object is). Whereas accurate and dynamic perception of, and attention to, the space around the hands would be needed for real-time interactions with nearby objects, memory-based operations could interfere with such processing and might, accordingly, be suppressed (see also Davoli et al., 2010, who proposed a similar trade-off to explain impoverished semantic processing near the hands).

Why might human cognition have evolved so that perception, attention, and memory are affected by the position of one’s hands, and does this state of affairs have any practical consequences for behavior in modern society? Detail-oriented processing allows us to discriminate between objects that may otherwise be identical. Because the usefulness of objects can be determined on the basis of minor changes in detail (e.g., a ripe as compared to a rotting apple), it would have been evolutionarily beneficial for an object in the hands to be analyzed at the item-specific level (perhaps even as if it was new) each time. In contrast, it is sufficient to process objects far away from the body in categorical terms, because precise visual details only become more relevant as possible interactions with an object become more probable. In addition to evolutionary significance, detail-oriented processing near the hands may have major implications for emerging developments in applied fields—for example, integrative educational techniques involving haptics (e.g., Minogue & Jones, 2006), the use of hand-held devices in dual-task scenarios (e.g., texting while driving; Drews, Yazdani, Godfrey, Cooper, & Strayer, 2009), and multisensory interventions for people with intellectual and developmental disabilities (e.g., Snoezelen rooms; Lotan, Gold, & Yalon-Chamovitz, 2009).

Notes

An advantage of this approach is that it controls for potential between-group differences in baseline search speed. Although such differences were not present in Experiment 1, we introduce this metric here because baseline differences in search rate do exist in Experiment 2. We note that analysis of raw response times yields conclusions entirely consistent with those described here.

Considering novel trials only, participants in the hands-near condition took, on average, 3,477 ms to find the target. In contrast, in the hands-far condition, participants required 4,079 ms, on average, to find the targets on novel trials [t(42) = 3.60, p < .001]. The proportional cuing effect corrects for this baseline difference. Additionally, we conducted a median split analysis that compared raw response times from the fastest responders in the hands-far condition with those of the slowest responders in the hands-near condition. In this analysis, the baseline search rate difference was eliminated, and both groups averaged 3,705 ms to find the targets on novel trials. The conclusions from this analysis are entirely consistent with those reported in the text. Main effects of trial type [F(1, 20) = 9.54, p < .001] and epoch [F(3, 60) = 8.50, p < .001] were observed, as were trial type × epoch [F(3, 60) = 9.51, p < .001] and trial type × epoch × hand position [F(3, 60) = 2.95, p = .04] interactions.

We thank an anonymous reviewer for suggesting this possibility.

References

Abrams, R. A., Davoli, C. C., Du, F., Knapp, W. K., & Paull, D. (2008). Altered vision near the hands. Cognition, 107, 1035–1047.

Bekkering, H., & Neggers, S. F. W. (2002). Visual search is modulated by action intentions. Psychological Science, 13, 370–374.

Brockmole, J. R., Hambrick, D. Z., Windisch, D. J., & Henderson, J. M. (2008). The role of meaning in contextual cueing: Evidence from chess expertise. Quarterly Journal of Experimental Psychology, 61, 1886–1896.

Brockmole, J. R., & Henderson, J. M. (2006). Using real-world scenes as contextual cues for search. Visual Cognition, 13, 99–108.

Chun, M. M., & Jiang, Y. (1998). Contextual cueing: Implicit learning and memory of visual context guides spatial attention. Cognitive Psychology, 36, 28–71.

Chun, M. M., & Jiang, Y. (1999). Top-down attentional guidance based on implicit learning of visual covariation. Psychological Science, 10, 360–365.

Cosman, J. D., & Vecera, S. P. (2010). Attention affects visual perceptual processing near the hand. Psychological Science, 21, 1254–1258.

Craik, F. I. M., & Lockhart, R. S. (1972). Levels of processing: A framework for memory research. Journal of Verbal Learning and Verbal Behavior, 11, 671–684.

Craik, F. I. M., & Tulving, E. (1975). Depth of processing and the retention of words in episodic memory. Journal of Experimental Psychology: General, 104, 268–294.

Davoli, C. C., & Abrams, R. A. (2009). Reaching out with the imagination. Psychological Science, 20, 293–295.

Davoli, C. C., Du, F., Montana, J., Garverick, S., & Abrams, R. A. (2010). When meaning matters, look but don’t touch: The effects of posture on reading. Memory & Cognition, 38, 555–562.

Drews, F. A., Yazdani, H., Godfrey, C. N., Cooper, J. M., & Strayer, D. L. (2009). Text messaging during simulated driving. Human Factors, 51, 762–770.

Ehinger, K. A., & Brockmole, J. R. (2008). The role of color in visual search in real-world scenes: Evidence from contextual cueing. Perception & Psychophysics, 70, 1366–1378.

Fagioli, S., Hommel, B., & Schubotz, R. I. (2007). Intentional control of attention: Action planning primes action-related stimulus dimensions. Psychological Research, 71, 22–29.

Goodale, M. A., & Milner, A. D. (1992). Separate visual pathways for perception and action. Trends in Neurosciences, 15, 20–25. doi:10.1016/0166-2236(92)90344-8

Goujon, A., Didierjean, A., & Marmèche, E. (2007). Contextual cueing based on specific and categorical properties of the environment. Visual Cognition, 15, 257–275. doi:10.1080/13506280600677744

Graziano, M. S. A., & Cooke, D. F. (2006). Parieto-frontal interactions, personal space, and defensive behavior. Neuropsychologia, 44, 845–859.

Jiang, Y., & Song, J. (2005). Hyperspecificity in visual implicit learning: Learning of spatial layout is contingent on item identity. Journal of Experimental Psychology: Human Perception and Performance, 31, 1439–1448.

Lotan, M., Gold, C., & Yalon-Chamovitz, S. (2009). Reducing challenging behavior through structured therapeutic intervention in the controlled multi-sensory environment (Snoezelen): Ten case studies. International Journal on Disability and Human Development, 8, 377–392.

Minogue, J., & Jones, M. G. (2006). Haptics in education: Exploring an untapped sensory modality. Review of Educational Research, 76, 317–348.

Oliva, A., & Schyns, P. G. (2000). Diagnostic colors mediate scene recognition. Cognitive Psychology, 41, 176–210.

Reed, C. L., Grubb, J. D., & Steele, C. (2006). Hands up: Attentional prioritization of space near the hand. Journal of Experimental Psychology: Human Perception and Performance, 32, 166–177.

Tseng, P., & Bridgeman, B. (2011). Improved change detection with nearby hands. Experimental Brain Research, 209, 257–269.

Ungerleider, L. G., & Mishkin, M. (1982). Two cortical visual systems. In D. J. Ingle, M. A. Goodale, & R. J. W. Mansfield (Eds.), Analysis of visual behavior (pp. 549–586). Cambridge: MIT Press.

Vishton, P. M., Stephens, N. J., Nelson, L. A., Morra, S. E., Brunick, K. L., & Stevens, J. A. (2007). Planning to reach for an object changes how the reacher perceives it. Psychological Science, 18, 713–719.

Author note

We thank Laura Philipp for her invaluable help with data collection.


Cite this article

Davoli, C.C., Brockmole, J.R. & Goujon, A. A bias to detail: how hand position modulates visual learning and visual memory. Mem Cogn 40, 352–359 (2012). https://doi.org/10.3758/s13421-011-0147-3

DOI: https://doi.org/10.3758/s13421-011-0147-3