Abstract

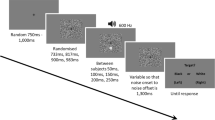

Cross-modal correspondences are associations between stimulus features across sensory modalities. Previous studies have shown that cross-modal correspondences modulate reaction times for detecting and identifying stimuli in one modality when uninformative stimuli from another modality are present. However, it is unclear whether this modulation reflects changes in modality-specific perceptual processing. We used two psychophysical timing judgment tasks to examine the effects of audiovisual correspondences on visual perceptual processing. In Experiment 1, we conducted a temporal order judgment (TOJ) task in which participants judged which of two visual stimuli, presented at various stimulus onset asynchronies (SOAs), appeared first. In Experiment 2, we conducted a simultaneity judgment (SJ) task in which participants reported whether the two visual stimuli were simultaneous or successive. We also presented a task-irrelevant auditory stimulus either simultaneously with or before the first visual stimulus, and manipulated the congruency between the auditory and visual stimuli. In Experiment 1, the points of subjective simultaneity (PSSs) between the two visual stimuli, estimated from the TOJ task, shifted according to the audiovisual correspondence between auditory pitch and the visual features of vertical location and size. However, these audiovisual correspondences did not affect the PSSs estimated from the SJ task in Experiment 2. This discrepancy between the two tasks can be explained by a response bias, triggered by audiovisual correspondence, that affected only the TOJ task. We conclude that audiovisual correspondence does not appear to modulate visual perceptual timing, and that the congruency effects reported in previous studies may not arise from changes in modality-specific perceptual processing.
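
The response-bias explanation above can be illustrated with a simple observer model. The sketch below is a hypothetical illustration, not the authors' analysis, and assumes that the perceived SOA equals the physical SOA plus a perceptual shift δ (for example, prior entry of a congruent stimulus A) plus Gaussian noise with standard deviation σ. In the TOJ task the observer reports "A first" when the perceived SOA exceeds a decision criterion c; in the SJ task the observer reports "simultaneous" when the absolute perceived SOA is smaller than a window w. All function names and parameter values (σ = 40 ms, w = 60 ms, shifts of 20 ms) are illustrative assumptions, not estimates from the study.

```python
import math

# Hypothetical observer model (illustrative assumption, not the study's analysis).
# SOA convention: soa = onset(B) - onset(A) in ms, so positive values mean A leads.


def phi(x: float) -> float:
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def p_toj_a_first(soa: float, delta: float, criterion: float, sigma: float) -> float:
    """P("A first") when perceived SOA = soa + delta + N(0, sigma^2) is compared to a criterion."""
    return phi((soa + delta - criterion) / sigma)


def p_sj_simultaneous(soa: float, delta: float, window: float, sigma: float) -> float:
    """P("simultaneous") when the perceived SOA must fall inside +/- window."""
    mu = soa + delta
    return phi((window - mu) / sigma) - phi((-window - mu) / sigma)


def toj_pss(delta: float, criterion: float, sigma: float,
            lo: float = -500.0, hi: float = 500.0, tol: float = 1e-6) -> float:
    """SOA at which P("A first") = 0.5, found by bisection (the curve rises with soa)."""
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if p_toj_a_first(mid, delta, criterion, sigma) < 0.5:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def sj_pss(delta: float, window: float, sigma: float,
           lo: float = -300.0, hi: float = 300.0, step: float = 0.01) -> float:
    """SOA that maximises P("simultaneous"), found by a grid search."""
    n = int(round((hi - lo) / step))
    grid = (lo + i * step for i in range(n + 1))
    return max(grid, key=lambda s: p_sj_simultaneous(s, delta, window, sigma))


if __name__ == "__main__":
    sigma, window = 40.0, 60.0  # assumed noise SD and simultaneity window (ms)
    scenarios = {
        "no effect": (0.0, 0.0),
        "perceptual shift": (20.0, 0.0),   # A perceived 20 ms earlier
        "response bias": (0.0, -20.0),     # liberal criterion for reporting "A first"
    }
    for name, (delta, criterion) in scenarios.items():
        print(f"{name:17s} TOJ PSS = {toj_pss(delta, criterion, sigma):6.1f} ms   "
              f"SJ PSS = {sj_pss(delta, window, sigma):6.1f} ms")
```

Under these assumptions the TOJ PSS equals c − δ, so a genuine perceptual shift and a pure response bias both move it by about −20 ms and cannot be distinguished from TOJ data alone. The SJ PSS sits at −δ and does not depend on c, which is why a TOJ shift combined with no SJ shift is consistent with a response bias rather than altered perceptual timing.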

Data availability

The data supporting the current study are available at https://osf.io/7qgku/

Code availability

The code supporting the current study is available from the corresponding author upon request.

Funding

This work was supported by the Japan Society for the Promotion of Science, Grant Numbers 20J14196 and 22J00456, awarded to Kyuto Uno.

Author information

Contributions

K.U. and K.Y. designed the experiments, analyzed the data, and discussed the results. K.U. performed the experiments and wrote the manuscript. K.Y. refined the manuscript.

Ethics declarations

Conflicts of interest / Competing interests

Not applicable.

Ethics approval

The project was approved by the Ethical Committee of the Department of Psychology, The University of Tokyo.

Consent to participate

Informed consent was obtained from all participants.

Consent for publication

Informed consent was obtained from all participants and authors.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Open practice statements

The data and materials that supported the current study are available (https://osf.io/7qgku/). None of the experiments was preregistered.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Uno, K., Yokosawa, K. Does cross-modal correspondence modulate modality-specific perceptual processing? Study using timing judgment tasks. Atten Percept Psychophys 86, 273–284 (2024). https://doi.org/10.3758/s13414-023-02812-3

DOI: https://doi.org/10.3758/s13414-023-02812-3