Figure 1.

For each learning episode, a training subject is randomly sampled. For each training shot, the radar phase information is mapped to the reference belt signal (ref.) via a C-VAE. Through a dense layer, the ANN also regresses the central breath frequency of the extracted respiration signal, learning from the value estimated on the ideal belt signal. The latent space mapping is thus constrained to this frequency, whose estimate is also used in the prediction phase.

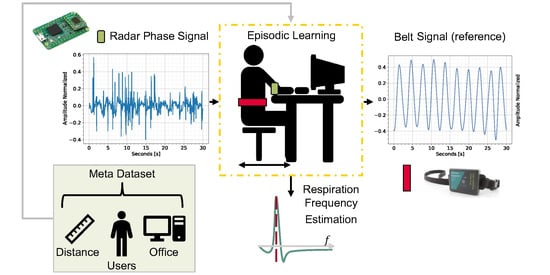

Figure 2.

The diagram shows the main steps of the implementation. For a chosen scenario (room and user), several data sessions are collected with a synchronized radar and a reference respiration belt. For the multi-output ANN, the labels consist of the belt reference signals and the central breath frequencies estimated from the pure belt reference. The data from fourteen users are then used to train an ANN episodically using Meta-L, while the data from the remaining ten users are used solely for testing.

Figure 3.

The BGT60TR13C radar system (a) delivers filtered, mixed, and digitized information from each Rx channel. The BGT60TR13C radar (b) is mounted on top of the evaluation board.

Figure 4.

Recording setup. A synchronized radar system and respiration belt are used to collect ten 30-second sessions per user and distance. The distance ranges used in data collection (up to 30 or 40 cm) refer to the distance between the chest and the radar board.

Figure 5.

Preprocessing pipeline. First, the phase information is unwrapped from the raw radar data. The respiration signal and its central breath frequency are then estimated by Meta-L, which uses the data collected with the respiration belt only in the training phase.
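
The unwrapping step named in this caption can be illustrated with a minimal sketch. It assumes a one-dimensional phase sequence in radians and removes 2π jumps between consecutive samples; the function name `unwrap_phase` and the synthetic 0.33 Hz chest motion sampled at 20 frames per second are illustrative assumptions, not the authors' implementation.

```python
import math

def unwrap_phase(wrapped):
    """Remove 2*pi jumps so that consecutive samples differ by less than pi."""
    if not wrapped:
        return []
    unwrapped = [wrapped[0]]
    offset = 0.0
    for prev, curr in zip(wrapped, wrapped[1:]):
        delta = curr - prev
        if delta > math.pi:        # jumped upward across the +/-pi boundary
            offset -= 2.0 * math.pi
        elif delta < -math.pi:     # jumped downward across the +/-pi boundary
            offset += 2.0 * math.pi
        unwrapped.append(curr + offset)
    return unwrapped

# Synthetic chest motion (assumed values): the phase excursion exceeds pi,
# so the wrapped measurement contains discontinuities.
true_phase = [4.0 * math.sin(2.0 * math.pi * 0.33 * n / 20.0) for n in range(200)]
wrapped = [math.atan2(math.sin(p), math.cos(p)) for p in true_phase]
recovered = unwrap_phase(wrapped)
```

The sketch only works when the true phase changes by less than π between frames, which holds here because the synthetic motion is slow relative to the frame rate.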

Figure 6.

The yellow lines indicate the defined range bin limits, and the red line indicates the detected maximum bin per frame. The range plots are generated after clutter removal. In (a), the subject did not move much during the session. In (b), the range limits vary according to the user’s distance from the radar board.

Figure 7.

Band-pass bi-quadratic (biquad) filter. The diagram in (a) depicts the linear flow of the biquad filter, where the output at time instant n is determined by the two previous input (I) and output (O) values. The gain vs. frequency plot of a biquad band-pass filter, obtained for a given quality factor Q and a sampling frequency of 20 Hz at a center frequency of 0.33 Hz, is shown as a reference in (b).
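
As an illustration of the filter structure in (a), the following sketch builds a band-pass biquad with unity gain at the center frequency, using the widely used audio-EQ cookbook coefficient formulas. The 0.33 Hz center frequency comes from the caption; the 20 Hz sampling frequency is assumed from the radar frame rate, and `Q = 1.0` is a placeholder because the value used by the authors is not available here.

```python
import cmath
import math

def bandpass_biquad(f_center, f_sample, q):
    """Return normalized (b, a) coefficients of a band-pass biquad."""
    w0 = 2.0 * math.pi * f_center / f_sample
    alpha = math.sin(w0) / (2.0 * q)
    a0 = 1.0 + alpha
    b = (alpha / a0, 0.0, -alpha / a0)
    a = (1.0, -2.0 * math.cos(w0) / a0, (1.0 - alpha) / a0)
    return b, a

def biquad_filter(samples, b, a):
    """Direct form I: each output uses the two previous inputs and outputs."""
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for x0 in samples:
        y0 = b[0] * x0 + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        out.append(y0)
        x2, x1 = x1, x0
        y2, y1 = y1, y0
    return out

def gain(f, f_sample, b, a):
    """Magnitude of the filter frequency response at frequency f."""
    z = cmath.exp(-1j * 2.0 * math.pi * f / f_sample)
    return abs((b[0] + b[1] * z + b[2] * z * z) / (a[0] + a[1] * z + a[2] * z * z))

Q = 1.0                 # placeholder quality factor (assumption)
F_SAMPLE_HZ = 20.0      # assumed equal to the radar frame rate
F_CENTER_HZ = 0.33      # center frequency quoted in the caption
b, a = bandpass_biquad(F_CENTER_HZ, F_SAMPLE_HZ, Q)
filtered_dc = biquad_filter([1.0] * 2000, b, a)   # a constant input is rejected
```

Evaluating `gain` over a frequency grid reproduces a curve of the kind shown in (b): unity at the center frequency and attenuation toward DC and toward higher frequencies.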

Figure 8.

Example of sliding window generation for instantaneous bpm estimation on a recorded session. The radar signal has been filtered using the ideal central breath frequency obtained from the belt. Unlike the belt, the radar is not attached to the user during recordings but to the desk. This results in local shifts of the breathing peaks in the signal, caused by millimeter-scale movements of the user. The window (in purple in the plot) is shown paler on the two peaks closest to the calculated peaks’ mean distance. Some slight corruption due to user motion is also noticeable at the beginning of the session.
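
One simple way to turn a window of the respiration signal into an instantaneous bpm value is to convert the mean distance between breathing peaks into a rate. The sketch below assumes a naive local-maximum peak detector, a 20 Hz frame rate, and a 300-frame window; these choices and the helper names are illustrative and not taken from the authors' windowing procedure.

```python
import math

def find_peaks(signal):
    """Indices of simple local maxima (no smoothing or prominence check)."""
    return [
        i for i in range(1, len(signal) - 1)
        if signal[i - 1] < signal[i] >= signal[i + 1]
    ]

def bpm_from_window(window, frame_rate_hz):
    """Breaths per minute from the mean peak-to-peak distance in frames."""
    peaks = find_peaks(window)
    if len(peaks) < 2:
        return None  # not enough peaks to measure a distance
    distances = [later - earlier for earlier, later in zip(peaks, peaks[1:])]
    mean_distance = sum(distances) / len(distances)
    return 60.0 * frame_rate_hz / mean_distance

FRAME_RATE_HZ = 20.0   # assumed frame rate
# Synthetic 30 s session breathing at 0.25 Hz, i.e. 15 breaths per minute.
session = [math.sin(2.0 * math.pi * 0.25 * n / FRAME_RATE_HZ) for n in range(600)]
window = session[:300]  # hypothetical 15 s window
bpm = bpm_from_window(window, FRAME_RATE_HZ)
```

Sliding the window frame by frame over the session yields one bpm value per window position. A motion-corrupted window shifts or adds peaks, which is why such estimates become unreliable at the start of the session shown here.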

Figure 9.

Comparison of instantaneous bpm between respiration belt and radar (with ideal central breath frequency) for a recording session. The x-axis corresponds to the difference between the number of frames in the session and the sliding window length. The radar signal corruption flag variable is plotted in green. At the beginning of the session, the radar signal is motion-corrupted (as shown in Figure 8) and thus does not lead to a reliable bpm. The reference belt signal, on the other hand, is more robust to motion in the workplace use case. In this case, the motion performed was the movement of the hands toward the desk.

Figure 10.

Two-component t-SNE representation of the Breath Meta-Dataset radar data. The circles represent the training users, while the crosses represent the testing users for the Meta-L. No user-specific feature clusters are visible under the t-SNE assumptions. The t-SNE was obtained with a perplexity of 20 and 7000 iterations [42].

Figure 11.

Graphical representation of single-episode learning with C-VAE. The unwrapped radar phase is mapped to the respiration belt signal using the signal reconstruction term. The regularization term pulls the latent space closer to a standard multivariate normal distribution. The central breath frequency regression allows the parameterization to depend on the respiration signal.
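
The three terms described in this caption can be combined as in the following sketch. It assumes a mean-squared-error reconstruction term, the closed-form KL divergence between a diagonal Gaussian and a standard normal, and a squared-error frequency regression term; the weights `beta` and `gamma` and all names are hypothetical and do not reproduce the authors' exact loss.

```python
import math

def cvae_episode_loss(belt, reconstruction, mu, log_var,
                      freq_true, freq_pred, beta=1.0, gamma=1.0):
    """Reconstruction + KL regularization + frequency regression (sketch)."""
    # Reconstruction: how well the decoded signal matches the belt reference.
    recon = sum((r - b) ** 2 for r, b in zip(reconstruction, belt)) / len(belt)
    # Regularization: KL( N(mu, sigma^2) || N(0, I) ) for a diagonal Gaussian.
    kl = -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, log_var))
    # Regression: squared error on the central breath frequency.
    freq = (freq_pred - freq_true) ** 2
    return recon + beta * kl + gamma * freq

# A perfect reconstruction with a standard-normal latent code costs nothing.
zero_loss = cvae_episode_loss(
    belt=[0.0, 1.0, 0.0], reconstruction=[0.0, 1.0, 0.0],
    mu=[0.0, 0.0], log_var=[0.0, 0.0], freq_true=0.33, freq_pred=0.33,
)
# Moving one latent mean away from zero is penalized by the KL term only.
kl_only = cvae_episode_loss(
    belt=[0.0], reconstruction=[0.0],
    mu=[1.0], log_var=[0.0], freq_true=0.33, freq_pred=0.33,
)
```

The encoder here is assumed to output the log-variance rather than the standard deviation, a common choice that keeps the variance positive without an explicit constraint.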

Figure 12.

Chosen C-VAE topology. The latent space representation is constrained both by the reconstruction of x with respect to the reference and by the ideal central frequency of breathing, y. The decoder layers are an up-sampled mirror version of the encoder layers.

Figure 13.

Examples of latent space generation. Examples of radar phase input (a) and generated latent spaces (b) of size 32 are shown. The latent spaces are obtained after the model generalization training. Each representation consists of the mean values and the standard deviations. Starting from the top of the representations and moving toward the right, the first 32 pixels represent the mean values, while the last 32 represent the standard deviations.

Figure 14.

MAML 1–shot experiment, box plots. Learning trends of Meta-L, shown as box plots versus episodes (evaluation loop) for the Breath Meta-Dataset. The boxes in (a) depict the trend for users in the training set (training tasks). In (b), the trend for the users of the test set (test tasks) is shown. The box’s mid-line represents the median value, while the small green triangle represents the mean.

Figure 15.

MAML 1–shot experiment histograms for the first (a) and last (b) set of 300 episodes. The box plots in the topmost plots also show outliers as small circles outside the whiskers. The middle plots show a Gaussian approximation of the distribution. The lower plots show the true histograms, which do not follow a Gaussian distribution. The labels q1 and q3 denote the first and third quartiles, respectively.

Figure 16.

Loss as a function of the number of detected breathing spikes over the 30 s sessions for the 10 test users. The non-uniform ranges at the base of the box plots were chosen so that even the least common classes (1–4 and 12–14) contain at least 4 examples. The upper plot (a) is obtained by fitting the 1–shot Meta-L model to new users, while the middle (b) and lower (c) plots are obtained by 5–shots and 10–shots adaptation, respectively. For the first two plots, the circles that lie outside the box plot whiskers represent the outliers. Plot (c) shows no visible outliers.

Figure 17.

Standard prediction examples obtained after 1–shot test user adaptation with MAML. The top plots show the prediction versus the respiration belt reference, while the bottom plots display the estimated bpm and corruption flag. Legends, which also apply to the plots on the right, are placed in the plots on the left. An example of optimal prediction with radar information characterized by little motion corruption is shown in (a). The respiration signal is recovered even in the presence of some corruption, as in (b), thanks to the formulation.

Figure 18.

Edge prediction examples obtained after 1–shot test user adaptation with MAML. The top plots show the prediction versus the respiration belt reference, while the bottom plots display the estimated bpm and corruption flag. Legends, which also apply to the plots on the right, are placed in the plots on the left. In (a), there are six visible peaks in the belt signal (blue), while in (b) there are thirteen peaks. In these examples, the algorithm performs worse than in standard cases. This is mainly due to the lack of edge data as prior knowledge during episodic learning. In the bpm estimation in example (a), a shorter estimate can be seen for the belt than for the radar. This is due to the computation of two distinct windows for radar and belt, as explained in Section 3.6.

Table 1.

BGT60TR13C radar board: parameter configuration for breath sensing.

| Quantity | Value |
|---|---|
| number of transmitters | 1 |
| number of receivers | 3 |
| number of chirps | 2 |
| samples per chirp | 200 |
| center freq. | 60 GHz |
| sampling freq. ADC | 2 MHz |
| frame rate | 20 Hz |
| chirp time duration | 150 µs |
| bandwidth | 4 GHz (58–62 GHz) |
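
A few quantities follow directly from the values in Table 1. The sketch below computes the theoretical range resolution c / (2B), the time needed to acquire the samples of one chirp, and the number of frames in a 30-second session; the variable names are illustrative, and the assumption that the full 4 GHz sweep contributes to the resolution is a simplification.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0

bandwidth_hz = 4e9         # 58 to 62 GHz sweep
samples_per_chirp = 200
adc_rate_hz = 2e6
frame_rate_hz = 20.0
session_length_s = 30      # session duration used in the recordings

# Theoretical range resolution of an FMCW radar: c / (2 * B).
range_resolution_m = SPEED_OF_LIGHT_M_S / (2.0 * bandwidth_hz)

# Time needed to digitize one chirp, to compare with the 150 µs chirp duration.
sampling_window_s = samples_per_chirp / adc_rate_hz

# Number of radar frames available per recording session.
frames_per_session = int(session_length_s * frame_rate_hz)
```

Under these assumptions the range resolution is about 3.75 cm, the 200 samples take 100 µs to acquire (within the 150 µs chirp duration), and each session provides 600 frames.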

Table 2.

MAML experiments: average loss over the last 300 episodes of test task evaluation, averaged over 3 repetitions, with 95% confidence intervals.

| Loss / N–Shots | 1–Shot | 5–Shots | 10–Shots |
|---|---|---|---|
| Loss | 84.11 ± 6 | 83.92 ± 1 | 83.39 ± 1 |

Table 3.

MAML experiments: average adaptation time, in milliseconds, over the last 300 episodes of test task evaluation, averaged over 3 repetitions.

| Time / N–Shots | 1–Shot | 5–Shots | 10–Shots |
|---|---|---|---|
| Adaptation Time [ms] | 797 | 2,614 | 5,877 |

Table 4.

MAML experiments: average loss L, without (No Corrupt.) and with (Corrupt.) corruption, over the last 300 episodes of test task evaluation, averaged over 3 repetitions, with 95% confidence intervals.

| Loss / N–Shots | 1–Shot | 5–Shots | 10–Shots |
|---|---|---|---|
| L (No Corrupt.) | 226.30 ± 5 | 224.53 ± 5 | 221.97 ± 5 |
| L (Corrupt.) | 84.11 ± 6 | 83.92 ± 1 | 83.39 ± 1 |

Table 5.

MAML 1–shot experiments: average loss and number of trainable parameters for varying latent dimension. The values are obtained over the last 300 episodes of test task evaluation. The results are averaged over 3 repetitions and provided with 95% confidence intervals.

| Parameters / Latent Dim. | 16 | 32 | 64 | 128 |
|---|---|---|---|---|
| Loss | 86.75 ± 5 | 84.11 ± 6 | 84.31 ± 14 | 85.19 ± 34 |
| Trainable Params. | 382,658 | 739,074 | 1,451,906 | 2,877,570 |

Table 6.

Comparison of optimization-based experiments: average loss over the last 300 episodes of test task evaluation, averaged over 3 repetitions, with 95% confidence intervals.

| Algorithm / N–Shots | 1–Shot | 5–Shots | 10–Shots |
|---|---|---|---|
| Reptile | 100.02 ± 2 | 90.78 ± 2 | 86.95 ± 1 |
| MAML | 86.52 ± 5 | 83.68 ± 1 | 83.45 ± 1 |
| MAML | 85.86 ± 10.7 | 82.9 ± 3 | 88.16 ± 15 |
| MAML | 84.11 ± 6 | 83.92 ± 1 | 83.39 ± 1 |
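
For readers unfamiliar with the optimization-based algorithms compared in Table 6, the following toy sketch shows the Reptile meta-update on a one-parameter regression problem: adapt to a sampled task with a few gradient steps, then move the shared initialization a fraction of the way toward the adapted weights. The tasks (lines with different slopes), learning rates, and function names are hypothetical and unrelated to the authors' C-VAE setup.

```python
import random

def inner_adapt(w, slope, steps=5, lr=0.1):
    """A few gradient steps on the mean squared error of y = w * x for one task."""
    xs = [-1.0, -0.5, 0.5, 1.0]
    for _ in range(steps):
        grad = sum(2.0 * (w * x - slope * x) * x for x in xs) / len(xs)
        w -= lr * grad
    return w

def reptile(task_slopes, episodes=300, meta_lr=0.1, seed=0):
    """Reptile: move the initialization toward the task-adapted weights."""
    rng = random.Random(seed)
    w = 0.0
    for _ in range(episodes):
        slope = rng.choice(task_slopes)      # sample one training task per episode
        w_adapted = inner_adapt(w, slope)    # inner loop on that task
        w += meta_lr * (w_adapted - w)       # meta-update toward the adapted weights
    return w

train_slopes = [1.0, 2.0, 3.0]               # toy "training users"
w_meta = reptile(train_slopes)
# Adapting to an unseen task with a single gradient step.
w_new_user = inner_adapt(w_meta, slope=2.5, steps=1)
```

MAML differs in that its meta-update differentiates the post-adaptation loss with respect to the initialization, whereas Reptile only uses the difference between adapted and initial weights.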