1. Introduction

Synthetic aperture radar (SAR) systems play an important role in remote sensing, geoscience, reconnaissance, and surveillance applications, and have attracted wide attention in both the military and civilian fields [1,2,3,4,5]. Ship target recognition and classification in SAR images is of significant value for ocean remote sensing, since it can assist national agencies in managing marine vessels and in monitoring marine resource extraction [6,7]. However, because SAR imaging depends mainly on target scattering characteristics, electromagnetic waveforms, and imaging algorithms, ship targets in SAR images usually lack rich detail, and the differences between ship types are not pronounced [7]. Ship target recognition and classification in SAR images therefore remains highly challenging.

Figure 1 shows some examples of ship targets from a public SAR image dataset, the OpenSARShip2.0 dataset [8].

Traditional SAR ship classification methods rely on manually designed features (such as geometric structure features, electromagnetic scattering features, transform domain features, and local invariant features), but their generalization ability is usually weak [9,10]. With the development of deep learning (DL), the artificial neural network (ANN) is gradually replacing traditional methods and becoming the mainstream choice for SAR ship classification [10]. However, DL-based SAR ship classification also faces significant challenges: the feature differences between SAR ship classes are small, the class distribution is severely imbalanced, and the number of SAR ship images is limited. As a result, directly applying classical image classification networks to SAR ship classification often yields poor performance. Because SAR images are available in different polarization modes and in different data domains, some researchers have turned to fusing image data from different sources to improve classification performance, for example by fusing SAR ship images acquired with different polarization modes [11]. In [12,13,14,15], the Siamese network architecture is used to fuse information from multi-polarized SAR ship images and thereby improve network performance. Reference [12] fuses information by element-wise multiplication, while references [13,14] propose the Bernoulli pooling and grouping Bernoulli pooling methods, respectively, to fuse the information in SAR images with different polarization modes. In [15], a cross-attention mechanism is applied to fuse the multi-polarized information and to strengthen the network's attention to key features. In [16], a squeeze-and-excitation Laplacian pyramid network with dual-polarization feature fusion (SE-LPN-DPFF) is proposed for ship classification in SAR images and achieves state-of-the-art classification performance. In addition, SAR ship classification methods can use traditional feature extraction operators to enhance the ship features and combine them with the feature learning ability of neural networks to achieve better performance. The HOG-ShipCLSNet model proposed in [17] rests on the argument that, even though neural networks have a strong feature extraction capability, traditional manually designed features should still be used to improve classification accuracy. This model applies a global attention mechanism to the features at different levels, feeds each level of features into a classifier, and averages the results of the classifiers. At the same time, the features extracted by the histogram of oriented gradients (HOG) operator are subjected to principal component analysis and then sent to the final classifier to obtain the final classification result. Based on the same idea, the MSHOG operator is proposed in [18] to extract SAR ship features and integrate them into neural networks.

On the other hand, as DL technology has developed rapidly, the capability of DL models has improved greatly, but at the cost of considerable energy consumption. A serious problem in the artificial intelligence (AI) field is that training DL models is expensive, and this problem will become more severe as the computational demands of DL models grow. The rising energy cost of ANN computing is first attributable to the emergence of increasingly complex ANN models. In 2018, the natural language processing model BERT [19] released by Google had 340 M parameters. In 2021, the Switch Transformer [20] had $1.6 \times 10^{6}$ M parameters. In 2023, the multimodal large model GPT-4 released by OpenAI drew a huge global response. Although its parameter count has not been announced, industry insiders speculate that it is at least comparable to that of GPT-3 ($1.75 \times 10^{5}$ M) [21]. Huge ANN models typically require a significant amount of computing power, and the resulting computational cost brings a sharp increase in energy consumption, which makes it difficult to deploy such models on embedded platforms with limited energy resources. Moreover, designing a network model typically requires repeated adjustment and retraining, which further multiplies the energy consumption.

Although traditional ANNs have made breakthroughs in many tasks, the energy cost of ANN computing makes them difficult to deploy on resource-limited devices and applications. To address this issue, the spiking neural network (SNN), regarded as the third-generation ANN, has been proposed [22]. SNNs are based on a brain-like computing framework: they use spiking neurons as the basic computing unit and transmit information through sparse spike sequences. They are regarded as a new generation of green AI technology with lower energy consumption that can run on neuromorphic chips. Inspired by the operating mechanism of biological neurons, the core idea of the SNN is to simulate the encoding and transmission of information between biological neurons using spike sequences and spiking functions. SNNs can simulate the information representation and processing of the human brain more faithfully, and constitute a brain-like computing model with high biological plausibility, event-driven characteristics, and lower energy consumption [23]. Currently, SNN research focuses mainly on computer vision tasks with optical images. Inspired by histogram clustering, Buhmann et al. [24] first proposed an SNN model based on integrate-and-fire (IF) neurons, which encodes image segmentation results in the spike firing frequency. Based on the time-to-first-spike encoding strategy, Cui et al. [25] proposed two encoding methods (linear and nonlinear), which convert the grayscale values of image pixels into discrete spike sequences for image segmentation. Kim et al. [26] first applied SNNs to target detection and proposed an SNN-based detection model called Spiking-YOLO. By using techniques such as channel-wise normalization and signed neurons with imbalanced thresholds, it provides faster and more accurate message transmission between neurons, and achieves better convergence and lower energy consumption than the ANN model. Luo et al. [27] first proposed the SNN-based target tracker SiamSNN, which has good accuracy and can achieve real-time tracking on the neuromorphic chip TrueNorth [28]. Fang et al. [29] proposed a residual network based on spiking neurons for image classification by adding SNN neuron layers between the traditional residual units. Bu et al. [30] proposed a high-accuracy, low-latency ANN-to-SNN conversion method, and the converted spiking ResNet18 achieved a recognition accuracy above 0.96 on CIFAR-10, an image classification benchmark. The low energy consumption demonstrated by SNNs gives them great application prospects for SAR ship recognition and classification. At present, various low-power neuromorphic chips support the deployment of SNNs, such as TrueNorth [28], lynxiHP300, and ROLLS [31], providing strong support for promoting SNN applications.

A review of the literature indicates that there is almost no research on SNNs based on the Siamese network paradigm for SAR ship classification. To address the excessive number of model parameters and the high energy consumption of traditional deep learning methods for SAR ship classification, this paper presents an energy-efficient SAR ship classification paradigm, called Siam-SpikingShipCLSNet, that combines the SNN with the Siamese network architecture for the first time in the field of SAR ship classification. It combines the energy efficiency of the SNN with the performance benefit of using a Siamese neural network to fuse features from dual-polarized SAR images. Additionally, we transfer the feature fusion strategy from CNN-based Siamese neural networks to the SNN domain and analyze the effects of various spiking feature fusion methods on Siamese SNNs. Finally, an end-to-end error backpropagation optimization method based on the surrogate gradient is adopted to train the model. The rest of this paper is organized as follows. In Section 2, an energy-efficient and high-performance ship classification strategy is proposed. In Section 3, experiments are conducted on the OpenSARShip2.0 dataset, and the experimental results are presented and analyzed. Finally, conclusions are presented in Section 4.

2. Energy-Efficient and High-Performance Ship Classification Strategy

SNNs can run on neuromorphic chips and offer low energy consumption, making them suitable for deployment on resource-limited embedded platforms.

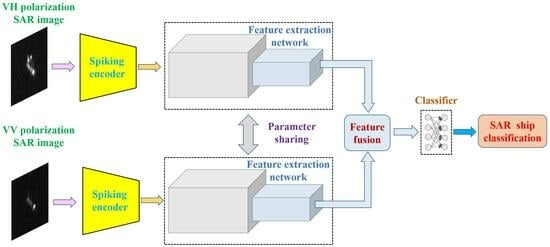

Figure 2 shows the basic architecture of the proposed Siam-SpikingShipCLSNet model. The main body of the model is a pair of parameter-shared feature extraction networks, which extract paired ship features from the dual-polarized SAR images. The extracted ship features are fused (the fusion methods are described in Section 2.4) and then sent to the subsequent classification network for ship classification.

Compared with Siamese networks based on second-generation neural networks such as the convolutional neural network (CNN), Siam-SpikingShipCLSNet differs significantly. Firstly, it is based on the third-generation neural network, the SNN, and uses discrete spikes to transmit information between neurons. When a spike arrives at a neuron, it charges the neuron. If the membrane potential exceeds the firing threshold, the neuron emits a spike. If no spike arrives at a neuron, the neuron remains in a resting state and neither works nor consumes energy. The proposed model is based on frequency (rate) encoding: it considers mainly the frequency at which neurons emit spikes, without considering the temporal structure of the spike sequences. This neural encoding method is a quantitative measure of the neuron output. Since the firing frequency of a neuron within the simulation duration can be mapped to the continuous values used by the ANN, we can draw on the achievements of ANN research [32]. Secondly, the model input is a two-dimensional image composed of continuous values, which must be encoded into a two-dimensional spiking matrix that represents the image information and can be processed by the SNN. At each simulation time step, the backbone network produces a spiking feature map composed of discrete 1/0 spikes. The spiking feature map is then sent to the classification network, causing the spiking neurons in the output layer to emit spikes that represent the network's judgment of the category of the current input image. Meanwhile, compared with ANNs, SNNs based on frequency encoding are extended in the temporal dimension. Usually, the number of simulation steps is set to $T$, which means that inference on an input image is repeated $T$ times. In this way, $T$ output spiking vectors, each with a length equal to the number of categories, are obtained at the output of the classification network. Finally, the classification result of the model is determined by calculating the firing frequency of each spiking neuron in the output layer over the simulation time $T$. The category corresponding to the neuron with the highest firing frequency is the model's classification result.
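The rate-based decoding described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' code: the function name `decode_by_firing_rate` and the toy spike trains are assumptions introduced here.

```python
def decode_by_firing_rate(output_spikes):
    """Return (predicted_class, firing_rates) from T output spike vectors.

    output_spikes: list of T lists, each holding one 0/1 value per class neuron.
    """
    num_steps = len(output_spikes)
    num_classes = len(output_spikes[0])
    # Count the spikes each output neuron emitted over the whole simulation.
    counts = [sum(step[k] for step in output_spikes) for k in range(num_classes)]
    # Firing frequency = spike count / number of simulation steps T.
    rates = [count / num_steps for count in counts]
    # The class of the most active output neuron is the prediction.
    predicted = max(range(num_classes), key=lambda k: rates[k])
    return predicted, rates


# Hypothetical output of a 3-class network simulated for T = 4 steps.
spikes = [
    [0, 1, 0],
    [1, 1, 0],
    [0, 1, 0],
    [0, 0, 1],
]
predicted, rates = decode_by_firing_rate(spikes)
print(predicted, rates)
```

In this toy case the second neuron fires in three of the four steps, so it determines the predicted class.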

2.1. Input Image Spiking Encoding

SNNs use discrete spikes to transmit information, while the pixel values of the input image are continuous, so the image must be encoded into spikes. This paper adopts a stateless Poisson encoding method for this step. The encoder outputs a two-dimensional 0/1 matrix, in which an element value of one indicates the presence of a spike at that position. The probability of a spike occurring at a given position equals the pixel value at the corresponding position in the normalized input image: the larger the pixel value, the greater the likelihood of emitting a spike. The Poisson encoder is defined as follows:

$$P(i,j) = \begin{cases} 1, & X_{\mathrm{img}}(i,j) > \xi(i,j) \\ 0, & \text{otherwise} \end{cases}, \qquad \xi(i,j) \sim U(0,1) \tag{1}$$

where $P$ represents the two-dimensional spiking matrix output by the encoder, $X_{\mathrm{img}}$ represents the normalized input image, and $U(0,1)$ represents a uniform distribution on $[0,1]$. $\xi$ is a random variable drawn from this distribution, and its values are used as thresholds to binarize the continuous pixel values. Over $T$ simulation steps, a two-dimensional spiking sequence of length $T$ is obtained. Some image information may be lost when the continuous pixel values are converted into a discrete two-dimensional map, but this loss is greatly reduced after $T$ encoding steps.
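The following Python sketch illustrates the stateless Poisson encoding described above and why the information loss shrinks with more steps: the average spike rate at each pixel approaches the normalized pixel value. The function names, the 2 × 2 toy image, and the choice of 2000 steps are assumptions made for illustration only.

```python
import random


def poisson_encode(image, rng):
    """One simulation step: emit a spike where the pixel exceeds a uniform threshold."""
    return [[1 if pixel > rng.random() else 0 for pixel in row] for row in image]


def encode_sequence(image, num_steps, seed=0):
    """Encode the same normalized image independently at every simulation step."""
    rng = random.Random(seed)
    return [poisson_encode(image, rng) for _ in range(num_steps)]


def mean_rate(frames):
    """Average the 0/1 frames over time to estimate the per-pixel firing rate."""
    num_steps = len(frames)
    rows, cols = len(frames[0]), len(frames[0][0])
    return [
        [sum(frame[i][j] for frame in frames) / num_steps for j in range(cols)]
        for i in range(rows)
    ]


# Hypothetical normalized image with pixel values in [0, 1].
image = [[0.0, 0.2], [0.8, 1.0]]
frames = encode_sequence(image, num_steps=2000)
rates = mean_rate(frames)
print(rates)
```

A single frame is a coarse binary picture, while the time-averaged rates recover the pixel intensities to within sampling noise.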

Figure 3 gives an example visualizing the spiking results of Poisson encoding. Although the two-dimensional spiking matrix differs from the original image, it preserves the main information of the original image. At the same time, isolated spikes appear in the background because of speckle (coherent spots). In a practical automatic target recognition pipeline, the front-end target detector accurately locates the target and then crops it, which can avoid these isolated spikes.

2.2. Spiking Neuron Model

An SNN is composed of spiking neurons, and the choice of spiking neuron affects the overall performance of the SNN model. Typical spiking neuron models include the Hodgkin-Huxley (H-H) model [33], the IF model [34], and the leaky integrate-and-fire (LIF) model [35]. The H-H model has strong biological interpretability, but it is too complex to construct. The IF model is overly simple: it directly uses a capacitor to describe the working process of a neuron, without describing the ion diffusion that exists in biological neurons. The LIF model improves on the IF model by considering another physiological factor: the cell membrane is not a perfect capacitor, and charge slowly leaks through the cell membrane over time, allowing the membrane voltage to return to its resting potential [35]. In this paper, the LIF spiking neuron model is adopted to build a Siamese SNN for SAR ship classification. The main actions of a spiking neuron are charging, discharging, and resting, so the model needs to describe each of them. The differential equation of the LIF model is given by:

$$\tau \frac{dV(t)}{dt} = -\left(V(t) - V_{rest}\right) + R\,I(t) \tag{2}$$

where $\tau = RC$ is the time constant, $C$ is the membrane capacitance, and $R$ is the input impedance. $V(t)$ is the capacitor voltage, $V_{rest}$ is the resting potential, and $I(t)$ is the input current. For a constant input current $I$ and a voltage that starts from $V_{rest}$ at $t = 0$, the change in the voltage during the charging process can be described by the following:

$$V(t) = V_{rest} + R\,I\left(1 - e^{-t/\tau}\right) \tag{3}$$

If the membrane voltage exceeds the threshold, the neuron emits a spike, and the membrane voltage then drops to the reset potential $V_{reset}$.

In practice, discrete difference equations are generally used to describe the charging, discharging, and resetting actions of LIF spiking neurons:

$$H[t] = f\left(V[t-1], X[t]\right) \tag{4}$$

$$S[t] = \Theta\left(H[t] - V_{th}\right) \tag{5}$$

$$V[t] = H[t]\left(1 - S[t]\right) + V_{reset}\,S[t] \tag{6}$$

Equation (4) represents the change in the neuronal potential during charging, where $H[t]$ is the membrane potential after charging at time step $t$. $X[t]$ is the spike input to the neuron, $f$ is the state update function of the neuron during charging, $V[t]$ is the membrane voltage of the neuron, and $S[t]$ is the spike emitted by the neuron, with a value of 0 or 1. Equation (5) gives the spike firing state of the neuron, where $\Theta$ is the Heaviside step function and $V_{th}$ is the threshold voltage for spike emission. Equation (6) represents the hard reset of the voltage to $V_{reset}$ after a spike is emitted.
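The charge, fire, and hard-reset steps described above can be traced with a small Python sketch. The specific leaky charging rule and the parameter values (time constant 2, threshold 1, reset potential 0) are assumptions chosen for illustration, since the text leaves the state update function generic; they follow a common discretization of the LIF neuron.

```python
def lif_step(v_prev, x, tau=2.0, v_threshold=1.0, v_reset=0.0):
    """Advance one LIF neuron by one time step; return (spike, new_voltage)."""
    # Charging: integrate the input while leaking toward the reset potential.
    h = v_prev + (x - (v_prev - v_reset)) / tau
    # Firing: Heaviside step on the distance to the threshold.
    spike = 1 if h >= v_threshold else 0
    # Hard reset: the voltage returns to v_reset after a spike.
    v = v_reset if spike else h
    return spike, v


def run_lif(inputs, tau=2.0, v_threshold=1.0, v_reset=0.0):
    """Simulate the neuron over a sequence of inputs and collect its spikes."""
    v = v_reset
    spikes = []
    for x in inputs:
        spike, v = lif_step(v, x, tau, v_threshold, v_reset)
        spikes.append(spike)
    return spikes


# A constant input of 1.5 charges the neuron to 0.75, then past the threshold.
print(run_lif([1.5] * 6))
# With no input the neuron stays at rest and never fires.
print(run_lif([0.0] * 5))
```

Under these assumed parameters the neuron fires on every second step for the constant input, which shows how the input strength is translated into a firing frequency.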

2.3. Backbone Network Structure

The overall model of the proposed Siam-SpikingShipCLSNet is shown in Figure 2. The backbone network shares its parameters between the two branches and extracts features from the input SAR ship images; its specific structure is shown in Figure 4. The backbone is constructed by stacking three spiking convolution blocks. Each spiking convolution block consists of a convolutional layer, a batch normalization layer, an LIF neuron layer, and a spiking pooling layer. Max pooling is selected for the spiking pooling layer based on [29], which suggests that the information processing behavior of max pooling is consistent with that of SNNs and is beneficial for fitting temporal data. The backbone adopts a shallow design to keep the network lightweight. Moreover, because SAR ship images do not contain rich feature information, a deeper network brings little performance improvement while consuming more computing resources. In addition, this paper adopts a surrogate gradient training method, in which the gradient is approximate. If a deep network architecture were used, the approximation error of the gradient would accumulate and amplify as the network depth increases, which would affect the convergence of the model. The data pass through the three spiking convolution blocks to obtain a spiking feature map with a down-sampling rate of eight, which characterizes the feature information of the input image. After spiking feature fusion, the result is sent to the classification network for ship classification.
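As a rough illustration of how three pooling stages yield a down-sampling rate of eight, the Python sketch below applies max pooling to a binary spike map three times. The 2 × 2 window with stride 2 and the assumption that the convolutions preserve the spatial size are illustrative choices, not details confirmed by the text; the convolution, batch normalization, and LIF layers are omitted.

```python
def spike_max_pool_2x2(spike_map):
    """2x2 max pooling with stride 2 on a 0/1 map: keep any spike in the window."""
    rows, cols = len(spike_map), len(spike_map[0])
    return [
        [
            max(spike_map[i][j], spike_map[i][j + 1],
                spike_map[i + 1][j], spike_map[i + 1][j + 1])
            for j in range(0, cols - 1, 2)
        ]
        for i in range(0, rows - 1, 2)
    ]


def downsample(spike_map, num_blocks=3):
    """Apply one pooling stage per spiking convolution block."""
    for _ in range(num_blocks):
        spike_map = spike_max_pool_2x2(spike_map)
    return spike_map


# Hypothetical 8 x 8 spike map containing a single spike.
spike_map = [[0] * 8 for _ in range(8)]
spike_map[5][2] = 1
pooled = downsample(spike_map)
print(len(pooled), len(pooled[0]), pooled)
```

On binary maps, max pooling behaves like a logical OR over each window, so a single spike survives all three stages while the side length shrinks by a factor of eight.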

2.4. Spiking Feature Fusion

The key to the proposed Siamese SNN is the fusion of the spiking features extracted by the backbone network. This paper proposes six fusion methods based on the data fusion ideas used in ANNs, as shown in Figure 5.

Consider the two spiking feature maps that the backbone network extracts from the dual-polarized ship images, one per polarization branch, together with the mapping implemented by the fully connected layer. Under each fusion mode, the network output can be expressed in terms of these quantities by the corresponding formula in Table 1.

The first method concatenates the dual-polarized spiking feature maps along the channel dimension and then feeds them into the subsequent classifier. The second method takes the element-wise maximum over the corresponding channels of the dual-polarized spiking feature maps. In other words, as long as the presynaptic neuron in either branch of the Siamese network emits a spike, that spike is retained. This processing aims to make full use of the useful features and to raise the firing rate of the spiking neurons. The third method performs element-wise multiplication over the corresponding channels of the dual-polarized spiking feature maps, which means that a spike is transmitted to the postsynaptic neurons only when the presynaptic neurons in both branches emit spikes. This processing aims to enhance the features common to the dual-polarized images. Compared with the first three methods, the latter three fusion methods not only fuse the spiking features of the two polarizations, but also send the original spiking features into the classification network for fusion at the output layer.
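The three basic fusion operations (concatenation, element-wise maximum, and element-wise multiplication) can be sketched on toy binary spike maps as follows. The function names, the variable names `vv` and `vh` for the two polarization branches, and the tiny one-channel maps are assumptions for illustration; the three variants that also fuse at the output layer are not shown.

```python
def elementwise(op, a, b):
    """Apply a binary op to two [channel][row][col] spike maps of equal shape."""
    return [
        [[op(x, y) for x, y in zip(row_a, row_b)] for row_a, row_b in zip(ch_a, ch_b)]
        for ch_a, ch_b in zip(a, b)
    ]


def fuse_concat(a, b):
    """Stack the channels of both branches; the classifier sees all of them."""
    return a + b


def fuse_max(a, b):
    """Keep a spike if either branch fired (logical OR on 0/1 values)."""
    return elementwise(max, a, b)


def fuse_mul(a, b):
    """Keep a spike only if both branches fired (logical AND on 0/1 values)."""
    return elementwise(lambda x, y: x * y, a, b)


# Hypothetical one-channel 2 x 2 spike maps from the two polarization branches.
vv = [[[1, 0], [0, 1]]]
vh = [[[1, 1], [0, 0]]]
print(fuse_concat(vv, vh))
print(fuse_max(vv, vh))
print(fuse_mul(vv, vh))
```

The maximum raises the number of retained spikes, whereas the product keeps only the spikes shared by both branches, matching the stated aims of the two methods.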

2.5. Model Learning Methods

SNNs cannot be trained directly with gradient-descent backpropagation because the spiking function of their neurons is non-differentiable. Thus, algorithms for the error backpropagation of spike sequences must be designed. SNN learning and optimization methods mainly include learning rules based on error backpropagation, learning rules based on spike-timing-dependent plasticity (STDP), and ANN-to-SNN conversion. Because the proposed Siam-SpikingShipCLSNet model is a convolutional SNN based on frequency encoding, an end-to-end error backpropagation optimization algorithm based on the surrogate gradient is adopted.

2.5.1. Surrogate Gradient Training

The spikes emitted by the spiking neurons during forward propagation transmit the information. The firing function of the neurons is a step function whose derivative is an impulse function, so it cannot be used directly in a backpropagation optimization algorithm based on gradient descent [36]. For the proposed model based on frequency encoding, the firing frequency of the neurons in the output layer characterizes the classification result. Thus, this paper first calculates the error between the output firing frequency and the label, and then updates the network parameters through error backpropagation based on the surrogate gradient. In this algorithm, the original firing function of the spiking neurons in (5) is used in the forward inference of the model, while an approximate differentiable function is used to compute the gradients that update the network parameters during backpropagation. In this paper, the model uses the arctangent function as the surrogate function, which is as follows:

$$g(x) = \frac{1}{\pi}\arctan\left(\frac{\pi}{2}\alpha x\right) + \frac{1}{2} \tag{7}$$

where $\alpha$ takes a value of 2 in this experiment, and the graph of the surrogate function is shown in Figure 6. The surrogate function has a shape similar to that of the step function, and its derivative is a sharp curve similar to the impulse function.
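To make the forward/backward split concrete, the Python sketch below evaluates the step function used in the forward pass next to an arctangent surrogate and its derivative used in the backward pass. It assumes the commonly used form $g(x) = \frac{1}{\pi}\arctan(\frac{\pi}{2}\alpha x) + \frac{1}{2}$ with $\alpha = 2$; the function names are hypothetical, and the gradient is checked numerically rather than through any training framework.

```python
import math


def heaviside(x):
    """Forward pass: the actual firing function, 1 at or above threshold."""
    return 1.0 if x >= 0 else 0.0


def atan_surrogate(x, alpha=2.0):
    """Smooth stand-in for the step function (assumed arctangent form)."""
    return math.atan(math.pi / 2 * alpha * x) / math.pi + 0.5


def atan_surrogate_grad(x, alpha=2.0):
    """Backward pass: derivative of the surrogate, peaked around x = 0."""
    return alpha / (2.0 * (1.0 + (math.pi / 2 * alpha * x) ** 2))


# x is the distance of the membrane potential from the firing threshold.
for x in (-1.0, -0.1, 0.0, 0.1, 1.0):
    print(x, heaviside(x), round(atan_surrogate(x), 4), round(atan_surrogate_grad(x), 4))
```

The derivative is largest at the threshold and decays quickly away from it, so only neurons near firing receive a substantial gradient.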

2.5.2. Loss Function

In SNNs based on frequency encoding, more strongly excited neurons output higher firing frequencies. Therefore, in the output spiking neuron layer, the category represented by the neuron with the highest firing frequency can be used as the classification result. This logic is consistent with one-hot labels, in which the element at the position of the true category has the highest value, 1, and all other elements are 0. Therefore, the distance between the firing frequency of the output-layer neurons and the label can be used to measure the prediction error. In this paper, the loss function is the mean square error:

$$L = \frac{1}{N}\sum_{i=1}^{N}\left(O_i - Y_i\right)^2, \qquad O = \frac{Q}{T} \tag{8}$$

where $Q$ is a vector that represents the number of spikes emitted by each neuron in the output layer. $O$ represents the network output vector, which is equal to the vector $Q$ divided by the number of simulation steps $T$, $N$ represents the number of categories, and $Y$ represents the label.
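A minimal Python sketch of this loss is given below: the spike counts are divided by the number of simulation steps to obtain firing rates, which are then compared with a one-hot label. The function name, the averaging over categories, and the toy numbers are assumptions made for illustration.

```python
def firing_rate_mse(spike_counts, num_steps, label_index):
    """Mean square error between output firing rates and a one-hot label."""
    num_classes = len(spike_counts)
    # Network output: spike count of each output neuron divided by T.
    rates = [count / num_steps for count in spike_counts]
    # One-hot label: 1 at the true category, 0 elsewhere.
    one_hot = [1.0 if k == label_index else 0.0 for k in range(num_classes)]
    # Average of the squared differences over the categories (assumed normalization).
    return sum((o - y) ** 2 for o, y in zip(rates, one_hot)) / num_classes


# Hypothetical 3-class output after T = 4 steps, true class index 1.
loss = firing_rate_mse([1, 3, 1], num_steps=4, label_index=1)
print(loss)
# An output that fires only the correct neuron at every step gives zero loss.
print(firing_rate_mse([0, 4, 0], num_steps=4, label_index=1))
```

Minimizing this loss pushes the correct neuron to fire at every step and the other neurons to stay silent.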