Semantic Segmentation of Multispectral Images via Linear Compression of Bands: An Experiment Using RIT-18

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Data

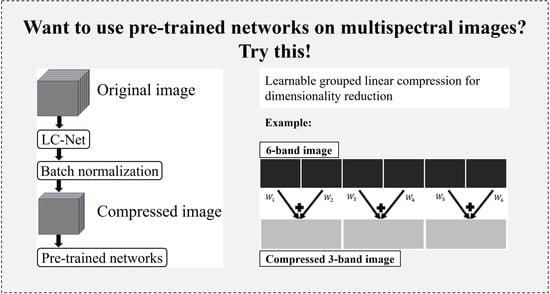

2.2. LC-Net

2.3. Network Structure

2.4. Training Setting

2.5. Comparisons

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Dataset | Sensor(s) | GSD (m) | Number of Classes | Number of Bands | Distribution of Bands |
|---|---|---|---|---|---|
| Vaihingen | Green/Red/IR | 0.09 | 5 | 3 | Green, Red, IR |
| Potsdam | VNIR | 0.05 | 5 | 4 | Blue, Green, Red, NIR |
| Zurich Summer | QuickBird | 0.61 | 8 | 4 | Blue, Green, Red, NIR |
| L8 SPARCS | Landsat 8 | 30 | 5 | 10 | Coastal, Green, Red, NIR, SWIR-1, SWIR-2, Pan, Cirrus, TIRS |
| RIT-18 | VNIR | 0.047 | 18 | 6 | Blue, Green, Red, NIR-1, NIR-2, NIR-3 |

| Output Size | ResNet50 | Swin-Tiny |
|---|---|---|
| Stem | , 64, stride 2 max pool, stride 2 | , 96, stride 4 |
| Resolution 1 | | |
| Resolution 2 | | |
| Resolution 3 | | |
| Resolution 4 | | |
| FLOPs | | |
| Parameters | | |

| Output Size | Stem | Stage 1 | Stage 2 | Stage 3 | Stage 4 |
|---|---|---|---|---|---|
| Resolution 1 | , 64, stride 2 , 64, stride 2 | | | | |
| Resolution 2 | | | | | |
| Resolution 3 | | | | | |
| Resolution 4 | | | | | |
| FLOPs | | | | | |
| Parameters | | | | | |

| Class | ResNet50 PCA | ResNet50 DF | ResNet50 LC-Net | HRNet-w18 PCA | HRNet-w18 DF | HRNet-w18 LC-Net | Swin-Tiny PCA | Swin-Tiny DF | Swin-Tiny LC-Net | CoinNet |
|---|---|---|---|---|---|---|---|---|---|---|
| Road Markings | 0.0 | 33.6 | 40.6 | 0.0 | 3.2 | 73.3 | 0.0 | 13.3 | 63.1 | 85.1 |
| Tree | 72.7 | 91.5 | 90.1 | 8.8 | 78.1 | 85.4 | 80.2 | 82.1 | 88.3 | 77.6 |
| Building | 13.3 | 54.4 | 69.2 | 0.0 | 58.0 | 62.2 | 0.0 | 61.3 | 65.0 | 52.3 |
| Vehicle | 0.0 | 50.5 | 53.2 | 0.0 | 54.6 | 55.7 | 0.0 | 38.5 | 49.5 | 59.8 |
| Person | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| Lifeguard Chair | 0.0 | 82.1 | 66.3 | 0.0 | 32.2 | 79.5 | 0.0 | 39.1 | 99.5 | 0.0 |
| Picnic Table | 0.0 | 4.0 | 9.9 | 0.0 | 22.6 | 22.7 | 0.0 | 32.4 | 12.6 | 0.0 |
| Orange Pad | 0.0 | 0.0 | 0.0 | 0.0 | 95.8 | 0.0 | 0.0 | 83.1 | 0.0 | 0.0 |
| Buoy | 0.0 | 0.0 | 0.0 | 0.0 | 0.2 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| Rocks | 1.2 | 84.3 | 93.3 | 5.3 | 73.1 | 91.5 | 4.0 | 88.0 | 90.3 | 84.8 |
| Low Vegetation | 0.0 | 1.7 | 4.9 | 0.6 | 11.4 | 5.6 | 0.0 | 2.9 | 19.0 | 4.1 |
| Grass/Lawn | 86.2 | 95.2 | 95.4 | 97.4 | 97.5 | 95.1 | 84.1 | 94.9 | 95.5 | 96.7 |
| Sand/Beach | 92.0 | 10.0 | 93.4 | 86.2 | 94.0 | 94.0 | 96.3 | 76.1 | 95.9 | 92.1 |
| Water/Lake | 58.2 | 96.9 | 97.6 | 89.3 | 95.9 | 98.1 | 93.4 | 98.8 | 94.3 | 98.4 |
| Water/Pond | 13.2 | 14.2 | 95.9 | 0.0 | 7.0 | 98.0 | 43.0 | 63.3 | 98.2 | 92.7 |
| Asphalt | 77.6 | 51.0 | 91.0 | 72.9 | 42.7 | 92.9 | 78.6 | 53.4 | 90.9 | 90.4 |
| Overall Accuracy | 73.8 | 68.7 | 90.7 | 69.5 | 82.4 | 90.4 | 81.2 | 84.6 | 91.0 | 88.8 |
| Mean Accuracy | 25.9 | 41.8 | 56.3 | 22.5 | 44.1 | 59.6 | 30.0 | 48.0 | 60.1 | 52.1 |
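The Mean Accuracy row can be checked against the per-class rows. The sketch below assumes that Mean Accuracy is the unweighted average of the 16 class accuracies listed in the table; the function name `mean_accuracy` is illustrative, and only the two ResNet50 columns for PCA and LC-Net are copied in.

```python
# Per-class accuracies copied from the ResNet50 columns of the table,
# in row order from Road Markings to Asphalt (16 classes).
RESNET50_PCA = [0.0, 72.7, 13.3, 0.0, 0.0, 0.0, 0.0, 0.0,
                0.0, 1.2, 0.0, 86.2, 92.0, 58.2, 13.2, 77.6]
RESNET50_LCNET = [40.6, 90.1, 69.2, 53.2, 0.0, 66.3, 9.9, 0.0,
                  0.0, 93.3, 4.9, 95.4, 93.4, 97.6, 95.9, 91.0]


def mean_accuracy(per_class):
    """Unweighted mean of per-class accuracies, rounded to one decimal.

    Assumption: every listed class counts equally, regardless of pixel count.
    """
    return round(sum(per_class) / len(per_class), 1)


print(mean_accuracy(RESNET50_PCA), mean_accuracy(RESNET50_LCNET))  # 25.9 56.3
```

Both values agree with the tabulated Mean Accuracy, which suggests the metric gives rare classes such as Person or Buoy the same weight as large classes such as Grass/Lawn.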

| Input Method | Bands | ResNet50 OA | ResNet50 MA | ResNet50 Training Hours | HRNet-w18 OA | HRNet-w18 MA | HRNet-w18 Training Hours | Swin-Tiny OA | Swin-Tiny MA | Swin-Tiny Training Hours |
|---|---|---|---|---|---|---|---|---|---|---|
| PCA | - | 73.8 | 25.9 | 3.6 | 69.5 | 22.5 | 3.9 | 81.2 | 30.0 | 4 |
| DF | - | 68.7 | 41.8 | 3.6 | 82.4 | 44.1 | 3.9 | 84.6 | 48.0 | 4 |
| LC-Net | - | 90.7 | 56.3 | 3.6 | 90.4 | 59.6 | 3.9 | 91.0 | 60.1 | 4 |
| SGS | 123 | 72.6 | 39.7 | 72 | 72.3 | 43.0 | 77 | 72.8 | 43.5 | 80 |
| SGS | 124 | 88.7 | 53.2 | | 88.4 | 56.5 | | 88.9 | 57.0 | |
| SGS | 125 | 86.7 | 51.4 | | 86.4 | 54.7 | | 87.0 | 55.2 | |
| SGS | 126 | 88.6 | 53.1 | | 88.3 | 56.4 | | 88.9 | 56.9 | |
| SGS | 134 | 85.4 | 53.2 | | 85.1 | 56.5 | | 85.7 | 57.0 | |
| SGS | 135 | 78.8 | 43.3 | | 78.4 | 46.6 | | 79.1 | 47.1 | |
| SGS | 136 | 74.5 | 43.8 | | 74.1 | 47.1 | | 74.7 | 47.6 | |
| SGS | 145 | 88.8 | 51.2 | | 88.5 | 54.5 | | 89.1 | 55.0 | |
| SGS | 146 | 86.4 | 52.8 | | 86.1 | 56.1 | | 86.6 | 56.6 | |
| SGS | 156 | 89.3 | 54.4 | | 89.8 | 57.7 | | 89.6 | 58.2 | |
| SGS | 234 | 86.3 | 51.9 | | 85.9 | 55.2 | | 86.5 | 55.7 | |
| SGS | 235 | 78.5 | 51.5 | | 78.2 | 54.8 | | 78.7 | 55.3 | |
| SGS | 236 | 81.0 | 44.1 | | 80.7 | 47.3 | | 81.3 | 47.8 | |
| SGS | 245 | 87.8 | 53.3 | | 87.4 | 56.6 | | 88.1 | 57.1 | |
| SGS | 246 | 85.4 | 49.6 | | 85.1 | 52.9 | | 85.7 | 53.4 | |
| SGS | 256 | 88.2 | 51.4 | | 87.9 | 54.7 | | 88.5 | 55.2 | |
| SGS | 345 | 89.3 | 50.7 | | 88.9 | 54.0 | | 89.5 | 54.5 | |
| SGS | 346 | 89.1 | 53.7 | | 88.8 | 57.0 | | 89.4 | 57.5 | |
| SGS | 356 | 89.4 | 55.7 | | 89.0 | 57.8 | | 89.8 | 59.3 | |
| SGS | 456 | 47.5 | 33.5 | | 47.2 | 36.8 | | 47.8 | 37.3 | |

For SGS, the training hours appear once, on the first row, as in the source table, where that cell spans all 20 band combinations.
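The SGS rows cover every three-band subset of the six RIT-18 bands. A minimal sketch of that enumeration follows. It assumes that each subset corresponds to one separate training run and that the SGS training hours are a total over all runs; both are readings of the table, not statements from the text. The names `BANDS`, `subsets`, and `HOURS_PER_RUN` are illustrative.

```python
from itertools import combinations

BANDS = range(1, 7)  # RIT-18 band indices 1..6

# Every unordered choice of 3 bands out of 6, in lexicographic order.
subsets = list(combinations(BANDS, 3))
labels = ["".join(str(b) for b in s) for s in subsets]

# Assumption: one ResNet50 run takes about 3.6 h, as listed for PCA/DF/LC-Net.
HOURS_PER_RUN = 3.6
total_hours = round(len(subsets) * HOURS_PER_RUN, 1)

print(len(subsets), labels[0], labels[-1], total_hours)  # 20 123 456 72.0
```

Under these assumptions the exhaustive search costs roughly 20 times one training run, which is consistent with the 72 h listed for ResNet50 against 3.6 h for a single LC-Net run.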

| Methods | The Formation of 3 Input Bands to the Networks from the Original 6 Bands | ResNet50 OA | ResNet50 MA | HRNet-w18 OA | HRNet-w18 MA | Swin-Tiny OA | Swin-Tiny MA |
|---|---|---|---|---|---|---|---|
| LC-Net | (12), (34), (56) | 90.7 | 56.3 | 90.4 | 59.6 | 91.0 | 60.1 |
| LC-Net (non-adjacent) | (13), (25), (46) | 90.8 | 56.2 | 90.2 | 59.4 | 91.2 | 59.0 |
| LCAB | (1–6), (1–6), (1–6) | 84.1 | 53.4 | 87.1 | 54.2 | 88.6 | 55.0 |

Final Weights in LC-Net

| Band | ResNet50 + LC-Net | HRNet-w18 + LC-Net | Swin-Tiny + LC-Net |
|---|---|---|---|
| Band 1 | 0.048 | −0.066 | 0.253 |
| Band 2 | −0.893 | 0.201 | −0.159 |
| Band 3 | 0.927 | −0.464 | 0.220 |
| Band 4 | 1.100 | −0.722 | 0.470 |
| Band 5 | −0.931 | −0.555 | 0.530 |
| Band 6 | 0.460 | −0.417 | 0.348 |
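To illustrate how one weight per band could turn six bands into three network inputs, the sketch below applies the ResNet50 + LC-Net weights to a single pixel. It assumes that each compressed channel is a bias-free weighted sum of the two bands in its group, with the adjacent grouping (12), (34), (56); this is an interpretation of the tables, not the authors' implementation, and `compress_pixel`, `WEIGHTS`, and `GROUPS` are hypothetical names.

```python
# Final LC-Net weights for ResNet50, copied from the table (bands 1..6).
WEIGHTS = [0.048, -0.893, 0.927, 1.100, -0.931, 0.460]

# Assumed grouping of adjacent bands (12), (34), (56), as 0-based indices.
GROUPS = [(0, 1), (2, 3), (4, 5)]


def compress_pixel(pixel, weights=WEIGHTS, groups=GROUPS):
    """Map a 6-band pixel to 3 channels via one weighted sum per band group."""
    if len(pixel) != len(weights):
        raise ValueError("pixel and weights must have the same number of bands")
    return [sum(weights[i] * pixel[i] for i in group) for group in groups]


# A pixel with unit reflectance in every band simply sums the weights per group.
channels = compress_pixel([1.0, 1.0, 1.0, 1.0, 1.0, 1.0])
print([round(c, 3) for c in channels])  # [-0.845, 2.027, -0.471]
```

The non-adjacent variant in the comparison table would correspond to passing `groups=[(0, 2), (1, 4), (3, 5)]`, though the weights learned for that configuration are not listed here.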

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Cai, Y.; Fan, L.; Zhang, C. Semantic Segmentation of Multispectral Images via Linear Compression of Bands: An Experiment Using RIT-18. Remote Sens. 2022, 14, 2673. https://doi.org/10.3390/rs14112673
