Oriented Object Detection in Remote Sensing Images with Anchor-Free Oriented Region Proposal Network

Abstract

1. Introduction

2. Materials and Methods

2.1. Related Work

2.1.1. Generic Object Detection

2.1.2. Oriented Object Detection

2.1.3. Contextual Information and Attention Mechanisms

2.1.4. OBB Representation Methods

2.2. Method

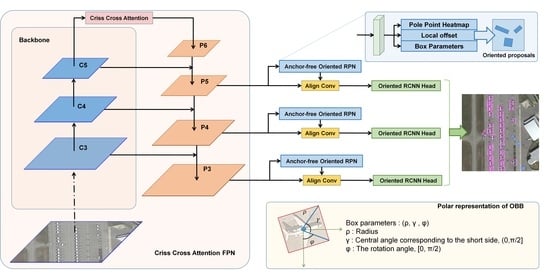

2.2.1. Overall Architecture

2.2.2. Criss-Cross Attention FPN

2.2.3. Anchor-Free Oriented Region Proposal Network

2.2.4. Polar Representation of OBB

2.2.5. Pole Point Regression

2.2.6. Box Parameters Regression

2.2.7. Oriented RCNN Heads

3. Results

3.1. Datasets

3.1.1. DOTA

3.1.2. DIOR-R

3.1.3. HRSC2016

3.2. Implementation Details

3.3. Comparisons with State-of-the-Art Methods

3.3.1. Results on DOTA

3.3.2. Results on DIOR-R

3.3.3. Results on HRSC2016

4. Discussion

4.1. Ablation Study

4.1.1. Effect of the Proposed AFO-RPN

4.1.2. Effect of the CCA-FPN

4.1.3. Effect of the Proposed Polar Representation of OBB

4.2. Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| RSI | Remote Sensing Image |
| DCNN | Deep Convolutional Neural Network |
| HBB | Horizontal Bounding Box |
| OBB | Oriented Bounding Box |
| RPN | Region Proposal Network |
| RoI | Region of Interest |
| FPN | Feature Pyramid Network |
| mAP | mean Average Precision |
| AFO-RPN | Anchor-Free Oriented Region Proposal Network |
| CCA-FPN | Criss-Cross Attention Feature Pyramid Network |

References

1. Li, K.; Wan, G.; Cheng, G.; Meng, L.; Han, J. Object detection in optical remote sensing images: A survey and a new benchmark. ISPRS J. Photogramm. Remote Sens. 2020, 159, 296–307.
2. Cheng, G.; Zhou, P.; Han, J. Learning Rotation-Invariant Convolutional Neural Networks for Object Detection in VHR Optical Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2016, 54, 7405–7415.
3. Han, J.; Zhang, D.; Cheng, G.; Guo, L.; Ren, J. Object Detection in Optical Remote Sensing Images Based on Weakly Supervised Learning and High-Level Feature Learning. IEEE Trans. Geosci. Remote Sens. 2015, 53, 3325–3337.
4. Audebert, N.; Le Saux, B.; Lefèvre, S. Segment-before-Detect: Vehicle Detection and Classification through Semantic Segmentation of Aerial Images. Remote Sens. 2017, 9, 368.
5. Li, J.; Zhang, Z.; Tian, Y.; Xu, Y.; Wen, Y.; Wang, S. Target-Guided Feature Super-Resolution for Vehicle Detection in Remote Sensing Images. IEEE Geosci. Remote Sens. Lett. 2021, 19, 1–5.
6. Zou, Z.; Shi, Z. Ship Detection in Spaceborne Optical Image With SVD Networks. IEEE Trans. Geosci. Remote Sens. 2016, 54, 5832–5845.
7. Liu, Z.; Yuan, L.; Weng, L.; Yang, Y. A high resolution optical satellite image dataset for ship recognition and some new baselines. In Proceedings of the 6th International Conference on Pattern Recognition Applications and Methods, Porto, Portugal, 24–26 February 2017; Volume 2, pp. 324–331.
8. Zhou, M.; Zou, Z.; Shi, Z.; Zeng, W.J.; Gui, J. Local Attention Networks for Occluded Airplane Detection in Remote Sensing Images. IEEE Geosci. Remote Sens. Lett. 2020, 17, 381–385.
9. Wei, H.; Zhang, Y.; Wang, B.; Yang, Y.; Li, H.; Wang, H. X-LineNet: Detecting Aircraft in Remote Sensing Images by a Pair of Intersecting Line Segments. IEEE Trans. Geosci. Remote Sens. 2021, 59, 1645–1659.
10. Cheng, G.; Han, J. A survey on object detection in optical remote sensing images. ISPRS J. Photogramm. Remote Sens. 2016, 117, 11–28.
11. Blaschke, T. Object based image analysis for remote sensing. ISPRS J. Photogramm. Remote Sens. 2010, 65, 2–16.
12. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.
13. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149.
14. He, K.; Gkioxari, G.; Dollar, P.; Girshick, R. Mask R-CNN. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2980–2988.
15. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 779–788.
16. Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the 2017 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6517–6525.
17. Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.
18. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016; pp. 21–37.
19. Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollar, P. Focal Loss for Dense Object Detection. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2999–3007.
20. Ding, J.; Xue, N.; Long, Y.; Xia, G.S.; Lu, Q. Learning RoI Transformer for Oriented Object Detection in Aerial Images. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 2844–2853.
21. Ming, Q.; Miao, L.; Zhou, Z.; Song, J.; Yang, X. Sparse Label Assignment for Oriented Object Detection in Aerial Images. Remote Sens. 2021, 13, 2664.
22. Xu, Y.; Fu, M.; Wang, Q.; Wang, Y.; Chen, K.; Xia, G.S.; Bai, X. Gliding Vertex on the Horizontal Bounding Box for Multi-Oriented Object Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 1452–1459.
23. Ma, J.; Shao, W.; Ye, H.; Wang, L.; Wang, H.; Zheng, Y.; Xue, X. Arbitrary-Oriented Scene Text Detection via Rotation Proposals. IEEE Trans. Multimedia 2018, 20, 3111–3122.
24. Law, H.; Deng, J. CornerNet: Detecting Objects as Paired Keypoints. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 734–750.
25. Zhou, X.; Zhuo, J.; Krahenbuhl, P. Bottom-Up Object Detection by Grouping Extreme and Center Points. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 850–859.
26. Zhou, X.; Wang, D.; Krähenbühl, P. Objects as Points. arXiv 2019, arXiv:1904.07850.
27. Pan, X.; Ren, Y.; Sheng, K.; Dong, W.; Yuan, H.; Guo, X.; Ma, C.; Xu, C. Dynamic Refinement Network for Oriented and Densely Packed Object Detection. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 11204–11213.
28. Huang, Z.; Wang, X.; Huang, L.; Huang, C.; Wei, Y.; Liu, W. CCNet: Criss-Cross Attention for Semantic Segmentation. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 20–26 October 2019; pp. 603–612.
29. Han, J.; Ding, J.; Li, J.; Xia, G.S. Align Deep Features for Oriented Object Detection. IEEE Trans. Geosci. Remote Sens. 2021, accepted.
30. Xia, G.S.; Bai, X.; Ding, J.; Zhu, Z.; Belongie, S.; Luo, J.; Datcu, M.; Pelillo, M.; Zhang, L. DOTA: A Large-Scale Dataset for Object Detection in Aerial Images. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 3974–3983.
31. Cheng, G.; Wang, J.; Li, K.; Xie, X.; Lang, C.; Yao, Y.; Han, J. Anchor-free Oriented Proposal Generator for Object Detection. arXiv 2021, arXiv:2110.01931.
32. Yang, Z.; Liu, S.; Hu, H.; Wang, L.; Lin, S. RepPoints: Point Set Representation for Object Detection. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 20–26 October 2019; pp. 9656–9665.
33. Tian, Z.; Shen, C.; Chen, H.; He, T. FCOS: Fully Convolutional One-Stage Object Detection. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 20–26 October 2019; pp. 9626–9635.
34. Kong, T.; Sun, F.; Liu, H.; Jiang, Y.; Li, L.; Shi, J. FoveaBox: Beyound Anchor-Based Object Detection. IEEE Trans. Image Process. 2020, 29, 7389–7398.
35. Ye, X.; Xiong, F.; Lu, J.; Zhou, J.; Qian, Y. F3-Net: Feature Fusion and Filtration Network for Object Detection in Optical Remote Sensing Images. Remote Sens. 2020, 12, 4027.
36. Zheng, Y.; Sun, P.; Zhou, Z.; Xu, W.; Ren, Q. ADT-Det: Adaptive Dynamic Refined Single-Stage Transformer Detector for Arbitrary-Oriented Object Detection in Satellite Optical Imagery. Remote Sens. 2021, 13, 2623.
37. Yang, X.; Yan, J.C.; Feng, Z.M.; He, T. R3Det: Refined Single-Stage Detector with Feature Refinement for Rotating Object. In Proceedings of the AAAI Conference on Artificial Intelligence, Palo Alto, CA, USA, 2–9 February 2021.
38. Wang, J.; Ding, J.; Guo, H.; Cheng, W.; Pan, T.; Yang, W. Mask OBB: A Semantic Attention-Based Mask Oriented Bounding Box Representation for Multi-Category Object Detection in Aerial Images. Remote Sens. 2019, 11, 2930.
39. Wang, J.; Yang, W.; Li, H.C.; Zhang, H.; Xia, G.S. Learning Center Probability Map for Detecting Objects in Aerial Images. IEEE Trans. Geosci. Remote Sens. 2021, 59, 4307–4323.
40. Shi, F.; Zhang, T.; Zhang, T. Orientation-Aware Vehicle Detection in Aerial Images via an Anchor-Free Object Detection Approach. IEEE Trans. Geosci. Remote Sens. 2021, 59, 5221–5233.
41. Xiao, Z.; Qian, L.; Shao, W.; Tan, X.; Wang, K. Axis Learning for Orientated Objects Detection in Aerial Images. Remote Sens. 2020, 12, 908.
42. Guo, Z.; Liu, C.; Zhang, X.; Jiao, J.; Ji, X.; Ye, Q. Beyond Bounding-Box: Convex-Hull Feature Adaptation for Oriented and Densely Packed Object Detection. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 8788–8797.
43. Wang, Q.; He, X.; Li, X. Locality and Structure Regularized Low Rank Representation for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2019, 57, 911–923.
44. Wang, Q.; Liu, S.; Chanussot, J.; Li, X. Scene Classification with Recurrent Attention of VHR Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2019, 57, 1155–1167.
45. Li, M.; Lei, L.; Tang, Y.; Sun, Y.; Kuang, G. An Attention-Guided Multilayer Feature Aggregation Network for Remote Sensing Image Scene Classification. Remote Sens. 2021, 13, 3113.
46. Chong, Y.; Chen, X.; Pan, S. Context Union Edge Network for Semantic Segmentation of Small-Scale Objects in Very High Resolution Remote Sensing Images. IEEE Geosci. Remote Sens. Lett. 2020, 19, 6000305.
47. Xu, Z.; Zhang, W.; Zhang, T.; Li, J. HRCNet: High-Resolution Context Extraction Network for Semantic Segmentation of Remote Sensing Images. Remote Sens. 2020, 13, 71.
48. Zhang, G.; Lu, S.; Zhang, W. CAD-Net: A Context-Aware Detection Network for Objects in Remote Sensing Imagery. IEEE Trans. Geosci. Remote Sens. 2019, 57, 10015–10024.
49. Wu, Y.; Zhang, K.; Wang, J.; Wang, Y.; Wang, Q.; Li, Q. CDD-Net: A Context-Driven Detection Network for Multiclass Object Detection. IEEE Geosci. Remote Sens. Lett. 2020, 19, 8004905.
50. Zhang, K.; Zeng, Q.; Yu, X. ROSD: Refined Oriented Staged Detector for Object Detection in Aerial Image. IEEE Access 2021, 9, 66560–66569.
51. Ming, Q.; Miao, L.; Zhou, Z.; Dong, Y. CFC-Net: A Critical Feature Capturing Network for Arbitrary-Oriented Object Detection in Remote-Sensing Images. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5605814.
52. Liu, S.; Zhang, L.; Lu, H.; He, Y. Center-Boundary Dual Attention for Oriented Object Detection in Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5603914.
53. Li, Y.; Huang, Q.; Pei, X.; Jiao, L.; Shang, R. RADet: Refine Feature Pyramid Network and Multi-Layer Attention Network for Arbitrary-Oriented Object Detection of Remote Sensing Images. Remote Sens. 2020, 12, 389.
54. Yang, X.; Yang, J.; Yan, J.; Zhang, Y.; Zhang, T.; Guo, Z.; Sun, X.; Fu, K. SCRDet: Towards More Robust Detection for Small, Cluttered and Rotated Objects. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 20–26 October 2019; pp. 8231–8240.
55. Yang, X.; Yan, J. Arbitrary-Oriented Object Detection with Circular Smooth Label. In Proceedings of the European Conference on Computer Vision (ECCV), Virtual, 23–28 August 2020; pp. 677–694.
56. Wei, H.; Zhang, Y.; Chang, Z.; Li, H.; Wang, H.; Sun, X. Oriented objects as pairs of middle lines. ISPRS J. Photogramm. Remote Sens. 2020, 169, 268–279.
57. Lu, J.; Li, T.; Ma, J.; Li, Z.; Jia, H. SAR: Single-Stage Anchor-Free Rotating Object Detection. IEEE Access 2020, 8, 205902–205912.
58. Wu, Q.; Xiang, W.; Tang, R.; Zhu, J. Bounding Box Projection for Regression Uncertainty in Oriented Object Detection. IEEE Access 2021, 9, 58768–58779.
59. Yi, J.; Wu, P.; Liu, B.; Huang, Q.; Qu, H.; Metaxas, D. Oriented Object Detection in Aerial Images with Box Boundary-Aware Vectors. In Proceedings of the 2021 IEEE Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 5–9 January 2021; pp. 2149–2158.
60. Xie, E.; Sun, P.; Song, X.; Wang, W.; Liu, X.; Liang, D.; Shen, C.; Luo, P. PolarMask: Single Shot Instance Segmentation with Polar Representation. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 12190–12199.
61. Zhao, P.; Qu, Z.; Bu, Y.; Tan, W.; Guan, Q. PolarDet: A fast, more precise detector for rotated target in aerial images. Int. J. Remote Sens. 2021, 42, 5831–5861.
62. Zhou, L.; Wei, H.; Li, H.; Zhao, W.; Zhang, Y.; Zhang, Y. Arbitrary-Oriented Object Detection in Remote Sensing Images Based on Polar Coordinates. IEEE Access 2020, 8, 223373–223384.
63. Ming, Q.; Zhou, Z.; Miao, L.; Zhang, H.; Li, L. Dynamic Anchor Learning for Arbitrary-Oriented Object Detection. In Proceedings of the AAAI Conference on Artificial Intelligence, Palo Alto, CA, USA, 2–9 February 2021.
64. Qian, W.; Yang, X.; Peng, S.; Yan, J.; Guo, Y. Learning modulated loss for rotated object detection. In Proceedings of the AAAI Conference on Artificial Intelligence, Palo Alto, CA, USA, 2–9 February 2021.
65. Zhong, B.; Ao, K. Single-Stage Rotation-Decoupled Detector for Oriented Object. Remote Sens. 2020, 12, 3262.
66. Fu, K.; Chang, Z.; Zhang, Y.; Xu, G.; Zhang, K.; Sun, X. Rotation-aware and multi-scale convolutional neural network for object detection in remote sensing images. ISPRS J. Photogramm. Remote Sens. 2020, 161, 294–308.
67. Zhu, Y.; Du, J.; Wu, X. Adaptive Period Embedding for Representing Oriented Objects in Aerial Images. IEEE Trans. Geosci. Remote Sens. 2020, 58, 7247–7257.
68. Han, J.; Ding, J.; Xue, N.; Xia, G.S. ReDet: A Rotation-equivariant Detector for Aerial Object Detection. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 2785–2794.
69. Song, Q.; Yang, F.; Yang, L.; Liu, C.; Hu, M.; Xia, L. Learning Point-Guided Localization for Detection in Remote Sensing Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 1084–1094.
70. Xu, C.; Li, C.; Cui, Z.; Zhang, T.; Yang, J. Hierarchical Semantic Propagation for Object Detection in Remote Sensing Imagery. IEEE Trans. Geosci. Remote Sens. 2020, 58, 4353–4364.

| Method | Backbone | PL | BD | BR | GTF | SV | LV | SH | TC | BC | ST | SBF | RA | HA | SP | HC | mAP(%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| One-stage | | | | | | | | | | | | | | | | | |
| DAL [63] | ResNet 101 | 88.61 | 79.69 | 46.27 | 70.37 | 65.89 | 76.10 | 78.53 | 90.84 | 79.98 | 78.41 | 58.71 | 62.02 | 69.23 | 71.32 | 60.65 | 71.78 |
| ProjBB-R [58] | ResNet 101 | 88.96 | 79.32 | 53.98 | 70.21 | 60.67 | 76.20 | 89.71 | 90.22 | 78.94 | 76.82 | 60.49 | 63.62 | 73.12 | 71.43 | 61.96 | 73.03 |
| RSDet [64] | ResNet 152 | 90.2 | 83.5 | 53.6 | 70.1 | 64.6 | 79.4 | 67.3 | 91.0 | 88.3 | 82.5 | 64.1 | 68.7 | 62.8 | 69.5 | 66.9 | 73.5 |
| CFC-Net [51] | ResNet 50 | 89.08 | 80.41 | 52.41 | 70.02 | 76.28 | 78.11 | 87.21 | 90.89 | 84.47 | 85.64 | 60.51 | 61.52 | 67.82 | 68.02 | 50.09 | 73.50 |
| R3Det [37] | ResNet 101 | 88.76 | 83.09 | 50.91 | 67.27 | 76.23 | 80.39 | 86.72 | 90.78 | 84.68 | 83.24 | 61.98 | 61.35 | 66.91 | 70.63 | 53.94 | 73.79 |
| SLA [21] | ResNet 50 | 85.23 | 83.78 | 48.89 | 71.65 | 76.43 | 76.80 | 86.83 | 90.62 | 88.17 | 86.88 | 49.67 | 66.13 | 75.34 | 72.11 | 64.88 | 74.89 |
| RDD [65] | ResNet 101 | 89.70 | 84.33 | 46.35 | 68.62 | 73.89 | 73.19 | 86.92 | 90.41 | 86.46 | 84.30 | 64.22 | 64.95 | 73.55 | 72.59 | 73.31 | 75.52 |
| Two-stage | | | | | | | | | | | | | | | | | |
| FR-O [30] | ResNet 101 | 79.42 | 77.13 | 17.7 | 64.05 | 35.3 | 38.02 | 37.16 | 89.41 | 69.64 | 59.28 | 50.3 | 52.91 | 47.89 | 47.4 | 46.3 | 54.13 |
| RRPN [23] | ResNet 101 | 88.52 | 71.20 | 31.66 | 59.30 | 51.85 | 56.19 | 57.25 | 90.81 | 72.84 | 67.38 | 56.69 | 52.84 | 53.08 | 51.94 | 53.58 | 61.01 |
| FFA [66] | ResNet 101 | 81.36 | 74.30 | 47.70 | 70.32 | 64.89 | 67.82 | 69.98 | 90.76 | 79.06 | 78.20 | 53.64 | 62.90 | 67.02 | 64.17 | 50.23 | 68.16 |
| RADet [53] | ResNeXt 101 | 79.45 | 76.99 | 48.05 | 65.83 | 65.46 | 74.40 | 68.86 | 89.70 | 78.14 | 74.97 | 49.92 | 64.63 | 66.14 | 71.58 | 62.16 | 69.09 |
| RoI Transformer [20] | ResNet 101 | 88.64 | 78.52 | 43.44 | 75.92 | 68.81 | 73.68 | 83.59 | 90.74 | 77.27 | 81.46 | 58.39 | 53.54 | 62.83 | 58.93 | 47.67 | 69.56 |
| CAD-Net [48] | ResNet 101 | 87.8 | 82.4 | 49.4 | 73.5 | 71.1 | 63.5 | 76.7 | 90.9 | 79.2 | 73.3 | 48.4 | 60.9 | 62.0 | 67.0 | 62.2 | 69.9 |
| SCR-Det [54] | ResNet 101 | 89.98 | 80.65 | 52.09 | 68.36 | 68.36 | 60.32 | 72.41 | 90.85 | 87.94 | 86.86 | 65.02 | 66.68 | 66.25 | 68.24 | 65.21 | 72.64 |
| ROSD [50] | ResNet 101 | 88.88 | 82.13 | 52.85 | 69.76 | 78.21 | 77.32 | 87.08 | 90.86 | 86.40 | 82.66 | 56.73 | 65.15 | 74.43 | 68.24 | 63.18 | 74.92 |
| Gliding Vertex [22] | ResNet 101 | 89.64 | 85.00 | 52.26 | 77.34 | 73.01 | 73.14 | 86.82 | 90.74 | 79.02 | 86.81 | 59.55 | 70.91 | 72.94 | 70.86 | 57.32 | 75.02 |
| SAR [57] | ResNet 101 | 89.67 | 79.78 | 54.17 | 68.29 | 71.70 | 77.90 | 84.63 | 90.91 | 88.22 | 87.07 | 60.49 | 66.95 | 75.13 | 70.01 | 64.29 | 75.28 |
| Mask-OBB [38] | ResNeXt 101 | 89.56 | 85.95 | 54.21 | 72.90 | 76.52 | 74.16 | 85.63 | 89.85 | 83.81 | 86.48 | 54.89 | 69.64 | 73.94 | 69.06 | 63.32 | 75.33 |
| APE [67] | ResNet 50 | 89.96 | 83.62 | 53.42 | 76.03 | 74.01 | 77.16 | 79.45 | 90.83 | 87.15 | 84.51 | 67.72 | 60.33 | 74.61 | 71.84 | 65.55 | 75.75 |
| CenterMap-Net [39] | ResNet 101 | 89.83 | 84.41 | 54.60 | 70.25 | 77.66 | 78.32 | 87.19 | 90.66 | 84.89 | 85.27 | 56.46 | 69.23 | 74.13 | 71.56 | 66.06 | 76.03 |
| CSL [55] | ResNet 152 | 90.25 | 85.53 | 54.64 | 75.31 | 70.44 | 73.51 | 77.62 | 90.84 | 86.15 | 86.69 | 69.60 | 68.04 | 73.83 | 71.10 | 68.93 | 76.17 |
| ReDet [68] | ResNet 50 | 88.79 | 82.64 | 53.97 | 74.00 | 78.13 | 84.06 | 88.04 | 90.89 | 87.78 | 85.75 | 61.76 | 60.39 | 75.96 | 68.07 | 63.59 | 76.25 |
| OPLD [69] | ResNet 101 | 89.37 | 85.82 | 54.10 | 79.58 | 75.00 | 75.13 | 86.92 | 90.88 | 86.42 | 86.62 | 62.46 | 68.41 | 73.98 | 68.11 | 63.69 | 76.43 |
| HSP [70] | ResNet 101 | 90.39 | 86.23 | 56.12 | 80.59 | 77.52 | 73.26 | 83.78 | 90.80 | 87.19 | 85.67 | 69.08 | 72.02 | 76.98 | 72.50 | 67.96 | 78.01 |
| Anchor-free | | | | | | | | | | | | | | | | | |
| CenterNet-O [26] | Hourglass 104 | 89.02 | 69.71 | 37.62 | 63.42 | 65.23 | 63.74 | 77.28 | 90.51 | 79.24 | 77.93 | 44.83 | 54.64 | 55.93 | 61.11 | 45.71 | 65.04 |
| Axis Learning [41] | ResNet 101 | 79.53 | 77.15 | 38.59 | 61.15 | 67.53 | 70.49 | 76.30 | 89.66 | 79.07 | 83.53 | 47.27 | 61.01 | 56.28 | 66.06 | 36.05 | 65.98 |
| P-RSDet [62] | ResNet 101 | 88.58 | 77.84 | 50.44 | 69.29 | 71.10 | 75.79 | 78.66 | 90.88 | 80.10 | 81.71 | 57.92 | 63.03 | 66.30 | 69.70 | 63.13 | 72.30 |
| BBAVectors [59] | ResNet 101 | 88.35 | 79.96 | 50.69 | 62.18 | 78.43 | 78.98 | 87.94 | 90.85 | 83.58 | 84.35 | 54.13 | 60.24 | 65.22 | 64.28 | 55.70 | 72.32 |
| O2-Det [56] | Hourglass 104 | 89.3 | 83.3 | 50.1 | 72.1 | 71.1 | 75.6 | 78.7 | 90.9 | 79.9 | 82.9 | 60.2 | 60.0 | 64.6 | 68.9 | 65.7 | 72.8 |
| PolarDet [61] | ResNet 50 | 89.73 | 87.05 | 45.30 | 63.32 | 78.44 | 76.65 | 87.13 | 90.79 | 80.58 | 85.89 | 60.97 | 67.94 | 68.20 | 74.63 | 68.67 | 75.02 |
| AOPG [31] | ResNet 101 | 89.14 | 82.74 | 51.87 | 69.28 | 77.65 | 82.42 | 88.08 | 90.89 | 86.26 | 85.13 | 60.60 | 66.30 | 74.05 | 67.76 | 58.77 | 75.39 |
| CBDANet [52] | DLA 34 | 89.17 | 85.92 | 50.28 | 65.02 | 77.72 | 82.32 | 87.89 | 90.48 | 86.47 | 85.90 | 66.85 | 66.48 | 67.41 | 71.33 | 62.89 | 75.74 |
| CFA [42] | ResNet 152 | 89.08 | 83.20 | 54.37 | 66.87 | 81.23 | 80.96 | 87.17 | 90.21 | 84.32 | 86.09 | 52.34 | 69.94 | 75.52 | 80.76 | 67.96 | 76.67 |
| Proposed Method | ResNet 101 | 89.23 | 84.50 | 52.90 | 76.93 | 78.51 | 76.93 | 87.40 | 90.89 | 87.42 | 84.66 | 64.40 | 63.97 | 75.01 | 73.39 | 62.37 | 76.57 |
| Proposed Method * | ResNet 101 | 90.20 | 84.94 | 61.04 | 79.66 | 79.73 | 84.37 | 88.78 | 90.88 | 86.16 | 87.66 | 71.85 | 70.40 | 81.37 | 79.71 | 73.51 | 80.68 |

| Method | Backbone | APL | APO | BF | BC | BR | CH | DAM | ETS | ESA | GF | GTF | HA | OP | SH | STA | STO | TC | TS | VE | WM | mAP |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| RetinaNet-O [19] | ResNet 101 | 64.20 | 21.97 | 73.99 | 86.76 | 17.57 | 72.62 | 72.36 | 47.22 | 22.08 | 77.90 | 76.60 | 36.61 | 30.94 | 74.97 | 63.35 | 49.21 | 83.44 | 44.93 | 37.53 | 64.18 | 55.92 |
| FR-O [30] | ResNet 101 | 61.33 | 14.73 | 71.47 | 86.46 | 19.86 | 72.24 | 59.78 | 55.98 | 19.72 | 77.08 | 81.47 | 39.21 | 33.30 | 78.78 | 70.05 | 61.85 | 81.31 | 53.44 | 39.90 | 64.81 | 57.14 |
| Gliding Vertex [22] | ResNet 101 | 61.58 | 36.02 | 71.61 | 86.87 | 33.48 | 72.37 | 72.85 | 64.62 | 25.78 | 76.03 | 81.81 | 42.41 | 47.25 | 80.57 | 69.63 | 61.98 | 86.74 | 58.20 | 41.87 | 64.48 | 61.81 |
| AOPG [31] | ResNet 50 | 62.39 | 37.79 | 71.62 | 87.63 | 40.90 | 72.47 | 31.08 | 65.42 | 77.99 | 73.20 | 81.94 | 42.32 | 54.45 | 81.17 | 72.69 | 71.31 | 81.49 | 60.04 | 52.38 | 69.99 | 64.41 |
| RoI Trans [20] | ResNet 101 | 61.54 | 45.46 | 71.90 | 87.48 | 41.43 | 72.67 | 78.67 | 67.17 | 38.26 | 81.83 | 83.40 | 48.94 | 55.61 | 81.18 | 75.06 | 62.63 | 88.36 | 63.09 | 47.80 | 66.10 | 65.93 |
| Proposed Method | ResNet 50 | 68.26 | 38.34 | 77.35 | 88.10 | 40.68 | 72.48 | 78.90 | 62.52 | 30.64 | 73.51 | 81.32 | 45.51 | 55.78 | 88.74 | 71.24 | 71.12 | 88.60 | 59.74 | 52.95 | 70.30 | 65.80 |
| Proposed Method | ResNet 101 | 61.65 | 47.58 | 77.59 | 88.39 | 40.98 | 72.55 | 81.90 | 63.76 | 38.17 | 79.49 | 81.82 | 45.39 | 54.94 | 88.67 | 73.48 | 75.75 | 87.69 | 61.69 | 52.43 | 69.00 | 67.15 |

| Method | Backbone | Image Size | mAP |
|---|---|---|---|
| Axis Learning [41] | ResNet 101 | 800 × 800 | 78.15 |
| SLA [21] | ResNet 50 | 768 × 768 | 87.14 |
| SAR [57] | ResNet 101 | 896 × 896 | 88.11 |
| Gliding Vertex [22] | ResNet 101 | - | 88.2 |
| OPLD [69] | ResNet 50 | 1024 × 1333 | 88.44 |
| BBAVectors [59] | ResNet 101 | 608 × 608 | 88.6 |
| DAL [63] | ResNet 101 | 800 × 800 | 88.6 |
| ProjBB-R [58] | ResNet 101 | 800 × 800 | 89.41 |
| CSL [55] | ResNet 152 | - | 89.62 |
| CFC-Net [51] | ResNet 101 | 800 × 800 | 89.7 |
| ROSD [50] | ResNet 101 | 1000 × 800 | 90.08 |
| PolarDet [61] | ResNet 50 | 800 × 800 | 90.13 |
| AOPG [31] | ResNet 101 | 800 × 1333 | 90.34 |
| ReDet [68] | ResNet 50 | 800 × 512 | 90.46 |
| CBDANet [52] | DLA 34 | 512 × 512 | 90.5 |
| Proposed Method | ResNet 50 | 800 × 1333 | 89.96 |
| Proposed Method | ResNet 101 | 800 × 1333 | 90.45 |

| Method | CCA-FPN | AFO-RPN | PL | BD | BR | GTF | SV | LV | SH | TC | BC | ST | SBF | RA | HA | SP | HC | mAP(%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Baseline [20] | - | - | 88.64 | 78.52 | 43.44 | 75.92 | 68.81 | 73.68 | 83.59 | 90.74 | 77.27 | 81.46 | 58.39 | 53.54 | 62.83 | 58.93 | 47.67 | 69.56 |
| Proposed Method | 🗸 | - | 88.59 | 81.60 | 52.27 | 68.19 | 78.02 | 73.69 | 86.64 | 90.74 | 82.97 | 85.12 | 56.31 | 65.38 | 69.66 | 68.50 | 56.75 | 73.63 (+4.07) |
| Proposed Method | - | 🗸 | 88.88 | 84.06 | 52.13 | 69.55 | 70.96 | 76.59 | 79.52 | 90.87 | 87.23 | 86.19 | 56.14 | 65.35 | 66.96 | 72.08 | 64.20 | 74.05 (+4.49) |
| Proposed Method | 🗸 | 🗸 | 89.23 | 84.50 | 52.90 | 76.93 | 78.51 | 76.93 | 87.40 | 90.89 | 87.42 | 84.66 | 64.40 | 63.97 | 75.01 | 73.39 | 62.37 | 76.57 (+7.01) |
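The mAP column and the bracketed gains in the ablation table can be checked directly from the per-class values. The following is a minimal sketch, assuming mAP is the unweighted mean of the 15 per-class AP values (the table is consistent with this, but the averaging rule is not stated here); the function name `mean_ap` and the variable names are illustrative only.

```python
# Per-class AP values (%) copied from the ablation table on DOTA
# (PL, BD, BR, GTF, SV, LV, SH, TC, BC, ST, SBF, RA, HA, SP, HC).
baseline = [88.64, 78.52, 43.44, 75.92, 68.81, 73.68, 83.59, 90.74,
            77.27, 81.46, 58.39, 53.54, 62.83, 58.93, 47.67]
full_model = [89.23, 84.50, 52.90, 76.93, 78.51, 76.93, 87.40, 90.89,
              87.42, 84.66, 64.40, 63.97, 75.01, 73.39, 62.37]


def mean_ap(per_class_ap):
    """Unweighted mean of per-class AP, rounded to two decimals (assumed rule)."""
    return round(sum(per_class_ap) / len(per_class_ap), 2)


baseline_map = mean_ap(baseline)              # Baseline [20] row
full_map = mean_ap(full_model)                # CCA-FPN + AFO-RPN row
gain = round(full_map - baseline_map, 2)      # bracketed gain over the baseline

print(baseline_map, full_map, gain)
```

Under that assumption the script reproduces the tabulated 69.56 and 76.57 and the reported gain of +7.01.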

| Method | CCA-FPN | AFO-RPN | Params(M) | FLOPs(G) |
|---|---|---|---|---|
| Baseline [20] | - | - | 55.13 | 148.38 |
| Proposed Method | - | 🗸 | 41.73 | 134.38 |
| Proposed Method | 🗸 | 🗸 | 65.66 | 376.99 |

| Cartesian System | Polar System | DOTA mAP(%) | DIOR-R mAP(%) | HRSC2016 mAP(%) |

|---|---|---|---|---|

| - | 73.84 | 64.81 | 88.12 | |

| - | 72.58 | 63.48 | 84.84 | |

| - | 76.57 | 67.15 | 90.45 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, J.; Tian, Y.; Xu, Y.; Zhang, Z. Oriented Object Detection in Remote Sensing Images with Anchor-Free Oriented Region Proposal Network. Remote Sens. 2022, 14, 1246. https://doi.org/10.3390/rs14051246
