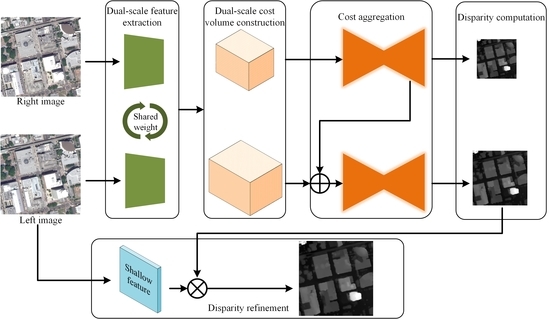

Figure 1.

Overview of DSM-Net, which consists of five components: feature extraction, cost volume creation, cost aggregation, disparity calculation, and disparity refinement.

Figure 2.

The operation for cost volume creation. The right feature map is shifted step by step across the disparity search range, so that the resulting cost volume covers a half-open range of disparities.
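The shifting operation in Figure 2 can be illustrated on a single scanline. The following is a minimal sketch, not the network's actual layer: the function name `build_cost_volume`, the bounds `d_min` and `d_max`, the feature-difference cost, the sign convention (a left pixel at column x is compared with the right pixel at column x - d), and the zero filling outside the image are all assumptions made for illustration.

```python
def build_cost_volume(left, right, d_min, d_max):
    """Build a scanline cost volume over the half-open range [d_min, d_max).

    left, right: lists of W feature vectors (each a list of C floats).
    Returns volume[k][x], the channel-wise difference between the left
    feature at column x and the right feature shifted by d = d_min + k.
    Positions whose shifted column falls outside the image are zero-filled
    (an assumption of this sketch).
    """
    width = len(left)
    channels = len(left[0])
    volume = []
    for d in range(d_min, d_max):  # half-open: d_max itself is excluded
        plane = []
        for x in range(width):
            xr = x - d  # assumed convention: disparity = x_left - x_right
            if 0 <= xr < width:
                plane.append([l - r for l, r in zip(left[x], right[xr])])
            else:
                plane.append([0.0] * channels)
        volume.append(plane)
    return volume


# Toy scanline whose true disparity is 1 wherever a match exists.
left_row = [[1.0], [2.0], [3.0], [4.0]]
right_row = [[2.0], [3.0], [4.0], [9.0]]
volume = build_cost_volume(left_row, right_row, d_min=-1, d_max=2)
```

With these toy inputs the volume has three disparity planes (for d = -1, 0, 1), and the plane for d = 1 is zero everywhere because the shifted right features match the left features exactly.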

Figure 3.

The architecture of the refinement module. Dilated convolutions [43] are used within the module; “dilation” denotes the dilation rate and “s” denotes the convolution stride. Each convolution layer is followed by a batch normalization layer and a leaky ReLU activation layer, except the 1 × 1 × 3 and 3 × 3 × 1 layers.
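To make the role of the dilation rate concrete, the sketch below applies a dilated convolution to a one-dimensional signal. It is an illustration under stated assumptions rather than the refinement module itself: the function name `dilated_conv1d`, the zero padding at the borders, and the one-dimensional setting are chosen here for brevity. A kernel with k taps and dilation rate r spans 1 + (k - 1) · r input samples, which is how the receptive field can grow without adding weights.

```python
def dilated_conv1d(signal, kernel, dilation=1):
    """Same-size 1D dilated convolution (cross-correlation form).

    Taps are spaced `dilation` samples apart around the centre tap.
    Samples that fall outside the signal are treated as zero
    (an assumption of this sketch).
    """
    n = len(signal)
    centre = len(kernel) // 2
    output = []
    for i in range(n):
        acc = 0.0
        for j, weight in enumerate(kernel):
            idx = i + (j - centre) * dilation
            if 0 <= idx < n:
                acc += weight * signal[idx]
        output.append(acc)
    return output


# A 3-tap kernel with dilation 2 reaches samples two positions away.
response = dilated_conv1d([1.0, 2.0, 3.0, 4.0, 5.0], [1.0, 0.0, 1.0], dilation=2)
```

In this toy call each output sample is the sum of the inputs two positions to its left and right, so the 3-tap kernel covers a window of five samples.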

Figure 4.

Disparity maps output by different networks. The image in the first column is from the OMA set, and the others are from the JAX testing set. Predictions of DenseMapNet, StereoNet, PSMNet, and DSM-Net are respectively labeled with yellow, red, green, and blue.

Figure 5.

Disparity estimation results of different models on texture-less regions. Predictions of StereoNet, PSMNet, and DSM-Net are respectively labeled with red, green, and blue (subsequent figures in this section are also labeled in this way). Tile numbers are JAX-122-019-005, JAX-079-006-007, and OMA-211-026-006, from left to right.

Figure 6.

Disparity estimation results of different models on repetitive patterns. Tile numbers are JAX-280-021-020, JAX-559-022-002, and OMA-132-027-023, from left to right.

Figure 7.

Disparity estimation results of different models on disparity discontinuities and occluded areas. Tile numbers are JAX-072-011-022, JAX-264-014-007, and OMA-212-007-005, from left to right.

Figure 8.

Disparity maps output by networks with single-scale and dual-scale learning schemes (tile number: JAX-072-001-006). The outputs of DSM-Net-v1, DSM-Net-v2, and DSM-Net are labeled with red, green, and blue, respectively.

Figure 9.

The plain module for cost aggregation in DSM-Net-v3. In this variant, the 4D volume output by the eighth convolution layer at the low scale is up-sampled and added to the initial cost volume at the high scale. Each convolution layer is followed by a batch normalization layer and a leaky ReLU activation layer, except for the last two layers.

Figure 10.

Disparity maps output by networks with different cost aggregation modules (tile numbers: JAX-122-022-002, JAX-156-009-003). The outputs of DSM-Net-v3 and DSM-Net are labeled with purple and blue, respectively.

Figure 11.

Disparity maps output by networks without and with refinement operations (tile numbers: JAX-068-006-012, JAX-113-004-011). The outputs of DSM-Net-v4 and DSM-Net are labeled with purple and blue, respectively.

Table 1.

The architecture of the shared 2D CNN. Construction of residual blocks is designated in brackets with the number of stacked blocks; “s” denotes the stride of convolution. Each convolution layer is followed by a batch normalization [41] layer and a ReLU activation layer, except conv1_3 and conv2_3.

| Name | Setting | Output |
|---|---|---|
| Input | | H × W × 3 |
| conv0_1 | 5 × 5 × 32, s = 2 | ×× 32 |
| conv0_2 | 5 × 5 × 32, s = 2 | ×× 32 |
| conv0_3 | | ×× 32 |

| Low scale | Setting | Output | High scale | Setting | Output |
|---|---|---|---|---|---|
| conv2_1 | 3 × 3 × 32, s = 2 | ×× 32 | conv1_1 | 3 × 3 × 32 | ×× 32 |
| conv2_2 | | ×× 32 | conv1_2 | | ×× 32 |
| conv2_3 | 3 × 3 × 16 | ×× 16 | conv1_3 | 3 × 3 × 16 | ×× 16 |

Table 2.

The architecture of the 3D encoder-decoder module for cost aggregation. Each convolution layer is followed by a batch normalization layer and a leaky ReLU activation layer, except conv14 and conv15. Factorized 3D convolutions are designated in brackets, “s” denotes the stride of convolution, and transpose convolutions [42] are indicated by their own marker. Note that two independent modules with the same structure aggregate the two cost volumes separately; the output of conv14 at the low scale is up-sampled and added to the initial high-scale cost volume before the high-scale aggregation. For an input image of size H × W and a range of D candidate disparities, the size of the cost volume is determined by the number k of subsampling operations.

| Name | Setting | Low Scale | High Scale |
|---|---|---|---|
| Cost volume | | | |
| conv1 | | | |
| conv2 | | | |
| conv3 | | | |
| conv4 | | | |
| conv5 | | | |
| conv6 | | | |
| conv7 | | | |
| conv8 | | | |
| conv9 | | | |
| conv10 | | | |
| conv11 | | | |
| conv12 | | | |
| conv13 | | | |
| conv14 | | | |
| conv15 | | | |
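The cross-scale step described in the Table 2 caption (up-sampling the low-scale output and adding it to the initial high-scale cost volume) can be sketched on a small two-dimensional slice. This is an illustrative sketch only: the function names `upsample_nearest_2x` and `fuse_scales`, the nearest-neighbour interpolation, the factor of 2 between scales, and the reduction to a disparity-by-width slice are assumptions, since the caption does not specify them.

```python
def upsample_nearest_2x(grid):
    """Double both dimensions of a 2D grid by repeating each value.

    Nearest-neighbour interpolation is an assumption of this sketch.
    """
    output = []
    for row in grid:
        wide = [value for value in row for _ in range(2)]
        output.append(wide)
        output.append(list(wide))  # second copy of the widened row
    return output


def fuse_scales(low_cost, high_cost):
    """Up-sample the low-scale slice and add it element-wise to the
    high-scale slice. Raises ValueError if the shapes do not line up."""
    up = upsample_nearest_2x(low_cost)
    if len(up) != len(high_cost) or len(up[0]) != len(high_cost[0]):
        raise ValueError("high-scale slice must be twice the low-scale size")
    return [[u + h for u, h in zip(up_row, high_row)]
            for up_row, high_row in zip(up, high_cost)]


low = [[1.0, 2.0]]                       # 1 disparity level, 2 columns
high = [[0.5, 0.5, 0.5, 0.5],
        [1.0, 1.0, 1.0, 1.0]]            # 2 disparity levels, 4 columns
fused = fuse_scales(low, high)
```

The fused slice keeps the high-scale resolution, so the high-scale aggregation module can start from a volume that already carries the coarse matching evidence.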

Table 3.

The usage of the dataset in our experiments. “JAX” represents Jacksonville and “OMA” represents Omaha (the same below).

| Stereo Pair | Mode | Training/Validation/Testing | Usage |
|---|---|---|---|
| JAX | RGB | 1500/139/500 | Training, validation, and testing |
| OMA | RGB | -/-/2153 | Testing |

Table 4.

Quantitative results of different methods on the JAX testing set and the whole OMA set. The best results are bold.

| Model | JAX EPE (Pixel) | JAX D1 (%) | OMA EPE (Pixel) | OMA D1 (%) | Time (ms) |
|---|---|---|---|---|---|
| DenseMapNet | 1.7405 | 14.19 | 1.8581 | 14.88 | 81 |
| StereoNet | 1.4356 | 10.00 | 1.5804 | 10.37 | 187 |
| PSMNet | 1.2968 | 8.06 | 1.4937 | 8.74 | 436 |
| Bidir-EPNet | **1.2764** | 8.03 | 1.4899 | 8.96 | - |
| DSM-Net | 1.2776 | **7.94** | **1.4757** | **8.73** | 168 |
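The two accuracy metrics reported in Table 4 can be computed as in the following minimal sketch. The definitions used here are assumptions, because this section does not state them: EPE is taken as the mean absolute disparity error over valid pixels, and D1 as the percentage of valid pixels whose error exceeds a threshold of 3 pixels. The function name `epe_and_d1` and the use of `None` to mark invalid ground truth are likewise hypothetical.

```python
def epe_and_d1(predicted, ground_truth, threshold=3.0):
    """Return (EPE in pixels, D1 in percent) over valid pixels.

    predicted, ground_truth: flat lists of disparities; a ground-truth
    value of None marks a pixel without a valid label and is skipped.
    The 3-pixel default threshold is an assumption of this sketch.
    """
    errors = [abs(p - t) for p, t in zip(predicted, ground_truth)
              if t is not None]
    if not errors:
        raise ValueError("no valid ground-truth pixels")
    epe = sum(errors) / len(errors)
    d1 = 100.0 * sum(1 for e in errors if e > threshold) / len(errors)
    return epe, d1


# Four pixels, one of them without ground truth.
epe, d1 = epe_and_d1([1.0, 2.0, 10.0, 4.0], [1.0, 3.0, 5.0, None])
```

In this toy case the three valid errors are 0, 1, and 5 pixels, so the EPE is 2 pixels and one of three pixels counts as erroneous.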

Table 5.

Quantitative results of different models on individual stereo pairs from the JAX testing set and the OMA set. The best results are bold.

| Tile | EPE (Pixel): DenseMapNet | EPE (Pixel): StereoNet | EPE (Pixel): PSMNet | EPE (Pixel): DSM-Net | D1 (%): DenseMapNet | D1 (%): StereoNet | D1 (%): PSMNet | D1 (%): DSM-Net |
|---|---|---|---|---|---|---|---|---|
| JAX-122-019-005 | 1.5085 | 1.4992 | 1.4815 | **1.2292** | 7.84 | 8.17 | 4.52 | **3.65** |
| JAX-079-006-007 | 1.6281 | 1.3743 | 1.2158 | **1.2082** | 12.42 | 9.71 | 8.46 | **8.16** |
| OMA-211-026-006 | 1.9534 | 1.5783 | 1.5739 | **1.4830** | 15.92 | 10.67 | 9.23 | **9.05** |
| JAX-280-021-020 | 1.3427 | 1.1412 | 1.0413 | **0.9772** | 10.42 | 6.75 | 6.26 | **5.43** |
| JAX-559-022-002 | 1.5756 | 1.5323 | 1.3536 | **1.2977** | 15.03 | 13.56 | 10.55 | **10.14** |
| OMA-132-027-023 | 1.5421 | 1.4018 | 1.3657 | **1.3054** | 11.84 | 9.61 | 9.21 | **8.45** |
| JAX-072-011-022 | 1.6813 | 1.3914 | 1.1675 | **1.0718** | 17.42 | 8.22 | 6.71 | **5.27** |
| JAX-264-014-007 | 1.6105 | 1.3083 | 1.0688 | **1.0528** | 15.54 | 6.67 | 4.65 | **3.81** |
| OMA-212-007-005 | 1.6740 | 1.3359 | 1.2587 | **1.1720** | 11.51 | 7.79 | 6.90 | **5.26** |

Table 6.

Configurations and comparisons of the variants. The best results are bold; a checkmark indicates that the network has this configuration.

| Model | Scale: Low | Scale: High | Cost Aggregation: Plain | Cost Aggregation: Encoder-Decoder | Refinement: Without | Refinement: With | JAX EPE | JAX D1 | OMA EPE | OMA D1 | Time (ms) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| DSM-Net-v1 | ✓ | | | ✓ | | ✓ | 1.3788 | 9.23 | 1.5327 | 9.77 | 78 |
| DSM-Net-v2 | | ✓ | | ✓ | | ✓ | 1.3195 | 8.34 | 1.4984 | 8.75 | 149 |
| DSM-Net-v3 | ✓ | ✓ | ✓ | | | ✓ | 1.3554 | 8.73 | 1.5078 | 8.91 | 469 |
| DSM-Net-v4 | ✓ | ✓ | | ✓ | ✓ | | 1.2817 | 8.03 | 1.4951 | 8.98 | 160 |
| DSM-Net | ✓ | ✓ | | ✓ | | ✓ | **1.2776** | **7.94** | **1.4757** | **8.73** | 168 |