Urban Riverway Extraction from High-Resolution SAR Image Based on Blocking Segmentation and Discontinuity Connection

Abstract

1. Introduction

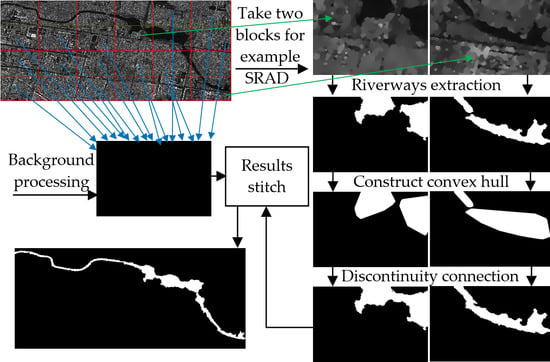

2. Algorithm Description

2.1. Overlapping Block Partition and Classification

2.2. SRAD Filtering Preprocessing

2.3. Extraction of Riverway Segments

2.4. Discontinuity Connection between Riverway Segments

2.4.1. Construction of the Convex Hull

2.4.2. Pyramid Representation of Convex Hull Image

2.4.3. Multi-Layer Region Growth

2.5. Riverway Extraction Result Output

3. Experiment and Analysis

3.1. Data

3.2. Experiment and Results

4. Discussion

4.1. Block Processing Strategy

4.2. Sub-Image Block Filtering

4.3. Discontinuity Connection

4.4. Feasibility and Robustness Analysis

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Usher, M. Conduct of conduits: Engineering, desire and government through the enclosure and exposure of urban water. Int. J. Urban Reg. Res. 2018, 42, 315–333.

- Boell, M.; Alves, H.R.; Volpato, M.; Ferreira, D.; Lacerda, W. Exploiting feature extraction techniques for remote sensing image classification. IEEE Lat. Am. Trans. 2019, 16, 2657–2664.

- Xie, J.; Sun, D.; Cai, J.; Cai, F. Waveband selection with equivalent prediction performance for FTIR/ATR spectroscopic analysis of COD in sugar refinery waste water. Comput. Mater. Contin. 2019, 58, 687–695.

- Katherine, I.; Alexander, B.; Georgia, F.; Achim, R.; Birgit, W. Assessing single-polarization and dual-polarization TerraSAR-X data for surface water monitoring. Remote Sens. 2018, 10, 949–965.

- Park, K.; Park, J.J.; Jang, J.C.; Lee, J.H.; Oh, S.; Lee, M. Multi-spectral ship detection using optical, hyperspectral, and microwave SAR remote sensing data in coastal regions. Sustainability 2018, 10, 4064.

- Goumehei, E.; Tolpekin, V.; Stein, A.; Yan, W. Surface water body detection in polarimetric SAR data using contextual complex Wishart classification. Water Resour. Res. 2019, 55, 7047–7059.

- Sghaier, M.O.; Foucher, S.; Lepage, R. River extraction from high-resolution SAR images combining a structural feature set and mathematical morphology. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 1025–1038.

- Dasgupta, A.; Grimaldi, S.; Ramsankaran, R.A.; Pauwels, V.R. Towards operational SAR-based flood mapping using neuro-fuzzy texture-based approaches. Remote Sens. Environ. 2018, 215, 313–329.

- Irwin, K.; Beaulne, D.; Braun, A.; Fotopoulos, G. Fusion of SAR, optical imagery and airborne LiDAR for surface water detection. Remote Sens. 2017, 9, 890.

- Kwang, C.; Jnr, E.M.; Amoah, A.S. Comparing of Landsat 8 and Sentinel 2A using water extraction indexes over Volta River. J. Geogr. Geol. 2018, 10, 1–7.

- Hong, S.; Jang, H.; Kim, N.; Sohn, H.G. Water area extraction using RADARSAT SAR imagery combined with Landsat imagery and terrain information. Sensors 2015, 15, 6652–6667.

- Zhou, S.; Kan, P.; Silbernagel, J.; Jiefeng, J. Application of image segmentation in surface water extraction of freshwater lakes using radar data. Int. J. Geo-Inf. 2020, 9, 424.

- Guo, Y.; Zhang, J. A new 2D Otsu for water extraction from SAR image. ISPAr 2017, 42, 733–736.

- Modava, M.; Akbarizadeh, G.; Soroosh, M. Integration of spectral histogram and level set for coastline detection in SAR images. IEEE Trans. Aerosp. Electron. Syst. 2018, 55, 810–819.

- Han, B.; Wu, Y. SAR river image segmentation by active contour model inspired by exponential cross entropy. J. Indian Soc. Remote Sens. 2019, 47, 201–212.

- Xiaoyan, L.; Long, L.; Yun, S.; Quanhua, Z.; Qingjun, Z.; Linjiang, L. Water detection in urban areas from GF-3. Sensors 2018, 18, 1299–1310.

- Chao, W.; Fengchen, H.; Xiaobin, T.; Min, T.; Lizhong, X. A river extraction algorithm for high-resolution SAR images with complex backgrounds. Remote Sens. Technol. Appl. 2012, 27, 516–522.

- Senthilnath, J.; Shenoy, H.V.; Rajendra, R.; Omkar, S.N.; Mani, V.; Diwakar, P.G. Integration of speckle de-noising and image segmentation using synthetic aperture radar image for flood extent extraction. J. Earth Syst. Sci. 2013, 122, 559–572.

- Liu, Y.; Peng, L.; Huang, S.; Wang, X.; Wang, Y.; Peng, Z. River detection in high-resolution SAR data using the Frangi filter and shearlet features. Remote Sens. Lett. 2019, 10, 949–958.

- Morandeira, N.S.; Grimson, R.; Kandus, P. Assessment of SAR speckle filters in the context of object-based image analysis. Remote Sens. Lett. 2016, 7, 150–159.

- Dou, M.; Yu, L.; Jin, M.; Zhang, Y. Correlation analysis and threshold value research on the form and function indexes of an urban interconnected river system network. Water Sci. Technol. Water Supply 2016, 16, 1776–1786.

- Mustafa, W.A.; Yazid, H.; Jaafar, M. An improved Sauvola approach on document images binarization. J. Telecommun. Electron. Comput. Eng. 2018, 10, 43–50.

- Yu, H.; Wang, J.; Bai, Y.; Yang, W.; Xia, G.S. Analysis of large-scale UAV images using a multi-scale hierarchical representation. Geo-Spat. Inf. Sci. 2018, 21, 33–44.

- Chen, L.; Hu, W.F.; Long, C.; Wang, D. Exogenous plant growth regulator alleviate the adverse effects of U and Cd stress in sunflower (Helianthus annuus L.) and improve the efficacy of U and Cd remediation. Chemosphere 2020, 262, 127809.

- Thanh, D.N.H.; Prasath, V.B.S.; Hieu, L.M. Melanoma skin cancer detection method based on adaptive principal curvature, colour normalisation and feature extraction with the ABCD rule. J. Digit. Imaging 2020, 33, 574–585.

- Modava, M.; Akbarizadeh, G. Coastline extraction from SAR images using spatial fuzzy clustering and the active contour method. Int. J. Remote Sens. 2017, 38, 355–370.

| Sub-Image Block | Dice | Jaccard | Evaluation radius: Overlap | Evaluation radius: 1 Pixel | Evaluation radius: 2 Pixels | Evaluation radius: 3 Pixels | Evaluation radius: 4 Pixels |
|---|---|---|---|---|---|---|---|
| B’1,3 | 96.93 | 94.04 | 45.28 | 74.26 | 94.63 | 98.37 | 99.34 |
| B’1,4 | 95.46 | 91.31 | 43.96 | 73.99 | 92.11 | 94.00 | 96.55 |
| B’3,4 | 94.86 | 90.22 | 40.51 | 71.57 | 91.06 | 93.81 | 94.72 |
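The Dice and Jaccard columns in the table above are standard overlap measures between an extracted binary mask and a reference mask. The following is a minimal sketch of how such scores can be computed, not the authors' evaluation code: the function names `dice_jaccard` and `jaccard_from_dice`, the nested-list mask format, and the tiny example masks are all assumptions made for illustration. It also shows the identity J = D / (2 − D), which the reported pairs appear to satisfy when read as percentages (for example, a Dice of 96.93 corresponds to a Jaccard of about 94.04).

```python
def dice_jaccard(pred, truth):
    """Return (dice, jaccard) for two binary masks given as nested lists of 0/1.

    Hypothetical helper for illustration; both masks must cover the same pixels.
    """
    flat_pred = [bool(v) for row in pred for v in row]
    flat_truth = [bool(v) for row in truth for v in row]
    if len(flat_pred) != len(flat_truth):
        raise ValueError("masks must have the same number of pixels")

    intersection = sum(p and t for p, t in zip(flat_pred, flat_truth))
    size_pred = sum(flat_pred)
    size_truth = sum(flat_truth)
    union = size_pred + size_truth - intersection

    if union == 0:
        # Both masks are empty: treat as perfect agreement (a convention, assumed here).
        return 1.0, 1.0

    dice = 2.0 * intersection / (size_pred + size_truth)
    jaccard = intersection / union
    return dice, jaccard


def jaccard_from_dice(dice):
    """Convert a Dice score in [0, 1] to the equivalent Jaccard score."""
    return dice / (2.0 - dice)


# Toy masks (made up for this example): 1 marks a riverway pixel.
pred = [[1, 1, 0],
        [0, 1, 0]]
truth = [[1, 0, 0],
         [0, 1, 1]]

dice, jaccard = dice_jaccard(pred, truth)
print(f"dice={dice:.4f} jaccard={jaccard:.4f}")

# Consistency check against one reported pair, read as percentages.
print(round(100 * jaccard_from_dice(0.9693), 2))
```

Because the two scores are monotonically related, they rank methods identically; reporting both mainly eases comparison with other papers that quote only one of them.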

| Method | Dice | Jaccard | Evaluation radius: Overlap | Evaluation radius: 1 Pixel | Evaluation radius: 2 Pixels | Evaluation radius: 3 Pixels | Evaluation radius: 4 Pixels |
|---|---|---|---|---|---|---|---|
| Proposed method | 93.97 | 88.63 | 44.65 | 72.07 | 94.23 | 97.82 | 98.69 |
| 1-D Otsu in [12] | 72.52 | 56.89 | 32.47 | 58.27 | 72.60 | 75.26 | 76.65 |
| 2-D Otsu in [13] | 84.47 | 73.12 | 37.51 | 63.47 | 77.78 | 81.01 | 81.89 |
| FCM in [16] | 87.08 | 77.12 | 39.85 | 65.41 | 80.13 | 83.28 | 83.96 |

| Image | Index | Lee | Kuan | Frost | SRAD |
|---|---|---|---|---|---|
| B’1,3 | ENL | 9.76 | 10.45 | 11.20 | 12.51 |
| B’1,3 | CNR | 16.94 | 17.82 | 17.35 | 18.55 |
| B’1,4 | ENL | 5.33 | 7.07 | 8.76 | 8.89 |
| B’1,4 | CNR | 8.91 | 11.02 | 11.27 | 11.74 |
| B’3,4 | ENL | 7.38 | 7.95 | 7.81 | 8.41 |
| B’3,4 | CNR | 12.79 | 13.56 | 13.87 | 15.19 |
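The table above compares speckle filters by ENL (equivalent number of looks) and CNR (contrast-to-noise ratio). As a rough sketch of what such indices measure, the code below uses the common definition ENL = μ² / σ² over a homogeneous region and one common form of CNR, |μ₁ − μ₂| / √(σ₁² + σ₂²), between two regions. These definitions are assumptions: the exact formulas, region choices, and scaling behind the table's values are not stated here, and other CNR variants exist. The function names `enl` and `cnr` and the sample pixel values are hypothetical.

```python
from statistics import fmean, pvariance


def enl(region):
    """Equivalent number of looks of a homogeneous region: mean^2 / variance.

    `region` is a flat sequence of pixel intensities. A higher ENL indicates
    stronger speckle suppression in that region.
    """
    mu = fmean(region)
    var = pvariance(region, mu)
    if var == 0:
        raise ValueError("region has zero variance; ENL is undefined")
    return mu * mu / var


def cnr(target, background):
    """Contrast-to-noise ratio between two regions (one common definition, assumed).

    Computes |mean_t - mean_b| / sqrt(var_t + var_b).
    """
    mu_t, mu_b = fmean(target), fmean(background)
    var_t = pvariance(target, mu_t)
    var_b = pvariance(background, mu_b)
    if var_t + var_b == 0:
        raise ValueError("both regions have zero variance; CNR is undefined")
    return abs(mu_t - mu_b) / (var_t + var_b) ** 0.5


# Made-up intensities: a dark water patch and a brighter land patch.
water = [9.0, 10.0, 11.0]
land = [48.0, 50.0, 52.0]

enl_water = enl(water)
cnr_water_land = cnr(water, land)
print(f"ENL(water)={enl_water:.2f} CNR(water, land)={cnr_water_land:.2f}")
```

Under these definitions, a filter that smooths a homogeneous water patch more strongly raises ENL, while one that also keeps the water and land means well separated raises CNR.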

| Image | Dice | Jaccard | Evaluation radius: Overlap | Evaluation radius: 1 Pixel | Evaluation radius: 2 Pixels | Evaluation radius: 3 Pixels | Evaluation radius: 4 Pixels |
|---|---|---|---|---|---|---|---|
| Figure 15a | 92.45 | 85.96 | 45.51 | 79.43 | 84.23 | 91.89 | 92.59 |
| Figure 15b | 93.84 | 88.39 | 45.23 | 80.74 | 86.37 | 92.74 | 93.25 |
| Figure 15c | 90.89 | 83.30 | 43.78 | 78.26 | 83.64 | 91.27 | 92.10 |
| Figure 15d | 95.04 | 90.55 | 46.03 | 82.72 | 87.46 | 94.28 | 94.89 |
| Average | 93.06 | 87.05 | 45.14 | 80.29 | 85.42 | 92.55 | 93.21 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, Y.; Yang, Y.; Zhao, Q. Urban Riverway Extraction from High-Resolution SAR Image Based on Blocking Segmentation and Discontinuity Connection. Remote Sens. 2020, 12, 4014. https://doi.org/10.3390/rs12244014
