Hyperspectral Imagery Classification Based on Multiscale Superpixel-Level Constraint Representation

Abstract

1. Introduction

2. Related Methods

2.1. Representation-Based Classification Methods

2.1.1. Sparse Representation-Based Model

2.1.2. Joint Representation-Based Framework

2.2. Simple Linear Iterative Clustering

3. Proposed Approach

3.1. Constraint Representation (CR) and Adjacent CR (ACR)

3.1.1. CR Model

3.1.2. ACR Model

3.2. Superpixel-Level CR (SPCR) and Multiscale SPCR (MSPCR)

3.2.1. SPCR Model

3.2.2. MSPCR Model

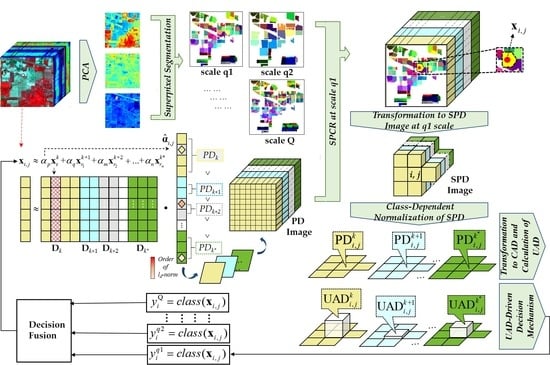

| Algorithm 1. The proposed MSPCR method |
|---|
| Input: A hyperspectral image (HSI), a dictionary, the testing pixel, a regularization parameter, and a scale compensation parameter. |
| Step 1: Reshape the HSI into a color image by compositing its first three principal component analysis (PCA) bands. |
| Step 2: Obtain multiscale superpixel segmentation images of the color image using SLIC, according to Equations (3) to (5). |
| Step 3: Obtain the participation degree (PD) image according to Equation (7). |
| Step 4: At each scale, extract the superpixel containing the testing pixel from the PD image to obtain the multiscale SPD image. |
| Step 5: Perform class-dependent normalization at each scale according to Equation (9). |
| Step 6: Calculate the united activity degree (UAD) according to Equation (11). |
| Step 7: Assign the class of the testing pixel at each scale according to Equation (12). |
| Step 8: Determine the final class label by decision fusion according to Equation (13). |
| Output: The class labels. |
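
Algorithm 1 can be sketched in Python with NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation. Equations (7), (9), (11), and (13) are not reproduced in this section, so the sketch takes the PD image as given, assumes the class-dependent normalization divides each class's PD by that class's number of dictionary atoms, assumes the UAD is the mean normalized PD over the superpixel, and assumes the decision fusion is a majority vote across scales. The scale compensation parameter is not modeled, and `pca_composite` and `mspcr_decide` are hypothetical names. The superpixel label maps of Step 2 could come from any SLIC implementation, for example `skimage.segmentation.slic` run with several `n_segments` values.

```python
import numpy as np


def pca_composite(hsi):
    """Step 1: (H, W, B) cube -> (H, W, 3) color image of the first three PCs."""
    h, w, b = hsi.shape
    x = hsi.reshape(-1, b).astype(float)      # one row per pixel (a copy)
    x -= x.mean(axis=0)                       # center every band
    _, _, vt = np.linalg.svd(x, full_matrices=False)
    pcs = x @ vt[:3].T                        # project onto the top three principal axes
    pcs -= pcs.min(axis=0)                    # rescale each component to [0, 1]
    pcs /= np.maximum(pcs.max(axis=0), 1e-12)
    return pcs.reshape(h, w, 3)


def mspcr_decide(pd_image, label_maps, pixel, atoms_per_class):
    """Steps 4 to 8 for one testing pixel (hypothetical helper).

    pd_image:        (H, W, C) array holding the PD of every class at every pixel.
    label_maps:      list of (H, W) integer superpixel maps, one per scale.
    pixel:           (row, col) of the testing pixel.
    atoms_per_class: number of dictionary atoms per class, used by the ASSUMED
                     class-dependent normalization (not necessarily Eq. (9)).
    """
    r, c = pixel
    atoms = np.asarray(atoms_per_class, dtype=float)
    per_scale = []
    for labels in label_maps:
        # Step 4: PD vectors of all pixels in the superpixel containing the test pixel.
        spd = pd_image[labels == labels[r, c]]    # shape (n_pixels, C)
        # Step 5 (assumption): divide each class's PD by its atom count.
        spd = spd / atoms
        # Step 6 (assumption): UAD = mean normalized PD over the superpixel.
        uad = spd.mean(axis=0)
        # Step 7: the class with the largest UAD labels the pixel at this scale.
        per_scale.append(int(np.argmax(uad)))
    # Step 8 (assumption): majority vote across scales; ties go to the lowest class index.
    votes = np.bincount(per_scale, minlength=pd_image.shape[2])
    return int(np.argmax(votes)), per_scale


# Toy example: a 2 x 3 image with two classes and three segmentation scales.
pd_image = np.array([[[0.9, 0.1], [0.8, 0.2], [0.3, 0.7]],
                     [[0.7, 0.3], [0.2, 0.8], [0.1, 0.9]]])
label_maps = [np.array([[0, 0, 1], [0, 1, 1]]),
              np.array([[0, 0, 0], [1, 1, 1]]),
              np.array([[0, 1, 1], [0, 1, 1]])]
print(mspcr_decide(pd_image, label_maps, (0, 1), [1, 1]))   # (0, [0, 0, 1])
print(pca_composite(np.random.default_rng(0).random((4, 5, 10))).shape)  # (4, 5, 3)
```

With equal atom counts, two of the three scales vote for class 0, so the fused label is 0. Changing `atoms_per_class` to `[5, 1]` flips every scale, and therefore the fused label, to class 1, which shows how strongly the choice of normalization can steer the final decision.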

4. Experimental Results and Analysis

4.1. Experimental Data Description

4.1.1. Airborne Visible/Infrared Imaging Spectrometer (AVIRIS) Indian Pines Scene

4.1.2. Reflective Optics Spectrographic Imaging System (ROSIS) University of Pavia Scene

4.1.3. Hyperspectral Digital Image Collection Experiment (HYDICE) Washington, DC, National Mall Scene

4.2. Parameter Tuning

4.3. Experiments with the AVIRIS Indian Pines Scene

1. As a widely applied supervised classification framework, the SVM classifier performs reasonably well in HSI classification. However, as shown in Figure 7, isolated misclassified pixels appear in its result because of noise and spectral variability. The classic SRC method achieves a better result than SVM (an OA of 83.27% versus 68.44% in Table 1), which indicates that SR-based classifiers are well suited to hyperspectral image classification. The CR model obtains nearly the same accuracy as SRC (83.19% versus 83.27%) at a lower computational cost. This result shows that CR simplifies SRC by removing the residual-error calculation from the decision procedure, and it also verifies the effectiveness of the PD-driven decision mechanism for HSIC.

2. In the spectral–spatial domain, as shown in Figure 7, the SVM-MRF model outperforms the SVM classifier, which demonstrates that exploiting spatial information further improves spectral classifiers. Similarly, because JSRC classifies a pixel by enforcing a common sparsity support across all pixels in its neighborhood, its overall accuracy also improves on that of SRC. The ACR classifier obtains a significant improvement over the CR model: by imposing a spatial constraint, it alleviates the spectral variability problem of CR, which shows that changing the decision mechanism from PD-driven to RAD-driven is effective for HSIC. In summary, the improvements of SVM-MRF, JSRC, and ACR over their respective counterparts SVM, SRC, and CR confirm the effectiveness of introducing spatial information into spectral-domain classifiers.

3. As Figure 7 shows, JSRC performs better than SVM-MRF on the AVIRIS Indian Pines scene. As listed in Table 1, ACR outperforms both, with an overall accuracy 2.38 percentage points higher than that of JSRC and 6.11 percentage points higher than that of SVM-MRF. On the one hand, the RAD-driven mechanism in ACR is more effective than the hybrid-norm constraint in JSRC. On the other hand, SVM-MRF uses spatial information mainly as post-processing that adjusts the initial classification produced from spectral features, so it lacks an effective strategy for integrating spatial and spectral information.

4. The proposed SPCR achieves a slightly higher OA than ACR. Table 1 thus demonstrates the effectiveness of introducing superpixel segmentation, which preserves edge information and fully accounts for the spatial distribution of ground objects. The results also confirm the practicability and reliability of the sparse coefficients, which play an important role in both the PD-driven and the UAD-driven decision mechanisms. Combining superpixel segmentation with sparse coefficients is therefore effective: the overall accuracy of SPCR reaches 92.90%, which is 1.66, 4.04, and 7.77 percentage points higher than that of ACR, JSRC, and SVM-MRF, respectively.

5. The proposed MSPCR model further improves on SPCR, raising the OA from 92.90% to 95.30% in Table 1. First, this verifies that MSPCR outperforms SPCR by alleviating the influence of the superpixel segmentation scale on the classification results. Second, it indicates that decision fusion comprehensively accounts for the different spatial features and distributions of the various object categories, which raises the final classification accuracy.
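
Item 1 contrasts the residual-driven decision of SRC with the PD-driven decision of CR. The sketch below, in Python with NumPy, applies both rules to one coefficient vector. It is an illustration under assumptions, not the paper's CR model: the CR objective is not given in this section, so the coefficients come from a ridge-regularized least-squares fit with a sum-to-one constraint (a stand-in; SRC proper uses a sparse solver such as OMP), the PD of a class is assumed to be the sum of that class's coefficients, and all function names are hypothetical.

```python
import numpy as np


def constrained_coefficients(D, x, lam):
    """Stand-in coder (assumption): min ||x - D a||^2 + lam*||a||^2 s.t. sum(a) = 1."""
    n_atoms = D.shape[1]
    G = D.T @ D + lam * np.eye(n_atoms)
    a0 = np.linalg.solve(G, D.T @ x)           # unconstrained ridge solution
    g = np.linalg.solve(G, np.ones(n_atoms))   # correction direction from the constraint
    mu = (1.0 - a0.sum()) / g.sum()            # Lagrange multiplier enforcing sum(a) = 1
    return a0 + mu * g


def residual_decision(D, x, a, atom_labels, n_classes):
    """SRC-style rule: class whose atoms reconstruct x with the smallest residual."""
    residuals = [np.linalg.norm(x - D[:, atom_labels == k] @ a[atom_labels == k])
                 for k in range(n_classes)]
    return int(np.argmin(residuals))


def pd_decision(a, atom_labels, n_classes):
    """PD-driven rule (assumed form): class whose coefficients have the largest sum."""
    pd = np.array([a[atom_labels == k].sum() for k in range(n_classes)])
    return int(np.argmax(pd)), pd


# Toy dictionary: 4 bands (rows), 2 atoms per class (columns).
D = np.array([[1.0, 0.8, 0.0, 0.0],
              [0.0, 0.0, 1.0, 0.9],
              [0.2, 0.0, 0.0, 0.3],
              [0.0, 0.3, 0.2, 0.0]])
atom_labels = np.array([0, 0, 1, 1])
x = 0.5 * D[:, 0] + 0.5 * D[:, 1]              # a pixel built from class-0 atoms

a = constrained_coefficients(D, x, lam=1e-3)
print(residual_decision(D, x, a, atom_labels, 2), pd_decision(a, atom_labels, 2)[0])  # 0 0
```

Both rules agree here, but the residual rule needs one reconstruction and one norm per class, whereas the PD rule only sums coefficients; this is the kind of saving item 1 attributes to CR.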

- The classification results demonstrate that the overall accuracy increases with the number of labeled samples. However, this relationship holds only up to a point: once the number of labeled samples reaches a certain level, the accuracy stops growing.

- Integrating spatial and spectral information yields more precise classification than pixel-based methods, as verified by the improvements of SVM-MRF, JSRC, ACR, SPCR, and MSPCR over their pixel-based counterparts, i.e., SVM, SRC, and CR.

- Compared with traditional classifiers, the PD-driven classifiers provide better classification performance, as confirmed by the higher overall accuracies of ACR and SPCR relative to JSRC and SVM-MRF, and of CR relative to SVM. Moreover, the proposed MSPCR achieves the best performance among all of these classifiers.
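
The percentage-point gaps behind these conclusions can be recomputed from the overall accuracies reported in Table 1 for the AVIRIS Indian Pines scene. The short Python script below does only that arithmetic: the dictionary restates the reported OA values, and `gap` is a hypothetical helper name.

```python
# Overall accuracies (%) on the AVIRIS Indian Pines scene, as reported in Table 1.
OA = {"SVM": 68.44, "SRC": 83.27, "CR": 83.19, "SVM-MRF": 85.13,
      "JSRC": 88.86, "ACR": 91.24, "SPCR": 92.90, "MSPCR": 95.30}


def gap(better, worse):
    """Difference in overall accuracy, in percentage points, rounded to 0.01."""
    return round(OA[better] - OA[worse], 2)


# Spectral-spatial models versus their spectral-only counterparts.
for spatial, spectral in [("SVM-MRF", "SVM"), ("JSRC", "SRC"), ("ACR", "CR")]:
    print(f"{spatial} vs {spectral}: +{gap(spatial, spectral):.2f} points")

# Gaps quoted in items 3 and 4 of the discussion.
print(gap("ACR", "JSRC"), gap("ACR", "SVM-MRF"))                        # 2.38 6.11
print(gap("SPCR", "ACR"), gap("SPCR", "JSRC"), gap("SPCR", "SVM-MRF"))  # 1.66 4.04 7.77
```

All three spectral-spatial gains are positive (16.69, 5.59, and 8.05 points), which is consistent with the conclusion that integrating spatial and spectral information improves on the pixel-based classifiers.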

4.4. Experiments with the ROSIS University of Pavia Scene

4.5. Experiments with the HYDICE Washington, DC, National Mall Scene

5. Practical Application and Analysis

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Class | Samples | SVM | SRC | CR | SVM-MRF | JSRC | ACR | SPCR | MSPCR |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 1460 | 55.60% | 77.29% | 77.00% | 73.51% | 82.29% | 83.97% | 87.53% | 89.74% |
| 2 | 834 | 57.82% | 83.62% | 84.36% | 82.77% | 91.92% | 93.53% | 94.24% | 97.84% |
| 3 | 497 | 88.99% | 97.53% | 97.38% | 95.52% | 98.79% | 98.98% | 96.38% | 97.44% |
| 4 | 489 | 98.90% | 99.84% | 99.88% | 99.34% | 100.00% | 100.00% | 99.39% | 99.82% |
| 5 | 968 | 71.45% | 81.94% | 81.60% | 89.00% | 92.13% | 94.41% | 87.93% | 94.12% |
| 6 | 2468 | 56.22% | 70.47% | 70.19% | 77.95% | 78.76% | 81.23% | 91.75% | 93.41% |
| 7 | 614 | 68.72% | 91.35% | 91.68% | 95.73% | 96.06% | 96.81% | 93.55% | 99.49% |
| 8 | 1294 | 94.41% | 99.61% | 99.62% | 98.36% | 99.66% | 99.85% | 99.91% | 99.92% |
| OA | | 68.44% | 83.27% | 83.19% | 85.13% | 88.86% | 91.24% | 92.90% | 95.30% |

| Class | Samples | SVM | SRC | CR | SVM-MRF | JSRC | ACR | SPCR | MSPCR |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 6631 | 75.04% | 76.12% | 75.76% | 91.06% | 65.12% | 93.94% | 90.62% | 94.79% |
| 2 | 18649 | 80.69% | 78.43% | 78.83% | 88.76% | 92.35% | 87.98% | 93.37% | 96.83% |
| 3 | 2099 | 80.50% | 78.04% | 78.91% | 89.26% | 95.90% | 92.09% | 89.48% | 93.67% |
| 4 | 3064 | 94.31% | 94.96% | 95.47% | 97.06% | 92.20% | 97.03% | 89.15% | 98.18% |
| 5 | 1345 | 99.21% | 99.80% | 99.82% | 99.55% | 100.00% | 100.00% | 97.62% | 99.93% |
| 6 | 5029 | 87.32% | 80.06% | 79.58% | 96.08% | 84.91% | 98.19% | 98.71% | 98.86% |
| 7 | 1330 | 92.82% | 89.28% | 89.83% | 96.37% | 99.85% | 97.59% | 96.42% | 99.83% |
| 8 | 3682 | 83.07% | 70.65% | 68.66% | 94.18% | 93.29% | 91.47% | 95.43% | 96.96% |
| 9 | 947 | 99.86% | 98.27% | 98.34% | 99.90% | 96.62% | 99.68% | 83.44% | 96.96% |
| OA | | 83.10% | 80.21% | 80.20% | 92.05% | 88.07% | 92.19% | 93.26% | 96.90% |

| Class | Samples | SVM | SRC | CR | SVM-MRF | JSRC | ACR | SPCR | MSPCR |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 2916 | 85.56% | 94.47% | 93.54% | 93.08% | 85.08% | 92.77% | 95.79% | 98.36% |
| 2 | 1819 | 88.47% | 90.22% | 91.32% | 94.33% | 94.48% | 95.61% | 94.22% | 97.81% |
| 3 | 1264 | 96.12% | 98.72% | 98.54% | 97.00% | 95.81% | 97.24% | 98.57% | 97.50% |
| 4 | 1790 | 96.96% | 98.73% | 98.89% | 98.32% | 96.91% | 99.22% | 98.77% | 98.32% |
| 5 | 1120 | 98.38% | 99.51% | 99.49% | 98.87% | 94.30% | 92.35% | 99.42% | 98.13% |
| 6 | 1281 | 96.22% | 96.81% | 96.74% | 97.25% | 96.24% | 97.58% | 98.43% | 99.89% |
| OA | | 92.10% | 95.83% | 95.76% | 95.85% | 92.58% | 97.18% | 97.11% | 98.32% |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Yu, H.; Zhang, X.; Song, M.; Hu, J.; Guo, Q.; Gao, L. Hyperspectral Imagery Classification Based on Multiscale Superpixel-Level Constraint Representation. Remote Sens. 2020, 12, 3342. https://doi.org/10.3390/rs12203342


Chicago/Turabian Style: Yu, Haoyang, Xiao Zhang, Meiping Song, Jiaochan Hu, Qiandong Guo, and Lianru Gao. 2020. "Hyperspectral Imagery Classification Based on Multiscale Superpixel-Level Constraint Representation" Remote Sensing 12, no. 20: 3342. https://doi.org/10.3390/rs12203342