Classification of Point Clouds for Indoor Components Using Few Labeled Samples

Abstract

1. Introduction

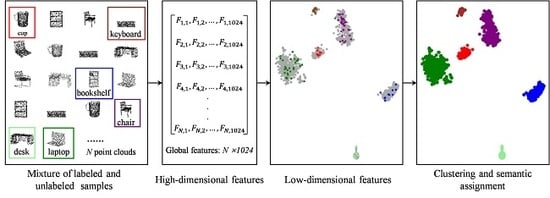

- A novel framework is proposed to classify the point clouds of indoor components using only a few labeled samples. In this framework, a neural network is used for feature extraction and unsupervised methods are used for feature learning.

- Feature learning combines dimensionality reduction based on manifold learning with clustering. Manifold learning embeds the high-dimensional deep features into a low-dimensional space, which makes the similarities and differences between point cloud features easier to quantify. An improved clustering algorithm then learns from these low-dimensional features and assigns labels to the unlabeled point clouds, which completes the classification.

- The proposed method is compared extensively with three state-of-the-art deep learning methods on open-source datasets. Case studies in different indoor scenarios demonstrate that the proposed method reduces the cost of labeling samples and achieves higher classification accuracy.

2. Methods

2.1. Mixed Feature Extraction for the Point Clouds of Indoor Components

2.2. Dimensionality Reduction for Mixed Features

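| Algorithm 1: Dimensionality reduction algorithm. |
|---|
| **Inputs:** a set of global features of point clouds $X = \{x_1, \dots, x_n\}$; perplexity $perp$; target dimension $d$; number of iterations $T$; learning rate $\eta$; momentum $\alpha(t)$. |
| Compute pairwise affinities $p_{j \mid i}$ with the fixed perplexity $perp$ using (2). |
| Set $p_{ij} = \frac{p_{j \mid i} + p_{i \mid j}}{2n}$. |
| Initialize the low-dimensional data $Y^{(0)} = \{y_1, \dots, y_n\}$ by sampling from $\mathcal{N}(0, 10^{-4} I)$. |
| **For** $t = 1$ **to** $T$ **do** |
| &emsp;Compute low-dimensional affinities $q_{ij}$ using (5). |
| &emsp;Compute the gradient $\partial C / \partial Y$ using (7). |
| &emsp;Update the low-dimensional data: $Y^{(t)} = Y^{(t-1)} - \eta \, \frac{\partial C}{\partial Y} + \alpha(t) \left( Y^{(t-1)} - Y^{(t-2)} \right)$. |
| **End for** |
| **Outputs:** a set of low-dimensional features $Y^{(T)}$ of dimension $d$. |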
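The steps of Algorithm 1 (perplexity-calibrated pairwise affinities, symmetrization, random Gaussian initialization, momentum gradient descent) match the structure of t-SNE. The sketch below is a minimal exact NumPy version written under that assumption; it is not the authors' code. It assumes that (5) is the Student-t kernel and (7) the Kullback-Leibler gradient of standard t-SNE, omits early exaggeration, and uses the momentum schedule of the original t-SNE paper (0.5 before iteration 250, 0.8 afterwards). The function names, the default `T`, and the small default learning rate `eta` (chosen because the toy data set is tiny) are illustrative assumptions that would need tuning on real features.

```python
import numpy as np


def conditional_affinities(X, perp=30.0, tol=1e-5, max_iter=50):
    """Return p_{j|i}: per-point Gaussian affinities whose entropy matches log(perp).

    Each row i uses its own precision beta_i = 1 / (2 sigma_i^2), found by bisection.
    """
    n = X.shape[0]
    sq = np.sum(X ** 2, axis=1)
    D = np.maximum(sq[:, None] + sq[None, :] - 2.0 * X @ X.T, 0.0)  # squared distances
    P = np.zeros((n, n))
    target = np.log(perp)  # entropy (in nats) corresponding to the perplexity
    for i in range(n):
        d = np.delete(D[i], i)
        beta, lo, hi = 1.0, 0.0, np.inf
        for _ in range(max_iter):
            w = np.exp(-(d - d.min()) * beta)  # shifting by d.min() leaves p unchanged
            p = w / w.sum()
            H = -np.sum(p * np.log(p + 1e-12))
            if abs(H - target) < tol:
                break
            if H > target:  # distribution too flat: narrow the Gaussian
                lo = beta
                beta = beta * 2.0 if np.isinf(hi) else (beta + hi) / 2.0
            else:  # distribution too peaked: widen the Gaussian
                hi = beta
                beta = (beta + lo) / 2.0
        P[i, np.arange(n) != i] = p
    return P


def tsne(X, d=2, perp=30.0, T=500, eta=10.0, seed=0):
    """Exact t-SNE with momentum, following the steps listed in Algorithm 1."""
    n = X.shape[0]
    P = conditional_affinities(X, perp)
    P = np.maximum((P + P.T) / (2.0 * n), 1e-12)  # p_ij = (p_{j|i} + p_{i|j}) / 2n
    rng = np.random.default_rng(seed)
    Y = rng.normal(0.0, 1e-2, size=(n, d))  # Y(0) ~ N(0, 1e-4 I)
    Y_prev = Y.copy()
    for t in range(1, T + 1):
        sq = np.sum(Y ** 2, axis=1)
        num = 1.0 / (1.0 + np.maximum(sq[:, None] + sq[None, :] - 2.0 * Y @ Y.T, 0.0))
        np.fill_diagonal(num, 0.0)
        Q = np.maximum(num / num.sum(), 1e-12)  # Student-t affinities q_ij
        # dC/dy_i = 4 * sum_j (p_ij - q_ij) (y_i - y_j) / (1 + ||y_i - y_j||^2)
        W = (P - Q) * num
        grad = 4.0 * (np.diag(W.sum(axis=1)) - W) @ Y
        alpha = 0.5 if t < 250 else 0.8  # momentum schedule from the t-SNE paper
        # gradient descent step plus momentum; RHS uses the old Y and Y_prev
        Y, Y_prev = Y - eta * grad + alpha * (Y - Y_prev), Y
    return Y
```

For example, `tsne(features, d=2, perp=5.0)` maps an `n x D` array of global features to an `n x 2` embedding. The exact version costs O(n²) memory and time per iteration, which is acceptable for a few hundred samples but not for large data sets.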

2.3. Semantic Assignment with Few Labeled Samples

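| Algorithm 2: Semantic assignment algorithm. |
|---|
| **Inputs:** a set of samples $D$; neighborhood parameters $\varepsilon$ and $MinPts$. |
| Initialize all samples in $D$ as unvisited; set $k = 0$. |
| **For** each unvisited sample $p$ in $D$ **do** |
| &emsp;Mark $p$ as visited and determine its $\varepsilon$-neighborhood $N$. |
| &emsp;**If** $\lvert N \rvert < MinPts$ **then** |
| &emsp;&emsp;Mark $p$ as noise. |
| &emsp;**Else** |
| &emsp;&emsp;Create a new cluster $C_k$ (incrementing $k$) and add $p$ to $C_k$. |
| &emsp;&emsp;**For** each sample $p'$ in $N$ **do** |
| &emsp;&emsp;&emsp;**If** $p'$ is not visited **then** |
| &emsp;&emsp;&emsp;&emsp;Mark $p'$ as visited. |
| &emsp;&emsp;&emsp;&emsp;**If** $\lvert N' \rvert \ge MinPts$, where $N'$ is the $\varepsilon$-neighborhood of $p'$, **then** |
| &emsp;&emsp;&emsp;&emsp;&emsp;$N = N \cup N'$ |
| &emsp;&emsp;&emsp;&emsp;**End if** |
| &emsp;&emsp;&emsp;**End if** |
| &emsp;&emsp;&emsp;**If** $p'$ is not yet a member of any cluster **then** |
| &emsp;&emsp;&emsp;&emsp;Add $p'$ to $C_k$. |
| &emsp;&emsp;&emsp;**End if** |
| &emsp;&emsp;**End for** |
| &emsp;**End if** |
| **End for** |
| **For** each cluster $C_i$ **do** |
| &emsp;Determine the set of labeled samples $L_i$ and the set of unlabeled samples $U_i$ in $C_i$. |
| &emsp;**For** each sample $u$ in $U_i$ **do** |
| &emsp;&emsp;Find the five labeled samples in $L_i$ nearest to $u$ and take their labels as $S_u$. |
| &emsp;&emsp;Determine the label that appears most often in $S_u$ and assign it to $u$. |
| &emsp;**End for** |
| **End for** |
| **Outputs:** semantic labels. |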
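The first part of Algorithm 2 follows the DBSCAN procedure, and the second part labels each unlabeled sample by a majority vote among the five nearest labeled samples of its own cluster. The NumPy sketch below implements those two steps on the low-dimensional features produced by Algorithm 1. It is an illustration, not the authors' implementation, and several details are assumptions: labels are encoded as non-negative integers with `-1` meaning unlabeled; noise samples and clusters that contain no labeled sample are left unlabeled because the algorithm does not say how to treat them; ties in the vote go to the label that appears first among the distance-sorted neighbors. The names `dbscan`, `assign_labels`, `k_vote`, `NOISE`, and `UNSEEN` are hypothetical.

```python
import numpy as np
from collections import Counter

NOISE, UNSEEN = -1, -2  # hypothetical sentinel cluster ids


def dbscan(Y, eps, min_pts):
    """DBSCAN on low-dimensional features Y (n x d); returns a cluster id per sample."""
    n = len(Y)
    dist = np.linalg.norm(Y[:, None, :] - Y[None, :, :], axis=-1)
    # eps-neighborhood of every sample (includes the sample itself)
    neighbors = [np.flatnonzero(dist[i] <= eps) for i in range(n)]
    cluster = np.full(n, UNSEEN)
    visited = np.zeros(n, dtype=bool)
    k = -1
    for p in range(n):
        if visited[p]:
            continue
        visited[p] = True
        if len(neighbors[p]) < min_pts:
            cluster[p] = NOISE  # may still become a border point of a later cluster
            continue
        k += 1  # create a new cluster C_k containing p
        cluster[p] = k
        seeds = list(neighbors[p])
        i = 0
        while i < len(seeds):  # N grows while it is scanned (N = N union N')
            q = seeds[i]
            i += 1
            if not visited[q]:
                visited[q] = True
                if len(neighbors[q]) >= min_pts:  # q is a core point: expand N
                    seeds.extend(neighbors[q])
            if cluster[q] in (UNSEEN, NOISE):  # not yet a member of any cluster
                cluster[q] = k
    return cluster


def assign_labels(Y, labels, eps, min_pts, k_vote=5):
    """Give every unlabeled sample (label -1) the majority label of its k_vote
    nearest labeled samples inside the same DBSCAN cluster."""
    cluster = dbscan(Y, eps, min_pts)
    out = labels.copy()
    for c in np.unique(cluster[cluster >= 0]):
        members = np.flatnonzero(cluster == c)
        lab = members[labels[members] >= 0]  # L_i: labeled samples in C_i
        unl = members[labels[members] < 0]  # U_i: unlabeled samples in C_i
        if len(lab) == 0:
            continue  # assumption: clusters without labeled samples stay unlabeled
        for u in unl:
            d = np.linalg.norm(Y[lab] - Y[u], axis=1)
            nearest = lab[np.argsort(d)[:k_vote]]
            # most_common keeps first-encountered order on ties
            out[u] = Counter(labels[nearest].tolist()).most_common(1)[0][0]
    return out
```

If a cluster holds fewer than `k_vote` labeled samples, the vote simply uses all of them. The pairwise-distance matrix makes this sketch O(n²) in memory; a spatial index would be needed for large point sets.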

3. Experiments

3.1. Experimental Data

3.2. Design of Experiments

4. Experimental Results and Analyses

4.1. Experiment 1: Office Scenario

4.2. Experiment 2: Living Room Scenario

4.3. Experiment 3: Bedroom Scenario

4.4. Experiment 4: Comprehensive Classification

5. Discussion

5.1. Influence of the Dimensionality Reduction and Clustering on the Accuracy

5.2. Influence of the Geometric Contrast on the Classification Accuracy

5.3. Influence of the Semantic Assignment Parameters on Classification Accuracy

5.4. Robustness to Point Density

5.5. Number of Categories of Labeled Samples

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Category | Labeled | Unlabeled/test | Train | Category | Labeled | Unlabeled/test | Train |
|---|---|---|---|---|---|---|---|
| bookshelf | 30 | 400 | 757 | monitor | 20 | 310 | 1273 |
| chair | 30 | 300 | 1478 | pillow | 10 | 40 | 176 |
| cup | 10 | 100 | 200 | sofa | 20 | 190 | 1317 |
| desk | 10 | 86 | 200 | table | 10 | 90 | 392 |
| keyboard | 10 | 50 | 180 | trashcan | 10 | 90 | 392 |
| laptop | 10 | 200 | 429 | bed | 5 | 56 | 515 |
| flowerpot | 10 | 168 | 571 | nightstand | 6 | 86 | 200 |

| Experiment | Scenario | Categories |
|---|---|---|
| Experiment 1 | Office | 6 of the 14 categories |
| Experiment 2 | Living room | 6 of the 14 categories |
| Experiment 3 | Bedroom | 6 of the 14 categories |
| Experiment 4 | Comprehensive classification | All 14 categories: bookshelf, chair, cup, desk, keyboard, laptop, flowerpot, monitor, pillow, sofa, table, trashcan, bed, nightstand |

| Nearest-neighbor parameter | 1 | 3 | 5 | 7 | 9 |
|---|---|---|---|---|---|
| Accuracy | 87.48% | 87.11% | 87.62% | 86.51% | 86.51% |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Wu, H.; Yang, H.; Huang, S.; Zeng, D.; Liu, C.; Zhang, H.; Guo, C.; Chen, L. Classification of Point Clouds for Indoor Components Using Few Labeled Samples. Remote Sens. 2020, 12, 2181. https://doi.org/10.3390/rs12142181
