1. Introduction

Remote sensing images, such as multispectral images (MSIs) and hyperspectral images (HSIs), provide abundant spatial and spectral information about real scenes and play a central role in many real-world applications, such as urban planning, surveillance, and environmental monitoring. Unfortunately, during the acquisition process, remote sensing images are often corrupted by various kinds of noise, such as Gaussian noise, speckle noise, and stripe noise. Image denoising aims to recover an underlying clean image from its noisy observation, which is a fundamental problem in remote sensing image processing. To achieve effective signal-noise separation, denoising methods usually rely on some prior assumptions imposed on the image and noise components.

One of the key issues in denoising methods is the rational design of an image prior, which encourages some expected properties of the denoised image. As a significant property of remote sensing images, low-rankness means that high-dimensional image data lie in a low-dimensional subspace, which can also be viewed as sparsity over a learned basis. Low-rank methods along this line can be categorized into two classes: matrix-based and tensor-based ones. Matrix-based methods perform low-rank matrix approximation on the unfolding (tensor matricization) of the noisy image along the spectral mode. To obtain an efficient low-rank solution, low-rank matrix factorization methods factorize the objective matrix into a product of two smaller factor matrices [1,2,3,4,5,6,7,8,9]; rank minimization methods penalize surrogates of the rank function, such as its convex envelope, the nuclear norm [10,11,12], or tighter non-convex surrogates, e.g., the log-determinant penalty [13], the Schatten p-norm [14,15], the -norm (Laplace function) [16], and the truncated/weighted nuclear norm [17,18]. These matrix-based methods, however, capture only the spectral correlation and ignore the global multi-factor correlation in remote sensing images, which usually leads to suboptimal results under severe noise corruption. On the other hand, tensor-based methods explicitly model the underlying image as a low-rank tensor, by solving a tensor decomposition model or minimizing the corresponding induced tensor rank [19]. Representative works include CANDECOMP/PARAFAC (CP) decomposition with CP rank [20,21,22], Tucker decomposition with Tucker rank [23,24,25,26,27], tensor singular value decomposition (t-SVD) with tubal rank [28,29], and tensor train (TT) decomposition with TT rank [30,31,32]. Considering that each tensor decomposition represents a specific type of high-dimensional data structure, recent works attempt to combine the merits of different low-rank tensor models, such as the hybrid CP-Tucker model [33] and the Kronecker-basis-representation (KBR)-based tensor sparsity measure [34,35]. By characterizing the correlations across both spatial and spectral modes, the above tensor-based methods have the advantage of preserving the intrinsic multilinear structure of remote sensing images, achieving state-of-the-art denoising performance.

Another critical issue in current denoising methods is the choice of a noise prior, which characterizes the statistical properties of the data noise. This is generally realized by imposing certain assumptions on the noise distribution, leading to specific loss functions between the noisy image and the denoising result. Two traditional noise priors are the Gaussian prior [1,2,21,24] (ℓ2-norm loss) and the Laplacian prior [5,36,37] (ℓ1-norm loss), which are widely used for suppressing dense noise and sparse noise (outliers), respectively. A combination of Gaussian and Laplacian priors [12,27,29,38] (ℓ1 + ℓ2 loss) is commonly considered in mixed noise removal. However, these priors are generally not flexible enough to fit the noise in real applications, whose distributions are much more complicated than a Gaussian, a Laplacian, or a simple mixture of the two. To handle such complex noise, several works model the noise with a mixture of Gaussians (MoG) distribution [3,4,8] (weighted-ℓ2 loss), owing to its ability to approximate any continuous probability density function [39]. Later, MoG was generalized to a mixture of exponential power (MoEP) distribution [6] (weighted-ℓp loss) for further flexibility and adaptivity. Despite the sophistication of the above priors, they all assume that the noise is independent and identically distributed (i.i.d.), which still limits their ability to handle realistic noise with non-i.i.d. statistical structures. In remote sensing images, the noise in different bands usually differs evidently in configuration and magnitude. To encode such noise characteristics, recent works impose non-i.i.d. assumptions on the noise distribution, such as the non-i.i.d. MoG [7] and the Dirichlet process Gaussian mixture [9,40], resulting in better noise fitting capability and higher denoising accuracy.
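To make the distinction between i.i.d. and non-i.i.d. MoG noise concrete, the following Python sketch draws band-wise MoG noise: under the i.i.d. assumption every band shares one set of mixing weights and standard deviations, whereas under the non-i.i.d. assumption each band has its own. The function names and parameter values are hypothetical illustrations, not the exact prior used in [7] or in the proposed model.

```python
import random
from statistics import pvariance


def sample_mog(n, weights, sigmas, rng):
    """Draw n zero-mean samples from a mixture of Gaussians.

    Each sample first picks component k with probability weights[k],
    then draws from N(0, sigmas[k]**2).
    """
    comps = rng.choices(range(len(weights)), weights=weights, k=n)
    return [rng.gauss(0.0, sigmas[k]) for k in comps]


def sample_noniid_mog(n_per_band, band_params, seed=0):
    """Non-i.i.d. MoG noise: band b uses its own (weights, sigmas) pair.

    Passing the same pair for every band recovers the i.i.d. MoG case.
    """
    rng = random.Random(seed)
    return [sample_mog(n_per_band, w, s, rng) for w, s in band_params]


# Hypothetical example: a nearly clean band and a band with heavy-tailed noise.
bands = sample_noniid_mog(
    20000,
    [([1.0], [0.01]),             # band 1: a single narrow Gaussian
     ([0.5, 0.5], [0.01, 0.5])],  # band 2: narrow + wide components
)
print([round(pvariance(b), 4) for b in bands])
```

The empirical variance of band 2 should be close to 0.5 · 0.01² + 0.5 · 0.5² ≈ 0.125, while that of band 1 stays near 10⁻⁴, which is exactly the kind of band-to-band difference a single i.i.d. noise model cannot express.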

Some attempts have been made to combine the advantages of recent developments in image characterization and noise modeling. To the best of our knowledge, only a few such studies exist. Chen et al. [41] proposed a robust tensor factorization method based on CP decomposition and the MoG noise assumption. However, their model does not consider the uncertainty of latent variables, such as the CP factor matrices and the MoG parameters, and is thus prone to overfitting due to the point estimates of these variables obtained by optimization-based approaches. To overcome this defect, Luo et al. [42] formulated the robust CP decomposition with the MoG noise assumption as a full Bayesian model, in which all latent variables are given prior distributions and inferred under a variational Bayesian framework. Considering that CP decomposition cannot well capture the correlations along different tensor modes, Chen et al. [43] further integrated Tucker decomposition and MoG noise modeling into a generalized robust tensor factorization framework. However, this method also suffers from the overfitting problem and requires some critical hyperparameters to be manually specified, such as the tensor rank and the number of MoG components. Moreover, all the above methods impose an i.i.d. assumption on the data noise, which still underestimates the complexity of realistic noise and thus leaves room for further improvement.

To overcome the aforementioned issues, in this paper we propose a new remote sensing image denoising method that takes into consideration the intrinsic properties of both remote sensing images and realistic noise. The main contributions of this work are summarized below.

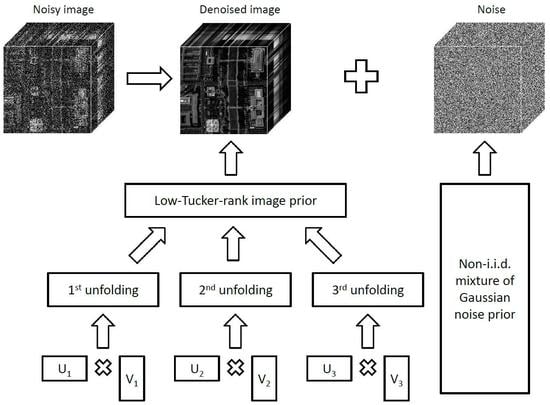

We formulate the image denoising problem as a full Bayesian generative model, in which a low-Tucker-rank image prior is exploited to characterize the intrinsic low-rank tensor structure of the underlying image, and a non-i.i.d. MoG noise prior is adopted to encode the complex and distinct statistical structures of the embedded noise.

We design a variational Bayesian algorithm to efficiently solve the proposed model, in which each variable can be updated in closed form. Moreover, we develop adaptive strategies for selecting the involved hyperparameters, which frees our algorithm from burdensome hyperparameter tuning.

We conduct extensive denoising experiments on both simulated and real MSIs/HSIs, and the results show the superiority of the proposed method over state-of-the-art competing methods.

The rest of the paper is organized as follows.

Section 2 introduces some notation used throughout the paper.

Section 3 describes the proposed model and the corresponding variational inference algorithm.

Section 4 presents experimental results and discussions.

Section 5 concludes the paper.

2. Notation

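We use boldface Euler script letters for tensors, e.g., $\boldsymbol{\mathcal{A}}$, boldface uppercase letters for matrices, e.g., $\mathbf{A}$, boldface lowercase letters for vectors, e.g., $\mathbf{a}$, and lowercase letters for scalars, e.g., $a$. In particular, we use $\mathbf{I}$, $\mathbf{0}$, and $\mathbf{1}$ for identity matrices, arrays of all zeros, and arrays of all ones, respectively. We use a pair of lowercase and uppercase letters for an index and its upper bound, e.g., $d = 1, 2, \dots, D$. We use Matlab expressions to denote elements and subarrays, e.g., $\mathbf{A}(i, j)$, $\mathbf{A}(i, :)$, and $\boldsymbol{\mathcal{A}}(:, :, k)$.

Given a tensor $\boldsymbol{\mathcal{A}} \in \mathbb{R}^{I_1 \times I_2 \times \cdots \times I_D}$ (which reduces to a matrix when $D = 2$ or a vector when $D = 1$), the Frobenius norm and the 1-norm of $\boldsymbol{\mathcal{A}}$ are, respectively, defined as

$$\|\boldsymbol{\mathcal{A}}\|_F = \Big(\sum_{i_1, \dots, i_D} \boldsymbol{\mathcal{A}}(i_1, \dots, i_D)^2\Big)^{1/2}, \qquad \|\boldsymbol{\mathcal{A}}\|_1 = \sum_{i_1, \dots, i_D} \big|\boldsymbol{\mathcal{A}}(i_1, \dots, i_D)\big|.$$

For a given dimension $d$, the mode-$d$ unfolding of $\boldsymbol{\mathcal{A}}$ is denoted as $\mathrm{unfold}_d(\boldsymbol{\mathcal{A}})$ or, more compactly, as $\mathbf{A}_{(d)}$, whose size is $I_d \times \prod_{k \neq d} I_k$. The inverse process is denoted as $\mathrm{fold}_d(\mathbf{A}_{(d)}) = \boldsymbol{\mathcal{A}}$. More precisely, the tensor element $\boldsymbol{\mathcal{A}}(i_1, \dots, i_D)$ maps to the matrix element $\mathbf{A}_{(d)}(i_d, j)$ satisfying

$$j = 1 + \sum_{k = 1, k \neq d}^{D} (i_k - 1) J_k, \qquad J_k = \prod_{m = 1, m \neq d}^{k - 1} I_m;$$

see [19] for more details.

The Tucker rank of $\boldsymbol{\mathcal{A}}$ is defined as a vector consisting of the ranks of its unfoldings, i.e.,

$$\mathrm{rank}_T(\boldsymbol{\mathcal{A}}) = \big(\mathrm{rank}(\mathbf{A}_{(1)}), \mathrm{rank}(\mathbf{A}_{(2)}), \dots, \mathrm{rank}(\mathbf{A}_{(D)})\big).$$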

Additional notation is defined where it occurs.
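For readers who prefer code, the unfolding, folding, norms, and Tucker rank can be sketched in Python with NumPy. This is an illustrative sketch, not the authors' implementation: it assumes 0-based indices, so the column index of [19], $j = 1 + \sum_{k \neq d} (i_k - 1) J_k$ with $J_k = \prod_{m < k, m \neq d} I_m$, becomes $j = \sum_{k \neq d} i_k J_k$. The helper names `unfold`, `fold`, `column_index`, and `tucker_rank` are hypothetical.

```python
import numpy as np


def unfold(A, d):
    """Mode-d unfolding A_(d) (0-based d), with the remaining indices stacked
    so that the earliest one varies fastest, as in the ordering of [19]."""
    return np.reshape(np.moveaxis(A, d, 0), (A.shape[d], -1), order="F")


def fold(M, d, shape):
    """Inverse of unfold: rebuild the tensor of the given shape from M = A_(d)."""
    rest = [shape[k] for k in range(len(shape)) if k != d]
    return np.moveaxis(np.reshape(M, [shape[d]] + rest, order="F"), 0, d)


def column_index(idx, shape, d):
    """0-based column j of A_(d) that stores A[idx]: j = sum_{k != d} i_k * J_k,
    where J_k is the product of I_m over m < k, m != d."""
    j, stride = 0, 1
    for k, (i_k, I_k) in enumerate(zip(idx, shape)):
        if k == d:
            continue
        j += i_k * stride
        stride *= I_k
    return j


def fro_norm(A):
    """Frobenius norm: square root of the sum of squared entries."""
    return float(np.sqrt(np.sum(A ** 2)))


def l1_norm(A):
    """1-norm: sum of absolute values of all entries."""
    return float(np.sum(np.abs(A)))


def tucker_rank(A, tol=None):
    """Tucker rank: the tuple of (numerical) ranks of all mode-d unfoldings."""
    return tuple(int(np.linalg.matrix_rank(unfold(A, d), tol=tol))
                 for d in range(A.ndim))
```

For example, a third-order tensor built from a random 2 × 3 × 2 core and tall random factor matrices via `np.einsum("abc,ia,jb,kc->ijk", G, U1, U2, U3)` generically has `tucker_rank` equal to (2, 3, 2). Using `order="F"` in the reshape is what makes the column ordering match the formula above.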

4. Numerical Experiments

We evaluate the denoising performance of the proposed NMoG-Tucker method on synthetic data, MSIs, and HSIs.

Table 1 lists six state-of-the-art competing methods based on low-rank matrix/tensor approximation: the matrix-based methods LRMR [38], MoG-RPCA [4], and NMoG-LRMF [7], and the tensor-based methods LRTA [24], PARAFAC [21], and KBR-RPCA [35]. Parameters involved in all competing methods are set to their default values or manually tuned for the best possible denoising performance. All experiments are conducted under Windows 10 and Matlab R2016a (Version 9.0.0.341360) on a desktop with an Intel(R) Core(TM) i7-8700K CPU at 3.70 GHz and 32 GB of memory.

We conduct both simulated and real denoising experiments. In simulated experiments, the noisy data are generated by adding synthetic noise to the original data, and the denoising performance is evaluated by both quantitative measures and visual quality. In real experiments, the goal is to recover real-world data without access to the ground truth, and the denoising results are mainly judged by visual quality.

In simulated experiments, we use the following four quantitative measures: relative error (ReErr), erreur relative globale adimensionnelle de synthèse (ERGAS) [47], mean peak signal-to-noise ratio (MPSNR), and mean structural similarity (MSSIM) [48]. Denoting by $\hat{\boldsymbol{\mathcal{X}}}$ an estimate of the original data $\boldsymbol{\mathcal{X}}$, the four measures of $\hat{\boldsymbol{\mathcal{X}}}$ with respect to $\boldsymbol{\mathcal{X}}$ are defined as follows:

where the details of SSIM can be found in [48]. In general, better denoising results have smaller ReErr and ERGAS values and larger MPSNR and MSSIM values.
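The following NumPy sketch computes ReErr, MPSNR, and ERGAS in forms that are common in the MSI/HSI denoising literature. These forms are assumptions and may differ in details (e.g., the PSNR peak value or the ERGAS normalization) from the definitions used in this paper. Bands are assumed to lie along the last axis, and MSSIM is omitted because SSIM requires the windowed statistics described in [48].

```python
import numpy as np


def rel_err(X_hat, X):
    """ReErr = ||X_hat - X||_F / ||X||_F."""
    return float(np.linalg.norm(X_hat - X) / np.linalg.norm(X))


def band_mse(X_hat, X):
    """Mean squared error of each band (bands along the last axis)."""
    return np.mean((X_hat - X) ** 2, axis=(0, 1))


def mpsnr(X_hat, X, peak=1.0):
    """Mean over bands of PSNR_k = 10 log10(peak^2 / MSE_k).
    Assumes intensities are scaled so that the peak value is `peak`."""
    return float(np.mean(10.0 * np.log10(peak ** 2 / band_mse(X_hat, X))))


def ergas(X_hat, X):
    """One common ERGAS form: 100 * sqrt(mean_k(MSE_k / mu_k^2)),
    where mu_k is the mean of band k of the original data X."""
    mu = np.mean(X, axis=(0, 1))
    return float(100.0 * np.sqrt(np.mean(band_mse(X_hat, X) / mu ** 2)))


# Toy check: a constant offset of 0.1 on data equal to 0.5 everywhere.
X = np.full((8, 8, 3), 0.5)
X_hat = X + 0.1
print(rel_err(X_hat, X), mpsnr(X_hat, X), ergas(X_hat, X))  # ~0.2, ~20 dB, ~20
```

Consistent with the text, a better estimate lowers `rel_err` and `ergas` and raises `mpsnr`.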

4.1. Synthetic Data Denoising

This section presents simulated experiments on synthetic data denoising. The original data are random low-rank tensors generated by the Tucker model with size and rank , i.e., $\boldsymbol{\mathcal{X}} = \boldsymbol{\mathcal{G}} \times_1 \mathbf{U}_1 \times_2 \mathbf{U}_2 \times_3 \mathbf{U}_3$, where the core tensor $\boldsymbol{\mathcal{G}}$ and each factor matrix $\mathbf{U}_d$ ($d = 1, 2, 3$) are drawn from the standard Gaussian distribution. We consider two rank settings and . The original data are normalized to have unit mean absolute value. We test the following three kinds of synthetic noise.

Gaussian noise: all entries are corrupted by Gaussian noise .

Gaussian + sparse noise: 80% of the entries are corrupted by Gaussian noise and 20% by additive uniform noise between .

Mixture noise: 40% of the entries are corrupted by Gaussian noise , 20% by Gaussian noise , 20% by additive uniform noise between , and 20% are missing (the locations of missing entries are not given as prior knowledge).
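The data generation described above can be sketched in NumPy as follows, covering the Tucker model with unit mean absolute value and the "Gaussian + sparse" noise case. The tensor size, Tucker rank, Gaussian standard deviation, and uniform-noise bound used in the example are hypothetical placeholders, and each entry is assigned to the sparse subset independently with the given probability, so the 20% split holds only approximately.

```python
import numpy as np


def tucker_lowrank(shape, ranks, rng):
    """Random third-order tensor X = G x1 U1 x2 U2 x3 U3 with standard Gaussian
    core and factor matrices, normalized to unit mean absolute value."""
    G = rng.standard_normal(ranks)
    U1, U2, U3 = (rng.standard_normal((n, r)) for n, r in zip(shape, ranks))
    X = np.einsum("abc,ia,jb,kc->ijk", G, U1, U2, U3)
    return X / np.mean(np.abs(X))


def add_gaussian_sparse(X, sigma, frac_sparse, bound, rng):
    """Gaussian + sparse noise: each entry independently (with probability
    frac_sparse) gets additive Uniform(-bound, bound) noise; all other
    entries get N(0, sigma^2) noise."""
    Y = X + sigma * rng.standard_normal(X.shape)
    mask = rng.random(X.shape) < frac_sparse
    Y[mask] = X[mask] + rng.uniform(-bound, bound, size=int(mask.sum()))
    return Y


rng = np.random.default_rng(0)
X = tucker_lowrank((30, 30, 30), (3, 3, 3), rng)   # hypothetical size and rank
Y = add_gaussian_sparse(X, 0.1, 0.2, 5.0, rng)     # hypothetical noise levels
```

Since the core and factors are generic Gaussian draws, each unfolding of `X` has rank equal to the corresponding entry of `ranks` with probability one.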

Table 2 reports the ReErr values and execution times of different methods on synthetic data denoising, where every result is averaged over ten trials with different realizations of both data and noise. Regarding denoising accuracy, our method consistently attains comparable or lower ReErr values than the competing methods, and its advantage becomes more significant as the noise complexity increases. Regarding computational speed, LRTA is generally the fastest method, while our method is the slowest in all cases. The relatively high cost of our algorithm is mainly due to two factors: computing the variables corresponding to all three modes requires about three times as much computation as in matrix-based methods, and updating the factor matrices row by row is much slower than updating them as a whole, as done in other tensor-based methods. Accelerating our implementation is left for future research.

4.2. MSI Denoising

This section presents simulated experiments on MSI denoising. The original data are six MSIs (Beads, Cloth, Hairs, Jelly Beans, Oil Painting, and Watercolors) from the Columbia MSI Database (http://www1.cs.columbia.edu/CAVE/databases/multispectral) [49], containing scenes of a variety of real-world objects. Each MSI is of size with intensity range scaled to . We test the following two kinds of synthetic noise.

Gaussian noise: all entries are corrupted by Gaussian noise . The signal-to-noise ratio (SNR) averaged over all 31 bands and all six MSIs is dB.

Mixture noise: 60% of the entries are corrupted by Gaussian noise , 20% by Gaussian noise , and 20% by additive uniform noise between . The SNR averaged over all 31 bands and all six MSIs is dB.

Table 3 reports the quantitative performance of different methods on MSI denoising, where every result is an average over six testing MSIs. For Gaussian noise, our method achieves comparable denoising performance to LRMR, LRTA, and KBR-RPCA. For mixture noise, our method performs better than the competing methods in terms of all three quantitative measures, and KBR-RPCA is the second best.

Figure 2 shows the average PSNR and SSIM values across all bands of the denoising results by different methods. To make the details easier to observe, we also plot the differences between our results and the competing ones at larger scales. It can be observed that our method achieves comparable or better performance for most bands, while KBR-RPCA exhibits the best robustness over all bands.

Figure 3 shows two examples of MSI denoising under Gaussian noise and mixture noise. These figures suggest that the results of the competing methods generally retain some noise or alter image details, whereas our results exhibit higher visual quality in both noise removal and detail preservation. For better visualization, we enlarge a certain patch and show the corresponding error map, which highlights the difference between the denoised patch and the original one. A close inspection reveals that our error maps contain less color information than the competing ones, indicating that our method better recovers the spatial-spectral structures of the original MSIs.

4.3. HSI Denoising

We conduct both simulated and real experiments on HSI denoising.

Gaussian noise: all entries are corrupted by Gaussian noise . For DCmall, the SNR of each band varies from 6 to 20 dB, and the mean SNR of all 160 bands is 13.79 dB. For Cuprite, the SNR of each band varies from 16 to 20 dB, and the mean SNR of all 89 bands is 18.69 dB.

Speckle noise: all bands are corrupted by non-i.i.d. speckle noise with signal-dependent intensity, which is simulated by multiplicative uniform noise with mean 1 and variance randomly sampled from for each band. For both DCmall and Cuprite, the SNR of each band varies from 3 to 30 dB. The mean SNR of all 160 bands in DCmall is 19.52 dB, and that of all 89 bands in Cuprite is 20.03 dB.

Mixture noise: all bands are corrupted by non-i.i.d. zero-mean Gaussian noise with band-dependent variances, where the SNR of each band is uniformly sampled from 10 to 20 dB. Then, we randomly choose 90/50 bands in DCmall/Cuprite to add complex noise: the first 40/20 bands are corrupted by stripe noise with stripe number between and stripe intensity between ; the middle 40/20 bands are corrupted by deadlines with line number between ; to entries in the last 40/20 bands are corrupted by speckle noise with mean 1 and variance . Thus, each band is randomly corrupted by one to three types of noise. For both DCmall and Cuprite, the SNR of each band varies from 4 to 20 dB. The mean SNR of all 160 bands in DCmall is 11.62 dB, and that of all 89 bands in Cuprite is 12.04 dB.
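A partial NumPy sketch of the mixture-noise simulation is given below. It covers the band-wise Gaussian noise with a prescribed per-band SNR, stripes, and deadlines; the speckle component is omitted. The SNR of a band is taken here as 10·log10 of the ratio between its signal energy and noise energy, which is an assumption about the definition used, and the stripe and deadline counts and intensities are hypothetical parameters.

```python
import numpy as np


def add_bandwise_gaussian(X, snr_db, rng):
    """Zero-mean Gaussian noise with a different variance per band, chosen so
    that band b has SNR 10*log10(||x_b||^2 / ||n_b||^2) = snr_db[b] in
    expectation. X has shape (rows, cols, bands)."""
    Y = np.array(X, dtype=float)
    for b in range(X.shape[2]):
        signal_power = np.mean(X[:, :, b] ** 2)
        sigma = np.sqrt(signal_power / 10.0 ** (snr_db[b] / 10.0))
        Y[:, :, b] += sigma * rng.standard_normal(X.shape[:2])
    return Y


def add_stripes(Y, bands, n_range, amp_range, rng):
    """Add a constant offset to randomly chosen columns of each listed band."""
    for b in bands:
        n = rng.integers(n_range[0], n_range[1] + 1)
        cols = rng.choice(Y.shape[1], size=n, replace=False)
        Y[:, cols, b] += rng.uniform(amp_range[0], amp_range[1], size=n)
    return Y


def add_deadlines(Y, bands, n_range, rng):
    """Set randomly chosen columns of each listed band to zero (dead lines)."""
    for b in bands:
        n = rng.integers(n_range[0], n_range[1] + 1)
        cols = rng.choice(Y.shape[1], size=n, replace=False)
        Y[:, cols, b] = 0.0
    return Y


rng = np.random.default_rng(1)
X = rng.uniform(0.1, 1.0, size=(40, 40, 6))                  # stand-in clean HSI
Y = add_bandwise_gaussian(X, rng.uniform(10, 20, size=6), rng)
Y = add_stripes(Y, bands=[0, 1], n_range=(3, 6), amp_range=(-0.25, 0.25), rng=rng)
Y = add_deadlines(Y, bands=[2, 3], n_range=(3, 6), rng=rng)
```

Drawing a separate SNR for every band is what makes the Gaussian component non-i.i.d. across bands.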

Table 4 presents the quantitative performance of different methods on simulated HSI denoising, where every result is an average over five trials with different noise realizations. Compared with the competing methods, our method consistently yields better performance in terms of MPSNR, MSSIM, and ERGAS in all cases.

Figure 4 plots the average PSNR and SSIM values across all bands by different methods, as well as the differences between our results and the competing ones at larger scales. These results suggest that our method achieves leading quantitative performance for most bands. We also observe that the matrix-based competing methods LRMR, MoG-RPCA, and NMoG-LRMF suffer from sharp PSNR and SSIM drops at certain bands in the cases of speckle noise and mixture noise, e.g., bands 40–80 in DCmall and bands 40–60 in Cuprite. In comparison, our method does not exhibit such a phenomenon, which demonstrates its robustness over all HSI bands.

Figure 5 shows two denoising examples on typical bands in DCmall and Cuprite. The noisy band in DCmall is severely contaminated by a mixture of Gaussian noise, deadlines, and speckle noise; the noisy band in Cuprite is overwhelmed by heavy speckle noise. We observe that the matrix-based methods LRMR, MoG-RPCA, and NMoG-LRMF, although adopting flexible noise priors, cannot adequately separate the original HSIs from such severe degradations, especially for Cuprite, which has fewer spectral bands. As for tensor-based methods, LRTA can hardly reduce the noise, while PARAFAC leaves all the deadlines. Their poor performance is due to the Gaussian noise assumption, which cannot fit complex noise. In comparison, adopting more intrinsic data and noise priors, KBR-RPCA and our method yield satisfactory denoising results in both cases. Compared with KBR-RPCA, our method preserves finer HSI structures with less residual noise, as can be seen from the demarcated patches and the corresponding error maps. Our better performance is mainly attributed to the non-i.i.d. MoG noise prior, which has a better fitting capability than the Gaussian + sparse assumption in the RPCA framework.

Real HSI denoising. Our experiment uses two real HSI datasets: Indian Pines (https://engineering.purdue.edu/~biehl/MultiSpec/hyperspectral.html) of size and Urban (http://www.tec.army.mil/hypercube) of size . In both datasets, some bands are polluted by atmosphere and water absorption and contain little useful information. We do not remove them, in order to test the robustness of different methods under severe degradation. The intensity range of the input HSI is scaled to .

Figure 6 shows a denoising example on band 220 in Indian Pines. One can see that the original band is overwhelmed by noise, with almost no useful information. Among the competing methods, LRTA fails to handle such severe degradation; MoG-RPCA still leaves much noise over the whole image; LRMR, PARAFAC, and KBR-RPCA remove more noise but simultaneously lose tiny image details; NMoG-LRMF yields a visually satisfactory result but seems to produce false edges in the demarcated patches. In contrast, the proposed method outperforms the competing methods in terms of both noise removal and detail preservation.

Figure 7 presents a classification example on Indian Pines. This test provides a task-oriented evaluation of the denoising performance of different methods, in terms of their influence on classification accuracy. In the ground-truth classification map, a total of 10249 samples are divided into 16 classes, and the number of samples in each class ranges from 20 to 2455. To conduct supervised classification, we randomly choose ten samples from each class as training data and use the remaining samples in each class as testing data. Then, support vector machine (SVM) classification [50] is performed on the noisy image and its denoised versions by different methods, and the classification results are quantitatively evaluated by overall accuracy (OA). It can be seen that noise corruption significantly limits the classification accuracy, and the classification accuracy on the denoised HSIs improves to varying degrees since the noise is suppressed. Among all denoising methods, our method leads to the highest OA value, demonstrating its superiority in benefiting SVM classification.
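The per-class sampling protocol and the OA metric can be sketched in plain Python as follows. The SVM itself is deliberately left out (any classifier trained on the returned training indices can be plugged in), the helper names are hypothetical, and the default of ten training samples per class mirrors the setting described above.

```python
import random
from collections import defaultdict


def per_class_split(labels, n_train=10, seed=0):
    """Randomly pick n_train sample indices per class for training;
    all remaining samples of that class are used for testing."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, c in enumerate(labels):
        by_class[c].append(idx)
    train, test = [], []
    for c in sorted(by_class):
        idxs = by_class[c][:]
        rng.shuffle(idxs)
        train.extend(idxs[:n_train])
        test.extend(idxs[n_train:])
    return sorted(train), sorted(test)


def overall_accuracy(y_true, y_pred):
    """OA: fraction of test samples whose predicted label equals the true label."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and of equal length")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical labels: class sizes 20 and 35 (Indian Pines' smallest class has 20).
labels = [0] * 20 + [1] * 35
train_idx, test_idx = per_class_split(labels)
print(len(train_idx), len(test_idx))  # 20 35
```

Because every class contributes the same number of training samples, small classes are not crowded out of the training set, while OA is dominated by the large classes in the test set.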

Figure 8 shows a denoising example on band 99 in Urban under slight noise. In this example, the original band is mainly corrupted by several vertical stripes with intensity 0.01∼0.02. To visually evaluate the denoising performance, we show color maps of the noise components estimated by different methods, which should highlight the underlying noise with as few image structures as possible. For better visualization, we also plot the corresponding vertical mean profiles. From these results, we observe that LRTA fails to recognize the stripes, while the other competing methods can detect the stripes but simultaneously remove structural information of the original image. In comparison, our method clearly extracts the stripes with very few image features, indicating a more accurate signal-noise separation.

Figure 9 displays a denoising example on band 206 in Urban under severe noise, including the noisy/denoised bands and the corresponding horizontal mean profiles. One can see that the original band is contaminated by a mixture of stripes, deadlines, and other complex noise, leading to rapid fluctuations in the horizontal mean profile. Regarding the denoising results by different methods, LRTA can hardly suppress the noise; LRMR, MoG-RPCA, PARAFAC, and KBR-RPCA still leave some horizontal stripes, and the corresponding curves show evident fluctuations; NMoG-LRMF removes the noise and produces a smooth curve, but it also introduces some spectral distortions in certain regions, such as the red demarcated patch. Comparatively, our method effectively attenuates the noise and simultaneously reveals the original spatial-spectral information, providing a better trade-off between noise removal and detail preservation.
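The mean profiles used in Figures 8 and 9 can be sketched as simple column and row averages of a band. We assume here that the vertical mean profile is the mean of each column, plotted against the column index so that vertical stripes appear as spikes, and that the horizontal mean profile is the mean of each row; the function names are hypothetical.

```python
import numpy as np


def vertical_mean_profile(band):
    """Mean of each column of a 2-D band; vertical stripes show up as spikes."""
    return np.asarray(band, dtype=float).mean(axis=0)


def horizontal_mean_profile(band):
    """Mean of each row of a 2-D band; horizontal stripes show up as spikes."""
    return np.asarray(band, dtype=float).mean(axis=1)


# Toy band: flat background with one vertical stripe of intensity 0.02 in column 2.
band = np.full((4, 5), 0.3)
band[:, 2] += 0.02
print(vertical_mean_profile(band))    # column 2 stands out at about 0.32
print(horizontal_mean_profile(band))  # every row averages about 0.304
```

Averaging along the stripe direction suppresses the random noise while preserving the stripe offset, which is why a well-denoised band yields a smooth profile.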

4.4. Discussion

In Section 3.3, we developed adaptive strategies for selecting the hyperparameters involved in our model. These strategies themselves introduce additional hyperparameters, which are fixed to default settings in our experiments. This section discusses the selection of these hyperparameters and tests their effects on the denoising performance.

The selection of and . The hyperparameters and are introduced in the update formula of the threshold (25) to determine the Tucker rank estimate . In (25), controls the upper bound of the sum of the singular values dropped in each iteration. In general, a small leads to a slow but stable rank-decreasing process; a large makes this process fast but aggressive, increasing the risk of underestimating the true rank. On the other hand, in (25) controls the lower bound of the threshold , which provides a mechanism for avoiding overestimation of the true rank. Roughly speaking, larger values of make it easier for our algorithm to reduce the rank. Note that an overly large tends to underestimate the true rank; e.g., if one sets , then the rank-decreasing process cannot stop until the rank reduces to zero.

Table 5 investigates the effects of and on the denoising performance of our method. This test is based on synthetic data denoising, and the original data are of size and Tucker rank . We observe that our method yields rather stable ReErr values with exact estimates of the true rank under a wide range of settings of and . One exception is the mixture noise case with , where the true rank is underestimated, resulting in an evident increase in ReErr. Since our method is robust within a reasonable range of and , we choose and as their default settings in all experiments.

The initialization of . The hyperparameter controls the number of Gaussian components in the MoG noise prior (2). In Section 3.3, we developed an adaptive strategy to reduce from a large starting point to a value matching the noise complexity. However, it remains to choose an appropriate initialization .
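As an illustration only, one common heuristic for shrinking the number of MoG components is to discard components whose mixing weight has become negligible and to renormalize the remaining weights; the following Python sketch shows this idea. It is a swapped-in example and not necessarily the strategy of Section 3.3, and the threshold `min_weight` is a hypothetical parameter.

```python
def prune_mog(weights, sigmas, min_weight=1e-3):
    """Drop MoG components whose mixing weight is below min_weight and
    renormalize the remaining weights so that they sum to one."""
    kept = [(w, s) for w, s in zip(weights, sigmas) if w >= min_weight]
    if not kept:
        raise ValueError("min_weight removes every component")
    total = sum(w for w, _ in kept)
    return [w / total for w, _ in kept], [s for _, s in kept]


# Hypothetical state after some iterations, starting from 4 components.
w, s = prune_mog([0.6, 0.3995, 0.0004, 0.0001], [0.05, 0.4, 1.0, 2.0])
print(len(w))  # 2
```

Under such a scheme, an initialization that is too small can never be corrected upward, whereas a generous initialization is pruned down to what the data support, which matches the motivation for starting from a large value.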

Table 6 studies the effects of on the denoising performance of our method. This test is based on synthetic data denoising, and the original data are of size and Tucker rank . From these results, we make two observations. First, as expected, the developed selection strategy can find suitable values of that fit the noise distribution. Second, our method performs poorly when is too small to provide sufficient noise-fitting capability, while its performance tends to be stable once is larger than a reasonable value, e.g., 8. Therefore, we set the default value of to in all experiments, since it is robust enough for most realistic noise.