1. Introduction

Smart infrastructure has become an attractive research field in recent years. It integrates technologies of sensing, control, and Internet of Things (IoT) to provide a more secure, efficient, and convenient living space for the residents. While energy management [1] and the IoT network [2] are some of the research aspects, there is another growing trend of deploying domestic robots in smart infrastructure to enhance the quality of living and working. Personal robots that do laundry or floor cleaning would reduce the housework burden, and assistive mobile robots can help the elderly and disabled to live with more ease and security [3]. To interact with these robots, a natural and intuitive interface is needed for interpreting human intention. Hand gesture recognition is an appropriate tool, as we naturally use our hands to convey information while communicating with others [4]. In previous research, hand gesture recognition has been applied to many smart-home applications, including the control of appliances such as lamps [5] and TVs [6], interaction with computer games [7], and robot control [8].

Hand gestures can be categorized into two types: static and dynamic gestures. The former is generally defined by a static posture of the hand and fingers, and the latter is formed by the dynamic movement of the hand and arm. Though a non-wearable camera system can recognize both types of gestures [9], it suffers from a restricted working region: the user has to stand in front of the camera. In contrast, this problem can be solved by the thriving technology of wearable IoT [10]. Using a wearable camera, the user can walk from room to room or even go outdoors while keeping the capability of sending gesture commands.

Using a wearable camera for hand gesture recognition has many advantages over other types of sensors such as surface electromyography (sEMG) and inertial sensors. Though sEMG sensors can detect useful signals from muscle activities [11], there remain some open questions about their sensitivity to different subjects and different mounting positions. Inertial sensors mounted around the user’s wrist [12] can obtain the posture and acceleration of the hand to recognize dynamic gestures. However, when it comes to static gestures, a set of bulky sensors needs to be placed on each finger [13] to measure how far the fingers are bent. A wearable camera, in contrast, captures both the hand and the background, so it is a promising way to recognize both static and dynamic gestures. Moreover, through the analysis of the background image, it also supports other smart-home uses such as text tracking for the blind [14], diet monitoring of the elderly [15], and recognizing daily activities [16] to ensure the security of the elderly who stay alone at home.

Several vision-based wearable systems have been developed in past years for hand gesture recognition. Valty et al. [17] analyzed the goals and feasibility of using a wrist-worn RGB camera. Howard et al. [18] used infrared sensors beneath the wrist to detect whether a finger is bending. Later, Kim et al. [19] utilized a combination of infrared LED and infrared camera beneath the wrist to calculate the bending angle of each finger. However, placing a large device beneath the wrist can be intrusive when people use a mouse on a table or stand straight, since the underside of the wrist naturally contacts the table surface or the leg. Lv et al. [20] directly placed a smartphone on the back of the wrist; the hardware is cumbersome and the software only detects gestures formed by the index finger and middle finger. Sajid et al. [21] used Google Glass on the head to recognize in-air hand gestures, but the user has to look at their hands all the time, which is inconvenient. A more thorough investigation of wearable cameras is needed to make them more user-friendly and convenient.

Many algorithms have been proposed to recognize hand gestures from the image. Some are machine learning algorithms using the features of Gabor filters [22], Haar [23], HOG [24], and CNN [25], or extracting features from the hand edge [26] or hand shape [7]. However, these features do not intuitively represent hand gestures formed by different finger configurations. If the user wants to define a new class of gesture, such as pointing to the left or right using the index finger, many new training images need to be collected to retrain the model. Other algorithms take fingers as the features. The detection of fingers is based on ridge detection [27], convex hull [28], curvature [9], drawing a circle on the hand centroid [29,30], or convex decomposition [31]. Nevertheless, the method in [31] is time-consuming, and the rest [9,27,28,29,30] might have difficulties when handling distorted fingers and images taken by a wearable camera. As for the subsequent classification, the algorithms in [27,30] are learning-based and require many training images for each class. The works in [9,28,29] used rule-based classifiers with no training images, and thus lacked adaptiveness to gestures with distortion and varying postures. Therefore, a balance should be kept between convenience and robustness.

The contribution of our work is threefold: a novel wearable camera system, an improvement on finger segmentation, and the recognition of fingers based on a single template image. To the best of our knowledge, this is the first wearable camera system worn on the backside of the wrist for recognizing hand gestures (if the one using a smartphone [20] is not included). It is less intrusive than camera systems beneath the wrist [19] and does not require eye gaze, unlike the one worn on the head [21]. We also innovated on the algorithms. A two-phase distance transformation method is proposed to segment fingers out of the palm. Then, the angles of the detected fingers with respect to the wrist are calculated. For those fingers not in sight, all their possible positions are predicted based on the known fingers and the template gesture. After that, these predicted gestures, each with a complete set of five fingers, are compared to the template, and the one with the minimal error is the recognition result.

The rest of the paper is arranged as follows: the architecture of the wearable hand gesture recognition system is described in Section 2. The algorithms of finger detection, finger angle prediction, and template matching are presented in Section 3, and are then evaluated on the dataset in Section 4. Furthermore, an application of robot control is given in Section 5. Finally, we discuss and conclude our work in Section 6 and Section 7.

2. System Architecture

The architecture of the proposed hand gesture recognition system is shown in Figure 1. The wearable wrist-worn RGB camera device, WwwCam, is worn on the backside of the user’s right wrist to capture images of the hand, which are then sent to the computer through Wi-Fi. The computer recognizes the hand gesture and applies it to smart-home applications such as lighting a lamp, switching TV channels, and controlling domestic robots. Gestures used in this system are static gestures, which are defined by the status of each finger and can be inferred from a single image, such as gesture “Five” with five spread fingers, and gesture “Nine” with three fingers, as shown in the two small pictures in Figure 1.

The configuration of WwwCam is displayed in Figure 2. An RGB monocular camera is fixed on a holder and worn on the wrist through a flexible wristband. Due to the close distance between the camera and the hand, a camera with a wide view angle of 150° was selected so that the hand could fall into the camera view. The camera is connected to a Raspberry Pi Zero W through a USB cable. This Raspberry Pi is a microcomputer with a BCM2835 chip providing a 1 GHz single-core CPU and a BCM43438 chip for 2.4 GHz 802.11n wireless communication. There are other options for the microprocessing unit, such as Arduino. However, the clock frequency of the Arduino is usually less than 0.1 GHz, which limits its capability for complex tasks such as image processing. Moreover, the Raspberry Pi Zero W with a Linux operating system has drawn considerable interest from researchers, due to its well-developed drivers for peripheral modules like cameras, a Wi-Fi module for various communications, as well as a relatively small size of 65 × 30 × 5.1 mm³. Therefore, the Raspberry Pi was chosen as the processor of the device.

We installed a Linux system called Raspbian on the Raspberry Pi and programmed it using Python. The program reads the video stream from the camera and transmits it to the laptop through Wi-Fi over TCP using the “socket” library. This process simulates the transmission of video from the wearable device to the computer in a smart home with a domestic Wi-Fi environment. Then, on the laptop, MATLAB receives the video and runs the hand gesture recognition algorithm.
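As a rough illustration of this transmission step, the sketch below sends one encoded frame over a TCP connection with Python’s standard `socket` module. The 4-byte length prefix and the helper names `send_frame` and `recv_frame` are assumptions made for this example; the text only states that TCP and the “socket” library were used.

```python
import socket
import struct


def send_frame(sock: socket.socket, payload: bytes) -> None:
    """Send one frame as a 4-byte big-endian length followed by the bytes."""
    sock.sendall(struct.pack(">I", len(payload)) + payload)


def _recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes, since TCP may deliver data in smaller pieces."""
    chunks = []
    while n > 0:
        chunk = sock.recv(n)
        if not chunk:
            raise ConnectionError("socket closed before the frame was complete")
        chunks.append(chunk)
        n -= len(chunk)
    return b"".join(chunks)


def recv_frame(sock: socket.socket) -> bytes:
    """Receive one length-prefixed frame (assumed framing, see lead-in)."""
    (length,) = struct.unpack(">I", _recv_exact(sock, 4))
    return _recv_exact(sock, length)


if __name__ == "__main__":
    # A connected local socket pair stands in for the Wi-Fi link.
    device, laptop = socket.socketpair()
    send_frame(device, b"fake-image-bytes")
    print(recv_frame(laptop))
    device.close()
    laptop.close()
```

A length prefix is one simple way to mark frame boundaries, because TCP itself delivers an unstructured byte stream.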

The wearable device is supplied by a 5 V power bank with a capacity of 2200 mAh. The Raspberry Pi consumes an average current of 0.30 A when reading and transmitting the video, and consumes 0.11 A at the idle state. Thus, when the user continuously uses it to send gesture commands, the device could be running for more than five hours. Several approaches can be applied to further extend the battery life, such as using the cell with a larger energy density, reducing the frequency of image acquisition, and optimizing the software to keep the device in sleep mode when the user is not using it.
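The battery figures can be checked with a short calculation. The sketch below treats the full 2200 mAh rating as available at the stated current, which is an idealization (conversion losses in a real power bank are ignored), so the results are upper bounds rather than measured runtimes.

```python
def runtime_hours(capacity_mah: float, current_a: float) -> float:
    """Ideal runtime in hours: capacity divided by the average current draw."""
    return capacity_mah / (current_a * 1000.0)


if __name__ == "__main__":
    streaming = runtime_hours(2200, 0.30)  # reading and transmitting video
    idle = runtime_hours(2200, 0.11)       # idle state
    print(f"streaming: {streaming:.1f} h, idle: {idle:.1f} h")
```

The ideal streaming figure is about 7.3 hours, which leaves room for losses while remaining consistent with the stated runtime of more than five hours.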

The wristband and the support of the camera are adjustable, so the camera can be set to a proper position and orientation to have a good view of the hand. That is, when five fingers are stretched out: (1) the middle finger is at about the middle of the image; (2) the highest fingertip is above half of the image height; and (3) the wrist is at the bottom of the image. A feasible position can be seen in Figure 1 and Figure 2.

3. Algorithm

In the proposed hand gesture recognition scheme, the recognition of static hand gestures is based on the idea that if we find the fingers in the image and acquire their names, we know the gesture type. Thus, we focused on improving the finger segmentation, and then proposed a simple but effective finger angle prediction and a template matching metric to recognize each finger’s name.

The images in the dataset we used were first resized from the original size of 960 × 1280 pixels to 120 × 160 pixels for more efficient computing. Then, the hand gesture recognition algorithm is achieved in four steps. (1) First, the hand is segmented from the image by setting thresholds in YCbCr color space; (2) finger regions are separated from the palm through a two-phase distance transformation. Then, we locate each finger and calculate their angles with respect to the wrist; (3) all possible positions of the remaining fingers are predicted; (4) among them, the one that is the most rational and similar to the template is considered as the right case. Its corresponding gesture is the recognition result.

3.1. Hand Segmentation

Many methods based on skin color have been proposed to detect the hand, where the original RGB image is often converted to another color space such as YCbCr, HSV, and UCS for further processing [32]. Since this is not our focus, we chose an experimental environment with a background color different from the skin, and adopted a simple method to segment the hand region by thresholding in the YCbCr color space. First, the image is converted using Equation (1) [33]. Then, for each pixel, if all three of its color channels are between the corresponding lower and upper thresholds, this pixel belongs to the hand and is marked as white. Otherwise, it is marked as black.
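The thresholding rule can be sketched in a few lines of Python. The conversion below is the ITU-R BT.601 studio-range formula, a common choice that is assumed here to stand in for Equation (1); the threshold values in `THRESHOLDS` are hypothetical placeholders, since the values actually used are given in Figure 3.

```python
def rgb_to_ycbcr(r: int, g: int, b: int) -> tuple:
    """Convert 8-bit RGB to YCbCr (assumed ITU-R BT.601 studio-range formula)."""
    rn, gn, bn = r / 255.0, g / 255.0, b / 255.0
    y = 16.0 + 65.481 * rn + 128.553 * gn + 24.966 * bn
    cb = 128.0 - 37.797 * rn - 74.203 * gn + 112.0 * bn
    cr = 128.0 + 112.0 * rn - 93.786 * gn - 18.214 * bn
    return y, cb, cr


# Hypothetical (lower, upper) bounds for Y, Cb, Cr; not the paper's values.
THRESHOLDS = ((0.0, 255.0), (77.0, 127.0), (133.0, 173.0))


def is_hand_pixel(rgb, thresholds=THRESHOLDS) -> bool:
    """A pixel is hand only if all three channels lie inside their bounds."""
    channels = rgb_to_ycbcr(*rgb)
    return all(lo <= v <= hi for v, (lo, hi) in zip(channels, thresholds))


def hand_mask(image):
    """Map rows of (R, G, B) tuples to rows of 1 (white) and 0 (black)."""
    return [[1 if is_hand_pixel(px) else 0 for px in row] for row in image]


if __name__ == "__main__":
    row = [(224, 172, 105), (0, 0, 255)]  # a skin-like tone and pure blue
    print(hand_mask([row]))
```

In practice the bounds would be tuned to the user’s skin tone and the lighting, which is why this step is sensitive to the environment.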

After setting thresholds to obtain a binary image, we apply erosion, dilation, and hole-filling operations to eliminate noise. Among the remaining connected components, the one that contains the bottom middle point of the image is considered to be the hand. All subsequent operations are done within this region of interest. The procedures of segmentation, the thresholds, and the outcome are shown in Figure 3. Notice that the image we used has a small size of 120 × 160 pixels, so figures of the hand shown in this paper might not look smooth.

3.2. Finger Detection

3.2.1. Segment Fingers

We proposed a two-phase distance transformation method to segment fingers. Distance transformation (DT) of the Euclidean type calculates the Euclidean distance between a pixel and its nearest boundary in linear time [34]. In previous studies [29,30], it was used to locate the palm centroid for drawing a circle with a proper radius to separate fingers and palm. Here, we exploit another of its properties: it can retain the shape of the palm while eliminating spindly fingers, with better performance than circle-based segmentation.

In phase one, the edge of the hand mask is set as the boundary for the first DT. The resultant image is shown in Figure 4a, where whiter pixels represent a greater distance to the boundary. The radius of the hand’s maximum inner circle R0, which equals the largest distance value in the DT image, is used as a reference for the following operations. Then, the region with a distance value larger than din is found and named the palm kernel, which is used for the second DT. This process can also be understood as contracting the hand towards its inside to eliminate fingers while retaining the shape of the palm. The value of the contraction distance din was set as R0/2.

In phase two, the palm kernel is used as the boundary for the second DT, displayed in Figure 4b. After DT, the region with a distance value no greater than din is considered as the palm, which is similar to expanding the palm kernel to its original shape. Further, the regions with a value larger than (din + dout) are considered as the finger regions, where dout is set as R0/7 after experiment. The reason for setting this expansion distance dout is twofold: (1) to exclude narrow regions that are not fingers, caused either by noise or by a finger that is not fully bent; and (2) to more robustly estimate the region width to determine the number of fingers it contains. The results of several other gesture samples are shown in Figure 4c–f.
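The two phases can be illustrated on a small synthetic mask. The sketch below is not the linear-time DT of [34]; it uses a brute-force nearest-pixel search for clarity, and it approximates the distance to the hand edge by the distance to the nearest background pixel. The function names and the disc-plus-bar test shape are assumptions made for this example.

```python
import math


def nearest_distance(points, targets):
    """Brute-force Euclidean distance from each point to its nearest target."""
    return {p: min(math.hypot(p[0] - t[0], p[1] - t[1]) for t in targets)
            for p in points}


def segment_fingers(mask):
    """Split a binary hand mask (rows of 0/1) into palm and finger pixels."""
    cells = [(r, c) for r, row in enumerate(mask) for c, _ in enumerate(row)]
    hand = [p for p in cells if mask[p[0]][p[1]]]
    background = [p for p in cells if not mask[p[0]][p[1]]]

    # Phase one: distance of every hand pixel to the hand boundary.
    d1 = nearest_distance(hand, background)
    r0 = max(d1.values())            # radius of the maximum inner circle
    d_in, d_out = r0 / 2, r0 / 7     # contraction and expansion distances
    kernel = [p for p in hand if d1[p] > d_in]

    # Phase two: distance of every hand pixel to the palm kernel.
    d2 = nearest_distance(hand, kernel)
    palm = {p for p in hand if d2[p] <= d_in}
    fingers = {p for p in hand if d2[p] > d_in + d_out}
    return r0, palm, fingers


def demo_mask(height=40, width=25):
    """Synthetic hand: a disc-shaped palm with one thin vertical finger."""
    mask = [[0] * width for _ in range(height)]
    for r in range(height):
        for c in range(width):
            in_palm = (r - 28) ** 2 + (c - 12) ** 2 <= 100
            in_finger = 2 <= r <= 20 and 11 <= c <= 13
            mask[r][c] = 1 if (in_palm or in_finger) else 0
    return mask


if __name__ == "__main__":
    r0, palm, fingers = segment_fingers(demo_mask())
    print(f"R0 = {r0:.2f}, palm pixels = {len(palm)}, finger pixels = {len(fingers)}")
```

Pixels whose second-phase distance falls between din and din + dout belong to neither set, which is the margin that dout introduces.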

3.2.2. Calculate Finger Angles

In the palm region, the palm’s centroid point and the bottom middle point are denoted as Pc0 and P0, respectively, as shown in Figure 5a. We established a coordinate system at the bottom-left corner of the image, so a pixel Pi can be represented as (Pix, Piy). Afterwards, we define the inclination angle of two points <Pi, Pj> as follows:

The orientation of the hand is then calculated as (<P0, Pc0> − π/2). In other parts of the algorithm, this value is by default subtracted from all other angle values to amend the orientation problem.
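A small sketch of the angle computation follows. The exact defining equation is not reproduced here, so the code assumes the inclination angle is the direction of the vector from the first point to the second, obtained with `atan2`, with points given as (x, y) in the bottom-left coordinate system described above. The function names are chosen for this example.

```python
import math


def inclination(p_i, p_j):
    """Angle of the vector from p_i to p_j, measured from the +x axis (radians)."""
    return math.atan2(p_j[1] - p_i[1], p_j[0] - p_i[0])


def hand_orientation(p0, pc0):
    """Hand tilt as <P0, Pc0> minus pi/2, so an upright hand gives 0."""
    return inclination(p0, pc0) - math.pi / 2


if __name__ == "__main__":
    p0 = (80, 0)  # bottom middle point of a 160-pixel-wide image
    print(hand_orientation(p0, (80, 40)))  # centroid straight above P0
    print(hand_orientation(p0, (70, 40)))  # centroid shifted to the left
```

Under this convention, a centroid directly above P0 gives zero orientation, and a centroid shifted to the left gives a positive value.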

In each finger region, the left and right corners are defined as the points which are on the finger’s edge, but one pixel away from the hand edge. Among these points, the one with the largest angle with respect to P0 is considered as the left corner, while the one with the smallest angle is the right corner. The distance between them is used to estimate the number of fingers in this region, which equals:

where ni is the number of fingers in the ith region, Wi is the width of the ith region, and wave is the average width of a finger calculated from the template image. The total number of fingers in this picture equals N = ∑ni, and the fingers are indexed from left to right as 1–N.

Then, each finger is enlarged to the palm to obtain the whole finger region, as shown in Figure 5b. The regions are expanded four times, each time by three pixels. The total extended distance is therefore twelve pixels, which is about twice dout, to ensure the expansion is complete.

The left and right corners are once again located. For the ith region, (ni − 1) finger corners are uniformly inserted between the left and right corners. Then, between each pair of adjacent corners, a finger point is inserted, marked with red hollow circles in Figure 5b. All finger points are indexed from left to right as Pi, i = 1–N. The two adjacent corners at the left and right side of Pi are called the finger left point PLi and the finger right point PRi. The angle of the ith finger is defined as A[i], which equals <P0, Pi>. The finger left and right angles AL[i] and AR[i] are defined in the same way. At this point, each finger in the image is detected and described by three angles. The overall procedure is described in Algorithm 1.

| Algorithm 1 Finger detection |

function Finger Angles = Finger detection (Hand Mask)

[Palm Kernel] = 1st Distance Transformation (Boundary = Edge of Hand Mask)

[Palm, Finger Regions] = 2nd Distance Transformation (Boundary = Palm Kernel)

[Left & Right Corners] = Find Corners (Finger Regions)

[Finger Number in Each Region] = Evaluate Distance (Left & Right Corners)

[Finger Regions] = Enlarge Fingers to the Palm (Finger Regions)

[Left & Right Corners] = Find Corners (Finger Regions)

[Finger Angles] = Calculate Finger Angles (Left & Right Corners, Finger Number)

[Boundary Angles] = Find the Left and Right Boundary Points (Edge of Hand Mask) |
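The corner insertion and angle calculation for a single finger region might look like the sketch below. It assumes that the inserted corners lie on the straight line between the region’s left and right corners and that each finger point is the midpoint of two adjacent corners; the paper may place these points differently (for example along the contour). The function name and argument layout are hypothetical, and angles are taken with `atan2` relative to P0.

```python
import math


def finger_angles(p0, left_corner, right_corner, n_fingers):
    """Return (A, AL, AR) angle lists for one finger region, left to right."""
    def lerp(t):
        # Point at fraction t of the way from the left to the right corner.
        return (left_corner[0] + t * (right_corner[0] - left_corner[0]),
                left_corner[1] + t * (right_corner[1] - left_corner[1]))

    def angle(p):
        return math.atan2(p[1] - p0[1], p[0] - p0[0])

    # n_fingers + 1 corners: the two region corners plus n_fingers - 1 inserted.
    corners = [lerp(k / n_fingers) for k in range(n_fingers + 1)]
    # One finger point midway between each pair of adjacent corners.
    points = [lerp((k + 0.5) / n_fingers) for k in range(n_fingers)]

    a = [angle(p) for p in points]
    al = [angle(corners[k]) for k in range(n_fingers)]
    ar = [angle(corners[k + 1]) for k in range(n_fingers)]
    return a, al, ar


if __name__ == "__main__":
    a, al, ar = finger_angles((80, 0), (60, 60), (100, 60), n_fingers=2)
    print([round(math.degrees(v), 1) for v in a])
```

For a region holding two fingers, the right angle of the first finger coincides with the left angle of the second, since they share the inserted corner.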

3.2.3. Find Boundaries

Before the next step, left and right boundary points are detected, which indicate the possible angle range of the five fingers, as shown by the blue circles in Figure 5b. For the left boundary, the search starts from the left bottom point of the hand contour and goes clockwise along the contour. When the slope angle of the tangent line at some point is smaller than π/3, or the searching point reaches the left corner of the leftmost finger, the search stops and the boundary point is found. The rule for the right boundary is the same. To be more specific, the two endpoints of the tangent line are chosen to be five pixels away, shown in light blue in the figure. The first twenty points at the beginning of the search are skipped because they might contain the confusing contour of the wrist. Finally, the left and right boundary angles ABL and ABR are calculated using the left and right boundary points.

3.3. Finger Angle Prediction

The proposed algorithm requires one template image for each person to implement the template matching. The template is the gesture “Five”, as shown in Figure 6a, whose five stretched fingers are detected and calculated using the method described above. The five types of fingers are indexed from the thumb to the little finger as 1 to 5. For the ith finger, its finger angle is denoted as AT[i], and the left and right finger angles are ATL[i] and ATR[i] respectively, where i = 1–5.

We represent a gesture using a five-element tuple Y. If the ith finger type exists in the gesture, Y[i] equals 1, otherwise 0. For the template gesture, YT equals [1, 1, 1, 1, 1]. For an unknown gesture with N fingers, Y could take M possible values corresponding to M different gestures, where M is the number of ways to choose which N of the five finger types are present:

$$M = \binom{5}{N} = \frac{5!}{N!\,(5-N)!}$$

We denote the jth possible gesture as Yj. In order to compare Yj with YT to determine which candidate is most similar to the template, we calculate the five finger angles of Yj, named as AEj[i], AELj[i], AERj[i], j = 1–M, and i = 1–5. These five fingers are defined as extended fingers, where N of them are already known, and the remaining (5-N) ones are predicted using the following method.
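The set of candidates is small enough to enumerate directly. The sketch below lists every tuple Y with exactly N ones using `itertools.combinations`; the function name `candidate_gestures` is chosen for this example.

```python
from itertools import combinations
from math import comb


def candidate_gestures(n_detected: int, n_types: int = 5):
    """All 0/1 tuples Y of length n_types with exactly n_detected ones."""
    result = []
    for present in combinations(range(n_types), n_detected):
        result.append(tuple(1 if i in present else 0 for i in range(n_types)))
    return result


if __name__ == "__main__":
    candidates = candidate_gestures(2)
    print(len(candidates), comb(5, 2))  # both count the two-finger candidates
    print(candidates[:3])
```

For N = 2 there are ten candidates, and summing over N = 0 to 5 gives all 32 finger combinations.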

If Yj[i] equals 1, then this finger is already known. If Yj[i] equals 0, then this finger needs to be hypothetically inserted near the known fingers. The insertion works as follows: if several fingers are inserted between two known fingers, they are uniformly distributed between the two. If several fingers are added to the left of the leftmost finger, then the gap between each pair of fingers equals the corresponding gap in the template gesture. The same applies when inserting fingers to the right. Moreover, for the left and right angles of an inserted finger with the ith index, the difference between them is equal to that of the corresponding ith finger in the template. The detailed implementation is described in Algorithm 2.

| Algorithm 2 Finger angle prediction. |

function AE, AEL, AER = Finger prediction(A, AL, AR, AT, ATL, ATR, Y)

# A[N]: N known finger angles of the image.

# AT[5]: Five finger angles of the template gesture “Five”.

# Y[5]: Five binary elements representing a possible gesture of the image.

# AE[5]: Five extended finger angles under the case of Y[5].

# Step 1. Initialize known fingers

AE = [0, 0, 0, 0, 0]

IndexKnownFingers = find(Y == 1) # e.g., Y = [0, 1, 0, 0, 1] returns [2, 5]

AE[IndexKnownFingers] = A

# Step 2. Predict fingers on the left

for i = min(IndexKnownFingers) - 1 to 1 step -1

AE[i] = AE[i + 1] + (AT[i] - AT[i + 1])

end for

# Step 3. Predict fingers on the right

for i = max(IndexKnownFingers) + 1 to 5

AE[i] = AE[i - 1] + (AT[i] - AT[i - 1])

end for

# Step 4. Predict fingers which are inserted between two known fingers

for i = 1 to length(IndexKnownFingers) - 1

IndLeft = IndexKnownFingers[i]

IndRight = IndexKnownFingers[i + 1]

for j = IndLeft + 1 to IndRight - 1

AE[j] = AE[IndLeft] + (AE[IndRight] - AE[IndLeft]) × (j - IndLeft) / (IndRight - IndLeft)

end for

end for

# Step 5. Calculate finger left and right angles

AEL[IndexKnownFingers] = AL

AER[IndexKnownFingers] = AR

for i = 1 to 5

if Y[i] == 0

AWidth = ATL[i] - ATR[i]

AEL[i] = AE[i] + AWidth/2

AER[i] = AE[i] - AWidth/2

end if

end for |
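A runnable Python rendering of the prediction procedure is sketched below, using 0-based indices. It interpolates from the left known finger so that fingers inserted between two known fingers are uniformly spaced, as the text describes. The function name, the error handling for an empty or mismatched input, and the sample angles (in degrees) are assumptions made for this example.

```python
def predict_fingers(a, al, ar, at, atl, atr, y):
    """Extend N known finger angles to five, following Algorithm 2 (0-based)."""
    known = [i for i, flag in enumerate(y) if flag == 1]
    if not known or len(known) != len(a):
        raise ValueError("y must mark exactly one slot per known finger")
    ae, ael, aer = [0.0] * 5, [0.0] * 5, [0.0] * 5

    # Step 1: copy the known fingers into their slots.
    for slot, k in enumerate(known):
        ae[k], ael[k], aer[k] = a[slot], al[slot], ar[slot]
    # Step 2: extend to the left using the template gaps.
    for i in range(known[0] - 1, -1, -1):
        ae[i] = ae[i + 1] + (at[i] - at[i + 1])
    # Step 3: extend to the right using the template gaps.
    for i in range(known[-1] + 1, 5):
        ae[i] = ae[i - 1] + (at[i] - at[i - 1])
    # Step 4: spread inserted fingers uniformly between known neighbours.
    for left, right in zip(known, known[1:]):
        for j in range(left + 1, right):
            ae[j] = ae[left] + (ae[right] - ae[left]) * (j - left) / (right - left)
    # Step 5: give each predicted finger the template's angular width.
    for i in range(5):
        if y[i] == 0:
            width = atl[i] - atr[i]
            ael[i], aer[i] = ae[i] + width / 2, ae[i] - width / 2
    return ae, ael, aer


if __name__ == "__main__":
    at = [150, 110, 90, 70, 50]                  # hypothetical template angles
    atl = [v + 8 for v in at]
    atr = [v - 8 for v in at]
    ae, ael, aer = predict_fingers([110, 50], [117, 56], [103, 44],
                                   at, atl, atr, [0, 1, 0, 0, 1])
    print(ae)
```

With these sample values the two detected fingers sit exactly on the template’s index and little fingers, so the extended angles reproduce the template.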

Three of the possible gestures and their extended finger angles are shown in Figure 6b–d, where b and c are false predictions, and d is correct. Red lines indicate the detected fingers, and the orange lines are the predicted positions of the two unknown fingers.

For b, the two orange fingers are too far from the center and exceed the right boundary. Thus, it is not a rational prediction. For c, a predicted finger is inserted between two known fingers, leading to an angle gap that is too small. Based on these observations, four criteria are put forward to evaluate a candidate gesture.

3.4. Template Matching

So far, for a given image, we have found its N fingers and calculated its finger angles A[i], AL[i], and AR[i], where i = 1–N. Based on these angles and the template’s finger angles AT, we have predicted all the possible positions of the remaining (5 − N) fingers to form a set of complete hands with five fingers and five extended angles AEj[i], where j corresponds to the jth possible gesture Yj, i = 1–5, and j = 1–M. All notations are shown in Figure 7.

Now we need to decide which gesture in {Yj} is most likely to be correct. Thus, we define a metric L(Yj), also known as a cost function, to assess the dissimilarity between a candidate Yj and the template. Further, we also add two terms to evaluate the rationality of the gesture Yj itself. Finally, the gesture with the minimal cost is considered to be the true case. The metric is given below:

The cost value consists of four parts:

L1 is the dissimilarity of finger angles between the extended fingers and the template. It is the primary term for matching two gestures.

L2 is the dissimilarity of the differential of finger angles. The angle interval between two adjacent fingers is supposed to remain constant. When a predicted finger is mistakenly inserted between two adjacent fingers, L2 will be very large. After the experiment, we set the exponent of this equation ExpL2 = 2.

L3 is the penalty for two adjacent fingers that lie too close to each other. If the right corner of the ith finger is on the right of the left corner of the (i + 1)th finger, then a large penalty is produced. This term is only considered for those fingers inserted between two known fingers. In the equation, B[i] equals 1 if the ith finger is inserted between two known fingers. Otherwise, B[i] equals 0. Since L3 is a penalty, its exponent ExpL3 should be larger than the exponents of L1 and L2; it is set to 4.

L4 is the penalty when some fingers are outside the boundary. In a valid gesture, the right boundary must be on the right of the little finger, and the left boundary must be on the left of the index finger. Considering that the detection of boundary points is not very accurate, three looser criteria are applied here: (1) if the Nth finger is not the little finger, then its right angle AR[N] should be on the left of (larger than) the right boundary ABR; (2) if the Nth finger is the little finger, then its right angle AR[N] should be near the right boundary ABR; (3) if the 1st finger is neither the thumb nor the index finger, then its left angle should be on the right of (smaller than) the left boundary ABL. In the calculation, a tolerance of 5° is considered, as shown in Equation (9). For gestures that fail any of these three criteria, a large penalty Inf of 99,999 is imposed.

After adding up the above four terms for each candidate gesture, the one with the minimal cost is the classification result. With this approach of finger angle prediction and template matching, instead of a large training set containing all target gestures, only one gesture “Five” is needed as the training set to recognize all gestures formed by different combinations of fingers. In theory, a total of 2^5 = 32 gestures can be recognized. It is a great advantage of the proposed algorithm that newly defined gestures can be easily added to the gesture library to serve as new commands.
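The selection step can be sketched with a reduced cost. The exact equations for L1 to L4 are not reproduced here, so the forms below are assumptions: an absolute angle difference stands in for L1, and the difference of adjacent angle gaps raised to the exponent 2 stated in the text stands in for L2. The penalty terms L3 and L4 are omitted, and the sample angles (in degrees) are hypothetical.

```python
def matching_cost(ae, at, exp_l2=2):
    """Reduced cost: angle mismatch (L1-like) plus gap mismatch (L2-like)."""
    l1 = sum(abs(e - t) for e, t in zip(ae, at))
    gaps_e = [ae[i + 1] - ae[i] for i in range(len(ae) - 1)]
    gaps_t = [at[i + 1] - at[i] for i in range(len(at) - 1)]
    l2 = sum(abs(ge - gt) ** exp_l2 for ge, gt in zip(gaps_e, gaps_t))
    return l1 + l2


def best_gesture(candidates, at):
    """candidates maps each tuple Y to its extended angles AE; return the argmin."""
    return min(candidates, key=lambda y: matching_cost(candidates[y], at))


if __name__ == "__main__":
    at = [150, 110, 90, 70, 50]                   # hypothetical template
    candidates = {
        (0, 1, 0, 0, 1): [150, 110, 90, 70, 50],  # fingers read as index + little
        (0, 0, 1, 0, 1): [170, 130, 110, 80, 50], # fingers read as middle + little
    }
    print(best_gesture(candidates, at))
```

The second candidate is penalized both for shifted angles and for uneven gaps, so the first candidate is selected.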

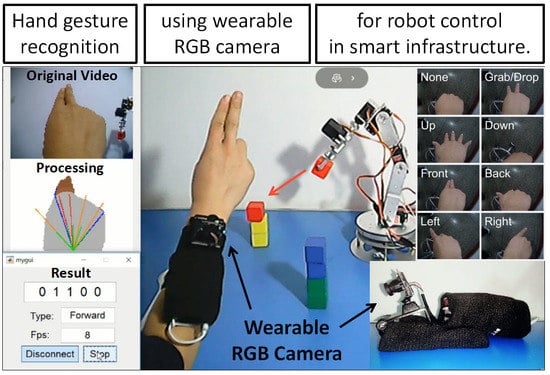

5. Application

The proposed wearable camera has many interesting applications. For example, a user at home can perform fingerspelling digits to remotely switch TV channels at will. When the TV has a video-on-demand service, the use of fingerspelling alphabets, such as “O” for “OK” and “B” for “Back”, becomes an intuitive way to select the menu item. To demonstrate the practicability of the device, here we applied it to a more complex task of operating an assistive robotic arm to transport small wooden building blocks. This simulates the scenario of a disabled person using hand gestures to command an assistive robot to fetch objects (see Figure 12). The video demo can be found at the link in the Supplementary Materials.

For the simplicity and intuitiveness of the control commands, three old gestures and five new gestures were used, namely “None”, “Grab/Drop”, “Up” & “Down”, “Front” & “Back”, and “Left” & “Right”, shown in Figure 12e. The user performed these gestures with his right hand to control the robotic gripper to move in the desired direction, as well as to grab and release building blocks. In the video demo, we successfully transported two red blocks from the table to the top of two yellow blocks. A snapshot of the video is shown in Figure 12a–d. The detailed implementation is described below.

• Gesture recognition

The recognition algorithm is slightly modified for the new set of gestures. Among the eight gestures, the ones formed by different fingers were recognized by the steps described in the main article. The remaining ones were classified by the following approach: “Grab/Drop” and “Front” are distinguished by looking at the number of fingers in each region. “None” and “Down” are separated by the height of the palm centroid. “Left” and “Right” are differentiated by checking the direction of the finger, which was calculated by drawing a line between finger point and fingertip. Fingertip is the point on the intersection of hand contour and finger contour, and has a maximal sum of the distance to the two finger corners. Before recognition, we took one picture for each gesture to obtain the margin of different heights and the margin of different finger directions, and used the two margins to achieve the above classification.

• Control of robot arm

The robot has five degrees of freedom on its arm and one degree on its gripper. We adopted Resolved-Rate Motion Control [35] (pp. 180–186) to drive the gripper towards the front, back, up, and down. The linear speed was set at a moderate level of 0.04 m/s so that the user could give the right command in time. The left and right rotation of the body of the robot arm was achieved by the bottom servo motor at an angular velocity of 0.2 rad/s. Moreover, since the frame rate of the video might drop to 8 frames per second under poor lighting conditions, we set the frame rate and control frequency to 8 per second, which was still fast enough for real-time control.
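The mapping from a recognized gesture to a motion command can be sketched as a lookup table. The speeds and the control rate come from the text; the axis convention, the dictionary layout, and the function name are assumptions made for this example, and the Jacobian-based resolved-rate step that converts the commanded velocity into joint rates is not shown.

```python
LINEAR_SPEED = 0.04   # m/s, from the text
ANGULAR_SPEED = 0.2   # rad/s, from the text
CONTROL_RATE = 8      # commands per second, from the text

# Hypothetical convention: (front velocity, up velocity, yaw rate), yaw > 0 = left.
GESTURE_TO_VELOCITY = {
    "Front": (LINEAR_SPEED, 0.0, 0.0),
    "Back": (-LINEAR_SPEED, 0.0, 0.0),
    "Up": (0.0, LINEAR_SPEED, 0.0),
    "Down": (0.0, -LINEAR_SPEED, 0.0),
    "Left": (0.0, 0.0, ANGULAR_SPEED),
    "Right": (0.0, 0.0, -ANGULAR_SPEED),
}


def step_for(gesture: str):
    """Displacement (front [m], up [m], yaw [rad]) commanded in one control tick."""
    v_front, v_up, w_yaw = GESTURE_TO_VELOCITY.get(gesture, (0.0, 0.0, 0.0))
    return (v_front / CONTROL_RATE, v_up / CONTROL_RATE, w_yaw / CONTROL_RATE)


if __name__ == "__main__":
    for name in ("Up", "Left", "None"):
        print(name, step_for(name))
```

At 8 commands per second, each tick moves the gripper by about 5 mm or rotates the base by 0.025 rad, and gestures without a motion entry (such as “None” or “Grab/Drop”) leave the arm where it is.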

6. Discussion

The proposed finger angle prediction and matching method is based on the intuitive features of the fingers, which brings the advantage that only very few training images (one for each person) are needed. In general, for machine learning algorithms, there should be training examples for each class, and they ought to be comprehensive and cover all circumstances to make the model robust. When adding a new class, new training images of this class need to be gathered. In the proposed method, however, by manually selecting and processing the specific features (fingers) for the specific target (hand gestures), the number of required training images is reduced to only one. The gestures “Back” and “Front” in the application section were recognized without a new training set, based only on the template of gesture “Five”.

There are also some drawbacks to the current work. After the user puts on the device, the camera first needs to take a picture of the hand in order to obtain knowledge about the camera posture and hand configuration. Further, the color thresholding method we used to segment the hand is sensitive to variations in lighting and background colors. More complex and robust methods should be adopted. The estimation of the hand’s orientation and the left and right boundaries needs refinement as well. Future work might exploit the feature of hand shape and fuse it into our current scheme to improve the performance.