- ¹Department of History, Society and Human Studies, University of Salento, Lecce, Italy

- ²Laboratory of Applied Psychology and Intervention, University of Salento, Lecce, Italy

- ³Department of Clinical and Experimental Medicine, University of Messina, Messina, Italy

- ⁴Institute of Applied Sciences and Intelligent Systems, National Research Council, Lecce, Italy

- ⁵Faculty of Biomedical Sciences, Università della Svizzera Italiana, Lugano, Switzerland

Several studies have found a delay in the development of facial emotion recognition and expression in children with an autism spectrum condition (ASC). Several interventions have been designed to help children close this gap. Most of them adopt technological devices (i.e., robots, computers, and avatars) as social mediators and report evidence of improvement. Few interventions have aimed at promoting both emotion recognition and expression abilities and, among these, most have focused on emotion recognition. Moreover, a crucial point is the generalization of the abilities acquired during treatment to naturalistic interactions. This study aimed to evaluate the effectiveness of two technology-based interventions focused on the expression of basic emotions, comparing a robot-based type of training with a “hybrid” computer-based one. Furthermore, we explored the engagement elicited by the hybrid technological device introduced in the study as an intermediate step to facilitate the generalization of the acquired competencies to naturalistic settings. A two-group pre-post-test design was applied to a sample of 12 children (M = 9.33 years; SD = 2.19) with autism. The children were assigned to one of two groups: group 1 received a robot-based type of training (n = 6), and group 2 received a computer-based type of training (n = 6). Pre- and post-intervention evaluations (i.e., time) of facial recognition and production of four basic emotions (happiness, sadness, fear, and anger) were performed. Non-parametric ANOVAs found significant time effects between pre- and post-interventions on the ability to recognize sadness [t(1) = 7.35, p = 0.006; pre: M (SD) = 4.58 (0.51); post: M = 5], and to express happiness [t(1) = 5.72, p = 0.016; pre: M (SD) = 3.25 (1.81); post: M (SD) = 4.25 (1.76)], and sadness [t(1) = 10.89, p < 0; pre: M (SD) = 1.5 (1.32); post: M (SD) = 3.42 (1.78)]. The group*time interactions were significant for fear [t(1) = 1.019, p = 0.03] and anger expression [t(1) = 1.039, p = 0.03]. However, Mann–Whitney comparisons did not show significant differences between robot-based and computer-based training. Finally, no difference in the level of engagement was found between the two groups in terms of the number of voice prompts given during interventions. Although the results are preliminary and should be interpreted with caution, this study suggests that two types of technology-based training, one mediated via a humanoid robot and the other via a pre-recorded video of a peer, perform similarly in promoting facial recognition and expression of basic emotions in children with an ASC. The findings represent a first step toward generalizing the abilities acquired in a laboratory-trained situation to naturalistic interactions.

Introduction

Emotions are social and dynamic processes, and they serve as early mediators of communication during childhood (Ekman, 1984; Eisenberg et al., 2000; Davidson et al., 2009). Emotions are mental states that, at the same time, define social interactions and are determined by them (Halberstadt et al., 2001). When children express emotions, they convey a message or a need to others, who recognize and understand them in order to respond appropriately. Similarly, understanding the emotions of others allows children to develop social skills and learn how to become socially competent partners (Marchetti et al., 2014). Furthermore, emotional competence is one of the pivotal components of many social processes, appropriate inter-individual interactions, and adaptive behaviors (Schutte et al., 2001; Lopes et al., 2004, 2005; Buckley and Saarni, 2006; Nuske et al., 2013). A demonstration of the crucial role of emotional competence as a social skill comes from examining individuals with well-known impairments in social functioning. One such group is composed of individuals with an autism spectrum condition [henceforth ASC (American Psychiatric Association, 2013)], a neurodevelopmental disorder characterized by two core symptoms: social communication deficits (diagnostic criterion A) and a pattern of repetitive and restricted behaviors and interests (diagnostic criterion B). Social communication impairments are the hallmark of ASC, defined in terms of delay in social-emotional reciprocity and nonverbal communication, and in developing and understanding social relationships (American Psychiatric Association, 2013). As in many other atypical developmental conditions (Marchetti et al., 2014; Lecciso et al., 2016), social communication impairments negatively impact the social functioning of individuals, as explained by the principles of the theory of mind (Baron-Cohen, 2000; Marchetti et al., 2014). Specifically, the deficit in the theory of mind, often called mindblindness (Lombardo and Baron-Cohen, 2011), leads children with an ASC to have difficulties in understanding the emotions of others, which reinforces their tendency toward social withdrawal.

Several studies found a degree of delay in the development of emotional regulation functioning in individuals with an ASC, depending on IQ of children (Harms et al., 2010), in terms of facial emotion recognition [henceforth FER; (Hubert et al., 2007; Clark et al., 2008; Uljarevic and Hamilton, 2013; Lozier et al., 2014)] and facial emotion expression [henceforth FEE (Shalom et al., 2006; Zane et al., 2018; Capriola-Hall et al., 2019)]. These two competencies are often identified as being challenging areas for children with an ASC from the first years of life (Garon et al., 2009; Harms et al., 2010; Sharma et al., 2018) and may interfere with day-to-day social functioning even during later childhood and adulthood (Jamil et al., 2015; Cuve et al., 2018). Moreover, recognition and expression of emotions are two related competencies (Denham et al., 2003; Tanaka and Sung, 2016). During face-to-face interactions, an individual should capture the eye gaze of the other first to recognize the specific emotion he/she is expressing, and then to recreate it via an imitating process.

FER delay is related to eye avoidance (Kliemann et al., 2012; Grynszpan and Nadel, 2015; Sasson et al., 2016; Tanaka and Sung, 2016), which interferes with emotional processing and prevents individuals with an ASC from labeling emotions. Regarding FEE, according to the simulation model (Illness SP-E in Mental, 2007), the delay is mainly related to the broken mirror neuron system (Williams et al., 2001; Rizzolatti et al., 2009), which prevents individuals with an ASC from mentally and physically recreating the observed action/emotion. In summary, individuals with an ASC show a delay in both emotional recognition and expression (Moody and Mcintosh, 2006; Ae et al., 2008; Iannizzotto et al., 2020a). To help them foster those competencies, cutting-edge technology-based interventions have been developed (Scassellati et al., 2012; Grynszpan et al., 2014).

Within the research field of Socially Assistive Robotics (Tapus et al., 2007; Feil-Seifer and Mataric, 2021), several technological devices have been designed to develop social skills in individuals with an ASC and to promote the use of those devices as part of daily life routines (Ricks and Colton, 2010). Interventions built on those devices have used computer technology (Moore et al., 2000; Bernard-Opitz et al., 2001; Liu et al., 2008), robot systems (Dautenhahn and Werry, 2004; Kim et al., 2013; Lai et al., 2017), and virtual reality environments with an avatar (Conn et al., 2008; Welch et al., 2009; Bellani et al., 2011; Lahiri et al., 2011). This rapid growth of technological devices for the development of social skills in individuals with an ASC receives support from two recent theories on autism: the Intense World Theory by Markram and Markram (2010) and the Social Motivation Theory by Chevallier et al. (2012). According to the Intense World Theory (Markram and Markram, 2010), an autistic brain is constantly hyper-reactive and, as a consequence, perceptions and memories of environmental stimuli are stored without filtering. This continuous assimilation of information creates discomfort for individuals with an ASC, who protect themselves by rejecting social interactions. The Social Motivation Theory (Chevallier et al., 2012) argues that individuals with an ASC are not prone to establish relationships with human partners, since they show a weak activation of the brain system in response to social reinforcements (Chevallier et al., 2012; Delmonte et al., 2012; Watson et al., 2015). This would explain the preference for the physical and mechanical world (Baron-Cohen, 2002). Technology-based types of training have the potential to increase the engagement and attention of children (Bauminger-Zviely et al., 2013), and to develop new desirable social behaviors (e.g., gestures, joint attention, spontaneous imitation, turn-taking, physical contact, and eye gaze) that are a prerequisite for the subsequent development of emotional competence (Robins et al., 2004; Zheng et al., 2014, 2016; So et al., 2016, 2019).

A large number of studies have already shown that interventions applying technological devices have positive effects on the development of social functioning in individuals with an ASC (Liu et al., 2008; Diehl et al., 2012; Kim et al., 2013; Aresti-Bartolome and Garcia-Zapirain, 2014; Giannopulu et al., 2014; Laugeson et al., 2014; Peng et al., 2014; Vélez and Ferreiro, 2014; Pennisi et al., 2016; Hill et al., 2017; Kumazaki et al., 2017; Sartorato et al., 2017; Saleh et al., 2020). Most of the studies in this field adopted robots as social mediators (Diehl et al., 2012), playmates (Barakova et al., 2009), or behavior-eliciting agents (Damianidou et al., 2020). Several studies reported that human-like robots are more engaging for individuals with an ASC than non-humanoid devices (Robins et al., 2004, 2006). Moreover, robots can engage individuals with an ASC during a task and reinforce their adequate behaviors (Scassellati, 2005; Freitas et al., 2017), since interactions with them are simpler, more predictable, less stressful, and more consistent than human-human interactions (Dautenhahn and Werry, 2004; Gillesen et al., 2011; Diehl et al., 2012; Yoshikawa et al., 2019).

Two very recent reviews (Damianidou et al., 2020; Saleh et al., 2020) considered studies applying robot-based training to improve social communication and interaction skills in individuals with an ASC. Only 6–10% of the studies reviewed by Damianidou et al. (2020) and Saleh et al. (2020) focused on emotion recognition and expression. Among those studies, four (Barakova and Lourens, 2010; Mazzei et al., 2012; Costa et al., 2013; Kim et al., 2017; Koch et al., 2017) were preliminary research on the software making the robots work; therefore, they did not directly test the effectiveness of the training. The FER ability was the focus of three studies (Costa et al., 2014; Koch et al., 2017; Yun et al., 2017). Costa et al. (2014), with an exploratory study, tested a robot-based intervention on two children with an ASC (age range = 14–16 years) and found an improvement in their ability to label emotions. The study by Koch et al. (2017) on 13 children with an ASC (age range = 5–11 years) compared a non-human-like robot-based intervention with a human-based one for FER ability. The level of engagement of the children was higher in the non-human-like robot-based intervention, and their behaviors were evaluated as more socially adequate than those of children trained with the human intervention. Finally, the study by Yun et al. (2017) applied a non-human-like robot-based compared to a similar human-based intervention on 15 children with an ASC (age range = 4–7 years) finding a general improvement in FER abilities of the children, but no differences between interventions.

On the other hand, four studies have considered interventions for FEE abilities (Giannopulu and Pradel, 2012; Giannopulu et al., 2014; Bonarini et al., 2016; Soares et al., 2019). The study by Giannopulu and Pradel (2012) is a single-case study examining the effectiveness of a non-human-like robot-based intervention on a child with a diagnosis of low-functioning autism (chronological age = 8 years; developmental age = 2 years). Training helped the child to use a robot as a mediator to initiate social interactions with humans and express emotions spontaneously. Giannopulu et al. (2014) compared a group of children with an ASC (n = 15) with a typically developing peer group (n = 20) with a mean age of 6–7 years. Their findings showed that, after the training, the children with an ASC increased their emotional production, reaching the levels of the typically developing peers. Bonarini et al. (2016) applied a non-human-like robot-based intervention to three children with a low-functioning autism diagnosis (chronological age = 3 years; developmental age = not specified). They did not find any significant improvement.

Finally, Soares et al. (2019) compared three different conditions, intervention with a humanoid robot vs. intervention with a human vs. no intervention, on children with a diagnosis of high-functioning autism (n = 15 children for each group; age range = 5–10 years). They found that the children trained by the robot showed better emotion recognition and higher abilities to imitate facial emotion expressions compared with the other two groups.

Although these studies often do not use a randomized controlled trial design and their sample sizes are limited, their preliminary findings are still crucial for the development of research in this field. Technology-based interventions help individuals with an ASC to fill the gap and to overcome their delay in emotion recognition and expression. What is still under debate is whether the abilities acquired during the intervention with a robot are likely (or not) to be generalized to naturalistic interactions with human beings, an issue also raised for other conditions (Iannizzotto et al., 2020a,b; Pontikas et al., 2020; Valentine et al., 2020; Caprì et al., 2021). The direct generalization process from a robot-human interaction to a human-human interaction could be stressful for individuals with an ASC, because the stimuli produced by robots are simpler, more predictable, less stressful, and more consistent than the ones produced by humans (Dautenhahn and Werry, 2004; Gillesen et al., 2011; Diehl et al., 2012; Yoshikawa et al., 2019). Therefore, intermediate, “hybrid” training that combines a technological device with the display of the human face of a peer showing standardized emotion expressions (Leo et al., 2018, 2019) could provide a fading stimulus to guide children with an ASC toward generalization of the acquired abilities. Such intermediate training should first be tested against the equivalent robot-based training to determine its efficacy; only then can it be used as a fading stimulus.

Although a previous systematic review (Ramdoss et al., 2012) argued that the evidence on computer-based interventions was mixed and highlighted critical issues, a recent meta-analysis (Kaur et al., 2013) reported that computer-based videos and games were used extensively and that they were useful in improving social skills in children with an ASC. Despite their contrasting conclusions, both reviews suggested that further studies should be designed in order to better understand the critical issues of this kind of intervention.

This study belongs to this field of research and tests a type of hybrid computer-based training, built on a standardized video of a peer, against an equivalent robot-based intervention. To the best of the authors' knowledge, this is the first attempt to test such an intervention with children who are diagnosed with ASC. Specifically, we compared these two technological interventions to evaluate their effectiveness on the development of facial emotion recognition and expression abilities. We expected to find an overall significant difference between the pre- and post-interventions (i.e., HP1-time effect). In other words, we expected that the recognition and expression abilities of the children would improve from pre- to post-intervention via the imitation process. Indeed, some evidence (Bandura, 1962; Bruner, 1974) highlighted that imitation is a key process for learning social skills, and it has been applied in other studies on children with autism (Zheng et al., 2014).

Two further research questions were formulated. RQ1-group effect: is there any difference in the emotion recognition and expression abilities between children who received a robot-based intervention and those who received a computer-based intervention (i.e., group effect)? RQ2-group*time effect: is there a significant interaction between type of intervention (i.e., group effect) and time of evaluations (i.e., time effect)?

A final research question considering engagement of children has been formulated. RQ3-engagement: we explored whether the hybrid technological device applied in this research induced a similar level of engagement compared with the humanoid robot. Previous studies (Dautenhahn and Werry, 2004; Diehl et al., 2012; Bauminger-Zviely et al., 2013; Yoshikawa et al., 2019) have compared the robot-child interaction with the child-human one; among them, only one (Yoshikawa et al., 2019) highlighted that the robot-child interaction is more engaging than the other. However, to the best knowledge of the authors, no studies have compared human-based intervention to computer-based intervention based on their level of engagement.

Method and Materials

Design and Procedure

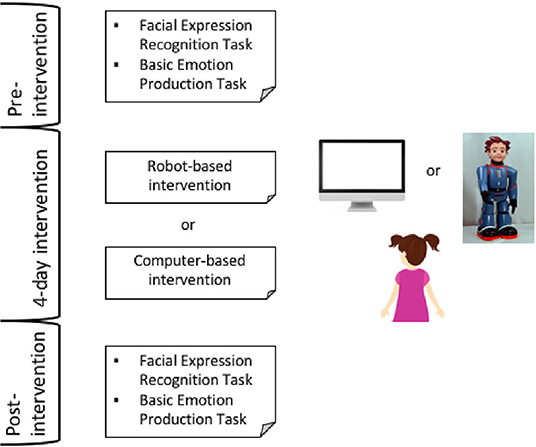

A two-group pre-post-test study design (see Table 1) was applied to investigate the effectiveness of the two types of training conducted to develop and promote FEE of basic emotions (happiness, sadness, fear, and anger) in children with ASC. All the participants recruited in this study were diagnosed according to the gold standard measures (i.e., Autism Diagnostic Observation Schedule-2 and Autism Diagnostic Interview-Revised) and the Diagnostic and Statistical Manual of Mental Disorders, 5th Edition (DSM-5) diagnostic criteria. The diagnoses were made by professionals working at the two non-profit associations that helped us with the recruitment. These two associations are affiliated with the Italian National Health Service, and the severity of autistic traits is periodically evaluated in order to inform the psychological intervention provided by the service. The inclusion criterion was an age between 5 and 17 years; the exclusion criteria were (1) presence of comorbidity, (2) lack of verbal ability, and (3) IQ below the normal range. Seventeen children met these criteria, and their families were invited to participate in the study. The parents received a brief description of the research protocol and then signed the informed consent. Data collection was performed in a quiet room in the clinics where the associations have their headquarters. The Ethical Committee of the L'Adelfia non-profit association, which hosted the study, approved the research (01/2018).

Each child was first matched with a peer of similar chronological age (± 6 months) and IQ score (± 10 T-score), evaluated through Raven's Colored Progressive Matrices (Measso et al., 1993), to form a pair. For five children, it was not possible to find a peer of similar age and IQ; therefore, they were not included in the study. Then, one child of each pair was assigned to one group and the other child to the other group. Table 1 and Figure 1 show the phases of the study. Both groups received a pre-intervention evaluation, which measured the FER and FEE abilities of the children. The pre-intervention evaluation consisted of a 20-min session conducted in a quiet room by a trained therapist, with the child seated in front of the therapist. On the subsequent day (day 1 of treatment, see Table 1), one group (robot-intervention) received the training with the humanoid robot Zeno R25 [Robokind (Hanson et al., 2012; Cameron et al., 2016)], a device with a prerecorded child-like voice (Matarić et al., 2007). The second group (computer-intervention) received the training with a video of a typically developing peer as a mediator. The training phase consisted of four days of intervention focused on the facial expression of basic emotions. Each day started with a baseline evaluation of the facial emotion expression ability, during which the child was asked to express each basic emotion five times. Afterward, the training started with four sessions in which each emotion was expressed five times, as a dynamic stimulus by the human-like robot and as a static stimulus in the intervention with the video. The child then had to imitate the expression five times. Each day of training ended with a post-intervention evaluation with a procedure similar to the one applied in the baseline evaluation at the beginning of the day. The emotion sequence was counterbalanced across the phases of the intervention (i.e., baseline, post-intervention, and training sessions). Finally, after 9–10 days, in the post-intervention, the therapist repeated the same evaluation carried out in the pre-intervention.
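The matching step described above can be illustrated with a minimal sketch. It assumes a simple greedy pairing on age (± 6 months) and IQ score (± 10 points) and a random within-pair assignment; the text does not state how the matching was computed or how children were allocated within a pair, so the names `Child`, `match_pairs`, and `assign_groups` and the randomization are hypothetical illustrations rather than the authors' procedure.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Child:
    child_id: str
    age_months: int
    iq: float


def match_pairs(children, max_age_gap=6, max_iq_gap=10):
    """Greedily pair each child with the first unmatched peer within both tolerances.

    Returns (pairs, leftover); leftover children have no suitable peer.
    """
    unmatched = sorted(children, key=lambda c: (c.age_months, c.iq))
    pairs, leftover = [], []
    while unmatched:
        child = unmatched.pop(0)
        partner = next(
            (p for p in unmatched
             if abs(p.age_months - child.age_months) <= max_age_gap
             and abs(p.iq - child.iq) <= max_iq_gap),
            None,
        )
        if partner is None:
            leftover.append(child)  # no peer of similar age/IQ: excluded
        else:
            unmatched.remove(partner)
            pairs.append((child, partner))
    return pairs, leftover


def assign_groups(pairs, seed=0):
    """Send one member of each pair to each group (random choice is an assumption)."""
    rng = random.Random(seed)
    robot_group, computer_group = [], []
    for first, second in pairs:
        if rng.random() < 0.5:
            first, second = second, first
        robot_group.append(first)
        computer_group.append(second)
    return robot_group, computer_group
```

With 17 eligible children, a procedure of this kind that leaves five children unmatched yields six pairs and therefore two groups of six, which is consistent with the sample reported in the study.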

Participants

Twelve out of 17 children with ASC (M = 9.33 years; SD = 2.19 years; range = 6–13 years; all males) were included in the study. The mean score on Raven's Colored Progressive Matrices was M = 105 (SD = 10.98). No significant differences were found in chronological age and IQ scores between the two groups, or in the pre-intervention evaluation. Most of the children were born at term (n = 10; 83.3%), one was pre-term; 58.3% of the children were first-born, and 16.6% of them were second-born or later; two children had a twin. All the children were enrolled in a behavioral intervention with the Applied Behavioral Analysis method. The mean age of the mothers was 38.6 years (SD = 12.6 years), and their educational level was low (up to eight years of education) for 7.7%, intermediate (up to 13 years of education) for 30.8%, and high (15 or more years of education) for 84.6%. The mean age of the fathers was 47.3 years (SD = 3.8 years), and their educational level was low (up to eight years of education) for 23.1%, intermediate (up to 13 years of education) for 53.8%, and high (15 or more years of education) for 15.4%. The parents were all married, except two who were divorced.

Measures

Pre-intervention and post-intervention evaluations. To evaluate the ability of the children to recognize and express the four basic emotions (happiness, sadness, fear, and anger), we administered the Facial Emotion Recognition Task [FERT; adapted by Wang et al. (2011)] and the Basic Emotions Production Task (BEPT; technical report).

The Facial Emotion Recognition Task (FERT) is composed of 20 items (i.e., four emotions asked five times each). Each item included four black-and-white photographs of faces expressing the four basic emotions extracted by Ekman's FACS system (Ekman, 1984). The choice to include visual stimuli extracted from the FACS system is due to the fact that the software used in this study to evaluate the facial expressions of the children as correct or incorrect has been developed according to the FACS system and previously validated (Leo et al., 2018). In one example of the items, the therapist said to the child: “Show me the happy face.” The child, then, had to indicate the correct face among the four provided ones. The requests were provided sequentially to the child, as happiness-sadness-fear-anger, with no counterbalance. One point was attributed when the emotion was correctly detected and 0 points for wrong answers or no answers. One score for each emotion (range 0–5) and one total score were calculated as a sum of the correct answers (range 0–20).

The Basic Emotion Production Task (BEPT; technical report) asked the child to express the four basic emotions without any external stimulus to imitate. For example, the therapist asked the child: “Can you make me a happy face?”. The requests were provided sequentially to the child as happiness-sadness-fear-anger, and the sequence was repeated five times. Each child was asked to produce a total of 20 emotion expressions (four emotions × five times each). The facial expression of each child was scored as correct or incorrect by the software previously validated on typically and atypically developing children (Leo et al., 2018, 2019). One point was attributed when the emotion was correctly expressed and 0 points for wrong answers or no answers. One score for each emotion (range 0–5) and one total score were calculated as a sum of the correct answers (range 0–20).
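The scoring rule shared by the FERT and the BEPT (one point per correct item, zero for a wrong or missing answer, five items per emotion) can be written as a short sketch. The function name `score_task` and the trial representation are illustrative assumptions, not part of the original scoring software.

```python
EMOTIONS = ("happiness", "sadness", "fear", "anger")
TRIALS_PER_EMOTION = 5


def score_task(trials):
    """Score a 20-item task from (emotion, correct) pairs.

    `correct` is True for a correct answer; False or None (no answer) earn 0 points.
    Returns one score per emotion (0-5) plus a "total" score (0-20).
    """
    scores = {emotion: 0 for emotion in EMOTIONS}
    counts = {emotion: 0 for emotion in EMOTIONS}
    for emotion, correct in trials:
        if emotion not in scores:
            raise ValueError(f"unknown emotion: {emotion!r}")
        counts[emotion] += 1
        if correct is True:
            scores[emotion] += 1
    if any(n != TRIALS_PER_EMOTION for n in counts.values()):
        raise ValueError("each emotion must be requested exactly five times")
    scores["total"] = sum(scores[emotion] for emotion in EMOTIONS)
    return scores
```

For instance, a child who produces three correct happy faces, no correct sad faces, five correct fearful faces, and one correct angry face obtains per-emotion scores of 3, 0, 5, and 1 and a total score of 9.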

Interventions. The robot-intervention group received training with Zeno R25, a humanoid robot manufactured by Robokind (www.robokind.com). The robot has a face able to express emotions with seven degrees of freedom, such as eyebrows, mouth opening, and smile. The robot can also move its arms and legs. Zeno R25 features a Texas Instruments OMAP 4460 system on a chip with a dual-core 1.5 GHz ARM Cortex-A9 processor, 1 GB of RAM, and 16 GB of storage. The robot has Wi-Fi, Ethernet, two USB ports, an HDMI port, and an NFC chip for contactless data transfer. It is 56 cm tall and equipped with sensors (gyroscope, accelerometer, compass, and infrared), a five-megapixel camera lodged in its right eye, nine touch zones distributed over its entire skeleton, eight microphones, and a loudspeaker. On its chest, a 2.4-in LCD touch screen is used to access the functions and distribute content. The software is based on the Ubuntu Linux distribution and includes basic routines for invoking face and body movements. For the purposes of the study, an additional camera was placed on the robot's chest (the same camera used for the second intervention group with the video instead of the robot). The camera was a full HD one (resolution 1,920 × 1,080 pixels), and it was fixed at the height of the robot's trouser belt (its least mobile part, to limit ego-motion). The robot was connected via Ethernet to a laptop, to which the additional camera was also connected via USB. A software interface (GUI), purpose-built in C++ with the Qt cross-platform library, was installed on the laptop. Through the interface, commands are sent to the robot in order to invoke its speech and facial movement primitives. The images acquired from the camera were sent to the laptop via the USB connection, and they could be either stored (for subsequent processing) or processed in real time to provide immediate feedback to the child. When the child correctly answered the question, the robot gave him positive feedback (“Very well”); if the child refused or did not answer correctly, the robot continued with the task. The robot has a camera that follows the gaze of the child: if the child took his gaze off the robot, he received a voice prompt from the robot to engage him again in the task. The inputs for the voice prompts were given by the engineers who managed the software.

The second group of children was trained using pre-recorded videos of a typically developing peer performing facial expressions of emotions and reproducing the same procedure as the robot, including the positive feedback (“Very well”). As in the robot-based intervention, in the computer-based training the webcam of the computer followed the gaze of the child; if the child took his gaze off the camera, he received a voice prompt from the peer in the video. The inputs were given by the engineer who managed the software. The same GUI used with Zeno was used for this second intervention. In this case, the GUI sends commands to a video player with a playlist consisting of short videos of the typically developing peer. The child in the videos was trained by two of the authors of this study, who are experts in developmental psychology. Each emotion expression was executed and recorded several times in order to have a range of videos from which to choose the most appropriate ones. The same two experts selected a set of expressions performed according to the FACS principles (Ekman, 1984), and the GUI evaluated them and chose for the training the ones that received the highest scores. The videos were displayed on a 27-in monitor with full HD resolution. The monitor was placed on a cabinet, and the same camera used for the sessions with the robot was placed at the bottom of the monitor. The software for automatic facial expression analysis running on the laptop was implemented in a C++ development environment using the OpenCV (www.opencv.org) and OpenFace (github.com/TadasBaltrusaitis/OpenFace) libraries.
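The response rule shared by the two devices (a voice prompt when the child looks away, positive feedback after a correct answer, and otherwise simply continuing) can be summarized in a minimal sketch. In the study the prompts were triggered by the engineers operating the software, so the function below only models the decision that the operator or GUI takes; the names `Action` and `next_action`, and the choice to give gaze priority over the answer, are assumptions made for illustration.

```python
from enum import Enum


class Action(Enum):
    VOICE_PROMPT = "call the child by name"
    POSITIVE_FEEDBACK = "Very well"
    CONTINUE = "continue with the task"


def next_action(gaze_on_device: bool, answered_correctly: bool) -> Action:
    """Return the device response for one trial.

    Assumption: re-engaging the child takes priority over giving feedback.
    """
    if not gaze_on_device:
        return Action.VOICE_PROMPT
    if answered_correctly:
        return Action.POSITIVE_FEEDBACK
    return Action.CONTINUE
```

Because the same rule applies to the robot and to the video of the peer, the two interventions differ only in the mediator that delivers the stimulus and the feedback.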

Engagement. The level of engagement was calculated as the number of voice prompts (i.e., the name of the child) that the two devices used to involve the child during the task. Each time the child took off his gaze from the device, the robot/peer would call the child by his name to engage him again in the task. The level of engagement ranged from 0 to 22 prompts (M = 4.5; sd = 6.7), with higher scores indicating lower engagement.
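As an illustration of how this engagement index could be derived, the sketch below counts voice prompts per child from a session event log. The log format, the event label `"voice_prompt"`, and the function name `prompts_per_child` are hypothetical; the text does not describe how the prompts were recorded.

```python
from collections import Counter


def prompts_per_child(event_log, child_ids):
    """Count voice prompts per child; a higher count indicates lower engagement.

    `event_log` is an iterable of (child_id, event_type) pairs.
    Children who never needed a prompt receive a count of 0.
    """
    counts = Counter(
        child_id for child_id, event_type in event_log
        if event_type == "voice_prompt"
    )
    return {child_id: counts.get(child_id, 0) for child_id in child_ids}
```

Listing every participant explicitly in `child_ids` ensures that fully engaged children (zero prompts) still contribute to the group comparison.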

Data Collection and Statistical Strategy

The videos were analyzed using modern computer vision technologies (Leo et al., 2020) specifically aimed for detecting and analyzing human faces for healthcare applications.

In particular, software implemented elsewhere (Leo et al., 2018) and validated on both typically developing children and children with ASC (Leo et al., 2019) was applied. The data were analyzed using RStudio (RStudio Team, 2020) and the Statistical Package for the Social Science v.25 (IBM Corp, 2010). In the pre-intervention, the competencies of the children on the FERT and BEPT were compared through independent-sample t-tests. To test the hypothesis and the research questions, nonparametric analyses for longitudinal data on a small sample size were computed using the nparLD package (Noguchi et al., 2012) for R. The F1-LD-F1 design was applied. The interventions (robot- vs. computer-based) were included as a group variable, allowing the estimation of a group effect. The two evaluations (pre- and post-interventions) were included as a time variable, allowing the estimation of a time effect. Finally, the group*time interaction was included as well. The ANOVA-type test and the modified ANOVA-type test with Box approximation were calculated for testing the group effect, the time effect, and their interaction. It is worth noting that the second (denominator) degree of freedom of each ANOVA-type model was set to infinity, “in order to improve the approximation of the distribution under the hypothesis of ‘no treatment effects’ and ‘no interaction between whole-plot factors’” (Noguchi et al., 2012, p. 14). As a measure of the effect of the group*time interaction, we reported the relative treatment effect (RTE), ranging from 0 to 1 (Noguchi et al., 2012). When the group*time interaction was significant, the Mann–Whitney U was calculated and Bonferroni corrections were applied. The Hedges' g effect size (Hedges and Olkin, 1985) was calculated as well. A p-value below 0.05 was taken as statistically significant. A non-parametric Mann–Whitney test was carried out to evaluate whether the hybrid computer-based training was able to engage the attention of the child during the task to a similar degree as the robot.
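To make the follow-up statistics concrete, the sketch below gives standard-library Python versions of three quantities named above: the relative treatment effect, estimated as (mean rank of a cell − 0.5) / N over the pooled observations; the Mann–Whitney U with an exact two-sided permutation p-value, which is feasible here because two groups of six give only 924 splits; and Hedges' g with the usual small-sample correction 1 − 3 / (4(n₁ + n₂) − 9). These are illustrative re-implementations under those textbook definitions, not the nparLD or SPSS routines used in the study, and all function names are assumptions.

```python
from itertools import combinations
from math import sqrt
from statistics import mean, variance


def midranks(values):
    """Rank values from 1..N, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def relative_treatment_effects(cells):
    """Map each cell label (e.g. a group/time combination) to its estimated RTE in (0, 1)."""
    labels = list(cells)
    pooled = [score for label in labels for score in cells[label]]
    ranks = midranks(pooled)
    effects, start = {}, 0
    for label in labels:
        size = len(cells[label])
        effects[label] = (sum(ranks[start:start + size]) / size - 0.5) / len(pooled)
        start += size
    return effects


def mann_whitney_exact(x, y):
    """Return (U for sample x, exact two-sided permutation p-value)."""
    ranks = midranks(list(x) + list(y))
    n1, n2 = len(x), len(y)
    offset = n1 * (n1 + 1) / 2
    centre = n1 * n2 / 2
    u_obs = sum(ranks[:n1]) - offset
    extreme = total = 0
    for idx in combinations(range(n1 + n2), n1):  # every possible split
        u = sum(ranks[i] for i in idx) - offset
        total += 1
        if abs(u - centre) >= abs(u_obs - centre) - 1e-12:
            extreme += 1
    return u_obs, extreme / total


def hedges_g(x, y):
    """Standardized mean difference with small-sample bias correction."""
    n1, n2 = len(x), len(y)
    pooled_var = ((n1 - 1) * variance(x) + (n2 - 1) * variance(y)) / (n1 + n2 - 2)
    correction = 1 - 3 / (4 * (n1 + n2) - 9)
    return correction * (mean(x) - mean(y)) / sqrt(pooled_var)
```

An RTE of 0.5 indicates no tendency for scores in a cell to be higher or lower than in the pooled sample, so values above 0.5 at post-intervention point to improvement. With a Bonferroni correction, each of m follow-up comparisons is tested against 0.05 / m rather than 0.05.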

Results

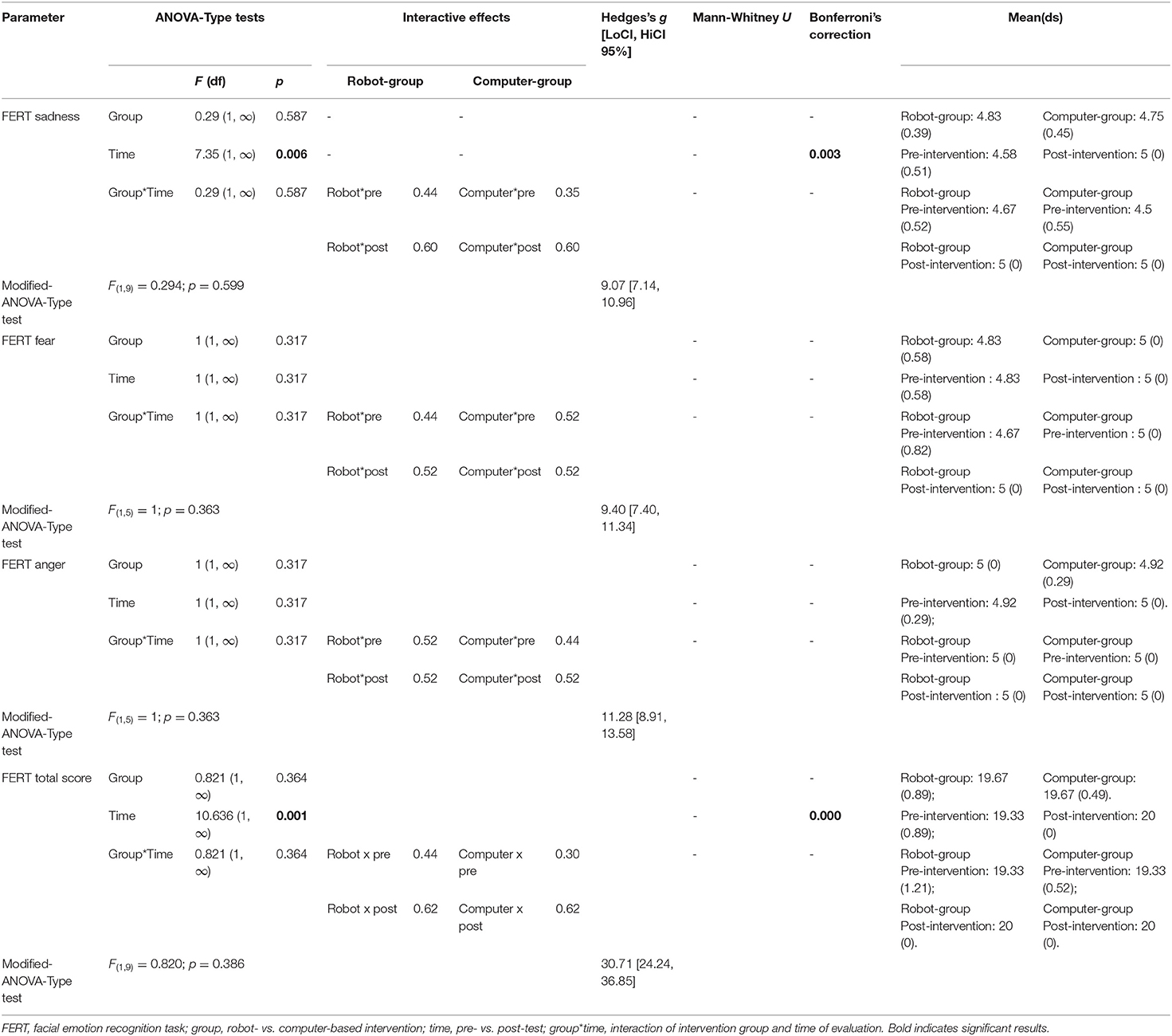

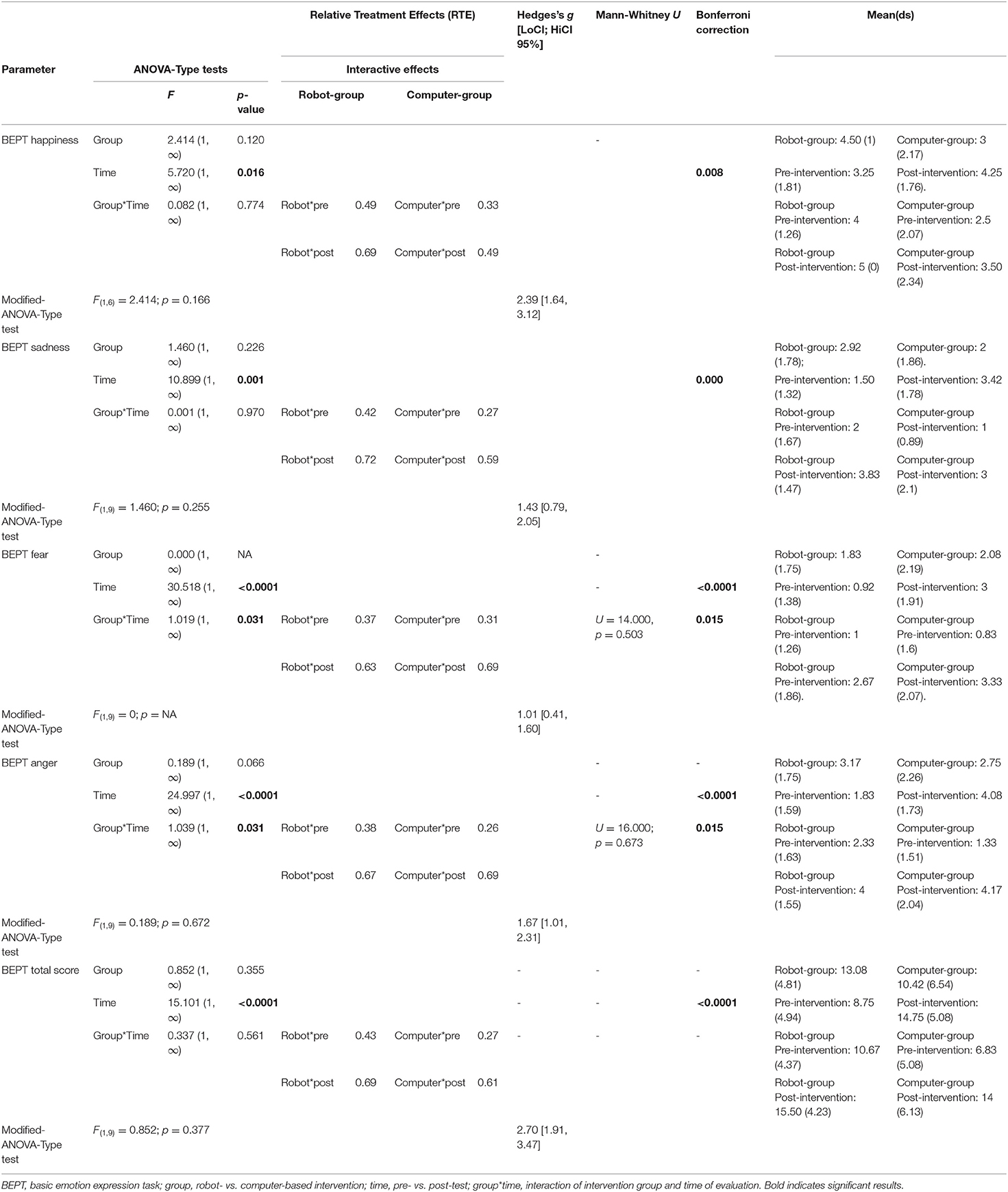

The results of the nonparametric longitudinal analyses are shown in Table 2 (for the Facial Emotion Recognition Task) and Table 3 (for the Basic Emotion Production Task). The modified ANOVA-type tests were not significant. Moreover, for both emotion recognition and expression scores, the ANOVA-type tests showed no significant group effects (RQ1-group effect). This means that there were no significant differences between the humanoid robot-based intervention and the computer-based intervention in the facial emotion recognition and expression of the children. The facial recognition of happiness was at ceiling (M = 5) at the pre-intervention evaluation in both groups; therefore, these scores were not analyzed further.

The analyses of the time effects and group*time effects revealed several significant results. Regarding the FERT (see Table 2), a significant time effect emerged for sadness, with post-evaluation scores higher than pre-evaluation scores (HP1-time effect). Similarly, the results revealed a time effect for the FERT total score, meaning that all the children improved their broader ability to recognize basic emotions when they were trained by a technological device. Regarding the BEPT, significant time effects emerged for all four basic emotions and for the BEPT total score, with post-intervention scores always higher than pre-intervention scores (HP1-time effect). This means that the children performed better in the expression of basic emotions after the interventions with the technological devices. Regarding the expression of fear and anger, the ANOVA-type tests showed two significant effects for the group*time interaction. However, the Mann–Whitney tests did not find a significant difference among the four subgroups. This corroborated the idea that both interventions (robot and computer) improved the ability of the children (RQ2-group*time effect).

The comparison of the level of engagement during the two training sessions showed no significant difference (U = 13.000; p = 0.413). This means that the hybrid technological device applied in this research induced a level of engagement similar to that of the humanoid robot (RQ3-engagement).

Discussion

The main purpose of this study was to contribute to research on the application of technology to improve the emotional competencies of individuals with an ASC. In particular, the main focus was on whether the proposed computer-based intervention would be effective in developing and promoting facial emotion recognition and expression. We argued that a direct generalization from the technological device to human interaction might be stressful for individuals with an ASC, and that an intermediate transition with hybrid training would help the generalization process. For this reason, this study used a two-group pre-post-test design to test the effectiveness of two technology-based interventions aimed at developing facial emotion expression and recognition in children with an ASC. The interventions were based on a robot and on a pre-recorded video of a typically developing peer, respectively.

The first hypothesis predicted an overall significant difference between the pre- and post-intervention evaluation phases, demonstrating that the interventions improved the facial emotion expression and recognition abilities of the children. The expression and recognition of four basic emotions (happiness, sadness, fear, and anger) were considered in two groups of six children each (12 children with an ASC in total). The results corroborated this hypothesis, revealing an improvement in the broader ability to recognize and express basic emotions. Moreover, the findings showed a higher post-intervention recognition of the negative emotion of sadness and a higher post-intervention production of happiness, sadness, fear, and anger. Although the small sample size is a limitation, this evidence is in line with previous studies (Pennisi et al., 2016; Hill et al., 2017; Kumazaki et al., 2017; Sartorato et al., 2017; Saleh et al., 2020) suggesting that intensive training that applies technological devices helps children fill the gap.

The study also proposed three research questions. First, this study compared the efficacy of the two interventions (robot vs. computer) on basic emotion recognition and expression (RQ1-group effect). The findings revealed that a group effect can be excluded: there was no significant difference in the performance of the children between the two technological devices. In other words, the application of technology itself, as previously discussed, rather than the type of technology applied, fosters improvement. This is the first attempt to evaluate the effectiveness of interventions promoting emotional competence by comparing two different technological devices; therefore, these preliminary results need to be replicated with a larger sample.

The second research question (RQ2-group*time effect) asked whether there was a significant interaction between the two technological-based interventions (robot- vs. computer-based) and the two times of evaluation (pre- vs. post-test). The results showed a significant interaction effect regarding the expression of fear and anger. However, further comparisons with Mann–Whitney U were not significant.

Finally, we investigated whether the hybrid computer training elicited a level of engagement similar to that of the robot. The exploratory evidence suggested no difference in the levels of engagement, measured as the number of voice prompts given by the device, between children trained by the robot and those trained by the computer. In other words, the engagement of the children was high, as indicated by the low mean number of prompts, and similar across the two devices.

Therefore, although the results of this study should be interpreted cautiously, they provide the first evidence supporting the use of hybrid technology as a mediator to facilitate and smooth the processing of emotions in the human face by individuals with an ASC, similar to the findings of Golan et al. (2010), who used a video displaying the face of an adult. An intermediate and “hybrid” type of training that combines a technological device with the display of a human face, with standardized emotion expressions, may provide a fading stimulus to guide children with an ASC toward the generalization of acquired abilities. Future studies should validate the hybrid training with a larger sample and test its effectiveness in guiding children toward the generalization of emotion recognition and expression from the robot, to the hybrid device, to the human face.

Limitations

This study presents some limitations. First, the small sample size, although similar to that of other studies in the same field, limited the breadth of the conclusions. Future research should test the effectiveness of the two interventions with a larger sample. In addition, although the two groups of children were matched on chronological and mental age and did not differ significantly in their baseline evaluations, the wide age range represents a limitation of this study. The second limitation is the lack of information on psychological parameters other than age and IQ, such as the severity of autistic traits, general and social functioning, and adaptive behaviors. Because of privacy concerns, it was not possible to obtain this information. Finally, the third limitation concerns the lack of a wait-list control group of children who did not receive any intervention. To test whether the improvement in the emotional skills of the children depended on the technology-based interventions, future studies should include a wait-list control group.

Future Direction

The evidence supports the effectiveness of training on emotion recognition and expression when a technological device is used as a mediator. The data confirmed the benefit produced by training mediated by a humanoid robot and, concurrently, a similar impact when a hybrid device is used. Furthermore, the data showed a similar level of engagement of the children with the robot and with the video on the computer. Therefore, a further step in this field would be a research plan with a repeated-measures design in three phases, starting with intensive robot-based training, followed by a first generalization with hybrid computer-based training, and then by the full generalization of the acquired skills in naturalistic settings toward adults and peers.

Data Availability Statement

The data that support the findings of this study are available from the corresponding author, upon reasonable request.

Ethics Statement

The studies involving human participants were reviewed and approved by L'Adelfia non-profit association ethical committee. Written informed consent to participate in this study was provided by the participants' legal guardian/next of kin.

Author Contributions

SP and FL conceived the study, and together with AL developed the design. AL recruited the participants and together with ML, PC, PS, and PM collected the data. AL, RF, and TC carried out the statistical analysis. ML, CD, PC, PM, and PS developed the technological devices and the software, and analyzed the data collected by the robot and the computer-based application. FL, AL, and SP wrote the draft paper. ML wrote the technical section on the robot and computer/video intervention. All authors read the paper, gave their feedback, and approved the final version.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The authors are grateful to the two non-profit associations (Amici di Nico and L'Adelfia) that helped us with the recruitment. They are grateful to the therapists, Dr. Anna Chiara Rosato and Chiara Pellegrino, and the psychologist, Dr. Muriel Frascella, for their cooperation and help. They are grateful to all the families who participated in the study.

References

Ae, M. S., Van Den, C., Ae, H., and Smeets, R. C. (2008). Facial feedback mechanisms in autistic spectrum disorders. J. Autism Dev. Disord. 38, 1250–1258. doi: 10.1007/s10803-007-0505-y

American Psychiatric Association (2013). Diagnostic and Statistical Manual of Mental Disorders: DSM-5. Washington, DC. doi: 10.1176/appi.books.9780890425596

Aresti-Bartolome, N., and Garcia-Zapirain, B. (2014). Technologies as support tools for persons with autistic spectrum disorder: a systematic review. Int. J. Environ. Res. Public Health. 11, 7767–7802. doi: 10.3390/ijerph110807767

Bandura, A. (1962). Social Learning Through Imitation. Available online at: https://psycnet.apa.org/record/1964-01869-006 (accessed June 11, 2021).

Barakova, E., Gillessen, J., and Feijs, L. (2009). Social training of autistic children with interactive intelligent agents. J. Integr. Neurosci. 8, 23–34. doi: 10.1142/S0219635209002046

Barakova, E. I., and Lourens, T. (2010). Expressing and interpreting emotional movements in social games with robots. Pers Ubiquitous Comput. 14, 457–467. doi: 10.1007/s00779-009-0263-2

Baron-Cohen, S. (2000). Theory of mind and autism: a fifteen year review. Understand. Other Minds Perspect. Develop. Cogn. Neurosci. 2, 3–20.

Baron-Cohen, S. (2002). The extreme male brain theory of autism. Trends Cogn. Sci. 6, 248–254. doi: 10.1016/S1364-6613(02)01904-6

Bauminger-Zviely, N., Eden, S., Zancanaro, M., Weiss, P. L., and Gal, E. (2013). Increasing social engagement in children with high-functioning autism spectrum disorder using collaborative technologies in the school environment. Autism 17, 317–339. doi: 10.1177/1362361312472989

Bellani, M., Fornasari, L., Chittaro, L., and Brambilla, P. (2011). Virtual reality in autism: state of the art. Epidemiol. Psychiatr. Sci. 20, 235–238. doi: 10.1017/S2045796011000448

Bernard-Opitz, V., Sriram, N., and Nakhoda-Sapuan, S. (2001). Enhancing social problem solving in children with autism and normal children through computer-assisted instruction. J. Autism Dev. Disord. 31, 377–384. doi: 10.1023/A:1010660502130

Bonarini, A., Clasadonte, F., Garzotto, F., Gelsomini, M., and Romero, M. (2016). “Playful interaction with Teo, a mobile robot for children with neurodevelopmental disorders,” in ACM International Conference Proceeding Series. Association for Computing Machinery, 223–231. doi: 10.1145/3019943.3019976

Bruner, J. S. (1974). From communication to language—a psychological perspective. Cogn 3, 255–287. doi: 10.1016/0010-0277(74)90012-2

Buckley, M., and Saarni, C. (2006). “Skills of emotional competence: developmental implications,” in Emotional Intelligence in Everyday Life, ed J. H. Beck (New York, NY: Psychology Press), 51–76.

Cameron, D., Fernando, S., Millings, A., Szollosy, M., Collins, E., Moore, R., et al. (2016). “Congratulations, it's a boy! Bench-marking children's perceptions of the robokind Zeno-R25,” in Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics). Springer Verlag, p. 33–39. Available online at: https://link.springer.com/chapter/10.1007/978-3-319-40379-3_4 (accessed June 10, 2021).

Caprì, T., Nucita, A., Iannizzotto, G., Stasolla, F., Romano, A., Semino, M., et al. (2021). Telerehabilitation for improving adaptive skills of children and young adults with multiple disabilities: a systematic review. Rev. J. Autism Dev. Disord. 8, 244–252. doi: 10.1007/s40489-020-00214-x

Capriola-Hall, N., Wieckowski, A., Swain, D., Tech, V., Aly, S., Youssef, A., et al. (2019). Group differences in facial emotion expression in autism: evidence for the utility of machine classification. Behav. Ther. 50, 828–838. doi: 10.1016/j.beth.2018.12.004

Chevallier, C., Kohls, G., Troiani, V., Brodkin, E., and Schultz, R. (2012). The social motivation theory of autism. Trends Cogn. Sci. 16, 231–239. doi: 10.1016/j.tics.2012.02.007

Clark, T. F., Winkielman, P., and Mcintosh, D. N. (2008). Autism and the extraction of emotion from briefly presented facial expressions: stumbling at the first step of empathy. Emotion 8, 803–809. doi: 10.1037/a0014124

Conn, K., Liu, C., and Sarkar, N. (2008). Affect-Sensitive Assistive Intervention Technologies For Children With Autism: An Individual-Specific Approach. ieeexplore.ieee.org. Available online at: https://ieeexplore.ieee.org/abstract/document/4600706/ (accessed June 10, 2021).

Costa, S., Soares, F., Pereira, A. P., Santos, C., and Hiolle, A. (2014). “Building a game scenario to encourage children with autism to recognize and label emotions using a humanoid robot,” in IEEE RO-MAN 2014 - 23rd IEEE International Symposium on Robot and Human Interactive Communication: Human-Robot Co-Existence: Adaptive Interfaces and Systems for Daily Life, Therapy, Assistance and Socially Engaging Interactions (Edinburgh: Heriot-Watt University), 820–825. doi: 10.1109/ROMAN.2014.6926354

Costa, S., Soares, F., and Santos, C. (2013). “Facial expressions and gestures to convey emotions with a humanoid robot,” in Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics) (Cham: Springer), 542–551. Available online at: https://link.springer.com/chapter/10.1007/978-3-319-02675-6_54 (accessed June 10, 2021).

Cuve, H., Gao, Y., and Fuse, A. (2018). Is it avoidance or hypoarousal? A systematic review of emotion recognition, eye-tracking, and psychophysiological studies in young adults with autism spectrum. Res. Autism Spectr. Disord. 55, 1–13. doi: 10.1016/j.rasd.2018.07.002

Damianidou, D., Eidels, A., and Arthur-Kelly, M. (2020). The use of robots in social communications and interactions for individuals with ASD: a systematic review. Adv. Neurodevelop. Disord. 4, 357–388. doi: 10.1007/s41252-020-00184-5

Dautenhahn, K., and Werry, I. P. (2004). Towards interactive robots in autism therapy. Pragmat. Cogn. 12, 1–35. doi: 10.1075/pc.12.1.03dau

Davidson, R., Sherer, K., and Goldsmith, H. (2009). Handbook of Affective Sciences. Oxford: Oxford University Press.

Delmonte, S., Balsters, J. H., McGrath, J., Fitzgerald, J., Brennan, S., Fagan, A. J., et al. (2012). Social and monetary reward processing in autism spectrum disorders. Mol. Autism 3, 1–13. doi: 10.1186/2040-2392-3-7

Denham, S. A., Blair, K. A., Demulder, E., Levitas, J., Sawyer, K., Auerbach-Major, S., et al. (2003). Preschool emotional competence: pathway to social competence? Child Dev. 74, 238–256. doi: 10.1111/1467-8624.00533

Diehl, J. J., Schmitt, L. M., Villano, M., and Crowell, C. R. (2012). The clinical use of robots for individuals with autism spectrum disorders: a critical review. Res. Autism Spectr. Disord. 6, 249–262. doi: 10.1016/j.rasd.2011.05.006

Eisenberg, N., Fabes, R. A., Guthrie, I. K., and Reiser, M. (2000). Dispositional emotionality and regulation: their role in predicting quality of social functioning. J. Pers. Soc. Psychol. 78, 136–157. doi: 10.1037/0022-3514.78.1.136

Ekman, P. (1984). “Expression and the nature of emotion,” in Approaches to Emotion, eds K. R. C. Scherer, P. Ekman (New York, NY: Psychology Press), 319–344.

Feil-Seifer, D., and Mataric, M. J. (2021). Defining Socially Assistive Robotics. ieeexplore.ieee.org. Available online at: https://ieeexplore.ieee.org/abstract/document/1501143/ (accessed June 10, 2021).

Freitas, H., Costa, P., Silva, V., Pereira, A., and Soares, F. (2017). Using a Humanoid Robot as the Promoter of Interaction With Children in the Context of Educational Games. Available online at: https://repositorium.sdum.uminho.pt/handle/1822/46807 (accessed June 11, 2021).

Garon, N., Bryson, S. E., Zwaigenbaum, L., Smith, I. M., Brian, J., Roberts, W., et al. (2009). Temperament and its relationship to autistic symptoms in a high-risk infant sib cohort. J. Abnorm. Child Psychol. 37, 59–78. doi: 10.1007/s10802-008-9258-0

Giannopulu, I., Montreynaud, V., and Watanabe, T. (2014). “Neurotypical and autistic children aged 6 to 7 years in a speaker-listener situation with a human or a minimalist InterActor robot,” in IEEE RO-MAN 2014 - 23rd IEEE International Symposium on Robot and Human Interactive Communication: Human-Robot Co-Existence: Adaptive Interfaces and Systems for Daily Life, Therapy, Assistance and Socially Engaging Interactions (Edinburgh: Heriot-Watt University), 942–948. doi: 10.1109/ROMAN.2014.6926374

Giannopulu, I., and Pradel, G. (2012). From child-robot interaction to child-robot-therapist interaction: a case study in autism. Appl. Bionics Biomech. 9, 173–179. doi: 10.1155/2012/682601

Gillesen, J. C. C., Barakova, E. I., Huskens, B. E. B. M., and Feijs, L. M. G. (2011). “From training to robot behavior: towards custom scenarios for robotics in training programs for ASD,” in IEEE International Conference on Rehabilitation Robotics (Zurich). doi: 10.1109/ICORR.2011.5975381

Golan, O., Emma, A. E., Ae, A., Ae, Y. G., Mcclintock, S., Kate, A. E., et al. (2010). Enhancing emotion recognition in children with autism spectrum conditions: an intervention using animated vehicles with real emotional faces. J Autism Dev Disord. 9:862. doi: 10.1007/s10803-009-0862-9

Grynszpan, O., and Nadel, J. (2015). An eye-tracking method to reveal the link between gazing patterns and pragmatic abilities in high functioning autism spectrum disorders. Front. Hum. Neurosci. 8:1067. doi: 10.3389/fnhum.2014.01067

Grynszpan, O., Weiss, P. L., Perez-Diaz, F., and Gal, E. (2014). Innovative technology-based interventions for autism spectrum disorders: a meta-analysis. Autism 18, 346–361. doi: 10.1177/1362361313476767

Halberstadt, A. G., Denham, S. A., and Dunsmore, J. C. (2001). Affective social competence. Soc. Dev. 10, 79–119. doi: 10.1111/1467-9507.00150

Hanson, D., Robotics, H., Garver, C. R., Mazzei, D., Garver, C., Ahluwalia, A., et al. (2012). Realistic Humanlike Robots for Treatment of ASD, Social Training, and Research; Shown to Appeal to Youths With ASD, Cause Physiological Arousal, and Increase Human-to-Human Social Engagement. Available online at: https://www.researchgate.net/publication/233951262 (accessed June 10, 2021).

Harms, M. B., Martin, A., and Wallace, G. L. (2010). Facial emotion recognition in autism spectrum disorders: a review of behavioral and neuroimaging studies. Neuropsychol. Rev. 20, 290–322. doi: 10.1007/s11065-010-9138-6

Hedges, L. V., and Olkin, I. (1985). Statistical Methods for Meta-Analysis. Orlando, FL: Academic Press.

Hill, T. L., Gray, S. A. O., Baker, C. N., Boggs, K., Carey, E., Johnson, C., et al. (2017). A pilot study examining the effectiveness of the PEERS program on social skills and anxiety in adolescents with autism spectrum disorder. J. Dev. Phys. Disabil. 29, 797–808. doi: 10.1007/s10882-017-9557-x

Hubert, B., Wicker, B., Moore, D. G., Monfardini, E., Duverger, H., Da Fonseca, D., et al. (2007). Recognition of emotional and non-emotional biological motion in individuals with autistic spectrum disorders. J. Autism Dev. Disord. 37, 1386–1392. doi: 10.1007/s10803-006-0275-y

Iannizzotto, G., Nucita, A., Fabio, R. A., Caprì, T., and Lo Bello, L. (2020a). Remote eye-tracking for cognitive telerehabilitation and interactive school tasks in times of COVID-19. Information 11:296. doi: 10.3390/info11060296

Iannizzotto, G., Nucita, A., Fabio, R. A., Caprì, T., and Lo Bello, L. (2020b). “More intelligence and less clouds in our smart homes: a few notes on new trends in AI for smart home applications,” in Studies in Systems, Decision and Control (Springer), 123–136. Available online at: https://link.springer.com/chapter/10.1007/978-3-030-45340-4_9 (accessed June 10, 2021).

Illness SP-E in Mental (2007). A Perception-Action Model for Empathy. pdfs.semanticscholar.org. Available online at: https://pdfs.semanticscholar.org/a028/b3b02e53f1af2180a7d868803b7b0a20e868.pdf (accessed June 10, 2021).

Jamil, N., Khir, N. H. M., Ismail, M., and Razak, F. H. A. (2015). “Gait-based emotion detection of children with autism spectrum disorders: a preliminary investigation,” in Procedia Computer Science (Elsevier B.V.), 342–8. doi: 10.1016/j.procs.2015.12.305

Kaur, M., Gifford, T., Marsh, K., and Bhat, A. (2013). Effect of robot-child interactions on bilateral coordination skills of typically developing children and a child with autism spectrum disorder: a preliminary study. J. Mot. Learn Dev. 1, 31–37. doi: 10.1123/jmld.1.2.31

Kim, E. S., Berkovits, L. D., Bernier, E. P., Leyzberg, D., Shic, F., Paul, R., et al. (2013). Social robots as embedded reinforcers of social behavior in children with autism. J. Autism Dev. Disord. 43, 1038–1049. doi: 10.1007/s10803-012-1645-2

Kim, J. C., Azzi, P., Jeon, M., Howard, A. M., and Park, C. H. (2017). “Audio-based emotion estimation for interactive robotic therapy for children with autism spectrum disorder,” in 2017 14th International Conference on Ubiquitous Robots and Ambient Intelligence, URAI 2017. Institute of Electrical and Electronics Engineers Inc., 39–44. doi: 10.1109/URAI.2017.7992881

Kliemann, D., Dziobek, I., Hatri, A., Baudewig, J., and Heekeren, H. R. (2012). The role of the amygdala in atypical gaze on emotional faces in autism spectrum disorders. J. Neurosci. 32, 9469–9476. doi: 10.1523/JNEUROSCI.5294-11.2012

Koch, S. A., Stevens, C. E., Clesi, C. D., Lebersfeld, J. B., Sellers, A. G., McNew, M. E., et al. (2017). A feasibility study evaluating the emotionally expressive robot SAM. Int. J. Soc. Robot. 9, 601–613. doi: 10.1007/s12369-017-0419-6

Kumazaki, H., Warren, Z., Corbett, B. A., Yoshikawa, Y., Matsumoto, Y., Higashida, H., et al. (2017). Android robot-mediated mock job interview sessions for young adults with autism spectrum disorder: a pilot study. Front. Psychiatry 8:169. doi: 10.3389/fpsyt.2017.00169

Lahiri, U., Warren, Z., and Sarkar, N. (2011). Design of a gaze-sensitive virtual social interactive system for children with autism. IEEE Trans. Neural Syst. Rehabil. Eng. 19, 443–452. doi: 10.1109/TNSRE.2011.2153874

Lai, C. L. E., Lau, Z., Lui, S. S. Y., Lok, E., Tam, V., Chan, Q., et al. (2017). Meta-analysis of neuropsychological measures of executive functioning in children and adolescents with high-functioning autism spectrum disorder. Autism Res. 10, 911–939. doi: 10.1002/aur.1723

Laugeson, E. A., Ellingsen, R., Sanderson, J., Tucci, L., and Bates, S. (2014). The ABC's of teaching social skills to adolescents with autism spectrum disorder in the classroom: the UCLA PEERS® program. J. Autism Dev. Disord. 44, 2244–2256. doi: 10.1007/s10803-014-2108-8

Lecciso, F., Levante, A., Baruffaldi, F., and Petrocchi, S. (2016). Theory of mind in deaf adults. Cogent. Psychol. 3:1264127. doi: 10.1080/23311908.2016.1264127

Leo, M., Carcagnì, P., Distante, C., Mazzeo, P. L., Spagnolo, P., Levante, A., et al. (2019). Computational analysis of deep visual data for quantifying facial expression production. Appl. Sci. 9:4542. doi: 10.3390/app9214542

Leo, M., Carcagnì, P., Distante, C., Spagnolo, P., Mazzeo, P. L., Chiara Rosato, A., et al. (2018). Computational assessment of facial expression production in ASD children. Sensors 18:3993. doi: 10.3390/s18113993

Leo, M., Carcagnì, P., Mazzeo, P. L., Spagnolo, P., Cazzato, D., and Distante, C. (2020). Analysis of facial information for healthcare applications: a survey on computer vision-based approaches. Information 11:128. doi: 10.3390/info11030128

Liu, C., Conn, K., Sarkar, N., and Stone, W. (2008). Physiology-based affect recognition for computer-assisted intervention of children with Autism Spectrum Disorder. Int. J. Hum. Comput. Stud. 66, 662–677. doi: 10.1016/j.ijhsc.2008.04.003

Lombardo, M., and Baron-Cohen, S. (2011). The role of the self in mindblindness in autism. Conscious Cogn. 20, 130–140. doi: 10.1016/j.concog.2010.09.006

Lopes, P. N., Brackett, M. A., Nezlek, J. B., Schütz, A., Sellin, I., and Salovey, P. (2004). Emotional intelligence and social interaction. Personal. Soc. Psychol. Bull. 30, 1018–1034. doi: 10.1177/0146167204264762

Lopes, P. N., Salovey, P., Côté, S., and Beers, M. (2005). Emotion regulation abilities and the quality of social interaction. Emotion 5, 113–118. doi: 10.1037/1528-3542.5.1.113

Lozier, L. M., Vanmeter, J. W., and Marsh, A. A. (2014). Impairments in facial affect recognition associated with autism spectrum disorders: a meta-analysis. Dev. Psychopathol. 26, 933–945. doi: 10.1017/S0954579414000479

Marchetti, A., Castelli, I., Cavalli, G., Di Terlizzi, E., Lecciso, F., Lucchini, B., et al. (2014). “Theory of Mind in typical and atypical developmental settings: some considerations from a contextual perspective,” in Reflective Thinking in Educational Settings: A Cultural Frame Work, eds A. Antonietti, E. Confalonieri, A. Marchetti (Cambridge: Cambridge University Press), 102–136. doi: 10.1017/CBO9781139198745.005

Markram, K., and Markram, H. (2010). The intense world theory - a unifying theory of the neurobiology of autism. Front. Hum. Neurosci. 4:224. doi: 10.3389/fnhum.2010.00224

Matarić, M. J., Eriksson, J., Feil-Seifer, D. J., and Winstein, C. J. (2007). Socially assistive robotics for post-stroke rehabilitation. J. Neuroeng. Rehabil. 4, 1–9. doi: 10.1186/1743-0003-4-5

Mazzei, D., Greco, A., Lazzeri, N., Zaraki, A., Lanata, A., Igliozzi, R., et al. (2012). “Robotic social therapy on children with autism: preliminary evaluation through multi-parametric analysis,” in Proceedings - 2012 ASE/IEEE International Conference on Privacy, Security, Risk and Trust and 2012 ASE/IEEE International Conference on Social Computing, SocialCom/PASSAT 2012 (Amsterdam), 766–771. doi: 10.1109/SocialCom-PASSAT.2012.99

Measso, G., Zappalà, G., Cavarzeran, F., Crook, T. H., Romani, L., Pirozzolo, F. J., et al. (1993). Raven's colored progressive matrices: a normative study of a random sample of healthy adults. Acta Neurol. Scand. 88, 70–74. doi: 10.1111/j.1600-0404.1993.tb04190.x

Moody, E. J., and Mcintosh, D. N. (2006). Mimicry and Autism: Bases and Consequences of Rapid Automatic Matching Behavior. Available online at: https://www.researchgate.net/publication/261027377 (accessed June 10, 2021).

Moore, D., McGrath, P., and Thorpe, J. (2000). Computer-aided learning for people with autism - a framework for research and development. Innov. Educ. Teach Int. 37, 218–228. doi: 10.1080/13558000050138452

Noguchi, K., Gel, Y. R., Brunner, E., and Konietschke, F. (2012). nparLD: an R software package for the nonparametric analysis of longitudinal data in factorial experiments. J. Statist. Softw. 50:i12. doi: 10.18637/jss.v050.i12

Nuske, H. J., Vivanti, G., and Dissanayake, C. (2013). Are emotion impairments unique to, universal, or specific in autism spectrum disorder? A comprehensive review. Cogn. Emot. 27, 1042–1061. doi: 10.1080/02699931.2012.762900

Peng, Y. H., Lin, C. W., Mayer, N. M., and Wang, M. L. (2014). “Using a humanoid robot for music therapy with autistic children,” in CACS 2014 - 2014 International Automatic Control Conference, Conference Digest. Kaohsiung: Institute of Electrical and Electronics Engineers Inc., 156–160. doi: 10.1109/CACS.2014.7097180

Pennisi, P., Tonacci, A., Tartarisco, G., Billeci, L., Ruta, L., Gangemi, S., et al. (2016). Autism and social robotics: a systematic review. Autism Res. 9, 165–183. doi: 10.1002/aur.1527

Pontikas, C. M., Tsoukalas, E., and Serdari, A. (2020). A map of assistive technology educative instruments in neurodevelopmental disorders. Disabil. Rehabil. Assist. Technol. 2020:1839580. doi: 10.1080/17483107.2020.1839580

Ramdoss, S., Machalicek, W., Rispoli, M., Mulloy, A., Lang, R., and O'reilly, M. (2012). Computer-based interventions to improve social and emotional skills in individuals with autism spectrum disorders: a systematic review. Dev. Neurorehabil. 15, 119–35. doi: 10.3109/17518423.2011.651655

Ricks, D., and Colton, M. (2010). Trends and considerations in robot-assisted autism therapy. IEEE Int. Conf. Robot. Autom. 2010, 4354–4359. doi: 10.1109/ROBOT.2010.5509327

Rizzolatti, G., Fabbri-Destro, M., and Cattaneo, L. (2009). Mirror neurons and their clinical relevance. Nat. Clin. Pract. Neurol. 5, 24–34. doi: 10.1038/ncpneuro0990

Robins, B., Dautenhahn, K., and Dubowski, J. (2006). Does appearance matter in the interaction of children with autism with a humanoid robot? Interact. Stud. Soc. Behav. Commun. Biol. Artif. Syst. 7, 479–512. doi: 10.1075/is.7.3.16rob

Robins, B., Dickerson, P., Stribling, P., and Dautenhahn, K. (2004). Robot-mediated joint attention in children with autism. Interact. Stud. Soc. Behav. Commun. Biol. Artif. Syst. 5, 161–198. doi: 10.1075/is.5.2.02rob

RStudio Team (2020). RStudio: Integrated Development Environment for R. Boston, MA: RStudio, PBC. Available online at: http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.651.1157&rep=rep1&type=pdf#page=14 (accessed October 11, 2020).

Saleh, M. A., Hanapiah, F. A., and Hashim, H. (2020). Robot applications for autism: a comprehensive review. Disabil. Rehabil. Assist. Technol. 2019:1685016. doi: 10.1080/17483107.2019.1685016

Sartorato, F., Przybylowski, L., and Sarko, D. K. (2017). Improving therapeutic outcomes in autism spectrum disorders: enhancing social communication and sensory processing through the use of interactive robots. J. Psychiatric Res. 90, 1–11. doi: 10.1016/j.jpsychires.2017.02.004

Sasson, N., Pinkham, A., Weittenhiller, L. P., Faso, D. J., and Simpson, C. (2016). Context effects on facial affect recognition in schizophrenia and autism: behavioral and eye-tracking evidence. Schizophr. Bull. 42, 675–683. doi: 10.1093/schbul/sbv176

Scassellati, B. (2005). How social robots will help us to diagnose, treat, and understand autism. Conf. Pap. Int. J. Robot. Res. 28:220757236. doi: 10.1007/978-3-540-48113-3_47

Scassellati, B., Henny, A., and Matarić, M. (2012). Robots for use in autism research. Annu. Rev. Biomed. Eng. 14, 275–294. doi: 10.1146/annurev-bioeng-071811-150036

Schutte, N. S., Malouff, J. M., Bobik, C., Coston, T. D., Greeson, C., Jedlicka, C., et al. (2001). Emotional intelligence and interpersonal relations. J. Soc. Psychol. 141, 523–536. doi: 10.1080/00224540109600569

Shalom, D., Mostofsky, S., Hazlett, R., Goldberg, M., Landa, R., and Faran, Y. (2006). Normal physiological emotions but differences in expression of conscious feelings in children with high-functioning autism. J. Autism Dev. Disord. 6:77. doi: 10.1007/s10803-006-0077-2

Sharma, S. R., Gonda, X., and Tarazi, F. I. (2018). Autism spectrum disorder: classification, diagnosis and therapy. Pharmacol. Ther. 190, 91–104. doi: 10.1016/j.pharmthera.2018.05.007

So, W., Wong, M., Lam, W., Cheng, C., Ku, S., Lam, K., et al. (2019). Who is a better teacher for children with autism? Comparison of learning outcomes between robot-based and human-based interventions in gestural. Res. Dev. Disabil. 86, 62–67. doi: 10.1016/j.ridd.2019.01.002

So, W. C., Wong, M. K. Y., Cabibihan, J. J., Lam, C. K. Y., Chan, R. Y. Y., and Qian, H. H. (2016). Using robot animation to promote gestural skills in children with autism spectrum disorders. J. Comput. Assist. Learn. 32, 632–646. doi: 10.1111/jcal.12159

Soares, F. O., Costa, S. C., Santos, C. P., Pereira, A. P. S., Hiolle, A. R., and Silva, V. (2019). Socio-emotional development in high functioning children with Autism Spectrum Disorders using a humanoid robot. Interact. Stud. Soc. Behav. Commun. Biol. Artif. Syst. 20, 205–233. doi: 10.1075/is.15003.cos

Tanaka, J. W., and Sung, A. (2016). The “eye avoidance” hypothesis of autism face processing. J. Autism Dev. Disord. 46, 1538–1552. doi: 10.1007/s10803-013-1976-7

Tapus, A., Matarić, M. J., and Scassellati, B. (2007). The grand challenges in socially assistive robotics. IEEE Robot. Automat. Magazine. 14:339605. doi: 10.1109/MRA.2007.339605

Uljarevic, M., and Hamilton, A. (2013). Recognition of emotions in autism: a formal meta-analysis. J. Autism Dev. Disord. 43, 1517–1526. doi: 10.1007/s10803-012-1695-5

Valentine, J., Davidson, S. A., Bear, N., Blair, E., Paterson, L., Ward, R., et al. (2020). A prospective study investigating gross motor function of children with cerebral palsy and GMFCS level II after long-term Botulinum toxin type A use. BMC Pediatr. 20, 1–13. doi: 10.1186/s12887-019-1906-8

Vélez, P., and Ferreiro, A. (2014). Social robotic in therapies to improve children's attentional capacities. Rev. Air Force. 2:101. Available online at: http://213.177.9.66/ro/revista/Nr_2_2014/101_VÉLEZ_FERREIRO.pdf

Wang, Y., Su, Y., Fang, P., and Zhou, Q. (2011). Facial expression recognition: can preschoolers with cochlear implants and hearing aids catch it? Res. Dev. Disabil. 32, 2583–2588. doi: 10.1016/j.ridd.2011.06.019

Watson, K. K., Miller, S., Hannah, E., Kovac, M., Damiano, C. R., Sabatino-DiCrisco, A., et al. (2015). Increased reward value of non-social stimuli in children and adolescents with autism. Front. Psychol. 22:6. doi: 10.3389/fpsyg.2015.01026

Welch, K. C., Lahiri, U., Liu, C., Weller, R., Sarkar, N., and Warren, Z. (2009). “An affect-sensitive social interaction paradigm utilizing virtual reality environments for autism intervention,” in Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics) (Berlin, Heidelberg), 703–712. doi: 10.1007/978-3-642-02580-8_77

Williams, J. H. G., Whiten, A., Suddendorf, T., and Perrett, D. I. (2001). Imitation, mirror neurons and autism. Neurosci. Biobehav. Rev. 25, 287–295. doi: 10.1016/S0149-7634(01)00014-8

Yoshikawa, Y., Kumazaki, H., Matsumoto, Y., Miyao, M., Kikuchi, M., and Ishiguro, H. (2019). Relaxing gaze aversion of adolescents with autism spectrum disorder in consecutive conversations with human and android robot-a preliminary study. Front. Psychiatry. 10:370. doi: 10.3389/fpsyt.2019.00370

Yun, S. S., Choi, J. S., Park, S. K., Bong, G. Y., and Yoo, H. J. (2017). Social skills training for children with autism spectrum disorder using a robotic behavioral intervention system. Autism Res. 10, 1306–1323. doi: 10.1002/aur.1778

Zane, E., Neumeyer, K., Mertens, J., Chugg, A., and Grossman, R. B. (2018). I think we're alone now: solitary social behaviors in adolescents with autism spectrum disorder. J. Abnorm. Child Psychol. 46, 1111–1120. doi: 10.1007/s10802-017-0351-0

Zheng, Z., Das, S., Young, E., Swanson, A., Warren, Z., and Sarkar, N. (2014). Autonomous robot-mediated imitation learning for children with autism. IEEE Int. Conf. Robot. Autom. 2014, 2707–2712. doi: 10.1109/ICRA.2014.6907247

Keywords: new technology, autism spectrum disorder, social skills, emotion recognition, emotion expression, robot, computer, training

Citation: Lecciso F, Levante A, Fabio RA, Caprì T, Leo M, Carcagnì P, Distante C, Mazzeo PL, Spagnolo P and Petrocchi S (2021) Emotional Expression in Children With ASD: A Pre-Study on a Two-Group Pre-Post-Test Design Comparing Robot-Based and Computer-Based Training. Front. Psychol. 12:678052. doi: 10.3389/fpsyg.2021.678052

Received: 08 March 2021; Accepted: 17 June 2021; Published: 21 July 2021.

Edited by:

Theano Kokkinaki, University of Crete, Greece

Reviewed by:

Soichiro Matsuda, University of Tsukuba, Japan

Wing Chee So, The Chinese University of Hong Kong, China

Copyright © 2021 Lecciso, Levante, Fabio, Caprì, Leo, Carcagnì, Distante, Mazzeo, Spagnolo and Petrocchi. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Flavia Lecciso, flavia.lecciso@unisalento.it
