- 1 School of Social Work, Rutgers, The State University of New Jersey, New Brunswick, NJ, United States

- 2 Brown School of Social Work, Washington University in St. Louis, St. Louis, MO, United States

Technology is one medium for increasing youth engagement in suicide preventive interventions, especially among underserved and minority groups. Technology can be used to supplement an in-person intervention as an adjunct, to guide an intervention with some provider support, or to serve as the stand-alone (automated) component of the intervention. This range of technological use is now called the continuum of behavioral intervention technologies (BITs). Overall, suicide intervention researchers do not use this terminology to categorize how the role of technology differs across technology-enhanced youth interventions. There is growing recognition that technology-enhanced interventions will not create substantial public health impact without an understanding of the individual (youth, families, and providers), mezzo (clinics and health systems of care), and contextual (society, culture, community) factors associated with their implementation. Implementation science is the study of methods to promote uptake of evidence-based practices and policies into the broader health care system. In this review, we incorporate work from implementation science and BIT implementation to illustrate how the study of technology-enhanced interventions for youth suicide can be advanced by specifying the role of technology and measuring implementation outcomes.

Introduction

Globally, suicide is a leading cause of death among youth (Centers for Disease Control and Prevention, National Center for Injury Prevention and Control, 2020; World Health Organization, 2019). Suicidality, which includes suicidal ideation, plans for a suicide attempt, and actual suicide attempts (Posner et al., 2007), is also a pervasive problem that burdens young lives (Kokkevi et al., 2012; Page et al., 2013; Kann et al., 2018). Yet many youth with suicidal thoughts and behaviors have no contact with a mental health specialist over the course of a year, especially youth who identify as male or as a racial or ethnic minority (Husky et al., 2012). Attitudinal (e.g., concerns about stigma, preference for self-management) and structural (e.g., limited time, transportation, and insurance) barriers may impede youth from initiating mental health services (Arria et al., 2011; Czyz et al., 2013). Untreated suicidality may lead to future psychological distress, as suicidal ideation during adolescence increases the odds of a suicide attempt in adulthood (Cha et al., 2017). It is therefore crucial that at-risk individuals and those presenting with symptoms of suicidality are engaged in appropriate mental health services as soon as possible (Cho et al., 2013).

As internet and smartphone use is widespread among youth worldwide (Anderson and Jiang, 2018; Taylor and Silver, 2019), interventions that integrate technology may address barriers to engagement in in-person mental health services, such as limited access and reach, and stigma (Kreuze et al., 2017; Berrouiguet et al., 2018). Within suicidology, there has been a recent focus on developing evidence-based technology-enhanced interventions and tools that integrate technologies ranging from telephones and text messaging to videos and online platforms (Kreuze et al., 2017). Technology may supplement an in-person intervention as an adjunct, guide an intervention with some provider support, or be the stand-alone component of the intervention (i.e., an automated intervention with no provider interaction) (Hermes et al., 2019). Hermes et al. (2019) call this range of technological use the "continuum of behavioral intervention technologies (BITs)." In contrast to other mental and behavioral health fields (Nguyen et al., 2013; Glover et al., 2019), suicidology, overall, does not currently use the BIT terminology to categorize how the role of technology differs across the delivery of technology-enhanced youth interventions.

While randomized controlled trials (RCTs) are often considered the gold standard for testing the efficacy of interventions (Hariton and Locascio, 2018), little is known about whether and how trials of youth suicide interventions incorporate implementation outcomes. Implementation science is the study of methods to promote uptake of evidence-based practices and policies into the broader health care system (National Cancer Institute, 2020), and implementation is a critical element in understanding how and why technology-enhanced interventions work (or need adjustment) in the real world (Wozney et al., 2018). The implementation of health and mental health services is often assessed with eight defined outcomes: acceptability, adoption, appropriateness, feasibility, fidelity, implementation cost, penetration, and sustainability (Proctor et al., 2011). Without detailed measurement and assessment of these outcomes, pitfalls may go unidentified, and the implementation of technology-enhanced interventions in larger mental health care systems may fail (Graham et al., 2019). For example, concerns about user privacy and confidentiality, and about the commercialization of mobile health tools, may affect youth usage and the sustainability of interventions (Struik et al., 2017; Lustgarten et al., 2020). To address the limitations of RCTs, scholars now recommend effectiveness-implementation hybrid designs, which integrate testing or observation of both an intervention's clinical impact and its implementation processes (Curran et al., 2012).

Objectives

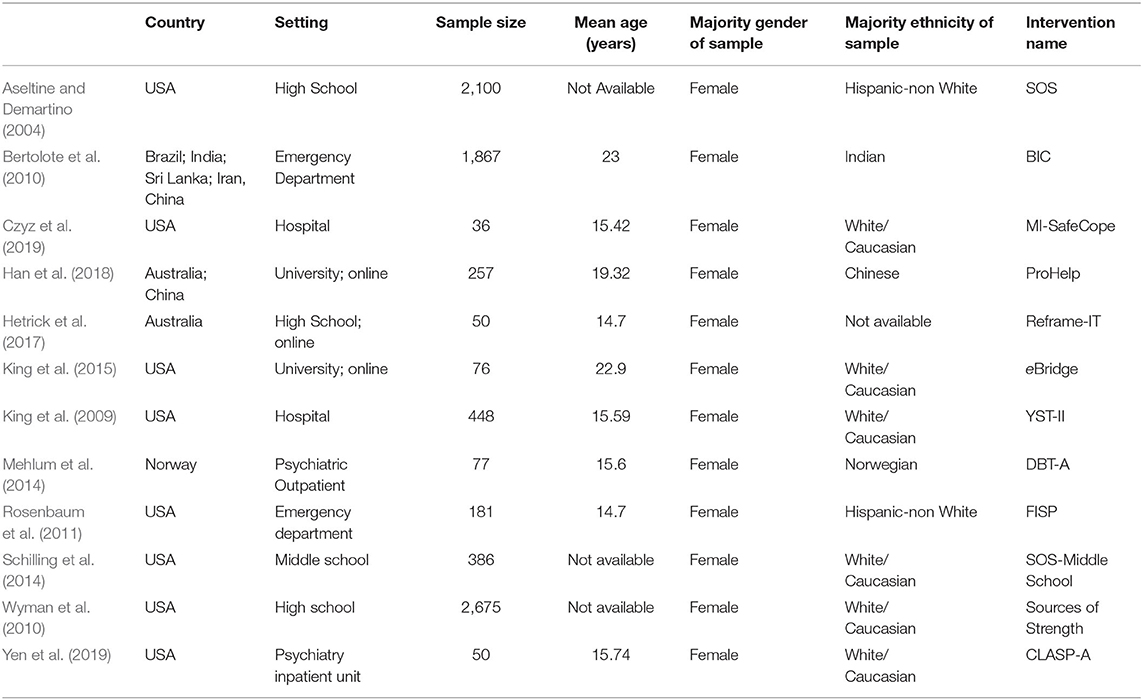

In this review, we incorporate work from implementation science (Proctor et al., 2010, 2011) and BIT implementation (Wozney et al., 2018; Hermes et al., 2019) to illustrate how the study of technology-enhanced interventions for youth suicide can be advanced by specifying the role of technology and measuring diverse outcomes that account for the unique features of this implementation context. Examples are drawn from 12 RCTs that were identified as part of a larger systematic review focused on the efficacy and effectiveness outcomes of technology-enhanced suicide interventions for youth (Szlyk and Tan, 2020). These 12 international studies represent interventions conducted over the last 19 years that span common place-based settings for youth (e.g., schools, hospitals, clinics) and include online platforms as treatment settings. The variety of the selected studies demonstrates opportunities for innovation in measuring implementation outcomes and exemplifies the heterogeneous use of technology in interventions.

In the following sections, we discuss (1) how technology-enhanced interventions for youth suicide can be classified using the BIT continuum and (2) how implementation outcomes can be measured in future effectiveness trials of these interventions. Our overall intent is to illuminate how technology-enhanced interventions for youth suicide can benefit from the explicit measurement of implementation outcomes.

Redefining Implementation Outcomes for Technology-Enhanced Interventions for Youth Suicide

Proctor et al.'s (2011) taxonomy provided an invaluable foundation for measuring implementation outcomes in the behavioral health sciences. Researchers now suggest that the traditional implementation outcomes be redefined to account for the growing use of technology in behavioral and mental health interventions (Wozney et al., 2018; Hermes et al., 2019), because the meaning of a traditional implementation outcome may change in the context of technology. For example, perceived usability and usefulness are considered other terms for feasibility and appropriateness; yet, in this context, they may be a better fit for measuring the acceptability of a technology-enhanced intervention (Brooke, 1996; Hermes et al., 2019). Also, since provider and organizational interaction varies across technology-enhanced interventions, the level of analysis of implementation outcomes may differ from that of face-to-face interventions. For instance, a fully-automated intervention cannot measure implementation outcomes at the provider level, since interaction occurs only between the youth consumer and the tool or platform.

Now is an ideal moment for suicidologists to learn from colleagues who specialize in implementation science and BITs: researchers are rapidly developing new technology-enhanced interventions, there is a need for youth suicide interventions that are engaging and accessible, and researchers must also identify how current interventions can be improved and implemented in real-world practice settings. With these three points in mind, we examined 12 RCTs of prominent youth suicide interventions that incorporated technology. These were the only RCTs identified through a larger systematic review of technology-enhanced interventions for youth suicide, which adhered to PRISMA guidelines (Moher et al., 2009) (see the flow diagram, search terms, and checklist in the Supplementary Material). Although the larger systematic review offered useful insights about technology-enhanced interventions, we felt that examining only the full set of 26 studies (of varying study designs) would miss the nuances of study subsamples (e.g., RCTs). We considered the identified RCTs an appropriate sample for examining how established and prominent youth suicide preventive interventions could be categorized along the BIT continuum and could measure implementation outcomes in future hybrid trials.

The interventions represented were: Signs of Suicide (SOS), a universal prevention program for high school (Aseltine and DeMartino, 2004) and middle school students (Schilling et al., 2014); Brief Intervention and Contact (BIC; Bertolote et al., 2010); MI-SafeCope (Czyz et al., 2019), a motivational interviewing-enhanced safety planning intervention; ProHelp (Han et al., 2018), a brief psychoeducational online program; Reframe-IT (Hetrick et al., 2017), an internet-based cognitive behavioral therapy (CBT) program; Electronic Bridge to Mental Health Services (eBridge; King et al., 2015), which provided personalized feedback and optional online counseling for university students; the Youth-Nominated Support Team Version II (YST-II; King et al., 2009), a psychoeducation and follow-up intervention following psychiatric hospitalization; Dialectical Behavior Therapy for Adolescents (DBT-A; Mehlum et al., 2014); the Family Intervention for Suicide Prevention (FISP; Rosenbaum et al., 2011), an adaptation of an emergency room intervention; Sources of Strength (Wyman et al., 2010), a school-based suicide prevention program; and Coping Long-Term with Active Suicide Program for Adolescents (CLASP-A; Yen et al., 2019), a program adapted for the post-discharge transition period. Table 1 provides additional study characteristics.

BIT Continuum and Potential Data Streams

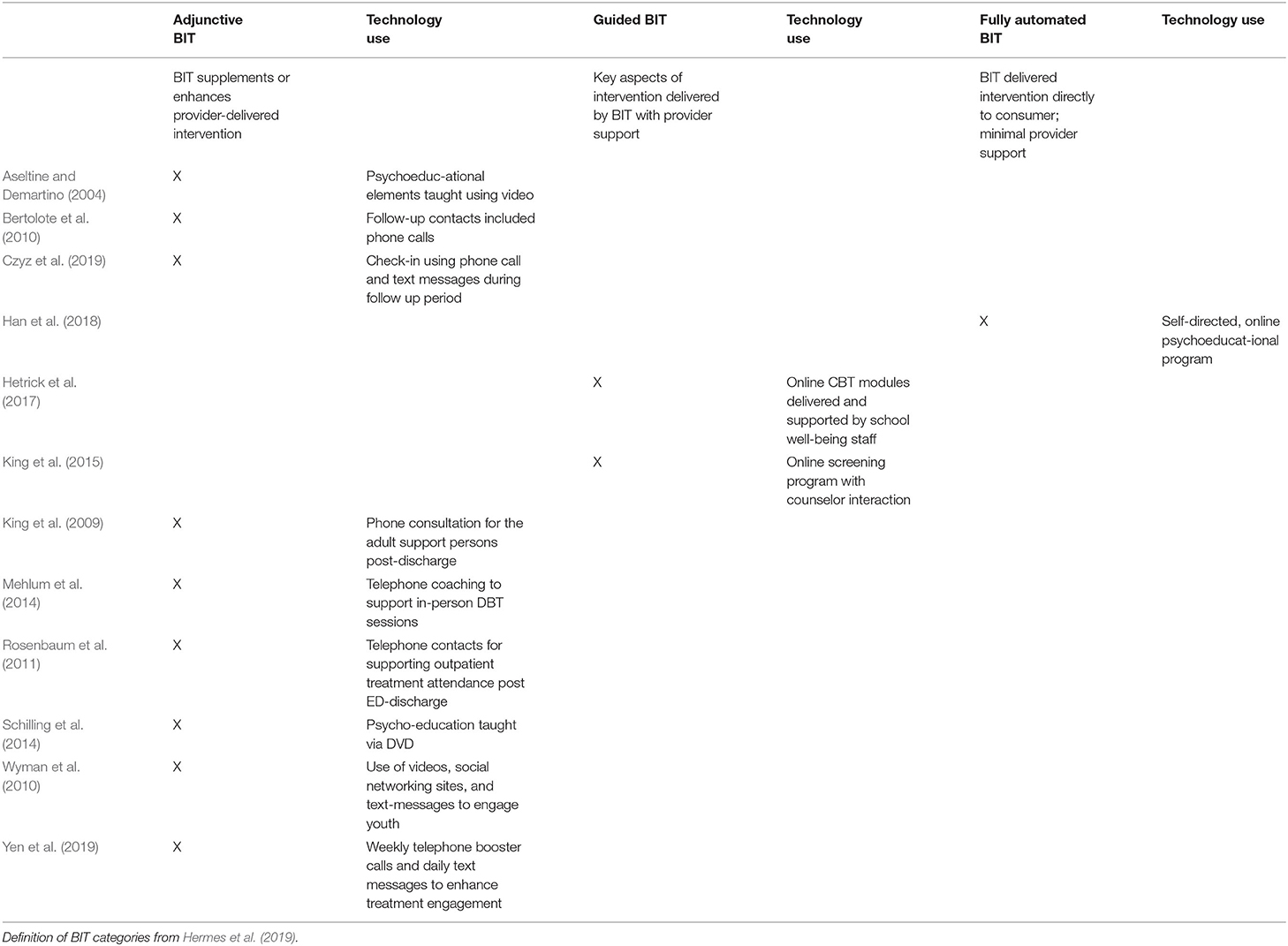

Hermes et al. (2019) posit that the measurement of implementation outcomes needs to take into account the data streams of a BIT (how data are recorded and collected) and the continuum of provider and technology-based support (adjunctive, guided, or fully-automated intervention). This contrasts with the usual practice in suicidology of categorizing and grouping interventions by tier of prevention: universal, selective, and indicated. For example, from this review, Sources of Strength (Wyman et al., 2010) is a universal preventive intervention offered to all students; eBridge (King et al., 2015) is a selective preventive intervention used to identify university students at elevated risk of suicide; and DBT-A (Mehlum et al., 2014) is an indicated preventive intervention that treats youth experiencing severe suicidality. Before identifying implementation outcomes, we categorized the 12 studies as adjunctive, guided, or fully-automated (Hermes et al., 2019) and documented each intervention's use of technology (the data stream) (see Table 2).

Nine studies described interventions that could be categorized as adjunctive technology-enhanced interventions (Aseltine and DeMartino, 2004; King et al., 2009; Bertolote et al., 2010; Wyman et al., 2010; Rosenbaum et al., 2011; Mehlum et al., 2014; Schilling et al., 2014; Czyz et al., 2019; Yen et al., 2019). These studies featured a technological component that supplemented an intervention delivered by a provider (Hermes et al., 2019). For example, the SOS program for high school students included a video to enhance a psychoeducational presentation about youth suicide risk (Aseltine and DeMartino, 2004). Two studies described interventions that could be categorized as guided technology-enhanced interventions (King et al., 2015; Hetrick et al., 2017), in which important aspects of the intervention were delivered by a technological component with some provider support. For instance, college students engaged with the eBridge platform remotely, and mental health providers responded to students' completed suicide risk assessments and digital messages (King et al., 2015). Finally, one study described an intervention that could be categorized as a fully-automated technology-enhanced intervention (Han et al., 2018): college students accessed ProHelp, a brief psychoeducational online program, with minimal to no provider support. The technologies used ranged from phones (for calls or text messaging) and videos to online platforms, which suggests a variety of potential data streams.
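The categorization reported above can be recorded as a simple lookup table and tallied, which makes it easy to check that every study is assigned exactly one BIT category. The Python sketch below is illustrative only and was not a tool used in the review; the dictionary `BIT_CATEGORY` and the helper `tally_by_category` are hypothetical names, the keys are the citation labels used in the text, and the category labels follow Hermes et al. (2019).

```python
from collections import Counter

# Hypothetical lookup table: each reviewed RCT (by citation label) mapped to
# the BIT category assigned in the text (adjunctive, guided, fully-automated).
BIT_CATEGORY = {
    "Aseltine and DeMartino, 2004": "adjunctive",
    "King et al., 2009": "adjunctive",
    "Bertolote et al., 2010": "adjunctive",
    "Wyman et al., 2010": "adjunctive",
    "Rosenbaum et al., 2011": "adjunctive",
    "Mehlum et al., 2014": "adjunctive",
    "Schilling et al., 2014": "adjunctive",
    "Czyz et al., 2019": "adjunctive",
    "Yen et al., 2019": "adjunctive",
    "King et al., 2015": "guided",
    "Hetrick et al., 2017": "guided",
    "Han et al., 2018": "fully-automated",
}


def tally_by_category(classification):
    """Count how many studies fall into each BIT category."""
    return Counter(classification.values())


counts = tally_by_category(BIT_CATEGORY)
print(dict(counts))  # → {'adjunctive': 9, 'guided': 2, 'fully-automated': 1}
```

Keeping the classification in one table, rather than scattered across prose, is a design choice that would let a data stream column (as in Table 2) be added later without changing the tally logic.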

Measurement of Implementation Outcomes

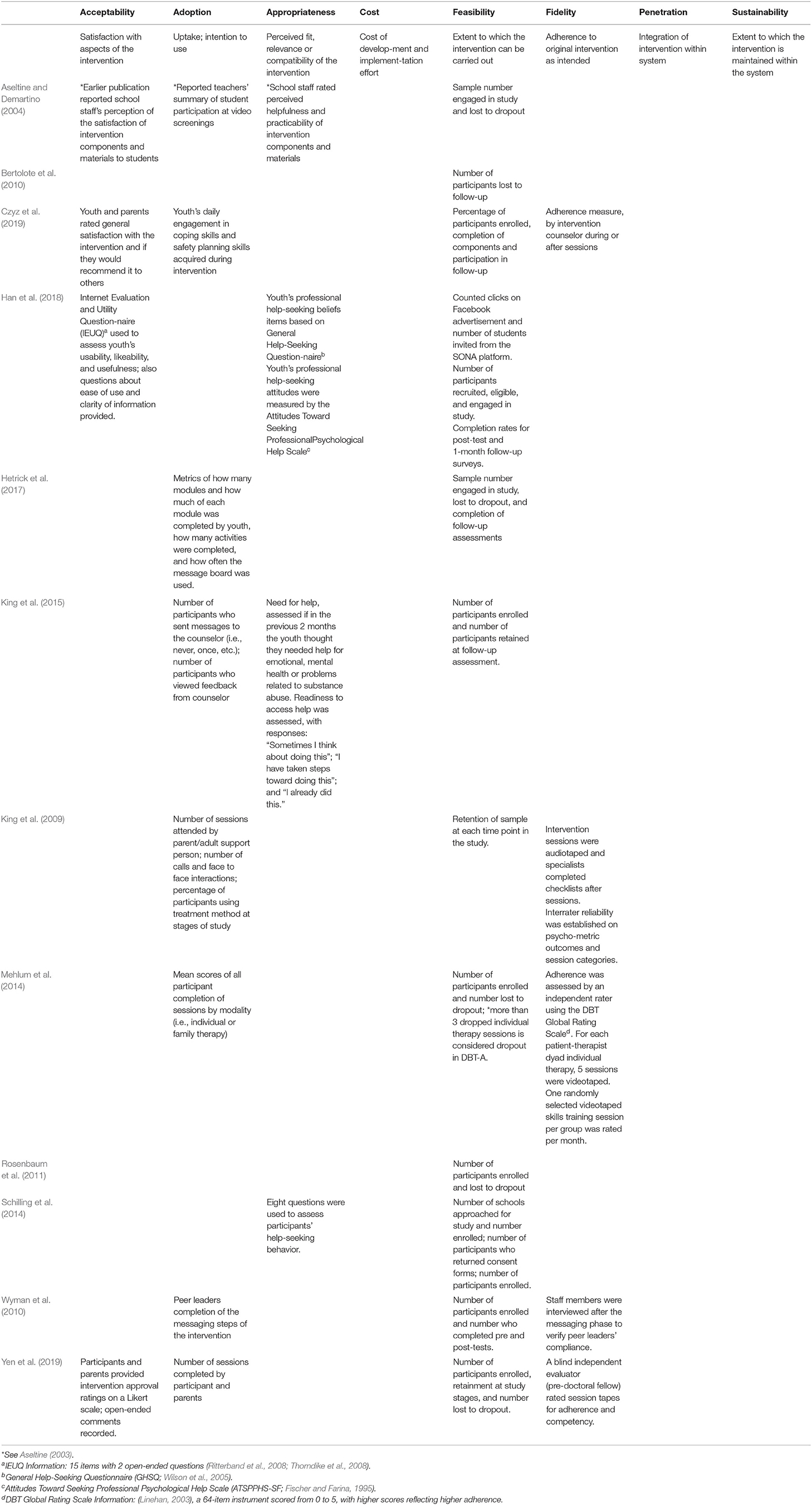

We examined how the eight implementation outcomes from Proctor's taxonomy, with adjustments based on the work of Hermes et al. (2019) and Wozney et al. (2018), could be identified and measured in our sample (see Table 3). Feasibility was conceptualized as "trialability" and informed by recruitment, retention, and youth participation rates (Proctor et al., 2011); it was measured by the number or proportion of youth participants recruited, enrolled, and retained in the study or lost to dropout. Eight studies measured adoption of the intervention (Aseltine and DeMartino, 2004; King et al., 2009, 2015; Wyman et al., 2010; Mehlum et al., 2014; Hetrick et al., 2017; Czyz et al., 2019; Yen et al., 2019), and, overall, these studies recorded session attendance and participant engagement with online intervention tools and modules. Five studies measured intervention fidelity by using adherence rating scales for session evaluation (King et al., 2009; Mehlum et al., 2014; Czyz et al., 2019; Yen et al., 2019), interrater reliability of psychometric outcomes and session checklists (King et al., 2009), and verification of the completion of intervention components by additional sources (Wyman et al., 2010).
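Because feasibility was operationalized as counts and proportions, the underlying arithmetic can be stated precisely. The sketch below is a hypothetical illustration: the `TrialFlow` class, its field names, and the counts are invented for this example and are not drawn from any of the reviewed trials. It assumes enrollment is expressed relative to youth recruited and retention and dropout relative to youth enrolled; individual trials may define their denominators differently.

```python
from dataclasses import dataclass


@dataclass
class TrialFlow:
    """Participant flow counts for one trial (hypothetical structure)."""
    recruited: int  # youth approached or screened for the trial
    enrolled: int   # youth who consented and were randomized
    retained: int   # youth who completed the final assessment

    def __post_init__(self) -> None:
        # Each stage should be a subset of the previous one.
        if not (0 <= self.retained <= self.enrolled <= self.recruited and self.enrolled > 0):
            raise ValueError("expected 0 <= retained <= enrolled <= recruited and enrolled > 0")

    def enrollment_rate(self) -> float:
        """Proportion of recruited youth who enrolled."""
        return self.enrolled / self.recruited

    def retention_rate(self) -> float:
        """Proportion of enrolled youth retained through follow-up."""
        return self.retained / self.enrolled

    def dropout_rate(self) -> float:
        """Proportion of enrolled youth lost to dropout."""
        return (self.enrolled - self.retained) / self.enrolled


flow = TrialFlow(recruited=200, enrolled=80, retained=64)  # made-up counts
print(f"enrolled {flow.enrollment_rate():.0%}, "
      f"retained {flow.retention_rate():.0%}, dropout {flow.dropout_rate():.0%}")
```

Validating the counts at construction time catches a common reporting inconsistency (more youth retained than enrolled) before any rate is computed.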

Four studies measured acceptability of the intervention and its components by using participant ratings or responses to open-ended questions about intervention satisfaction (Aseltine, 2003; Han et al., 2018; Czyz et al., 2019; Yen et al., 2019). One study also measured acceptability by using the Internet Evaluation and Utility Questionnaire (IEUQ; Ritterband et al., 2008; Thorndike et al., 2008), which assesses usability, likeability, and usefulness of an online intervention (Han et al., 2018). Four studies measured appropriateness of the intervention by assessments of participants' help-seeking behaviors (Schilling et al., 2014) and beliefs and attitudes about help-seeking (Han et al., 2018), perceptions of intervention helpfulness and practicability (Aseltine, 2003), and ratings of perceived need for help and readiness to access help (King et al., 2015). We included measures of help-seeking as a proxy for appropriateness since this key behavior influences the intervention's fit and relevance for youth participants struggling with suicidality.

Examples of implementation cost, penetration, and sustainability were not identified in our sample. It is unsurprising that these three outcomes were not identified among the RCTs, as they are typically observed, or become salient, in later stages of implementation (Proctor et al., 2011). Suggestions for future research are outlined in the Discussion.

Discussion

This review sought to demonstrate how technology-enhanced interventions for youth suicide can adopt the terminology of the BIT continuum and begin to measure implementation outcomes in future hybrid trials. The first exercise showed that technology-enhanced interventions for youth varied greatly in provider and technology support. Therefore, the conceptualization of implementation outcomes, and how they are measured or observed, should be specific to the intervention's category on the BIT continuum. For instance, the SOS program (Aseltine and DeMartino, 2004; Schilling et al., 2014), an adjunctive BIT, would measure implementation outcomes differently than ProHelp (Han et al., 2018), a fully-automated BIT. The SOS program could measure implementation outcomes at different levels of analysis: provider (teacher or mental health professional), consumer (student), and administrator (principal), while ProHelp may only report consumer-based outcomes (from the youth who access the intervention).

Additionally, we found that data stream sources vary both across the BIT continuum and within BIT categories. Using the same example, the SOS program's psychoeducational video cannot serve as a source of implementation data, while ProHelp's online platform automatically records youth engagement with and use of the intervention (e.g., youth clicks on an online advertisement, participant entry into the platform). Yet MI-SafeCope (Czyz et al., 2019), another adjunctive BIT, had a different data stream than the SOS program, using phone calls and text messages for participant follow-up. Technology-enhanced suicide interventions for youth are therefore highly heterogeneous, and suicidologists should consider an intervention's position on the BIT continuum and its data stream when measuring both effectiveness and implementation outcomes. These observations may inform how technology-enhanced interventions should be matched when comparing outcomes, since comparison by common characteristics (e.g., sample population, study design) overlooks the fact that the interventions' mechanisms vary widely.
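As an illustration of how an automatically recorded data stream could feed implementation measurement, the sketch below summarizes a hypothetical platform event log into counts of distinct youth per event and a simple click-to-entry proportion. The event names (`ad_click`, `platform_entry`, `module_complete`), the participant IDs, and the helper `youth_by_event` are assumptions made for this example; they do not describe how ProHelp or any reviewed platform actually logs data.

```python
from collections import defaultdict

# Hypothetical event log of (participant_id, event_type) pairs, as an online
# platform might record them automatically. All names are illustrative.
events = [
    ("y01", "ad_click"), ("y01", "platform_entry"), ("y01", "module_complete"),
    ("y02", "ad_click"),
    ("y03", "ad_click"), ("y03", "platform_entry"),
    ("y03", "platform_entry"), ("y03", "module_complete"),
]


def youth_by_event(log):
    """Group the log into the set of distinct youth who triggered each event."""
    groups = defaultdict(set)
    for participant, event in log:
        groups[event].add(participant)
    return groups


groups = youth_by_event(events)
# Distinct youth per event (repeat visits by the same youth count once).
counts = {event: len(ids) for event, ids in groups.items()}
# Share of youth who clicked the advertisement and then entered the platform.
clicked = groups["ad_click"]
conversion = len(clicked & groups["platform_entry"]) / len(clicked)
print(counts, f"{conversion:.2f}")
```

Counting distinct youth rather than raw events is a deliberate choice here: repeated entries by one participant (y03 above) would otherwise inflate engagement figures.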

It is important to note that most interventions in our sample were adjunctive technology-enhanced interventions. This is likely a result of the lengthy time it takes to pilot, adapt, and then conduct an RCT of a technology considered "cutting edge" at the time (e.g., text messaging, DVDs). We expect more RCTs of guided and automated interventions to be conducted as technology progresses and as researchers and funders become more adept at expediting the experimental process for technology-enhanced interventions.

The second exercise demonstrated how implementation outcomes can potentially be measured in RCTs of technology-enhanced interventions for youth suicide. Across the outcomes identified, measurement relied on observations or counts, questionnaires, and rating scales. Future studies may benefit from adding case audits, analyses of administrative data, qualitative methods (focus groups and semi-structured interviews), and data that platforms collect automatically (Proctor et al., 2011; Hermes et al., 2019). They may also benefit from balancing outcomes reported by youth consumers and parents/guardians, non-clinical actors (such as peer leaders and school staff), clinical providers, and organizational administrators (Wozney et al., 2018). Collecting implementation outcomes from these various sources may also help ensure that new interventions adequately respect youth privacy and confidentiality and that user data are managed appropriately.

Researchers should consider measuring and reporting implementation cost, penetration, and sustainability, as these outcomes also depend on an intervention's position on the BIT continuum, its data stream, its setting, and its stage in the implementation process. Implementation cost is relevant to all stages of implementation (Proctor et al., 2011), and increased reporting of cost can inform colleagues and funders of the financial realities of developing, disseminating, and sustaining a technology-enhanced intervention for youth suicide. For instance, interventions with more provider-driven components (such as DBT-A; Mehlum et al., 2014) would require substantial funding for ongoing clinician training, while interventions with more automated components (such as Reframe-IT; Hetrick et al., 2017) would require funding for launching, monitoring, and maintaining an online platform. Researchers must also consider potential financial costs for the youth user (e.g., payment for internet access or cellphone coverage, or payment to access a mobile app).

Guided and fully-automated interventions broaden the possibilities for measuring penetration, since they can be disseminated to or accessed by many people more quickly than interventions that are mainly face to face. This also suggests that the level of analysis may now include a virtual setting that is part of a larger educational or medical institution or under the auspices of a private technology company. For example, the school-based SOS program (Aseltine and DeMartino, 2004), an adjunctive BIT, would measure penetration by including the school personnel and student peers who are trained in and implement the intervention (level of analysis: the school or school district); eBridge (King et al., 2015), a guided BIT, may measure penetration as the number of students who access the online platform relative to the number of students the platform has the capacity to screen (level of analysis: the university counseling center's platform). Lastly, measurement of sustainability may now include the technological evolution of a youth suicide intervention. For instance, a fully-automated intervention would track updates (e.g., version 2.0 of a mobile application) and report adjustments made to account for new technology and consumer preferences (e.g., switching from a mobile application specific to Android phones to one available on iPhones).
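Proctor et al. (2011) describe penetration as something that can be calculated as a proportion, for example the number of eligible persons who use a service divided by the total number eligible. The sketch below assumes that framing and, following the eBridge example above, allows the denominator to be either an eligible population or a platform's screening capacity; the function name `penetration` and all counts are hypothetical.

```python
def penetration(reached: int, denominator: int) -> float:
    """Proportion of a denominator reached by the intervention.

    The denominator is an assumption chosen by the analyst: an eligible
    population (e.g., school staff) or a platform's screening capacity.
    """
    if denominator <= 0:
        raise ValueError("denominator must be positive")
    if not 0 <= reached <= denominator:
        raise ValueError("reached must be between 0 and the denominator")
    return reached / denominator


# Hypothetical numbers for illustration only.
school_level = penetration(reached=24, denominator=60)       # staff trained / staff eligible
platform_level = penetration(reached=450, denominator=1500)  # students who accessed / screening capacity
print(f"school: {school_level:.0%}, platform: {platform_level:.0%}")
```

Making the denominator an explicit argument keeps the level of analysis visible: the same function yields a school-level or a platform-level figure depending on what is passed in.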

Conclusion

Overall, this review emphasizes the diversity within the subfield of technology-enhanced interventions for youth suicide. It is therefore important that suicidologists be specific about how their intervention uses technology, how it varies in provider and technology-based support, and how it measures implementation outcomes. As youth suicide and suicidality continue to increase in the U.S., especially among youth with minority identities, the measurement of implementation outcomes may help explain why an intervention fails or underperforms among a certain youth population and how successful interventions can be disseminated more broadly. This review also illustrates that implementation outcomes can be measured as early as the RCT phase and raises considerations for how outcomes could be integrated into more implementation-focused studies.

The ever-increasing integration of technology in interventions provides opportunities for innovation in youth engagement and access. Yet it also risks further stigmatizing underserved populations and misallocating effort if interventions do not take into account the needs of youth and providers and the realities of implementing interventions beyond controlled settings. As demonstrated by colleagues specializing in implementation science and BITs, the development and testing of technology-enhanced interventions for youth suicide allow implementation outcome measurement to be recharacterized and thus heighten the chances of achieving public health impact (Graham et al., 2019). We hope that this review honors the work of youth suicide researchers who have integrated technology into interventions and inspires future suicidologists to understand the nuances of technology-enhanced interventions and how both provider-based and technology-based components translate to implementation in real-world settings.

Author Contributions

HS and JT identified the subsample of articles and extracted examples based on implementation and Behavioral Intervention Technology (BIT) frameworks. RL-H provided expertise in implementation science to evaluate examples identified from the selected studies. HS wrote the body of the manuscript. JT and RL-H edited the final versions of the manuscript. All authors contributed to the article and approved the submitted version.

Funding

Research reported in this publication was supported by the National Institute of Mental Health of the National Institutes of Health under Award Number T32MH019960.

Disclaimer

The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The authors would like to thank Susan Fowler, the Brown School librarian, for her assistance with designing search term hedges, and Drs. Leopoldo J. Cabassa, Sean Joe, and members of the Race and Opportunity Lab at Washington University for providing feedback on this project.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2021.657303/full#supplementary-material

References

Anderson, M., and Jiang, J. (2018). Teens, Social Media & Technology 2018. Available online at: https://www.pewresearch.org/internet/2018/05/31/teens-social-media-technology-2018/ (accessed December 12, 2019).

Arria, A. M., Winick, E. R., Garnier-Dykstra, L. M., Vincent, K. B., Caldeira, K. M., Wilcox, H. C., et al. (2011). Help seeking and mental health service utilization among college students with a history of suicide ideation. Psychiatr. Serv. 62, 1510–1513. doi: 10.1176/appi.ps.005562010

Aseltine, R. H. (2003). An evaluation of a school based suicide prevention program. Adolesc. Fam. Health 3, 81–88.

Aseltine, R. H., and DeMartino, R. (2004). An outcome evaluation of the SOS suicide prevention program. Am. J. Public Health 94, 446–451. doi: 10.2105/AJPH.94.3.446

Berrouiguet, S., Courtet, P., Larsen, M. E., Walter, M., and Vaiva, G. (2018). Suicide prevention: towards integrative, innovative and individualized brief contact interventions. Eur. Psychiatry 47, 25–26. doi: 10.1016/j.eurpsy.2017.09.006

Bertolote, J. M., Fleischmann, A., De Leo, D., Phillips, M., Botega, N. J., Vijayakumar, L., et al. (2010). Repetition of suicide attempts: data from emergency care settings in five culturally different low- and middle-income countries participating in the WHO SUPRE-MISS Study. Crisis 31, 194–201. doi: 10.1027/0227-5910/a000052

Brooke, J. (1996). “SUS - A quick and dirty usability scale,” in Usability Evaluation in Industry, eds P. W. Jordan, B. T. Thomas, I. L. McClelland, and B. Weerdmeester (Boca Raton, FL: CRC Press), 189–194.

Centers for Disease Control and Prevention, National Center for Injury Prevention and Control. (2020). Web-based Injury Statistics Query and Reporting System (WISQARS) (Fatal Injury Reports, 1999–2018, for National, Regional, and States). Available online at: www.cdc.gov/injury/wisqars (accessed November 30, 2020).

Cha, B. C., Franz, P. J., Guzman, E. M., Glenn, C. R., Kleiman, E. M., and Nock, M. K. (2017). Annual research review: Suicide among youth – epidemiology, (potential) etiology, and treatment. J. Child Psychol. Psychiatry 58:11. doi: 10.1111/jcpp.12831

Cho, J., Lee, W. J., Moon, K. T., Suh, M., Sohn, J., Ha, K. H., et al. (2013). Medical care utilization during 1 year prior to death in suicides motivated by physical illnesses. J. Prev. Med. Public Health 46:147. doi: 10.3961/jpmph.2013.46.3.147

Curran, G. M., Bauer, M., Mittman, B., Pyne, J. M., and Stetler, C. (2012). Effectiveness-implementation hybrid designs: combining elements of clinical effectiveness and implementation research to enhance public health impact. Med. Care 50, 217–226. doi: 10.1097/MLR.0b013e3182408812

Czyz, E. K., Horwitz, A. G., Eisenberg, D., Kramer, A., and King, C. A. (2013). Self-reported barriers to professional help seeking among college students at elevated risk for suicide. J. Am. Coll. Health 61, 398–406. doi: 10.1080/07448481.2013.820731

Czyz, E. K., King, C. A., and Biermann, B. J. (2019). Motivational interviewing-enhanced safety planning for adolescents at high suicide risk: a pilot randomized controlled trial. J. Clin. Child Adolesc. Psychol. 48, 250–262. doi: 10.1080/15374416.2018.1496442

Fischer, E. H., and Farina, A. (1995). Attitudes toward seeking professional psychological help: a shortened form and considerations for research. J. Coll. Stud. Dev. 36, 368–373. doi: 10.1037/t05375-000

Glover, A. C., Schueller, S. M., Winiarski, D. A., Smith, D. L., Karnik, N. S., and Zalta, A. K. (2019). Automated mobile phone-based mental health resource for homeless youth: pilot study assessing feasibility and acceptability. JMIR Ment. Health 6:e15144. doi: 10.2196/15144

Graham, A. K., Lattie, E. G., and Mohr, D. C. (2019). Experimental therapeutics for digital mental health. JAMA Psychiatry 76, 1223–1224. doi: 10.1001/jamapsychiatry.2019.2075

Han, J., Batterham, P. J., Calear, A. L., Wu, Y., Xue, J., and Van Spijker, B. A. (2018). Development and pilot evaluation of an online psychoeducational program for suicide prevention among university students: a randomized controlled trial. Internet Interv. 12, 111–120. doi: 10.1016/j.invent.2017.11.002

Hariton, E., and Locascio, J. J. (2018). Randomized controlled trials – the gold standard for effectiveness research. BJOG 125:1716. doi: 10.1111/1471-0528.15199

Hermes, E. D., Lyon, A. R., Schueller, S. M., and Glass, J. E. (2019). Measuring the implementation of behavioral intervention technologies: recharacterization of established outcomes. J. Med. Internet Res. 21:e11752. doi: 10.2196/11752

Hetrick, S. E., Yuen, H. P., Bailey, E., Cox, G., Templer, K., Rice, S., et al. (2017). Internet-based cognitive behavioural therapy for young people with suicide-related behaviour (Reframe-IT): a randomised controlled trial. Evid. Based Ment. Health 20, 76–82. doi: 10.1136/eb-2017-102719

Husky, M. M., Olfson, M., He, J. P., Nock, M. K., Swanson, S. A., and Merikangas, K. R. (2012). Twelve-month suicidal symptoms and use of services among adolescents: results from the National Comorbidity Survey. Psychiatr. Serv. 63, 989–996. doi: 10.1176/appi.ps.201200058

Kann, L., McManus, T., Harris, W. A., Shanklin, S. L., Flint, K. H., Queen, B., et al. (2018). Youth risk behavior surveillance—United States, 2017. MMWR Surveill. Summ. 67:1. doi: 10.15585/mmwr.ss6708a1

King, C. A., Eisenberg, D., Zheng, K., Czyz, E. K., Kramer, A., Horwitz, A. G., et al. (2015). Online suicide risk screening and intervention with college students: a pilot randomized controlled trial. J. Consult. Clin. Psychol. 83, 630–636. doi: 10.1037/a0038805

King, C. A., Klaus, N. M., Kramer, A., Venkataraman, S., Quinlan, P., and Gillespie, B. W. (2009). The youth-nominated support team-version II for suicidal adolescents: a randomized controlled intervention trial. J. Consult. Clin. Psychol. 77, 880–893. doi: 10.1037/a0016552

Kokkevi, A., Rotsika, V., Arapaki, A., and Richardson, C. (2012). Adolescents' self-reported suicide attempts, self-harm thoughts and their correlates across 17 European countries. J. Child Psychol. Psychiatry 53, 381–389. doi: 10.1111/j.1469-7610.2011.02457.x

Kreuze, E., Jenkins, C., Gregoski, M. J., York, J., Mueller, M., Lamis, D. A., et al. (2017). Technology-enhanced suicide prevention interventions: a systematic review. J. Telemed. Telecare 23, 605–617. doi: 10.1177/1357633X16657928

Lustgarten, S. D., Garrison, Y. L., Sinnard, M. T., and Flynn, A. W. (2020). Digital privacy in mental healthcare: current issues and recommendations for technology use. Curr. Opin. Psychol. 36, 25–31. doi: 10.1016/j.copsyc.2020.03.012

Mehlum, L., Tormoen, A. J., Ramberg, M., Haga, E., Diep, L. M., Laberg, S., et al. (2014). Dialectical behavior therapy for adolescents with repeated suicidal and self-harming behavior: a randomized trial. J. Am. Acad. Child Adolesc. Psychiatry 53, 1082–1091. doi: 10.1016/j.jaac.2014.07.003

Moher, D., Liberati, A., Tetzlaff, J., Altman, D. G., and The PRISMA Group (2009). Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. PLoS Med. 6:e1000097. doi: 10.1371/journal.pmed.1000097

National Cancer Institute (2020). About implementation science. Available online at: https://cancercontrol.cancer.gov/IS/about.html (accessed November 30, 2020).

Nguyen, B., Shrewsbury, V. A., O'Connor, J., Steinbeck, K. S., Hill, A. J., Shah, S., et al. (2013). Two-year outcomes of an adjunctive telephone coaching and electronic contact intervention for adolescent weight-loss maintenance: the Loozit randomized controlled trial. Int. J. Obes. 37, 468–472. doi: 10.1038/ijo.2012.74

Page, R. M., Saumweber, J., Hall, P. C., Crookston, B. T., and West, J. H. (2013). Multi-country, cross-national comparison of youth suicide ideation: findings from global school-based health surveys. Sch. Psychol. Int. 34, 540–555. doi: 10.1177/0143034312469152

Posner, K., Oquendo, M. A., Gould, M., Stanley, B., and Davies, M. (2007). Columbia classification algorithm of suicide assessment (C-CASA): classification of suicidal events in the FDA's pediatric suicidal risk analysis of antidepressants. Am. J. Psychiatry 164, 1035–1043. doi: 10.1176/ajp.2007.164.7.1035

Proctor, E. K., Landsverk, J., Aarons, G., Chambers, D., Glisson, C., and Mittman, B. (2010). Implementation research in mental health services: an emerging science with conceptual, methodological, and training challenges. Adm. Policy Ment. Health 36, 24–34. doi: 10.1007/s10488-008-0197-4

Proctor, E. K., Silmere, H., Raghavan, R., Hovmand, P. S., Aarons, G., Bunger, A. C., et al. (2011). Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Adm. Policy Ment. Health 38, 65–76. doi: 10.1007/s10488-010-0319-7

Ritterband, L. M., Ardalan, K., Thorndike, F. P., Magee, J. C., Saylor, D. K., Cox, D. J., et al. (2008). Real world use of an internet intervention for pediatric encopresis. J. Med. Internet Res. 10:e16. doi: 10.2196/jmir.1081

Rosenbaum, J. A., Baraff, L. J., Berk, M., Grob, C. S., Devich-Navarro, M., Suddath, R., et al. (2011). An emergency department intervention for linking pediatric suicidal patients to follow-up mental health treatment. Psychiatr. Serv. 62, 1303–1309. doi: 10.1176/ps.62.11.pss6211_1303

Schilling, E. A., Lawless, M., Buchanan, L., and Aseltine, R. H. (2014). Signs of Suicide shows promise as a middle school suicide prevention program. Suicide Life Threat. Behav. 44, 653–667. doi: 10.1111/sltb.12097

Struik, L. L., Haines-Saah, R. J., and Bottorff, J. L. (2017). “Digital media and health promotion practice,” in Health Promotion in Canada: New Perspectives on Theory, Practice, Policy, and Research, 4th Edn, eds I. Rootman, A. Pederson, K. L. Frohlich, and S. Dupere, (Toronto, ON: Canadian Scholars' Press), 305–324.

Szlyk, H., and Tan, J. (2020). The role of technology and the continuum of care for youth suicidality: systematic review. J. Med. Internet Res. 22:e18672. doi: 10.2196/18672

Taylor, K., and Silver, L. (2019). Smartphone ownership is growing rapidly around the world, but not always equally. Available online at: https://www.pewresearch.org/global/2019/02/05/smartphone-ownership-is-growing-rapidly-around-the-world-but-not-always-equally/ (accessed December 12, 2019).

Thorndike, F. P., Saylor, D. K., Bailey, E. T., Gonder-Frederick, L., Morin, C. M., and Ritterband, L. M. (2008). Development and perceived utility and impact of an internet intervention for insomnia. E-J. Appl. Psychol. 4, 32–42. doi: 10.7790/ejap.v4i2.133

Wilson, C. J., Deane, F. P., Ciarrochi, J. V., and Rickwood, D. (2005). Measuring help seeking intentions: properties of the general help seeking questionnaire. Can. J. Couns. 39, 15–28. doi: 10.1037/t42876-000

World Health Organization (2019). Suicide. Available online at: https://www.who.int/news-room/fact-sheets/detail/suicide (accessed November 30, 2020).

Wozney, L., McGrath, P. J., Gehring, N. D., Bennett, K., Huguet, A., Hartling, L., et al. (2018). eMental healthcare technologies for anxiety and depression in childhood and adolescence: systematic review of studies reporting implementation outcomes. JMIR Ment. Health 5:e48. doi: 10.2196/mental.9655

Wyman, P. A., Brown, C. H., LoMurray, M., Schmeelk-Cone, K., Petrova, M., Yu, Q., et al. (2010). An outcome evaluation of the Sources of Strength suicide prevention program delivered by adolescent peer leaders in high schools. Am. J. Public Health 100, 1653–1661. doi: 10.2105/AJPH.2009.190025

Keywords: youth, suicidality, technology, psychosocial intervention, implementation science

Citation: Szlyk HS, Tan J and Lengnick-Hall R (2021) Innovating Technology-Enhanced Interventions for Youth Suicide: Insights for Measuring Implementation Outcomes. Front. Psychol. 12:657303. doi: 10.3389/fpsyg.2021.657303

Received: 22 January 2021; Accepted: 29 April 2021;

Published: 03 June 2021.

Edited by:

Cristina Costescu, Babeş-Bolyai University, Romania

Reviewed by:

Patti Ranahan, Concordia University, Canada

Emma Motrico, Loyola Andalusia University, Spain

Copyright © 2021 Szlyk, Tan and Lengnick-Hall. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Hannah S. Szlyk, hannah.szlyk@rutgers.edu; orcid.org/0000-0001-7337-8475

Hannah S. Szlyk, Jia Tan2 and Rebecca Lengnick-Hall