- 1Biomedical and Mobile Health Technology Lab, Department of Health Sciences and Technology, Zurich, Switzerland

- 2School of Electrical Engineering and Computer Science, KTH Royal Institute of Technology, Stockholm, Sweden

- 3Electronics and Telecommunications Department, Politecnico Di Torino, Torino, Italy

Remote photoplethysmography (rPPG) provides a non-contact method for measuring blood volume changes. In this study, we compared rPPG signals obtained from video cameras with traditional contact-based photoplethysmography (cPPG) to assess the effectiveness of different RGB channels in cardiac signal extraction. Our objective was to determine the most effective RGB channel for detecting blood volume changes and estimating heart rate. We employed dynamic time warping, Pearson’s correlation coefficient, root-mean-square error, and Beats-per-minute Difference to evaluate the performance of each RGB channel relative to cPPG. The results revealed that the green channel was superior, outperforming the blue and red channels in detecting volumetric changes and accurately estimating heart rate across various activities. We also observed that the reliability of RGB signals varied based on recording conditions and subject activity. This finding underscores the importance of understanding the performance nuances of RGB inputs, crucial for constructing rPPG signals in algorithms. Our study is significant in advancing rPPG research, offering insights that could benefit clinical applications by improving non-contact methods for blood volume assessment.

1 Introduction

Photoplethysmography (PPG) signals are instrumental in monitoring essential health indicators such as heart rate (HR) (Elgendi, 2020), heart rate variability (Mayampurath et al., 2018), blood pressure (Elgendi et al., 2019), atrial fibrillation (Pereira et al., 2020), and mental health (Lyzwinski et al., 2023). Recent advancements have facilitated the development of affordable smart wearables with contact PPG (cPPG) sensors for cardiac activity assessment (Elgendi, 2012; Mashhadi et al., 2015; Ayesha et al., 2021). A newer approach, remote PPG (rPPG), uses video cameras and the RGB color channels for non-contact cardiac signal extraction, exploiting the absorptivity spectrum of the blood volume pulse (BVP) (Zijlstra et al., 1991).

We hypothesize that the green channel is ideal for rPPG signal extraction. This is explored through algorithms like PCA (Lewandowska et al., 2011), CHROM-based rPPG (De Haan and Jeanne, 2013), LGI (Pilz et al., 2018), POS (Wang et al., 2016), and emerging deep learning methods (Yu et al., 2019; Schrumpf et al., 2021), over diverse datasets (Haugg et al., 2022; van Es et al., 2023). Understanding the reliability of RGB signals, influenced by recording settings and subject factors, is crucial when compared to cPPG for robust signal transformation (Charlton et al., 2023).

Previous research, including Verkruysse et al. (2008), Sinhal et al. (2021), and Bhattacharjee and Yusuf (2021), has explored the quality of rPPG signals from RGB data. While the green channel’s potential is acknowledged, the roles of the blue and red channels, assessed only under limited conditions, remain unclear. Our study addresses these limitations by using three diverse datasets with varying camera types, pulse oximeters, lighting conditions, distances, and participant activities (Frey et al., 2022; Haugg et al., 2023).

We aim to identify the RGB channel in rPPG that most closely mirrors cPPG across different settings. Our objectives include assessing the similarity between RGB and cPPG signals in various contexts and statistically validating the robustness of the identified signal’s performance. This comprehensive analysis will enhance our understanding of cardiac insights and improve future video-based health monitoring techniques.

2 Materials and methods

2.1 Datasets

This study used three publicly available datasets: LGI-PPGI, PURE, and MR-NIRP. In all three, participants are video-recorded while engaged in different activities, and the reference cPPG signal is acquired with a pulse oximeter.

LGI-PPGI (Pilz et al., 2018) is a dataset that contains videos from six participants, five males and one female. Each participant records a session doing four activities: Resting, Talking, exercising on a bicycle ergometer (Gym), and Rotation. The camera is a Logitech HD C270 webcam (25 fps), and the gold standard cPPG signals are taken with a pulse oximeter, CMS50E PPG device (sampling rate of 60 Hz). The camera video stream was captured uncompressed with autoexposure. The lighting condition depends on the activity; Talking is recorded outdoors, while the other activities are recorded indoors.

The PURE dataset (Stricker et al., 2014) consists of 10 participants, eight males and two females. They perform activities classified as Steady, Talking, Slow Translation, Fast Translation, Small Rotation, and Medium Rotation. An eco274CVGE camera by SVS-Vistek GmbH (30 fps) with a 640 × 480 pixel resolution and 4.8 mm lens was used. The pulse oximeter model is CMS50E (sampling rate of 60 Hz). The lighting is daylight through a window frontal to the face. The distance to the camera was 1.1 m, on average.

The MR-NIRP video dataset (indoor) (Magdalena Nowara et al., 2018) is composed of eight subjects, six males and two females, with different skin tones labelled by the data creator: one participant with an Asian skin tone, four with an Indian skin tone, and three with a Caucasian skin tone. The activities were Still and Motion, and in the latter, the participants talked and moved their heads. The main camera used is a FLIR Blackfly BFLY-U3-23S6C-C (sensor format ‘rggb’) with a resolution of 640 × 640 (30 fps). The device used to record the cPPG sequences was a CMS50D+ finger pulse oximeter (sampling rate of 60 Hz).

2.2 Data processing

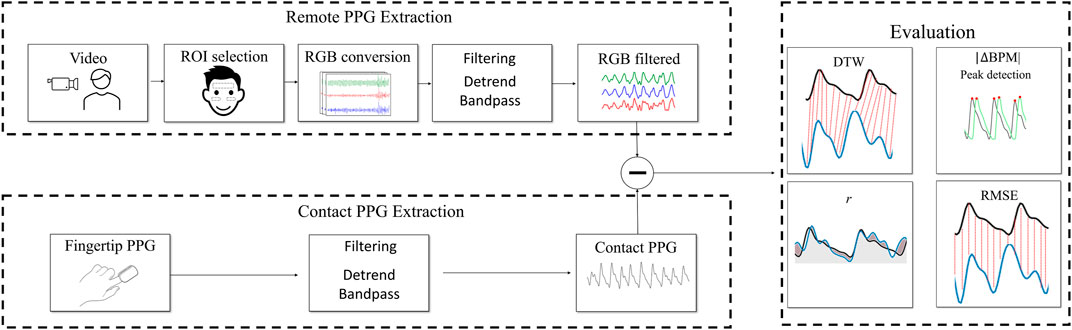

To obtain the rPPG signals that were later compared with the cPPG signals, several pre-processing steps were applied. A visualization is shown in Figure 1. The pyVHR framework (Boccignone et al., 2022) was used for the preprocessing, and the same procedure was applied to every video. The regions of interest (ROIs) were extracted using the MediaPipe framework (Lugaresi et al., 2019), which provides 468 facial landmarks. Each landmark corresponds to a specific facial area, identified by a numerical label denoting its location. The choice was based on a combination of ROIs from the right cheek, left cheek, and forehead, as proposed by other authors (Kwon et al., 2015; Kim et al., 2021). Specifically, the landmarks were (107, 66, 69, 109, 10, 338, 299, 296, 336, 9) from the forehead, (118, 119, 100, 126, 209, 49, 129, 203, 205, 50) from the left cheek, and (347, 348, 329, 355, 429, 279, 358, 423, 425, 280) from the right cheek (Haugg et al., 2022). After extracting the ROIs, the average of each color channel was calculated for each frame. Consequently, each frame was characterized by three values denoting the average intensity of the red, green, and blue (RGB) channels. This transformation turned each video into three signals, each with a length equal to the number of frames in the video. Each of these signals is treated as an rPPG signal and compared with the cPPG signal recorded by the pulse oximeter.
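The per-frame channel averaging described above can be sketched as follows. This is a minimal illustration with NumPy, not the pyVHR implementation: the function name `mean_rgb_per_frame`, the RGB channel order, and the use of a single fixed boolean mask for all frames are assumptions made for the example (in the study the ROIs come from tracked MediaPipe landmarks).

```python
import numpy as np

def mean_rgb_per_frame(frames, roi_mask):
    """Average R, G and B over the ROI pixels of every frame.

    frames:   array of shape (n_frames, height, width, 3), RGB order (assumed)
    roi_mask: boolean array of shape (height, width), True inside the ROI
    returns:  array of shape (n_frames, 3), one raw signal per colour channel
    """
    frames = np.asarray(frames, dtype=float)
    roi_pixels = frames[:, roi_mask, :]    # shape (n_frames, n_roi_pixels, 3)
    return roi_pixels.mean(axis=1)

# Synthetic stand-in for a video: 5 frames of 4x4 pixels.
rng = np.random.default_rng(0)
frames = rng.integers(0, 256, size=(5, 4, 4, 3))
roi_mask = np.zeros((4, 4), dtype=bool)
roi_mask[1:3, 1:3] = True                  # hypothetical 2x2 ROI

signals = mean_rgb_per_frame(frames, roi_mask)
red, green, blue = signals.T               # three signals, one value per frame
```

Each of `red`, `green`, and `blue` has one sample per frame, which is the raw form of the three candidate rPPG signals.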

FIGURE 1. Visualization of the process from data extraction to remote photoplethysmography (rPPG) evaluation. DTW = dynamic time warping, r = Pearson’s correlation coefficient, RMSE = root-mean-square error, |ΔBPM| = difference in heartbeats obtained from rPPG and contact PPG.

To compare the ground truth cPPG and the rPPG, both signals were normalized using robust normalization. This normalization is useful when outliers exist, where it is more effective than other scaling techniques such as min–max. The rPPG signals were resampled to the sampling frequency of the cPPG signals, which allowed for comparison. Then, two filters were applied to the rPPG and cPPG: detrending and a bandpass filter. The bandpass filter is a sixth-order Butterworth filter with a passband of 0.65–4 Hz. The signals were divided into non-overlapping 10-s windows, for a total of 60 s per video. Therefore, the final dataset consisted of 10-s samples of processed rPPG and the ground truth cPPG, which were compared using the metrics described later in this section.
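A rough sketch of this preprocessing chain with SciPy is given below. Several details are assumptions, since the text does not specify them: robust normalization is taken to mean centring on the median and scaling by the interquartile range, resampling uses Fourier-based `scipy.signal.resample`, detrending is linear, and the filter is applied forward and backward with `sosfiltfilt`. The text also does not say whether "sixth order" refers to the prototype or the resulting bandpass filter; here `order=6` is passed directly to `butter`.

```python
import numpy as np
from scipy import signal

def robust_normalize(x):
    """Centre on the median and scale by the interquartile range (assumed form)."""
    q1, med, q3 = np.percentile(x, [25, 50, 75])
    return (x - med) / (q3 - q1)

def preprocess(x, fs_in, fs_out=60.0, band=(0.65, 4.0), order=6):
    """Normalize, resample to fs_out, detrend, and band-pass one signal."""
    x = robust_normalize(np.asarray(x, dtype=float))
    n_out = int(round(len(x) * fs_out / fs_in))
    x = signal.resample(x, n_out)            # match the cPPG sampling rate
    x = signal.detrend(x)                    # remove a linear trend
    sos = signal.butter(order, band, btype="bandpass", fs=fs_out, output="sos")
    return signal.sosfiltfilt(sos, x)        # zero-phase filtering

def split_windows(x, fs, seconds=10):
    """Cut a signal into non-overlapping windows of the given duration."""
    n = int(fs * seconds)
    return [x[i:i + n] for i in range(0, len(x) - n + 1, n)]

# Synthetic 60-s camera signal at 30 fps: 1.2 Hz pulse plus slow drift.
fs_cam = 30.0
t = np.arange(1800) / fs_cam
raw = 0.5 * np.sin(2 * np.pi * 1.2 * t) + 0.01 * t + 100.0

clean = preprocess(raw, fs_in=fs_cam)
windows = split_windows(clean, fs=60.0)
```

With these settings, a 60-s recording yields six windows of 600 samples each, matching the windowing described in the text.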

2.3 Frequency domain

For the spectral analysis, Welch’s method was applied to every rPPG and cPPG window after preprocessing. The highest peak in the frequency domain was then taken as the estimated HR. Other methods, such as autocorrelation, were also tested, but the resulting |ΔBPM| values differed very little. We found this metric useful because it evaluates the signals in the frequency domain and shows how well the rPPG signal from each channel can predict the HR.

2.4 Evaluation of the signals

Four metrics were used to evaluate the signals: dynamic time warping (DTW), Pearson’s r correlation coefficient, root-mean-square error (RMSE), and difference in heartbeats obtained from rPPG and cPPG (|ΔBPM|). For each video, the evaluation was done for every metric over 10-s windows. The resulting values were averaged to obtain the final results for each video. This enabled us to evaluate the results of every color channel from different perspectives.

2.4.1 DTW

DTW (Müller, 2007) is an algorithm that measures the similarity between two time series.

Given two time series A = [a1, a2, … , an] and B = [b1, b2, … , bm], where n and m are the lengths of the time series, the DTW distance between A and B is defined as:

$$\mathrm{DTW}(A, B) = \min_{K} \sum_{k=1}^{|K|} d(i_k, j_k)$$

where K is a warp path that maps the indices ik of time series A to indices jk of time series B, |K| is the length of the path, and d (i, j) is a local distance function that measures the dissimilarity between data points ai and bj.

It is particularly useful when the time series have different speeds and lengths. This applies to this case, given that, sometimes, the rPPG and its ground truth are not aligned, so other metrics that match timestamps are less suitable. The Python package DTAIDistance (Meert et al., 2020) was used to implement the metric.
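To make the warping idea concrete, here is a small dynamic-programming sketch in plain Python. It is not the DTAIDistance implementation used in the study: the function name `dtw_distance` is hypothetical, and the local distance is assumed to be the absolute difference between samples, since the text does not state which local distance was used.

```python
import math

def dtw_distance(a, b):
    """Minimal DTW: cost of the cheapest warp path between sequences a and b.

    Local distance d(i, j) = |a_i - b_j| (an assumption for this sketch).
    """
    n, m = len(a), len(b)
    # cost[i][j] = minimal accumulated cost aligning a[:i] with b[:j]
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(a[i - 1] - b[j - 1])
            cost[i][j] = d + min(cost[i - 1][j],      # repeat b[j-1]
                                 cost[i][j - 1],      # repeat a[i-1]
                                 cost[i - 1][j - 1])  # advance both
    return cost[n][m]

# Same shape, but the second series starts one sample late and is longer.
pulse = [0.0, 1.0, 2.0, 1.0, 0.0]
delayed = [0.0, 0.0, 1.0, 2.0, 1.0, 0.0]
shifted_level = [1.0, 1.0]

same_shape = dtw_distance(pulse, delayed)
offset = dtw_distance([0.0, 0.0], shifted_level)
```

The delayed copy aligns at zero cost even though the two series differ in length, which a point-by-point metric could not even evaluate; a constant amplitude offset, by contrast, is not removed by warping.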

2.4.2 Pearson’s correlation coefficient (r)

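The r coefficient measures the strength of the linear association between rPPG and cPPG using the following equation:

$$r = \frac{\sum_{i=1}^{N} (x_i - \bar{x})(y_i - \bar{y})}{\sqrt{\sum_{i=1}^{N} (x_i - \bar{x})^2}\,\sqrt{\sum_{i=1}^{N} (y_i - \bar{y})^2}}$$

where xi and yi are points at lag i of the rPPG and cPPG signals, respectively, $\bar{x}$ and $\bar{y}$ are the corresponding means, and N is the number of points.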

2.4.3 RMSE

RMSE is the standard deviation of the prediction error (i.e., residuals between the ground truth values and the extracted rPPG signals). It is expressed as follows:

$$\mathrm{RMSE} = \sqrt{\frac{1}{N} \sum_{i=1}^{N} (x_i - y_i)^2}$$

where N is the number of points and xi, yi are the points at lag i of the rPPG and contact PPG signals, respectively.
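The two point-wise metrics can be written in a few lines of plain Python. The function names `pearson_r` and `rmse` and the toy signals are illustrative only; the example is meant to show how the two metrics react differently to a pure amplitude difference.

```python
import math

def pearson_r(x, y):
    """Pearson correlation between two equally long sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sx = math.sqrt(sum((xi - mx) ** 2 for xi in x))
    sy = math.sqrt(sum((yi - my) ** 2 for yi in y))
    return cov / (sx * sy)

def rmse(x, y):
    """Root-mean-square error between two equally long sequences."""
    return math.sqrt(sum((xi - yi) ** 2 for xi, yi in zip(x, y)) / len(x))

# Toy window: the "rPPG" has the same shape as the "cPPG" but half the amplitude.
rppg = [0.0, 1.0, 0.0, -1.0]
cppg = [0.0, 2.0, 0.0, -2.0]

r_value = pearson_r(rppg, cppg)
rmse_value = rmse(rppg, cppg)
```

Here r is essentially 1 because the shapes match, while the RMSE is non-zero because the amplitudes differ. This is one reason both signals are normalized before the comparison.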

2.4.4 |ΔBPM|

Using Welch’s method, the HR was calculated with the power spectral density as the highest peak of the signal in the frequency domain. The range was restricted from 39 BPM to 240 BPM. To find the |ΔBPM|, the absolute difference in BPM from rPPG and the reference HR was calculated for every window, and then averaged.
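A possible way to compute this metric with `scipy.signal.welch` is sketched below. The Welch parameters are assumptions, because the text does not report them: each 10-s window is treated as a single segment and zero-padded to at least 4096 FFT points for finer frequency resolution. In this sketch the reference HR is estimated from the cPPG window in the same way; a ground truth BPM supplied by the dataset could be used instead.

```python
import numpy as np
from scipy import signal

def estimate_bpm(x, fs, bpm_range=(39.0, 240.0)):
    """Heart rate as the highest PSD peak inside the allowed BPM range."""
    nfft = max(4096, len(x))                  # zero-padding (assumed setting)
    freqs, psd = signal.welch(x, fs=fs, nperseg=len(x), nfft=nfft)
    bpm = freqs * 60.0
    in_range = (bpm >= bpm_range[0]) & (bpm <= bpm_range[1])
    return float(bpm[in_range][np.argmax(psd[in_range])])

def mean_abs_delta_bpm(rppg_windows, cppg_windows, fs):
    """Average |delta BPM| over paired windows."""
    deltas = [abs(estimate_bpm(r, fs) - estimate_bpm(c, fs))
              for r, c in zip(rppg_windows, cppg_windows)]
    return float(np.mean(deltas))

# Synthetic 10-s windows at 60 Hz: reference at 72 BPM, estimate at 90 BPM.
fs = 60.0
t = np.arange(600) / fs
cppg_window = np.sin(2 * np.pi * 1.2 * t)
rppg_window = np.sin(2 * np.pi * 1.5 * t)

reference_bpm = estimate_bpm(cppg_window, fs)
delta = mean_abs_delta_bpm([rppg_window], [cppg_window], fs)
```

The 39–240 BPM limits correspond to the 0.65–4 Hz passband of the bandpass filter.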

3 Statistical tests

Statistical tests were conducted to compare the RGB channels. Non-parametric tests were used because they make fewer assumptions about the data than parametric tests and because, in some cases, the sample size is small, e.g., when comparing activities within a particular dataset with few subjects.

3.1 Friedman test

The Friedman test is suitable for this study because it compares three or more related groups using ranks, without assuming normality. The following hypotheses were tested:

• Null hypothesis (H0): the medians of the groups are equal.

• Alternative hypothesis (H1): the median of at least one group is different.

The groups are represented by the three channels red, green, and blue. When RGB signals are compared among datasets or activities, the blocks are represented by the subjects. Within each block, the ranks are calculated (the idea of ranks is based on the order of the values, i.e., greater or less than) (Friedman, 1937). Then, for each group j, the average rank over the n blocks is

$$R_j = \frac{1}{n} \sum_{i=1}^{n} r_{ij}$$

where $r_{ij}$ is the rank of group j within block i.

If the p-value is significant, the null hypothesis that the groups are equal can be rejected. The next step is to use a post hoc test to calculate the pairwise differences between the groups.
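As an illustration of the ranking step and the test itself, the sketch below uses `scipy.stats.rankdata` and `scipy.stats.friedmanchisquare`. The `scores` array is invented for the example (it is not data from the study): each row is a subject (block) and each column a channel, with higher values meaning better agreement with cPPG.

```python
import numpy as np
from scipy import stats

# Hypothetical r values: one row per subject, columns = red, green, blue.
scores = np.array([
    [0.10, 0.62, 0.35],
    [0.05, 0.55, 0.30],
    [0.20, 0.70, 0.41],
    [0.12, 0.48, 0.50],
    [0.08, 0.66, 0.28],
    [0.15, 0.59, 0.33],
])

# Rank within each block; negate so that rank 1 is the highest r.
ranks = stats.rankdata(-scores, axis=1)
avg_ranks = ranks.mean(axis=0)              # average rank per channel

# Friedman test across the three related groups.
stat, p_value = stats.friedmanchisquare(scores[:, 0], scores[:, 1], scores[:, 2])
reject_h0 = p_value < 0.05                  # 0.05 is an assumed threshold
```

In this toy example the green column has the lowest (best) average rank and the null hypothesis is rejected, so a post hoc pairwise comparison would be the next step.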

3.2 Nemenyi test

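The Nemenyi test was applied to find the difference in the average ranking values and then to compare the difference with a critical distance (CD). The CD is defined as:

$$\mathrm{CD} = q_{\alpha} \sqrt{\frac{k(k+1)}{6n}}$$

where k is the number of groups, n is the number of blocks, and qα is the critical value of the Studentized range statistic divided by $\sqrt{2}$.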

The general procedure is to apply the Friedman test to the groups (in our case, the RGB channels); if the p-value is significant, at least one group differs from the others. In that case, the Nemenyi test is performed to compare the channels pairwise, i.e., green versus red, green versus blue and red versus blue.
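The critical-distance comparison can be sketched in plain Python as follows. The average ranks and the number of blocks are hypothetical, and the critical values in `Q_ALPHA_005` are the tabulated values for α = 0.05 from Demšar (2006), already divided by √2. Note that this sketch only returns a significant or not significant decision per pair, whereas the study reports p-values.

```python
import math
from itertools import combinations

# Critical values q_alpha for alpha = 0.05, indexed by number of groups k
# (tabulated in Demsar, 2006).
Q_ALPHA_005 = {2: 1.960, 3: 2.343, 4: 2.569}

def critical_distance(k, n_blocks, q_alpha):
    """CD = q_alpha * sqrt(k (k + 1) / (6 n))."""
    return q_alpha * math.sqrt(k * (k + 1) / (6.0 * n_blocks))

def nemenyi_pairs(avg_ranks, n_blocks, q_alpha):
    """Map each pair of groups to True if their rank difference exceeds the CD."""
    cd = critical_distance(len(avg_ranks), n_blocks, q_alpha)
    return {(a, b): abs(avg_ranks[a] - avg_ranks[b]) > cd
            for a, b in combinations(avg_ranks, 2)}

# Hypothetical average ranks over 6 subjects (1 = best).
avg_ranks = {"red": 3.0, "green": 7.0 / 6.0, "blue": 11.0 / 6.0}
cd = critical_distance(len(avg_ranks), 6, Q_ALPHA_005[3])
significant = nemenyi_pairs(avg_ranks, 6, Q_ALPHA_005[3])
```

With only six blocks the critical distance is large, so in this toy case only the green versus red difference exceeds it. This illustrates why comparisons on small subsets, such as single activities within one dataset, may not reach significance.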

4 Results

The main goal of the experiments was to show the differences between RGB channels in diverse settings. This was done by comparing the DTW, r, RMSE, and |ΔBPM| metrics. The first experiment was designed to evaluate the RGB signals across datasets. This makes it possible to assess whether the difference between the red, green, and blue channels is significant, depending on the dataset. For every dataset, there is a variation in the source of the illumination and the devices employed, including the camera and pulse oximeter. For the second experiment, the focal point was the activities conducted by the subjects. We analyzed the importance of the subjects’ activity and its impact on the performance of the rPPG signal. This makes it possible to differentiate the factors that can negatively alter the signal.

4.1 Analysis across datasets

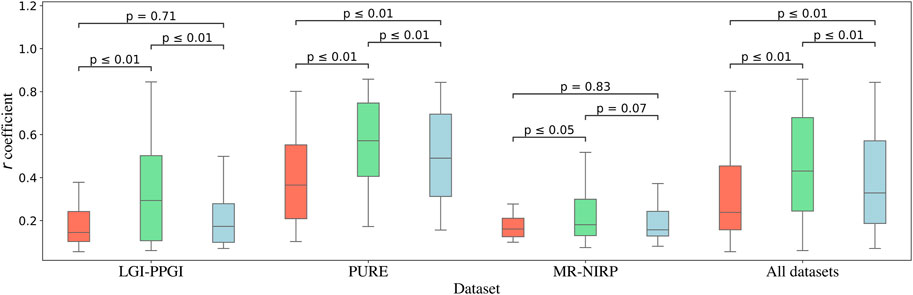

Figure 2 shows the boxplots for every channel according to its r coefficient. As shown, for all the datasets, the green channel performs the best, followed by the blue and red channels, with p-values

FIGURE 2. Similarity of each channel with the contact PPG, measured with the r coefficient. The results are shown for the datasets LGI-PPGI, PURE, and MR-NIRP. The p-value of every combination of channels, obtained with the Friedman test followed by the post hoc Nemenyi test, is represented by the bars above the boxplots.

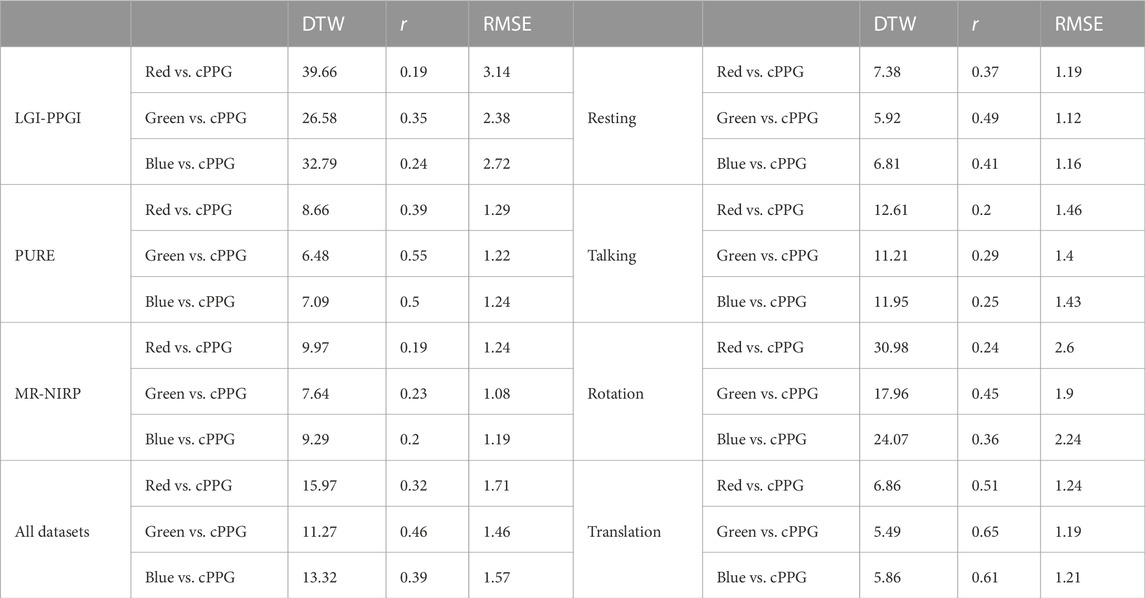

TABLE 1. Comparison of each color channel and the contact PPG (cPPG) across different activities and datasets. Results are averaged over subjects and time windows. The metrics DTW, r, and RMSE are shown. DTW = dynamic time warping, r = Pearson’s correlation coefficient, and RMSE = root-mean-square error.

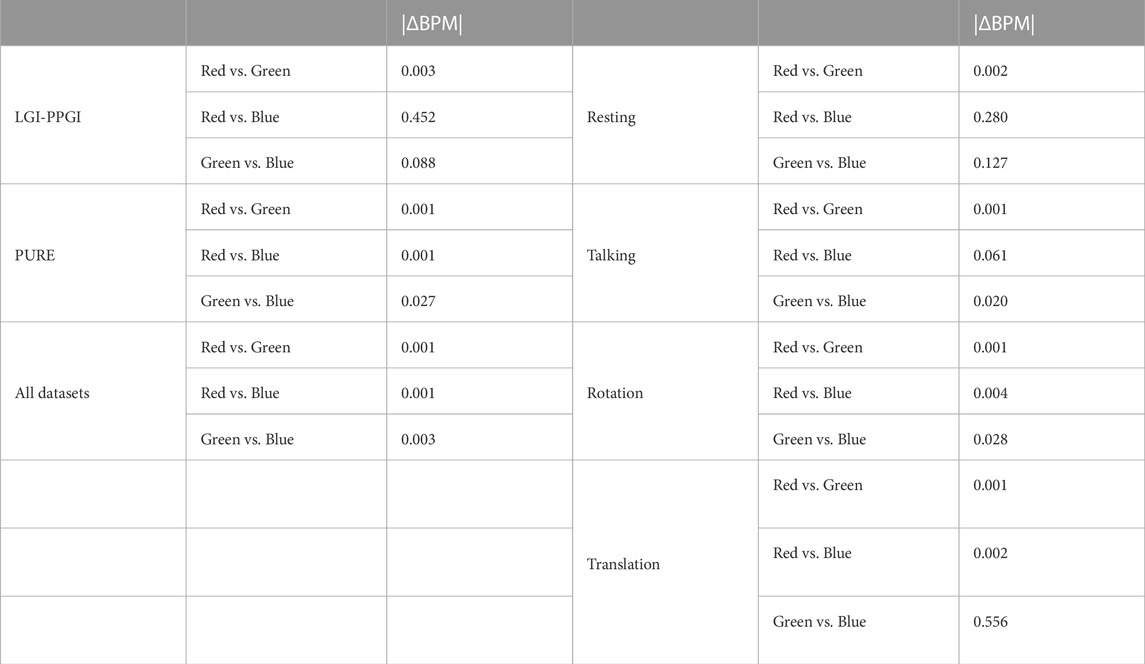

TABLE 2. Comparison of the RGB channels regarding similarity to cPPG, with the purpose of finding significant differences between each channel. The p-values are shown for DTW, r, and RMSE for both experiments, obtained with the Friedman test followed by the post hoc Nemenyi test. The results for the datasets are shown on the left; the results for the participants’ activities are shown on the right.

4.2 Analysis across activities

The second experiment was designed to determine the differences between the RGB and cPPG signals based on the activity the subjects performed. This is also a useful way to detect whether RGB signals become unreliable when the subjects engage in different activities. The activities were divided into Resting, Talking, Rotation, and Translation. Rotation comprises the Rotation activity from LGI-PPGI and the Small Rotation and Medium Rotation activities from the PURE dataset. Talking includes the Talking activity from the LGI-PPGI and PURE datasets and Motion from the MR-NIRP dataset (during the Motion activity, the subjects talk). Lastly, Translation includes the Slow Translation and Fast Translation activities from the PURE dataset.

The performance of the RGB colors is similar to the previous case, that is, the green channel is the best-performing signal, followed by the blue and red channels. Having said that, green always shows significantly better results than red, but this is not always the case with the green channel versus the blue channel. For the Rotation and Translation activities, the green signal outperformed the blue signal in terms of the r coefficient; however, in the other cases, the difference was not significant. The results for every metric and the p-values are listed in Tables 1 and 2. As shown, the results are consistent in terms of the RGB analysis of both the datasets and the activities.

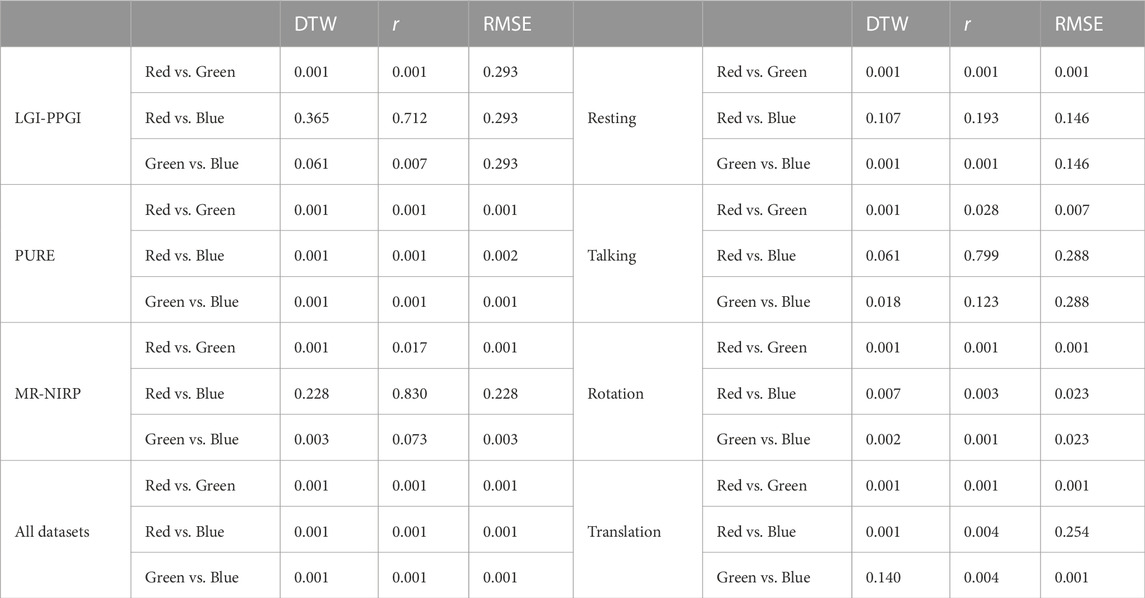

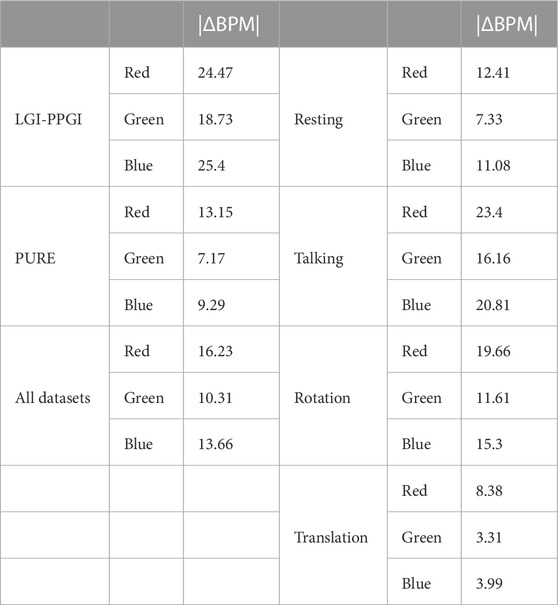

4.3 HR and frequency domain

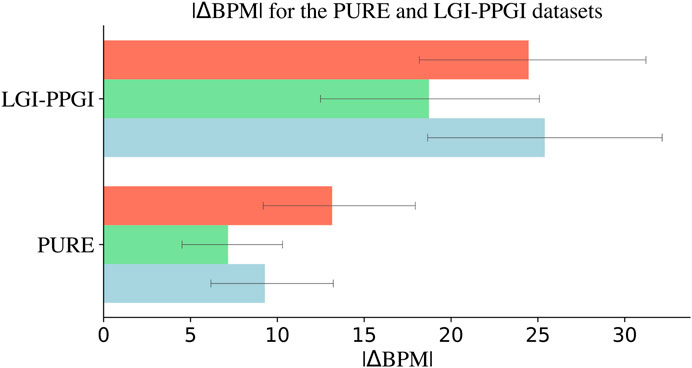

Welch’s method was used to quantify how each signal performs when predicting HR. A comparison of the HR for LGI-PPGI and PURE is shown in Figure 3. The estimation was done with the LGI-PPGI and PURE datasets because they were the only datasets that included the ground truth BPM. Results on PURE are better than on LGI-PPGI not only in terms of r, RMSE, and DTW, but also in HR estimation. The results are shown in Table 3; the p-values are shown in Table 4. The green channel again outperformed the other channels, following the same pattern as the results for the morphology of the signals in the time domain. However, the red channel signal estimates the HR better than the blue channel signal in the LGI-PPGI dataset. It is important to note that the |ΔBPM| varies greatly between the PURE and LGI-PPGI datasets.

FIGURE 3. Heart rate estimation from each RGB channel with Welch’s method using the PURE and LGI-PPGI datasets, composed of different videos of 10 and 6 subjects, respectively, performing a wide range of activities. The blue signal shows better results than the red signal in PURE, but not in LGI-PPGI, where the blue signal, on average, is outperformed by the red signal. The green signal is consistently the optimal signal. Note that |ΔBPM| = absolute difference in heartbeats obtained from rPPG and contact PPG.

TABLE 3. Comparison of the heart rate estimated from each RGB channel and the ground truth heart rate. Results are shown for the |ΔBPM| metric for the comparison across activities and datasets. In both experiments and datasets, the green channel yields the most favorable outcomes. However, its |ΔBPM| ranges from 3.31 in Translation to 16.16 in Talking, a large variation. Note that |ΔBPM| = absolute difference in heartbeats obtained from rPPG and contact PPG.

TABLE 4. Comparison of the channels when estimating the heart rate against the ground truth to find significant differences between each channel. The p-values for |ΔBPM| are shown for both experiments, obtained with the Friedman test followed by the post hoc Nemenyi test. The results for the datasets are shown on the left; the results for the activities are shown on the right. Note that |ΔBPM| = absolute difference in heartbeats obtained from rPPG and contact PPG.

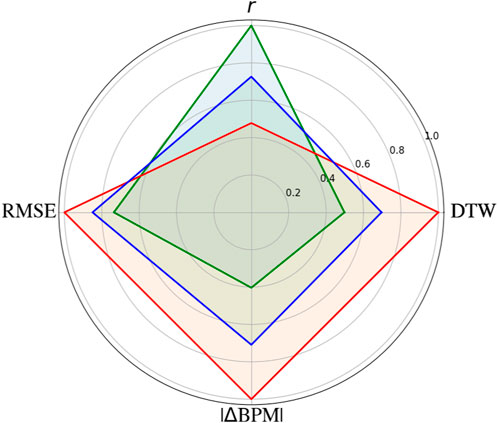

To conduct an overall comparison of the RGB channels, all the metrics were visualized. The results are shown in Figure 4. Note that all the metrics are normalized to one. Overall, the green channel is best in terms of r, DTW, RMSE, and |ΔBPM|. In every case, the blue channel was ranked second and the red channel was ranked third.

FIGURE 4. Overall performance for the red, green, and blue channels. The r, dynamic time warping (DTW), and root-mean-square error (RMSE) metrics were used. The results are shown for an average over subjects and time windows for each metric. The green channel consistently shows the best performance, followed by the blue and red channels. All the metrics are normalized to one.

5 Discussion

We assessed the performance of the RGB channels as rPPG signals for different datasets and activities, and we evaluated them using four metrics to rank the channels. First, the morphology of the signals was compared for r, RMSE, and DTW. For the comparison with datasets, the green channel showed better overall results than the blue and red channels. However, depending on the metric, some of the results are not statistically significant. This is the case of the red channel versus the blue channel for the MR-NIRP and LGI-PPGI datasets for all the metrics. Nonetheless, the PURE dataset results for every metric and channel were significant, with all of the p-values

Regarding the spectral analysis, the results confirmed what had been previously shown in terms of the ranking of the channels. The |ΔBPM| was greater for the red signal, followed by the blue and green signals. Again, the results were better for the PURE dataset than for the LGI-PPGI dataset. This is partly due to the better morphology of the signals in the PURE dataset compared to the LGI-PPGI dataset. In some cases, the RGB signals are not reliable, especially when the settings in which the videos are recorded are not appropriate and the subject moves. For example, when the subject engages in fast rotation or is exercising on a bicycle in a gym, it can negatively affect the quality of the signal. Nevertheless, some algorithms, such as CHROM, perform well when abrupt changes in amplitude and shape occur in the signal due to different subject movements.

While other studies have confirmed these results, to date, there has been no exhaustive study of which channel is best across different datasets, activities, and metrics. Most researchers have used the green channel based on the results obtained by a research group in 2008 (Verkruysse et al., 2008). Still, that study aimed to demonstrate rPPG with ambient light, and the results were shown only for one participant at a time. This indicates that the green channel is a proper candidate, but no exhaustive scientific study has confirmed it. One recent study (Sinhal et al., 2021) pointed out the difference between RGB signals and other algorithms, but only for one dataset, and it used fewer metrics. It is important to include several datasets because, as we have seen, the dataset has a significant impact on the results. Moreover, that study did not analyze how the subjects’ movements could affect the assessment. Another study (Lee et al., 2013) focused on the differences in RGB in relation to movement. That study had 12 subjects, and the movements were divided into horizontal and vertical; our study included a wider range of movements and more subjects. Lee et al. confirmed the results for HR but did not find statistically significant differences between green and blue for the SNR (Lee et al., 2013).

We have shown that to obtain high-quality rPPG signals using only the RGB channels, the settings (such as the camera or illumination) in which the subjects’ activities are recorded are important because the inter-dataset variation is mostly due to those settings. Moreover, the cPPG signals of some datasets are obtained with unreliable devices, such as wristbands, instead of a pulse oximeter. Therefore, the rPPG from the RGB is not robust. Furthermore, although the RGB ranking results were the same, the values obtained for every metric showed differences between the activities. For the green channel, the |ΔBPM| obtained in the Resting activity was 7.73; it was 16.6 for the Talking activity. It is important to note that Resting has a higher |ΔBPM| than Translation because the latter only includes data from PURE, which is the best performing dataset. Overall, this leads to the conclusion that RGB signals should be recorded in situations where the subject is not moving and, more importantly, when the cameras and illumination conditions are adequate. While many modern algorithms overcome most of this reliability problem, their results improve when the input RGB signals are less noisy and of higher quality.

6 Conclusion

In conclusion, the green channel signal exhibited the most favorable outcomes in all metrics assessed, followed by the blue and red channels. This conclusion was verified not only for signal morphology but also for HR estimation in the frequency domain. However, when analyzing the signal morphology, the RGB performance varied depending on the dataset and activity, with the choice of dataset having a significant impact on the rPPG for all metrics. The PURE dataset performed better than the LGI-PPGI and MR-NIRP datasets in terms of all metrics. Furthermore, the green channel provided the most accurate HR estimation in the frequency domain, with the blue and red channels following closely behind. Notably, for different activities, the |ΔBPM| exhibited a substantial change, ranging from 3.31 BPM for the green channel in the Translation activity to 33.9 BPM for the red channel in the Rotation activity. This implies that RGB signals are not resilient to diverse datasets and activities, which is an important consideration when utilizing them in clinical applications.

Data availability statement

Publicly available datasets were analyzed in this study. This data can be found here: The PURE dataset is available at https://www.tu-ilmenau.de/universitaet/fakultaeten/fakultaet-informatik-und-automatisierung/profil/institute-und-fachgebiete/institut-fuer-technische-informatik-und-ingenieurinformatik/fachgebiet-neuroinformatik-und-kognitive-robotik/data-sets-code/pulse-rate-detection-dataset-pure. The LGI-PPGI dataset is available at https://github.com/partofthestars/LGI-PPGI-DB. The MR-NIRP dataset is available at https://computationalimaging.rice.edu/mr-nirp-dataset/. The framework pyVHR can be downloaded from https://github.com/phuselab/pyVHR.

Author contributions

RO: Data curation, Formal Analysis, Visualization, Writing–original draft. ME: Conceptualization, Investigation, Methodology, Supervision, Project administration, Visualization, Writing–original draft, Writing–review and editing. GM: Data curation, Writing–original draft. CM: Funding acquisition, Project administration, Resources, Software, Supervision, Writing–review and editing.

Funding

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. The open access fees were funded by ETH Zurich.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The author(s) declared that they were an editorial board member of Frontiers, at the time of submission. This had no impact on the peer review process and the final decision.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Ayesha A. H., Qiao D., Zulkernine F. (2021). “Heart rate monitoring using PPG with smartphone camera,” in 2021 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Houston, TX, USA, 09-12 December 2021, 2985–2991.

Bhattacharjee A., Yusuf M. S. U. (2021). “A facial video based framework to estimate physiological parameters using remote photoplethysmography,” in 2021 International Conference on Advances in Electrical, Computing, Communication and Sustainable Technologies (ICAECT), Bhilai, India, 19-20 February 2021, 1–7.

Boccignone G., Conte D., Cuculo V., D'Amelio A., Grossi G., Lanzarotti R., et al. (2022). pyvhr: a python framework for remote photoplethysmography. PeerJ Comput. Sci. 8, e929. doi:10.7717/peerj-cs.929

Charlton P. H., Allen J., Bailón R., Baker S., Behar J. A., Chen F., et al. (2023). The 2023 wearable photoplethysmography roadmap. Physiol. Meas. 44, 111001. doi:10.1088/1361-6579/acead2

De Haan G., Jeanne V. (2013). Robust pulse rate from chrominance-based rPPG. IEEE Trans. Biomed. Eng. 60, 2878–2886. doi:10.1109/TBME.2013.2266196

Demšar J. (2006). Statistical comparisons of classifiers over multiple data sets. J. Mach. Learn. Res. 7, 1–30.

Elgendi M. (2012). On the analysis of fingertip photoplethysmogram signals. Curr. Cardiol. Rev. 8, 14–25. doi:10.2174/157340312801215782

Elgendi M., Fletcher R., Liang Y., Howard N., Lovell N. H., Abbott D., et al. (2019). The use of photoplethysmography for assessing hypertension. NPJ Digit. Med. 2, 60. doi:10.1038/s41746-019-0136-7

Frey L., Menon C., Elgendi M. (2022). Blood pressure measurement using only a smartphone. npj Digit. Med. 5, 86. doi:10.1038/s41746-022-00629-2

Friedman M. (1937). The use of ranks to avoid the assumption of normality implicit in the analysis of variance. J. Am. Stat. Assoc. 32, 675–701. doi:10.1080/01621459.1937.10503522

Haugg F., Elgendi M., Menon C. (2022). Effectiveness of remote PPG construction methods: a preliminary analysis. Bioengineering 9, 485. doi:10.3390/bioengineering9100485

Haugg F., Elgendi M., Menon C. (2023). Grgb rppg: an efficient low-complexity remote photoplethysmography-based algorithm for heart rate estimation. Bioengineering 10, 243. doi:10.3390/bioengineering10020243

Kim D. Y., Lee K., Sohn C.-B. (2021). Assessment of roi selection for facial video-based rPPG. Sensors 21, 7923. doi:10.3390/s21237923

Kwon S., Kim J., Lee D., Park K. (2015). “Roi analysis for remote photoplethysmography on facial video,” in 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy, 25-29 August 2015 (IEEE), 4938–4941.

Lee J., Matsumura K., Yamakoshi K. I., Rolfe P., Tanaka S., Yamakoshi T. (2013). “Comparison between red, green and blue light reflection photoplethysmography for heart rate monitoring during motion,” in 2013 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Osaka, Japan, 03-07 July 2013, 1724–1727.

Lewandowska M., Rumiński J., Kocejko T., Nowak J. (2011). “Measuring pulse rate with a webcam—a non-contact method for evaluating cardiac activity,” in 2011 Federated Conference on Computer Science and Information Systems (FedCSIS), Szczecin, Poland, 18-21 September 2011, 405–410.

Lugaresi C., Tang J., Nash H., McClanahan C., Uboweja E., Hays M., et al. (2019). Mediapipe: a framework for building perception pipelines. arXiv preprint arXiv:1906.08172.

Lyzwinski L. N., Elgendi M., Menon C. (2023). The use of photoplethysmography in the assessment of mental health: scoping review. JMIR Ment. Health 10, e40163. doi:10.2196/40163

Magdalena Nowara E., Marks T. K., Mansour H., Veeraraghavan A. (2018). “Sparseppg: towards driver monitoring using camera-based vital signs estimation in near-infrared,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18-22 June 2018, 1272–1281.

Mashhadi M. B., Asadi E., Eskandari M., Kiani S., Marvasti F. (2015). Heart rate tracking using wrist-type photoplethysmographic (PPG) signals during physical exercise with simultaneous accelerometry. IEEE Signal Process. Lett. 23, 227–231. doi:10.1109/LSP.2015.2509868

Mayampurath A., Volchenboum S. L., Sanchez-Pinto L. N. (2018). Using photoplethysmography data to estimate heart rate variability and its association with organ dysfunction in pediatric oncology patients. npj Digit. Med. 1, 29. doi:10.1038/s41746-018-0038-0

Meert W., Hendrickx K., Craenendonck T. V. (2020). wannesm/dtaidistance v2.0.0. doi:10.5281/zenodo.3981067

Müller M. (2007). “Dynamic time warping,” in Information retrieval for music and motion (Berlin, Heidelberg: Springer), 69–84.

Pereira T., Tran N., Gadhoumi K., Pelter M. M., Do D. H., Lee R. J., et al. (2020). Photoplethysmography based atrial fibrillation detection: a review. npj Digit. Med. 3, 3. doi:10.1038/s41746-019-0207-9

Pilz C. S., Zaunseder S., Krajewski J., Blazek V. (2018). “Local group invariance for heart rate estimation from face videos in the wild,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18-22 June 2018, 1254–1262.

Schrumpf F., Frenzel P., Aust C., Osterhoff G., Fuchs M. (2021). “Assessment of deep learning based blood pressure prediction from PPG and rPPG signals,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19-25 June 2021, 3820–3830.

Sinhal R., Singh K. R., Gupta K. (2021). “Color intensity: a study of RPPG algorithm for heart rate estimation,” in 2021 International Conference on Computational Performance Evaluation (ComPE), Shillong, India, 01-03 December 2021, 580–584.

Stricker R., Müller S., Gross H.-M. (2014). “Non-contact video-based pulse rate measurement on a mobile service robot,” in The 23rd IEEE International Symposium on Robot and Human Interactive Communication, Edinburgh, UK, 25-29 August 2014, 1056–1062.

van Es V. A., Lopata R. G., Scilingo E. P., Nardelli M. (2023). Contactless cardiovascular assessment by imaging photoplethysmography: a comparison with wearable monitoring. Sensors 23, 1505. doi:10.3390/s23031505

Verkruysse W., Svaasand L. O., Nelson J. S. (2008). Remote plethysmographic imaging using ambient light. Opt. express 16, 21434–21445. doi:10.1364/oe.16.021434

Wang W., Den Brinker A. C., Stuijk S., De Haan G. (2016). Algorithmic principles of remote PPG. IEEE Trans. Biomed. Eng. 64, 1479–1491. doi:10.1109/TBME.2016.2609282

Yu Z., Peng W., Li X., Hong X., Zhao G. (2019). “Remote heart rate measurement from highly compressed facial videos: an end-to-end deep learning solution with video enhancement,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea (South), 27 October 2019 - 02 November 2019, 151–160.

Keywords: rPPG, pulse oximetry, blood flow, volumetric changes, digital health, mobile health, non-contact assessment, remote monitoring

Citation: Ontiveros RC, Elgendi M, Missale G and Menon C (2023) Evaluating RGB channels in remote photoplethysmography: a comparative study with contact-based PPG. Front. Physiol. 14:1296277. doi: 10.3389/fphys.2023.1296277

Received: 29 September 2023; Accepted: 28 November 2023;

Published: 22 December 2023.

Edited by:

Takemi Matsui, Tokyo Metropolitan University, Japan

Reviewed by:

Guanghao Sun, The University of Electro-Communications, Japan

Jan Kubicek, VSB-Technical University of Ostrava, Czechia

Copyright © 2023 Ontiveros, Elgendi, Missale and Menon. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Mohamed Elgendi, moe.elgendi@hest.ethz.ch; Carlo Menon, carlo.menon@hest.ethz.ch

†These authors have contributed equally to this work

Rodrigo Castellano Ontiveros1,2†

Mohamed Elgendi

Carlo Menon