- ¹Department of Radiation Oncology, Shandong Cancer Hospital and Institute, Shandong First Medical University and Shandong Academy of Medical Sciences, Jinan, China

- ²Department of Radiation Oncology, Qingdao Central Hospital, Qingdao, Shandong, China

- ³Hunan Cancer Hospital, Xiangya School of Medicine, Central South University, Changsha, Hunan, China

- ⁴Manteia Technologies Co., Ltd, Xiamen, Fujian, China

Purpose/Objective(s): The aim of this study was to improve the accuracy of the clinical target volume (CTV) and organs at risk (OARs) segmentation for rectal cancer preoperative radiotherapy.

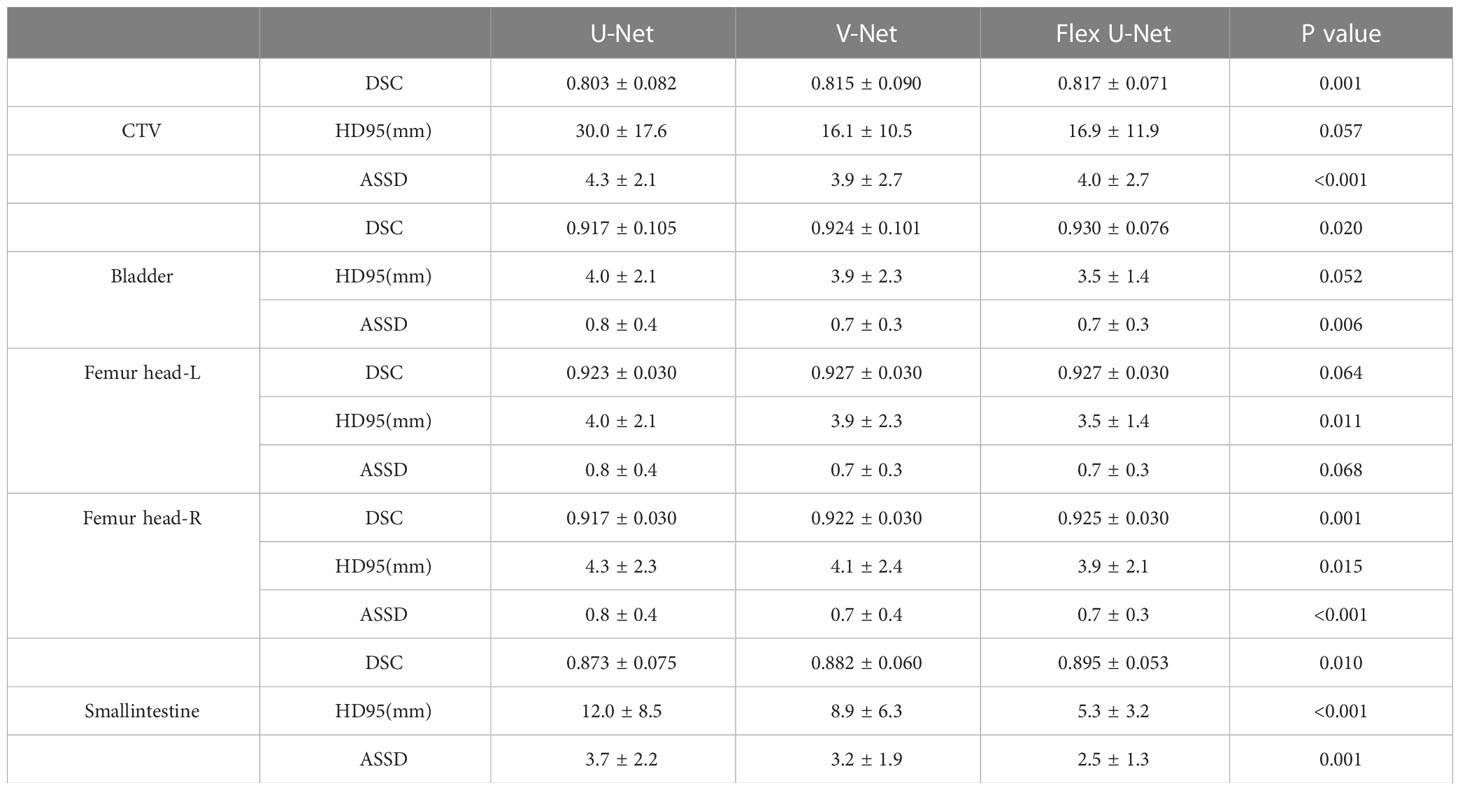

Materials/Methods: Computed tomography (CT) scans from 265 rectal cancer patients treated at our institution were collected to train and validate automatic contouring models. The CTV and OARs were delineated by experienced radiation oncologists as the ground truth. We improved the conventional U-Net and proposed Flex U-Net, which uses a register model to correct the noise caused by manual annotation and thereby refines the performance of the automatic segmentation model. We then compared its performance with that of U-Net and V-Net. The Dice similarity coefficient (DSC), Hausdorff distance (HD), and average symmetric surface distance (ASSD) were calculated for quantitative evaluation. A Wilcoxon signed-rank test showed that the differences between our method and the baseline were statistically significant (P < 0.05).

Results: Our proposed framework achieved DSC values of 0.817 ± 0.071, 0.930 ± 0.076, 0.927 ± 0.03, and 0.925 ± 0.03 for the CTV, bladder, left femoral head and right femoral head, respectively, compared with 0.803 ± 0.082, 0.917 ± 0.105, 0.923 ± 0.03 and 0.917 ± 0.03 for the baseline.

Conclusion: The proposed Flex U-Net achieves satisfactory CTV and OAR segmentation for rectal cancer and outperforms conventional methods. It provides an automatic, fast and consistent solution for CTV and OAR segmentation and has the potential to be widely applied to radiation therapy planning for a variety of cancers.

Introduction

Rectal cancer is currently one of the deadliest malignancies, ranking third in incidence and fourth in mortality among malignant tumors (1). Concurrent chemoradiotherapy (CCRT) followed by surgical resection is typically considered the standard treatment for reducing local recurrence in locally advanced rectal cancer (2). Intensity-modulated radiation therapy (IMRT) and volumetric modulated arc therapy (VMAT) have become state-of-the-art techniques in current radiotherapy practice because they deliver highly conformal target doses while sparing critical structures (3, 4). However, such precise treatments require the clinical target volume (CTV) and organs at risk (OARs) to be delineated accurately, as defined by the International Commission on Radiation Units and Measurements (ICRU) and its subsequently revised guidelines.

Manually contouring tumor targets and OARs slice by slice on planning CT scans is an essential yet time-consuming step in radiation oncology (5, 6). The task is performed by radiation oncologists and requires considerable knowledge, experience and time to achieve clinically acceptable quality. Moreover, the heavy image-reading workload places a serious burden on clinicians, and the final contours may be affected by inter- and intraobserver variability (7). Notably, multiple studies have shown poor contouring consensus among oncologists, which hinders systematic quality assessment of radiation therapy plans and is considered a major source of uncertainty (8, 9). Therefore, there is an urgent clinical need for an algorithm that can segment target volumes and OARs accurately and automatically.

Over the last few decades, atlas-based autosegmentation (ABAS) has been one of the most widespread and successful image segmentation techniques in oncology (10). However, ABAS faces two main challenges. First, it is difficult to establish a universal atlas for every organ because organ shapes and sizes vary between individuals. Second, the deformable registration process is time-consuming: the target image often must be aligned with multiple atlases, so registration is repeated several times. More recently, various semi-automatic and automatic CTV delineation methods based on hand-crafted features and machine learning have been developed and validated (11). However, because requirements may conflict between images, it is difficult to define a robust, direct and objective cost function for graph-based methods of this kind.

Recently, emerging deep learning (DL) technologies have achieved considerable advances in medical image segmentation, with U-Net being the most popular algorithm. The widely used U-Net offers accurate segmentation owing to its U-shaped structure, which combines fast training, effective use of context information and a modest data requirement (12). Nevertheless, the challenges currently facing medical image segmentation continue to drive improvements to U-Net-based approaches. Most importantly, label noise reduces the effectiveness of U-Net in supervised learning and thus degrades the resulting model performance.

In this work, we propose a new convolutional neural network, Flex U-Net, to automatically segment CTVs and OARs on planning CT scans for rectal cancer. Built on the popular U-Net architecture but unlike conventional segmentation models, the proposed method uses a register model to correct the noise caused by manual annotation, which is a potential source of error in radiation therapy. Decoupling the label noise from the ground truth through the register network provides a cleaner training target for the segmentation model. Experimental results show that Flex U-Net outperforms traditional methods and can thus provide an automatic, fast and consistent solution for radiation therapy planning.

Materials and methods

Data acquisition

A total of 265 patients with locally advanced rectal cancer who received preoperative CCRT followed by surgical resection in our department between January 2016 and January 2021 were retrospectively enrolled in this study. Contrast-enhanced simulation CT data were acquired on a Brilliance CT Big Bore system (Philips Healthcare, Best, the Netherlands). The CT images were reconstructed with a matrix size of 512 × 512 and a slice thickness of 3.0 mm. Magnetic resonance images were acquired for some patients to assist with target determination. The CTV and OARs were manually drawn on each slice of the planning CT scans using the Eclipse TPS (Varian Medical Systems, Palo Alto, CA, USA) or the Pinnacle TPS (Philips Radiation Oncology Systems, Fitchburg, WI, USA), following the international consensus guidelines (13). For each patient, the CTV and OAR contours were first drawn by a radiation oncologist and then reviewed, edited and approved by another radiation oncologist with more than 10 years of experience.

Image preprocessing

The performance of a deep learning model can be strongly influenced by intensity variations within the dataset. For instance, different CT scanners may use different reconstruction protocols, slice thicknesses and voxel spacings for specific clinical considerations. Therefore, intensity normalization and voxel spacing-based resampling were applied to the raw data. After normalization, each patient's image intensities were standardized to N(0, 1), i.e., zero mean and unit variance. The median spacing along each axis across all images was selected as the target spacing. All preprocessing was performed before training.
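The preprocessing described above can be sketched in plain Python as follows. This is a minimal illustration, not the authors' pipeline: it assumes per-patient z-score normalization (using the population standard deviation) and computes the per-axis median spacing and the resulting resampled grid size. The function names and the (z, y, x) spacing order are hypothetical, and the actual interpolation of voxel values is omitted.

```python
import statistics


def zscore_normalize(intensities):
    """Standardize one patient's CT intensities to zero mean and unit variance."""
    mean = statistics.fmean(intensities)
    std = statistics.pstdev(intensities)
    if std == 0:
        # Constant image: return zeros instead of dividing by zero.
        return [0.0] * len(intensities)
    return [(v - mean) / std for v in intensities]


def median_target_spacing(spacings):
    """Per-axis median of the (z, y, x) voxel spacings of all images."""
    return tuple(statistics.median(axis) for axis in zip(*spacings))


def resampled_shape(shape, spacing, target_spacing):
    """Grid size after resampling an image from `spacing` to `target_spacing`."""
    return tuple(
        max(1, round(n * s / t)) for n, s, t in zip(shape, spacing, target_spacing)
    )
```

Using the median rather than the mean keeps the target spacing robust to a few scans with unusual slice thickness.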

Flex U-Net network architecture

The proposed Flex U-Net contains two networks: a segmentation network and a register network. As illustrated in Figure 1, each image was fed to the segmentation network to obtain a probability map. This map, with its gradient stopped, was then fed into the register network together with the image to obtain a deformation field. The deformation field was applied to the probability map to obtain a corrected map, which was finally used to calculate the segmentation loss against the ground truth.
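The data flow in Figure 1 can be illustrated with a one-dimensional toy sketch. Here `seg_net` and `reg_net` are hypothetical stand-ins for the two U-Nets, the deformation field is represented as a per-position displacement, and warping uses linear interpolation with border clamping (an assumption; the paper does not specify the interpolation scheme). Passing a copy of the probability map to `reg_net` only mimics the stop-gradient; in a PyTorch implementation this would typically be done with `tensor.detach()`.

```python
import math


def warp_linear_1d(prob_map, displacement):
    """Sample prob_map at positions i + displacement[i] using linear interpolation.

    Sample positions that fall outside the map are clamped to the border.
    """
    n = len(prob_map)
    warped = []
    for i, d in enumerate(displacement):
        x = min(max(i + d, 0.0), n - 1.0)  # clamp the sample position to the grid
        lo = math.floor(x)
        hi = min(lo + 1, n - 1)
        w = x - lo  # interpolation weight of the upper neighbour
        warped.append((1.0 - w) * prob_map[lo] + w * prob_map[hi])
    return warped


def flex_forward(image, seg_net, reg_net):
    """Toy Flex U-Net forward pass: segment, estimate a deformation, warp."""
    prob = seg_net(image)                   # segmentation probability map
    frozen = list(prob)                     # stands in for the gradient-stopped copy
    disp = reg_net(image, frozen)           # deformation field from image + map
    corrected = warp_linear_1d(prob, disp)  # corrected map used in the loss
    return prob, corrected
```

Only the warped (corrected) map enters the loss, so the segmentation network is free to predict the underlying edge while the register network absorbs systematic contour offsets.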

The theoretical motivation for Flex U-Net stems from the intra- and interobserver variability implicit in the labels. The subjective determination of labels during contouring inevitably introduces variance, so that the contour does not exactly fit the real target edge in the given image. Although the labels in the dataset contain variance, we assume that the overall dataset distribution is unbiased. Therefore, we decomposed the image segmentation problem into two parts, segmenting the real target edge and correcting the variance, which correspond to the segmentation network and the register network, respectively.

Loss function

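Suppose that we are given a training dataset $\mathcal{D}=\{(x_n,\tilde{y}_n)\}_{n=1}^{N}$, where $x_n$ and $\tilde{y}_n$ denote the scanned images and the manual labels, respectively. Each manual label $\tilde{y}_n$ is the real ground truth $y_n$ perturbed by an annotation variance $\epsilon$. Our goal is to train a segmentation network with parameters $\theta_S$ that predicts the real $y_n$ from the input image $x_n$, which requires a register network with parameters $\theta_R$ to correct $\tilde{y}_n$ to $y_n$. Given the segmentation probability map $\hat{y}_n = S(x_n;\theta_S)$ and the deformation field $\phi_n = R(x_n,\hat{y}_n;\theta_R)$, the main objective can be expressed as

$$\min_{\theta_S,\theta_R}\ \sum_{n=1}^{N}\mathcal{L}_{seg}\left(\hat{y}_n\circ\phi_n,\ \tilde{y}_n\right),$$

where $\hat{y}_n\circ\phi_n$ denotes the segmentation probability map warped by the deformation field. Specifically, $\mathcal{L}_{seg}$ is a combination of the cross-entropy and Dice losses:

$$\mathcal{L}_{seg} = \mathcal{L}_{CE} + a\,\mathcal{L}_{Dice},$$

where $a$ is 1.0 by default. To balance the deformation intensity of the register network, a smoothness regularization of the deformation field is introduced:

$$\mathcal{L}_{smooth} = \sum_{p\in\Omega}\left\|\nabla\phi_n(p)\right\|^2,$$

where $\Omega$ is the image domain. In summary, our final objective is

$$\mathcal{L} = \mathcal{L}_{seg} + \lambda\,\mathcal{L}_{smooth},$$

where $\lambda$ weights the regularization term.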
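A minimal one-dimensional sketch of the training objective follows, for a binary mask: cross-entropy plus `a`-weighted soft Dice on the warped probability map, plus a smoothness penalty built from squared finite differences of the displacement field. The soft Dice formulation, the epsilon values and the explicit weight `lam` on the smoothness term are assumptions for illustration; the paper does not report these details.

```python
import math


def cross_entropy(prob, label, eps=1e-7):
    """Mean binary cross-entropy between probabilities and a 0/1 label."""
    total = 0.0
    for p, y in zip(prob, label):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(prob)


def soft_dice_loss(prob, label, eps=1e-7):
    """1 - soft Dice coefficient computed on probabilities."""
    inter = sum(p * y for p, y in zip(prob, label))
    return 1.0 - (2.0 * inter + eps) / (sum(prob) + sum(label) + eps)


def smoothness(displacement):
    """Sum of squared forward differences: a 1-D analogue of sum ||grad phi||^2."""
    return sum((b - a) ** 2 for a, b in zip(displacement, displacement[1:]))


def flex_loss(warped_prob, label, displacement, lam, a=1.0):
    """Segmentation loss on the warped map plus weighted smoothness regularization."""
    l_seg = cross_entropy(warped_prob, label) + a * soft_dice_loss(warped_prob, label)
    return l_seg + lam * smoothness(displacement)
```

Without the smoothness term, the register network could warp the prediction arbitrarily to match any label; penalizing large displacement gradients restricts it to small, spatially coherent corrections.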

Training details

The deep learning framework used in this study was PyTorch 1.8 LTS. The segmentation and register networks shared the same structure, as shown in Figure 1. Batch normalization and the rectified linear unit (ReLU) activation function were applied after each convolutional layer except the last. The feature maps output by each encoder stage were passed to the decoder by concatenation. Data augmentations, including spatial transformation, Gaussian noise addition, Gaussian blurring and nonlinear intensity shifting, were applied sequentially. The adaptive moment estimation (Adam) optimizer was used with a learning rate of 0.0003 and a poly-decay schedule, for a total of 100 epochs of 200 iterations each with a batch size of 16.
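The poly-decay learning-rate schedule can be sketched as below. The exponent 0.9 is an assumption (a common choice in U-Net-based pipelines), not a value reported in the paper, and evaluating the rate once per epoch is likewise assumed; the base rate of 0.0003 and the 100 epochs come from the text.

```python
def poly_lr(epoch, base_lr=3e-4, max_epochs=100, power=0.9):
    """Poly-decay learning rate at the start of `epoch` (0-based).

    power=0.9 is an assumed exponent, not a value reported in the paper.
    """
    return base_lr * (1.0 - epoch / max_epochs) ** power
```

In PyTorch 1.8, the same schedule could be attached to the Adam optimizer with `torch.optim.lr_scheduler.LambdaLR(optimizer, lambda e: poly_lr(e) / 3e-4)`, since `LambdaLR` multiplies the initial rate by the returned factor. With 200 iterations per epoch and a batch size of 16, the 100 epochs amount to 20,000 iterations, or 320,000 sampled training examples.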

Quantitative evaluation

An independent test set of 27 patients was used to assess whether Flex U-Net correctly segmented the target areas. We computed several quantitative measures, namely the Dice similarity coefficient (DSC), the 95th-percentile Hausdorff distance (HD95) and the average symmetric surface distance (ASSD), to compare the segmentation results of the proposed method with those of U-Net and V-Net.

The DSC is defined as follows:

$$\mathrm{DSC}(A,B)=\frac{2\,|A\cap B|}{|A|+|B|} \tag{1}$$

where A represents the ground truth, B denotes the model prediction and A∩B is the intersection of A and B. The DSC ranges from 0 to 1, where 0 indicates no overlap and 1 indicates complete overlap between structures A and B.
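As a concrete illustration of the DSC definition, the following sketch represents each binary mask as a set of foreground voxel coordinates. Treating two empty masks as a perfect match (DSC = 1) is a convention assumed here; the paper does not state how empty structures are handled.

```python
def dice(a, b):
    """Dice similarity coefficient between two masks given as sets of voxel coordinates."""
    if not a and not b:
        return 1.0  # assumed convention: two empty masks agree perfectly
    return 2.0 * len(a & b) / (len(a) + len(b))
```

For example, a prediction that covers exactly half of a four-voxel ground truth and nothing else gives DSC = 2·2/(4 + 2) = 2/3.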

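The HD is defined as shown in Eq. (2):

$$\mathrm{HD}(A,B)=\max\left\{\max_{a\in A}\min_{b\in B} d(a,b),\ \max_{b\in B}\min_{a\in A} d(a,b)\right\} \tag{2}$$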

where d(a, b) is the distance between point a and point b.
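The following sketch computes the Hausdorff distance and its 95th-percentile variant (HD95) between two surface point sets given in millimetres. HD95 conventions differ between tools; this sketch pools both directed nearest-neighbour distance sets and takes their 95th percentile with linear interpolation, which is an assumption rather than the paper's documented implementation. Brute-force nearest-neighbour search is used for clarity and would be too slow for full-resolution CT surfaces.

```python
import math


def directed_distances(a, b):
    """For each point in a, the Euclidean distance to its nearest point in b."""
    return [min(math.dist(p, q) for q in b) for p in a]


def percentile(values, q):
    """q-th percentile (0-100) with linear interpolation between sorted values."""
    s = sorted(values)
    pos = (len(s) - 1) * q / 100.0
    lo, hi = math.floor(pos), math.ceil(pos)
    return s[lo] + (s[hi] - s[lo]) * (pos - lo)


def hausdorff(a, b):
    """Classical (maximum) Hausdorff distance, Eq. (2)."""
    return max(max(directed_distances(a, b)), max(directed_distances(b, a)))


def hausdorff95(a, b):
    """HD95: 95th percentile of the pooled directed distances (one common convention)."""
    return percentile(directed_distances(a, b) + directed_distances(b, a), 95)
```

Replacing the maximum by the 95th percentile makes the metric less sensitive to a few outlying surface points, which is why HD95 is usually preferred for clinical contours.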

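The ASSD is defined as shown in Eq. (3):

$$\mathrm{ASSD}(A,B)=\frac{1}{|S(A)|+|S(B)|}\left(\sum_{a\in S(A)} d\big(a,S(B)\big)+\sum_{b\in S(B)} d\big(b,S(A)\big)\right) \tag{3}$$

where S(A) represents the set of surface voxels of A, and d(v, S(A)) represents the shortest distance from a voxel v to S(A).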
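A sketch of the ASSD following the definition above, assuming masks given as sets of (z, y, x) voxel indices, surface voxels defined via 6-connectivity, and Euclidean distances scaled by a hypothetical `spacing` argument in millimetres. The brute-force search is meant only to make the formula concrete, and non-empty masks are assumed.

```python
import math

NEIGHBOURS_6 = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def surface_voxels(mask):
    """Voxels of a 3-D mask (set of (z, y, x)) with at least one 6-neighbour outside it."""
    return {
        v for v in mask
        if any((v[0] + dz, v[1] + dy, v[2] + dx) not in mask for dz, dy, dx in NEIGHBOURS_6)
    }


def assd(mask_a, mask_b, spacing=(1.0, 1.0, 1.0)):
    """Average symmetric surface distance (Eq. 3) in the units of `spacing`."""
    def to_mm(voxels):
        return [tuple(c * s for c, s in zip(v, spacing)) for v in voxels]

    sa = to_mm(surface_voxels(mask_a))
    sb = to_mm(surface_voxels(mask_b))
    d_ab = [min(math.dist(p, q) for q in sb) for p in sa]  # d(a, S(B)) for a in S(A)
    d_ba = [min(math.dist(q, p) for p in sa) for q in sb]  # d(b, S(A)) for b in S(B)
    return (sum(d_ab) + sum(d_ba)) / (len(sa) + len(sb))
```

Unlike HD, which reports a worst-case (or near worst-case) distance, ASSD averages over all surface voxels and therefore reflects the typical boundary error.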

Results

The network performance on the independent test group, measured by DSC, HD95 and ASSD, is summarized in Table 1. The DSC values show that the proposed Flex U-Net achieved better overall agreement than U-Net and V-Net. For the CTV, the DSC of Flex U-Net was 0.817, significantly higher than that of U-Net (P = 0.001). Among the OARs, the DSC differences for the right femoral head (P = 0.001) and the small intestine (P = 0.010) were statistically significant, indicating higher segmentation accuracy with Flex U-Net. In addition, Flex U-Net reduced the HD95 values for all targets compared with U-Net and V-Net. These results indicate close agreement between the automatic contours and the manual segmentations.
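The paired comparisons reported above rely on the Wilcoxon signed-rank test. The sketch below implements its two-sided version with the normal approximation, dropping zero differences and assigning average ranks to ties. It applies no tie or continuity correction, so its P values may differ slightly from library implementations such as `scipy.stats.wilcoxon`, which can use the exact null distribution for small samples. The inputs would be per-patient metric values (e.g., DSC) for two methods; no study data are reproduced here.

```python
import math


def wilcoxon_signed_rank(x, y):
    """Two-sided Wilcoxon signed-rank test (normal approximation).

    Zero differences are dropped and tied |differences| receive average ranks.
    No tie or continuity correction is applied. Returns (T, p), T = min(W+, W-).
    """
    diffs = [b - a for a, b in zip(x, y) if b != a]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")
    order = sorted(range(n), key=lambda k: abs(diffs[k]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        # Extend j over a run of equal absolute differences (ties).
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2.0 + 1.0  # mean of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    t = min(w_plus, w_minus)
    mean = n * (n + 1) / 4.0
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24.0)
    z = (t - mean) / sd  # z <= 0 because t <= mean
    p = 1.0 + math.erf(z / math.sqrt(2.0))  # equals 2 * Phi(z) for z <= 0
    return t, min(p, 1.0)
```

Because the test uses only the signs and ranks of the paired differences, it does not assume that per-patient DSC differences are normally distributed, which suits bounded metrics such as the DSC.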

Discussion

Preoperative (chemo)radiotherapy followed by total mesorectal excision is the current standard of care for patients with locally advanced rectal cancer and has been shown to significantly reduce the risk of locoregional recurrence (14, 15). Consistency of target delineation is a key factor in determining the efficacy of radiotherapy. Caravatta et al. (16) evaluated the overlap between CTV delineations by different radiation oncologists in pancreatic cancer and obtained a DSC of 68%. Lu et al. (17) investigated interobserver variation in GTV contouring for head and neck (H&N) patients and reported a DSC of only 75%. Automatic segmentation of the CTV and OARs has been proposed to accelerate delineation and is expected to improve its efficiency and consistency.

Rather than relying on manually extracted features, DL methods discover representations through self-learning and use hierarchical abstraction to complete high-level tasks efficiently (18). In computer vision, image segmentation refers to subdividing a digital image into multiple regions (sets of pixels) such that features are similar within each region and clearly different between regions. Importantly, DL can relieve radiation oncologists of labor-intensive workloads and increase the consistency, accuracy and reproducibility of region of interest (ROI) delineation (19). Several authors have applied DL to target volume definition in head and neck cancer (6, 20), prostate cancer (21), lung cancer (22), brain metastases (23) and breast cancer (24).

Target segmentation is the first and a key step in tumor radiotherapy. Image segmentation identifies the set of voxels that make up the ROI, a task now typically accomplished by applying deep learning methods to medical images. Unlike OARs, the CTV is not a well-defined area with clear boundaries; rather, it includes tissues at risk of tumor spread or subclinical disease that is almost undetectable on planning CT images. CTV segmentation therefore depends largely on the radiation oncologist's knowledge. Deep learning can reduce the reliance on domain expert knowledge for extracting and selecting the most discriminative features.

U-Net is a DL network that has become a popular baseline in medical image segmentation. Nevertheless, standard U-Net algorithms still do not fully meet the requirements of radiation therapy. U-Net can be optimized and adapted to the actual application scenario, and it still has great potential for improvement in training accuracy, feature enhancement and fusion, training with small datasets, range of application, training speed and other aspects. This study introduces a modified U-Net model designed to improve segmentation accuracy.

Unlike conventional segmentation models, the proposed method uses a register model to correct the noise caused by manual annotation, which is a potential source of error in radiation therapy. Label noise causes a segmentation model to learn invalid or even harmful information in supervised learning, which degrades its performance. The register network decouples the noise in the labels from the ground truth, providing a cleaner training target for the segmentation model. Trebeschi et al. (25) evaluated a CNN for the automatic segmentation of rectal cancer on multiparametric MR imaging and reported an average DSC of 0.69. Song et al. (26) used two CNNs, DeepLabv3+ and ResUNet, to segment the CTV and reported DSCs of 0.79 and 0.78, respectively. Our network achieved a higher CTV DSC (0.817) on our test set, suggesting that the register network improves the segmentation model.

Owing to limitations in data preparation and implementation, there is still room to improve segmentation performance. First, supplementary image modalities could be added to Flex U-Net to further improve its segmentation certainty. Second, the data in this study came from a single center, and all subjects were scanned with the same imaging parameters. Further prospective studies are needed to confirm our initial findings on the efficiency and accuracy of the method and to further optimize its performance.

Conclusion

In this work, we proposed a CTV and OAR segmentation framework for rectal cancer radiotherapy that employs a register model to correct the noise caused by manual annotation and thereby refine the performance of the automatic segmentation model. The proposed Flex U-Net was successfully applied to rectal cancer patients and achieved satisfactory CTV and OAR segmentation. Comparative experiments showed that our network achieves better segmentation accuracy than conventional U-Net methods, suggesting great potential to assist physicians in radiotherapy planning for a variety of cancers, not limited to rectal cancer.

Data availability statement

The original contributions presented in the study are included in the article/Supplementary Material. Further inquiries can be directed to the corresponding author.

Author contributions

Conceptualization, XS and YY; methodology, HW; software, LX; validation, QZ and WZ; resources, XS and HS; data curation, HW; writing—original draft preparation, XS; writing—review and editing, YY; visualization, WZ; supervision, HS; project administration, YY; funding acquisition, XS and YY. All authors contributed to the article and approved the submitted version.

Funding

This study was supported by the National Natural Science Foundation of China (Grant No. 82072094), the Natural Science Foundation of Shandong Province (Grant No. ZR2019LZL017), the Taishan Scholars Project of Shandong Province (Grant No. ts201712098).

Conflict of interest

Authors LX, QZ and WZ are employed by Manteia Technologies Co., Ltd.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fonc.2023.1172424/full#supplementary-material

References

1. Siegel RL, Miller KD, Fuchs HE, Jemal A. Cancer statistics, 2021. CA Cancer J Clin (2021) 71(1):7–33. doi: 10.3322/caac.21654

2. Oronsky B, Reid T, Larson C, Knox SJ. Locally advanced rectal cancer: the past, present, and future. Semin Oncol (2020) 47(1):85–92. doi: 10.1053/j.seminoncol.2020.02.001

3. Zhao J, Hu W, Cai G, Wang J, Xie J, Peng J, et al. Dosimetric comparisons of VMAT, IMRT and 3DCRT for locally advanced rectal cancer with simultaneous integrated boost. Oncotarget (2016) 7(5):6345. doi: 10.18632/oncotarget.6401

4. Owens R, Mukherjee S, Padmanaban S, Hawes E, Jacobs C, Weaver A, et al. Intensity-modulated radiotherapy with a simultaneous integrated boost in rectal cancer. Clin Oncol (R Coll Radiol) (2020) 32(1):35–42. doi: 10.1016/j.clon.2019.07.009

5. Men K, Dai J, Li Y. Automatic segmentation of the clinical target volume and organs at risk in the planning CT for rectal cancer using deep dilated convolutional neural networks. Med Phys (2017) 44(12):6377–89. doi: 10.1002/mp.12602

6. Cardenas CE, McCarroll RE, Court LE, Elgohari BA, Elhalawani H, Fuller CD, et al. Deep learning algorithm for auto-delineation of high-risk oropharyngeal clinical target volumes with built-in dice similarity coefficient parameter optimization function. Int J Radiat Oncol Biol Phys (2018) 101(2):468–78. doi: 10.1016/j.ijrobp.2018.01.114

7. Ng M, Leong T, Chander S, Chu J, Kneebone A, Carroll S, et al. Australian Gastrointestinal trials group (AGITG) contouring atlas and planning guidelines for intensity-modulated radiotherapy in anal cancer. Int J Radiat Oncol Biol Phys (2012) 5:1455–62. doi: 10.1016/j.ijrobp.2011.12.058

8. Joye I, Macq G, Vaes E, Roels S, Lambrecht M, Pelgrims A, et al. Do refined consensus guidelines improve the uniformity of clinical target volume delineation for rectal cancer? results of a national review project. Radiother Oncol (2016) 120(2):202–6. doi: 10.1016/j.radonc.2016.06.005

9. Franco P, Arcadipane F, Trino E, Gallio E, Martini S, Iorio GC, et al. Variability of clinical target volume delineation for rectal cancer patients planned for neoadjuvant radiotherapy with the aid of the platform anatom-e. Clin Transl Radiat Oncol (2018) 11:33. doi: 10.1016/j.ctro.2018.06.002

10. Iglesias JE, Sabuncu MR. Multi-atlas segmentation of biomedical images: a survey. Med Image Anal (2015) 24(1):205–19. doi: 10.1016/j.media.2015.06.012

11. Stefano A, Vitabile S, Russo G, Ippolito M, Sabini MG, Sardina D, et al. An enhanced random walk algorithm for delineation of head and neck cancers in PET studies. Med Biol Eng Comput (2017) 55:897–908. doi: 10.1007/s11517-016-1571-0

12. Yin XX, Sun L, Fu Y, Lu R, Zhang Y. U-Net-Based medical image segmentation. J Healthc Eng (2022) 2022:4189781. doi: 10.1155/2022/4189781

13. Valentini V, Gambacorta MA, Barbaro B, Chiloiro G, Coco C, Das P, et al. International consensus guidelines on clinical target volume delineation in rectal cancer. Radiother Oncol J Eur Soc Ther Radiol Oncol (2016) 120(2):195–201. doi: 10.1016/j.radonc.2016.07.017

14. Erlandsson J, Holm T, Pettersson D, Berglund A, Cedermark B, Radu C, et al. Optimal fractionation of preoperative radiotherapy and timing to surgery for rectal cancer (Stockholm III): a multicentre, randomised, non-blinded, phase 3, noninferiority trial. Lancet Oncol (2017) 18:336–46. doi: 10.1016/S1470-2045(17)30086-4

15. Hanna CR, Slevin F, Appelt A, Beavon M, Adams R, Arthur C, et al. Intensity-modulated radiotherapy for rectal cancer in the UK in 2020. Clin Oncol (R Coll Radiol) (2021) 33(4):214–23. doi: 10.1016/j.clon.2020.12.011

16. Caravatta L, Macchia G, Mattiucci GC, Sainato A, Cernusco NL, Mantello G, et al. Inter-observer variability of clinical target volume delineation in radiotherapy treatment of pancreatic cancer: a multi-institutional contouring experience. Radiat Oncol (2014) 9(1):198. doi: 10.1186/1748-717X-9-198

17. Lu L, Cuttino L, Barani I, Song S, Fatyga M, Murphy M, et al. SU-FF-J-85: inter-observer variation in the planning of head/neck radiotherapy. Med Phys (2006) 33(6):2040. doi: 10.1118/1.2240862

18. Chen M, Wu S, Zhao W, Zhou Y, Zhou Y, Wang G. Application of deep learning to auto-delineation of target volumes and organs at risk in radiotherapy. Cancer Radiother (2022) 26(3):494–501. doi: 10.1016/j.canrad.2021.08.020

19. Krithika Alias AnbuDevi M, Suganthi K. Review of semantic segmentation of medical images using modified architectures of UNET. Diagnostics (Basel) (2022) 12(12):3064. doi: 10.3390/diagnostics12123064

20. Men K, Chen X, Zhang Y, Zhang T, Dai J, Yi J, et al. Deep deconvolutional neural network for target segmentation of nasopharyngeal cancer in planning computed tomography images. Front Oncol (2017) 7:315. doi: 10.3389/fonc.2017.00315

21. Cardenas CE, McCarroll RE, Court LE, Elgohari BA, Elhalawani H, Fuller CD, et al. Deep learning algorithm for auto-delineation of high-risk oropharyngeal clinical target volumes with built-in dice similarity coefficient parameter optimization function. Int J Radiat Oncol Biol Phys (2018) 101:468–78. doi: 10.1016/j.ijrobp.2018.01.114

22. Macomber MW, Phillips M, Tarapov I, Jena R, Nori A, Carter D, et al. Autosegmentation of prostate anatomy for radiation treatment planning using deep decision forests of radiomic features. Phys Med Biol (2018) 63(23):235002. doi: 10.1088/1361-6560/aaeaa4

23. Lustberg T, van Soest J, Gooding M, Peressutti D, Aljabar P, van der Stoep J, et al. Clinical evaluation of atlas and deep learning based automatic contouring for lung cancer. Radiother Oncol (2018) 126:312–7. doi: 10.1016/j.radonc.2017.11.012

24. Liu Y, Stojadinovic S, Hrycushko B, Wardak Z, Lau S, Lu W, et al. A deep convolutional neural network-based automatic delineation strategy for multiple brain metastases stereotactic radiosurgery. PloS One (2017) 12(10):e0185844. doi: 10.1371/journal.pone.0185844

25. Trebeschi S, van Griethuysen JJM, Lambregts DMJ, Lahaye MJ, Parmar C, Bakers FCH, et al. Deep learning for fully-automated localization and segmentation of rectal cancer on multiparametric MR. Sci Rep (2017) 7(1):5301. doi: 10.1038/s41598-017-05728-9

Keywords: automatic segmentation, Flex U-Net, rectal cancer, clinical target volume, organs at risk

Citation: Sha X, Wang H, Sha H, Xie L, Zhou Q, Zhang W and Yin Y (2023) Clinical target volume and organs at risk segmentation for rectal cancer radiotherapy using the Flex U-Net network. Front. Oncol. 13:1172424. doi: 10.3389/fonc.2023.1172424

Received: 03 March 2023; Accepted: 05 May 2023;

Published: 18 May 2023.

Edited by:

Wei Zhao, Beihang University, China

Reviewed by:

Kuo Men, Chinese Academy of Medical Sciences and Peking Union Medical College, China

Yinglin Peng, Sun Yat-sen University Cancer Center (SYSUCC), China

Copyright © 2023 Sha, Wang, Sha, Xie, Zhou, Zhang and Yin. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yong Yin, yinyongsd@126.com
