- School of Optometry and Vision Science, University of New South Wales Sydney, Sydney, NSW, Australia

Currently, the integrity of brain function that drives behavior is predominantly measured in terms of pure motor function, yet most human behavior is visually driven. A means of easily quantifying such visually-driven brain function for comparison against population norms is lacking. Analysis of eye-hand coordination (EHC) using a digital game-like situation with downloadable spatio-temporal details has potential for clinicians and researchers. A simplified protocol for the Lee-Ryan EHC (Slurp) Test app for iPad® has been developed to monitor EHC. The two subtests selected, each of six quickly completed items with appeal to all ages, were found equivalent in terms of total errors/time and sensitive to developmental and aging milestones known to affect EHC. The sensitivity of outcomes to the type of stylus used during testing was also explored. Population norms for 221 participants aged 5 to 80+ years are presented for each test item according to two commonly used stylus types. The Slurp app uses two-dimensional space and is suited to clinicians for pre/post-intervention testing and to researchers in psychological, medical, and educational domains who are interested in understanding brain function.

Introduction

Human behavior is largely driven by visual information (Bisley, 2011), making visual function the most appropriate measure of brain function. However, developmental visuomotor milestones and most traumatic brain injury and neurological diseases are predominantly monitored in terms of motor functions that are, at best, gross measures. By comparison, involvement of visual drivers in the measurement of visuomotor responses can potentially provide a highly refined and sensitive measure of neural action planning and the functional integrity of both visual and motor systems at gross and fine levels. The development of a portable app to easily and objectively quantify eye-hand coordination (EHC) in terms of spatial and temporal coordinates on a series of visuomotor tasks of varied difficulty would make such visually-driven assessment accessible and have significant potential across a wide variety of domains: psychological, medical (particularly neurological and ophthalmic), and educational.

Each day a multitude of our physical movements are defined by goal-orientated reaching and grasping in response to a visual stimulus. The neuronal pathways involved in these visual oculo-motor and manual motor systems utilize prediction-mediated feedback relationships which have been extensively investigated in primates (for a review see Goodale, 2011). What underpins how successfully a task is carried out is one's dynamic assessment of associated spatial and temporal errors, and how one factors in muscular control, proprioception, practice effects, motor coordination, and aspects of cognitive function such as visual attention, perception, and memory (Crawford et al., 2004). Choice of an object to reach, touch, grasp, or even avoid, assumes executive planning that has already engaged endogenous and exogenously activated neural networks (Corbetta and Shulman, 2002). Such dorsal stream networks involve control and direction of selective visual attention, as well as the interaction of long term and working memory with the ventral visual stream as needed for exogenous identification and grasping of an object (for example, is it stationary or moving?), plus a semantic understanding of the object's characteristics. Successful grasping requires experience with an object's resilience, density, weight and texture to conceptualize a future action, plus use of appropriate subcortical (superior colliculus and pretectal accommodation areas) and frontal field visual pathways to redirect eye movements to shift and direct attention. Engagement of parieto-frontal dorsal networks along with aspects of motor and somatosensory networks is required to plan these goal-directed shifts of attention to appropriately “weight” the grasp suited to an object's characteristics (for example, whether fine, slippery, easily breakable, heavy, or if moving).

Various methods of quantifying visuomotor responses by measuring eye and hand coordination have been reported, for example, the Grooved Pegboard (Merker and Podell, 2011), Purdue pegboard (Gardner and Broman, 1979), cup stacking tasks (Ruff, 1993; Merker and Podell, 2011), or finger point-and-touch (Gao et al., 2010). These are manually timed activities and require subjective observations, thus creating potential practitioner bias. Other drawbacks are that these tasks lack novelty, are highly repetitive and relatively non-engaging, especially for older individuals. Furthermore, gross arm movements during most of the tests may introduce a confounder into interpretation of “EHC” performance (Binsted et al., 2001). Digital apparatuses that provide more objective measures are now available, such as the Dynavision (Vesia et al., 2008) and the Kinematic Assessment Tool (Culmer et al., 2009). However, these particular digital methods are costly, time-consuming and for the Dynavision quite large, making them impractical for routine clinical assessment. On the other hand, the Lee-Ryan Eye Hand Coordination Test (Lee et al., 2014) (now known as “Slurp” because each test item is a cartoon-shaped 3-D rendered animal- or geometric-shaped straw emerging from a milkshake that draws the milkshake up out of the glass during tracing) uses an inexpensive iPad® app with either a rubber-tipped stylus or a “biro”-style Bluetooth stylus (e.g., iPencil®). The device is preferentially used flat on a desk, eliminating upper arm involvement during testing. Further, the flatness of the tablet device reduces the demand on stereopsis which, if impaired, in itself can reduce the success of visually guided movements (Webber et al., 2008; Suttle et al., 2011). Results using a 13-item pilot version of the app indicate high repeatability and that its tasks are highly engaging for both young and older participants (Lee et al., 2014). 
An additional advantage of the Slurp test is that all temporo-spatial data is downloadable, making the test useful as a research tool.

It is well known from the assessment of hand movements and control that visuomotor performance is a function of age and subject to developmental stages (Voelcker-Rehage, 2008). However, a criticism of existing EHC tests, including the Slurp EHC Test, is that few population norms have been established (Tiffin and Asher, 1948; Gardner and Broman, 1979; Ruff, 1993; Klavora and Esposito, 2002; Wicks et al., 2015). Notably, pilot data on 83 participants using the full 20-item version of the Slurp test revealed that depending on age it can take up to 30 min to complete (Junghans BM, et al. IOVS 2017;58:ARVO E-Abstract 5427), which detracts from its routine implementation. As this data also showed that after just one practice item there is no order effect during presentation of the remaining 19 test items, potential existed to select particular items to create two smaller subtests that are objective, much quicker, clinically useful and equivalent measures to facilitate comparison of developmental or aging milestones or pre/post therapeutic interventions. Areas that may warrant repeated assessment of eye-hand coordination with reference to population norms include the ophthalmic condition of amblyopia (Suttle et al., 2011), neurological conditions such as stroke (Low et al., 2017), Parkinson's disease (Boisseau et al., 2002), and acquired brain injury (Gao et al., 2010), and the psychological and educational situations of developmental coordination disorder (Bieber et al., 2016) and autism spectrum disorder (Anzulewicz et al., 2016).

As a simpler test protocol is highly desirable for the Slurp test, the current study aimed to describe age-norms for visually normal persons without cognitive or neural impairment on two statistically equivalent subtests of the Slurp EHC Test, under conditions using two readily available but quite different types of stylus.

Materials and Methods

The study was approved by the University of New South Wales (UNSW) Human Research Ethics Advisory (HREA) Panel D: Biomedical. All participants were provided with a Participant Information Statement, and gave signed and informed consent after the study was explained in accordance with the Declaration of Helsinki. In the case of children who participated, one parent similarly signed consent, whereas the children themselves gave verbal consent. Participants were recruited as a result of flyers circulated within the School of Optometry and Vision Science and at one private optometric practice. All participants were screened for 20/20 equivalent visual acuity at near plus the absence of any history of motor, visual or cognitive impairment. Participants sourced from the private optometric practice had previously been determined to also have no binocular vision abnormalities.

By inspection of existing data on 83 subjects who had completed the full version of the Slurp EHC Test, two subsets of six items each were chosen such that each contained two easy, two moderate and two harder traces and yielded similar total error and total time scores. Subtest “A” (the “Dragonfly subset”) was created and contains the Square, Snail, Whale, Elephant, Slurp and Dragonfly items, and Subtest “B” (the “Octopus subset”) the Triangle, Rabbit, Monster, Unicorn, Cat, and Octopus items. The study sample size accords with an estimate of size of at least 96 participants from the normal population assuming a 95% confidence level and a margin of error of 10%. This sample size is consistent with previous studies, and our own work which has shown sufficient power with this number of participants.
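The sample-size figure quoted above is consistent with the standard formula for estimating a proportion, n = z²p(1 − p)/e². The text does not state which formula was used, so the following is only a sketch under assumptions: z = 1.96 for a 95% confidence level, the most conservative proportion p = 0.5, and a margin of error e = 0.10. The function name is hypothetical.

```python
def sample_size_for_proportion(z: float = 1.96,
                               p: float = 0.5,
                               margin: float = 0.10) -> float:
    """Sample size for estimating a proportion: n = z^2 * p * (1 - p) / e^2.

    Assumed formula and defaults; the source only reports the result (~96).
    """
    return (z ** 2) * p * (1.0 - p) / (margin ** 2)


# With the assumed defaults this evaluates to roughly 96 participants.
n = sample_size_for_proportion()
print(f"Estimated minimum sample size: {n:.1f}")
```

Under these assumptions the estimate is approximately 96, in line with the figure given in the text; a different assumed proportion or margin would change the result.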

Testing was carried out under conditions similar to those existing during the pilot data capture that led to the selection of subsets A and B. An Apple iPad® [Apple Inc., Cupertino, CA, USA, iPad Pro® iOS 11.1 Model A1674; 9.7 inch] was loaded with the Slurp Test (https://itunes.apple.com/us/app/slurp/id1148830242?mt=8) and the investigator selected out the respective test items from the 20 available. After explanation of how testing would proceed, all participants first undertook one item referred to as the “Castle” as a demonstration of how the test would be conducted, and to absorb any potential learning effect (Lee et al., 2014). For participants undertaking both subsets, Tests A and B were presented in random order. Within each subset, items were also presented in randomized order. Participants were seated at 33 cm from the iPad® for children or 40 cm for adults and viewed binocularly whilst wearing their habitual spectacle or contact lens correction. No scaling for distance was undertaken. Either an iPencil® [Apple Inc., Cupertino, CA, USA, Bluetooth Model A1603] or a typical rubber-tipped stylus in common use (Targus Slim Stylus for Smartphone, Anaheim California, USA) was used for tracing. The Slurp software monitors the location of the stylus on the iPad in terms of its perpendicular distance from the path along the midpoint of the 5.0 mm wide straw. The software is currently set such that when the stylus deviates in excess of 3.5 mm, the commencement of an error is indicated in the data set and contributes to the count of errors. The warning sound option was activated to alert when a tracing error had been made. At the conclusion of tracing, the participant's data set was emailed from the iPad, thus providing the number of errors made and the time taken to complete for each trace.
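The error rule described above can be illustrated with a short sketch. This is not the app's actual code: it assumes the perpendicular deviations from the straw midline are already available as one value per sample, in millimetres, and it counts an error each time the deviation passes from within the 3.5 mm threshold to beyond it. The function name and the sample values are hypothetical.

```python
from typing import Sequence

ERROR_THRESHOLD_MM = 3.5  # threshold stated in the text


def count_error_onsets(deviations_mm: Sequence[float],
                       threshold_mm: float = ERROR_THRESHOLD_MM) -> int:
    """Count how many times the trace moves from inside to outside the threshold."""
    errors = 0
    outside = False  # assumption: the trace starts on the path
    for d in deviations_mm:
        now_outside = d > threshold_mm
        if now_outside and not outside:
            errors += 1  # commencement of a new error
        outside = now_outside
    return errors


# Hypothetical trace with two separate excursions beyond 3.5 mm.
example = [0.4, 1.2, 3.6, 4.1, 2.0, 0.8, 3.9, 1.0]
print(count_error_onsets(example))  # prints 2
```

Counting onsets rather than flagged samples means that a long excursion outside the straw contributes a single error, which matches the description of an error "commencing" when the threshold is exceeded.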

Participants were split into age groups in two ways: into three age groupings and into nine age groupings, each spanning all ages (numbers of participants in each age group are indicated in Tables 1–3). Data was analyzed using SPSS (Statistical Package for the Social Sciences version 25, IBM Corporation, New York, USA). A comparison between error scores and time taken to complete Set A and Set B was made using analysis of variance (ANOVA) with consideration of main effects due to gender, type of stylus used and age. The raw data supporting the conclusions of this manuscript will be made available by the authors, without undue reservation, to any qualified researcher.

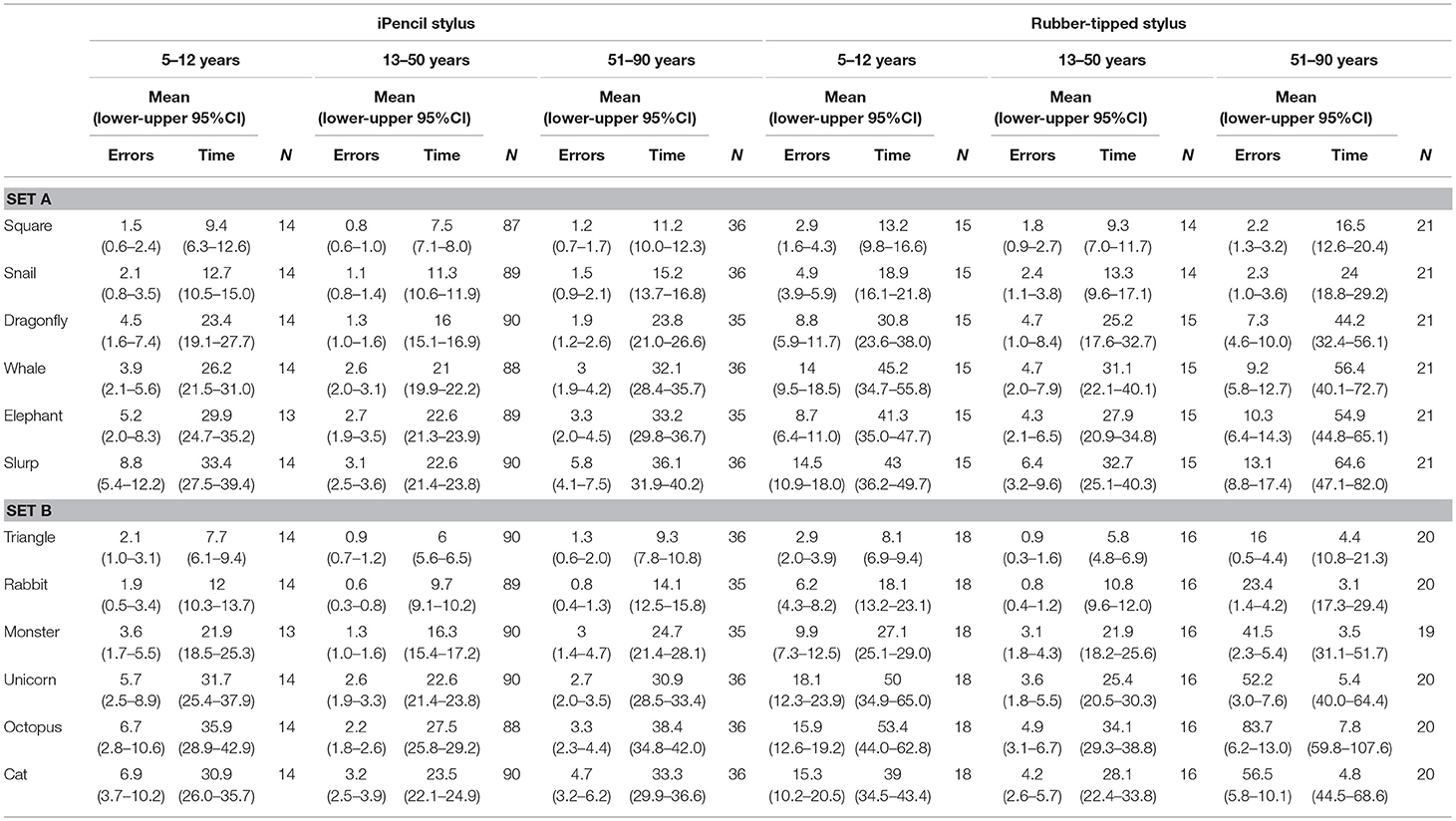

Table 1. Mean error scores and time taken for individual test items in subset A and subset B according to type of stylus used and age (three age groups).

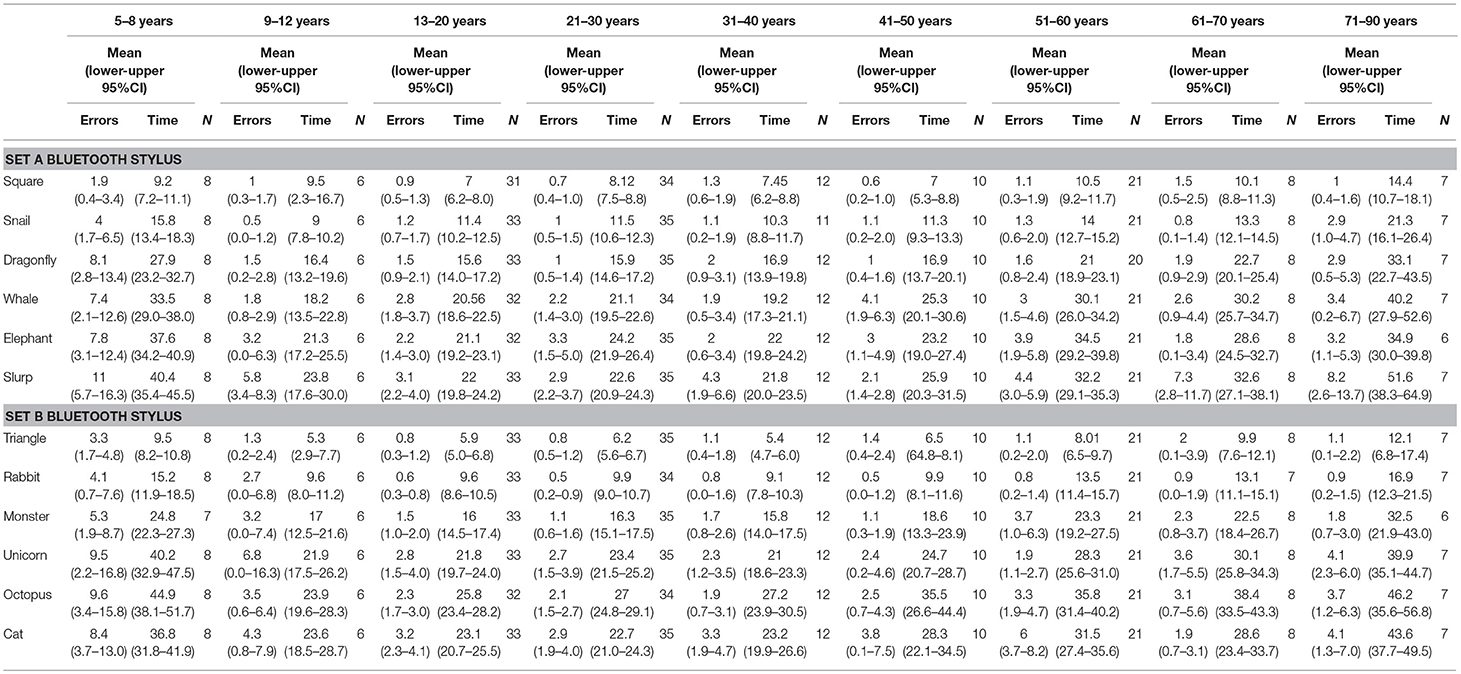

Table 2. Mean error scores and time taken for individual test items in subset A and subset B when using a Bluetooth stylus grouped into nine age groups.

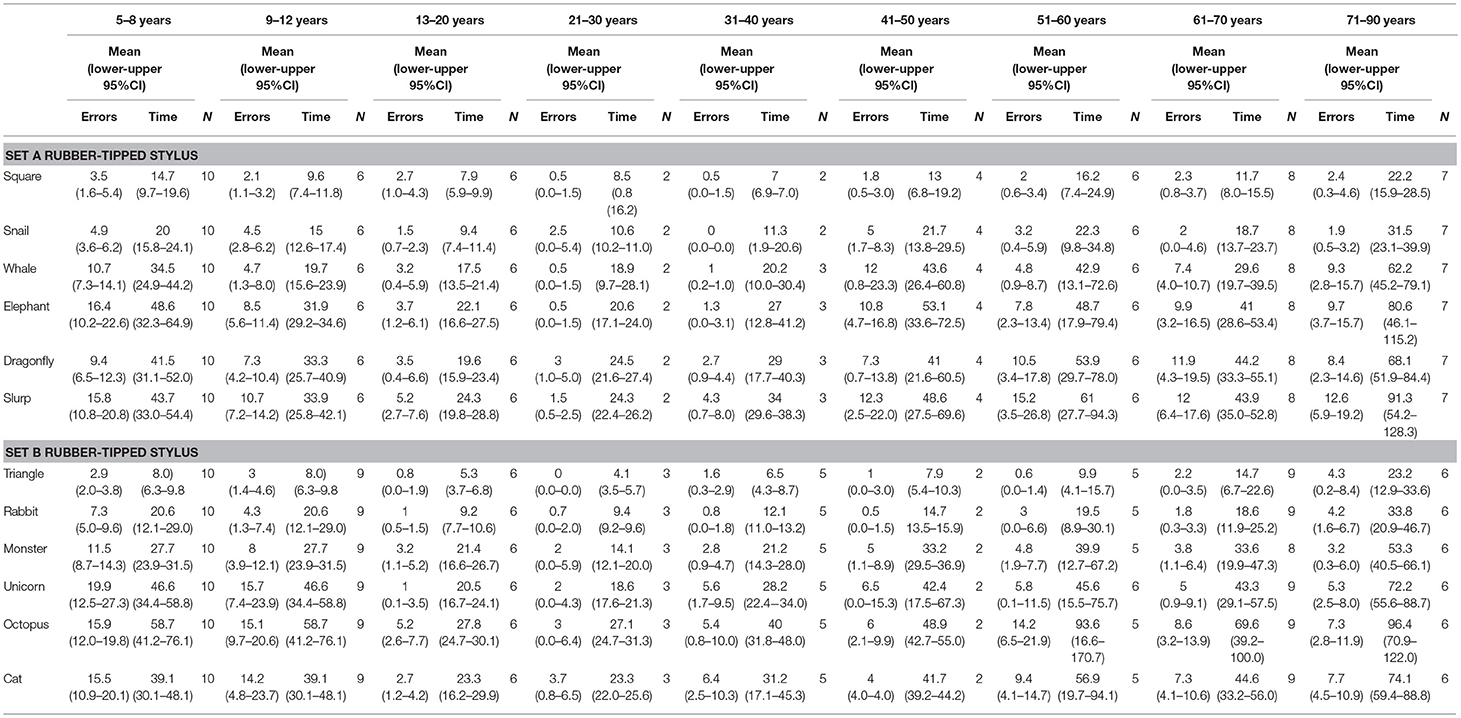

Table 3. Mean error scores and time taken for individual test items in subset A and subset B when using a rubber-tipped stylus grouped into nine age groups.

Results

The 221 participants who completed either Set A or B or both ranged in age from 5 to 88 years, with 60.5% female. Given there was no significant gender difference found for either errors made or times taken on each of the items, data from males and females of the same age group were pooled. Only 6.4% of the participants were left-handed and were scattered across age groups and type of stylus used. Inspection of rank order of error scores and time taken put left-handers fully within the range of scores found for right-handers and therefore their results were pooled. The mean error scores and time taken for 192 participants across all ages to complete Subset A (Dragonfly), regardless of stylus used, are 21.4 ± 21.6 errors and 139.2 ± 77.1 s, respectively. The mean error scores and time taken for 195 participants across all ages to complete Subset B (Octopus), regardless of stylus used, are 21.0 ± 23.2 errors and 142.8 ± 76.61 s, respectively. Despite the varying complexity and length of each test item, the ratio of errors per unit time was found to not differ significantly between items.

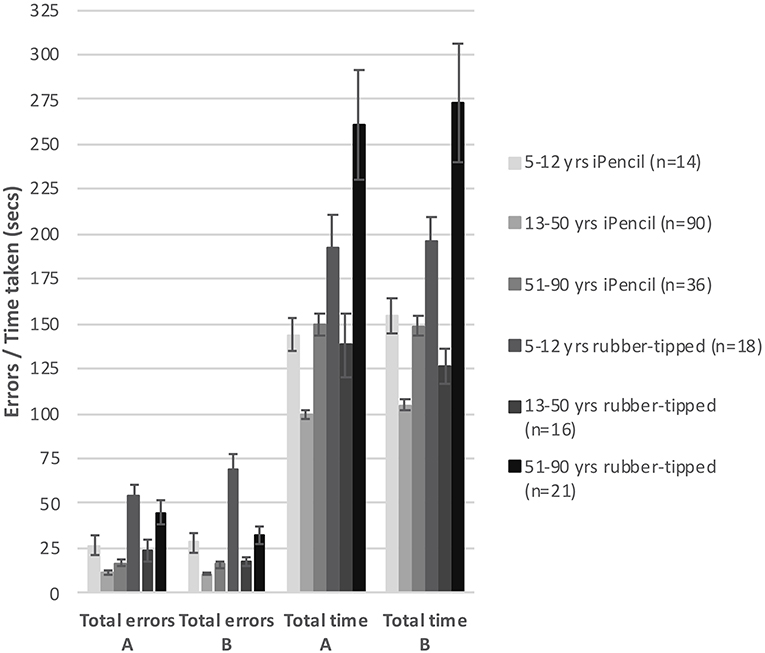

Two main factors relating to performance emerged: stylus and age. First, the type of stylus used had a highly significant effect on both errors made (Subset A F(1, 86) = 8.649, p = 0.004 and Subset B F(1, 86) = 7.791, p = 0.006) and time taken (Subset A F(1, 86) = 29.552, p < 0.001 and Subset B F(1, 86) = 28.908, p < 0.001). Of all participants, 73.3% completed Test A using an iPencil® and 26.7% used the rubber-tipped stylus, while Test B was completed by 72.2% using the iPencil® and 27.8% using the rubber-tipped stylus. Using a rubber-tipped stylus approximately doubles the number of errors, and increases the time taken by ~30% for those under 50 years and by 75% for those over 50 years of age (Figure 1 and Tables 1–3).

Figure 1. The bar graph shows mean total errors made and mean total time taken to complete subsets A and B against type of stylus used within three generalized age groups. Error bars represent 95% confidence intervals.

Second, age was also a significant factor in total errors made completing each subset (Figure 1) (Subset A F(1, 86) = 2.563, p < 0.001 and Subset B F(1, 86) = 2.596, p < 0.001) and the total time taken for each subset (Subset A F(1, 86) = 17.627, p < 0.001 and Subset B F(1, 86) = 15.230, p < 0.001), as well as in the errors made and time taken per individual test item (Tables 2, 3). With participants broken up into three age groups, analysis clearly indicated that superior performance in both errors and time is achieved by those aged between 13 and 50 years (Figure 1 and Table 1). The consistency of this pattern can be seen in Figure 1, regardless of which subset of items is being undertaken or which stylus is used. Increasingly poorer performance was found as participants fell into the younger and older age groups moving away from the teenage/young adult years (Table 3). Additionally, tracing the most complexly shaped items amplified the poorer outcomes.

Set A and Set B were deemed comparable to each other with respect to total errors made/time taken, as the mean total errors made and the mean total time taken were not significantly different between Sets A and B, regardless of whether a Bluetooth stylus or a rubber-tipped stylus was used (Figure 1). However, despite the mean total errors and time being similar for Sets A and B, the profile of increasing item complexity through the six items was not as similar as predicted. Set A appears to contain two easy items, two moderately complex items, and two complex items as predicted by pilot testing, whereas Set B has a less pronounced stepping of increasing complexity (two items are clearly easier, one item appears of moderate challenge and the remaining three items appear to be as hard as each other).

Discussion

This study has demonstrated a quick, sensitive and objective means of assessing neural function in both pediatric and geriatric populations through analysis of the performance on an EHC task in an engaging two-dimensional space. EHC population norms across ages 5 to 80+ years have been determined for accuracy and timing on 12 variously complex tracing plates in the Slurp app. An age-related developmental effect with peak performance during young adulthood has been demonstrated. The automated timing/error-counting facility ensures subject and observer objectivity and thus addresses important issues against other forms of measuring visuomotor performance. The validation of Subtests A (Dragonfly) and B (Octopus) as statistically equivalent with respect to total errors made and total time taken for all age groups, indicates potential for reliable and valid pre-/post-intervention analysis of brain function. Each six-plate subtest can be completed in ~2–4 min, depending on age, making the app useful to clinicians in a range of disciplines such as neurology, optometry, psychology (including bedside assessment of patient visuomotor integrity), and education.

No gender difference was found using the Slurp EHC app. Consensus is poor regarding the influence gender has on visuomotor performance in three-dimensional space through assessment of skills based on throw and catch (Wickens, 1974; Plimpton and Regimbal, 1992; Wicks et al., 2015), reach and touch (Klavora and Esposito, 2002) or pick and place items (Ruff, 1993). Handedness may be considered as an issue. The relatively few left-handers yielded results dispersed along a similar spectrum as right-handers. It should be noted that each trace item requires a variety of leftwards/rightwards and upwards/downwards tracing, making certain sections of the test items problematic for both right and left-handers.

Importantly, a similar significant main effect for age has been measured by us in a two-dimensional space as by others in a three-dimensional space, which has implications for the study of developmental or aging effects of sensory-motor integration (Hay, 1979; Ruff, 1993; Smyth et al., 2004). In the current study, children under age 12 made a significantly greater number of errors and took significantly longer, demonstrating also a much greater variability in performance. It is known that children use vision in the control of hand movements in different ways according to age (Hay, 1979; Smyth et al., 2004) and therefore the greater variability and poorer EHC performance found in children in the current study, particularly under the age of nine, is not unexpected. “Attention and directing” studies reveal that younger children appear to employ visually-driven movements of the hand using a feedforward approach whereas from approximately age seven onwards they use a feedback-feedforward model that integrates proprioceptive information (Hay, 1979; Smyth et al., 2004), with performance peaking in their teens. Children continue to develop throw/catch visual coordination skills into their mid-teens, with boys outperforming girls (Wicks et al., 2015). The Purdue Pegboard Test has been used to establish “pick and place” normative data on children (n = 1,334, ages 5–16 years) (Gardner and Broman, 1979) and indicated improved speed until approximately age 10 years, after which performance leveled.

The impact of a poor functional maturation of EHC on quality of life and employment prospects should be considered. Visual-motor integration and visual-spatial integration have been found to be important measures that contribute to academic achievement (Carlson et al., 2013). Poorer handwriting is related to poorer visual-motor integration in normal children (Kaiser et al., 2009) and especially so in children with developmental coordination disorder (Wilmut et al., 2006; Bieber et al., 2016), attention deficit hyperactivity disorder (ADHD) (Stasik et al., 2009), autism spectrum disorder (Anzulewicz et al., 2016) and amblyopia (Engel-Yeger et al., 2012; Bieber et al., 2016). These associations emphasize the need for a simple, objective measure of EHC.

Despite the development of good EHC by teen years, peak EHC does not continue through adulthood (Rand and Stelmach, 2011; Engel-Yeger et al., 2012; Ebaid et al., 2017; Low et al., 2017). In the current study, those over the age of 40 increasingly made more errors, took longer, and demonstrated greater variability in performance as the decades progressed. The deterioration in motor control during adulthood is well-known (Rand and Stelmach, 2011; Engel-Yeger et al., 2012; Ebaid et al., 2017; Low et al., 2017). Adults undertaking the Purdue Pegboard test (n = 7,834) showed a marked deterioration in dexterity with age (Tiffin and Asher, 1948), with similar outcomes on the more demanding Grooved Pegboard test (n = 357, 16–70 years) (Ruff, 1993). Superimposed upon aging itself, are other neural or physical factors in chronic conditions that are known to affect EHC such as arthritis (Suomi and Collier, 2003) or neurodegenerative diseases such as familial tremor (Trillenberg et al., 2006), Parkinson's disease (Boisseau et al., 2002), glaucoma (Kotecha et al., 2009), and Alzheimer's disease (Verheij et al., 2012). Clearly, acute conditions such as traumatic brain injury, including stroke, might also be expected to have profound effects on EHC (Gao et al., 2010; Rizzo et al., 2017), but the focus on functional assessment has to date been on motor coordination rather than sensory status or visuomotor integration (Ebaid et al., 2017; Low et al., 2017). On the other hand, one study assessing wrist-aiming found that older persons who are physically active do not appear to suffer as great a reduction in EHC performance as would be expected (Van Halewyck et al., 2014).

Regarding our choice of a tablet-device to assess EHC there are several considerations. First, one might question whether older generations may be unfamiliar using a stylus to trace on glass and thus confound results. Indeed, a number of our older participants did hold the stylus “in wonder” for a few moments and tentatively draw on the glass before commencing the practice trace. Any need for adaptation to proprioception or its impact on performance on the Slurp Test for participants unfamiliar with a tablet device was not pursued. However, the “castle” item we used as the practice item is itself a demanding trace requiring many changes in direction over a considerable distance (the average time for 101 participants was 28.3 ± 18.3 s). Hence it could well be that adaptation is achieved whilst tracing the castle. Furthermore, the fact that the 19-item pilot study (which included a number of older participants) used the Castle item as its practice item and showed no order effect across the subsequent 19 test items, is also suggestive that stylus-acclimatization is over before testing starts. Second, there may be limitations to the interpretation of the heterogeneity of outcomes due to the small number of participants in the 70+ age group (only seven participants using the iPencil® and seven a rubber-tipped stylus). However, an increasing heterogeneity in outcomes was already apparent in the next younger group aged 61–70 years (eight participants using the iPencil® and nine a rubber-tipped stylus), consolidating the notion that some older people are affected by age-related factors more so than others. A larger sample would facilitate elucidation of further factors that might contribute to these poorer performances.

Third, our choice to use a tablet device to test EHC in a two-dimensional space vs. the traditional three-dimensional reach and grasp/point style of EHC test, rests with the fact that we wanted to minimize upper arm involvement. This is important if one's test paradigm aims to assess subtle changes in the brain's integrity. As vision is such a widespread driver of human actions throughout the day (Bisley, 2011), in our opinion, detection of subtle changes in the brain requires assessment of fine motor control that is not contaminated by aberrations in the gross musculature. Children with developmental delay or some medical conditions and adults with medical/degenerative conditions affecting the shoulder and the arm may perform more poorly on reach and grasp or reach and point tasks than when undertaking simpler motor activities at a desk. Hence, our test commences once the subject has steadied themselves on the tablet. Thereafter, mainly fine motor movements of the wrist and fingers as driven by visual appraisal of the situation come under scrutiny.

The protocol for conducting the Slurp EHC Test warrants scrutiny due to its novelty. First, the optional sound alert was activated to indicate to the participant that they had deviated outside the straw. Having this alert present serves to pull the participant back into attending to the task, but one may ask whether it will detract from vision being the primary sense providing feedback to the visuomotor task at hand and thus introduce a ceiling effect and limit the magnitude of any deviation. Notably, when simply tracing along the straw there are no sound cues. We have preliminary data on the magnitude of the deviation which shows a similar age-related “starts poor, peaks, becomes poorer again” pattern as do errors made and time (Junghans BM, et al. IOVS 2017;58:ARVO E-Abstract 5427). This implies that the sound cue announcing an error does not impose a ceiling on the magnitude of the deviation, although we cannot rule out a dampening effect. No studies have been undertaken to understand the impact of this when using the Slurp Test. Second, participants were to be limited to only 5 s to appraise the task ahead of them when their next item appeared. Although the investigator was never required to intervene, many participants could be seen to quickly survey the shape of the straw and smile upon realizing what shape they would trace next. Whether this scoping process creates a visuomotor map and enhances tracing outcomes has not been investigated. Importantly, however, the benefit of this scoping phase with regard to keeping the participant engaged with the test perhaps in itself makes this brief, instinctive, limited scoping worthwhile. Third, the total time to undertake any EHC test is an important consideration. Whether a further reduction in the number of test items, to say just three, would yield the same EHC assessment outcomes is worth exploring.

For the researcher, the Slurp app offers new capabilities to quantify finer visuomotor performance in terms of space and time. At the end of testing, an option is available to download the database that yields the following information approximately every 0.1 s: x/y coordinates for the stylus, x/y coordinates of the midpoint of the straw nearest the stylus, the magnitude of the resultant deviation of the stylus from the midpoint of the straw, a categorical flag for whether or not the deviation exceeds the pre-set threshold, and the velocity of the stylus at each capture point. A pre-set error threshold of 3.5 mm was integrated by the app programmers to take pixelation effects into consideration. However, it is possible to change this sensitivity within the database retrospectively. A further benefit of the Slurp app is that the exact duration of each tracing task (some as short as 5–6 s) is captured digitally to a precision of one thousandth of a second, which aligns with the importance of time as a sensitive measure of brain function (Miall and Reckess, 2002). The app's timing function is totally unobtrusive and thus assists the authenticity of the times captured.
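As a hedged illustration of how such downloaded records might be post-processed, the sketch below recomputes the deviation magnitude and stylus speed from the coordinates and re-flags samples against an alternative threshold. The record layout, field names, units (millimetres and seconds) and sample values are all assumptions made for illustration; the app's actual export format is not specified in the text.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class Sample:
    """One hypothetical capture point (assumed units: seconds and mm)."""
    t: float
    stylus_x: float
    stylus_y: float
    mid_x: float  # nearest point on the straw midline
    mid_y: float


def deviation(s: Sample) -> float:
    """Distance between the stylus and the nearest midline point."""
    return math.hypot(s.stylus_x - s.mid_x, s.stylus_y - s.mid_y)


def speeds(samples: List[Sample]) -> List[float]:
    """Stylus speed (mm/s) between consecutive capture points."""
    out = []
    for a, b in zip(samples, samples[1:]):
        dt = b.t - a.t
        dist = math.hypot(b.stylus_x - a.stylus_x, b.stylus_y - a.stylus_y)
        out.append(dist / dt if dt > 0 else 0.0)
    return out


def flags(samples: List[Sample], threshold_mm: float) -> List[bool]:
    """Re-flag each sample against a chosen deviation threshold."""
    return [deviation(s) > threshold_mm for s in samples]


# Hypothetical records sampled roughly every 0.1 s.
trace = [
    Sample(0.0, 0.0, 0.0, 0.0, 0.0),
    Sample(0.1, 3.0, 4.0, 3.0, 0.0),
    Sample(0.2, 6.0, 4.0, 6.0, 1.0),
]
print(flags(trace, 3.5))  # default threshold from the text
print(flags(trace, 2.5))  # a stricter, retrospectively chosen threshold
print(speeds(trace))
```

Keeping the threshold as a parameter reflects the point made above that sensitivity can be changed retrospectively: the same stored coordinates can be re-scored without re-testing the participant.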

In summary, digital eye-hand coordination testing offers a wide range of practitioners and scientists a level of understanding of the integrity of the brain that has hitherto been undertaken without sufficient attention or rigor in data capture. The Slurp (Lee-Ryan) Test app offers a quick and sensitive means of assessing the spatio-temporal aspects of eye-hand coordination in a manner acceptable to all age groups and applicable across a spectrum of situations in psychology, medicine and education.

Ethics Statement

The study was approved by the University of New South Wales (UNSW) Human Research Ethics Advisory (HREA) Panel D: Biomedical. All participants were provided with a Participant Information Statement, and gave signed and informed consent after the study was explained in accordance with the Declaration of Helsinki. In the case of children who participated, one parent similarly signed consent, whereas the children themselves gave verbal consent.

Author Contributions

BJ participated in conceptualization and design, research, collection of data, interpretation of results, drafting, and revision of the manuscript. SK participated in design, collection of data, data analysis, interpretation of results, and revision of the manuscript.

Funding

This project was funded through the academics' personal university allocations.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest. Whilst it might be perceived that the authors have the potential to gain financially from the sale of the Slurp app on iTunes, the funds go directly to the University of New South Wales with only a portion forwarded to the School of Optometry and Vision Science for the purpose of covering necessary upgrades and to maintain functioning of the app in response to upgrades to the Apple iOS.

Acknowledgments

We sincerely thank Professor Sheila Crewther (School of Psychology and Public Health, La Trobe University, Melbourne) for her considerable input into the Introduction and Discussion sections of the manuscript, and Jenny Gou, Rebecca So, Vivien W. C. Cheung, Samuel C. Tang, and SooJin Nam (UNSW Sydney) for their efforts collecting the data, and of course we thank Kiseok Lee, Malcolm Ryan, and Catherine Suttle (UNSW Sydney) for their development of the app.

References

Anzulewicz, A., Sobota, K., and Delafield-Butt, J. T. (2016). Toward the autism motor signature: gesture patterns during smart tablet gameplay identify children with autism. Sci. Rep. 6:31107. doi: 10.1038/srep31107

Bieber, E., Smits-Engelsman, B. C., Sgandurra, G., Cioni, G., Feys, H., Guzzetta, A., et al. (2016). Manual function outcome measures in children with developmental coordination disorder (DCD): systematic review. Res. Dev. Disabil. 55, 114–131. doi: 10.1016/j.ridd.2016.03.009

Binsted, G., Chua, R., Helsen, W., and Elliott, D. (2001). Eye-hand coordination in goal-directed aiming. Hum. Mov. Sci. 20, 563–585. doi: 10.1016/S0167-9457(01)00068-9

Bisley, J. W. (2011). The neural basis of visual attention. J. Physiol. 589, 49–57. doi: 10.1113/jphysiol.2010.192666

Boisseau, E., Scherzer, P., and Cohen, H. (2002). Eye-hand coordination in aging and in Parkinson's disease. Aging Neuropsychol. Cogn. 9, 266–275. doi: 10.1076/anec.9.4.266.8769

Carlson, A. G., Rowe, E., and Curby, T. W. (2013). Disentangling fine motor skills' relations to academic achievement: the relative contributions of visual-spatial integration and visual-motor coordination. J. Genet. Psychol. 174, 514–533. doi: 10.1080/00221325.2012.717122

Corbetta, M., and Shulman, G. L. (2002). Control of goal-directed and stimulus-driven attention in the brain. Nat. Rev. Neurosci. 3, 201–215. doi: 10.1038/nrn755

Crawford, J. D., Medendorp, W. P., and Marotta, J. J. (2004). Spatial transformations for eye-hand coordination. J. Neurophysiol. 92, 10–19. doi: 10.1152/jn.00117.2004

Culmer, P. R., Levesley, M. C., Mon-Williams, M., and Williams, J. H. (2009). A new tool for assessing human movement: the Kinematic Assessment Tool. J. Neurosci. Methods 184, 184–192. doi: 10.1016/j.jneumeth.2009.07.025

Ebaid, D., Crewther, S. G., MacCalman, K., Brown, A., and Crewther, D. P. (2017). Cognitive processing speed across the lifespan: beyond the influence of motor speed. Front. Aging Neurosci. 9:62. doi: 10.3389/fnagi.2017.00062

Engel-Yeger, B., Hus, S., and Rosenblum, S. (2012). Age effects on sensory-processing abilities and their impact on handwriting. Can. J. Occup. Ther. 79, 264–274. doi: 10.2182/CJOT.2012.79.5.2

Gao, K. L., Ng, S. S., Kwok, J. W., Chow, R. T., and Tsang, W. W. (2010). Eye-hand coordination and its relationship with sensori-motor impairments in stroke survivors. J. Rehabil. Med. 42, 368–373. doi: 10.2340/16501977-0520

Gardner, R. A., and Broman, M. (1979). The Purdue pegboard: normative data on 1334 school children. J. Clin. Child Psychol. 8, 156–162. doi: 10.1080/15374417909532912

Goodale, M. A. (2011). Transforming vision into action. Vision Res. 51, 1567–1587. doi: 10.1016/j.visres.2010.07.027

Hay, L. (1979). Spatial-temporal analysis of movements in children. J. Motor Behav. 11, 189–200. doi: 10.1080/00222895.1979.10735187

Kaiser, M.-L., Albaret, J.-M., and Doudin, P.-A. (2009). Relationship between visual-motor integration, eye-hand coordination, and quality of handwriting. J. Occup. Ther. Schools Early Interven. 2, 87–95. doi: 10.1080/19411240903146228

Klavora, P., and Esposito, J. G. (2002). Sex differences in performance on three novel continuous response tasks. Percept. Motor Skills 95, 49–56. doi: 10.2466/pms.2002.95.1.49

Kotecha, A., O'Leary, N., Melmoth, D., Grant, S., and Crabb, D. P. (2009). The functional consequences of glaucoma for eye–hand coordination. Invest. Ophthalmol. Vision Sci. 50, 203–213. doi: 10.1167/iovs.08-2496

Lee, K., Junghans, B. M., Ryan, M., Khuu, S., and Suttle, C. M. (2014). Development of a novel approach to the assessment of eye-hand coordination. J. Neurosci. Methods 228, 50–56. doi: 10.1016/j.jneumeth.2014.02.012

Low, E., Crewther, S. G., Ong, B., Perre, D., and Wijeratne, T. (2017). Compromised motor dexterity confounds processing speed task outcomes in stroke patients. Front. Neurol. 8:484. doi: 10.3389/fneur.2017.00484

Merker, B., and Podell, K. (2011). “Grooved pegboard test,” in Encyclopedia of Clinical Neuropsychology, eds J. S. Kreutzer, J. DeLuca, and B. Caplan (New York, NY: Springer), 1176–1178. doi: 10.1007/978-0-387-79948-3_187

Miall, R. C., and Reckess, G. Z. (2002). The cerebellum and the timing of coordinated eye and hand tracking. Brain Cogn. 48, 212–226. doi: 10.1006/brcg.2001.1314

Plimpton, C. E., and Regimbal, C. (1992). Differences in motor proficiency according to gender and race. Percept. Motor Skills 74, 399–402. doi: 10.2466/pms.1992.74.2.399

Rand, M. K., and Stelmach, G. E. (2011). Effects of hand termination and accuracy requirements on eye-hand coordination in older adults. Behav. Brain Res. 219, 39–46. doi: 10.1016/j.bbr.2010.12.008

Rizzo, J. R., Hosseini, M., Wong, E. A., Mackey, W. E., Fung, J. K., Ahdoot, E., et al. (2017). The intersection between ocular and manual motor control: eye–hand coordination in acquired brain injury. Front. Neurol. 8:227. doi: 10.3389/fneur.2017.00227

Ruff, R. P. S. (1993). Gender and age-specific changes in motor speed and eye-hand coordination in adults: normative values for the finger tapping and grooved pegboard tests. Percept. Motor Skills 76, 1219–1230. doi: 10.2466/pms.1993.76.3c.1219

Smyth, M. M., Peacock, K. A., and Katamba, J. (2004). The role of sight of the hand in the development of prehension in childhood. Q. J. Exp. Psychol. A 57, 269–296. doi: 10.1080/02724980343000215

Stasik, D., Tucha, O., Tucha, L., Walitza, S., and Lange, K. W. (2009). Graphomotor functions in children with attention deficit hyperactivity disorder (ADHD). Psychiatr. Polska 43, 183–192.

Suomi, R., and Collier, D. (2003). Effects of arthritis exercise programs on functional fitness and perceived activities of daily living measures in older adults with arthritis. Arch. Phys. Med. Rehabil. 84, 1589–1594. doi: 10.1053/S0003-9993(03)00278-8

Suttle, C. M., Melmoth, D. R., Finlay, A. L., Sloper, J. J., and Grant, S. (2011). Eye-hand coordination skills in children with and without amblyopia. Invest. Ophthalmol. Vision Sci. 52, 1851–1864. doi: 10.1167/iovs.10-6341

Tiffin, J., and Asher, E. J. (1948). The Purdue pegboard; norms and studies of reliability and validity. J. Appl. Psychol. 32, 234–247.

Trillenberg, P., Fuhrer, J., Sprenger, A., Hagenow, A., Kompf, D., Wenzelburger, R., et al. (2006). Eye-hand coordination in essential tremor. Mov. Disord. 21, 373–379. doi: 10.1002/mds.20729

Van Halewyck, F., Lavrysen, A., Levin, O., Boisgontier, M. P., Elliott, D., and Helsen, W. F. (2014). Both age and physical activity level impact on eye-hand coordination. Hum. Mov. Sci. 36, 80–96. doi: 10.1016/j.humov.2014.05.005

Verheij, S., Muilwijk, D., Pel, J. J., van der Cammen, T. J., Mattace-Raso, F. U., and van der Steen, J. (2012). Visuomotor impairment in early-stage Alzheimer's disease: changes in relative timing of eye and hand movements. J. Alzheimers Dis. 30, 131–143. doi: 10.3233/JAD-2012-111883

Vesia, M., Esposito, J., Prime, S., and Klavora, P. (2008). Correlations of selected psychomotor and visuomotor tests with initial Dynavision performance. Percept. Motor Skills 107, 14–20. doi: 10.2466/pms.107.1.14-20

Voelcker-Rehage, C. (2008). Motor-skill learning in older adults: a review of studies on age-related differences. Eur. Rev. Aging Phys. Activity 5, 5–16. doi: 10.1007/s11556-008-0030-9

Webber, A. L., Wood, J. W., Gole, G. A., and Brown, B. (2008). The effect of amblyopia on fine motor skills in children. Invest. Ophthalmol. Vision Sci. 49, 594–603. doi: 10.1167/iovs.07-0869

Wickens, C. (1974). Temporal limits of human information processing: a developmental study. Psychol. Bull. 81, 739–755. doi: 10.1037/h0037250

Wicks, L. J., Telford, R. M., Cunningham, R. B., Semple, S. J., and Telford, R. D. (2015). Longitudinal patterns of change in eye–hand coordination in children aged 8–16 years. Hum. Mov. Sci. 43, 61–66. doi: 10.1016/j.humov.2015.07.002

Keywords: eye-hand coordination, brain function, visually-driven behavior, behavior assessment, population norms

Citation: Junghans BM and Khuu SK (2019) Populations Norms for “SLURP”—An iPad App for Quantification of Visuomotor Coordination Testing. Front. Neurosci. 13:711. doi: 10.3389/fnins.2019.00711

Received: 31 January 2019; Accepted: 24 June 2019; Published: 10 July 2019.

Edited by:

Rufin VanRullen, Centre National de la Recherche Scientifique (CNRS), France

Reviewed by:

Anna Ma-Wyatt, University of Adelaide, Australia

Nicos Souleles, Cyprus University of Technology, Cyprus

Copyright © 2019 Junghans and Khuu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Barbara M. Junghans, b.junghans@unsw.edu.au
