Teaching an Old Dog New Tricks: Strategies That Improve Early Recognition in Similarity-Based Virtual Screening

- 1Department of Defense Biotechnology High Performance Computing Software Applications Institute, Telemedicine and Advanced Technology Research Center, U.S. Army Medical Research and Development Command, Fort Detrick, MD, United States

- 2The Henry M. Jackson Foundation for the Advancement of Military Medicine, Inc., Bethesda, MD, United States

High throughput screening (HTS) is an important component of lead discovery, with virtual screening playing an increasingly important role. Both methods typically suffer from lack of sensitivity and specificity against their true biological targets. With ever-increasing screening libraries and virtual compound collections, it is now feasible to conduct follow-up experimental testing on only a small fraction of hits. In this context, advances in virtual screening that achieve enrichment of true actives among top-ranked compounds (“early recognition”) and, hence, reduce the number of hits to test, are highly desirable. The standard ligand-based virtual screening method for large compound libraries uses a molecular similarity search method that ranks the likelihood of a compound to be active against a drug target by its highest Tanimoto similarity to known active compounds. This approach assumes that the distributions of Tanimoto similarity values to all active compounds are identical (i.e., same mean and standard deviation)—an assumption shown to be invalid (Baldi and Nasr, 2010). Here, we introduce two methods that improve early recognition of actives by exploiting similarity information of all molecules. The first method ranks a compound by its highest z-score instead of its highest Tanimoto similarity, and the second by an aggregated score calculated from its Tanimoto similarity values to all known actives and inactives (or a large number of structurally diverse molecules when information on inactives is unavailable). Our evaluations, which use datasets of over 20 HTS campaigns downloaded from PubChem, indicate that compared to the conventional approach, both methods achieve a ~10% higher Boltzmann-enhanced discrimination of receiver operating characteristic (BEDROC) score—a metric of early recognition. Given the increasing use of virtual screening in early lead discovery, these methods provide straightforward means to enhance early recognition.

Introduction

Lead discovery by high throughput screening (HTS) is often described as a process akin to finding a needle in a haystack (Aherne et al., 2002). Given the significant achievements in automation, major pharmaceutical companies now routinely screen hundreds of thousands of samples to identify compounds that are active against specific drug targets. However, the number of chemicals available for bioactivity testing has increased exponentially over the past decade. For instance, as of 2015, the number of structurally unique chemicals registered in PubChem was more than 60 million (Kim et al., 2016), and in 2018, the total number of organic and inorganic substances disclosed in the literature was estimated to be 154 million1. Thus, despite the increased screening capacity, it remains impractical to assay a significant fraction of all available chemicals. Consequently, virtual screening is becoming increasingly important to prioritize and select compounds (Kar and Roy, 2013). The most widely used virtual screening methods are based on molecular similarity searches (Kristensen et al., 2013). These approaches typically rank molecules in a chemical library based on their structural similarity to a set of molecules known to be active against a desired target. Chemicals ranked high on the list can then be acquired and tested for the desired activity or property.

The most commonly used metric to compare the performance of different virtual screening methods is the area under the receiver operating characteristic curve (ROC_AUC) (Triballeau et al., 2005). This is useful for comparing overall performance of methods for ranking a database (Truchon and Bayly, 2007; Zhao et al., 2009). However, the ROC_AUC is inappropriate for virtual screening when the goal is to create a smaller subset enriched with the maximum number of actives (Truchon and Bayly, 2007). The distinction is critical, especially when the chemical libraries are large and only a small fraction of compounds can be tested. Truchon and Bayly (2007) illustrated the difference using three basic cases: (1) half of the actives ranked at the top of a rank-ordered list and the other half at the bottom; (2) all actives randomly distributed across ranks; and (3) all actives ranked in the middle of the list. In all three cases, the ROC_AUC value is 0.5 and, therefore, according to this metric, all three virtual screening methods that generated the three rank-ordered lists perform equally. However, because only a small fraction of chemicals in a large library can be tested, “early recognition” of actives is practically important. That is, case 1 is preferable to case 2 or 3, and case 2 could be considered more desirable than case 3.

Many metrics have been proposed to address early recognition. Examples include the partial area under the ROC curve (McClish, 1989), enrichment factor (Halgren et al., 2004), area under the accumulation curve (Kairys et al., 2006), robust initial enhancement (Sheridan et al., 2001), Boltzmann-enhanced discrimination of the receiver operating characteristic (BEDROC) (Truchon and Bayly, 2007), and predictiveness curve (Empereur-Mot et al., 2015). Although no metric is perfect, perhaps the most frequently adopted is BEDROC, which employs an adjustable parameter, α, to define “early detection.” Truchon and Bayly suggest setting this parameter to 20.0, which dictates that 80% of the maximum contribution to BEDROC comes from the top 8% of the ranked list. A comparatively higher BEDROC score between two virtual screening methods indicates an enhanced ability to enrich the list of top-ranking compounds with active molecules.

Using both AUC_ROC and BEDROC, Nasr et al. (2009) carried out a large-scale study of the performance of 14 similarity search methods, including eight parameter-free methods (no parameters to be learned from training data) and six with one or two parameters to be learned from training data. Consistent with previous results, they found that the best parameter-free method is the Max-Sim method, which ranks molecules based on their maximum Tanimoto coefficient (TC, also commonly referred to as Tanimoto similarity) to the active query molecules. Among the six methods that require parameters to be fit to the data, the exponential Tanimoto discriminant (ETD) method was the best performer overall. This method is defined by the following equations.

Here, S(B) denotes the aggregated score for molecule B, m, and n, respectively denote the numbers of active and inactive query molecules, Ai denotes the ith active query molecule, Ij denotes the jth inactive molecule, TCAB denotes the TC between molecules A and B, λ, and k denote parameters to be learned from the data. The higher the aggregated score, the more likely it is that molecule B is active. Nasr et al. (2009) provided neither recommended default parameter values for λ and k, nor values learned from any of their datasets.

In this article, we introduce two parameter-free similarity search methods that improve the early recognition of actives over the Max-Sim method. Using HTS data, we demonstrate that, on average, the BEDROC values derived from both methods are about 10% higher than those derived from the Max-Sim scoring method.

Methods and Materials

Rank by Z-Scores

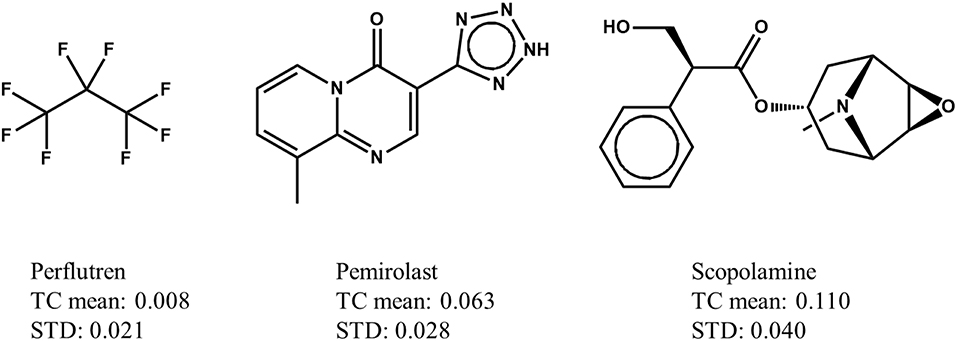

In a Max-Sim similarity search, we first calculate all TCs between the compounds in a chemical library and active query molecules. The library compounds are then ranked based on their highest TCs. The underlying assumption is that the higher the TC, the more likely a compound is to be active. This assumption is valid for searches with a single active query molecule, and for searches with multiple active query molecules if the distributions of TCs are identical (i.e., have the same mean and standard deviation irrespective of the query molecules). Although it has been standard practice for many years to conduct Max-Sim similarity searches, no study had examined the statistical distribution of TCs until 2010, when Baldi and Nasr (2010) investigated in detail the significance of Tanimoto similarity. They showed that the statistical distribution of TCs is not invariant, but depends on the number of fingerprint features present in a query molecule. This finding and its implications, however, are largely overlooked by the cheminformatics community, perhaps due to the reasonably good performance of the Max-Sim method and the extremely low mean TCs for any query molecule. As an example, Figure 1 shows the means and standard deviations of the TCs of 10,000 chemicals randomly selected from the U.S. National Cancer Institute (NCI) chemical library calculated with respect to each of three drugs approved by the U.S. Food and Drug Administration. All of the means and standard deviations are close to zero, suggesting that most NCI compounds do not have the same activity as that of the approved drugs. The small mean TCs may obscure an important fact—that the values are not identical and could be significantly different. For instance, the mean TC of scopolamine is 43% higher than that of pemirolast. To appreciate the implications of the difference, let us assume that a molecule has TCs of 0.80 and 0.70 calculated with respect to scopolamine and pemirolast, respectively. Based on the Max-Sim method, one would expect the molecule to have activities more similar to those of scopolamine. However, because of the difference in the means and standard deviations, the z-scores of the molecule calculated with respect to scopolamine and pemirolast are 17.3 and 22.8, respectively, suggesting that the molecule is more likely to have activities similar to those of pemirolast than to those of scopolamine. If we consider that there are differences in mean TCs and standard deviations, then ranking molecules by the maximum z-score is statistically preferable in a similarity search. Accordingly, we designate this approach as the maxZ method.

Figure 1. Examples of means and standard deviations (STD) of the Tanimoto coefficients (TCs) of 10,000 compounds randomly selected from the National Cancer Institute's virtual screening library calculated with respect to three drugs approved by the Food and Drug Administration.

Rank by Aggregated Similarity

In the past two decades, HTS has contributed to the discovery of numerous structurally novel active compounds against many important drug targets. As these compounds are identified from large experimentally tested screening libraries, they are classified as either active or inactive based on predefined activity criteria. In follow-up studies based on virtual screening by similarity search methods, only active compounds are used as queries. As noted in the Introduction, Nasr et al. (2009) developed the ETD method, which exploits information of both active and inactive compounds. They found that ETD performed best among 14 parameterized and non-parameterized TC-based similarity search methods. An undesirable feature of this method, however, is that it requires two parameters that may not be universally applicable, but still need to be fit for each individual dataset. Here, we propose an aggregated similarity (AS) method that does not require any parameter fitting based on individual datasets. We define the AS method by the following equation:

where, X denotes a compound in a chemical library, m and n, respectively denote the number of active and inactive molecules, TC(Ai, X) denotes the TC between the ith active molecule and X, TC(Ij, X) denotes the TC between the jth inactive molecule and X, and α is set to 10−6—a small number to avoid division by zero when TC equals zero. Possible AS(X) values range from zero to infinity, where zero indicates that molecule X shares no fingerprint features with any of the active query molecules, i.e., all TC(Ai, X) = 0, and infinity indicates that molecule X shares no fingerprint features with any of the inactive query molecules. In reality, because the number of inactive molecules is large (i.e., a positive is like a needle in a haystack and, therefore, most molecules can be classified as inactives), the probability of X sharing no fingerprint features with any of the inactive query molecules is zero, unless a very small number of inactive query molecules is used (even though a large number of them should be available).

One problem with using information on inactive compounds is that the results of large-scale screening campaigns are not equally reliable for active and inactive compounds. This is because such campaigns are typically executed in multiple confirmatory steps focusing on active compounds. The first step involves an initial primary screening of a large number of samples at a single concentration with few or no replicates. Samples deemed to meet the primary activity criteria are then selected and retested in multiple replicates, usually with counter-assays to affirm activity. Samples that satisfy the retesting criteria may be further tested at multiple concentrations to determine potency. One consequence of this screening protocol is that the activities of a positive compound are more reliable because they are reassessed in multiple tests, whereas compounds fail to meet primary active criteria are not retested to confirm inactivity. As a result, the set of inactive molecules is likely to contain false negatives. A more obvious problem with the AS method is that it cannot be applied to cases where information on inactives is unavailable. As a means to overcome this challenge, we suggest that a set of structurally diverse compounds can be used as putative inactive compounds. This is because compounds that are truly active against the most valuable drug targets are rare (i.e., needles in a haystack). Therefore, within a structurally diverse set of compounds, the number of compounds that are active against a drug target should be small. Here, we tested the validity of this hypothesis by using 10,000 structurally diverse compounds as putative inactives. We selected these compounds by clustering ~275,000 compounds of the NCI virtual screening library (Shiryaev et al., 2011) into 10,000 clusters based on the TC (a measure of molecular similarity), and selecting the cluster centers as structurally diverse compounds to represent coverage of the chemical space of the full dataset. In doing so, we considered a singleton as a cluster of size one.

Datasets

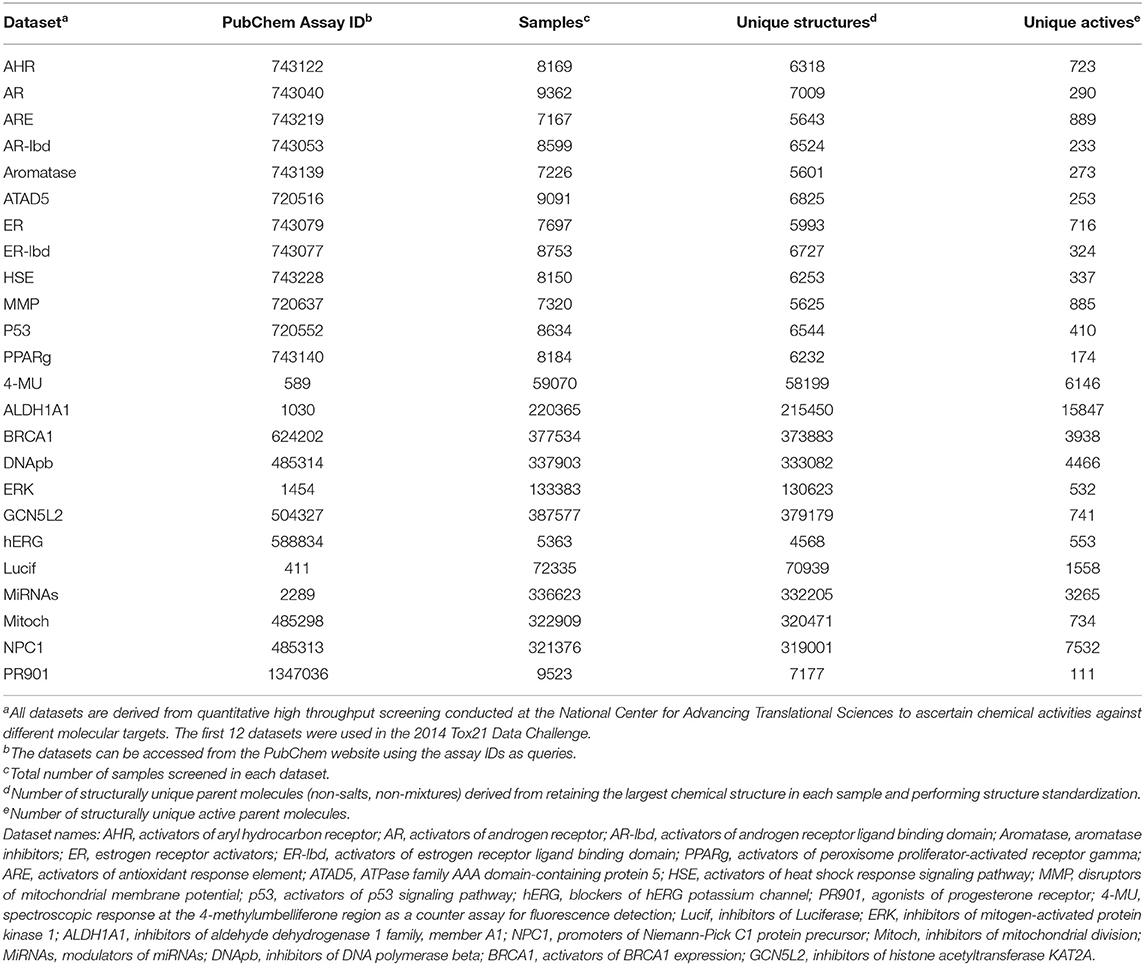

We evaluated the performance of the similarity search methods using HTS data generated from the National Center for Advancing Translational Sciences of the National Institutes of Health. We downloaded the data in two batches. The first batch consisted of the results of ~8,000 samples screened against 10 toxicity-related targets using 12 different assays, with two different assays deployed for two of the 10 targets. Thus, roughly the same 8,000 samples were tested in 12 assays, generating 12 molecular activity datasets. As these datasets were used in the Tox21 Data Challenge for molecular activity predictions (Huang and Xia, 2017), we downloaded them from Tox21 Data Challenge web site2. Because the datasets were relatively small (consisting of ~7,000 structurally unique compounds), we used them to evaluate maxZ scoring methods based on two-dimensional (2-D) molecular fingerprints and three-dimensional (3-D) molecular shapes.

A library consisting of 8,000 samples can hardly be considered a “large” library for HTS. Therefore, we used a second batch of data that consisted of results for a few thousand to a few hundred thousand samples screened against 12 different molecular targets. We downloaded these data from the PubChem web site (https://pubchem.ncbi.nlm.nih.gov/) using their assay IDs as queries. Table 1 shows the assay IDs of these datasets together with the Tox21 Challenge datasets. Details of the datasets, including the molecular targets, specific assays, number of samples screened, and number of samples deemed active, can be found from PubChem using the respective assay IDs as queries.

Because some samples were prepared from the same parent chemicals, we first cleaned the data before using them to evaluate the performance of the similarity search methods. We first removed counter-ions in salts and retained the largest component in samples consisting of non-bonded (i.e., disconnected) components. We then standardized the structures by neutralizing acids and bases (protonating acids and de-protonating bases) and generating a canonical SMILES from the standardized structure for each sample. For the results of each dataset, we applied a first-pass filter on canonical SMILES and retained only the first sample entry of a structurally unique parent compound. Table 1 summarizes the resulting number of structurally unique parent compounds tested and the number of structurally unique actives from each assay.

In addition to the 24 HTS datasets, we also evaluated performance of the methods on 40 datasets in the Directory of Useful Decoys (DUD) (Huang et al., 2006) and an enhanced version of DUD consisting of 102 datasets called DUDE datasets (Mysinger et al., 2012). Each of these datasets consists of compounds known active on a protein target and many compounds of similar physicochemical properties as the actives but of very different molecular structures as the actives. These datasets are designed for evaluating the performance of docking-based virtual screening methods. We expect them to be less challenging than the HTS datasets for 2-D molecular similarity search methods, because in these datasets the actives and decoys are well-separated in molecular structure space and, therefore, any fingerprint-based similarity search methods are expected to perform well on these datasets.

Evaluation Protocol

To evaluate the performance of the methods, for each dataset we randomly selected 100 actives as the queries, and combined the other actives with the other compounds tested. We then calculated the maximum TC for each of these compounds with respect to the queries, as well as the maximum z-score and AS score. For these calculations, we used the extended connectivity fingerprint (Rogers and Hahn, 2010) with a maximum diameter of four chemical bonds (ECFP_4) and a fixed fingerprint length of 2,048 bits. We calculated ROC_AUC and BEDROC values for the Max-Sim, maxZ, and AS methods. For all BEDROC calculations, we used the default parameter setting of α = 20.0, i.e., corresponding to 80% of the maximum contribution to BEDROC coming from the top 8% of the list of ranked molecules. To ensure statistical significance of the findings, we repeated the calculations nine times, using 100 randomly selected actives as queries each time. We compared the performance of the methods based on the resulting mean ROC_AUC and BEDROC values.

Results

Performance of the maxZ Method

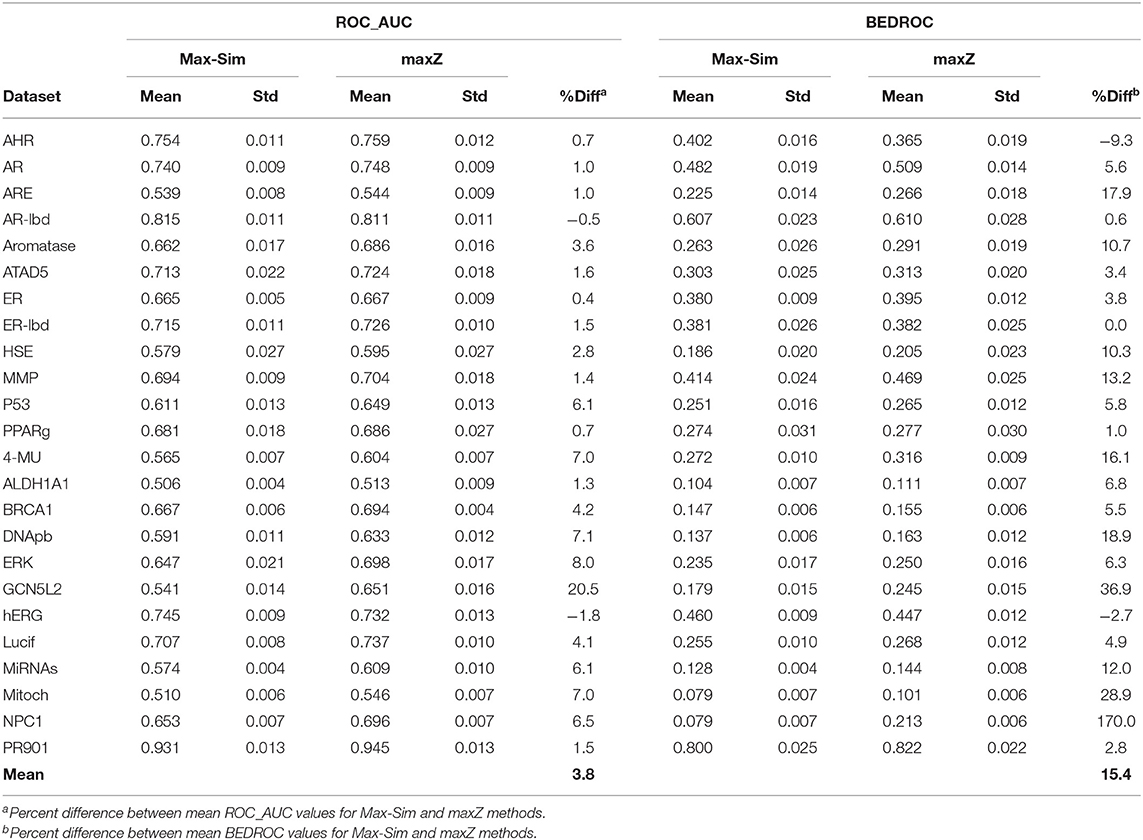

Table 2 shows a summary of the mean ROC_AUC and BEDROC values derived from the Max-Sim and maxZ methods for the 24 datasets. The mean ROC_AUC values derived from the Max-Sim method and those derived from the maxZ method were similar, with the latter only 3.7% higher than the former. In contrast, the mean difference in BEDROC values between the maxZ and Max-Sim methods was as high as 15%. However, the result for one dataset, NPC1, was an outlier, as the difference was as high as 170%, and the mean difference in BEDROC values decreased to 8.7% when it was excluded. Nonetheless, the maxZ method still outperformed the Max-Sim method, as the ROC_AUC and BEDROC values derived from maxZ were smaller than those derived from Max-Sim in only two of the 24 datasets. Although the differences between the maxZ and Max-Sim results were small for some datasets, for those showing a considerable difference, maxZ performed significantly better. For instance, the ROC_AUC values derived from maxZ were at least 5% higher than those derived from Max-Sim in 8 of the 24 datasets, whereas Max-Sim performed better than maxZ by 5% or more in only two datasets. This difference was even more pronounced for BEDROC values, as maxZ outperformed Max-Sim by 5% or more in 15 of the 24 datasets, whereas the opposite was true in only one dataset. Overall, the ROC_AUC values show that the maxZ method performs only slightly better than the Max-Sim method for ranking all samples in the dataset, whereas the BEDROC values indicate that the maxZ method performs markedly better than the Max-Sim method in the early recognition of active compounds.

Table 2. Mean and standard deviation of ROC_AUC and BEDROC values derived from a similarity search using the rank by maximum similarity (Max-Sim) and maximum z-score (maxZ) approaches over 10 runs, each with 100 randomly selected actives as queries.

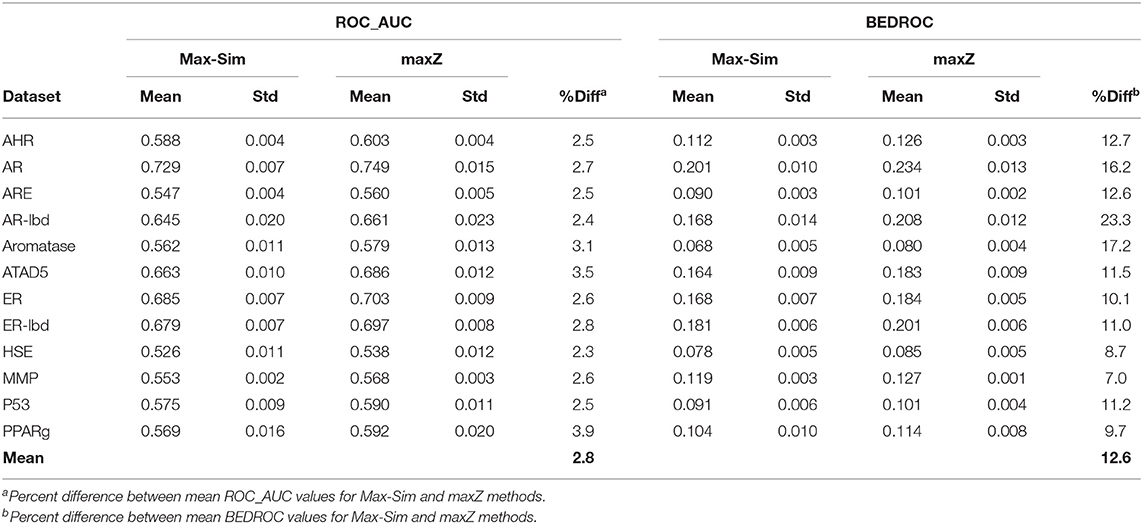

A popular 3-D equivalent of 2-D fingerprint-based molecular similarity search is the Rapid Overlay of Chemical Structures (ROCS) method (OpenEye Scientific Software, Santa Fe, NM) (Fontaine et al., 2007), which calculates the Tanimoto similarity between 3-D molecular shapes and pharmacophore features. Because of the similarity between 2-D fingerprint-based and 3-D ROCS-based similarity searches, we hypothesized that the maxZ method would also improve early recognition for ROCS-based 3-D similarity searches. To test this hypothesis, we generated up to 15 low-energy conformers for each molecule in the 12 datasets used in the 2014 Tox21 Data Challenge, using Omega version 3.0.1.2 (OpenEye Scientific Software) with default parameters (Hawkins et al., 2010). We then conducted ROCS-based similarity searches for each dataset using randomly selected 10% actives as active queries. Each query molecule was represented by up to 15 of its lowest-energy conformers. We calculated the Tanimoto combo similarity (commonly called the combo score, which is the sum of the shape TC and color force field TCs) pairwise between the conformers of each active query and each conformer of the other compounds using ROCS version 3.2.2.2 with default parameters. The maximum Tanimoto combo score between a query molecule and a non-query molecule is designated as the Tanimoto combo score of the non-query molecule. We then calculated the ROC_AUC and BEDROC values using the maximum Tanimoto combo scores and the maximum z-scores calculated from the Tanimoto combo scores. We repeated this calculation nine more times, each with 10% of the actives randomly selected as active query molecules. Table 3 shows the means and standard deviations of the ROC_AUC and BEDROC values. The results were similar to those of 2-D fingerprint-based similarity searches, i.e., the ROC_AUC values derived from the maxZ method were a few percentage points higher than those derived from the Max-Sim method, but the difference between BEDROC values was 12,6% on average. Thus, sorting the samples by the maximum z-values of the combo scores led to a substantial improvement in early recognition.

Table 3. Mean and standard deviation of ROC_AUC and BEDROC values derived from a ROCS-based 3-D molecular similarity search using the rank by maximum similarity (Max-Sim) and maximum z-score (maxZ) methods.

We evaluated the maxZ method for 3-D similarity search of the Tox21 Challenge datasets only, because the datasets were small (7,009 structurally unique compounds in the largest) and the computations could be completed within a reasonable amount of time. Most of the other datasets are much larger, with up to a few hundred thousand structurally unique compounds. We did not evaluate the performance of the maxZ method on these datasets, because the ROCS calculations would have required substantially more computing resources.

Performance of the AS Method

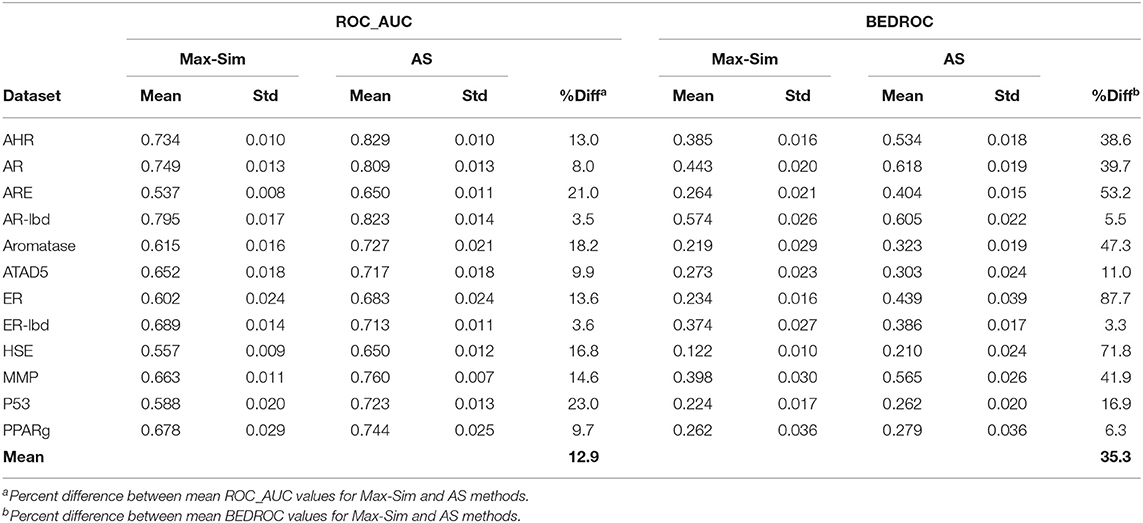

Table 4 shows the ROC_AUC and BEDROC values calculated from the Max-Sim and AS methods for the 12 Tox21 Challenges datasets. We calculated the AS score using a negative set (inactives) of 1,000 randomly selected compounds from the set of all screening negatives. We used the rest of the actives and inactives in each dataset as test data to evaluate the performance of each similarity search method. Both ROC_AUC and BEDROC values calculated from AS scores were significantly higher than the corresponding values obtained using the Max-Sim method, confirming that exploiting the available information on inactives improves the performance of both virtual screening methods. Note that the improvement of BEDROC values is significantly greater than that of ROC_AUC values, suggesting that the performance gains are mainly due to early recognition of actives in the AS method.

Table 4. Mean and standard deviation of ROC_AUC and BEDROC values derived from a fingerprint-based similarity search using the rank by maximum similarity (Max-Sim) and rank by aggregated score (AS) methods.

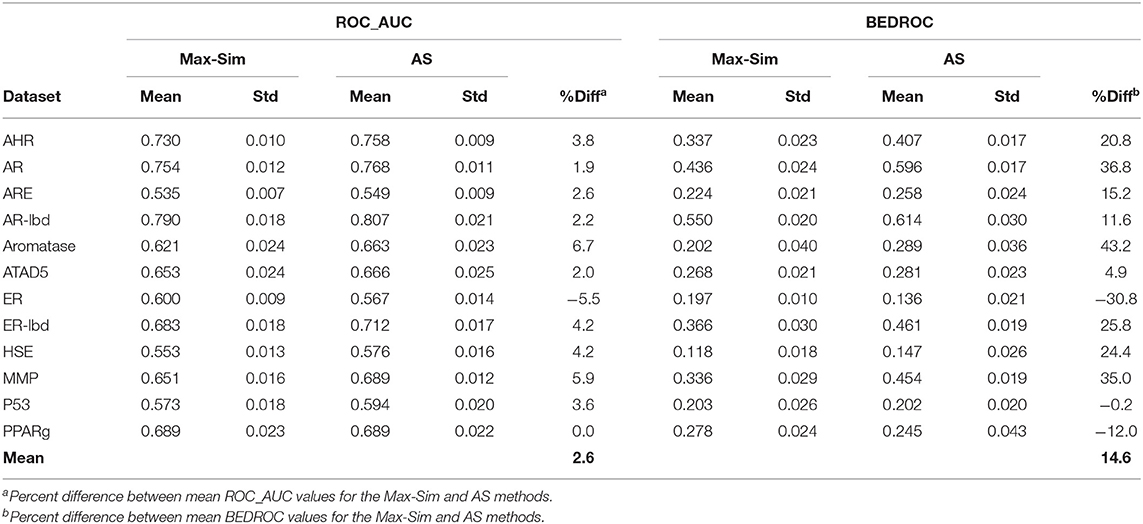

As noted in section Rank by Aggregated Similarity, because drug discovery involves rigorous confirmation of the activities of actives, but rarely any investments in efforts to confirm inactivity, information on inactive compounds is usually less reliable than that on active compounds. In addition, for some projects, active queries are not derived from screening of chemical libraries and, hence, there is no information on inactive compounds. However, because the number of compounds that are active against any drug target can be assumed to be miniscule compared to the number of all available compounds, we hypothesized that a large number of structurally diverse compounds should be able to serve as putative inactive compounds for the AS method. To test this hypothesis, we repeated the evaluation above, using 10,000 structurally diverse compounds selected from the NCI library. Table 5 shows that the replacement of inactive compounds by structurally diverse compounds led to a significant performance deterioration of the AS method, especially in terms of ROC_AUC values, which were only 2.6% higher on average than those of the Max-Sim method. However, the overall mean BEDROC value was still 14.6% higher than that of the Max-Sim method, indicating that early recognition improved even when inactive compounds from screening were unavailable.

Table 5. Mean and standard deviation of ROC_AUC and BEDROC values derived from a fingerprint-based similarity search using the rank by maximum similarity (Max-Sim) and rank by aggregated score (AS) methods, using 10,000 structurally diverse compounds as inactive compounds.

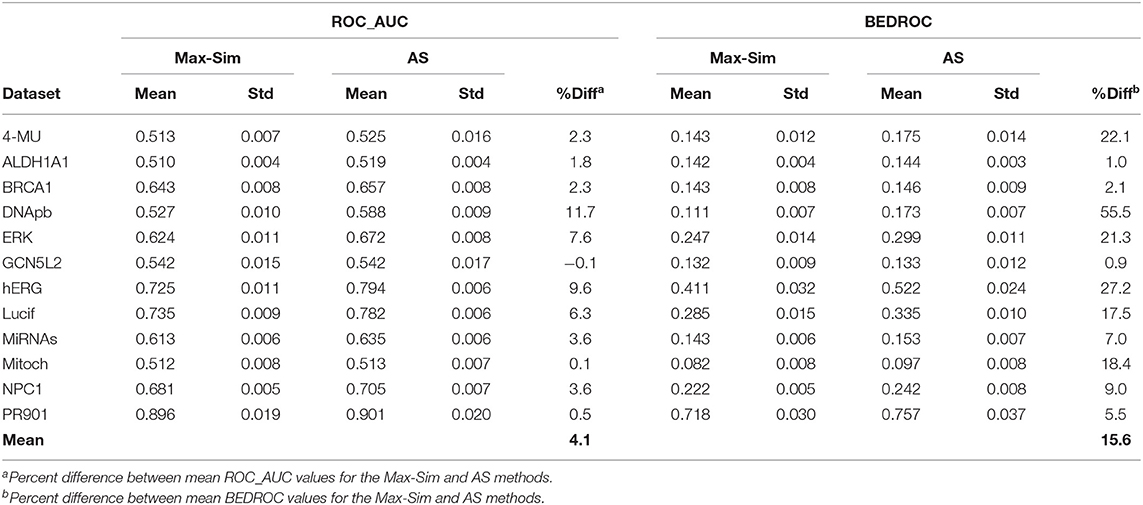

To assess the validity of the findings on the 12 Tox21 Challenge datasets for a wider range of datasets with the number of chemicals ranging from a few thousand to few hundred thousand, we conducted virtual screening using the AS method and the same 10,000 structurally diverse NCI compounds as putative inactive compounds. The results were comparable to those obtained from the Tox21 Challenge datasets, indicating that the method is applicable to a wide range of HTS datasets (Table 6).

Table 6. Mean and standard deviation of ROC_AUC and BEDROC values derived from a fingerprint-based similarity search using the rank by maximum similarity (Max-Sim) and rank by aggregated score (AS) methods, using 10,000 structurally diverse compounds as inactive compounds.

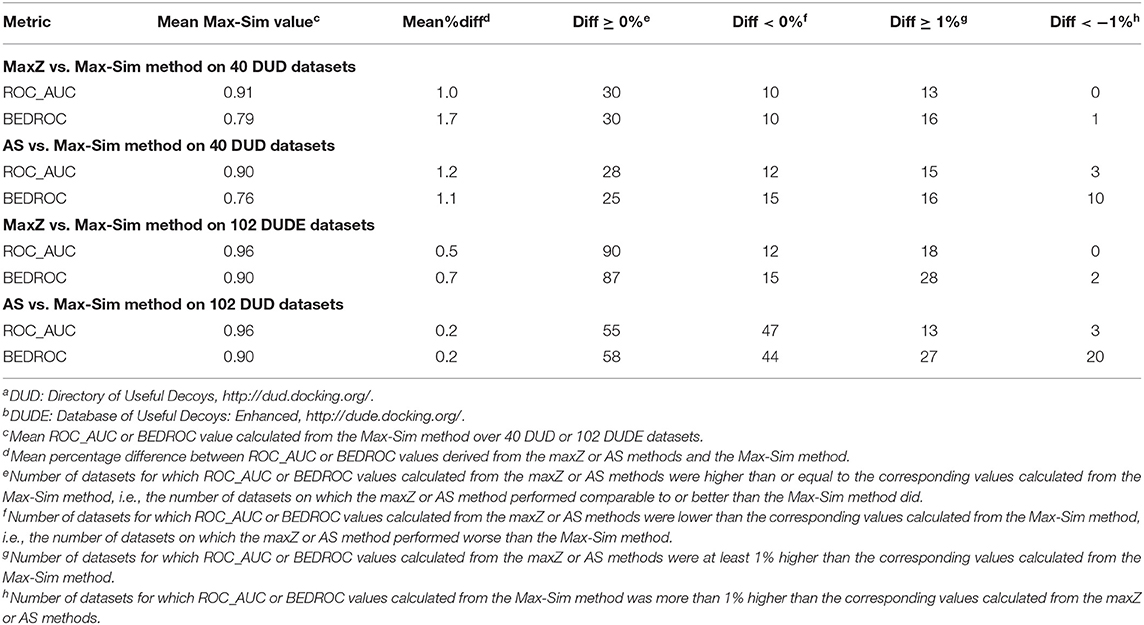

Performance of the maxZ and AS Methods on the DUD and DUDE Datasets

Because most DUD and DUDE datasets contain <100 actives, we performed evaluations on these datasets by randomly selecting 10% of the actives as queries. We used the remaining actives and all decoys as test sets to evaluate the performance of the methods. As these datasets do not contain any experimentally determined inactives, we used the same set of 10,000 structurally diverse NCI compounds as putative inactives in testing the performance of the AS method. Table S1 shows detailed results obtained from the 40 DUD and 102 DUDE datasets and Table 7 summarizes these results.

The most obvious difference between the summary results in Table 7 and the results in Table 2 was that the ROC_AUC and BEDROC values of the HTS datasets derived from the Max-Sim method were significantly lower than the corresponding values of the DUD and DUDE datasets. The mean ROC_AUC values of the DUD and DUDE datasets were 0.90 and 0.96, respectively, and the corresponding mean BEDROC values were 0.76 and 0.90. These values were significantly higher than the corresponding mean ROC_AUC and BEDROC values of 0.66 and 0.29 for the 24 HTS datasets. These results corroborate our expectation that, because the actives and decoys are well-separated in molecular structure space, the DUD and DUDE datasets present much less of a challenge than do the HTS datasets for similarity search methods. Because the Max-Sim method achieved near perfect performance for these datasets, as indicated by an average ROC_AUC value of 0.96 and an average BEDROC value of 0.90 for the DUDE datasets, any improvement beyond the Max-Sim results will necessarily be small given the little room left for improvement. Indeed, Table 7 shows that on average, the ROC_AUC or BEDROC values derived from the maxZ or AS method were only about 1% higher and <1% higher than the corresponding values of the Max-Sim method for the DUD and DUDE datasets, respectively. Nevertheless, Table 7 shows that the number of datasets for which the maxZ and AS methods performed better than the Max-Sim method by more than 1% was significantly higher than that for which the Max-Sim method performed better by more than 1%. Thus, for these datasets, the maxS and AS methods still outperformed the Max-Sim method (albeit with a smaller effect size) even though the Max-Sim method already achieved near perfect performance.

Discussion

Fingerprint-based molecular similarity search is one of the most important tools for virtual screening of large chemical libraries. Over the years, many similarity search methods have been investigated, but the simple, parameter-free, rank-by-maximum Tanimoto similarity approach remains a popular method. It achieves robust performance based on the Tanimoto similarity of each compound in a compound library to its closest query molecule and disregarding its similarity to all other query molecules. In addition, it compares the values of Tanimoto similarity to different query molecules directly. This is theoretically correct only if the distribution of similarity values to all other query molecules is identical, an assumption that has been shown to be invalid (Baldi and Nasr, 2010).

In this study, we proposed and evaluated two parameter-free similarity search methods. The AS method considers information on the similarity to all query molecules, whereas the maxZ method converts the Tanimoto similarity into a z-score for a statistically sound, direct comparison. The results of our evaluations using over 20 HTS datasets indicated that neither method achieved significantly higher ROC_AUC values over the standard Max-Sim method. However, BEDROC values derived from both methods were ~10% higher than those of the Max-Sim method. Thus, although our methods perform comparably to the standard similarity search method when judged by ranking all compounds in a screening library, they perform better on early recognition by placing more actives at the top of a ranked list. This is an important trait for virtual screening of large chemical libraries, considering that follow-up experimental testing is feasible for only a small fraction of chemicals.

A conventional similarity search calculates TCs between all query molecules and all library molecules, and these values are sufficient for converting TCs to z-scores. As such, the additional computational cost to perform a similarity search using the maxZ method is minimal. Conversely, the AS method is notably slower than the Max-Sim method. However, with the ever-increasing power and decreasing cost of computing hardware, the method can become competitive based on its performance. Thus, when early recognition is among the objectives of virtual screening, the two methods provide alternatives to the standard Max-Sim method.

Data Availability Statement

The raw data supporting the conclusions of this manuscript will be made available by the authors, without undue reservation, to any qualified researcher.

Author Contributions

All authors contributed to the conception of the ideas and planned computational evaluations. RL performed the evaluations and wrote the first draft of the manuscript. AW supervised the project and contributed to the final manuscript.

Funding

The research was supported by the U.S. Army Medical Research and Development Command (Ft. Detrick, MD) as part of the U.S. Army's Network Science Initiative, and by the Defense Threat Reduction Agency (Grant No. CBCall14-CBS-05-2-0007).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The authors gratefully acknowledge the assistance of Dr. Tatsuya Oyama in editing the manuscript. The opinions and assertions contained herein are the private views of the authors and are not to be construed as official or as reflecting the views of the U.S. Army, the U.S. Department of Defense, or The Henry M. Jackson Foundation for Advancement of Military Medicine, Inc.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fchem.2019.00701/full#supplementary-material

Footnotes

1. ^https://www.cas.org/about/cas-content (accessed July 5, 2019).

2. ^https://tripod.nih.gov/tox21/challenge/data.jsp (accessed July 5, 2019).

References

Aherne, G. W., McDonald, E., and Workman, P. (2002). Finding the needle in the haystack: why high-throughput screening is good for your health. Breast Cancer Res. 4, 148–154. doi: 10.1186/bcr440

Baldi, P., and Nasr, R. (2010). When is chemical similarity significant? The statistical distribution of chemical similarity scores and its extreme values. J. Chem. Inf. Model. 50, 1205–1222. doi: 10.1021/ci100010v

Empereur-Mot, C., Guillemain, H., Latouche, A., Zagury, J. F., Viallon, V., and Montes, M. (2015). Predictiveness curves in virtual screening. J. Cheminform. 7:52. doi: 10.1186/s13321-015-0100-8

Fontaine, F., Bolton, E., Borodina, Y., and Bryant, S. H. (2007). Fast 3D shape screening of large chemical databases through alignment-recycling. Chem. Cent. J. 1:12. doi: 10.1186/1752-153X-1-12

Halgren, T. A., Murphy, R. B., Friesner, R. A., Beard, H. S., Frye, L. L., Pollard, W. T., et al. (2004). Glide: a new approach for rapid, accurate docking and scoring. 2. Enrichment factors in database screening. J. Med. Chem. 47, 1750–1759. doi: 10.1021/jm030644s

Hawkins, P. C., Skillman, A. G., Warren, G. L., Ellingson, B. A., and Stahl, M. T. (2010). Conformer generation with OMEGA: algorithm and validation using high quality structures from the Protein Databank and Cambridge Structural Database. J. Chem. Inf. Model. 50, 572–584. doi: 10.1021/ci100031x

Huang, N., Shoichet, B. K., and Irwin, J. J. (2006). Benchmarking sets for molecular docking. J. Med. Chem. 49, 6789–6801. doi: 10.1021/jm0608356

Huang, R., and Xia, M. (2017). Editorial: Tox21 Challenge to build predictive models of nuclear receptor and stress response pathways as mediated by exposure to environmental toxicants and drugs. Front. Environ. Sci. 5:3. doi: 10.3389/fenvs.2017.00003

Kairys, V., Fernandes, M. X., and Gilson, M. K. (2006). Screening drug-like compounds by docking to homology models: a systematic study. J. Chem. Inf. Model. 46, 365–379. doi: 10.1021/ci050238c

Kar, S., and Roy, K. (2013). How far can virtual screening take us in drug discovery? Expert Opin. Drug Discov. 8, 245–261. doi: 10.1517/17460441.2013.761204

Kim, S., Thiessen, P. A., Bolton, E. E., Chen, J., Fu, G., Gindulyte, A., et al. (2016). PubChem substance and compound databases. Nucleic Acids Res. 44, D1202–D1213. doi: 10.1093/nar/gkv951

Kristensen, T. G., Nielsen, J., and Pedersen, C. N. (2013). Methods for similarity-based virtual screening. Comput. Struct. Biotechnol. J. 5:e201302009. doi: 10.5936/csbj.201302009

McClish, D. K. (1989). Analyzing a portion of the ROC curve. Med. Decis. Making 9, 190–195. doi: 10.1177/0272989X8900900307

Mysinger, M. M., Carchia, M., Irwin, J. J., and Shoichet, B. K. (2012). Directory of useful decoys, enhanced (DUD-E): better ligands and decoys for better benchmarking. J. Med. Chem. 55, 6582–6594. doi: 10.1021/jm300687e

Nasr, R. J., Swamidass, S. J., and Baldi, P. F. (2009). Large scale study of multiple-molecule queries. J. Cheminform. 1:7. doi: 10.1186/1758-2946-1-7

Rogers, D., and Hahn, M. (2010). Extended-connectivity fingerprints. J. Chem. Inf. Model. 50, 742–754. doi: 10.1021/ci100050t

Sheridan, R. P., Singh, S. B., Fluder, E. M., and Kearsley, S. K. (2001). Protocols for bridging the peptide to nonpeptide gap in topological similarity searches. J. Chem. Inf. Comput. Sci. 41, 1395–1406. doi: 10.1021/ci0100144

Shiryaev, S. A., Cheltsov, A. V., Gawlik, K., Ratnikov, B. I., and Strongin, A. Y. (2011). Virtual ligand screening of the National Cancer Institute (NCI) compound library leads to the allosteric inhibitory scaffolds of the West Nile Virus NS3 proteinase. Assay Drug Dev. Technol. 9, 69–78. doi: 10.1089/adt.2010.0309

Triballeau, N., Acher, F., Brabet, I., Pin, J. P., and Bertrand, H. O. (2005). Virtual screening workflow development guided by the “receiver operating characteristic” curve approach. Application to high-throughput docking on metabotropic glutamate receptor subtype 4. J. Med. Chem. 48, 2534–2547. doi: 10.1021/jm049092j

Truchon, J. F., and Bayly, C. I. (2007). Evaluating virtual screening methods: good and bad metrics for the “early recognition” problem. J. Chem. Inf. Model. 47, 488–508. doi: 10.1021/ci600426e

Keywords: lead discovery, virtual screening, early recognition, Tanimoto similarity, z-score, BEDROC, ROCS

Citation: Liu R, AbdulHameed MDM and Wallqvist A (2019) Teaching an Old Dog New Tricks: Strategies That Improve Early Recognition in Similarity-Based Virtual Screening. Front. Chem. 7:701. doi: 10.3389/fchem.2019.00701

Received: 26 July 2019; Accepted: 08 October 2019;

Published: 23 October 2019.

Edited by:

Jose L. Medina-Franco, National Autonomous University of Mexico, MexicoReviewed by:

Olivier Sperandio, Institut National de la Santé et de la Recherche Médicale (INSERM), FranceEva Nittinger, Universität Hamburg, Germany

Copyright © 2019 Liu, AbdulHameed and Wallqvist. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Ruifeng Liu, rliu@bhsai.org; Anders Wallqvist, sven.a.wallqvist.civ@mail.mil

Ruifeng Liu

Ruifeng Liu Mohamed Diwan M. AbdulHameed

Mohamed Diwan M. AbdulHameed Anders Wallqvist

Anders Wallqvist