Orbital and eyelid diseases: The next breakthrough in artificial intelligence?

- 1Department of Ophthalmology, Second Hospital of Jilin University, Changchun, China

- 2Department of Engineering, The Army Engineering University of PLA, Nanjing, China

- 3The Eye Hospital, School of Ophthalmology & Optometry, Wenzhou Medical University, Wenzhou, China

Orbital and eyelid disorders affect normal visual functions and facial appearance, and precise oculoplastic and reconstructive surgeries are crucial. Artificial intelligence (AI) network models exhibit a remarkable ability to analyze large sets of medical images to locate lesions. Currently, AI-based technology can automatically diagnose and grade orbital and eyelid diseases, such as thyroid-associated ophthalmopathy (TAO), as well as measure eyelid morphological parameters based on external ocular photographs to assist surgical strategies. The various types of imaging data for orbital and eyelid diseases provide a large amount of training data for network models, which might be the next breakthrough in AI-related research. This paper retrospectively summarizes different imaging data aspects addressed in AI-related research on orbital and eyelid diseases, and discusses the advantages and limitations of this research field.

Introduction

Artificial intelligence (AI) simulates and extends human intelligence and has been hailed as “the Future of Employment” (Yang et al., 2021). In the mid-twentieth century, the British scientist Alan Turing first predicted that machines could become intelligent (Li et al., 2019), and in 1956, McCarthy introduced the term “AI” at the Dartmouth Conference (Dzobo et al., 2020). At the time, “AI” was realized as a static computer program that controlled a machine, which is unlike the AI we currently know (Mintz and Brodie, 2019). In 1959, Samuel developed the theory of AI and proposed “machine learning (ML)” (Finlayson et al., 2019), which denotes the capability of a computer to learn by itself without explicit program instructions (Nichols et al., 2019). In ML, large amounts of data are analyzed to make predictions on real-world events using supervised and unsupervised algorithms. ML has spawned variants such as conventional machine learning (CML) and deep learning (DL) (Brehar et al., 2020; Ye et al., 2020). DL has exhibited a remarkable ability to analyze high-dimensional data with multiple processing layers, gradually becoming the mainstream of ML modeling (Finlayson et al., 2019). In particular, DL-based technologies display excellent abilities to extract image features and associate various types of data, and they play an active role in the automatic recognition of image, sound, and text data (Cai et al., 2020). Ting et al. (2017) reported that AI could automatically diagnose diabetic retinopathy from more than 100,000 retinal photographs. In recent years, DL has gradually become a new tool in the automatic diagnosis of glaucoma and cataracts (Lin et al., 2019; Wu et al., 2019; Wang et al., 2020a; Girard and Schmetterer, 2020). Some commercial software applications related to DL are used to assist in the diagnosis of retinal diseases in clinical practice (van der Heijden et al., 2018; Girard and Schmetterer, 2020).

Imaging data, including orbital computed tomography (CT), orbital magnetic resonance imaging (MRI), and external ocular photographs, play a crucial role in the diagnosis and treatment of orbital and eyelid diseases (Bailey and Robinson, 2007; Abdullah et al., 2010). Currently, AI automatically diagnoses and grades some orbital and eyelid diseases, such as orbital blowout fractures and thyroid-associated ophthalmopathy (TAO) (Li et al., 2020; Song et al., 2021a). Automatic measurement of eyelid morphological parameters and automatic surgical decision-making based on AI technology are two recent research hotspots (Bahceci Simsek and Sirolu, 2021; Chen et al., 2021; Lou et al., 2021; Hung et al., 2022). Compared to traditional medical models, AI can rapidly analyze large sets of patient data, achieving healthcare cost savings and assisting in the construction of teleconsultation platforms (Bi et al., 2020). Automatic measurement of eyelid morphological parameters based on AI technology could reduce the errors introduced by manual measurement and thereby maintain objectivity and repeatability in patient data evaluation, which might make it a new tool in the assessment of oculoplastic surgery (Lou et al., 2021). However, because standard imaging data are scarce and the disease categories are imbalanced, ensuring efficient algorithm training is still a challenge. In addition, the development of methods for obtaining high-quality imaging data of orbital and eyelid diseases should also be considered.

In this paper, we comprehensively review the application of AI-based technology to the diagnosis and treatment of orbital and eyelid diseases by analyzing various types of image data. The advantages and limitations of AI in this field are also discussed to explore its potential targets in detecting and treating orbital and eyelid diseases.

What is artificial intelligence?

AI is a branch of computer science, in which “artificial” indicates that the systems are man-made and “intelligence” denotes features such as consciousness and thinking (Thrall et al., 2018). The major purpose of AI is to simulate human thinking processes by learning from existing experiences to solve problems that cannot be solved through traditional computer programming (Bischoff et al., 2019). ML is a subset of AI that has become the mainstream of AI technology (Shin et al., 2021). ML extracts and analyzes the features of input samples to classify new homogeneous samples (Totschnig, 2020). ML automatically improves and optimizes computer algorithms and programs by analyzing the data rather than relying on explicit program instructions (Nichols et al., 2019; Cho et al., 2021). Among the various ML models that have emerged, neural networks simulate the synaptic structure of human neurons and improve the computational ability of ML by adjusting the parameters of network models (Starke et al., 2021). Convolutional neural networks (CNNs), which have an encoding structure similar to that of visual cortical neurons (Hou et al., 2019), have become one of the most popular neural network models (Mintz and Brodie, 2019).

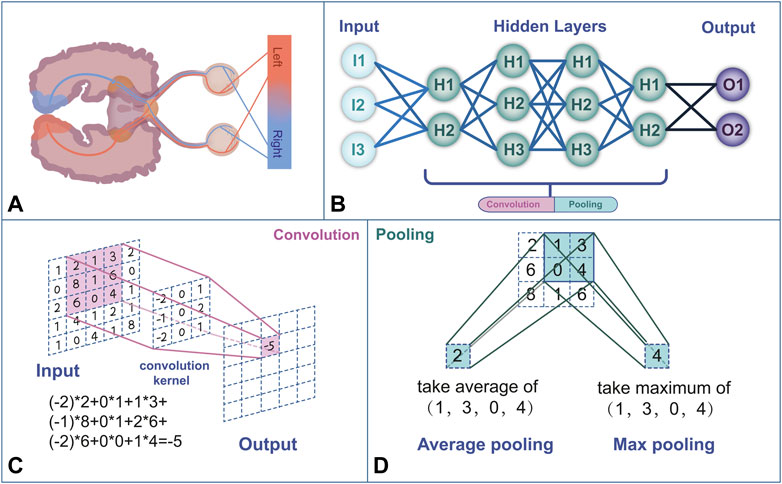

In human vision, each neuron in the visual cortex responds to stimulation by activating specific regions in the visual space that form the entire visual field (Figure 1) (Brachmann et al., 2017). Similarly, CNNs extract features from the input image and output a feature map using convolution and pooling operations (Le et al., 2020). A convolution layer consists of a set of two-dimensional numerical matrices that are also known as filters. The CNN obtains each pixel value of the output image by multiplying the values in the filter by the values of the corresponding pixels in the image and summing the products, that is, via a convolution operation (Brachmann et al., 2017). To reduce the size of the resulting feature map, the CNN then applies a pooling operation, which replaces each small neighborhood of values with a single summary value, such as the maximum or the average. By repeating the convolution and pooling operations and adjusting the filter values during training, the CNN progressively brings its output values closer to the human ratings (Larentzakis and Lygeros, 2021). New neural network models, such as UNet and ResNet, have been developed to overcome the difficulty of training CNNs with deep layers. These neural network models improve the framework of a CNN by modifying its depth, convolutional layers, or pooling layers. For example, while traditional CNN models can only classify images and output the label of an entire image, UNet can achieve pixel-level classification and output the class of each pixel, which makes it well-suited for image segmentation tasks (Yin et al., 2022). ResNet mitigates the gradient vanishing and gradient exploding problems of traditional CNNs by adding residual blocks (He et al., 2020).

FIGURE 1. (A) Human visual feedback pathway. (B) Neural network structure framework mimics the human neural network. (C) The convolution process performs a linear transformation at each position of the image and maps it to a new value. (D) Pooling is a computational process that reduces the data size. The commonly used pooling methods are max pooling and average pooling.
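The convolution and pooling operations described above can be illustrated with a minimal sketch in plain Python. The toy image, the 2x2 filter, and the function names `convolve2d` and `max_pool2d` are assumptions made for illustration only; they are not taken from any of the reviewed studies, and production CNNs rely on optimized libraries and learned filter values.

```python
from typing import List

Matrix = List[List[float]]


def convolve2d(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide the filter over the image (no padding, stride 1).

    As in most CNN libraries, the filter is not flipped, so this is
    strictly a cross-correlation.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            # Multiply each filter value by the pixel under it and sum the products.
            total = 0.0
            for i in range(kh):
                for j in range(kw):
                    total += kernel[i][j] * image[r + i][c + j]
            row.append(total)
        output.append(row)
    return output


def max_pool2d(feature_map: Matrix, size: int = 2) -> Matrix:
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    out_h = len(feature_map) // size
    out_w = len(feature_map[0]) // size
    return [
        [
            max(
                feature_map[r * size + i][c * size + j]
                for i in range(size)
                for j in range(size)
            )
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# Toy 5x5 "image" and a hypothetical 2x2 filter, chosen only for illustration.
image = [
    [1, 2, 3, 0, 1],
    [0, 1, 2, 3, 1],
    [1, 0, 1, 2, 0],
    [2, 1, 0, 1, 2],
    [1, 2, 1, 0, 1],
]
kernel = [[1, 0], [0, -1]]

feature_map = convolve2d(image, kernel)   # 4x4 feature map
pooled = max_pool2d(feature_map, size=2)  # 2x2 map after pooling
```

In this sketch the 5x5 input shrinks to a 4x4 feature map after convolution and to a 2x2 map after pooling, which is the size reduction the pooling step is meant to provide.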

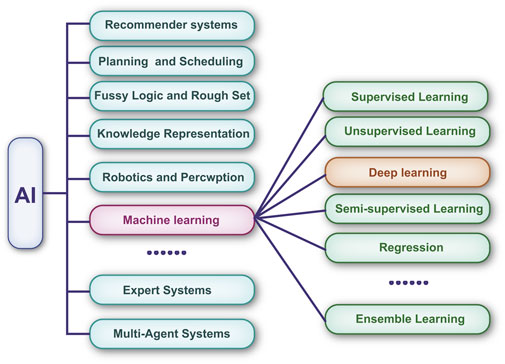

To process large amounts of data, multilayer neural networks have been cascaded to form DL algorithms (Kaluarachchi et al., 2021). Compared with traditional ML algorithms, DL has a greater ability to analyze large-scale matrix data (Jalali et al., 2021). The relationship between AI, ML, and DL is shown in Figure 2. Currently, DL-based technologies are widely used in the diagnosis of certain ophthalmic diseases, such as cataracts (Wu et al., 2019) and glaucoma (Sudhan et al., 2022), and the segmentation of medical images, including those of retinal vessels (van der Heijden et al., 2018).

FIGURE 2. Machine learning is a subset of artificial intelligence. Deep learning has revolutionized the machine learning field in the past few years. It is now widely used in image recognition, voice recognition, etc.

Artificial intelligence technology applied to orbital computed tomography/magnetic resonance imaging images

Orbital CT and MRI are important tools for the diagnosis and monitoring of orbital and eyelid diseases (Weber and Sabates, 1996). CT relies on X-rays, whereas MRI relies on magnetic fields and radio wave energy, to provide images of the structures within the orbit (Russell et al., 1985; Langer et al., 1987). MRI is suitable for imaging soft tissue, whereas CT is commonly used to image bony structures (Hou et al., 2019). CT and MRI images are suitable as training data for AI-based research because the background occupies little of the image and the noise level is low (Thrall et al., 2018; Jalali et al., 2021).

Automatic identification and segmentation of anatomical structures from orbital computed tomography/magnetic resonance imaging

Automatic recognition and labeling of anatomical structures of the eye orbit can be achieved through the segmentation of medical images based on AI technology (Hou et al., 2019). Furthermore, AI can segment bony structures from orbital CT/MRI images. Hamwood et al. (2021) developed a DL system for the segmentation of bony regions from orbital CT/MRI images that exhibited excellent efficiency, particularly in terms of computational time. Li et al. (2022a) extracted bony orbit features and analyzed Asian aging characteristics through the popular deep CNN (DCNN) model. Some commercial software can also automatically segment orbital regions from CT images (Hamwood et al., 2021). In addition, using AI-based technology, irregular soft tissues, such as fat and abscesses, have been reliably segmented from orbital CT/MRI images. Brown et al. (2020) used a UNet-like CNN to segment orbital septal fat from orbital MRI images, and the results showed that AI segmentation was consistent with manual segmentation. Fu et al. (2021) trained and evaluated a context-aware CNN (CA-CNN) to segment orbital abscess regions from CT images of patients with orbital cellulitis, with the AI results being similar to those obtained by medical experts.
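Agreement between an AI segmentation and a manual segmentation, as reported in several of the studies above, is often quantified with an overlap score. The sketch below uses the Dice coefficient as one common choice; this is an assumption for illustration, since the metrics used by the individual studies are not stated here. The masks and the function name `dice_coefficient` are hypothetical.

```python
from typing import Sequence


def dice_coefficient(
    pred: Sequence[Sequence[int]], truth: Sequence[Sequence[int]]
) -> float:
    """Dice overlap between two binary masks (1 = structure, 0 = background)."""
    intersection = 0
    pred_area = 0
    truth_area = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            pred_area += p
            truth_area += t
            intersection += 1 if (p == 1 and t == 1) else 0
    if pred_area + truth_area == 0:
        # Both masks are empty: treat as perfect agreement.
        return 1.0
    return 2.0 * intersection / (pred_area + truth_area)


# Hypothetical 3x4 masks: an AI output and a manual annotation.
ai_mask = [
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 1, 0],
]
manual_mask = [
    [0, 1, 1, 0],
    [0, 1, 1, 1],
    [0, 0, 0, 0],
]

dice = dice_coefficient(ai_mask, manual_mask)  # 2 * 4 / (5 + 5)
```

A value of 1 indicates identical masks and 0 indicates no overlap; here four of the five labeled pixels in each mask coincide.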

In addition, AI can automatically quantify certain anatomical structures based on image segmentation. Umapathy et al. (2020) established an MRes-UNet model to segment and quantify the volume of the eyeball based on orbital CT images. Pan et al. (2022) achieved automatic calculation of the size and height of the bony orbit regions using a U-Net++ based on pre-3D images reconstructed from orbital CT images.
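Once a structure has been segmented, its volume can in principle be estimated by counting the labeled voxels and multiplying by the physical size of one voxel. The following is a minimal sketch under that assumption; the mask, the voxel spacing, and the function name `segmented_volume_ml` are hypothetical and do not reproduce the pipeline of any cited study.

```python
from typing import Sequence, Tuple


def segmented_volume_ml(
    mask: Sequence[Sequence[Sequence[int]]],
    spacing_mm: Tuple[float, float, float],
) -> float:
    """Volume of a binary 3D mask (slices x rows x columns) in millilitres."""
    voxel_count = sum(v for slice_ in mask for row in slice_ for v in row)
    dx, dy, dz = spacing_mm
    voxel_volume_mm3 = dx * dy * dz
    # 1 mL equals 1000 cubic millimetres.
    return voxel_count * voxel_volume_mm3 / 1000.0


# Hypothetical mask with 2 slices of 2x3 voxels; 8 voxels belong to the structure.
mask = [
    [[1, 1, 0], [1, 1, 0]],
    [[1, 1, 0], [1, 1, 0]],
]
# Assumed voxel spacing: 0.5 mm x 0.5 mm in-plane, 1.0 mm slice thickness.
volume = segmented_volume_ml(mask, spacing_mm=(0.5, 0.5, 1.0))
```

In practice the voxel spacing would be read from the scan metadata rather than hard-coded as it is in this sketch.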

Automatic diagnosis and grading of orbital and eyelid diseases based on orbital computed tomography/magnetic resonance imaging images

Orbital CT/MRI images are crucial in the preliminary diagnosis of orbital diseases such as orbital wall fractures, orbital tumors, and TAO (Griffin et al., 2018). Orbital blowout fractures are one of the most common injuries caused by orbital trauma. Li et al. (2020) used the Inception V3 DCNN to automatically classify CT images exhibiting orbital blowout fractures. Song et al. (2021a) proposed a 3D-ResNet to automatically detect TAO from orbital CT images, and the trained AI algorithm showed excellent performance in a real clinical setting. Lin et al. (2021) used a DCNN to grade TAO based on orbital MRI images, resulting in a labeling of disorder areas that was consistent with that made manually through an occlusion test. Hanai et al. (2022) developed a deep neural network to assess the enlarged extraocular muscles (EEM) of patients with Graves’ ophthalmopathy (GO) from orbital CT images. When applied to the test data, the area under the receiver operating characteristic curve (AUC) was 0.946, indicating that the deep neural network could effectively detect EEM in GO patients. Lee et al. (2022) used 288 orbital CT scans from patients with mild and moderate-to-severe GO and healthy controls to train a neural network for diagnosing and assessing the severity of GO. The developed neural network yielded an AUC of 0.979 in diagnosing patients with moderate-to-severe GO. Han et al. (2022) automatically identified the differences in the orbital cavernous venous malformations (OCVM) from orbital CT images by training 13 ML models, including support vector machines (SVMs) and random forests. Nakagawa et al. (2022) implemented a VGG-16 network to determine from CT images whether a nasal or sinus tumor invades the periorbital area. The network model achieved a diagnostic accuracy of 0.920, indicating that CNN-based DL techniques can be a useful supporting tool for assessing the presence of orbital infiltration on CT images.
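Several of the studies above report performance as an AUC. As a reminder of what this number measures, the sketch below computes the AUC as the probability that a randomly chosen diseased case receives a higher model score than a randomly chosen healthy case, with ties counted as one half. The labels, scores, and the function name `roc_auc` are made up for illustration and are unrelated to the data of the cited studies.

```python
from typing import Sequence


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """AUC via pairwise comparison of positive and negative scores."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one positive and one negative case")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0   # positive case ranked above negative case
            elif p == n:
                wins += 0.5   # ties count as half
    return wins / (len(positives) * len(negatives))


# Hypothetical test set: 1 = disease present, 0 = healthy control.
labels = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.5, 0.3, 0.1]

auc = roc_auc(labels, scores)  # 8 of the 9 positive/negative pairs are ordered correctly
```

An AUC of 0.5 corresponds to chance-level ranking and 1.0 to perfect separation, which is why values such as 0.946 or 0.979 are read as strong discrimination.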

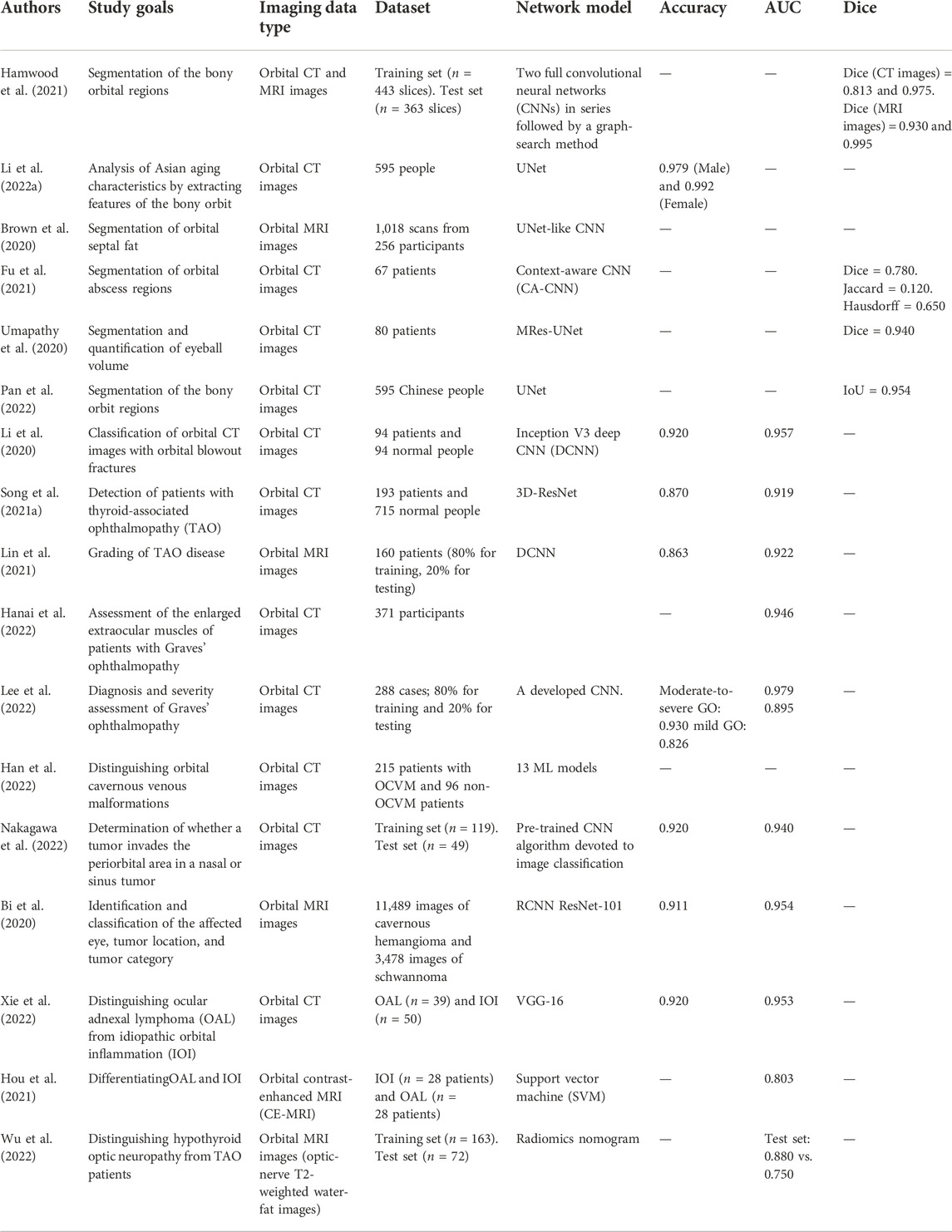

In addition to diagnosing and grading diseases, AI can extract and determine subtle features from images to differentiate diseases that are easily confused. Orbital cavernous hemangioma and schwannoma differ in terms of surgical strategy but have similar MRI features. Bi et al. (2020) developed a database of orbital MRI images of patients with cavernous hemangioma and schwannoma from 45 hospitals in China and used AI to identify and classify the affected eye, tumor location, and tumor category. The AI system was validated, showing an accuracy greater than 0.900 on a multicenter database. Xie et al. (2022) developed a DL model that combines multimodal radiomics with clinical and imaging features to distinguish ocular adnexal lymphoma (OAL) from idiopathic orbital inflammation (IOI). The diagnostic results yielded an AUC of 0.953, indicating that the DL-based analysis may successfully help distinguish between OAL and IOI. Hou et al. (2021) used an SVM classifier and the bag-of-features (BOF) technique to distinguish OAL from IOI based on orbital MRI images. During an independent verification test, the proposed method with augmentation achieved an AUC of 0.803, indicating that BOF-based radiomics might be a new tool for the differentiation between OAL and IOI. Early detection of hypothyroid optic neuropathy (TON) is crucial in clinical decision-making. Wu et al. (2022) built an AI predictive model to distinguish between TAO and TON by extracting radiomic features from optic-nerve T2-weighted water-fat images from a cohort of patients with TAO and a cohort of patients with TON. Table 1 summarizes the discussed AI-related studies on orbital CT/MRI images.

Artificial intelligence technology based on external ocular photographs

Owing to features such as easy and convenient delivery and storage, external ocular photographs are unique imaging data for diagnosing orbital and eyelid diseases. External ocular photographs show abnormalities and deformities in the orbital and eyelid appearance caused by trauma, tumors, inflammation, and other factors (Fukuda et al., 2005). With the development of face recognition technology, AI could locate and extract ocular information from faces, which lays the foundation for AI research based on external ocular photographs.

Automatic measurements of eyelid morphologic parameters from external ocular photographs

The accurate measurement of eyelid morphological parameters is crucial in developing an individual eyelid surgery strategy. However, manual measurement of eyelid morphological parameters is difficult to replicate because of subjective errors induced by head movements and changes in facial expressions. AI provides a more objective and convenient tool for quantifying eyelid morphological parameters by parameterizing facial structures and automatically measuring length, area, and volume. Moriyama et al. (2006) achieved eye motion tracking based on the eyelid structure parameters and iris position. Van Brummen et al. (2021) utilized a ResNet-50 model to segment regions, such as the iris and eyebrow, to measure the marginal reflex distance (MRD) in static and dynamic external ocular photographs. Simsek and Sirolu used computer vision algorithms to automatically measure pupillary distance (PD), eye area (EA), and average eyebrow height (AEBH) from external ocular photographs for evaluating the surgical effect of patients who had undergone Muller’s muscle-conjunctival resection (MMCR) surgery (Bahceci Simsek and Sirolu, 2021). The automated measurement of eyelid morphology parameters based on AI technology helps assess eyelid status and improves the accuracy of eyelid surgery.

Compared with other types of imaging data, external ocular photographs can be taken and shared by patients and physicians through smartphones and the internet, which provides sufficient data for AI research on external ocular photographs. Chen et al. (2021) compiled CNN algorithms using the software MAIA to build DL models for the automatic measurement of MRD1, MRD2, and levator muscle strength based on external ocular photographs taken with smartphones. This study presented the first smartphone-based DL model for the automatic measurement of eyelid morphological parameters. Measurements taken with the aid of AI are more objective than those obtained manually.

Ptosis is a common eyelid disorder in which a drooping eyelid obscures the pupil, hindering vision in severe cases. Ptosis is generally diagnosed by measuring eyelid morphological parameters, such as the levator muscle strength, lid fissure height, and marginal reflex distance, based on typical clinical symptoms. Surgical therapy is the main treatment for ptosis (Mahroo et al., 2014). Tabuchi et al. (2022) performed an automatic diagnosis of ptosis using a pre-trained MobileNetV2 CNN applied to photos of patients taken with an iPad Mini. Hung et al. (2022) realized the automatic identification of monocular appearance photos of ptosis patients based on a VGG-16 neural network, and the results showed that AI outperformed general practitioners in diagnosing ptosis. Combined with devices such as smartphones, the analysis of eye appearance based on AI can be useful in further clinical scenarios. AI provides an objective tool for measuring eyelid morphological parameters and planning surgery strategies instead of relying on the experience of surgeons. Song et al. (2021b) developed a gradient-boosted decision tree (GBDT) for choosing ptosis surgery strategies and trained it with 3D models created by photographing and scanning the eyes of ptosis patients with a structured light camera. The AI model evaluates the external ocular photographs and the 3D model to determine whether surgery is required and establish the surgery strategy to follow. Lou et al. (2021) evaluated the outcome of ptosis surgery by comparing pre- and postoperative values of eyelid morphological parameters, such as MRD1 and MRD2, which were automatically measured by a UNet from ocular appearance photographs of the patients.
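To make the measured quantities concrete, the sketch below derives MRD1, MRD2, and the lid fissure height from landmark positions that a segmentation or landmark model might output. It assumes that the corneal light reflex and the upper and lower eyelid margins have already been located on the same vertical line and that a pixel-to-millimetre scale is known; the class `EyeLandmarks`, the function `eyelid_parameters`, and all numeric values are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class EyeLandmarks:
    """Vertical landmark positions in pixels (image y axis grows downward)."""
    reflex_y: float        # corneal light reflex at the pupil centre
    upper_margin_y: float  # upper eyelid margin above the reflex
    lower_margin_y: float  # lower eyelid margin below the reflex
    mm_per_pixel: float    # assumed calibration factor, e.g. from a reference object


def eyelid_parameters(lm: EyeLandmarks) -> Dict[str, float]:
    # MRD1: light reflex to upper eyelid margin. It becomes negative if the
    # upper lid margin lies below the reflex position.
    mrd1 = (lm.reflex_y - lm.upper_margin_y) * lm.mm_per_pixel
    # MRD2: light reflex to lower eyelid margin.
    mrd2 = (lm.lower_margin_y - lm.reflex_y) * lm.mm_per_pixel
    # Lid (palpebral) fissure height along the same vertical line.
    return {"MRD1": mrd1, "MRD2": mrd2, "fissure_height": mrd1 + mrd2}


# Hypothetical landmark output for one eye.
landmarks = EyeLandmarks(
    reflex_y=120.0, upper_margin_y=100.0, lower_margin_y=170.0, mm_per_pixel=0.1
)
params = eyelid_parameters(landmarks)
```

Comparing such values before and after surgery is one way the automatically measured parameters could be used to evaluate a surgical outcome; the calibration step is the part most sensitive to head position and camera distance.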

Artificial intelligence diagnosis and prediction based on external ocular photographs

Oculoplastic surgery involves aesthetic restoration, and predicting postoperative outcomes through AI can help the surgeon develop a personalized plastic surgery plan. The major purpose of oculoplastic surgery is to realize the expected aesthetic goals. However, it is hard to judge the expected aesthetic results because of a variety of subjective factors (Swanson, 2011), and the establishment of an objective facial beauty standard remains controversial. Zhai et al. (2019) proposed a new facial detection method based on a transfer learning CNN, which has better classification accuracy than previous geometric assessment methods, laying a foundation for the prediction of oculoplastic surgery effects. Yixin et al. explored the effect of the eyelid on oculoplastic surgery and aesthetic outcomes by comparing the postoperative metrics of oculoplastic patients assessed by the CNN model with those assessed only manually. The CNN assessment group had better postoperative extent, lower eyelid skin wrinkles, eyelid tear troughs, skin shine, and aesthetic scores than the control group, suggesting that CNNs are a beneficial tool for evaluating oculoplastic surgery (Yixin et al., 2022).

Eyelid and periocular skin tumors seriously affect the health and aesthetics of patients (Silverman and Shinder, 2017). Early preliminary screening through external photography helps detect and monitor these tumors. Seeja and Suresh (2019) trained a UNet to automatically segment skin lesions and differentiate melanoma from benign skin lesions, achieving reliable results in the segmentation and diagnosis of melanoma. Li et al. (2022b) used a faster region-based CNN and a DL classification network to build an AI system that automatically detects malignant eyelid tumors from ocular external photographs, obtaining positive performance on both internal and external test sets (AUC ranging from 0.899 to 0.955). CNNs could fully mine image information and distinguish deep features from external photography to detect subtle eyelid and skin tumors that are elusive to the naked eye, thus helping reduce misdiagnosis and missed diagnosis.

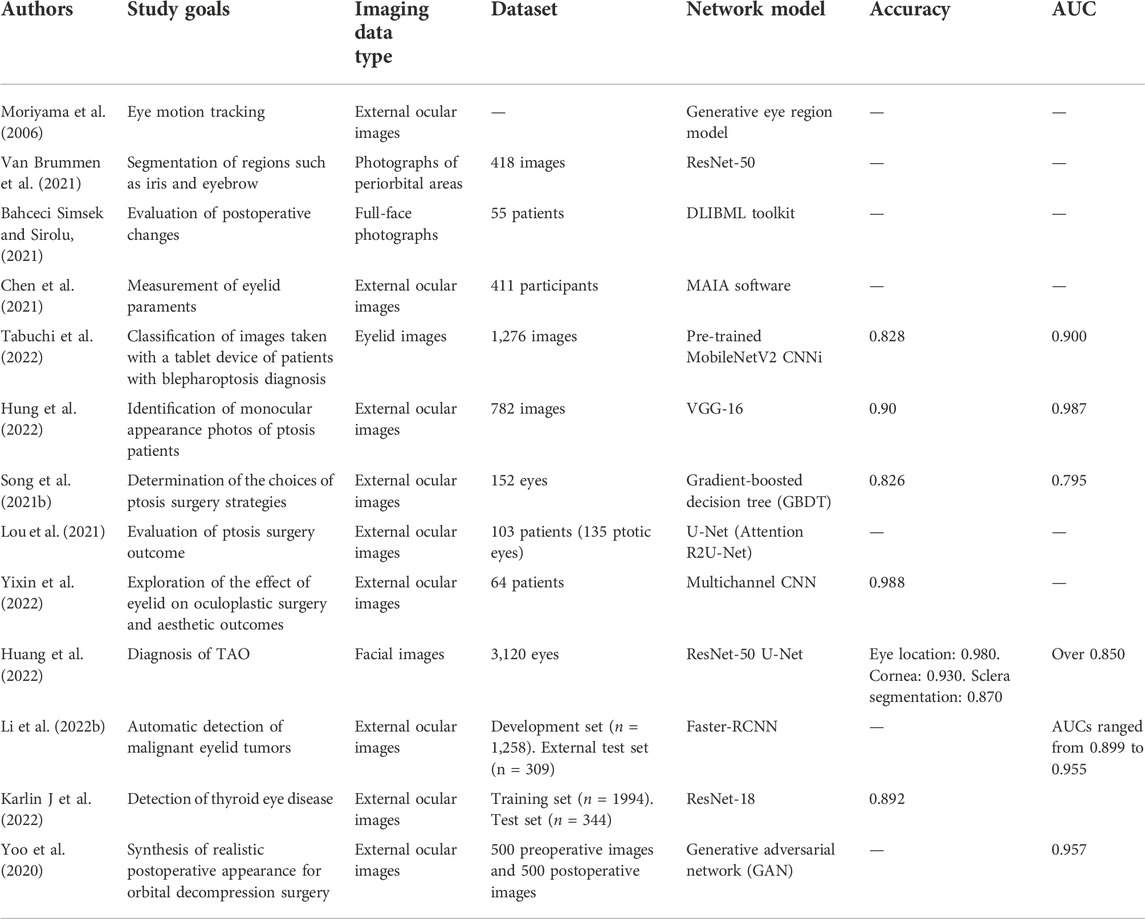

Changes in ocular appearance, such as retraction of the upper eyelid, strabismus, and proptosis, are crucial in the diagnosis of TAO (Hodgson and Rajaii, 2020). Huang et al. (2022) used the ResNet-50 model to obtain an automatic diagnosis of TAO based on external ocular photographs. Karlin et al. (2022) developed a DL model for detecting TAO based on external ocular photographs. A set comprising 1944 photographs from a clinical database was used for training, and a test set of 344 additional images was used to evaluate the trained DL network. The accuracy of the model on the test set was 0.892, and heatmaps showed that the model could identify pixels corresponding to the clinical features of TAO. Orbital decompression surgery can alleviate the symptoms of eye protrusion and repair the appearance of patients with TAO. According to the 2021-EUGOGO guidelines, orbital decompression surgery is the recommended treatment strategy for patients with severe TAO (Smith, 2021). Yoo et al. (2020) trained a conditional generative adversarial network (GAN) using pre- and postoperative external ocular photographs of patients with orbital decompression. The trained GAN could convert the preoperative external ocular photographs into predictive postoperative images, which were similar to the real postoperative condition, suggesting that GANs might be a new tool for the prediction of oculoplastic surgery results. Table 2 summarizes the aforementioned AI-related studies on external ocular images.

Artificial intelligence-based techniques using other image data types

Tear spillage is a major symptom of lacrimal duct obstruction (LDO), and its incidence in rural areas is gradually increasing (Brendler et al., 2013). The use of anterior segment optical coherence tomography (AS-OCT) to assess the tear meniscus is considered a more objective non-invasive diagnostic procedure. Imamura et al. used DenseNet-169 and pooled DL models (VGG-16, ResNet-50, DenseNet-121, DenseNet-169, Inception ResNet-V2, and Inception-V3) to detect patients with LDO from AS-OCT images. The trained network models exhibited remarkable reliability in marking the areas of the tear meniscus (Imamura et al., 2021).

Pathological examination is the gold standard for diagnosing the nature of ocular tumors. However, traditional pathological examination results are influenced by the experience of the physician, and the process takes a large amount of time from specimen submission to result confirmation (Heran et al., 2014). AI is less influenced by subjective factors and can process a large number of specimens in a short time. Wang et al. (2020b) used AI technology to automatically diagnose malignant melanoma of the eyelid from pathological sections. They also developed a random forest model to grade tumor malignancy, suggesting that AI may be a future tool for the rapid screening and grading of tumor pathology.

Jiang et al. (2022) proposed a DL framework for the automatic detection of malignant melanoma (MM) of the eyelid based on self-supervised learning (SSL). The framework consisted of a self-supervised model for detecting MM regions at the patch level and another model for classifying lesion types at the slide level. Considering that the differential diagnosis of basal cell and sebaceous carcinomas of the eyelid is highly dependent on the experience of the pathologist, Luo et al. (2022) proposed a fully automated differential diagnostic method based on whole slide images (WSIs) and DL classification, achieving an accuracy of 0.983 for the trained network model.

In addition, AI decision models can be established based on various types of patient information. Song et al. (2022) trained an ML model using a database that contained both ocular surface characteristics and demographic information (gender, age) of patients with lacrimal sacculitis. Tan et al. (2017) established an alternating decision tree to predict the risk of reconstructive surgery after periocular basal cell carcinoma (pBCC) resection, which provides a new prediction model based on a database with various patient information.

Discussion

The acquisition and analysis of imaging data are crucial in the treatment of orbital and eyelid diseases. In this paper, we discuss the advantages and limitations of AI technology for diagnosing orbital and eyelid diseases by analyzing the different characteristics of image data and the current problems and potential approaches to promote the development of AI-based technology in this field.

Orbital and eyelid diseases are primarily caused by inflammatory (Lutt et al., 2008), metabolic, and traumatic factors (Li et al., 2020). The anatomical integrity of the orbit and eyelid not only protects and supports important structures, such as the eyeball and optic nerve, but is also critical to the aesthetic appearance of the patient’s face (Huggins et al., 2017). AI converts traditional medical images into matrix data and supports clinical decision-making by developing models and analyzing the matrix data (Mintz and Brodie, 2019). Structural segmentation of orbital CT/MRI images using AI might assist in endoscopic and 3D-printing-assisted surgery and lay a foundation for robotic surgery (Wang et al., 2022). In addition, the automatic measurement of eyelid morphological parameters based on external ocular photographs provides a new tool for developing individualized eyelid surgical strategies (Bahceci Simsek and Sirolu, 2021). Thus, AI technology for diagnosing and treating orbital and eyelid diseases, which remains in its infancy, has great potential for broad clinical application.

Imaging data play an important role in the diagnosis and treatment of orbital and eyelid diseases, providing an adequate source of data for AI training. Orbital CT examination is non-invasive and is easy and fast to perform (Lee et al., 2004). Orbital MRI examination is free of ionizing radiation damage and is superior in revealing soft tissue. Compared with MRI images, CT images are noisier (Hamwood et al., 2021). Therefore, the traditional UNet algorithm is better suited to training with CT images because it extracts rich feature scales and can effectively filter local noise (Pan et al., 2022). External ocular photographs serve as a unique type of imaging data for orbital and eyelid diseases and provide information for eyelid surgery decisions. Compared with other types of medical images, external ocular photographs are non-invasive and can be easily taken by doctors and patients with smartphones, which breaks the barrier of expensive imaging equipment and facilitates the application of AI (Chen et al., 2021). Furthermore, automatic facial recognition and eye-tracking technology, which have been widely used in safety inspection, instrument development, etc., could also be applied to AI research based on external ocular photographs (Asaad et al., 2020). In addition, visual field tests, OCT, and CT of the optic-nerve canal also play an active role in the diagnosis of orbital and eyelid diseases. The multimodal diagnostic images provide adequate raw datasets for training AI models and validating their performance.

Although AI analysis of imaging data of orbital and eyelid diseases has clear advantages, there are some limitations in its development (Mintz and Brodie, 2019; Yang et al., 2021). Uneven disease prevalence and small sample sizes for certain rare diseases cause overfitting when training AI models for specific types of diseases, resulting in poor generalization and a lack of adaptability to new data. Several studies have shown that the category imbalance problem can be mitigated by weighting the data differently when computing the loss function (Liu et al., 2021; Luo et al., 2021). Moreover, the current AI datasets of orbital and eyelid diseases are generally obtained from a single medical institution, and it is difficult to obtain standardized data across institutions because of the differences in the examination equipment used. Image-based AI research requires large sets of standard, annotated imaging data, which are still scarce in the case of orbital and eyelid diseases as compared, for instance, with ImageNet. Transfer learning may offer a good solution to the lack of imaging data. When obtaining a large dataset or labeling the data is difficult, learning can be transferred from a task with sufficient data that is easily labeled and similar to the target task. Liu et al. (2020) modified ResNet-152, which was pre-trained on ImageNet, through transfer learning to classify left and right optic discs with an accuracy of 0.988, thus demonstrating a new solution to the lack of data in orbital and eyelid diseases. In addition, we can increase the amount of data through data augmentation by rotating, panning, zooming, or changing the brightness or contrast of the images. For example, Song et al. (2021a) performed 200 rotations on the training data for a CNN to increase the dataset size and reduce overfitting. On validation, the overfitting of the trained CNN decreased markedly.

There are also some drawbacks regarding the quality of imaging data in orbital and eyelid diseases, which have limited the development of AI-related research. For example, it is difficult to obtain standardized orbital CT/MRI images because of the long scanning time, different equipment, and variable experience of the operators. Zhai et al. (2021) developed a method based on a signed distance field for automatic calibration and quantitative error evaluation when processing orbital CT images, which provides a new tool to standardize CT/MRI images. Moreover, lighting variations hinder high-quality standardized external ocular photography. To address this problem, some studies have attempted to model the illumination templates and establish illumination-invariant algorithms (Li et al., 2007; Lu et al., 2017), whose main purpose is to make shapes and textures independent of illumination variations. Lastly, ethical considerations and patient privacy issues associated with external ocular photography also require in-depth deliberation.
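The loss-weighting remedy for category imbalance mentioned earlier in this discussion can be sketched as follows. The example assumes inverse-frequency class weights and a weighted cross-entropy that is normalized by the sum of the weights; this is one common scheme and not necessarily the one used in the cited studies. The labels, predicted probabilities, and function names are hypothetical.

```python
import math
from collections import Counter
from typing import Dict, Sequence


def inverse_frequency_weights(labels: Sequence[int]) -> Dict[int, float]:
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {cls: n_samples / (n_classes * cnt) for cls, cnt in counts.items()}


def weighted_cross_entropy(
    probs: Sequence[Sequence[float]],
    labels: Sequence[int],
    weights: Dict[int, float],
) -> float:
    """Cross-entropy in which each sample is scaled by the weight of its true class."""
    total = 0.0
    weight_sum = 0.0
    for p, y in zip(probs, labels):
        w = weights[y]
        total += -w * math.log(p[y])  # p[y]: predicted probability of the true class
        weight_sum += w
    return total / weight_sum


# Hypothetical imbalanced dataset: 8 common-disease cases (0), 2 rare-disease cases (1).
labels = [0] * 8 + [1] * 2
# Hypothetical model outputs: confident on the common class, poor on the rare one.
probs = [[0.9, 0.1]] * 8 + [[0.6, 0.4]] * 2

class_weights = inverse_frequency_weights(labels)
weighted_loss = weighted_cross_entropy(probs, labels, class_weights)
unweighted_loss = weighted_cross_entropy(probs, labels, {0: 1.0, 1: 1.0})
```

With these made-up numbers the weighted loss is larger than the unweighted one, because errors on the rare class contribute as much to the total as the far more numerous common-class samples, which pushes training to pay attention to the minority category.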

Overall, although limitations remain, AI-based research on orbital and eyelid diseases is expected to develop further as large databases are established and shared and as new neural networks that more closely resemble biological neurons are developed, potentially leading to the next breakthrough in ophthalmology.

Conclusion

AI technology has significant potential for application in the automatic diagnosis and precise quantification of orbital and eyelid diseases. AI is more objective than manual methods and can process large amounts of data in a short time; thus, it could assist physicians in clinical decision-making and surgical design. The predictive capabilities of AI may also play an active role in assessing the outcome of oculoplastic surgery. As computer algorithms are updated and high-quality datasets become available, AI will play a broader role in the assessment of orbital and eyelid disorders in the future.

Author contributions

X-LB and G-YL prepared the first draft of the document. All authors contributed to the writing and editing of the manuscript and agreed to its submission.

Funding

The study was funded by the National Natural Science Foundation of China (grant Nos. 82171053 and 81570864) and the Natural Science Foundation of Jilin Province (grant Nos. 20200801043GH and 20190201083JC).

Acknowledgments

We thank Editage (https://www.editage.com) for English language editing during the preparation of this manuscript.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Abdullah, A., Elsamaloty, H., Patel, Y., and Chang, J. (2010). CT and MRI findings with histopathologic correlation of a unique bilateral orbital mantle cell lymphoma in Graves' disease: A case report and brief review of literature. J. Neurooncol. 97 (2), 279–284. doi:10.1007/s11060-009-0019-x

Asaad, M., Dey, J. K., Al-Mouakeh, A., Manjouna, M. B., Nashed, M. A., Rajesh, A., et al. (2020). Eye-tracking technology in plastic and reconstructive surgery: A systematic review. Aesthet. Surg. J. 40 (9), 1022–1034. doi:10.1093/asj/sjz328

Bahceci Simsek, I., and Sirolu, C. (2021). Analysis of surgical outcome after upper eyelid surgery by computer vision algorithm using face and facial landmark detection. Graefes Arch. Clin. Exp. Ophthalmol. 259 (10), 3119–3125. doi:10.1007/s00417-021-05219-8

Bailey, W., and Robinson, L. (2007). Screening for intra-orbital metallic foreign bodies prior to MRI: Review of the evidence. Radiogr. (Lond) 13 (1), 72–80. doi:10.1016/j.radi.2005.09.006

Bi, S., Chen, R., Zhang, K., Xiang, Y., Wang, R., Lin, H., et al. (2020). Differentiate cavernous hemangioma from schwannoma with artificial intelligence (AI). Ann. Transl. Med. 8 (11), 710. doi:10.21037/atm.2020.03.150

Bischoff, F., Koch, M. d. C., and Rodrigues, P. P. (2019). Predicting blood donations in a tertiary care center using time series forecasting. Stud. Health Technol. Inf. 261, 135–139.

Brachmann, A., Barth, E., and Redies, C. (2017). Using CNN features to better understand what makes visual artworks special. Front. Psychol. 8, 830. doi:10.3389/fpsyg.2017.00830

Brehar, R., Mitrea, D. A., Vancea, F., Marita, T., Nedevschi, S., Lupsor-Platon, M., et al. (2020). Comparison of deep-learning and conventional machine-learning methods for the automatic recognition of the hepatocellular carcinoma areas from ultrasound images. Sensors (Basel) 20 (11), E3085. doi:10.3390/s20113085

Brendler, C., Pour Aryan, N., Rieger, V., Klinger, S., and Rothermel, A. (2013). A substrate isolated LDO for an inductively powered retinal implant. Biomed. Tech. 58, 4367. doi:10.1515/bmt-2013-4367

Brown, R. A., Fetco, D., Fratila, R., Fadda, G., Jiang, S., Alkhawajah, N. M., et al. (2020). Deep learning segmentation of orbital fat to calibrate conventional MRI for longitudinal studies. Neuroimage 208, 116442. doi:10.1016/j.neuroimage.2019.116442

Cai, L., Gao, J., and Zhao, D. (2020). A review of the application of deep learning in medical image classification and segmentation. Ann. Transl. Med. 8 (11), 713. doi:10.21037/atm.2020.02.44

Chen, H. C., Tzeng, S. S., Hsiao, Y. C., Chen, R. F., Hung, E. C., and Lee, O. K. (2021). Smartphone-based artificial intelligence-assisted prediction for eyelid measurements: Algorithm development and observational validation study. JMIR Mhealth Uhealth 9 (10), e32444. doi:10.2196/32444

Cho, S. M., Austin, P. C., Ross, H. J., Abdel-Qadir, H., Chicco, D., Tomlinson, G., et al. (2021). Machine learning compared with conventional statistical models for predicting myocardial infarction readmission and mortality: A systematic review. Can. J. Cardiol. 37 (8), 1207–1214. doi:10.1016/j.cjca.2021.02.020

Dzobo, K., Adotey, S., Thomford, N. E., and Dzobo, W. (2020). Integrating artificial and human intelligence: A partnership for responsible innovation in biomedical engineering and medicine. OMICS 24 (5), 247–263. doi:10.1089/omi.2019.0038

Finlayson, S. G., Bowers, J. D., Ito, J., Zittrain, J. L., Beam, A. L., and Kohane, I. S. (2019). Adversarial attacks on medical machine learning. Science 363 (6433), 1287–1289. doi:10.1126/science.aaw4399

Fu, R., Leader, J. K., Pradeep, T., Shi, J., Meng, X., Zhang, Y., et al. (2021). Automated delineation of orbital abscess depicted on CT scan using deep learning. Med. Phys. 48 (7), 3721–3729. doi:10.1002/mp.14907

Fukuda, Y., Fujimura, T., Moriwaki, S., and Kitahara, T. (2005). A new method to evaluate lower eyelid sag using three-dimensional image analysis. Int. J. Cosmet. Sci. 27 (5), 283–290. doi:10.1111/j.1467-2494.2005.00282.x

Girard, M. J. A., and Schmetterer, L. (2020). Artificial intelligence and deep learning in glaucoma: Current state and future prospects. Prog. Brain Res. 257, 37–64. doi:10.1016/bs.pbr.2020.07.002

Griffin, A. S., Hoang, J. K., and Malinzak, M. D. (2018). CT and MRI of the orbit. Int. Ophthalmol. Clin. 58 (2), 25–59. doi:10.1097/IIO.0000000000000218

Hamwood, J., Schmutz, B., Collins, M. J., Allenby, M. C., and Alonso-Caneiro, D. (2021). A deep learning method for automatic segmentation of the bony orbit in MRI and CT images. Sci. Rep. 11 (1), 13693. doi:10.1038/s41598-021-93227-3

Han, Q., Du, L., Mo, Y., Huang, C., and Yuan, Q. (2022). Machine learning based non-enhanced CT radiomics for the identification of orbital cavernous venous malformations: An innovative tool. J. Craniofac. Surg. 33 (3), 814–820. doi:10.1097/SCS.0000000000008446

Hanai, K., Tabuchi, H., Nagasato, D., Tanabe, M., Masumoto, H., Miya, S., et al. (2022). Automated detection of enlarged extraocular muscle in Graves' ophthalmopathy with computed tomography and deep neural network. Sci. Rep. 12 (1), 16036. doi:10.1038/s41598-022-20279-4

He, F., Liu, T., and Tao, D. (2020). Why ResNet works? Residuals generalize. IEEE Trans. Neural Netw. Learn. Syst. 31 (12), 5349–5362. doi:10.1109/TNNLS.2020.2966319

Heran, F., Berges, O., Blustajn, J., Boucenna, M., Charbonneau, F., Koskas, P., et al. (2014). Tumor pathology of the orbit. Diagn. Interv. Imaging 95 (10), 933–944. doi:10.1016/j.diii.2014.08.002

Hodgson, N. M., and Rajaii, F. (2020). Current understanding of the progression and management of thyroid associated orbitopathy: A systematic review. Ophthalmol. Ther. 9 (1), 21–33. doi:10.1007/s40123-019-00226-9

Hou, R., Zhou, D., Nie, R., Liu, D., and Ruan, X. (2019). Brain CT and MRI medical image fusion using convolutional neural networks and a dual-channel spiking cortical model. Med. Biol. Eng. Comput. 57 (4), 887–900. doi:10.1007/s11517-018-1935-8

Hou, Y., Xie, X., Chen, J., Lv, P., Jiang, S., He, X., et al. (2021). Bag-of-features-based radiomics for differentiation of ocular adnexal lymphoma and idiopathic orbital inflammation from contrast-enhanced MRI. Eur. Radiol. 31 (1), 24–33. doi:10.1007/s00330-020-07110-2

Huang, X., Ju, L., Li, J., He, L., Tong, F., Liu, S., et al. (2022). An intelligent diagnostic system for thyroid-associated ophthalmopathy based on facial images. Front. Med. 9, 920716. doi:10.3389/fmed.2022.920716

Huggins, A. B., Latting, M. W., Marx, D. P., and Giacometti, J. N. (2017). Ocular adnexal reconstruction for cutaneous periocular malignancies. Semin. Plast. Surg. 31 (1), 22–30. doi:10.1055/s-0037-1598190

Hung, J. Y., Chen, K. W., Perera, C., Chiu, H. K., Hsu, C. R., Myung, D., et al. (2022). An outperforming artificial intelligence model to identify referable blepharoptosis for general practitioners. J. Pers. Med. 12 (2), 283. doi:10.3390/jpm12020283

Imamura, H., Tabuchi, H., Nagasato, D., Masumoto, H., Baba, H., Furukawa, H., et al. (2021). Automatic screening of tear meniscus from lacrimal duct obstructions using anterior segment optical coherence tomography images by deep learning. Graefes Arch. Clin. Exp. Ophthalmol. 259 (6), 1569–1577. doi:10.1007/s00417-021-05078-3

Jalali, Y., Fateh, M., Rezvani, M., Abolghasemi, V., and Anisi, M. H. (2021). ResBCDU-net: A deep learning framework for lung CT image segmentation. Sensors (Basel) 21 (1), E268. doi:10.3390/s21010268

Jiang, Z., Wang, L., Wang, Y., Jia, G., Zeng, G., Wang, J., et al. (2022). A self-supervised learning based framework for eyelid malignant melanoma diagnosis in whole slide images. IEEE/ACM Trans. Comput. Biol. Bioinform. 2022, 1–15. doi:10.1109/TCBB.2022.3207352

Kaluarachchi, T., Reis, A., and Nanayakkara, S. (2021). A review of recent deep learning approaches in human-centered machine learning. Sensors (Basel) 21 (7), 2514. doi:10.3390/s21072514

Karlin, J., Gai, L., LaPierre, N., Danesh, K., Farajzadeh, J., Palileo, B., Taraszka, K., Zheng, J., et al. (2022). Ensemble neural network model for detecting thyroid eye disease using external photographs. Br. J. Ophthalmol. 2022, 321833. doi:10.1136/bjo-2022-321833

Langer, B. G., Mafee, M. F., Pollack, S., Spigos, D. G., and Gyi, B. (1987). MRI of the normal orbit and optic pathway. Radiol. Clin. North Am. 25 (3), 429–446. doi:10.1016/s0033-8389(22)02253-9

Larentzakis, A., and Lygeros, N. (2021). Artificial intelligence (AI) in medicine as a strategic valuable tool. Pan Afr. Med. J. 38, 184. doi:10.11604/pamj.2021.38.184.28197

Le, W. T., Maleki, F., Romero, F. P., Forghani, R., and Kadoury, S. (2020). Overview of machine learning: Part 2: Deep learning for medical image analysis. Neuroimaging Clin. N. Am. 30 (4), 417–431. doi:10.1016/j.nic.2020.06.003

Lee, H. J., Jilani, M., Frohman, L., and Baker, S. (2004). CT of orbital trauma. Emerg. Radiol. 10 (4), 168–172. doi:10.1007/s10140-003-0282-7

Lee, J., Seo, W., Park, J., Lim, W. S., Oh, J. Y., Moon, N. J., et al. (2022). Neural network-based method for diagnosis and severity assessment of Graves' orbitopathy using orbital computed tomography. Sci. Rep. 12 (1), 12071. doi:10.1038/s41598-022-16217-z

Li, L., Song, X., Guo, Y., Liu, Y., Sun, R., Zou, H., et al. (2020). Deep convolutional neural networks for automatic detection of orbital blowout fractures. J. Craniofac. Surg. 31 (2), 400–403. doi:10.1097/SCS.0000000000006069

Li, L., Zheng, N. N., and Wang, F. Y. (2019). On the crossroad of artificial intelligence: A revisit to Alan Turing and Norbert Wiener. IEEE Trans. Cybern. 49 (10), 3618–3626. doi:10.1109/TCYB.2018.2884315

Li, S. Z., Chu, R., Liao, S., and Zhang, L. (2007). Illumination invariant face recognition using near-infrared images. IEEE Trans. Pattern Anal. Mach. Intell. 29 (4), 627–639. doi:10.1109/TPAMI.2007.1014

Li, Z., Chen, K., Yang, J., Pan, L., Wang, Z., Yang, P., et al. (2022). Deep learning-based CT radiomics for feature representation and analysis of aging characteristics of Asian bony orbit. J. Craniofac. Surg. 33 (1), 312–318. doi:10.1097/SCS.0000000000008198

Li, Z., Qiang, W., Chen, H., Pei, M., Yu, X., Wang, L., et al. (2022). Artificial intelligence to detect malignant eyelid tumors from photographic images. NPJ Digit. Med. 5 (1), 23. doi:10.1038/s41746-022-00571-3

Lin, C., Song, X., Li, L., Li, Y., Jiang, M., Sun, R., et al. (2021). Detection of active and inactive phases of thyroid-associated ophthalmopathy using deep convolutional neural network. BMC Ophthalmol. 21 (1), 39. doi:10.1186/s12886-020-01783-5

Lin, H., Li, R., Liu, Z., Chen, J., Yang, Y., Chen, H., et al. (2019). Diagnostic efficacy and therapeutic decision-making capacity of an artificial intelligence platform for childhood cataracts in eye clinics: A multicentre randomized controlled trial. EClinicalMedicine 9, 52–59. doi:10.1016/j.eclinm.2019.03.001

Liu, T. Y. A., Ting, D. S. W., Yi, P. H., Wei, J., Zhu, H., Subramanian, P. S., et al. (2020). Deep learning and transfer learning for optic disc laterality detection: Implications for machine learning in neuro-ophthalmology. J. Neuroophthalmol. 40 (2), 178–184. doi:10.1097/WNO.0000000000000827

Liu, Y., Li, Q., Wang, K., Liu, J., He, R., Yuan, Y., et al. (2021). Automatic multi-label ECG classification with category imbalance and cost-sensitive thresholding. Biosens. (Basel) 11 (11), 453. doi:10.3390/bios11110453

Lou, L., Cao, J., Wang, Y., Gao, Z., Jin, K., Xu, Z., et al. (2021). Deep learning-based image analysis for automated measurement of eyelid morphology before and after blepharoptosis surgery. Ann. Med. 53 (1), 2278–2285. doi:10.1080/07853890.2021.2009127

Lu, L., Zhang, X., Xu, X., and Shang, D. (2017). Multispectral image fusion for illumination-invariant palmprint recognition. PLoS One 12 (5), e0178432. doi:10.1371/journal.pone.0178432

Luo, X., Yang, L., Cai, H., Tang, R., Chen, Y., and Li, W. (2021). Multi-classification of arrhythmias using a HCRNet on imbalanced ECG datasets. Comput. Methods Programs Biomed. 208, 106258. doi:10.1016/j.cmpb.2021.106258

Luo, Y., Zhang, J., Yang, Y., Rao, Y., Chen, X., Shi, T., et al. (2022). Deep learning-based fully automated differential diagnosis of eyelid basal cell and sebaceous carcinoma using whole slide images. Quant. Imaging Med. Surg. 12 (8), 4166–4175. doi:10.21037/qims-22-98

Lutt, J. R., Lim, L. L., Phal, P. M., and Rosenbaum, J. T. (2008). Orbital inflammatory disease. Semin. Arthritis Rheum. 37 (4), 207–222. doi:10.1016/j.semarthrit.2007.06.003

Mahroo, O. A., Hysi, P. G., Dey, S., Gavin, E. A., Hammond, C. J., and Jones, C. A. (2014). Outcomes of ptosis surgery assessed using a patient-reported outcome measure: An exploration of time effects. Br. J. Ophthalmol. 98 (3), 387–390. doi:10.1136/bjophthalmol-2013-303946

Mintz, Y., and Brodie, R. (2019). Introduction to artificial intelligence in medicine. Minim. Invasive Ther. Allied Technol. 28 (2), 73–81. doi:10.1080/13645706.2019.1575882

Moriyama, T., Kanade, T., Xiao, J., and Cohn, J. F. (2006). Meticulously detailed eye region model and its application to analysis of facial images. IEEE Trans. Pattern Anal. Mach. Intell. 28 (5), 738–752. doi:10.1109/TPAMI.2006.98

Nakagawa, J., Fujima, N., Hirata, K., Tang, M., Tsuneta, S., Suzuki, J., et al. (2022). Utility of the deep learning technique for the diagnosis of orbital invasion on CT in patients with a nasal or sinonasal tumor. Cancer Imaging 22 (1), 52. doi:10.1186/s40644-022-00492-0

Nichols, J. A., Herbert Chan, H. W., and Baker, M. A. B. (2019). Machine learning: Applications of artificial intelligence to imaging and diagnosis. Biophys. Rev. 11 (1), 111–118. doi:10.1007/s12551-018-0449-9

Pan, L., Chen, K., Zheng, Z., Zhao, Y., Yang, P., Li, Z., et al. (2022). Aging of Chinese bony orbit: Automatic calculation based on UNet++ and connected component analysis. Surg. Radiol. Anat. 44 (5), 749–758. doi:10.1007/s00276-022-02933-8

Russell, E. J., Czervionke, L., Huckman, M., Daniels, D., and McLachlan, D. (1985). CT of the inferomedial orbit and the lacrimal drainage apparatus: Normal and pathologic anatomy. AJR. Am. J. Roentgenol. 145 (6), 1147–1154. doi:10.2214/ajr.145.6.1147

Seeja, R. S., and Suresh, A. (2019). Deep learning based skin lesion segmentation and classification of melanoma using support vector machine (SVM). Asian Pac. J. Cancer Prev. 20 (5), 1555–1561. doi:10.31557/APJCP.2019.20.5.1555

Shin, S., Austin, P. C., Ross, H. J., Abdel-Qadir, H., Freitas, C., Tomlinson, G., et al. (2021). Machine learning vs. conventional statistical models for predicting heart failure readmission and mortality. ESC Heart Fail. 8 (1), 106–115. doi:10.1002/ehf2.13073

Silverman, N., and Shinder, R. (2017). What's new in eyelid tumors. Asia. Pac. J. Ophthalmol. 6 (2), 143–152. doi:10.22608/APO.201701

Smith, T. J. (2021). Comment on the 2021 EUGOGO clinical practice guidelines for the medical management of Graves' orbitopathy. Eur. J. Endocrinol. 185 (6), L13–L14. doi:10.1530/EJE-21-0861

Song, X., Li, L., Han, F., Liao, S., and Xiao, C. (2022). Noninvasive machine learning screening model for dacryocystitis based on ocular surface indicators. J. Craniofac. Surg. 33 (1), e23–e28. doi:10.1097/SCS.0000000000007863

Song, X., Liu, Z., Gao, Z., Fan, X., Zhai, G., Guangtao, Z., et al. (2021). Artificial intelligence CT screening model for thyroid-associated ophthalmopathy and tests under clinical conditions. Int. J. Comput. Assist. Radiol. Surg. 16 (2), 323–330. doi:10.1007/s11548-020-02281-1

Song, X., Tong, W., Lei, C., Huang, J., Fan, X., Zhai, G., et al. (2021). A clinical decision model based on machine learning for ptosis. BMC Ophthalmol. 21 (1), 169. doi:10.1186/s12886-021-01923-5

Starke, G., De Clercq, E., Borgwardt, S., and Elger, B. S. (2021). Why educating for clinical machine learning still requires attention to history: A rejoinder to Gauld et al. Psychol. Med. 51 (14), 2512–2513. doi:10.1017/S0033291720004766

Sudhan, M. B., Sinthuja, M., Pravinth Raja, S., Amutharaj, J., Charlyn Pushpa Latha, G., Sheeba Rachel, S., et al. (2022). Segmentation and classification of glaucoma using U-net with deep learning model. J. Healthc. Eng. 2022, 1601354. doi:10.1155/2022/1601354

Swanson, E. (2011). Objective assessment of change in apparent age after facial rejuvenation surgery. J. Plast. Reconstr. Aesthet. Surg. 64 (9), 1124–1131. doi:10.1016/j.bjps.2011.04.004

Tabuchi, H., Nagasato, D., Masumoto, H., Tanabe, M., Ishitobi, N., Ochi, H., et al. (2022). Developing an iOS application that uses machine learning for the automated diagnosis of blepharoptosis. Graefes Arch. Clin. Exp. Ophthalmol. 260 (4), 1329–1335. doi:10.1007/s00417-021-05475-8

Tan, E., Lin, F., Sheck, L., Salmon, P., and Ng, S. (2017). A practical decision-tree model to predict complexity of reconstructive surgery after periocular basal cell carcinoma excision. J. Eur. Acad. Dermatol. Venereol. 31 (4), 717–723. doi:10.1111/jdv.14012

Thrall, J. H., Li, X., Li, Q., Cruz, C., Do, S., Dreyer, K., et al. (2018). Artificial intelligence and machine learning in radiology: Opportunities, challenges, pitfalls, and criteria for success. J. Am. Coll. Radiol. 15 (3), 504–508. doi:10.1016/j.jacr.2017.12.026

Ting, D. S. W., Cheung, C. Y. L., Lim, G., Tan, G. S. W., Quang, N. D., Gan, A., et al. (2017). Development and validation of a deep learning system for diabetic retinopathy and related eye diseases using retinal images from multiethnic populations with diabetes. JAMA 318 (22), 2211–2223. doi:10.1001/jama.2017.18152

Totschnig, W. (2020). Fully autonomous AI. Sci. Eng. Ethics 26 (5), 2473–2485. doi:10.1007/s11948-020-00243-z

Umapathy, L., Winegar, B., MacKinnon, L., Hill, M., Altbach, M. I., Miller, J. M., et al. (2020). Fully automated segmentation of globes for volume quantification in CT images of orbits using deep learning. AJNR. Am. J. Neuroradiol. 41 (6), 1061–1069. doi:10.3174/ajnr.A6538

Van Brummen, A., Owen, J. P., Spaide, T., Froines, C., Lu, R., Lacy, M., et al. (2021). PeriorbitAI: Artificial intelligence automation of eyelid and periorbital measurements. Am. J. Ophthalmol. 230, 285–296. doi:10.1016/j.ajo.2021.05.007

van der Heijden, A. A., Abramoff, M. D., Verbraak, F., van Hecke, M. V., Liem, A., and Nijpels, G. (2018). Validation of automated screening for referable diabetic retinopathy with the IDx-DR device in the Hoorn Diabetes Care System. Acta Ophthalmol. 96 (1), 63–68. doi:10.1111/aos.13613

Wang, L., Ding, L., Liu, Z., Sun, L., Chen, L., Jia, R., et al. (2020). Automated identification of malignancy in whole-slide pathological images: Identification of eyelid malignant melanoma in gigapixel pathological slides using deep learning. Br. J. Ophthalmol. 104 (3), 318–323. doi:10.1136/bjophthalmol-2018-313706

Wang, M., Shen, L. Q., Pasquale, L. R., Wang, H., Li, D., Choi, E. Y., et al. (2020). An artificial intelligence approach to assess spatial patterns of retinal nerve fiber layer thickness maps in glaucoma. Transl. Vis. Sci. Technol. 9 (9), 41. doi:10.1167/tvst.9.9.41

Wang, Y., Sun, J., Liu, X., Li, Y., Fan, X., and Zhou, H. (2022). Robot-assisted orbital fat decompression surgery: First in human. Transl. Vis. Sci. Technol. 11 (5), 8. doi:10.1167/tvst.11.5.8

Weber, A. L., and Sabates, N. R. (1996). Survey of CT and MR imaging of the orbit. Eur. J. Radiol. 22 (1), 42–52. doi:10.1016/0720-048x(96)00737-1

Wu, H., Luo, B., Zhao, Y., Yuan, G., Wang, Q., Liu, P., et al. (2022). Radiomics analysis of the optic nerve for detecting dysthyroid optic neuropathy, based on water-fat imaging. Insights Imaging 13 (1), 154. doi:10.1186/s13244-022-01292-7

Wu, X., Huang, Y., Liu, Z., Lai, W., Long, E., Zhang, K., et al. (2019). Universal artificial intelligence platform for collaborative management of cataracts. Br. J. Ophthalmol. 103 (11), 1553–1560. doi:10.1136/bjophthalmol-2019-314729

Xie, X., Yang, L., Zhao, F., Wang, D., Zhang, H., He, X., et al. (2022). A deep learning model combining multimodal radiomics, clinical and imaging features for differentiating ocular adnexal lymphoma from idiopathic orbital inflammation. Eur. Radiol. 32 (10), 6922–6932. doi:10.1007/s00330-022-08857-6

Yang, L. W. Y., Ng, W. Y., Foo, L. L., Liu, Y., Yan, M., Lei, X., et al. (2021). Deep learning-based natural language processing in ophthalmology: Applications, challenges and future directions. Curr. Opin. Ophthalmol. 32 (5), 397–405. doi:10.1097/ICU.0000000000000789

Ye, Y., Xiong, Y., Zhou, Q., Wu, J., Li, X., and Xiao, X. (2020). Comparison of machine learning methods and conventional logistic regressions for predicting gestational diabetes using routine clinical data: A retrospective cohort study. J. Diabetes Res. 2020, 4168340. doi:10.1155/2020/4168340

Yin, X. X., Sun, L., Fu, Y., Lu, R., and Zhang, Y. (2022). U-Net-Based medical image segmentation. J. Healthc. Eng. 2022, 4189781. doi:10.1155/2022/4189781

Yixin, Q., Bingying, L., Shuiling, L., Xianchai, L., Zhen, M., Xingyi, L., et al. (2022). Effect of multichannel convolutional neural network-based model on the repair and aesthetic effect of eye plastic surgery patients. Comput. Math. Methods Med. 2022, 5315146. doi:10.1155/2022/5315146

Yoo, T. K., Choi, J. Y., and Kim, H. K. (2020). A generative adversarial network approach to predicting postoperative appearance after orbital decompression surgery for thyroid eye disease. Comput. Biol. Med. 118, 103628. doi:10.1016/j.compbiomed.2020.103628

Zhai, G., Yin, Z., Li, L., Song, X., and Zhou, Y. (2021). Automatic orbital computed tomography coordinating method and quantitative error evaluation based on signed distance field. Acta Radiol. 62 (1), 87–92. doi:10.1177/0284185120914029

Keywords: artificial intelligence, deep learning, orbital and eyelid diseases, ophthalmic plastic surgery, orbital computed tomography, orbital magnetic resonance imaging

Citation: Bao X-L, Sun Y-J, Zhan X and Li G-Y (2022) Orbital and eyelid diseases: The next breakthrough in artificial intelligence?. Front. Cell Dev. Biol. 10:1069248. doi: 10.3389/fcell.2022.1069248

Received: 13 October 2022; Accepted: 08 November 2022;

Published: 18 November 2022.

Edited by:

Weihua Yang, Jinan University, China

Reviewed by:

Jing Rao, Shenzhen Eye Hospital, China

Yongjin Zhou, Shenzhen University, China

Kun Liu, Shanghai First People’s Hospital, China

Copyright © 2022 Bao, Sun, Zhan and Li. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Guang-Yu Li, liguangyu@aliyun.com

Xiao-Li Bao

Ying-Jian Sun

Guang-Yu Li