A preliminary evaluation of data-informed mentoring at an Australian medical school

Submitted: 17 March 2020

Accepted: 3 June 2020

Published online: 5 January, TAPS 2021, 6(1), 60-69

https://doi.org/10.29060/TAPS.2021-6-1/OA2239

Frank Bate1, Sue Fyfe2, Dylan Griffiths1, Kylie Russell1, Chris Skinner1, Elina Tor1

1University of Notre Dame Australia, Australia; 2Curtin University, Australia

Abstract

Introduction: In 2017, the School of Medicine of the University of Notre Dame Australia implemented a data-informed mentoring program as part of a more substantial shift towards programmatic assessment. Data-informed mentoring, in an educational context, can be challenging, with boundaries between mentor, coach and assessor roles sometimes blurred. Mentors may be required to concurrently develop trust relationships, guide learning and development, and assess student performance. The place of data-informed mentoring within an overall assessment design can also be ambiguous. This paper is a preliminary evaluation study of the implementation of data-informed mentoring at a medical school, focusing specifically on how students and staff reacted and responded to the initiative.

Methods: Action research framed and guided the conduct of the research. Mixed methods, involving qualitative and quantitative tools, were used with data collected from students through questionnaires and mentors through focus groups.

Results: Both students and mentors appreciated data-informed mentoring and indications are that it is an effective augmentation to the School’s educational program, serving as a useful step towards the implementation of programmatic assessment.

Conclusion: Although data-informed mentoring is valued by students and mentors, more work is required to: better integrate it with assessment policies and practices; stimulate students’ intrinsic motivation; improve task design and feedback processes; develop consistent learner-centred approaches to mentoring; and support data-informed mentoring with appropriate information and communications technologies. The initiative is described using an ecological model that may be useful to organisations considering data-informed mentoring.

Keywords: Data-Informed Mentoring, Mentoring, Programmatic Assessment, E-Portfolio

Practice Highlights

- Students and mentors appreciated the introduction of data-informed mentoring.

- Assessment policies and practices should be integrated with data-informed mentoring.

- Data-informed mentoring presents curriculum challenges in task design and framing feedback.

- The student context informs the data-informed mentoring approach (learner-centred to mentor-directed).

- Data-informed mentoring requires supportive information and communications technologies.

I. INTRODUCTION

An often-cited definition of mentoring highlights the role of experienced and empathetic others in guiding students to re-examine their ideas, learning and personal and professional development (Standing Committee on Postgraduate Medical and Dental Education, 1998).

Heeneman and de Grave (2017) identify some subtle differences between traditional conceptions of mentoring and the type of mentoring that is required under programmatic assessment, which in this paper we refer to as Data-Informed Mentoring (D-IM). For example, D-IM is embedded in a curriculum in which rich data on student progress arises from student interaction with assessment tasks, informing and enhancing their progress (see Appendix). Further, in programmatic assessment, the learning portfolio is typically the setting in which the mentor-mentee relationship develops. This setting brings together institutional imperatives (e.g. assessable tasks), and personal imperatives such as evidence of competence and personal reflection. Situating mentoring in a curriculum and assessment framework impacts upon the mentoring relationship.

Meeuwissen, Stalmeijer, and Govaerts (2019) propose that a different type of mentoring is required under programmatic assessment. Mentors interpret data and feedback provided by content experts across domains of learning, thus providing an evidence base to facilitate student reflection. They might also take on a variety of roles (e.g. critical friend, coach, assessor) that could influence the mentoring relationship, including the level of trust that is established with the student. These challenges suggest that conventional definitions of mentoring might not capture the essence of D-IM. Whilst the availability of rich information potentially enhances the mentoring experience and personalises learning, it also poses challenges: mentors and students must make sense of and act upon this information; students might focus on issues that fall outside the scope of the data provided (e.g. their wellbeing); and mentors may struggle to delineate boundaries between multiple roles or to determine where their scope of practice, as a mentor, begins and ends.

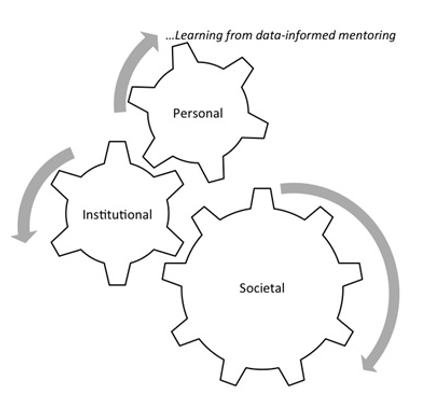

Mentoring is a social construct and as such is best considered through a holistic lens taking account of societal, institutional and personal factors (Sambunjak, 2015). The current study adopted Sambunjak’s “ecological model” (2015, p. 48) as a framework to help understand the impact of D-IM (Figure 1). Societal, institutional and personal forces are inter-related. For example, a student’s approach to D-IM might be influenced by financial circumstances resulting in the need to work part-time (societal); a medical school’s assessment policy (institutional); or a student’s learning style (personal). The model is presented as a set of cogs where the optimal educational experience is achieved if all elements work in harmony. The study uses the ecological model to help answer the central research question that guided the study: how did students and staff react and respond to D-IM?

Figure 1. An ecological framework for conceptualizing D-IM (modified from Sambunjak, 2015)

This paper shares findings from the study derived from the first two years of data collection. Its focus is on the implementation of D-IM and how students and staff reacted to this implementation (Kirkpatrick & Kirkpatrick, 2006).

II. METHODS

The School of Medicine Fremantle (the School) of the University of Notre Dame Australia introduced D-IM as part of its incremental approach to programmatic assessment. The School offers a four-year doctor of medicine (MD) with around 100 students enrolling each year. The first two years are pre-clinical consisting of problem-based learning supported by lectures and small group learning. The final two years involve clinical rotations mostly located at hospital sites. Each year of the MD constitutes a course that students need to pass in order to progress to the next year. The School’s assessment mix includes knowledge-based examinations (multiple choice/case-based), Objective Structured Clinical Examinations, work-based assessments and rubric-based assessments (e.g. reflections). Examinations are administered mid-year and end-of-year for pre-clinical students and end-of-year for students in the clinical years.

All performance data informs D-IM. Regular feedback from assessors is provided and collated in an e-portfolio (supported by Blackboard) so that students have opportunities to reflect on their progress and plan future learning. Each year, students are allocated a mentor who has access to their e-portfolios.

Mentoring was provided by 26 pre-clinical de-briefing (CD) tutors whose role was to facilitate student reflection on their learning and support and guide their interpretation of the feedback they had received. D-IM was introduced to first year students in 2017 and first and second year students in 2018. Three mentoring meetings were conducted per student per year. CD tutors also have a role in assessing student performance and providing feedback. Each CD tutor has a CD group which is also their mentoring group (8-10 students). However, tasks are assessed and feedback is provided by a different tutor. This means that mentor and assessor functions are separated.

In preparation for the implementation of D-IM, tutors were provided with targeted professional development, which unpacked the mentoring role and provided examples of how performance data can be used to underpin mentoring sessions.

The University of Notre Dame Australia Human Research Ethics Committee (HREC) provided ethical approval for the research, and a research team was formed in 2017. Action research guided the conduct of the research, as it aims to understand and influence the change process. Action research is the “systematic collection and analysis of data for the purpose of taking action and making change” (Gillis & Jackson, 2002, p. 264). It involves cycles of planning, implementing, observing and reflecting on the processes and consequences of the action. The subjects of the research have input into cycles and influence changes that are made as a result of feedback and reflection (Kemmis & McTaggart, 2000). Each cycle of the research runs for one year so that planning, action, observation and reflection can inform the next iteration.

Mixed methods research, involving qualitative and quantitative methods, was used. Data were collected each year from student questionnaires and focus groups which included mentors. Participation in the research was underpinned by a Statement of Informed Consent. For the questionnaire, consent constituted ticking a box on an online form. For the focus groups, a physical form was signed before taking part. The student questionnaire comprised qualitative and quantitative components and posed 9 statements on mentoring. The questionnaire was critically appraised by a panel of 8 academic staff in May 2017, and it was agreed that the questionnaire had attained face validity before it was administered in September 2017.

Students were asked to rate each statement of the questionnaire on a Likert-type scale: Strongly Disagree, Disagree, Neutral, Agree or Strongly Agree. For interpretation, a numerical value was assigned to each response, from 1=Strongly Disagree through to 5=Strongly Agree. Quantitative data were downloaded from SurveyMonkey as Excel files for extraction of descriptive statistics and then imported into SPSS Version 25 for statistical analysis. Two statistical tests were conducted. The first test, a non-parametric median test on students’ perception of each aspect of D-IM, is consistent with the purpose of action research to inform future practice. Responses to individual survey items using a Likert-type response scale are ordinal in nature, and the distributions are not identical for the two cohorts; therefore, a median test was used. This statistic compares the responses from two independent groups to individual survey items, with reference to the overall pooled median rating for the two cohorts combined. More specifically, the median test examines whether the proportions of responses above and below the overall pooled median rating are the same in each of the two cohorts, for each individual item. For the second test, an aggregate mean score (an integer) was calculated from the students’ responses to the nine statements in each cohort. The mean score for each cohort provided an overall indication of the extent to which respondents were satisfied with the mentoring program. A parametric test (independent t-test) was used to examine whether there were statistically significant differences in mean scores between the two independent cohorts.
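The tabulation step behind the median test can be sketched as follows. This is an illustrative sketch only: the study used SPSS, the responses below are hypothetical, and the helper names `code_responses` and `median_split` are assumptions introduced for the example. It codes Likert labels as 1 to 5, finds the pooled median across two groups, and counts responses above and at or below that median in each group.

```python
from statistics import median

# Numerical coding described in the text: 1 = Strongly Disagree ... 5 = Strongly Agree.
LIKERT = {
    "Strongly Disagree": 1,
    "Disagree": 2,
    "Neutral": 3,
    "Agree": 4,
    "Strongly Agree": 5,
}


def code_responses(labels):
    """Convert Likert labels to their numerical codes (hypothetical helper)."""
    return [LIKERT[label] for label in labels]


def median_split(group_a, group_b):
    """Return the pooled median and (above, at_or_below) counts for each group."""
    pooled = median(group_a + group_b)

    def split(group):
        above = sum(1 for rating in group if rating > pooled)
        return above, len(group) - above

    return pooled, split(group_a), split(group_b)


# Hypothetical responses to a single questionnaire item from two cohorts.
cohort_a = code_responses(
    ["Agree", "Strongly Agree", "Neutral", "Agree", "Disagree"]
)
cohort_b = code_responses(
    ["Agree", "Agree", "Strongly Agree", "Strongly Agree", "Neutral", "Agree"]
)

pooled, counts_a, counts_b = median_split(cohort_a, cohort_b)
print("pooled median:", pooled)
print("cohort A (above, at or below):", counts_a)
print("cohort B (above, at or below):", counts_b)
```

The four counts form the 2×2 table on which the median test statistic is computed for each item.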

Qualitative data were coded from students’ comments to two open-ended questions in the student questionnaire: (1) Please comment on any aspect of the learning portfolio that you feel were particularly beneficial for your learning journey; and (2) Please comment on any aspect of the learning portfolio that could be improved in the future. Qualitative data from mentors, gathered through three focus groups in both 2017 and 2018, were recorded, transcribed and imported into NVivo 12 to help identify patterns across and within data sources. Data saturation was achieved after two focus group iterations. Two researchers independently coded students’ comments and staff transcripts and then met to discuss and resolve differences in interpretation. These codes were then presented to the broader team, where ideas were further unpacked and themes developed using Braun and Clarke’s (2006) thematic approach to analysis.

III. RESULTS

In 2017, 29% of the Year 1 student cohort responded to the questionnaire (n=33) and in 2018, the response fraction across both Year 1 and Year 2 was 47% (n=98). The 2017 student cohort is described as Cohort 1 and the 2018 student cohort as Cohort 2. The response fraction for Cohort 1 increased from 29% in 2017 to 46% in 2018. In 2017, 21 staff participated in focus groups (7 of whom were mentors). In 2018, 17 staff took part (9 mentors). Tables 1-2 compare student responses to the 9 items on mentoring on the following basis:

- Over time in 2017 and 2018 within Cohort 1 (Table 1);

- For first year students–Cohort 1 2017 and Cohort 2 2018 (Table 2).

For each table, median ratings are shown for each item along with the results of the median test to discern statistically significant differences between or within cohorts. Table 1 compares Cohort 1 responses to D-IM over time.

| Item | Overall Pooled Median* | Cohort 1 2017 (n=32): n > pooled median | Cohort 1 2017 (n=32): n ≤ pooled median | Cohort 1 2018 (n=51): n > pooled median | Cohort 1 2018 (n=51): n ≤ pooled median | Median Test |
|---|---|---|---|---|---|---|
| The mentoring process was well organised | 4 | 6 | 26 | 6 | 45 | χ² = 0.776; df = 1; p = 0.378 |
| My mentor was personally very well organised | 5 | 0 | 32 | 0 | 50 | n/a** |
| There were an appropriate number of mentoring meetings throughout the year | 4 | 2 | 30 | 4 | 47 | χ² = 0.074; df = 1; p = 0.785 |
| My mentor was respectful | 5 | 0 | 32 | 0 | 51 | n/a** |
| My mentor listened to me | 5 | 0 | 32 | 0 | 50 | n/a** |
| My mentor asked thought-provoking questions which helped me to reflect | 4 | 10 | 22 | 12 | 39 | χ² = 0.602; df = 1; p = 0.438 |
| My mentor added value to my learning | 4 | 10 | 22 | 11 | 40 | χ² = 0.975; df = 1; p = 0.323 |
| My mentor helped me to set future goals that were achievable | 4 | 9 | 23 | 11 | 40 | χ² = 0.462; df = 1; p = 0.497 |
| The summaries provided of my performance in the Blackboard Community Site were useful in helping me to reflect on my progress | 3 | 17 | 16 | 14 | 37 | χ² = 4.983; df = 1; p = 0.026*** |

Note. *In the median test, a comparison is made between the median rating in each group to the ‘overall pooled median’ from both groups. **Values are less than or equal to the overall pooled median therefore Median Test could not be performed. ***Significant at p < 0.05 level.

Table 1. Student Perceptions of D-IM within Cohort 1 in 2017 and 2018–Median Tests for Individual Items

The only statistically significant difference noted for Cohort 1 was for the summaries of performance provided in Blackboard that were designed to underpin D-IM. The data provided in these summaries was less valued by students who engaged with D-IM in their second year.
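The median test statistics in Table 1 can be approximated directly from the cell counts. The sketch below assumes the statistic is a Pearson chi-square on the 2×2 table without continuity correction; the study itself used SPSS, and the function name `median_test_2x2` is hypothetical. When no response in either cohort exceeds the pooled median, a marginal total is zero and the statistic is undefined, which corresponds to the n/a entries in the table.

```python
from math import erfc, sqrt


def median_test_2x2(above_a, below_a, above_b, below_b):
    """Pearson chi-square (df = 1, no continuity correction) for a median-test table.

    Each cohort contributes a count above the pooled median and a count at or
    below it. Returns (chi2, p), or None when a marginal total is zero.
    """
    n = above_a + below_a + above_b + below_b
    row_a = above_a + below_a          # cohort A total
    row_b = above_b + below_b          # cohort B total
    col_above = above_a + above_b      # all responses above the pooled median
    col_below = below_a + below_b      # all responses at or below it
    if 0 in (row_a, row_b, col_above, col_below):
        return None  # test cannot be performed
    chi2 = (
        n * (above_a * below_b - below_a * above_b) ** 2
        / (row_a * row_b * col_above * col_below)
    )
    # For df = 1, the chi-square survival function equals erfc(sqrt(x / 2)).
    p = erfc(sqrt(chi2 / 2))
    return chi2, p


# Counts from Table 1 for the Blackboard summaries item (2017 vs 2018).
summaries = median_test_2x2(17, 16, 14, 37)
print("Blackboard summaries:", summaries)

# Counts from Table 1 for "My mentor was respectful": nobody above the median.
respectful = median_test_2x2(0, 32, 0, 51)
print("Respectful:", respectful)
```

With the Table 1 counts for the Blackboard summaries item, this gives χ² ≈ 4.98 and p ≈ 0.026, in line with the reported result.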

The aggregate mean score in response to the statements on D-IM in the survey was positive in 2017 (M=4.02; SD=0.62; n=32). Mentoring continued to be well perceived by Cohort 1 as they progressed to second year in 2018 (M=3.80; SD=0.67; n=51). The slight difference in aggregate mean scores between 2017 and 2018 is not statistically significant (t=1.571; df=82, p=0.120). Table 2 compares first year students’ perceptions of D-IM.

| Item | Overall Pooled Median* | Cohort 1 (n=32): n > pooled median | Cohort 1 (n=32): n ≤ pooled median | Cohort 2 (n=47): n > pooled median | Cohort 2 (n=47): n ≤ pooled median | Median Test |
|---|---|---|---|---|---|---|
| The mentoring process was well organised | 4 | 6 | 26 | 9 | 37 | χ² = 0.008; df = 1; p = 0.928 |
| My mentor was personally very well organised | 5 | 0 | 32 | 0 | 47 | n/a** |
| There were an appropriate number of mentoring meetings throughout the year | 4 | 2 | 30 | 8 | 39 | χ² = 0.998; df = 1; p = 0.158 |
| My mentor was respectful | 5 | 0 | 32 | 0 | 47 | n/a** |
| My mentor listened to me | 5 | 0 | 32 | 0 | 47 | n/a** |
| My mentor asked thought-provoking questions which helped me to reflect | 4 | 10 | 22 | 18 | 29 | χ² = 0.413; df = 1; p = 0.520 |
| My mentor added value to my learning | 4 | 10 | 22 | 17 | 30 | χ² = 0.205; df = 1; p = 0.651 |
| My mentor helped me to set future goals that were achievable | 4 | 9 | 23 | 17 | 30 | χ² = 0.558; df = 1; p = 0.455 |
| The summaries provided of my performance in the Blackboard Community Site were useful in helping me to reflect on my progress | 3 | 17 | 16 | 17 | 30 | χ² = 1.868; df = 1; p = 0.172 |

Note. *In the median test, a comparison is made between the median rating in each group to the ‘overall pooled median’ from both groups. **Values are less than or equal to the overall pooled median therefore Median Test could not be performed.

Table 2. First Year Student Perceptions of D-IM –Median Tests between Cohort 1 and Cohort 2 for Individual Items

No statistically significant differences were noted between cohorts 1 and 2.

The aggregate mean score in response to the statements on D-IM in the survey was positive for Cohort 1 in 2017 (M=4.02; SD=0.62; n=32). Equally positive responses were noted in Cohort 2 in 2018 (M=3.91; SD=0.79; n=47). The difference between aggregate mean scores for first year students’ perceptions is not statistically significant (t=0.686; df=78, p=0.495).
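The aggregate comparisons above can be approximated from the published summary statistics alone. The following is a minimal sketch of a pooled-variance independent t statistic, assuming equal variances; `pooled_t` is a hypothetical helper, not the SPSS procedure used in the study. Because the published means and standard deviations are rounded, the output is expected to be close to, rather than identical with, the reported values.

```python
from math import sqrt


def pooled_t(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Independent-samples t statistic with pooled variance (equal variances assumed).

    Returns (t, df) computed from each group's mean, standard deviation and size.
    """
    df = n_a + n_b - 2
    pooled_var = ((n_a - 1) * sd_a ** 2 + (n_b - 1) * sd_b ** 2) / df
    standard_error = sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (mean_a - mean_b) / standard_error, df


# Summary statistics as reported in the text (M, SD, n).
# Cohort 1 in 2017 versus Cohort 1 in 2018.
t_within, df_within = pooled_t(4.02, 0.62, 32, 3.80, 0.67, 51)
# Cohort 1 in 2017 versus Cohort 2 in 2018 (first year students).
t_between, df_between = pooled_t(4.02, 0.62, 32, 3.91, 0.79, 47)

print(f"Cohort 1, 2017 vs 2018: t = {t_within:.3f}, df = {df_within}")
print(f"Cohort 1 vs Cohort 2:   t = {t_between:.3f}, df = {df_between}")
```

Converting t to a p-value requires the t distribution, which is not part of the Python standard library, so that step is left out of this sketch.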

Data from Tables 1 and 2 reveal that students are highly satisfied with three aspects of mentoring: the personal organisation of the mentor, along with their respectful and listening attributes. Students were also satisfied with the mentoring process, the number of mentoring meetings, the ability of the mentoring to assist in reflection and to add value to their learning, and the propensity of the mentor to assist in action-planning. However, the summaries provided in the Blackboard environment were a source of dissatisfaction for students.

As discussed, qualitative data were collected from students through the questionnaire and from staff through focus groups. The research team collated these data and confirmed that they corroborated the quantitative results, with students and mentors appreciating the introduction of D-IM. For example, “Mentor sessions are important in providing support to students and…are a welcome introduction” (Yr1 Student, 2017); “Mentoring was useful to develop self-directed learning and to check where you were” (Yr2 Student, 2018); “You get to know the students, things were revealed which would not have been otherwise” (Mentor, 2017); and “Mentoring enabled me to facilitate more, listen more. Definitely a difference when you’re one-on-one with somebody” (Mentor, 2018).

In tune with the action research method adopted by the study which seeks to identify and respond to opportunities for improvement, the Research Team identified three concerns from the qualitative data: differing views of the purpose of D-IM and the role of the mentor; the provision of student feedback and information and communications technologies (ICT); and workload.

A. Differing Views of the Purpose of D-IM and the Role of Mentor

Mentors had differing conceptions of the purpose of D-IM and the role of a mentor. Some mentors perceived their primary function to be one of facilitating reflection and being encouraging whilst others were more directive, providing advice or sharing their own experiences. “I was…a sounding board to prompt their thoughts about how their progress was going. Rather than offering ways of solving problems it was more pointing where problems might lie and encouraging them to think of solutions” (Mentor, 2017); “The basic rule is to guide them… guide them properly, maybe to get them to change their study strategies and other things” (Mentor, 2017).

B. Provision of Student Feedback and ICT

Students reported that feedback was inconsistent in timeliness and quality. Often feedback lacked guidance for improvement or arrived too late to help the student improve their learning: “More in-depth feedback on work, and returned in a timeframe that allows it to be relevant to our learning” (Yr1 Student, 2018); “Marking seemed thoughtless and halfhearted” (Yr2 Student, 2018). The use of a Blackboard Wiki to collate and present data points was also less than ideal, with students finding the site difficult to navigate and use, although they generally reported that it was safe and secure.

C. Workload

Students understood the role of reflection and appreciated having a mentor although there was some misunderstanding of the role of the portfolio with some students seeing it as extra work: “The amount of work required…was disproportionate” (Yr2 Student, 2018). Some students felt that the added stress and anxiety detracted from their study of medicine: “The portfolio actually detracts from spending time learning content that is essential to clinical years” (Yr2 Student, 2018). These concerns needed to be addressed by the School and are discussed in the context of changes that have and will be made to D-IM for preclinical students in the School.

IV. DISCUSSION

On the whole there was a positive response to D-IM implementation by students and staff. This response is consistent with Frei, Stamm, and Buddeberg-Fischer (2010, p. 1) who found that the “personal student-faculty relationship is important in that it helps students to feel that they are benefiting from individual advice.”

The findings of the research, however, reveal some tensions between the various elements of Sambunjak’s (2015) ecological model that link to the three areas of concern identified in the research. These tensions are shown in Figure 2.

Figure 2. The ecological framework to explore tensions in D-IM

A. Purpose and Role of Mentors and D-IM

The role of the mentor at the School is to support and guide students, and this role was not confused with other functions such as content expert or assessor. In this respect, the role conflict described by Meeuwissen et al. (2019) and Heeneman and de Grave (2017) was not evident at the School. However, mentoring approaches were situated on a continuum between learner-centred and mentor-directed. It is probable that the mentor’s style–empowering, checking or directing (Meeuwissen et al., 2019, p. 605)–and their potentially different view of their role impacted on how D-IM sessions played out. Three ways of understanding the role of mentor in medical education have been identified: someone who can answer questions and give advice, someone who shares what it means to be a doctor and someone who listens and stimulates reflection (Stenfors-Hayes, Hult, & Owe Dahlgren, 2011). In a study of mentoring styles of beginning teachers, Richter et al. (2013) found that the mentor’s beliefs about learning have the greatest impact on the quality of the mentoring experience. Although professional development was provided to mentors on their role as facilitators of reflection and these issues were outlined and discussed, there were differences in interpretation of the role in the D-IM context.

Heeneman and de Grave (2017) argue that students need to be self-directed in order to be effective medical professionals. It is posited that a number of factors can influence the extent to which the mentor directs proceedings including the mentor’s experience, role clarity, rate of student progress, depth of student reflections and the perceived importance of the data required for assessment purposes.

In this study most students engaged positively with D-IM, albeit with variations in the extent and quality of reflection and action planning. A slight decrease in students’ enthusiasm towards D-IM was noted as they progressed from first to second year. This could be related to the novelty of D-IM diminishing over time, a pattern that has been evident in other educational technology innovations (Kuykendall, Janvier, Kempton, & Brown, 2012). However, students also have a different mentor in each year. According to Sambunjak (2015), mentoring requires commitment sustained over a long period of time. At Maastricht University, for example, Heeneman and de Grave (2017) report that students are allocated the same mentor for a four-year medical course. It is, therefore, likely that in the current study the short timeframe for mentors to establish student relationships, and the introduction of a different mentor each year, contributed to a reduction in student satisfaction.

B. Feedback and ICT Support

D-IM is dependent on quality data: that is, on the perceived value of the tasks that students engage with and of the feedback that they receive on these tasks. Findings suggest that students found some tasks repetitive and feedback belated and superficial. Better task design and feedback practices are required. This finding is consistent with those of Bate, Macnish and Skinner (2016) in a study of task design within a learning portfolio. Findings also indicated dissatisfaction with the Blackboard ICT environment. The portal was not intuitive and the structure and requirements for use of the template did not stimulate the desired level of reflection.

C. Workload

Students at the School are “time poor” and many work part-time whilst studying. They are graduate entrants used to achieving academic success. Most are millennials comfortable with distilling and manipulating data and using online technologies. These characteristics are consistent with what Waljee, Chopra, and Saint (2018) see as the new breed of medical students, being accustomed to distilling information and desirous of rapid career advancement. In these circumstances, it is unsurprising that students valued D-IM as it promoted focused data-driven discussion on their progress. However, it is also unsurprising that students were critical of anything that, in their opinion, did not support the “study of medicine”. Although students were sometimes critical of tasks that fed into D-IM (Bate et al., 2020), the reflective and action planning components of D-IM were not onerous and were at any rate optional.

For most students, grades rather than learning were paramount and this created a competitive environment which fuelled strategic learning in engaging with tasks underpinning D-IM. The School’s Assessment Policy has implications here. Progression is determined by passing discrete assessments, which causes students to focus on grades rather than learning. These dispositions play out in D-IM sessions where, for example, goals are sometimes framed around passing examinations rather than addressing deficits in understanding. The School also distinguishes between formative and summative assessment, with the result that formative assessments are less valued by students. Opportunities to test understanding through formative testing are sometimes not taken up, resulting in less information for students and their mentor to gauge learning progress.

Bhat, Burm, Mohan, Chahine, and Goldszmidt (2018, p. 620) identified a set of “threshold concepts” in medicine that are crucial for students transitioning into clinical practice. Among these are self-directed, metacognitive and collaborative dispositions to learning. However, for a student in the preclinical years, these threshold concepts are not perceived to be the important factors that determine their progress through the course and their aim to become a doctor. Thus, the fact that students value mentoring but feel that reflecting on their performance through D-IM is time-consuming and unrelated to their course progression is a source of tension within the model.

D. Actions as a Result of the Study

The action research approach of this study meant that in all results the Research Team was looking for ways to improve the system. Some issues could be improved quickly. A refinement of the Blackboard environment and a change to a software solution called SONIA was implemented in 2019 to improve the ICT interface and reduce workload. Continuing professional development (PD) for staff is undertaken and takes the research results into account. Within the mentor PD program, the Research Team saw that mentoring requires mentors to be able to diagnose the readiness and willingness of students to consider their educational journey. This means that, whilst the D-IM program needs a consistent view of D-IM where mentors see their role as facilitating reflection, different mentoring skills and behaviours are needed by mentors for different students. PD is also needed for students so that they understand the relationship between their achievement of learning and the role of D-IM in their journey.

Some issues are longer-term or resource dependent. A focus on the role of feedback in the system, especially for student reflection and its timeliness for mentoring sessions and action planning is critical to making D-IM valued by students. However, it is not always possible for staff to provide feedback in an optimum timeframe although the quality of the feedback can be improved by clear guidelines, expectations and an intuitive online interface.

Of great complexity and more difficult to resolve is the tension between developing the “threshold concepts” (Bhat et al., 2018), the generic skills which are built on self-reflection and are supported by D-IM, and the ways in which a student progresses through the course. Progression is governed by School-based rules, which produce a framework within which D-IM needs to operate.

V. LIMITATIONS OF THE STUDY

The study was conducted at one university and although it will ultimately cover a six-year timeframe, findings should be gauged within the context of this setting. Relatively low response rates were noted, and selection bias is a possibility, with students most engaged with D-IM completing the questionnaire. Although professional development was provided to underpin the mentoring role, there was variation in the way tutors interpreted this role. The study was conducted at a time when other changes were occurring at the School (e.g. development of more continuous forms of assessment) and these changes might have impacted on D-IM. The questionnaire used in the study contained nine questions on mentoring. To gain a more nuanced understanding of D-IM at the School, it may be useful to use a comprehensive and validated questionnaire (e.g. Heeneman & de Grave, 2019) capturing the perceptions of mentees and mentors.

VI. CONCLUSION

The School aims to create quality, patient-centred and compassionate doctors who are lifelong learners (Candy, 2006). D-IM is an effective augmentation to the School’s educational program and the paper has demonstrated that it was well received by students and staff. Future directions include: consideration of D-IM in clinical mentoring; development of more consistent learner-centred approaches to mentoring; improved task design and feedback; support for D-IM with appropriate ICT; and better integration of D-IM with assessment policies and practices.

Notes on Contributors

Associate Professor Frank Bate completed his PhD at Murdoch University. He is the Director of Medical and Health Professional Education at the School of Medicine Fremantle, University of Notre Dame Australia. He conceptualised and led the research, and was the primary author responsible for developing, reviewing and improving the manuscript.

Professor Sue Fyfe attained her PhD at the University of Western Australia and is an adjunct professor at Curtin University. She assisted in conceptualising the research design, conducted the qualitative data analysis and made a significant contribution to reviewing and improving the manuscript.

Dr Dylan Griffiths has a PhD from the University of Essex and is the Quality Assurance Manager at the School of Medicine Fremantle, University of Notre Dame Australia. He conducted data collection and assisted with preliminary analysis.

Associate Professor Kylie Russell obtained her PhD from the University of Notre Dame Australia. She is currently a Project Officer at the School of Medicine Fremantle, University of Notre Dame Australia. She assisted in the development of the research methodology and made a contribution to reviewing and improving the manuscript.

Associate Professor Chris Skinner completed his PhD at the University of Western Australia. He is Domain Chair of Personal and Professional development at the School of Medicine Fremantle, University of Notre Dame Australia. He assisted in conceptualising the research design and made a contribution to reviewing and improving the manuscript.

Associate Professor Elina Tor completed her PhD at Murdoch University and is the Associate Professor of Psychometrics at the School of Medicine Fremantle, University of Notre Dame Australia. She helped conceptualise the research design, led the quantitative data analysis, and made a significant contribution to reviewing and improving the manuscript.

Ethical Approval

The University of Notre Dame Australia Human Research Ethics Committee (HREC) has provided ethical approval for the research (Approval Number 017066F).

Acknowledgement

The authors acknowledge the thoughtful and insightful feedback provided by staff and students.

Funding

No internal or external funding was sought to conduct this research.

Declaration of Interest

There is no conflict of interest to declare.

References

Bate, F., Fyfe, S., Griffiths, D., Russell, K., Skinner, C., & Tor, E. (2020). Does an incremental approach to implementing programmatic assessment work: Reflections on the change process. MedEdPublish, 9(1), 55. https://doi.org/10.15694/mep.2020.000055.1

Bate, F., Macnish, J., & Skinner, C. (2016). The cart before the horse? Exploring the potential of ePortfolios in a Western Australian medical school. International Journal of ePortfolio, 6(2), 85-94.

Bhat, C., Burm, S., Mohan, T., Chahine, S., & Goldszmidt, M. (2018). What trainees grapple with: A study of threshold concepts on the medicine ward. Medical Education, 52(6), 620–631. https://doi.org/10.1111/medu.13526

Braun, V., & Clarke, V. (2006). Using thematic analysis in psychology. Qualitative Research in Psychology, 3, 77-101. https://doi.org/10.1191/1478088706qp063oa

Candy, P. (2006). Promoting lifelong learning: Academic developers and the university as a learning organization. International Journal for Academic Development, 1(1), 7-18. https://doi.org/10.1080/1360144960010102

Frei, E., Stamm, M., & Buddeberg-Fischer, B. (2010). Mentoring programs for medical students: A review of PubMed literature 2000-2008. BMC Medical Education, 10(32), 1-14. https://doi.org/10.1186/1472-6920-10-32

Gillis, A., & Jackson, W. (2002). Research for Nurses: Methods and Interpretation. Philadelphia, PA: F.A. Davis Co.

Heeneman, S., & de Grave, W. (2017). Tensions in mentoring medical students toward self-directed and reflective learning in a longitudinal portfolio-based mentoring system – An activity theory analysis. Medical Teacher, 39(4), 368-376. https://doi.org/10.1080/0142159X.2017.1286308

Heeneman, S., & de Grave, W. (2019). Development and initial validation of a dual-purpose questionnaire capturing mentors’ and mentees’ perceptions and expectations of the mentoring process. BMC Medical Education, 19(133), 1-13. https://doi.org/10.1186/s12909-019-1574-2

Kemmis, S., & McTaggart, R. (2000). Participatory action research. In N. K. Denzin & Y. S. Lincoln (Eds.), Handbook of Qualitative Research (2nd ed., pp. 567-606). New York: Sage Publications.

Kirkpatrick, D., & Kirkpatrick, J. (2006). Evaluating Training Programs: The Four Levels (3rd ed.). Oakland, CA: Berrett-Koehler Publishers, Inc.

Kuykendall, B., Janvier, M., Kempton, I., & Brown, D. (2012). Interactive whiteboard technology: Promise and reality. In T. Bastiaens & G. Marks (Eds.), Proceedings of E-Learn 2012 – World Conference on E-Learning in Corporate, Government, Healthcare, and Higher Education 1 (pp. 685-690). Retrieved from Association for the Advancement of Computing in Education (AACE), https://www.learntechlib.org/p/41669

Meeuwissen, N., Stalmeijer, R., & Govaerts, M. (2019). Multiple-role mentoring: Mentors’ conceptualisations, enactments and role conflicts. Medical Education, 53, 605-615. https://doi.org/10.1111/medu.13811

Richter, D., Kunter, M., Lüdtke, O., Klusmann, U., Anders, Y., & Baumert, J. (2013). How different mentoring approaches affect beginning teachers’ development in the first years of practice. Teaching and Teacher Education, 36, 166-177. https://doi.org/10.1016/j.tate.2013.07.012

Sambunjak, D. (2015). Understanding the wider environmental influences on mentoring: Towards an ecological model of mentoring in academic medicine. Acta Medica Academica, 44(1), 47-57. https://doi.org/10.5644/ama2006-124.126

Standing Committee on Postgraduate Medical and Dental Education. (1998). Supporting Doctors and Dentists at Work: An Enquiry into Mentoring. London: SCOPME.

Stenfors-Hayes, T., Hult, H., & Owe Dahlgren, L. (2011). What does it mean to be a mentor in medical education? Medical Teacher, 33(8), 423-428. https://doi.org/10.3109/0142159X.2011.586746

Waljee, J. F., Chopra, V., & Saint, S. (2018). Mentoring Millennials. JAMA, 319(15), 1547-1548. https://doi.org/10.1001/jama.2018.3804

*Frank Bate

Medical and Health Professional Education,

School of Medicine Fremantle,

University of Notre Dame Australia,

PO Box 1225, Fremantle,

Western Australia 6959

Telephone: +66 9433 0944

Email address: frank.bate@nd.edu.au
