Abstract

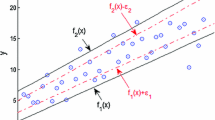

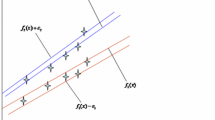

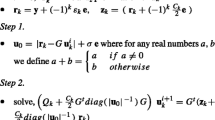

The training of classical twin support vector regression (TSVR) reduces to solving a pair of quadratic programming problems (QPPs) with inequality constraints in the dual space. However, this approach becomes costly in computation time and memory when dealing with large datasets. In this paper, we present a least squares version of TSVR in the primal space, termed primal least squares TSVR (PLSTSVR). By introducing the least squares method, we transform the inequality constraints of TSVR into equality constraints. Furthermore, we solve the two QPPs with equality constraints directly in the primal space instead of the dual space; thus, we need only solve two systems of linear equations instead of two QPPs. Experimental results on artificial and benchmark datasets show that PLSTSVR achieves accuracy comparable to that of TSVR with considerably less computational time. We further investigate its validity in predicting the opening price of stocks.
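The abstract's central point is that, once the inequality constraints become equalities, each of the two QPPs collapses into a system of linear equations. The following is a minimal pure-Python sketch of that idea, assuming a simplified linear formulation that is not the paper's exact PLSTSVR objective. Each bound function is fit by ridge-regularized least squares to ε-shifted targets (Y − ε1 for the down-bound f1, Y + ε2 for the up-bound f2), and the final regressor is the mean of the two bounds, as in TSVR. The function names and the parameters `eps1`, `eps2`, and `reg` are hypothetical and chosen for illustration only.

```python
from typing import List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]


def solve_linear_system(M: Matrix, rhs: Vector) -> Vector:
    """Solve M u = rhs by Gaussian elimination with partial pivoting."""
    n = len(M)
    # Augmented copy [M | rhs] so the caller's data is left untouched.
    aug = [list(row) + [rhs[i]] for i, row in enumerate(M)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular or ill-conditioned")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    # Back substitution on the upper-triangular system.
    u = [0.0] * n
    for r in range(n - 1, -1, -1):
        acc = aug[r][n] - sum(aug[r][c] * u[c] for c in range(r + 1, n))
        u[r] = acc / aug[r][r]
    return u


def fit_bound(G: Matrix, targets: Vector, reg: float) -> Vector:
    """Hypothetical bound fit: solve (G^T G + reg*I) u = G^T targets."""
    m = len(G[0])
    GtG = [[sum(row[i] * row[j] for row in G) for j in range(m)] for i in range(m)]
    for i in range(m):
        GtG[i][i] += reg  # ridge term keeps the system well-posed
    Gtt = [sum(row[i] * t for row, t in zip(G, targets)) for i in range(m)]
    return solve_linear_system(GtG, Gtt)


def fit_pair(X: Sequence[Sequence[float]], y: Sequence[float],
             eps1: float = 0.1, eps2: float = 0.1,
             reg: float = 1e-3) -> Tuple[Vector, Vector]:
    """Fit the down-bound (u1) and up-bound (u2) with two linear solves."""
    G = [list(x) + [1.0] for x in X]  # augmented data matrix [A e]
    u1 = fit_bound(G, [t - eps1 for t in y], reg)  # down-bound f1 targets Y - eps1
    u2 = fit_bound(G, [t + eps2 for t in y], reg)  # up-bound f2 targets Y + eps2
    return u1, u2


def predict(u1: Vector, u2: Vector, x: Sequence[float]) -> float:
    """Regressor f(x) = (f1(x) + f2(x)) / 2, the mean of the two bounds."""
    z = list(x) + [1.0]
    f1 = sum(a * b for a, b in zip(u1, z))
    f2 = sum(a * b for a, b in zip(u2, z))
    return 0.5 * (f1 + f2)
```

For example, fitting `X = [[0.0], [1.0], [2.0], [3.0], [4.0]]` with `y = [1.0, 3.0, 5.0, 7.0, 9.0]` gives `predict(u1, u2, [5.0])` close to 11, and the intercepts of the two bounds differ by roughly `eps1 + eps2`. The point of the sketch is the cost structure: each bound needs one dense linear solve of size (d + 1) rather than an iterative QPP solver over n dual variables.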




Additional information

Project supported by the National Basic Research Program (973) of China (No. 2013CB329502), the National Natural Science Foundation of China (No. 61379101), and the Fundamental Research Funds for the Central Universities, China (No. 2012LWB39).


About this article

Cite this article

Huang, Hj., Ding, Sf. & Shi, Zz. Primal least squares twin support vector regression. J. Zhejiang Univ. - Sci. C 14, 722–732 (2013). https://doi.org/10.1631/jzus.CIIP1301


DOI: https://doi.org/10.1631/jzus.CIIP1301