Abstract

Results of the Model Unspecific Search in CMS (MUSiC), using proton–proton collision data recorded at the LHC at a centre-of-mass energy of 13\(\,\text {TeV}\), corresponding to an integrated luminosity of 35.9\(\,\text {fb}^{-1}\), are presented. The MUSiC analysis searches for anomalies that could be signatures of physics beyond the standard model. The analysis is based on the comparison of observed data with the standard model prediction, as determined from simulation, in several hundred final states and multiple kinematic distributions. Events containing at least one electron or muon are classified based on their final state topology, and an automated search algorithm surveys the observed data for deviations from the prediction. The sensitivity of the search is validated using multiple methods. No significant deviations from the predictions have been observed. For a wide range of final state topologies, agreement is found between the data and the standard model simulation. This analysis complements dedicated search analyses by significantly expanding the range of final states covered using a model independent approach with the largest data set to date to probe phase space regions beyond the reach of previous general searches.

Similar content being viewed by others

1 Introduction

The CERN LHC has produced proton–proton (\(\text {pp} \)) collisions at an unprecedented centre-of-mass energy of 13\(\,\text {TeV}\) since 2015, providing an excellent opportunity to search for new phenomena in regions that were previously inaccessible to collider experiments. While the standard model (SM) of particle physics is well established as the theory that describes the fundamental particles and their interactions, it cannot explain certain phenomena such as dark matter, neutrino oscillations, and the matter-antimatter asymmetry in the universe. Several theories of physics beyond the standard model (BSM) have been developed to address the inadequacies of the SM, and a wide range of parameter and phase space regions of such theoretical models is accessible for a direct search for the first time at the LHC. A large number of searches for a range of BSM signatures have been conducted by the experiments at the LHC, including the CMS experiment [1], but no direct evidence for BSM physics has been found to date. Thus, it becomes imperative to expand the scope of searches so that signs of new physics that are in principle detectable by the CMS experiment are not missed.

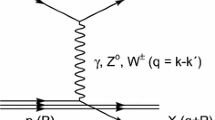

Dedicated searches targeting specific BSM theories are often restricted in their scope to a few final states that are sensitive to the particular models probed. Practical constraints on the number of such analyses mean that there are models and final states that remain unexplored, where BSM signatures could possibly be hidden. Furthermore, new phenomena may exist that are not described by any of the existing models. Hence, complementary to the existing searches for specific BSM scenarios, a generalised model-independent approach is employed in the analysis reported here: Model Unspecific Search in CMS (MUSiC ). The MUSiC analysis uses an automated approach to quantify deviations between a Monte Carlo (MC) simulation of SM processes, as seen in the CMS detector, and the observed data in a wide variety of final states, in order to detect anomalies and identify discrepancies that could be hints of BSM physics or other neglected or unknown phenomena. Following the MUSiC approach, events from data and SM simulation are classified based on the so-called final-state objects in an event, i.e. electrons (e), muons (\(\upmu \)), photons (\(\upgamma \)), jets originating from light-flavour quarks or gluons, jets originating from b quarks (b jets), and missing transverse momentum (\(p_{\mathrm{T}} ^{\mathrm{miss}}\)), resulting in several hundred different event classes. Then, an automated statistical method is used to scan the different event classes and multiple kinematic distributions in each event class for deviations between the data and simulation, identifying either excesses or deficits. A deviation is considered significant if the measured significance of the deviation is beyond the expectation of the SM-only hypothesis. The discovery of significant deviations by MUSiC would lead to a detailed investigation of both data and simulation in the final states of interest. Such deviations or anomalies could result from a possible insufficient description of the SM or detector effects in the simulation, from systematic effects that are unknown or incorrectly modelled, or they could be the first hints of BSM phenomena. This last interpretation cannot be the result of the general search algorithm itself, since the algorithm uses a simplified approach in order to probe a wide variety of diverse final states. Rather, it would require additional study in the form of a dedicated analysis of the final states of interest, ideally performed on statistically independent data sets.

Since the analysis relies on the simulation to estimate the SM expectation, only final-state objects that are well modelled in the simulation are incorporated. In particular, \(\uptau \) leptons are not considered separately because of challenges in modelling the effects of misidentification of hadronic jets as \(\uptau \) leptons in the simulation. However, \(\uptau \) leptons enter the analysis in the form of electrons or muons from leptonic \(\uptau \) decays, or as jets from hadronic \(\uptau \) lepton decays. Other more complex objects, e.g. hadronic decays of highly boosted W or Z bosons, are not considered in the current analysis. Furthermore, because beyond the leading order (LO) MC simulations of the quantum chromodymanics (QCD) multijet and \(\upgamma \)+jets processes of the SM are not available to this analysis, the analysis is restricted to those final states that contain at least one isolated lepton (electron or muon), since the contributions of these processes are expected to be low in such final states. Finally, the electric charges of the final-state objects are not considered in the analysis.

Dedicated analyses in specific final states with search strategies optimised for particular signatures are expected to have greater sensitivity than the present, more general approach. Moreover, further final-state objects, kinematic distributions, and phase space regions remain to be explored.

General model-unspecific searches have been performed in the past by the D0 [2,3,4] and CDF experiments [5, 6] at the Tevatron, and by the H1 experiment [7, 8] at HERA. Such searches have also been performed at the LHC by the ATLAS Collaboration [9], and preliminary results have been reported by the CMS Collaboration based on the MUSiC approach using the \(\text {p} \text {p} \) collision data set collected during the year 2010 at \(\sqrt{s} = 7\,\text {TeV} \) [10], and during 2012 at 8\(\,\text {TeV}\) [11].

This paper describes the MUSiC analysis that is performed with the full CMS data set of \(\text {p} \text {p} \) collisions at \(\sqrt{s} = 13\,\text {TeV} \) collected during 2016, corresponding to an integrated luminosity of \(35.9{\,\text {fb}^{-1}} \). The increased centre-of-mass energy and much larger amount of data analysed compared to the previously reported results significantly extend the regions of BSM phase space than can be probed.

We begin with the description of the CMS detector and object reconstruction in Sect. 2, followed by a summary of the data set and simulated samples along with the object and event selection in Sects. 3 and 4. The MUSiC search strategy is presented in Sect. 5, and systematic uncertainties are discussed in Sect. 6. After selected sensitivity studies are presented in Sect. 7, the results are shown in Sect. 8, before the paper is summarised in Sect. 9. Additional figures complementing the results are shown in Appendix.

2 The CMS detector and object reconstruction

The central feature of the CMS apparatus is a superconducting solenoid of 6\(\,\text {m}\) internal diameter, providing a magnetic field of 3.8\(\,\text {T}\). Within the solenoid volume are a silicon pixel and strip tracker, a lead tungstate crystal electromagnetic calorimeter (ECAL), and a brass and scintillator hadron calorimeter (HCAL), each composed of a barrel and two endcap sections. Forward calorimeters extend the pseudorapidity (\(\eta \)) coverage provided by the barrel and endcap detectors. Muons are detected in gas-ionisation chambers embedded in the steel flux-return yoke outside the solenoid. A more detailed description of the CMS detector, together with a definition of the coordinate system used and the relevant kinematic variables, can be found in Ref. [1].

Events of interest are selected using a two-tiered trigger system [12]. The first level, composed of custom hardware processors, uses information from the calorimeters and muon detectors to select events at a rate of around 100 \(\,\text {kHz}\) within a time interval of less than 4 \(\upmu \)s. The second level, known as the high-level trigger (HLT), consists of a farm of processors running a version of the full event reconstruction software optimised for fast processing, and reduces the event rate to around 1 \(\,\text {kHz}\) before data storage.

At CMS, the global event reconstruction (also called the particle-flow event reconstruction [13]) aims to reconstruct and identify each individual particle in an event, using an optimised combination of all subdetector information. In this process, the identification of the particle type (muon, electron, photon, charged or neutral hadron) plays an important role in the determination of the particle direction and energy. Photons are identified as ECAL energy clusters not linked to the extrapolation of any charged particle trajectory to the ECAL. Electrons are identified as a charged-particle track and potentially many ECAL energy clusters corresponding to this track extrapolation to the ECAL and to possible bremsstrahlung photons emitted along the way through the tracker material. Muons are identified as tracks in the central tracker consistent with either a track or several hits in the muon system, and associated with calorimeter deposits compatible with the muon hypothesis. Charged hadrons are identified as charged-particle tracks neither identified as electrons, nor as muons. Finally, neutral hadrons are identified as HCAL energy clusters not linked to any charged-hadron trajectory, or as a combined ECAL and HCAL energy excess with respect to the expected charged-hadron energy deposit.

The energy of photons is obtained from the ECAL measurement. The energy of electrons is determined from a combination of the track momentum at the main interaction vertex, the corresponding ECAL cluster energy, and the energy sum of all bremsstrahlung photons associated with the track. The candidate vertex with the largest value of summed physics-object \(p_{\mathrm{T}} ^2\) is taken to be the primary \(\text {p} \text {p} \) interaction vertex, where \(p_{\mathrm{T}}\) denotes the transverse momentum. The physics objects are the jets, clustered using the jet finding algorithm [14, 15] with the tracks assigned to candidate vertices as inputs, and the associated missing transverse momentum, taken as the negative vector sum of the \(p_{\mathrm{T}}\) of those jets. The energy of muons is obtained from the corresponding track momentum. The energy of charged hadrons is determined from a combination of the track momentum and the corresponding ECAL and HCAL energies, corrected for zero-suppression effects and for the response function of the calorimeters to hadronic showers. Finally, the energy of neutral hadrons is obtained from the corresponding corrected ECAL and HCAL energies.

In the barrel section of the ECAL, an energy resolution of about 1% is achieved for unconverted or late converting photons in the tens of GeV energy range. Other photons detected in the barrel have a resolution of about 1.3% up to \(|\eta | = 1.0\), rising to about 2.5% at \(|\eta | = 1.4\). In the endcaps, the resolution of unconverted or late-converting photons is about 2.5%, whereas other photons detected in the endcap have a resolution between 3 and 4% [16]. The momentum resolution for electrons with \(p_{\mathrm{T}} \approx 45\,\,\text {GeV} \) from \(\text {Z} \rightarrow \text {e} ^+ \text {e} ^-\) decays ranges from 1.7 to 4.5%. It is generally better in the barrel region than in the endcaps, and also depends on the bremsstrahlung energy emitted by the electron as it traverses the material in front of the ECAL [17].

Muons are measured in the range \(|\eta | < 2.4\), with detection planes made using three technologies: drift tubes, cathode strip chambers, and resistive-plate chambers. The single muon trigger efficiency exceeds 90% over the full \(\eta \) range, and the efficiency to reconstruct and identify muons is greater than 96%. Matching muons to tracks measured in the silicon tracker results in a relative transverse momentum resolution for muons with \(p_{\mathrm{T}}\) up to 100\(\,\,\text {GeV}\) of 1% in the barrel and 3% in the endcaps. The \(p_{\mathrm{T}}\) resolution in the barrel is better than 7% for muons with \(p_{\mathrm{T}}\) up to 1\(\,\text {TeV}\) [18].

For each event, hadronic jets are clustered from these reconstructed particles using the anti-\(k_{\mathrm{T}}\) algorithm [14, 15] with a distance parameter of 0.4. Jet momentum is determined as the vectorial sum of all particle momenta in the jet, and is found from simulation to be, on average, within 5 to 10% of the true momentum over the whole \(p_{\mathrm{T}}\) spectrum and detector acceptance. Additional \(\text {p} \text {p} \) interactions within the same or nearby bunch crossings (pileup) can contribute additional tracks and calorimetric energy depositions to the jet momentum. To mitigate this effect, charged particles identified to be originating from pileup vertices are discarded and an offset correction is applied to correct for remaining contributions [13]. Jet energy corrections are derived from simulation to bring, on average, the measured response of jets to that of particle level jets. In situ measurements of the momentum balance in dijet, \(\text {photon}+\text {jet}\), \(\text {Z} +\text {jet}\), and multijet events are used to account for any residual differences between the jet energy scale in data and in simulation [19]. The jet energy resolution amounts typically to 15% at 10\(\,\,\text {GeV}\), 8% at 100\(\,\,\text {GeV}\), and 4% at 1\(\,\text {TeV}\). Additional selection criteria are applied to each jet to remove jets potentially dominated by anomalous contributions from various subdetector components or reconstruction failures [20]. Jets originating from b quarks are identified as b-tagged jets using the combined secondary vertex algorithm (v2) described in Ref. [21].

The missing transverse momentum vector \({\vec {p}}_{\mathrm{T}}^{\text {miss}}\) is computed as the negative vector sum of the transverse momenta of all the particle-flow candidates in an event, and its magnitude is denoted as \(p_{\mathrm{T}} ^{\mathrm{miss}}\) [22]. The \({\vec {p}}_{\mathrm{T}}^{\text {miss}}\) is modified to account for corrections to the energy scale of the reconstructed jets in the event.

3 Data set and simulated samples

The analysis presented in this paper is performed on the data sample collected by the CMS experiment during 2016, based on \(\text {p} \text {p} \) collisions at a \(\sqrt{s} = 13\,\text {TeV} \), corresponding to an integrated luminosity of \(35.9{\,\text {fb}^{-1}} \).

The MUSiC analysis aims to find deviations in the data when compared to the SM predictions, and hence an inclusive description of the SM with a full set of simulated samples covering the entire range of SM processes that are expected to be detected by the CMS experiment is required to have a good estimate of the SM expectation in each final state. MC simulated events from the generators pythia 8.212 [23], MadGraph 5_amc@nlo version 2.2.2 [24] with MLM [25] or FxFx [26] matching schemes, powheg v2 [27,28,29,30,31,32,33,34,35,36,37,38], and sherpa 2.1.1 [39, 40] are combined to model each SM process of relevance in the studied energy regime, with the NNPDF3.0 [41] parton distribution functions (PDFs) being used for most of the simulated samples. Simulation of the parton shower and hadronisation process is done with pythia 8.205 [23], with the underlying event tune CUETP8M1 [42]. The detector response is simulated using the Geant4 package [43]. The presence of pileup in data is incorporated in simulated events by using additional inelastic events generated with pythia with the same underlying event tune as the main interaction that are superimposed on the hard-scattering events.

When available, higher order cross section estimates are used to normalise the MC simulated samples. The cross sections of the inclusive \(\text {W} (\rightarrow \ell \upnu )+\text {jets}\) and \(\text {Z} (\rightarrow \ell ^+ \ell ^-)+\text {jets}\) processes were obtained at next-to-next-to-LO (NNLO) in QCD using FEWZ 3.1.b2 [44] and at next-to-LO (NLO) electroweak (EW) precision using MCSANC 1.01 [45], while that for the \(\text {Z} \rightarrow \upnu \upnu \) process was calculated at NLO in QCD using mcfm 6.6 [46]. Cross sections for the \(\text {W} \text {W} \rightarrow \ell \upnu \text {q} \text {q} \) and \(\text {W} \text {W} \rightarrow 2\ell 2\upnu \) processes were also obtained at NNLO in QCD using Ref. [47]. The \(t \overline{t}\) cross section was calculated at NNLO in QCD including resummation of next-to-next-to-leading logarithmic soft-gluon terms with Top++2.0 [48], and the single top quark cross section was obtained at NLO in QCD with Hathor v2.1 [49, 50]. The cross sections for the SM Higgs boson (\(\text {H}\)) processes were obtained at NLO, NNLO, or next-to-NNLO (N3LO) from Ref. [51], depending on the specific process.

A summary of the SM simulation samples can be found in Table 1. Considering their perturbative accuracy, for the processes that are expected to be dominant in several final states, such as the W, Z, diboson, and top quark processes, it is preferred to use samples generated at NLO or better. However, simulated samples at LO are also used to improve the statistical precision in the tails of the kinematic phase space. The choice of MC generators in some cases also reflects the limited availability of simulated samples for this analysis. Given the analysis requirement of the presence of at least a single isolated lepton, the QCD multijet, \(\upgamma \)+jets, and diphoton processes are not expected to be the dominant processes although they have large cross sections. For such processes, simulated samples generated at LO are used. Both the \(\text {W} \)+jets and the \(\text {Z} \)+jets processes in leptonic final states are simulated with MadGraph 5_amc@nlo at NLO precision with up to two additional partons, with the FxFx scheme used for merging, and additional samples generated with pythia at LO precision and with powheg at NLO precision are used to improve the statistical precision at high masses for the \(\text {W} \)+jets process and the \(\text {Z} \)+jets process, respectively. For the \(\text {Z} \)+jets process with neutrinos in the final state, MadGraph 5_amc@nlo samples produced at LO are used. Simulated samples for the \(\upgamma \)+jets process are generated at LO using MadGraph 5_amc@nlo. The \(t \overline{t}\) process is simulated using powheg at NLO, and MadGraph 5_amc@nlo simulation at NLO is used for top pair production in association with vector bosons. The \(t \overline{t}\) \(t \overline{t}\) process is simulated at NLO using MadGraph 5_amc@nlo. Single-top processes are simulated at NLO using powheg and single-top production in association with gauge bosons is simulated at NLO using MadGraph 5_amc@nlo. At NLO in perturbative QCD, the \(\text {t} \text {W} \) single-top process interferes with the \(t \overline{t}\) process, and this effect is accounted for using the “diagram removal” approach to correct the simulated samples for the \(\text {t} \text {W} \) single-top production process [52]. Diboson processes are simulated at NLO using a combination of MadGraph 5_amc@nlo and powheg, with the exception of the \(\text {W} \upgamma \) and \(\upgamma \upgamma \) processes where simulated samples at LO generated with MadGraph 5_amc@nlo and sherpa are used in certain phase space regions. QCD multijet events are simulated by MadGraph 5_amc@nlo at LO precision. Triboson processes are simulated at NLO with MadGraph 5_amc@nlo. The different Higgs boson production processes are simulated at NLO using a combination of MadGraph 5_amc@nlo and powheg.

For the samples listed in Table 1, kinematic overlaps, resulting from additional samples used to increase the statistical precision, are removed. For most of the SM processes, the statistical precision of the number of simulated events corresponds to an integrated luminosity much larger than the analysed data set. This is not the case, in general, for the QCD multijet and the \(\upgamma \)+jets MC samples; however, this analysis considers only final states that contain at least one isolated electron or muon, and the contribution of these SM processes is predicted to be small in such final states. For SM processes that are expected to have significant contributions in several different final states, such as the W, Z, diboson, and top quark processes, the number of simulated events correspond to a range of 3 to 10,000 times the number of expected events based on the integrated luminosity of the data set analysed.

4 Object and event selection

It is necessary to determine the physics object content of each event unambiguously, which includes the identification of each reconstructed object and the removal of overlap between the individual objects. Since the analysis relies on simulation for the SM background prediction, tight selection criteria for the different objects are used to minimise the effect of misidentification while still retaining a reasonably high efficiency for selecting the objects. A summary of the object selection criteria, discussed in this Section, is shown in Table 2.

Events are required to be triggered by one of several single-lepton, dilepton, or single-photon triggers. The single-photon trigger improves the electron trigger efficiency at high electron momenta when used in combination with the single-electron trigger. Selected events are required to contain the reconstructed objects that correspond to the associated trigger for the event, and have a value of \(p_{\mathrm{T}}\) that is above the trigger requirement. Overlap between triggers is removed, such that events triggered by two or more triggers are not counted multiple times. Details of the trigger selection are given in Table 3.

Muons are required to have \(p_{\mathrm{T}} > 25\,\,\text {GeV} \) and \(|\eta | < 2.4\). Two dedicated selection criteria are used to select well-reconstructed, isolated muons: “Tight muon ID” for muons with \(p_{\mathrm{T}}\) up to 200\(\,\,\text {GeV}\), and “high-momentum muon ID” for muons with \(p_{\mathrm{T}} > 200\,\,\text {GeV} \), as described in Refs. [18, 53]. The efficiency for the selection of muons with such criteria has been measured to be between 96 and 98%, whereas the probability of pions (kaons) to be misidentified as muons is about 0.1 (0.3)% [18].

Selected electrons need to fulfill \(p_{\mathrm{T}} > 25 \,\,\text {GeV} \) and \(|\eta | < 2.5\), excluding electrons in the barrel endcap transition region of the CMS ECAL (\(1.44< |\eta | < 1.57\)). Two dedicated selection criteria are applied: the “tight” selection criteria are used for electrons with \(p_{\mathrm{T}}\) up to 200\(\,\,\text {GeV}\), and the “HEEP” electron selection is used for electrons with \(p_{\mathrm{T}} > 200\,\,\text {GeV} \) [17, 54]. Detailed studies of efficiency and misidentification probabilities for the electron reconstruction are presented in Ref. [17].

Photons with \(p_{\mathrm{T}} > 25\,\,\text {GeV} \) and \(|\eta | < 1.44\) in the barrel region of the CMS ECAL, where the misidentification rate is low, are selected if they pass the dedicated “tight” photon identification requirements that have been introduced in Ref. [16] and adapted for the present data set.

Jets must have \(p_{\mathrm{T}} > 50\,\,\text {GeV} \) and \(|\eta | < 2.4\). These criteria select well-reconstructed jets within the coverage of the CMS tracking system in the high-pileup environment of the 2016 data taking, with an average of 22 \(\text {p} \text {p} \) interactions per bunch crossing. For \(\text {b} \)-tagged jets, the chosen “tight” working point corresponds to about 41% efficiency in identifying \(\text {b} \) jets and about 0.1% misidentification rate for light-flavour and gluon jets [21]. The missing transverse momentum \(p_{\mathrm{T}} ^{\mathrm{miss}}\) in the event is included as an object in the event classification if \(p_{\mathrm{T}} ^{\mathrm{miss}} > 100\,\,\text {GeV} \). The distribution of \(p_{\mathrm{T}} ^{\mathrm{miss}}\) at small values is strongly affected by resolution effects, and for most cases the value of \(p_{\mathrm{T}} ^{\mathrm{miss}}\) associated with BSM phenomena is large. Thus, if \(p_{\mathrm{T}} ^{\mathrm{miss}} < 100\,\,\text {GeV} \), this variable is not used in the selection process.

A reconstructed object may be identified as more than one particle. It is also possible for some detector signals to be used for different reconstructed objects, e.g. an electron and a photon overlapping in the calorimeters. Possible ambiguities are resolved as follows. First, the list of particles is prioritised in the order of muons, electrons, photons, and finally jets, assumed to correspond to the order of purity. Then, in the case of an ambiguity such as the reconstruction of multiple electrons or photons based on the same calorimeter energy deposit, or in the case of an object overlapping with a jet, the particle with the highest priority is selected. Other particles close in \(\varDelta R = \sqrt{{(\varDelta \eta )^2+(\varDelta \phi )^2}}\) are removed from the event (where \(\phi \) is the azimuthal angle in radians), using the threshold of \(\varDelta R = 0.5\) for jets and \(\varDelta R = 0.4\) for all other particles.

Events passing the above criteria are then categorised into event classes based on the event content. Event classes containing at least one lepton (electron or muon) are considered in the analysis.

5 The MUSiC search algorithm

The MUSiC analysis is designed to be robust, unbiased by specific BSM physics models, and as inclusive as possible. Every region is treated as a potential signal region. The modelling of the known SM background processes is based solely on MC simulation. No techniques based on control samples in data are employed to estimate the background expectation, since this would result in losing some kinematic regions in the data.

The main steps of the MUSiC search algorithm are described below, starting with the classification of events, then the introduction of the kinematic distributions of interest, followed by a description of the scanning procedure and the strategy to account for the look-elsewhere effect (LEE) using pseudo-data generation, and finally with the concept of the global overview of the scan results.

5.1 Classification of events

Events in data and simulated samples are assigned to different classes (final states) based on the physics object content of each event. To determine the object content of an event unambiguously, all selection criteria described in detail in Sect. 4 are applied both to the observed data and the simulation, resulting in a defined number of well-reconstructed objects in the final state of the event.

Each event is sorted into three different types of event classes:

-

1.

Exclusive event classes for events containing only those selected objects that are specified for the event class and no additional final state objects. Thus each event is assigned to just one exclusive class.

-

2.

Inclusive event classes contain events that include a nominal set of selected objects, but may contain additional objects. An event is assigned to all inclusive event classes that can be constructed from the selected objects. For example, events containing two muons and any number of additional objects would be classified into the \(2 \upmu +X\) inclusive event class.

-

3.

Jet-inclusive event classes are defined as inclusive classes but restrict additional allowed objects to jets. High jet multiplicities are not expected to be accurately described in the simulation, and thus all exclusive classes with five or more jets are instead assigned to the \(X + 5\text {jets} + \text {Njets}\) class, which includes events with at least five jets and is inclusive in terms of the number of additional jets that might be present. The threshold of five jets was chosen based on studies described in Sect. 8.1.

There is no explicit limit placed on the number of objects, and, consequently, on the number of event classes, except for the case of jets, where it is set to five. Events with greater than five jets can still enter the inclusive and jet-inclusive event classes. The construction of event classes from the physics object content of the final state, using the example of an event containing \(1\text {e} +2\upmu +1\text {jet}\), is illustrated in Fig. 1.

All exclusive event classes are statistically independent of each other and can be regarded as uncorrelated (counting) experiments. This is not the case for the inclusive event classes, where a single event will generally end up in more than one event class. The resulting direct correlations are included while performing the statistical analysis, with the exception of correlations in the statistical uncertainties in the simulated events, which are assumed to be negligible. In the presence of a possible signal, it is a priori unknown how the same events populate different inclusive and jet-inclusive event classes, and therefore further interpretation of the results of the statistical analysis would need to include the possible consequences of such an effect.

5.2 Kinematic distributions of interest

Although signs of new physics can become visible in the distributions of many different kinematic variables, three are chosen for this analysis that seem especially promising in terms of sensitivity to phenomena at high \(p_{\mathrm{T}}\) predicted by a large number of BSM scenarios. This choice also prevents the analysis from being overly complex, as might result from the addition of more kinematic distributions. The three chosen kinematic distributions are:

-

1.

\(S_{\mathrm{T}}\): The \(p_{\mathrm{T}}\) sum of all the physics objects that are considered for that event class, defined as

$$\begin{aligned} S_{\mathrm{T}} = \sum _{i}|\vec {p}_{T,i} | \end{aligned}$$(1)where the sum is over the particles that make up the event class. It is the most general variable of the three. It is calculated for every event passing the analysis requirements, and includes \(p_{\mathrm{T}} ^{\mathrm{miss}}\) when applicable. The BSM physics is often expected to involve new heavy particles, the effects of which would show up predominantly in the tails of the \(S_{\mathrm{T}}\) distributions.

-

2.

\(M\) or \(M_{\mathrm{T}}\): The combined mass \(M\) is the invariant mass calculated from all physics objects considered for the event class. For classes with \(p_{\mathrm{T}} ^{\mathrm{miss}}\), the transverse mass \(M_{\mathrm{T}}\) is used instead of \(M\), because the longitudinal component of the missing momentum is unknown. Here, \(M_{\mathrm{T}}\) is defined as

$$\begin{aligned} M_{\mathrm{T}} = \sqrt{\Bigl (\sum _{i}E_{i}\Bigr )^2-\Bigl (\sum _{i}p_{x,i}\Bigr )-\Bigl (\sum _{i}p_{y,i}\Bigr )} \end{aligned}$$(2)where the sum is over the particles that make up the event class, \(E_{i}\) is the energy, and \(p_{x,i}\) and \(p_{y,i}\) are the x and y components of the momenta of the particle with index i. This distribution is important for cases where a new massive particle is produced as a resonance and the mass distribution of its decay products is a prominent place to look for a deviation. All events in the event classes containing at least two objects are used to evaluate the combined mass.

-

3.

\(p_{\mathrm{T}} ^{\mathrm{miss}}\): For classes with significant \(p_{\mathrm{T}} ^{\mathrm{miss}}\) of at least 100\(\,\,\text {GeV}\), it is an indicator of the energy of particles escaping detection. Only events with a substantial amount of \(p_{\mathrm{T}} ^{\mathrm{miss}}\) are considered here, since the low-\(p_{\mathrm{T}} ^{\mathrm{miss}}\) region is dominated by detector resolution effects and SM processes containing neutrinos. High values for \(p_{\mathrm{T}} ^{\mathrm{miss}}\) can be associated with new particles with large \(p_{\mathrm{T}}\) that do not interact with the detector.

For a given exclusive event class, object types and multiplicities are identical in all events. Variables for the distributions of interest are calculated from the kinematic properties of all final-state objects. Since inclusive and jet-inclusive event classes also include events with more objects than those associated with the corresponding exclusive event class, an ambiguity must be resolved to ensure that the same event property is evaluated for all events in the distribution. Hence, only the objects stated explicitly in the name of the event class are used to derive the kinematic properties. For example, in the case of the \(1\text {e} +2\upmu +p_{\mathrm{T}} ^{\mathrm{miss}} +X\) event class, only the four mentioned objects (one electron, two muons, and \(p_{\mathrm{T}} ^{\mathrm{miss}}\)) contribute to the \(S_{\mathrm{T}}\) distribution, in each case considering the ones with the highest \(p_{\mathrm{T}}\) if more than the mentioned number of a particular object are present.

The bin widths for the kinematic distributions probed are chosen as a compromise between a relatively large bin width, which is favorable in terms of computation time but detrimental in terms of sensitivity to potential narrow signals, and a small bin width, where random fluctuations will gain in importance and possibly mask the actual deviations of interest. An optimal choice is made in an automated way based on the typical total detector resolution of all objects in each specific kinematic region, leading to a larger value for the bin width at higher energies. All bin widths are integer multiples of 10\(\,\,\text {GeV}\).

5.3 Scan for deviations: region of interest scan

A statistical analysis is performed to identify deviations between data and the SM prediction by initially comparing the event yields in the event classes, followed by a complete scan of the kinematic distributions in the different event classes, referred to as the region of interest (RoI) scan. The procedure is described in two parts below, beginning with a discussion of the \(p\text { value}\) definition that is used to quantify any observed deviation, followed by the description of the construction of the regions within which the algorithm searches for deviations.

The measure for deviations is a \(p\text { value}\) that describes the agreement between simulation and data using a hybrid Bayesian-frequentist approach, where the statistical fluctuations are assumed to follow a Poisson distribution, and nuisance parameters are modelled using a Gaussian prior function. Both excesses and deficits are taken into account. The \(p\text { value}\) \(p_{\mathrm{data}}\) is defined as:

where \(N_{\mathrm{data}}\) is the number of observed events, \(N_{\mathrm{SM}}\) is the number of expected events from SM simulation, and \(\sigma _{\mathrm{SM}}\) denotes the uncertainty in \(N_{\mathrm{SM}}\), combining the statistical uncertainty arising from the number of generated MC events and systematic uncertainties. The probability distribution is summed up from \(i = N_{\mathrm{data}}\) to infinity for the case of an excess in observed data compared with the expectation, and from \(i = 0\) to \(N_{\mathrm{data}}\) for the case of a deficit in observed data compared with the expectation. The Gaussian distribution is truncated at zero and normalised to unity with a factor C.

A region is defined as any contiguous combination of bins. Since several regions can contain the same bins, they are not disjoint, and a distribution with \(N_{\mathrm{bins}}\) bins will result in \(N_{\mathrm{bins}} (N_{\mathrm{bins}} + 1) / 2\) connected regions. All bins in question are then successively combined into regions by adding up their individual contributions, and a \(p\text { value}\) is calculated. The smallest \(p\text { value}\) (\(p^{\mathrm{data}}_{\mathrm{min}}\)) defines the RoI. This process is illustrated in Fig. 2. This procedure, referred to as the RoI algorithm, is performed for all distributions in all classes.

The minimum number of bins within a region is required to be three for the \(S_{\mathrm{T}}\) and \(p_{\mathrm{T}} ^{\mathrm{miss}}\) distributions, since in these cases narrow deviations of less than three bins would be indicative of statistical fluctuations. Regions with a single bin are allowed for the mass distributions. Regions where statistical accuracy is poor due to the limited number of simulated events leading to an unreliable background estimate are removed, effectively considering only regions composed of a larger number of bins in such cases. Separate monitoring is in place to ensure that no potentially interesting signal is missed because an event class that contains data events fails to reach the required number of simulated events, particularly checking for event classes with more than one event in the recorded data where the expectation from simulation is below a threshold of 0.1 events.

5.4 Post-trial probability (\(\tilde{p}\)) and look-elsewhere effect

The chosen definition of the \(p\text { value}\) serves as a measure of the probability of observing a deviation in a single region, while the algorithm is intended to give a measure of finding a deviation of equal or lesser compatibility anywhere in the distribution. We define the probability \(\tilde{p}\) to observe such a deviation in any of the considered regions throughout the distribution. The transition from a per-region to a per-distribution \(p\text { value}\), sometimes referred to as a post-trial probability, is a requirement to allow the comparison of observed deviations between many different distributions. A given \(p\text { value}\) can be translated into a \(\tilde{p}\text { value}\) by the LEE effect correction, which describes the increased probability to observe a significant deviation if a large number of regions is considered.

An analytical calculation of the required correction is difficult because of correlations between bins and the irregular shape of systematic uncertainties, but the LEE correction can be determined using pseudo-experiments. Pseudo-experiments are generated in a randomized manner according to the background-only hypothesis, varying the prediction of the simulation according to the associated uncertainties.

The RoI is not necessarily at the same position as the one found in the data. The number of trials resulting in a local \(p\text { value}\) (\(p_{\mathrm{min}}\)) smaller or equal to the one found in the data to simulation comparison (\(p^{\mathrm{data}}_{\mathrm{min}}\)) is determined and divided by the full number of trials to get the fraction \(\tilde{p}\):

This fraction is the post-trial \(p\text { value}\) (\(\tilde{p}\)), representing a statistical estimate of how probable it is to see a deviation at least as strong as the observed one in any region of the distribution. While optimising for the computation time and also ensuring a robust measurement, and at the same time taking into account correlations across the different event classes, a total of 10,000 trials are conducted for each event class.

Pseudo-experiments for a single distribution in a single class require generating randomised values for each bin n to closely resemble the ensemble of expected values given the background-only (null) hypothesis. The systematic uncertainties in the null hypothesis are represented by a set of nuisance parameters \(\nu _j\), which are expected to be fully correlated across all bins. This assumption requires that systematic uncertainties have been separated to a level at which the underlying processes responsible for the uncertainty remain similar for the complete range considered in a distribution. The effect of each nuisance parameter is modelled with a Gaussian centred on the mean expectation value for each bin n. To include these correlation effects, a random number \(\kappa _{j}\), following a standard normal distribution, is generated for each nuisance parameter, excluding the statistical uncertainty of the MC samples. The mean expectation value \(\langle N_{n} \rangle \) in each bin is then shifted according to

where \(\langle N_{n,{\text {shifted}}}\rangle \) is the shifted mean in each bin, and \(\delta _{\nu _{j,n}}\) denotes the symmetrised 68% confidence interval for \(\nu _j\) in bin n. The value of \(\langle N_{n,{\text {shifted}}}\rangle \) is further spread using a Poisson distribution to model the expected statistical variations.

The procedure employed here has been developed considering the limitations arising from the absence of control regions and the requirement to keep the algorithm consistent over the vast range of final states probed. The procedure has been validated using toy simulations for the various statistical configurations that are expected in the analysis of the data set.

5.5 Global overview of the RoI scan

Considering the large number of different event classes and kinematic distributions scanned, a convenient global overview of the scan is required. The RoI scanning algorithm gives a RoI and its associated \(\tilde{p}\text { value}\) separately for each event class and each kinematic distribution. To create the global overview, the results of the RoI scanning algorithm for the different event classes are grouped and presented together, separately for each of the three kinematic distributions scanned (\(S_{\mathrm{T}}\), \(M\), and \(p_{\mathrm{T}} ^{\mathrm{miss}}\)) for a particular type of event class (exclusive, inclusive, or jet-inclusive). For each such grouping, the observed distribution of deviations in the different event classes is compared with the expectation for the same distribution from the SM-only hypothesis, obtained from the pseudo-experiments. Any unexpected deviation from the scans would become apparent in such a comparison. This would also probe certain BSM signals that show smaller deviations spread out over several different final states, in addition to such scenarios where the signature is a large deviation in specific final states.

To produce a global overview of all event classes, the \(\tilde{p}\text { values}\) calculated for each kinematic distribution are summarised in a single histogram. A \(\tilde{p}\text { value}\) can be calculated for any particular pseudo-experiment in the same way as is done for collision data, by dividing the number of such experiments with \(p\text { values}\) that are smaller than the \(p\text { value}\) for the particular pseudo-experiment under consideration by their total number. This represents the \(\tilde{p}\text { value}\) for one pseudo-experiment, and similarly \(\tilde{p}\text { values}\) can be calculated for all the different pseudo-experiments. The resulting histogram of \(\tilde{p}\text { values}\) from the different pseudo-experiments shows the expected distribution of \(\tilde{p}\text { values}\) for the simulation-only hypothesis. The \(\tilde{p}\text { value}\) distribution obtained from the observed distribution (from collision data) is then compared with the one obtained from pseudo-experiments, taking the median of the distributions from the different pseudo-experiments as the central value for the SM-only hypothesis. Furthermore, \(\pm 1 \sigma \) (\(\pm 2 \sigma \)) uncertainty bands around the median expectation are obtained corresponding to the bands within which the distributions from 68 (95)% of the pseudo-experiments are contained. This is summarised with an illustrative example in Fig. 3.

Illustrative example of a \(\tilde{p}\text { value}\) distribution for different event classes (final states) based on a RoI scan of an \(S_{\mathrm{T}}\) distribution. Histograms of the number of event classes corresponding to a bin in \(-\log _{10}(\tilde{p})\) for the different pseudo-experiment iterations (shown on the left) are used to create the global overview plot for a scan of each particular kinematic distribution for each event class type (shown on the right for an \(S_{\mathrm{T}}\) distribution scan in exclusive event classes, without showing the observed deviations from data here). The mean and the median distributions of \(\tilde{p}\text { values}\) obtained from the different pseudo-experiments are shown as solid cyan and dotted grey lines. The distribution estimated from the analytic calculation is shown as a green dashed line. The 68% (\(\pm 1 \sigma \)) and 95% (\(\pm 2 \sigma \)) uncertainty bands are displayed as dark and light blue areas, respectively

In addition to this numerical calculation, the \(\tilde{p}\text { value}\) distribution can also be determined analytically. Since \(\tilde{p}\text { values}\) are distributed uniformly, the content of each bin (\(N_{\mathrm{bin}}\)) can be evaluated from the edges of the bin and the number of event class distributions (\(N_{\mathrm{dist}}\)) contributing to the bin. For the double-logarithmic scale used to plot the \(\tilde{p}\text { value}\) distribution (as in the nominal distribution shown in Fig. 3), the content of each bin is given by

where \(B_{\mathrm{low}}\) and \(B_{\mathrm{up}}\) are the lower and upper bin edges, respectively. This analytic description is depicted by a green dashed line in the \(\tilde{p}\text { value}\) distribution shown in Fig. 3. The analytical and numerical distributions are found to agree. The approach to estimate systematic uncertainties, as implemented in this analysis and described later, can affect the \(\tilde{p}\text { value}\) distribution with more event classes showing smaller deviations and appearing in the bin with the smallest deviations. The size of the uncertainty bands of the expected \(\tilde{p}\text { value}\) distributions has been increased, motivated by studies with pseudo-data that include the potential effect of overestimating the systematic uncertainties by up to 50%. Since this effect is limited to the first few bins in the \(\tilde{p}\text { value}\) distributions, which correspond to small deviations, the modification does not have a significant impact on the part of the distribution where possible statistically significant deviations are expected to appear.

6 Systematic uncertainties

Estimates of all major known sources of systematic uncertainties are incorporated. In particular, uncertainties in the following quantities are included: integrated luminosity, contributions of pileup interactions, total cross sections of SM processes, PDFs, energy and momentum scale of all objects, reconstruction efficiencies, resolutions, misidentification probabilities, and the number of simulated events. The uncertainties arising from the finite size of the simulated samples are uncorrelated between bins. The effect of each of the other sources of uncertainty is fully correlated across all bins and event classes. Systematic uncertainties that influence kinematic properties are evaluated by variations of such variables, which might shift some particles in and out of the acceptance for the selection. This effect is included by allowing uncertainty contributions to cause migrations between different event classes. A summary of the systematic uncertainties is presented in Table 4.

The uncertainty in the value of the integrated luminosity is 2.5% [56], and this uncertainty is propagated into the analysis as a normalisation uncertainty in all simulated events in each region. Since the pileup conditions assumed in the sample generation are not identical to the data, simulated samples are corrected to reproduce the pileup distribution in data, which has an average number of \(\text {p} \text {p} \) interactions per bunch crossing of approximately 22 for the 2016 data sample. The associated uncertainties because of the estimation of the pileup distribution in data are propagated through the individual event weights for the MC samples in the analysis.

The uncertainties in the total cross sections for individual SM physics processes are included, although not all cross sections are known to the same order of perturbation theory. Uncertainties in individual simulated samples of a single physics process, e.g. samples of different phase space regions or QCD multijet samples enriched in heavy flavours, are assumed to be fully correlated. The total cross section uncertainty is evaluated from all contributing physics processes assuming their separate uncertainties are uncorrelated. For processes generated at LO we apply an uncertainty of 50% in the value of the cross section. For higher-order calculations the effect of missing higher-order corrections is estimated using coherent variations in the factorisation and renormalisation scales in the MC simulation by factors of 2.0 and 0.5 up and down, respectively.

To estimate the uncertainties corresponding to the PDFs, the procedure outlined in the PDF4LHC recommendations for the LHC Run 2 [55] is used. These uncertainties are treated as arising from a single source, and hence are fully correlated over all bins and event classes. For the assumed central value of the strong coupling \(\alpha _{\mathrm{S}} = 0.118\), variations of \(\pm 0.0015\) are used. This simplified approach is used here, considering the complexities associated with the variety of event classes and the number of bins corresponding to the different kinematic observables that are used for the scan in each event class probed. While the correlations are treated in a simplistic manner in this approach, the limitations of this approach are not expected to have a significant impact on the results considering the impact of other systematic and statistical uncertainties that are taken into account in this analysis.

Uncertainties in the energy or momentum measurement of the different physics objects are estimated by varying the measured kinematic observables such as \(p_{\mathrm{T}}\) and \(\eta \) within their uncertainties. For all variations, the full analysis is performed and the uncertainty in the event yield is derived from the resulting difference in each kinematic distribution. The effect of these variations in the measured \(p_{\mathrm{T}} ^{\mathrm{miss}}\) is also included. The uncertainty in the muon momentum scale has a dependence on \(p_{\mathrm{T}}\) and \(\eta \) that is taken into account. For \(1\,\text {TeV} \) muons in the central region of the detector, the uncertainty is 7% [18]. Uncertainties in the energy scale for electrons and photons have been estimated separately for the barrel and endcap regions, and are 0.2% (barrel) and 0.3% (endcap) for low-energy electrons [17], 0.15% (barrel) and 0.30% (endcap) for low-energy photons [16], and 2% for high-energy electrons and photons [17]. Corrections are applied to the energy scale of reconstructed jets to account for effects from pileup, simulated true jet response, and residual data and simulation scale factors, as summarised in Ref. [19]. The associated uncertainties range from 3–5%, depending on the jet \(p_{\mathrm{T}}\) and \(\eta \). Although the jet energy corrections are not constant throughout the entire detector, they will be similar for jets close to each other. For this analysis it is assumed that jet energy scale uncertainties are fully correlated. All energy deposits measured in the CMS detector and not assigned to a reconstructed physics object are summed up and referred to as unclustered energy, with its uncertainty propagated to the \(p_{\mathrm{T}} ^{\mathrm{miss}}\) uncertainty [22].

Scale factors, in general close to one and depending on \(p_{\mathrm{T}}\) and \(\eta \), correct for differences in the efficiencies for reconstruction and identification of the objects in data and simulation. The uncertainties arising from the employed methods or limited size of the analysed data sets used to calculate the efficiencies are included. Uncertainties are assumed to be fully correlated for objects of the same type and uncorrelated for objects of different type. For \(\text {b} \) tagging uncertainties we use \(p_{\mathrm{T}}\) and jet hadron flavour-dependent scale factors along with their uncertainties, which are derived from data [21].

Although the selection criteria have been chosen to minimise misidentification, residual amounts of misidentified objects remain. The fraction of misidentified objects is determined in the simulation with generator-level information by matching generated particles to the reconstructed objects. To cover the uncertainty in misidentified objects (i.e. those not matched to a generated particle of the same type), we apply a 50% uncertainty in the simulation. Misidentification is mainly relevant when jets are wrongly identified as charged leptons or photons, whereas the uncertainty in the inverse process, i.e. leptons misidentified as jets, is usually negligible compared to the reconstruction efficiency and scale factor uncertainties. The uncertainty assigned has been validated in the studies described later in Sect. 8.1. Misidentification uncertainties are assumed to be fully correlated for objects of the same type, and uncorrelated for objects of different types.

The statistical uncertainty in the number of generated events and the total event weight, arising from the limited number of events produced for each simulated process, is included. Contributions from different simulated data sets are uncorrelated when constructing regions.

7 Sensitivity studies

To illustrate the capability of the MUSiC analysis to identify deviations in the comparison of measured data and the SM simulation, two separate approaches are followed. In the first approach, a simulated BSM signal in addition to the SM simulation is injected into the analysis and compared to the SM simulation alone. In the second approach, the measured data are used, but a particular process is removed from the SM simulation. In both cases the MUSiC algorithm is shown to find event classes in significant tension with the SM expectation. Examples for both approaches are discussed here.

A dedicated BSM signal is used to test the ability of the MUSiC algorithm to identify possible new physics signals. A simulation of the BSM signal is added to the SM simulation, and pseudo-data are generated that can be scanned with the MUSiC algorithm against the SM-only background. The signal described here is expected to introduce a localised excess of events in individual final states: a new heavy vector boson \(\text {W} ^{\prime } \) is produced and promptly decays into a charged lepton and a neutrino, as predicted by the sequential standard model (SSM) [57]. In the CMS detector, such a signature is reconstructed as an event containing a single isolated, energetic charged lepton, substantial \(p_{\mathrm{T}} ^{\mathrm{miss}}\), and any number of jets originating from initial- or final-state radiation. Simulated samples for the \(\text {W} ^{\prime } \) process are produced at LO using pythia 8.212, and the cross sections are obtained at NNLO QCD using FEWZ for different values of the \(\text {W} ^{\prime } \) boson mass. Distributions of pseudo-data with an additional \(\text {W} ^{\prime } \) boson signal are generated 200 times per event class and kinematic variable. This ensures a statistically stable outcome. The \(p\text { value}\) between signal-induced pseudo-data and SM expectation is calculated for each of the pseudo-experiments, and the pseudo-data generation corresponding to the median \(p\text { value}\) is chosen as the representative event class distribution. Up to 10,000 pseudo-experiments are generated under the SM-only hypothesis to account for the LEE in each distribution and event class.

As a representative example, a scan of the (transverse) invariant mass distribution in exclusive event classes is presented here. The distribution of a signal and the SM background for the \(1\upmu +p_{\mathrm{T}} ^{\mathrm{miss}} \) event class is shown in Fig. 4 for a \(\text {W} ^{\prime } \) boson with mass of 3 \(\,\text {TeV}\). For \(\text {W} ^{\prime } \) masses of 2, 3, 4, and 5\(\,\text {TeV}\), the final \(\tilde{p}\) distributions for the scan of the invariant (transverse) mass in exclusive event classes are shown in Fig. 5. Two or more final states with significant deviations from the SM simulation beyond the expectation are found, as seen in the entries in the final bin of each distribution that lie outside of the uncertainty bands of the SM-only expectation, thus illustrating the ability of MUSiC to identify deviations arising from a signal. The observation of such deviations would prompt dedicated studies in the event classes of interest probing the possibility of BSM physics phenomena. The signal corresponding to 4\(\,\text {TeV}\) leads to significant deviations only in the \(1\text {e} +p_{\mathrm{T}} ^{\mathrm{miss}} \) and \(1\upmu +p_{\mathrm{T}} ^{\mathrm{miss}} \) event classes. As shown in Fig. 5, the scan performed for the \(\text {W} ^{\prime } \) boson with a mass of 5\(\,\text {TeV}\) did not show any significant deviations. These results are consistent with the dedicated analysis of the same data set [58], where stronger exclusion limits for the mass of the \(\text {W} ^{\prime } \) boson of 4.9\(\,\text {TeV}\) were placed at 95% confidence level based on the individual analyses in the \(1\text {e} +p_{\mathrm{T}} ^{\mathrm{miss}} \) and \(1\upmu +p_{\mathrm{T}} ^{\mathrm{miss}} \) channels, respectively, and 5.2\(\,\text {TeV}\) for the combination of both channels.

Distribution of \(\tilde{p}\text { values}\) for the RoI scan in exclusive classes for the invariant mass (transverse mass for classes with \(p_{\mathrm{T}} ^{\mathrm{miss}}\)) with assumed values for the mass of the SSM \(\text {W} ^{\prime } \) boson of 2 (upper left), 3 (upper right), 4 (lower left), and 5\(\,\text {TeV}\) (lower right). The uncertainty in the distribution of \(\tilde{p}\text { values}\) for the signal is obtained from the variations in the pseudo-data performed with the \(\text {W} ^{\prime } \) signal simulation

Another hypothetical BSM signal that has been used to test the capabilities of MUSiC is the EW production of sphalerons [59,60,61,62]. This model is based on a possible nonperturbative solution to the SM Lagrangian of the EW sector, which includes a vacuum transition referred to as a “sphaleron”. It plays an important role in the EW baryogenesis theory [63], which can explain the matter-antimatter asymmetry of the universe. The CMS experiment has published the results of a search for sphalerons in inclusive final states that are dominated by jets associated with the QCD multijet process [64]. No analysis targeting leptonic final states has been performed to date. The sphaleron signal sample used for the sensitivity study is generated at LO with the BaryoGEN v1.0 generator [65] with the CT10 LO PDF set [66] using a threshold energy \(E_{\mathrm{sph}}\) = 8\(\,\text {TeV}\) for the sphaleron transition. The cross section for sphaleron production is given by \(\sigma \) = PEF \(\sigma _{0}\) [62], where \(\sigma _{0} = 121\) fb for \(E_{\mathrm{sph}} = 8\,\text {TeV} \), and PEF denotes the pre-exponential factor, defined as the fraction of all quark–quark interactions above the sphaleron energy threshold \(E_{\mathrm{sph}}\) that undergo the sphaleron transition. The result of the MUSiC RoI scan for \(S_{\mathrm{T}}\) distributions in inclusive event classes is shown in Fig. 6, where the simulation of the sphaleron production with PEF = 0.05 is used as the signal. Several event classes with large deviations beyond the expectation from the SM-only hypothesis are identified in the final bins of the \(\tilde{p}\text { values}\) distribution. Among the most deviating event classes are the \(1 \upmu + 5 \text {jets} + p_{\mathrm{T}} ^{\mathrm{miss}}\ +X\), \(1 \text {e} + 5 \text {jets} + p_{\mathrm{T}} ^{\mathrm{miss}}\ +X\), \(1 \upmu + 1 \text {b} + 2 \text {jets} + p_{\mathrm{T}} ^{\mathrm{miss}}\ +X\), and \(1 \text {e} + 1 \upmu + 3 \text {jets} + p_{\mathrm{T}} ^{\mathrm{miss}}\ +X\) event classes. In the inclusive CMS analysis [64] based on the same data set, an upper limit of PEF = 0.002 was set at the 95% confidence level. This result demonstrates the sensitivity of MUSiC to an example of BSM physics in final states where no previous search has been conducted by the CMS experiment.

In a second approach to evaluate the sensitivity of the MUSiC analysis, a single SM process is removed from the SM simulation, and the scanning algorithm is applied against the recorded CMS data using the modified SM simulation. In the example shown here, the process of \(\text {W} \text {Z} \) diboson production is removed. Several final states show large and significant deviations with \(\tilde{p} < 0.0002\), compared to the prediction of having no final states showing such a deviation based on the simulation. The most significant final states concern classes with three leptons, as well as three leptons and \(p_{\mathrm{T}} ^{\mathrm{miss}}\), corresponding to event classes where the \(\text {W} \text {Z} \) process is expected to contribute, confirming the ability of the MUSiC analysis to detect deviations corresponding to the missing \(\text {W} \text {Z} \) process. Figure 7 shows the event class \(3\upmu +p_{\mathrm{T}} ^{\mathrm{miss}} \) with and without the \(\text {W} \text {Z} \) process as part of the SM simulation. The sensitivity has also been verified by removing other SM processes with smaller cross sections, such as \(\text {Z} \text {Z} \) and \(t \overline{t} \text {Z} \) production, from the SM simulation, leading to similar conclusions. For the case where the \(t \overline{t}\text {Z} \) process is removed from the SM background, the \(3 \text {e} + 1 \text {b} + 2 \text {jets} +\text {Njets}\) jet-inclusive event class shows the most significant deviation with \(\tilde{p} < 0.0002\) for the RoI scan of the \(S_{\mathrm{T}}\) distributions in jet-inclusive event classes.

Distribution of \(\tilde{p}\text { values}\) for the RoI scan in inclusive classes for the \(S_{\mathrm{T}}\) distributions for a sphaleron signal with \(E_{\mathrm{sph}} = 8\,\text {TeV} \) and PEF = 0.05. The uncertainty in the distribution of \(\tilde{p}\text { values}\) for the signal is obtained from the variations in the pseudo-data performed with the sphaleron signal simulation

Distributions of \(S_{\mathrm{T}}\) for the \(3\upmu +p_{\mathrm{T}} ^{\mathrm{miss}} \) exclusive class without (upper) and with (lower) \(\text {W} \text {Z} \) production as part of the SM simulation. The data events are shown in black and the simulations of the SM processes are shown as coloured histograms. The region enclosed within the red dashed lines is the region of interest

These sensitivity studies emphasize the ability of the MUSiC algorithm to identify deviations of the data from the simulated background.

8 Results

Using the MUSiC classification procedure we observe 498 exclusive event classes and 571 (530) inclusive (jet-inclusive) event classes with at least one data event. For the number of classes in the simulation, we use a lower threshold of 0.1 on the expected total yield to make the number of classes stable against small changes in the total number of simulated events, and to ensure well-defined statistical properties for the comparison of deviations. We did not find any event class that contained data but no simulated events at all. No event class with a total expected yield below 0.1 events from the simulation was found that contained more than one data event, which would have required further investigation.

Before the results of the scan algorithm are presented in detail, the overall performance to reconstruct and identify objects and their multiplicities is discussed, based on a set of final states where a single SM process is expected to dominate, and where contributions from a potential signal are unlikely, based on previously published search and precision measurement results.

8.1 Commissioning studies and vetoed event classes

The final state \(\text {Z} \rightarrow \ell \ell +X\) is defined by the presence of at least two same-flavour leptons (e or \(\upmu \)) and any additional number of particles. For the total inclusive selection, the invariant mass of the lepton pair in the event is studied along with the distribution of the number of jets and the \(\varDelta R\) distribution between the leading lepton and a jet, to verify the event cleaning introduced in Sect. 4. The distribution of the number of \(\text {b} \) jets is checked for \(\text {Z} \rightarrow \ell \ell +X\) with at least two jets. We choose events with electron or muon pairs within a \(20\,\,\text {GeV} \) window around the mass of the Z boson to further validate our ability to reliably reconstruct the lepton kinematic properties, using the \(p_{\mathrm{T}} \) distribution and angular distributions for \(\eta \) and \(\phi \) of the two leading leptons. The distributions are in agreement with the SM simulation within the uncertainties. In addition, the global event properties \(p_{\mathrm{T}} ^{\mathrm{miss}}\), \(S_{\mathrm{T}}\), and \(H_{\mathrm{T}}\) (defined as the sum of \(p_{\mathrm{T}} \) of all jets and \(\text {b} \) jets in an event) are checked for events in the Z mass window, and are in agreement with the SM simulation. Events in the Z boson mass window along with one additional lepton of a different flavour and without substantial \(p_{\mathrm{T}} ^{\mathrm{miss}}\) (\(< 100 \,\,\text {GeV} \)) are selected, which form a region dominated by SM processes with relatively small cross sections and sensitive to possible misidentification of charged leptons. The \(S_{\mathrm{T}}\) distribution for that selection is in agreement with the prediction within the uncertainties.

The final state \(t \overline{t} \rightarrow \ell +2 \text {jets} + 2 \text {b} + X\) is defined by the presence of at least one lepton (e or \(\upmu \)), two jets, two \(\text {b} \)-tagged jets, and any additional number of final-state objects (i.e. additional leptons, jets, or \(\text {b} \) jets). We use this final state to validate our ability to describe the kinematic properties in events with a complicated event topology and a larger contribution from misidentified objects. An overall good agreement is observed between the data and simulation. In addition to the inclusive selection, we require the mass of the jet pair to be within a \(30\,\,\text {GeV} \) window centered on the W boson mass, and the \(M_{\mathrm{T}}\) of the lepton plus \(p_{\mathrm{T}} ^{\mathrm{miss}}\) system to be larger than \(60\,\,\text {GeV} \). Within this selected region we check the hadronic activity \(H_{\mathrm{T}}\) and find no significant deviations of the data from the expectation.

Data and SM predictions for the most significant exclusive event classes, where the significance of an event class is calculated in a single aggregated bin. Measured data are shown as black markers, contributions from SM processes are represented by coloured histograms, and the shaded region represents the uncertainty in the SM background. The values above the plot indicate the observed \(p\text { value}\) for each event class

Overview of total event yields for event classes corresponding to the double-electron (upper) and for the single-muon + \(p_{\mathrm{T}} ^{\mathrm{miss}}\) object groups (lower). Measured data are shown as black markers, contributions from SM processes are represented by coloured histograms, and the shaded region represents the uncertainty in the SM background. The numbers above each plot indicate the observed \(p\text { value}\) for the agreement of data and simulation for the corresponding event class

Kinematic distributions of photons are studied in photon-triggered events, using \(\upgamma \)+jets events with one photon, one jet, and no leptons nor substantial \(p_{\mathrm{T}} ^{\mathrm{miss}}\). The kinematic distributions of the photons, such as the \(p_{\mathrm{T}} \), \(\eta \), and \(\phi \), are in reasonable agreement with the SM simulation.

A few final states have been found to be unsuitable for the present analysis, since they require special treatment of simulated samples in these specific final states, which cannot be applied generally, and are therefore removed from the analysis. This is the case for the event classes containing two same-flavour leptons and one photon, but no additional leptons or photons. These classes are affected by the overlap between simulated samples for the inclusive \(\text {Z} (\rightarrow \ell ^+\ell ^-)+\text {jets}\) process and specific samples for the SM production of a Z boson in association with a photon, leading to an overestimation of the background by the simulation. Since no consistent overlap removal could be performed in these event classes, they are removed from further analysis. Dedicated analyses of the same data set target such final states [67].

8.2 Total yield scans and object group representation

Scans are performed based on the total event yield in the different event classes between data and SM expectation, calculating the \(p\text { value}\) for each event class based on the total yield. Broad agreement is observed between the data and simulation, with no particular event class being found to have a significant discrepancy between the data and the SM simulation. Selected results for the exclusive event classes are shown in Fig. 8, where the 20 most significant event classes are displayed, along with the \(p\text { value}\) for each event class calculated based on the total event yield. The \(p\text { values}\) for the most significant classes are within the expectations of the SM considering the number of classes.

Further results are presented of the comparison of the total event yield in event classes between data and the SM expectation, grouped by their object content. The term “object group” is used to describe a set of classes based on the composition of its event content, e.g. the double electron object group consists of all classes with exactly two electrons and any number of jets (or \(\text {b} \) jets). Two examples are shown in Fig. 9, for the double electron object group and for the single-muon + \(p_{\mathrm{T}} ^{\mathrm{miss}}\) group. For a quantitative comparison of data and simulation, the event classes are displayed along with the corresponding \(p\text { value}\) for each event class. Different jet multiplicities are overall well described, and the total event yields agree with the SM simulation within their uncertainties for different dominating processes, where Z and W boson decays dominate when additional light-flavour jets are present, whereas final states with additional \(\text {b} \) jets are dominated by \(t \overline{t}\) production. Figures for additional object groups can be found in Appendix.

8.3 Results of the RoI scans

Some typical examples of kinematic distributions are shown. The distributions in Fig. 10 for \(S_{\mathrm{T}}\) and \(M\) belong to the \(2\upmu \) exclusive event class, and the \(p_{\mathrm{T}} ^{\mathrm{miss}}\) distribution is from the \(2\upmu +p_{\mathrm{T}} ^{\mathrm{miss}} +X\) inclusive event class. No significant deviations are found with respect to the SM expectations. The aforementioned distributions illustrate the variable binning depending on the resolution, and the contributions of the different physics processes. They also show experimental features arising from a combination of the threshold effects, such as the trigger and the minimum \(p_{\mathrm{T}}\) of the selected objects, along with effects related to the underlying physics, such as the peak associated with the Z boson. In the \(p_{\mathrm{T}} ^{\mathrm{miss}}\) distribution, a global offset between data and SM simulation is observed, covered by the uncertainties, which are mostly related to \(p_{\mathrm{T}} ^{\mathrm{miss}}\) and dominated by the uncertainties in the jet energy scale and resolution. In general, the observed differences between data and SM simulation are covered by the systematic uncertainties over the entire kinematic ranges, and the resulting \(\tilde{p}\text { values}\) for the regions of interest indicate agreement between the two.

Example \(S_{\mathrm{T}}\) (upper left) and \(M\) (upper right) distributions for the \(2\upmu \) exclusive event class, and the \(p_{\mathrm{T}} ^{\mathrm{miss}}\) distribution for the \(2\upmu +p_{\mathrm{T}} ^{\mathrm{miss}} +X\) inclusive event class (lower). Measured data are shown as black markers, contributions from SM processes are represented by coloured histograms, and the region enclosed by red dashed lines in each figure corresponds to the region of interest determined by the RoI algorithm described in Sect. 5

The global overview plots for the \(M\), \(S_{\mathrm{T}}\), and \(p_{\mathrm{T}} ^{\mathrm{miss}}\) RoI scans for the exclusive event classes are shown in Fig. 11. The corresponding plots for the inclusive and the jet-inclusive classes are shown in Figs. 12 and 13, respectively. The distributions observed based on the scans of the data are consistent with the expectations based on simulation within the uncertainty bands. In general, slightly fewer event classes are observed in data in the second bin of the distributions compared to the expectation, while there are more event classes in data in the first bin, where the observed deviation is smaller. This is a consequence of a possible overestimation of systematic uncertainties (see Sect. 5.5).

No event classes with an outstanding deviation from the SM simulation beyond the expectation, which could be studied for signs of BSM physics, have been found in the analysed data set. The largest deviations seen are consistent with the statistical analysis based on the SM-only hypothesis. The exact number of event classes and the corresponding values of \(\tilde{p}\) required to be considered significant deviations beyond the SM-only hypothesis depend on the kinematic distributions and the type of event class being probed, and can be inferred from the global overview plots (Figs. 11, 12, 13). The two most significant event classes from the RoI scan for each kinematic variable are described in Table 5, separately for exclusive, inclusive, and jet-inclusive event classes, respectively. The event classes showing the most significant deviations have been studied in detail, and no systematic trend in related or neighboring event classes has been found. Since the individual event classes do not show a deviation that is statistically significant compared to the expectation, a deeper inspection for possible signs of BSM physics is not required as a part of this analysis.

Some of these event classes have low numbers of events and high object multiplicity, such as the \(4 \upmu + 1 \text {b} + 1 \text {jet} + p_{\mathrm{T}} ^{\mathrm{miss}} \) event class with two data events compared to an overall expectation of \(0.16 \pm 0.11\) events in the entire event class (the numbers displayed in Table 5 refer to the data events and simulated expectation within the RoI), where the deviation can be attributed to a fluctuation. The events in this event class also contribute to the \(4 \upmu + 1 \text {b} + 1 \text {jet} + p_{\mathrm{T}} ^{\mathrm{miss}}\ +X\), \(4 \upmu + 1 \text {jet} + p_{\mathrm{T}} ^{\mathrm{miss}}\ +X\), and \(4 \upmu + 1 \text {b} + 1 \text {jet} + p_{\mathrm{T}} ^{\mathrm{miss}}\ +\text {Njets}\) event classes, which also appear among the event classes with the largest deviations for the inclusive and jet-inclusive categories. There are high jet multiplicity event classes with relatively low numbers of events, particularly \(2 \text {e} + 1 \upmu + 1 \text {b} + 5 \text {jets} +X\), \(2 \text {e} + 1 \upmu + 5 \text {jets} +\text {Njets}\), \(1 \upmu + 4 \text {b} + 1 \text {jet} + p_{\mathrm{T}} ^{\mathrm{miss}} \), \(1 \text {e} + 1 \upmu + 3 \text {b} + 2 \text {jets} + p_{\mathrm{T}} ^{\mathrm{miss}} +\text {Njets}\), and \(2 \text {e} + 1 \upmu + 1 \text {b} + 3 \text {jets} +\text {Njets}\), most of which are also inclusive at least in terms of the number of jets. The \(\tilde{p}\text { values}\) of these deviations are not very significant, and they can be ascribed either to fluctuations or to inadequate modelling of the data by the simulation at high jet multiplicities.