Abstract

New estimates for the minimum number of edges in subgraphs of a Johnson graph are obtained.

Similar content being viewed by others

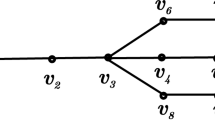

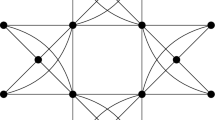

Let \(n > r > s\). Consider the following family of graphs:

\[G(n,r,s) = (V,E), \quad V = \{x \subseteq [n] : |x| = r\}, \quad E = \{\{x,y\} : x,y \in V,\ |x \cap y| = s\},\]

i.e., the graph vertices are all possible r-element subsets of \([n] := \{1, \ldots, n\}\), and the edges join pairs of sets intersecting in exactly s elements. These graphs are known as Johnson graphs, and they play an important role in coding theory [1], Ramsey theory [2–4], combinatorial geometry [5–11], and hypergraph theory [12–19].
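
For readers who want to experiment, the following Python sketch builds \(G(n,r,s)\) directly from the definition above for small n. It is only an illustration (quadratic in the number of vertices), and the helper names `johnson_graph` and `degree` are hypothetical, not taken from the paper. It also checks the standard fact that the graph is regular of degree \(C_r^s C_{n-r}^{r-s}\): a neighbor of x keeps s elements of x and takes r − s elements from outside x.

```python
from itertools import combinations
from math import comb


def johnson_graph(n, r, s):
    """Vertices and edges of G(n, r, s): r-subsets of {1, ..., n}, adjacent iff |x & y| == s."""
    vertices = [frozenset(c) for c in combinations(range(1, n + 1), r)]
    edges = [(x, y) for x, y in combinations(vertices, 2) if len(x & y) == s]
    return vertices, edges


def degree(n, r, s):
    """Common vertex degree: keep s elements of x, add r - s elements from outside x."""
    return comb(r, s) * comb(n - r, r - s)


V, E = johnson_graph(7, 3, 1)
# Handshake lemma: 2|E| equals |V| times the common degree.
print(len(V), len(E), degree(7, 3, 1))  # prints: 35 315 18
```

For s = 0 the same function produces Kneser graphs; for instance, `johnson_graph(5, 2, 0)` is the Petersen graph.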

Recall that an independent vertex set of a graph G is any subset of its vertices no two of which are joined by an edge of G, and the independence number \(\alpha (G)\) of G is the cardinality of a maximum independent vertex set of G. A classical problem in graph theory going back to Turán is to find the minimum number \(r_G(l)\) of edges of G spanned by a vertex subset of cardinality l, i.e., the minimum, over all l-element sets W of vertices of G, of the number of edges of G with both endpoints in W. Of course, if \(l \leqslant \alpha (G)\), then \(r_G(l) = 0\), so only the cases \(l > \alpha (G)\) are of interest.
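
On very small graphs, \(\alpha(G)\) and \(r_G(l)\) can be computed by exhaustive search, which is a handy sanity check for the bounds discussed below. This is a minimal brute-force sketch (exponential in the number of vertices, so suitable only for toy examples); the helper names are hypothetical. The example uses the 5-cycle, for which \(\alpha = 2\).

```python
from itertools import combinations


def spanned_edges(W, edges):
    """Number of edges of G with both endpoints in the vertex subset W."""
    W = set(W)
    return sum(1 for u, v in edges if u in W and v in W)


def r_G(vertices, edges, l):
    """Brute-force r_G(l): minimum number of edges spanned by an l-element vertex subset."""
    return min(spanned_edges(W, edges) for W in combinations(vertices, l))


def independence_number(vertices, edges):
    """alpha(G) = largest l with r_G(l) == 0 (r_G vanishes exactly for l <= alpha)."""
    alpha = 0
    for l in range(1, len(vertices) + 1):
        if r_G(vertices, edges, l) > 0:
            break
        alpha = l
    return alpha


cycle_vertices = list(range(5))
cycle_edges = [(i, (i + 1) % 5) for i in range(5)]
print(independence_number(cycle_vertices, cycle_edges))  # prints: 2
print([r_G(cycle_vertices, cycle_edges, l) for l in range(1, 6)])  # prints: [0, 0, 1, 3, 5]
```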

The classical Turán theorem gives the following estimate, which is sharp over the class of all graphs:

In particular, if \(G_n\) is a sequence of graphs whose numbers of vertices tend to infinity, \(l = l(n) \to \infty \), \(\alpha = \alpha (G_n)\), and \(\alpha = o(l)\), then

However, the situation is different for graphs \(G(n,r,s),\) and it is this situation that is addressed below. First, we note that the graphs \({{G}_{n}} = G(n,3,1)\) were studied in detail in a series of Pushnyakov’s papers (see [6]), where, among other things, it was proved that, with the notation of (2) and under its conditions,

In other words, the lower bound in (3) is three times larger than estimate (2), which is sharp in the general case.

For arbitrary graphs \(G(n,r,s)\), no such estimates were previously known. However, these are distance graphs: their vertices can be regarded as points in \(\mathbb{R}^n\) (more specifically, n-dimensional vectors with r ones and n − r zeros), and their edges are then the pairs of points at distance \(\sqrt{2(r - s)}\), since two such vectors whose supports share exactly s positions differ in exactly 2(r − s) coordinates. For graphs of this type, the following estimate was proved in [20]. Suppose that the conditions are the same as in inequality (2) and, additionally, \(\alpha n = o(l)\). Then

Thus, estimate (4) is twice as strong as (2).
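
The distance-graph representation of \(G(n,r,s)\) can be checked mechanically: for 0/1 vectors with r ones, the squared Euclidean distance equals the size of the symmetric difference of the supports, i.e., \(2(r - |x \cap y|)\). The sketch below (hypothetical helper names; it uses Python's `math.dist`, available since Python 3.8) verifies on small cases that adjacency in \(G(n,r,s)\) coincides with distance exactly \(\sqrt{2(r-s)}\).

```python
from itertools import combinations
from math import dist, isclose, sqrt


def indicator(subset, n):
    """0/1 vector in R^n with ones exactly at the positions in `subset` (elements 1..n)."""
    return [1 if i in subset else 0 for i in range(1, n + 1)]


def distance_representation_holds(n, r, s):
    """True iff, for all pairs of r-subsets x, y of [n]:
    |x & y| == s  <=>  Euclidean distance of the indicators == sqrt(2(r - s))."""
    target = sqrt(2 * (r - s))
    for x, y in combinations(combinations(range(1, n + 1), r), 2):
        adjacent = len(set(x) & set(y)) == s
        at_target = isclose(dist(indicator(x, n), indicator(y, n)), target)
        if adjacent != at_target:
            return False
    return True


print(distance_representation_holds(6, 3, 1), distance_representation_holds(5, 2, 0))  # prints: True True
```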

First, we prove the following simple theorem.

Theorem 1. Given numbers r and s, let \(G_n = G(n,r,s)\), and let \(l = l(n) \to \infty \). Then

\[r_{G_n}(l) \leqslant (1 + o(1))\,\frac{l^2\,C_r^s C_{n-r}^{r-s}}{2\,C_n^r}.\]

To compare Theorem 1 with estimates (1)–(4), we recall the behavior of independence numbers of graphs \(G(n,r,s)\). The following result was proved in [21].

Theorem 2. Given numbers r and s, the following assertions hold:

1. If \(r > 2s + 1\), then, for sufficiently large n,

2. If \(r \leqslant 2s + 1\) and \(r - s\) is a prime power, then

3. For any \(r\) and \(s\), there exist \(c(r,s)\) and \(d(r,s)\) such that

Theorem 2 implies that, for the parameters (n, 3, 1), the new Theorem 1 yields the best previously known upper bound, namely, estimate (3). Moreover, for \(r \leqslant 2s + 1\), the order of the new upper bound from Theorem 1 coincides with the order of the classical lower bounds, since the denominators of the latter involve the independence number, which is of order \(n^s\) in this case. Only for \(r > 2s + 1\) is there a considerable gap in the order. Especially curious is the case s = 0, which corresponds to Kneser graphs (see [2]). In this case, the new upper bound from Theorem 1 is trivial, because it is asymptotically equal to \(\tfrac{l^2}{2}\), which is the asymptotics of the number of edges of the complete graph on l vertices! Strange as it may seem, this does not mean that the new bound is weak, as Theorem 3 below shows.
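
The averaging argument behind Theorem 1 bounds \(r_{G_n}(l)\) by roughly \(\tfrac{l^2}{2}\) times the ratio of the vertex degree \(C_r^s C_{n-r}^{r-s}\) to the number of vertices \(C_n^r\). The sketch below (the helper name is hypothetical) evaluates this ratio numerically. For s = 0 it tends to 1, which is why the bound degenerates to \(\tfrac{l^2}{2}\) for Kneser graphs, while for s = 1 it decays like \(n^{-1}\), in line with the order \(n^{-s}\) discussed above.

```python
from math import comb


def degree_to_size_ratio(n, r, s):
    """C(r, s) * C(n - r, r - s) / C(n, r): vertex degree of G(n, r, s) over its vertex count."""
    return comb(r, s) * comb(n - r, r - s) / comb(n, r)


for n in (10**2, 10**3, 10**4):
    kneser = degree_to_size_ratio(n, 3, 0)      # s = 0: tends to 1
    scaled = n * degree_to_size_ratio(n, 3, 1)  # s = 1: n * ratio tends to C(3,1) * 3!/2! = 9
    print(n, round(kneser, 4), round(scaled, 4))
```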

Theorem 3. Let \(G_n = G(n,r,0)\), and let \(l > \alpha(G(n,r,0)) = C_{n-1}^{r-1}\). Then

\[r_{G_n}(l) \geqslant \frac{l}{2}\left(l - C_n^r + C_{n-r}^r\right).\]

It can be seen that if l is much greater than the independence number, i.e., \({{n}^{{r - 1}}} = o(l)\), then the bound in Theorem 3 is asymptotically equal to \(\frac{{{{l}^{2}}}}{2}\). Indeed, the difference \(C_{n}^{r} - C_{{n - r}}^{r}\) has the order of \({{n}^{{r - 1}}}\).
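
The size of the correction term can be made concrete. The quantity \(C_n^r - C_{n-r}^r\) counts the r-subsets of [n] that meet a fixed r-subset; by a union bound over the r elements of that subset it is at most \(r\,C_{n-1}^{r-1}\), and for fixed r its ratio to \(C_{n-1}^{r-1}\) (the independence number of \(G(n,r,0)\)) tends to r. This minimal sketch (hypothetical helper names) checks both facts numerically. Assuming Theorem 3 has the degree-count form \(\tfrac{l}{2}(l - C_n^r + C_{n-r}^r)\) suggested by its proof sketch, this also shows why such a bound is negative when \(l \sim C_{n-1}^{r-1}\) and \(r \geqslant 2\).

```python
from math import comb


def meeting_count(n, r):
    """C(n, r) - C(n - r, r): r-subsets of [n] that intersect a fixed r-subset."""
    return comb(n, r) - comb(n - r, r)


def ratio_to_star(n, r):
    """meeting_count(n, r) / C(n - 1, r - 1), where C(n - 1, r - 1) = alpha(G(n, r, 0))."""
    return meeting_count(n, r) / comb(n - 1, r - 1)


for n in (20, 200, 2000):
    # Union bound: meeting_count <= r * C(n - 1, r - 1), so the ratio stays below r = 3.
    assert meeting_count(n, 3) <= 3 * comb(n - 1, 2)
    print(n, round(ratio_to_star(n, 3), 3))
```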

Finally, there is an interesting case in which Theorem 3 does not work, namely, when \(l \sim C_{n-1}^{r-1}\). In this case, the bound of Theorem 3 becomes negative, because \(C_n^r - C_{n-r}^r \sim r\,C_{n-1}^{r-1}\) exceeds l for \(r \geqslant 2\). For this case, the following result can be proved.

Theorem 4. Let \(G_n = G(n,r,0)\), and let \(l > \alpha(G(n,r,0)) = C_{n-1}^{r-1}\). Then

For example, if \(l = \alpha (G(n,r,0)) + 1\), then all classical bounds take the value 1. In this case, however, we obtain a much stronger result. Indeed, if \(\beta \leqslant 2r^2 C_{n-2}^{r-2}\), then the first of the two quantities under the maximum sign has order at least \(n^r\). For larger β, i.e., \(\beta > 2r^2 C_{n-2}^{r-2}\), the second quantity has order at least \(n^{r-2}\). It is possible to obtain a more accurate estimate, but the idea is clear.

In the next section, we sketch the proofs of Theorems 1, 3, and 4.

PROOF SKETCHES

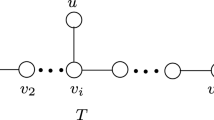

1 Proof Sketch of Theorem 1

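Consider the set of all induced subgraphs of \(G(n,r,s)\) that have l vertices; there are \(C_N^l\) of them, where \(N = C_n^r\). For each graph H from this set and for each edge of \(G(n,r,s)\), consider the indicator of this edge belonging to H. Sum these indicators over all edges and all graphs in two ways. On the one hand, every edge lies in exactly \(C_{N-2}^{l-2}\) of the graphs, so the sum equals the product of the number of edges and this number of graphs:

\[\frac{1}{2}\,C_n^r C_r^s C_{n-r}^{r-s} \cdot C_{N-2}^{l-2}.\]

On the other hand, the sum equals the sum, over H, of the numbers of edges in H. Since the minimum does not exceed the average, the minimum number of edges is at most

\[\frac{\tfrac{1}{2}\,C_n^r C_r^s C_{n-r}^{r-s}\, C_{N-2}^{l-2}}{C_N^l} = \frac{l(l-1)\,C_r^s C_{n-r}^{r-s}}{2\,(C_n^r - 1)}.\]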

The theorem is proved.
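
The double counting in this proof can be checked with exact rational arithmetic. The sketch below (hypothetical helper names; it uses Python's `fractions.Fraction`) computes the average number of edges over all l-vertex induced subgraphs in two ways, as \(|E|\,C_{N-2}^{l-2}/C_N^l\) with \(N = C_n^r\) and as the simplified form \(\frac{l(l-1)C_r^sC_{n-r}^{r-s}}{2(C_n^r-1)}\), and confirms on a tiny case by brute force that the minimum does not exceed this average.

```python
from fractions import Fraction
from itertools import combinations
from math import comb


def average_edges(n, r, s, l):
    """Average edge count over all l-vertex induced subgraphs of G(n, r, s).

    Each of the |E| edges lies in C(N - 2, l - 2) of the C(N, l) l-subsets, N = C(n, r).
    """
    N = comb(n, r)
    num_edges = N * comb(r, s) * comb(n - r, r - s) // 2  # exact: N * degree = 2|E|
    return Fraction(num_edges * comb(N - 2, l - 2), comb(N, l))


def simplified_average(n, r, s, l):
    """The same average written as l(l - 1) C(r, s) C(n - r, r - s) / (2 (C(n, r) - 1))."""
    return Fraction(l * (l - 1) * comb(r, s) * comb(n - r, r - s), 2 * (comb(n, r) - 1))


def brute_force_min_edges(n, r, s, l):
    """Exhaustive r_G(l) for G(n, r, s); feasible only for tiny parameters."""
    vertices = list(combinations(range(n), r))
    return min(
        sum(1 for x, y in combinations(W, 2) if len(set(x) & set(y)) == s)
        for W in combinations(vertices, l)
    )


# Petersen graph G(5, 2, 0), l = 6: the minimum is at most the average, which equals 5.
print(average_edges(5, 2, 0, 6), brute_force_min_edges(5, 2, 0, 6))
```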

2 Proof Sketch of Theorem 3

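Let W be a vertex set of \(G(n,r,0)\) having cardinality \(l\), and let \(\deg_W(v)\) denote the number of neighbors of a vertex v in W. Each vertex of \(G(n,r,0)\) has exactly \(C_{n-r}^r\) neighbors, so at most \(C_n^r - C_{n-r}^r - 1\) of the other vertices of W are not adjacent to v. Hence, for any vertex \(v \in W\),

\[\deg_W(v) \geqslant (l - 1) - (C_n^r - C_{n-r}^r - 1) = l - C_n^r + C_{n-r}^r.\]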

Summing over all \(v \in W\) counts every edge twice, which completes the proof.
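
The degree count in this proof is easy to test on a small Kneser graph. The sketch below (hypothetical helper names) compares an exhaustive computation of \(r_G(l)\) for \(G(6,2,0)\), whose independence number is \(C_5^1 = 5\), with the bound obtained by summing the per-vertex estimate \(\deg_W(v) \geqslant l - (C_n^r - C_{n-r}^r)\) over \(v \in W\) (each vertex has \(C_{n-r}^r\) neighbors in \(G(n,r,0)\)), namely \(\frac{l}{2}(l - C_n^r + C_{n-r}^r)\).

```python
from itertools import combinations
from math import comb


def kneser_min_edges(n, r, l):
    """Exhaustive r_G(l) for G(n, r, 0): r-subsets of [n], adjacent iff disjoint."""
    vertices = [frozenset(c) for c in combinations(range(n), r)]
    return min(
        sum(1 for x, y in combinations(W, 2) if not (x & y))
        for W in combinations(vertices, l)
    )


def degree_count_bound(n, r, l):
    """(l / 2) * (l - (C(n, r) - C(n - r, r))).

    A vertex has C(n - r, r) neighbours in G(n, r, 0), hence at least
    l - (C(n, r) - C(n - r, r)) neighbours inside any l-set W containing it;
    summing over W counts every edge twice.
    """
    return l * (l - (comb(n, r) - comb(n - r, r))) / 2


for l in (6, 10, 12, 15):
    print(l, kneser_min_edges(6, 2, l), degree_count_bound(6, 2, l))
```

At l = 15 the subset is the whole graph, and the bound is attained with equality.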

3 Proof Sketch of Theorem 4

Let H be a subgraph of \(G(n,r,0)\) having l vertices. Let \(\beta\) be the maximum cardinality of an independent vertex set in H. Obviously, \(\beta \leqslant \alpha (G(n,r,0)) = C_{n-1}^{r-1}\). Let B be any independent vertex set of H of cardinality \(\beta\). On the one hand, an argument going back to Turán yields bound (1) with \(\alpha\) replaced by \(\beta\); this is the first quantity under the maximum sign. On the other hand, as at the beginning of Turán's argument, we note that each vertex of H that does not belong to B has at least one edge going to B (otherwise B could be enlarged). Stopping at this point, we obtain the bound \(l - \beta\), which is much weaker than Turán's, since it appears as just one term in Turán's inequality. However, the form of the second quantity under the maximum sign in Theorem 4 suggests that the statement about at least one edge going to B can be strengthened.

Indeed, we show that every vertex outside B has at least \(\beta - r^2 C_{n-2}^{r-2}\) such edges. Let \(v \notin B\), and let \(w \in B\) be a neighbor of v. Let \(z \in B\) with \(z \not\sim v\). Of course, \(z \not\sim w\), since B is an independent set. Therefore, the r-element subsets R, S of [n] corresponding to the vertices v, w are disjoint (the vertices form an edge), while the r-element subset T corresponding to z intersects both R and S. There are at most \(r^2 C_{n-2}^{r-2}\) such sets T: choose an element of R, an element of S, and the remaining r − 2 elements. Therefore, at most \(r^2 C_{n-2}^{r-2}\) vertices of B are not adjacent to v, whence at least \(\beta - r^2 C_{n-2}^{r-2}\) vertices of B are adjacent to v. The theorem is proved.
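
The counting step at the end of this proof can be checked by enumeration. For disjoint r-sets R and S, an r-set meeting both must contain some a ∈ R and some b ∈ S, which gives the union bound \(r^2 C_{n-2}^{r-2}\) (it overcounts sets T that meet R or S in several elements). The sketch below (hypothetical helper names) compares the exact count, also obtained by inclusion–exclusion, with this bound. Summing the resulting per-vertex estimate over the \(l - \beta\) vertices outside B gives at least \((l - \beta)(\beta - r^2 C_{n-2}^{r-2})\) edges between B and the rest of H, since each such edge has exactly one endpoint outside B.

```python
from itertools import combinations
from math import comb


def count_meeting_both(n, r):
    """Enumerate r-subsets T of {0, ..., n-1} meeting both R = {0..r-1} and S = {r..2r-1}."""
    R, S = set(range(r)), set(range(r, 2 * r))
    return sum(1 for T in combinations(range(n), r) if R & set(T) and S & set(T))


def inclusion_exclusion(n, r):
    """Same count in closed form: all - (miss R) - (miss S) + (miss both)."""
    return comb(n, r) - 2 * comb(n - r, r) + comb(n - 2 * r, r)


def union_bound(n, r):
    """r * r * C(n - 2, r - 2): pick a in R, b in S, then the other r - 2 elements."""
    return r * r * comb(n - 2, r - 2)


for r in (2, 3):
    for n in range(2 * r, 11):
        exact = count_meeting_both(n, r)
        assert exact == inclusion_exclusion(n, r) <= union_bound(n, r)
print(count_meeting_both(8, 3), union_bound(8, 3))  # prints: 36 54
```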

REFERENCES

[1] F. J. MacWilliams and N. J. A. Sloane, The Theory of Error-Correcting Codes (North-Holland, Amsterdam, 1977).

[2] A. M. Raigorodskii and M. M. Koshelev, Discrete Appl. Math. 283, 724–729 (2020).

[3] A. B. Kupavskii and A. A. Sagdeev, Russ. Math. Surv. 75 (5), 965–967 (2020).

[4] A. A. Sagdeev, J. Math. Sci. 247 (3), 488–497 (2020).

[5] A. M. Raigorodskii, Dokl. Math. 102 (3), 510–512 (2020).

[6] F. A. Pushnyakov and A. M. Raigorodskii, Math. Notes 107 (2), 322–332 (2020).

[7] A. M. Raigorodskii and E. D. Shishunov, Dokl. Math. 99 (2), 165–166 (2019).

[8] R. Prosanov, Discrete Appl. Math. 276, 115–120 (2020).

[9] R. I. Prosanov, Math. Notes 105 (6), 874–880 (2019).

[10] A. A. Sagdeev, Probl. Inf. Transm. 55 (4), 376–395 (2019).

[11] P. Frankl and A. Kupavskii, Electron. J. Comb. 28 (2), Research Paper P2.7 (2021).

[12] P. Frankl and A. Kupavskii, Electron. J. Comb. 28 (2), Research Paper P2.7 (2021).

[13] P. Frankl and A. Kupavskii, Discrete Anal., Paper No. 14 (2020).

[14] D. A. Shabanov, N. E. Krokhmal, and D. A. Kravtsov, Eur. J. Combin. 78, 28–43 (2019).

[15] D. Cherkashin and F. Petrov, SIAM J. Discrete Math. 34 (2), 1326–1333 (2020).

[16] A. M. Raigorodskii and D. D. Cherkashin, Russ. Math. Surv. 75 (1), 89–146 (2020).

[17] D. A. Shabanov and T. M. Shaikheeva, Math. Notes 107 (3), 499–508 (2020).

[18] A. Semenov and D. Shabanov, Discrete Appl. Math. 276, 134–154 (2020).

[19] M. B. Akhmejanova and D. A. Shabanov, Discrete Appl. Math. 276, 2–12 (2020).

[20] K. A. Mikhailov and A. M. Raigorodskii, Sb. Math. 200 (12), 1789–1806 (2009).

[21] P. Frankl and Z. Füredi, J. Comb. Theory Ser. A 39, 160–176 (1985).

Funding

This work was supported by the Russian Foundation for Basic Research (project no. 18-01-00355) and by President’s grant NSh-6760.2018.1.

Additional information

Translated by I. Ruzanova

Rights and permissions

Open Access. This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Pushnyakov, F.A., Raigorodskii, A.M. Estimate of the Number of Edges in Subgraphs of a Johnson Graph. Dokl. Math. 104, 193–195 (2021). https://doi.org/10.1134/S106456242104013X
