-

PDF

- Split View

-

Views

-

Cite

Cite

R. B. Menezes, J. E. Steiner, T. V. Ricci, A treatment procedure for Gemini North/NIFS data cubes: application to NGC 4151, Monthly Notices of the Royal Astronomical Society, Volume 438, Issue 3, 01 March 2014, Pages 2597–2620, https://doi.org/10.1093/mnras/stt2381

Close - Share Icon Share

Abstract

We present a detailed procedure for treating data cubes obtained with the Near-Infrared Integral Field Spectrograph (NIFS) of the Gemini North telescope. This process includes the following steps: correction of the differential atmospheric refraction, spatial re-sampling, Butterworth spatial filtering, ‘instrumental fingerprint’ removal and Richardson–Lucy deconvolution. The clearer contours of the structures obtained with the spatial re-sampling, the high spatial-frequency noise removed with the Butterworth spatial filtering, the removed ‘instrumental fingerprints’ (which take the form of vertical stripes along the images) and the improvement of the spatial resolution obtained with the Richardson–Lucy deconvolution result in images with a considerably higher quality. An image of the Brγ emission line from the treated data cube of NGC 4151 allows the detection of individual ionized-gas clouds (almost undetectable without the treatment procedure) of the narrow-line region of this galaxy, which are also seen in an [O iii] image obtained with the Hubble Space Telescope. The radial velocities determined for each one of these clouds seem to be compatible with models of biconical outflows proposed by previous studies. Considering the observed improvements, we believe that the procedure we describe in this work may result in more reliable analysis of data obtained with this instrument.

INTRODUCTION

Data cubes are large data sets with two spatial dimensions and one spectral dimension. As a consequence, in a data cube, one can obtain images of an object at different wavelengths or spectra of different spatial regions of this object. With the advent of instruments like Integral Field Spectrographs (IFSs) and modern Fabry–Perot spectrographs in the last decades, data cubes have been produced with increasing pace. Due to the high dimensions of these data sets, it is usually hard to fully analyse them using traditional methods and, therefore, only a subset of the data ends up being taken into account (kinematical maps, line flux maps, etc.). We have developed PCA Tomography (Steiner et al. 2009), a procedure that consists of applying principal component analysis (PCA; Murtagh & Heck 1987; Fukunaga 1990) to data cubes, in order to extract information from them in an optimized way. However, a powerful analysis tool on its own is not enough to work with data cubes. These data sets are usually contaminated by a series of artefacts (like high spatial-frequency noise, for example), often related to the instruments involved, which make it difficult to perform a detailed analysis. Therefore, in order to extract reliable information from data cubes, it is advisable to remove these artefacts with a proper data treatment.

The Near-Infrared Integral Field Spectrograph (NIFS; McGregor et al. 2002) of the Gemini North telescope provides 3D imaging spectroscopy in a spectral range of 0.95–2.4 μm (covering the spectral bands Z, J, H and K), with a field of view (FOV) of 3.0 arcsec × 3.0 arcsec. NIFS is designed to work in association with the adaptive optics (AO) system Altair, resulting in observations with spatial resolutions of about 0.1 arcsec. The incident light in the FOV of NIFS passes to an image slicer, where 29 small concave mirrors direct the light to another array of concave mirrors, called pupil mirrors. This second group of mirrors redirects all the light to a third array of mirrors, called field mirrors. However, besides redirecting the light, the pupil mirrors also reconfigure it in such a way that, when the light reaches the field mirrors, it is disposed as a long thin slit. With this new configuration, the light passes through a collimator, through a collimator corrector, through the grating and finally, it is directed to the detector. All of the optical and geometric properties of NIFS result in rectangular spatial pixels of 0.103 arcsec × 0.043 arcsec.

This is the first of a series of papers in which we will describe data treatment techniques, developed by us (Menezes 2012), to be applied to data cubes obtained with different instruments. In this paper, we present a detailed procedure for treating NIFS data cubes, after the data reduction. This procedure is presented in a specific sequence, which, according to our tests, gives optimized results. The main purpose of this data treatment is to remove artefacts typically seen in NIFS data cubes, which can affect considerably the data analysis. In Section 2, we describe the observations and the reduction of the data used in this paper to describe the procedure. In Section 3, we discuss the correction of the differential atmospheric refraction (DAR). In Section 4, we describe the spatial re-sampling of the data. In Section 5, we present the Butterworth spatial filtering, used to remove high spatial-frequency noise. In Section 6, we describe the process for removing ‘instrumental fingerprints’. In Section 7, we discuss the Richardson–Lucy deconvolution, used to improve the spatial resolution of the observations. In Section 8, we present the analysis of the data cubes of NGC 4151 as a scientific example illustrating the benefits obtained with the data treatment methodology we describe. Finally, we draw a summary and our conclusions in Section 9.

OBSERVATIONS AND DATA REDUCTION

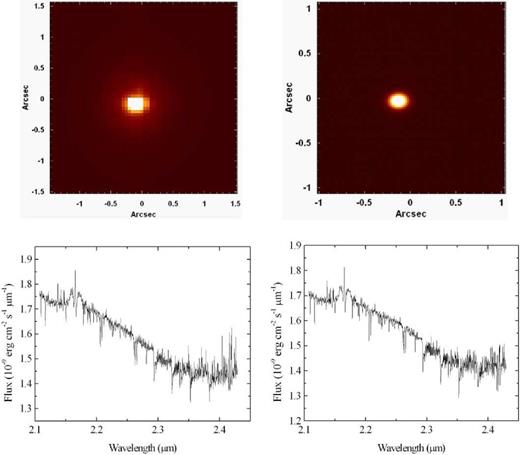

The data used in most of this paper to show the data treatment procedure are observations of the star GQ Lup, available in the NIFS public access data bank (www3.cadc-ccda.hia-iha.nrc-cnrc.gc.ca/gsa/). The observations were made in the K band on 2007 June 27, (Programme: GN-2007A-Q-46; PI: J-F. Lavigne), using a natural guide star (in this case, GQ Lup) for the AO. A total of nine 50 s exposures of GQ Lup were retrieved from the data bank to be used in this work. In order to analyse the spatial displacements along the spectral axis of NIFS data cubes, we also retrieved observations of this star in the J and H bands. One 400 s exposure in the J band and another 300 s exposure in the H band of GQ Lup (both from the same programme mentioned before and using the observed star for the guiding of the AO) were retrieved. The observation in the J band was made on 2007 May 30, and the observation in the H band was made on 2007 June 1. In order to show the process for removing ‘instrumental fingerprints’ (in Section 6), we used observations of the nuclear region of the galaxy M87. These observations were made in the K band on 2008 April 22 (Programme: GN-2008A-Q-12; PI: D. Richstone). A total of nine 600 s exposures of the nuclear region of M87 were obtained. Finally, in order to show a scientific example that illustrates the benefits obtained with the data treatment methodology we describe, we used observations of the nuclear region of the galaxy NGC 4151. These observations were made in the K band on 2006 December 12 (Programme: GN-2006B-C-9; PI: P. McGregor). A total of eight 90 s exposures were obtained. Although we show the data treatment process in this paper using, mainly, data in the K band, the procedure is entirely analogous for data in the Z, J and H bands.

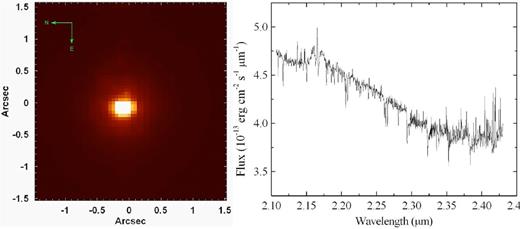

Standard calibration images of flat-field, dark flat-field (used in the data reduction for the subtraction of the dark current), Ronchi-flat (used in the data reduction to compute spatial distortions and to perform a spatial rectification), arc lamp and sky field were obtained to be used in the data reduction. Calibration images of A0V standard stars were also used. The data reduction was made in the iraf environment and included the following steps: determination of the trim, sky subtraction, bad pixel correction, flat-field correction, spatial rectification, wavelength calibration, telluric absorption removal, flux calibration (assuming that the used A0V standard star has a blackbody spectrum) and data cube construction. At the end of this process, we obtained 11 data cubes of GQ Lup (9 in the K band, 1 in the J band and 1 in the H band), 9 data cubes of M87 in the K band and 8 data cubes of NGC 4151 in the K band. All of these data cubes were reduced with spatial pixels (spaxels) of 0.05 arcsec × 0.05 arcsec. Fig. 1 shows an image of one of the nine data cubes of GQ Lup in the K band collapsed along the spectral axis and also its average spectrum. This data cube actually extends to 2.0 μm at the blue. However, due to some flux calibration problems in that part of the spectrum, we decided to not discuss it in this paper, except in Section 2, where we included this blue region of the spectrum to analyse the spatial displacements of the object along the spectral axis of the data cube.

Left: image of one of the nine data cubes of GQ Lup collapsed along the spectral axis. Right: average spectrum of the data cube shown at left.

CORRECTION OF THE DAR

It is known that the refraction caused by the Earth's atmosphere affects the light emitted by any celestial object that is observed by ground-based telescopes. The atmospheric refraction alters the original trajectory of the light and, as a consequence, an observed object looks closer to the zenith than it really is. This effect depends on the wavelength of the light and can affect considerably astronomical observations made from the ground.

Usually, during the observation of a given object, one can assume that the zenith distance and other parameters, like pressure and temperature, are, approximately, constant (as long as the exposure time is not very high). However, the wavelength is a variable parameter in a data cube, as the image of the observed object can be seen in different spectral regions. Due to the dependence of R on wavelength, one can conclude that the refraction angle varies along the spectral axis of the data cube, the origin of the so-called DAR. The main characteristic of this effect is that it changes the position of the observed object along the spectral axis of the data cube.

The DAR is considerably higher in the optical than in the infrared (see equation 2). However, as can be seen in the previous equations, other parameters (zenith distance, temperature, atmospheric pressure and water vapour pressure), besides the wavelength, have an important influence in this effect. The DAR is also higher, for example, for objects at a higher zenith distance (see equation 1). For more details about the DAR effect, see Filippenko (1982).

After the data reduction process, the first step in the proposed sequence for treating NIFS data cubes is the correction of the DAR. The main purpose of this section, however, is not to present a new method for calculating the DAR effect, as, in our data treatment procedure, we simply apply, when it is necessary, the equations from Bönsch & Potulski (1998) and Filippenko (1982) to perform this calculation. The main purposes of this section are the following: to show that, although the DAR is lower in the infrared than in the optical, the spatial displacements of the observed objects along the spectral axis of NIFS data cubes may be significant for some studies; to analyse, in detail, the spatial displacements along the spectral axis of NIFS data cubes, in order to evaluate if the behaviour of these displacements is compatible with the DAR effect.

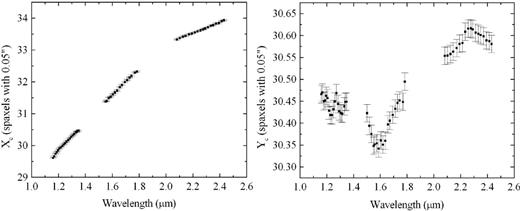

Fig. 2 shows the graphs of the coordinates Xc and Yc of the centres of the star GQ Lup in three data cubes, obtained after the data reduction, in the J, H and K bands (one data cube for each band). There is no spatial dithering between these three data cubes. We can see that the total displacement of the centre of the star from the J band to the K band is ∼ 0.2 arcsec along the horizontal axis and ∼ 0.015 arcsec along the vertical axis. Since the total displacement along the horizontal axis is twice the typical NIFS spatial resolution (0.1 arcsec), we conclude that such displacement is significant for studies involving the entire continuum of the J, H and K bands. One example of such a study is a spectral synthesis, which is usually performed using data in the J, H and K bands together. Studies involving spectral lines may also be affected by this effect if one wishes to compare images of lines located in different spectral bands. One example would be studies of emission line ratios. Fig. 3 shows an RGB composition containing images of GQ Lup in the J (blue), H (green) and K (red) bands. This RGB makes it easier to see the scientific necessity of the correction of the DAR effect, when the spectral continua of the J, H and K bands are considered simultaneously. On the other hand, in Fig. 2, we can see that the horizontal displacements in the J, H and K bands are ∼0.04, ∼0.05 and ∼ 0.03 arcsec, respectively, and the vertical displacements in these bands are ∼0.003, ∼0.008 and ∼ 0.003 arcsec, respectively. These spatial displacements are significantly smaller than the typical NIFS spatial resolution, indicating that studies involving only one of these spectral bands may not require a correction of this effect (although a horizontal displacement of ∼ 0.05 arcsec, for example, which is equal to 50 per cent of the typical NIFS spatial resolution, may be significant when accurate astrometry is required). Considering all of that, we conclude that the correction of the DAR effect in NIFS data cubes may be important in certain cases, especially for studies involving the entire spectral continuum in the J, H and K bands. It is also important to mention that the zenith distances of the data cubes of GQ Lup in the J, H and K bands are 55| $_{.}^{\circ}$|42, 55| $_{.}^{\circ}$|38 and 56| $_{.}^{\circ}$|55, respectively, which are relatively high values for a zenith distance. Observations with lower zenith distances will have smaller spatial displacements along the spectral axis of the data cubes, and in these cases, even studies involving the spectral continua in the J, H and K bands simultaneously may not require a correction of the DAR effect. The fact that the spatial displacements along the spectral axis of NIFS data cubes are significant in certain cases may be a little surprising, as a correction of the DAR effect is usually ignored in the infrared. However, this can be explained by the fact that NIFS observations are usually performed using the AO from Altair, resulting in spatial resolutions of ∼ 0.1 arcsec. Therefore, although the DAR is small in the infrared, the high spatial resolutions of NIFS observations provided by the AO make this effect significant in certain cases.

Graphs of the coordinates Xc and Yc of the centres of the star GQ Lup in three data cubes, obtained after the data reduction, in the J, H and K bands (one data cube for each band). There is no spatial dithering between the data cubes.

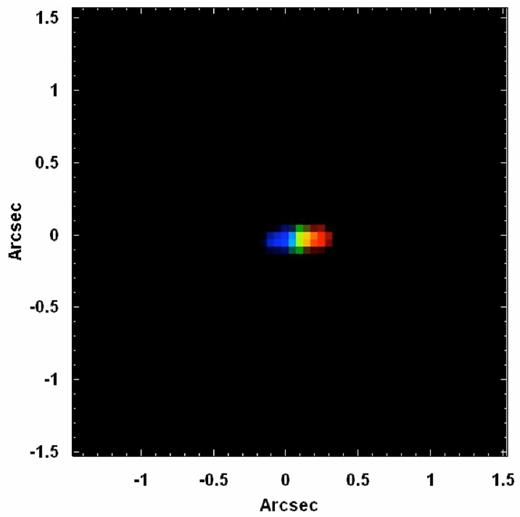

RGB composition containing images of GQ Lup in the J, H and K bands. The blue colour represents the wavelength range 1.1600-1.1615 μm, the green colour represents the wavelength range 1.6550-1.6565 μm and the red colour represents the wavelength range 2.4250-2.4265 μm.

With the next generation of extremely large telescopes, the AO-corrected point-spread functions (PSFs) obtained in the observations will be significantly smaller. As a consequence, the DAR will have a larger impact on data cubes, even in situations where this effect is usually ignored, like observations in the infrared, for example. This fact certainly must be addressed in the data reduction procedures for these future data sets.

In order to perform the correction of the DAR effect, we use a script, written in Interactive Data Language (idl), based on an algorithm developed by us. This script shifts each image of the data cube in a way that, at the end of the process, the position of the observed object does not change along the spectral axis of the data cube. The correction of the DAR can be done using two strategies: the theoretical approach and the practical approach. In the theoretical approach, the values of ΔR (along the spectral axis) of the centres of a given structure in the data cube are calculated using the theoretical equations of the DAR effect. After that, in order to obtain the coordinates Xc and Yc of the centres of the structure, the values of ΔR are projected on the horizontal and vertical axis of the FOV, using the parallactic angle η (which is given by the equations in Filippenko 1982). Using the simulated coordinates, the algorithm calculates and applies shifts to the images of the data cube, in order to remove the spatial displacement of the structure along the spectral axis. On the other hand, in the practical approach, the coordinates of the centres of a given structure are not calculated, but are measured directly. The practical approach is usually performed in the following way: first, wavelength intervals along the spectral axis of the data cube are chosen. Usually, 20 or 25 intervals are enough and it is advisable that these intervals do not contain spectral lines. The images in these intervals are, then, summed (resulting in one image for each interval) and the coordinates of the centres of a given structure in each one of the obtained images are determined using a proper method (one possibility is the task ‘imexamine’ of the iraf software). After that, the coordinates Xc and Yc of the centres are plotted as a function of the wavelength (it is important to mention that, since each image results from the sum of many others in each one of the wavelength intervals, the elaborated graphs associate the coordinates Xc and Yc to the mean wavelengths corresponding to each image). Third degree polynomials are fitted to the graphs of Xc × λ and Yc × λ. Finally, using the coefficients of the third degree polynomials obtained, the script calculates and applies shifts to the images of the data cube, in order to remove the spatial displacement of the structure along the spectral axis. It is important to emphasize that our script does not use any new method for calculating the DAR effect (only the equations from Bönsch & Potulski 1998 and Filippenko 1982). The main advantages of our algorithm are that it can be used with both the theoretical and the practical approaches and that, at the end of the procedure, the position of the observed object remains stable along the spectral axis of an NIFS data cube with a precision higher than 0.01 arcsec (see more details below). However, there are many other algorithms capable of shifting each image of a data cube, in order to remove spatial displacements along the spectral axis. Many of these algorithms (like the task ‘imshift’ of the iraf software) are essentially as precise as our algorithm and can be used in this treatment procedure.

The theoretical approach is advisable when the theoretical equations of the DAR effect reproduce the exact behaviour of the spatial displacement of a given structure along the spectral axis of the data cube. However, if (for any reason) that is not the case, the practical approach should be used.

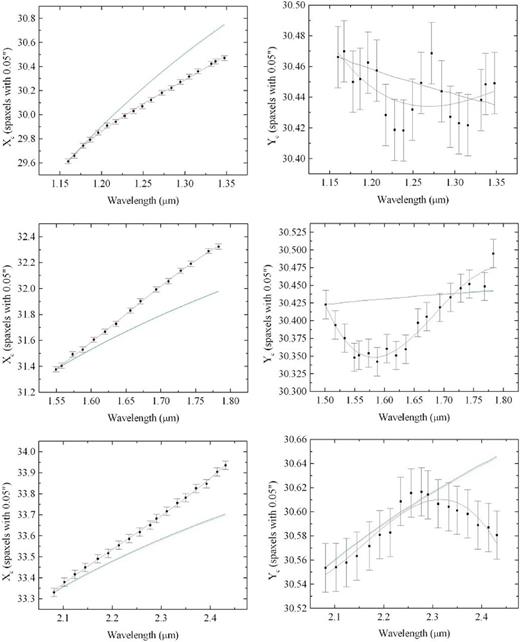

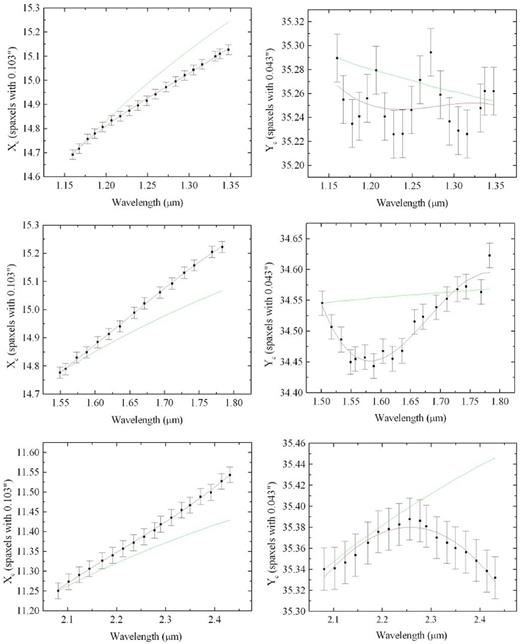

Equation (1) was obtained assuming a plane-parallel atmosphere. However, there are more precise routines that perform this calculation taking into account the curvature of the Earth's atmosphere. One example of these routines is the task ‘refro’ from the ‘slalib’ package. Fig. 4 shows the graphs of Xc × λ and Yc × λ of three data cubes of GQ Lup, obtained after the data reduction, in the J, H and K bands (one data cube for each band). In these graphs, we can also see the third degree polynomials fitted to the points, the theoretical curves obtained assuming a plane-parallel atmosphere (using the equations from Bönsch & Potulski 1998 and Filippenko 1982), and the theoretical curves obtained with the task ‘refro’ (since the task ‘refro’ only calculates the values of ΔR, we projected them on the horizontal and vertical axis of the FOV, using the parallactic angle η, which is given by the equations in Filippenko 1982).

Graphs of the coordinates Xc and Yc of the centres of the star GQ Lup in three data cubes, obtained after the data reduction, in the J, H and K bands (one data cube for each band). The third degree polynomials fitted to the points are shown in red, the theoretical curves obtained assuming a plane-parallel atmosphere are shown in green and the theoretical curves obtained with the task ‘refro’ from the ‘slalib’ package are shown in blue.

The first thing we can notice in Fig. 4 is that the theoretical curves obtained assuming a plane-parallel atmosphere and taking into account the curvature of the atmosphere were very similar to each other. In some graphs, we cannot even differentiate these two curves. Besides that, none of the theoretical curves reproduce properly the values of Xc along the spectral axis of the data cubes. There are also discrepancies between the theoretical curves and the values of Yc along the spectral axis of the data cubes in the H and K bands. In the case of the data cube in the J band, although the theoretical curves do not reproduce the exact behaviour of the points in the graph of Yc × λ, there is no clear discrepancy, as the curves are within the error bars of the points. Our previous experiences revealed that the discrepancies observed in Fig. 4 are very common in NIFS data cubes. Considering all of that, we conclude that the theoretical curves of the DAR effect obtained assuming a plane-parallel atmosphere or taking into account the curvature of the atmosphere do not reproduce the exact behaviour of the spatial displacements of structures along the spectral axis of NIFS data cubes.

One natural question at this point is: What is the cause of the discrepancies observed in Fig. 4? Clearly, they cannot be attributed to a simplistic calculation of the DAR effect, as even the task ‘refro’ (which performs a very detailed calculation of the DAR effect, considering both the curvature of the Earth's atmosphere and an approximation to the change in atmospheric temperature and pressure with altitude) could not reproduce the observed behaviour of the spatial displacements. One possibility is that the discrepancies were caused by the fact that, during the data reduction, there was a spatial re-sampling to spaxels of 0.05 arcsec × 0.05 arcsec, from the 0.103 arcsec × 0.043 arcsec raw spaxels. In order to evaluate this hypothesis, we reduced again the data cubes in the J, H and K bands (with the iraf software), but, this time, keeping the original size of the spaxels (0.103 arcsec × 0.043 arcsec). After that, we determined the values of Xc and Yc along the spectral axis of the data cubes. The results are shown in Fig. 5. We can see that the discrepancies observed in Fig. 4 between the coordinates of the centres of GQ Lup and the theoretical curves obtained assuming a plane-parallel atmosphere (which are essentially equal to the theoretical curves obtained taking into account the curvature of the Earth's atmosphere) remain practically unchanged.

Graphs of the coordinates Xc and Yc of the centres of the star GQ Lup in three data cubes, obtained after the data reduction, in the J, H and K bands (one data cube for each band), keeping the original size (0.103 arcsec × 0.043 arcsec) of their spaxels. The third degree polynomials fitted to the points are shown in red and the theoretical curves obtained assuming a plane-parallel atmosphere are shown in green.

Considering the results obtained in Fig. 4 and in Fig. 5, we conclude that the discrepancies between the coordinates of the centres of GQ Lup and the theoretical curves of the DAR effect cannot be attributed to a simplistic calculation of the effect or to the spatial re-sampling to spaxels of 0.05 arcsec × 0.05 arcsec during the data reduction. The exact cause of these discrepancies remains unknown. One possibility is that this is an instrumental effect. If this hypothesis is correct, the spatial displacements of the structures along the spectral axis of NIFS data cubes may even depend on observational parameters and an analysis of this effect would require the use of data cubes from different observations. Such analysis is beyond the scope of this paper and will not be performed here.

Since the theoretical curves do not reproduce properly the spatial displacements along the spectral axis, the practical approach is the most precise for removing this effect from NIFS data cubes. However, as Fig. 4 shows, the discrepancies between the coordinates of the centres of GQ Lup and the theoretical curves are all lower than 0.02 arcsec. In our previous experiences, we verified that it is very difficult to observe discrepancies higher than this. Since this value is significantly lower than the typical NIFS spatial resolution, we conclude that the theoretical approach may also be used to remove, with a good precision, the spatial displacements of structures along the spectral axis of NIFS data cubes.

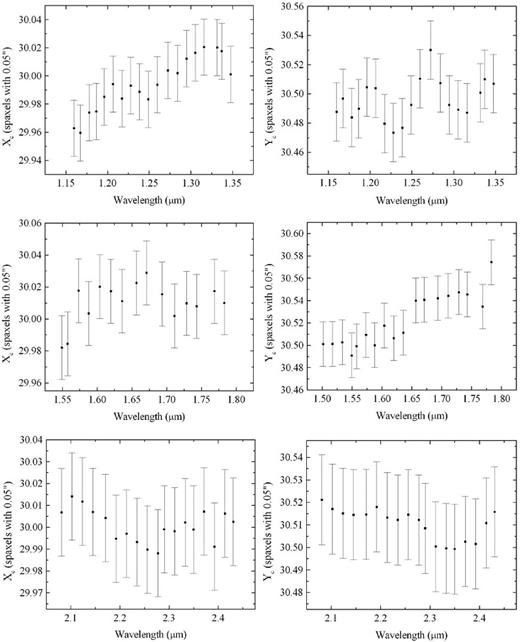

Using the practical approach, we applied our algorithm in order to remove the spatial displacements along the spectral axis of the three data cubes of GQ Lup used to generate Figs 4 and 5. After that, we measured again the coordinates Xc and Yc of the star along the spectral axis of these three data cubes. The results are shown in Fig. 6. We can see that the procedure reduced considerably the total displacement of Xc along the spectral axis of the data cubes. In the case of Yc, we did not expect considerable improvements, since the maximum displacement observed in this coordinate in Figs 4 and 5 was close to 0.008 arcsec and the precision of our algorithm is usually not higher than 0.005 arcsec. Nevertheless, Fig. 6 shows that the procedure also reduced the total displacement of Yc along the spectral axis of the data cubes, although the gain was very subtle. The expected values, after the correction, for Xc and Yc were 30.0 and 30.5, respectively. Therefore, we conclude that the precision (1σ) of our algorithm, in this case, was higher than 0.001 arcsec in Xc and higher than 0.002 arcsec in Yc. Usually, the algorithm results in precisions between 0.001 arcsec and 0.01 arcsec, for NIFS data cubes.

Graphs of the coordinates Xc and Yc of the centres of the star GQ Lup in three data cubes, obtained after the data reduction, in the J, H and K bands (one data cube for each band), after the correction of the spatial displacements along the spectral axis, using the practical approach.

Using the practical approach, we also applied our algorithm in order to remove the spatial displacements along the spectral axis of the other data cubes of GQ Lup in the K band. After that, in order to combine the nine data cubes in the K band into one, they were divided in three groups with three data cubes each. A median of the data cubes of each group was calculated, resulting in three data cubes at the end of the process. Finally, we calculated the median of these three data cubes and obtained the combined data cube.

SPATIAL RE-SAMPLING

After the data reduction and the correction of the spatial displacements along the spectral axis of the data cubes, the next step in the proposed sequence for treating NIFS data cubes is the spatial re-sampling, in order to reduce the size of the spaxels. We verified that, in most cases, this procedure improves the visualization of the spatial structures. It is important to mention that the spatial re-sampling does not change the spatial resolution of the images, but only improves their appearance (by providing a clearer visualization of the contours of the structures). However, one of the main reasons for applying the spatial re-sampling to NIFS data cubes is the result obtained when this process is used together with the Richardson–Lucy deconvolution, which is the last step in the proposed sequence (see Section 7). Our previous experiences revealed that not only the final appearance of the images are better, but also the final spatial resolution is higher when the Richardson–Lucy deconvolution is applied in a spatial re-sampled data cube.

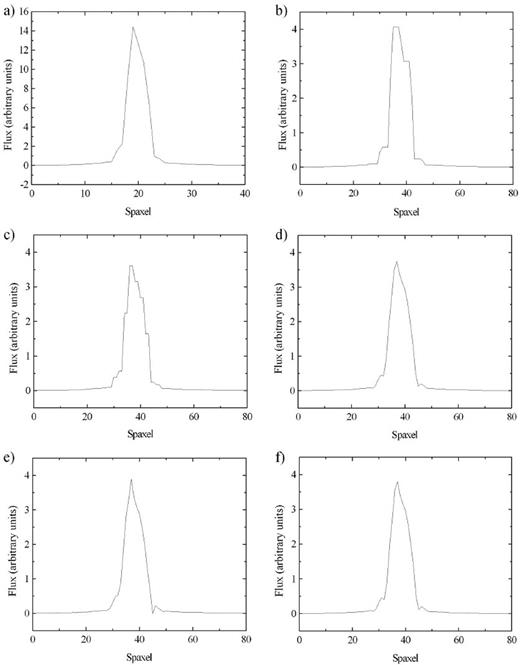

In order to apply this procedure, we use a script, written in idl, that increases the number of spaxels in each one of the images of the data cubes, conserving the surface flux. The use of this process only, however, does not improve considerably the appearance of the images, because the re-sampled image ends up with groups of adjacent spaxels with equal or similar values. An example of that is when an image is re-sampled in order to double the number of spaxels along the horizontal and vertical axis. In that case, in order to conserve the surface flux, each spaxel of the initial image originates four others, each one of them with a value equal to one quarter of the original value. The final result is an image without significant improvements, as each group of four adjacent spaxels is visually equal to a spaxel in the initial image. In order to solve this problem, after the spatial re-sampling of an image, we also apply an interpolation in the values of the spaxels in each one of the lines and, after that, in each one of the columns of the image. It is important to emphasize that this interpolation is applied when the re-sampling is already done and only to improve the appearance of the images (removing the groups of adjacent spaxels with similar values). There are many interpolation methods that can be used here. Our previous experiences revealed that the most appropriate of these interpolation methods are: lsquadratic, quadratic and spline. The lsquadratic interpolation is performed by fitting a quadratic function, in the form y = a + bx + cx2, to each group of four adjacent spaxels along a line (or a column). The quadratic interpolation is very similar to the lsquadratic interpolation, but the function y = a + bx + cx2 is fitted to each group of three adjacent spaxels. The spline interpolation is performed by fitting a cubic spline to each group of four adjacent spaxels. In order to show the importance of using the interpolation after the re-sampling procedure, and also to compare the different interpolation methods, we applied the spatial re-sampling to the data cube of GQ Lup using four approaches: a simple re-sampling (without the interpolation), a re-sampling followed by a lsquadratic interpolation, a re-sampling followed by a quadratic interpolation and a re-sampling followed by a spline interpolation. Since the side effects of the non-use of the interpolation are more clearly detected when the number of spaxels in the re-sampled image is a multiple of the number of spaxels in the initial image, we applied the procedure to the data cube of GQ Lup in order to double the number of spaxels along the horizontal and vertical axis of the images. One natural question at this point is: Considering that the reduced data cubes were already spatially re-sampled from the native 0.103 arcsec × 0.043 arcsec spaxels to 0.05 arcsec × 0.05 arcsec spaxels, why not to simply reduce the data cubes (with the iraf pipeline) with smaller spaxels, instead of applying a spatial re-sampling to the data cubes? In order to answer this question, we reduced again the data cubes of GQ Lup with spaxels of 0.025 arcsec × 0.025 arcsec and also re-applied the procedures described in Section 3. Fig. 7 shows graphs of the horizontal brightness profiles of the images of collapsed intermediate-wavelength intervals of the following data cubes of GQ Lup: a data cube with spaxels of 0.05 arcsec × 0.05 arcsec without the spatial re-sampling; a data cube with spaxels of 0.025 arcsec × 0.025 arcsec obtained with a simple spatial re-sampling without any interpolation; data cubes with spaxels of 0.025 arcsec × 0.025 arcsec obtained with a spatial re-sampling followed by lsquadratic, quadratic and spline interpolations; a data cube with spaxels of 0.025 arcsec × 0.025 arcsec obtained with a different data reduction procedure (which originated the spaxels of 0.025 arcsec × 0.025 arcsec) and no spatial re-sampling.

Graphs of the horizontal brightness profiles of the images of a collapsed intermediate-wavelength interval of the data cube of GQ Lup (a) with spaxels of 0.05 arcsec × 0.05 arcsec without the spatial re-sampling, (b) with spaxels of 0.025 arcsec × 0.025 arcsec obtained with a different data reduction procedure (which originated the spaxels of 0.025 arcsec × 0.025 arcsec), (c) with spaxels of 0.025 arcsec × 0.025 arcsec obtained with a simple spatial re-sampling without any interpolation, (d) with spaxels of 0.025 arcsec × 0.025 arcsec obtained with a spatial re-sampling followed by a lsquadratic interpolation, (e) with spaxels of 0.025 arcsec × 0.025 arcsec obtained with a spatial re-sampling followed by a quadratic interpolation and (f) with spaxels of 0.025 arcsec × 0.025 arcsec obtained with a spatial re-sampling followed by a spline interpolation.

In Fig. 7, we can see that a spatial re-sampling followed by an interpolation of the values provides a clearer visualization of the contours of the star in the image than a simple spatial re-sampling. Besides that, the three interpolation methods used here provided very similar results. Therefore, we conclude that any of these methods may be used in the data treatment procedure described in this paper. Finally, Fig. 7 also reveals that the reduction of the data cube (with the iraf pipeline) with smaller spaxels provided a much poorer visualization of the contours of the star in the image than the re-sampling procedure followed by an interpolation.

The poorer visualization of the contours of the structures, however, is not the only disadvantage of reducing the data cubes with spaxels smaller than 0.05 arcsec × 0.05 arcsec. Another disadvantage is related with the main side effect of a spatial re-sampling: any spatial re-sampling of an image (with or without an interpolation) introduces high spatial-frequency components in the images. That is a natural consequence of this kind of procedure. If a data cube is reduced with smaller spaxels, then it will also contain a higher number of high spatial-frequency components. Such components may interfere in the process of determining the coordinates of the centres of spatial structures, which is a necessary step in the practical approach for removing the spatial displacements of structures along the spectral axis of NIFS data cubes (see Section 3). In other words, the reduction of data cubes with smaller spaxels may interfere in the correction of the DAR effect. Our previous experiences revealed that this interference only starts to be relevant if a data cube is reduced with spaxels smaller than 0.05 arcsec × 0.05 arcsec. Considering that and the poorer visualization of the structures obtained, we conclude that the most appropriate procedure for obtaining spaxels with dimensions smaller than 0.05 arcsec × 0.05 arcsec is the spatial re-sampling followed by an interpolation and not the reduction of the data cube with smaller spaxels.

One important point that is worth mentioning here is related to the signal-to-noise (S/N) ratio of the spectra of the data cubes. Although the spatial re-sampling (followed by an interpolation) improves significantly the visualization of the contours of the structures in the images, it may degrade the S/N ratio, as it is actually a second re-sampling procedure applied to the data (the first was performed during the data reduction, in order to obtain spaxels of 0.05 arcsec × 0.05 arcsec from the native 0.103 arcsec × 0.043 arcsec spaxels). In order to evaluate if this degradation of the S/N ratio is significant, we extracted a spectrum from a circular region with a radius of 0.05 arcsec, centred on the star, of the data cube of GQ Lup, before and after the spatial re-sampling. After that, we calculated the S/N ratio in the spectral range 2.2685-2.2800 μm in the two spectra. The results were 113.9 and 113.5 for the spectra from the data cubes before and after the spatial re-sampling, respectively. This indicates that the spatial re-sampling reduced ∼0.4 per cent of the S/N ratio of the spectra. In our previous experiences, we have never observed a degradation higher than 1.0 per cent. Considering these very low degradations and the significant improvements obtained in the visualization of the contours of the structures, we conclude that the spatial re-sampling is a procedure that is worth applying to NIFS data cubes.

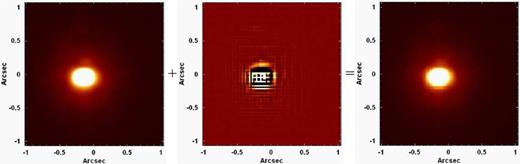

The previous spatial re-sampling, applied to the data cube of GQ Lup in order to double the number of spaxels along the horizontal and vertical axis of the images, was only used to show the importance of the interpolation after the re-sampling (the resulting data cubes will not be used for any other purpose in this work). However, the spatial re-samplings we usually apply to NIFS data cubes result in spaxels of 0.021 arcsec × 0.021 arcsec. We choose this value for the final size of the spaxels because it is approximately a submultiple of the original dimensions of NIFS spatial pixels (0.103 arcsec × 0.043 arcsec). Using this strategy, we applied the spatial re-sampling, followed by an interpolation, to the data cube of GQ Lup (with original spaxels of 0.05 arcsec × 0.05 arcsec) and obtained a data cube with spaxels of 0.021 arcsec × 0.021 arcsec. Fig. 8 shows images of a collapsed intermediate-wavelength interval of the data cube of GQ Lup, before and after the spatial re-sampling.

Left: image of a collapsed intermediate-wavelength interval of the data cube of GQ Lup, before the spatial re-sampling. Right: image of the same collapsed wavelength interval of the image at left, after the spatial re-sampling.

Fig. 8 shows that the spatial re-sampling improved the visualization of the star GQ Lup; however, as mentioned before, it also introduced high spatial-frequency components, which can be seen as thin horizontal and vertical stripes in the image (these structures are very subtle in Fig. 8). It is important to mention that these high spatial-frequency components do not represent a considerable problem here, as they are almost entirely removed by the Butterworth spatial filtering (see Section 5).

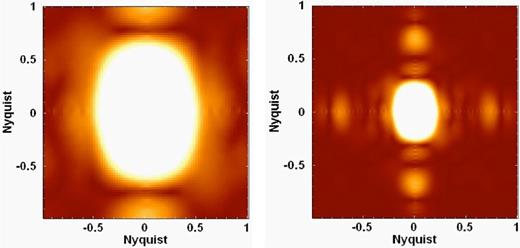

In order to determine the high-frequency components introduced by the spatial re-sampling, we calculated the Fourier transforms of the two images in Fig. 8. Fig. 9 shows the moduli of the Fourier transforms of the images in Fig. 8.

Left: modulus of the Fourier transform of the image of GQ Lup in Fig. 8, before the spatial re-sampling. Right: modulus of the Fourier transform of the image of GQ Lup in Fig. 8, after the spatial re-sampling. The high spatial-frequency components introduced by the procedure appear as bright areas farther from the centre of the image.

In Fig. 9, the high-frequency components introduced by the spatial re-sampling appear very clearly as bright areas farther from the centre of the image. Most of these components have spatial frequencies higher than 0.5 Ny (where Ny represents the Nyquist frequency, which corresponds to half of the sampling-frequency of the image). It is important to mention that the definition of the Nyquist frequency in the images in Fig. 9, and elsewhere in this paper, is related to the spaxel sampling of each image. Therefore, according to this definition, a complete oscillation within two spaxels has a frequency of 1 Ny.

BUTTERWORTH SPATIAL FILTERING

The next step in the proposed sequence for treating NIFS data cubes is the Butterworth spatial filtering (Gonzalez & Woods 2002), which consists of a filtering process performed directly in the frequency domain. It is well known that a Fourier transform of a function passes this function to the frequency domain and allows one to analyse its frequency components. As a consequence, using the Fourier transform, it is possible to modify the frequency components of a function. A filtering process performed in the frequency domain is based on the idea of calculating the Fourier transform of a function and eliminating certain frequency components. After the removal of the frequency components is done, an inverse Fourier transform of the function is calculated, completing the process.

In the case of the Butterworth spatial filtering of images, the main steps of the procedure can be summarized as: calculation of the Fourier transform (F(u, v)) of the image; multiplication of the Fourier transform F(u, v) by the image corresponding to the Butterworth filter (H(u, v)); calculation of the inverse Fourier transform of the product F(u, v) · H(u, v); extraction of the real part of the calculated inverse Fourier transform. Since the original image and the filter H(u, v) have only real values, the inverse Fourier transform of F(u, v) · H(u, v) is expected to be real as well; however, due to computational uncertainties, such an inverse Fourier transform often shows a low-modulus imaginary component. That is the reason why the last step of the procedure is the extraction of the real part of the inverse Fourier transform of F(u, v) · H(u, v). Since the purpose of the filtering process in this work is the removal of high spatial-frequency noise, only low-pass filters were used.

An analysis of the Fourier transform in Fig. 9 revealed that an appropriate value for the cut-off frequency to be used in the Butterworth spatial filtering of the data cube of GQ Lup is approximately 0.39 Ny. However, in order to determine the most adequate value of n to be used, first of all, we applied a spatial wavelet decomposition (Starck & Murtagh 2006) to the data cube. A wavelet decomposition is a process in which a signal is decomposed in many components with different characteristic frequencies. In this case, we used this procedure to decompose each image of the data cube of GQ Lup in five wavelet components (w0, w1, w2, w3 and w4) and wc, which corresponds to a smooth version of the original image. In this work, to perform this spatial wavelet decomposition, we used the À Trous algorithm, which has two important characteristics:

the sum of the components w0, w1, w2, w3, w4 and wc obtained for each image, corresponds to the original image,

the average of each wavelet component is 0.

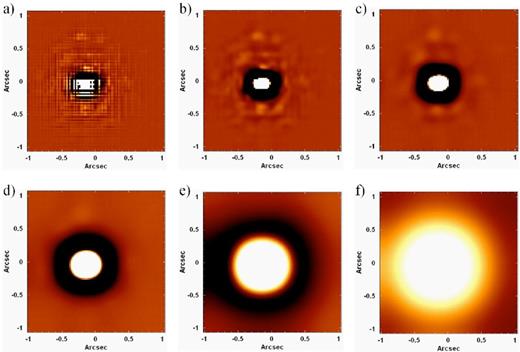

Since this wavelet decomposition was applied to each image of the data cube, at the end of the process, we obtained six data cubes (W0, W1, W2, W3, W4 and WC). In this notation, w0 and w4 represent the wavelet components with the highest and lowest spatial frequencies, respectively. Fig. 10 shows images of a collapsed intermediate-wavelength interval of the data cubes W0, W1, W2, W3, W4 and WC, obtained with the wavelet decomposition of the data cube of GQ Lup. We can see that W0 reveals the existence of a considerable amount of high spatial-frequency noise, which appear in the form of thin horizontal and vertical stripes across the image. These structures are so abundant that we can barely see the star in the image of W0. Some of these stripes may be associated with instrumental noise; however, it is probable that part of them correspond to the high-frequency components introduced by the spatial re-sampling procedure (Section 4).

Images of a collapsed intermediate-wavelength interval of the data cubes (a) W0, (b) W1, (c) W2, (d) W3, (e) W4 and (f) WC, obtained with the spatial wavelet decomposition of the data cube of GQ Lup.

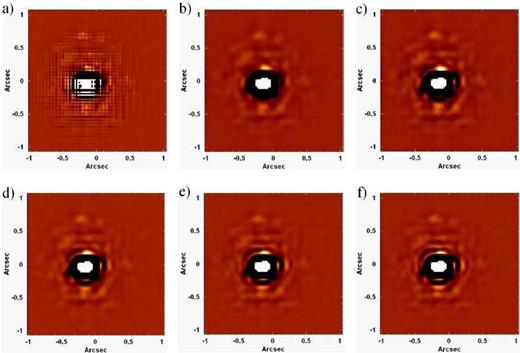

After that, we applied the Butterworth spatial filtering procedure to W0 (with a cut-off frequency of 0.39 Ny) with n = 2, n = 3, n = 4, n = 5 and n = 6. Fig. 11 shows images of a collapsed intermediate-wavelength interval of the W0 data cube, before and after the filtering process with these values of n.

Images of a collapsed intermediate-wavelength interval of the W0 data cube of GQ Lup, (a) before the Butterworth spatial filtering and after the Butterworth spatial filtering with (b) n = 2, (c) n = 3, (d) n = 4, (e) n = 5 and (f) n = 6.

Fig. 11 reveals that the Butterworth spatial filtering improved considerably the visualization of the star GQ Lup, which was barely visible in the original image of W0. However, the data cubes filtered with orders higher than 2 show structures similar to concentric rings. These structures were introduced by the filtering process and start to be visible with n = 3 (although possible traces of an inner ring can be seen in the image corresponding to n = 2), but become more and more intense as n grows. This is an expected consequence of the use of the Butterworth spatial filtering with high orders. In order to explain this behaviour, first of all, it is important to mention that, according to the convolution theorem, the product between two functions in the frequency domain is equivalent to the convolution between these functions in the spatial domain. In other words, the convolution between two functions is equivalent to the product between the Fourier transforms of these functions. Therefore, in the case of the Butterworth spatial filtering of a data cube, the product between the Fourier transform of each image of the data cube and the image corresponding to the Butterworth filter is equivalent to the convolution between each original image of the data cube and the inverse Fourier transform of the Butterworth filter. Fig. 12 shows the moduli of the inverse Fourier transforms of the Butterworth filters used to generate each image in Fig. 11.

Moduli of the inverse Fourier transforms of Butterworth filters with cut-off frequency of 0.39 Ny and order (a) n = 2, (b) n = 3, (c) n = 4, (d) n = 5 and (e) n = 6.

In Fig. 12, the inverse Fourier transform of the filter with n = 6 shows a series of concentric rings that decrease in number and intensity as n decreases. The inverse Fourier transform of the filter with n = 2 shows only one weak ring (which is almost undetectable). Therefore, considering the behaviour of the images in Fig. 12 and the fact that the Butterworth spatial filtering of a data cube is equivalent to the convolution between the inverse Fourier transform of the Butterworth filter and the original images of the data cube, we can see why the W0 data cubes of GQ Lup filtered with high values of n show structures similar to concentric rings. In Fig. 11, we can also see a considerable number of relatively small structures around GQ Lup. Most of these structures are artefacts introduced by the AO in the observation. The only exception is the farthest source at west of GQ Lup, which corresponds to GQ Lup b, a brown dwarf already analysed in previous studies (Lavigne et al. 2009).

One important point to be mentioned is that the Fourier transform of a function has a periodicity, due to the form by which it is defined, and a convolution between functions with some periodicity generates results different from a convolution between functions that do not have this property. This fact can cause problems in a Butterworth spatial filtering, as this procedure, according to what was mentioned before, is equivalent to a convolution between the original images and the inverse Fourier transform of the Butterworth filter. In order to solve this issue, we apply a ‘padding’ procedure. We will not describe this process here, but more details can be found in Gonzalez & Woods (2002).

The rings observed in Fig. 11 could be detected so clearly only because we analysed images of the W0 data cube of GQ Lup. In images of original NIFS data cubes (without any wavelet decomposition), it is much harder to see such structures, due to the fact that they are immersed in the low-frequency components of the PSFs of the objects, being obfuscated by these. It is worth mentioning that, due to their nature, these concentric rings are only visible around point-like sources.

Fig. 11 shows that the Butterworth spatial filtering with n = 2 removed most of the high spatial-frequency noise without introducing significant rings in the images. The explanation given above states that this is a mathematical consequence of the Butterworth filtering and not only a particular result obtained with the data cube of GQ Lup. Therefore, we conclude that a Butterworth spatial filtering with n = 2 is the most appropriate to be applied to NIFS data cubes.

The cut-off frequency of the filtering process (0.39 Ny in the case of GQ Lup) of NIFS data cubes changes considerably depending on the observed object. We have observed (in previous experiences) values ranging from 0.19 Ny to more than 0.40 Ny. This significant variation in the cut-off frequency is caused by different seeing conditions of the observations, together with the fact that the AO correction of this instrument does not have the same efficiency for all objects. A good strategy to determine the most appropriate cut-off frequency for the Butterworth spatial filtering of a data cube is to perform a wavelet decomposition of the original data cube and then apply the filtering procedure, with different cut-off frequencies, to the W0 data cube. Since the w0 wavelet component has the highest spatial-frequency components, performing the filtering procedure on W0 will reveal very clearly the amount of noise removed and possible distortions or artefacts introduced by the process. An appropriate Butterworth spatial filtering must remove the greatest possible amount of noise, without affecting the PSF of the object.

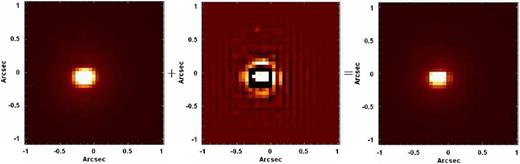

We applied the Butterworth spatial filtering to the original data cube (without any wavelet decomposition) of GQ Lup, using a filter given by equation (8), a cut-off frequency of 0.39 Ny, and n = 2. Fig. 13 shows images of a collapsed intermediate-wavelength interval of the following data cubes: the original data cube of GQ Lup, the filtered data cube of GQ Lup and the data cube corresponding to the difference between the previous two.

Left: image of a collapsed intermediate-wavelength interval of the data cube of GQ Lup, after the Butterworth spatial filtering. Right: image of the same collapsed intermediate-wavelength interval of the data cube of GQ Lup, before the Butterworth spatial filtering. Centre: image of a collapsed intermediate-wavelength interval of the data cube corresponding to the difference between the previous two.

Fig. 13 reveals that the Butterworth spatial filtering removed a considerable amount of high-frequency noise (in the form of thin vertical and horizontal stripes) from the images of the original data cube of GQ Lup. One important question at this point is: Were the high spatial-frequency components in Fig. 13 entirely introduced by the spatial re-sampling procedure or were at least part of them already present in the data cube before the spatial re-sampling? In order to answer this question, we applied the Butterworth spatial filtering to the data cube of GQ Lup before the spatial re-sampling. Fig. 14 shows images analogous to the ones in Fig. 13 but from the data cube of GQ Lup before the spatial re-sampling. In order to evaluate also the influence of the interpolation (applied after the spatial re-sampling) on the high spatial-frequency components in Fig. 13, we applied the Butterworth spatial filtering to the data cube of GQ Lup after the spatial re-sampling (to spaxels of 0.021 arcsec × 0.021 arcsec) without any interpolation. Fig. 15 shows images analogous to the ones in Fig. 13 and in Fig. 14 but from the data cube of GQ Lup after the spatial re-sampling without any interpolation.

Left: image of a collapsed intermediate-wavelength interval of the data cube of GQ Lup with spaxels of 0.05 arcsec, after the Butterworth spatial filtering. Right: image of the same collapsed intermediate-wavelength interval of the data cube of GQ Lup with spaxels of 0.05 arcsec, before the Butterworth spatial filtering. Centre: image of a collapsed intermediate-wavelength interval of the data cube corresponding to the difference between the previous two.

Left: image of a collapsed intermediate-wavelength interval of the data cube of GQ Lup spatially re-sampled (to spaxels of 0.021 arcsec) without any interpolation, after the Butterworth spatial filtering. Right: image of the same collapsed intermediate-wavelength interval of the data cube of GQ Lup spatially re-sampled (to spaxels of 0.021 arcsec), before the Butterworth spatial filtering. Centre: image of a collapsed intermediate-wavelength interval of the data cube corresponding to the difference between the previous two.

In Fig. 14, we can see high spatial-frequency components (in the form of horizontal and vertical stripes across the image) similar to the ones observed in Fig. 13, although the spatial sampling is different. This indicates that at least part of the high spatial-frequency components observed in Fig. 13 were already present in the data cube with spaxels of 0.05 arcsec × 0.05 arcsec and were not introduced by the spatial re-sampling procedure. Fig. 15 reveals high spatial-frequency components almost identical to the ones observed in Fig. 13. This indicates that, although the spatial re-sampling has introduced high spatial-frequency components in the images, the interpolation applied had little effect on them.

‘INSTRUMENTAL FINGERPRINT’ REMOVAL

We have observed that many of the NIFS data cubes we have previously analysed showed certain ‘instrumental fingerprints’ that, usually, presented the form of large vertical stripes in the images. Such structures also had very specific spectral signatures. The removal of instrumental fingerprints from NIFS data cubes involves the use of the PCA Tomography technique (Steiner et al. 2009).

PCA is a statistical technique often used to analyse multidimensional data sets. This method is efficient to extract information from such a large amount of data because it allows one to identify patterns and correlations in the data that, otherwise, would probably not be detected. Another use of PCA is its ability to reduce the dimensionality of the data, without significant loss of information. This is very useful to handle data sets with considerably large dimensions. Mathematically, PCA is defined as an orthogonal linear transformation of coordinates that transforms the data to a new system of (uncorrelated) coordinates, such that the first of these new coordinates (eigenvector E1) explains the highest fraction of the data variance, the second of these new coordinates (eigenvector E2) explains the second highest fraction of the data variance and so on. The coordinates generated by PCA are called principal components, and it is important to mention that, by construction, all of them are orthogonal to each other (and therefore uncorrelated – for more details, see Murtagh & Heck 1987; Fukunaga 1990).

PCA Tomography is a method that consists of applying PCA to data cubes. In this case, the variables correspond to the spectral pixels of the data cube and the observables correspond to the spaxels of the data cube. Since the eigenvectors are obtained as a function of the wavelength, they have a shape similar to spectra and, therefore, we call them eigenspectra. On the other hand, since the observables are spaxels, their projections on the eigenvectors are images, which we call tomograms.

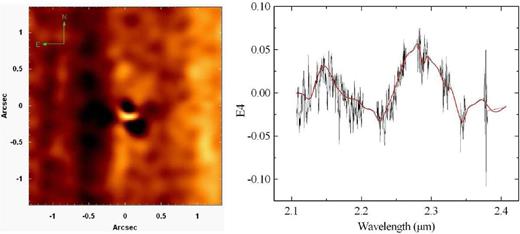

In order to remove instrumental fingerprints from an NIFS data cube, first of all, we remove its spectral lines. This step is necessary because the spectral signature of the instrumental fingerprint is associated only with the continuum and not with the spectral lines; therefore, the removal of these lines makes the detection of the problem easier. We then apply the PCA Tomography technique to the data cube without the spectral lines and select the obtained eigenvectors that are related to the fingerprint. It is important to mention that, if PCA Tomography is applied to the data cube with spectral lines, it is probable that the obtained eigenvectors that are related to the fingerprint have also some relation to other phenomena associated with spectral lines. In other words, it would be more difficult to isolate the fingerprint in specific eigenvectors without the ‘contamination’ of them by spectral lines. After the selection of the eigenvectors related to the fingerprint, we fit splines to the corresponding eigenspectra and construct a data cube using the tomograms associated with these eigenspectra and the fitted splines (more details about the reconstruction of data cubes using tomograms and eigenspectra can be found in Steiner et al. 2009). The result of this process is a data cube containing, only, the instrumental fingerprint. Finally, we subtract the data cube containing the fingerprint from the original one. All previous steps are performed using scripts written in idl. More details about the detection and removal of instrumental fingerprints from data cubes will be discussed in Steiner et al. (in preparation). We applied the procedure for instrumental fingerprint removal to the data cube of the nuclear region of the galaxy M87, which is described in Section 2. This data cube was treated with all the techniques discussed in the previous sections. Fig. 16 shows the tomogram and the eigenspectrum (together with the fitted spline) corresponding to eigenvector E4, obtained with PCA Tomography of the data cube of M87, after the removal of the spectral lines. Eigenvector E4 explains ∼6.0 × 10−4 per cent of the variance of the data cube without the emission lines.

Tomogram and eigenspectrum (together with the fitted spline, shown in red) corresponding to eigenvector E4, obtained with PCA Tomography of the data cube of M87, after the removal of the spectral lines.

In Fig. 16, we can see that the spectral signature of the instrumental fingerprint in the data cube of M87 has a low-frequency pattern, which is essentially the same in all NIFS data cubes, in the K band, we have analysed so far. In data cubes obtained in the Z, J and H bands, the spatial morphology and the spectral signature of the fingerprint are also very similar to what is shown in Fig. 16. We used the data cube of M87 to show the fingerprint typically found in this instrument because this artefact is better visualized in data cubes of extended objects. In data cubes of point-like sources (like GQ Lup, for example), the fingerprint is detected in a much more diffuse way and, in some cases, may not be detected at all. In cases like that, the procedure for fingerprint removal is, obviously, not necessary.

Since eigenvector E4 in Fig. 16 explains only ∼6.0 × 10−4 per cent of the variance of the data cube without the emission lines, one could think that this effect is too weak to affect significantly any scientific analysis to be performed. However, that is not the case. In order to show that, we elaborated images of two collapsed wavelength intervals of the data cube of M87 before the instrumental fingerprint removal: 2.2510–2.2600 μm and 2.1820–2.1911 μm. Then, we subtracted the second image from the first one. This same procedure was also applied to the data cube of M87 after the instrumental fingerprint removal. The results are shown in Fig. 17. The instrumental fingerprint clearly affects the image without the correction. However, we can also see that our procedure was so effective that left almost no traces of the fingerprint. The reason why this fingerprint appeared so clearly in an image resulting from the subtraction of two continuum images is that, as explained above (and as Fig. 16 shows), this effect is associated with the continuum and not with the spectral lines. A subtraction of continuum images in different spectral regions in a data cube may be performed to evaluate differences in the inclination of the continuum in different spatial regions. In the case of Fig. 17, for example, the bright regions have redder spectra than the dark ones, which may be due to the presence of thermal emission from dust in the formers. There are, however, other studies of the spectral continuum that may be affected by the instrumental fingerprint. One example is the spectral synthesis. Considering all of that, we conclude that the instrumental fingerprint in NIFS data cubes may affect significantly the analysis in some studies and, therefore, should be removed in these cases.

Left: subtraction of the image of the collapsed wavelength interval 2.1820-2.1911 μm from the image of the collapsed wavelength interval 2.2510-2.2600 μm, both from the data cube of M87 before the instrumental fingerprint removal. Right: the same image shown at left, but from the data cube of M87 after the instrumental fingerprint removal.

The origin of the instrumental fingerprint in NIFS data cubes is still uncertain. NIFS reads out through four amplifiers in 512 pixel blocks in the spectral direction. Therefore, slight bias level differences between the four amplifiers could be one possible explanation for this fingerprint. If we try to evaluate any periodicity in the spectral behaviour revealed by Fig. 16 (despite the irregularities of this behaviour), we obtain a value of ∼ 0.1 μm. Such a periodicity in a full spectrum of 0.4 μm supports the idea of bias level differences between the four amplifiers generating the fingerprint. However, we cannot exclude other possibilities, like the fingerprint being generated by some step during the data reduction. Although we do not know the origin of the instrumental fingerprint, the method we present here has proved to be very effective in removing this effect from NIFS data cubes.

RICHARDSON–LUCY DECONVOLUTION

A deconvolution is an iterative process whose purpose is to revert the effect of a convolution. In the astronomical case, the deconvolution problem is usually put in the following way: knowing the observed image I(x, y) and the PSF of the observation P(x, y), one wishes to obtain the original image O(x, y).

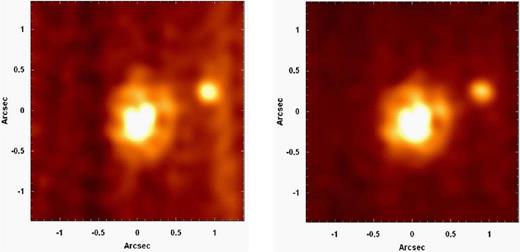

Left: horizontal brightness profile of the image of a collapsed intermediate-wavelength interval of the data cube of GQ Lup, with a simple Gaussian fit. Right: same brightness profile, with a Gaussian + Lorentzian fit.

By analysing Fig. 18, one can clearly see that the brightness profile of the image of the data cube of GQ Lup is better described by a two component fit (Gaussian + Lorentzian) than a simple Gaussian fit. This is caused by the fact that, as mentioned before, the halo around the Gaussian component, generated by the AO, has a Lorentzian shape. It is important to emphasize, however, that the relative weight between the Gaussian and the Lorentzian component in the PSF of an observation depends on the effect of the AO in the observation.

The Airy function is wavelength dependent; the NIFS spatial sampling is not. Therefore, the PSFs of NIFS data cubes do not change significantly along the spectral axis. For this reason, we can apply the Richardson–Lucy deconvolution to each image of an NIFS data cube using a constant PSF, with no wavelength dependence.

Due to the existence of a Gaussian and a Lorentzian component, the PSFs of NIFS data cubes are quite complex. Besides that, the Richardson–Lucy deconvolution is quite sensitive to the knowledge of the PSF. For this reason, the best results of the Richardson–Lucy deconvolution in NIFS data cubes are achieved using real PSFs, obtained directly from the data. In the case of observations of isolated stars, for example, one can use as PSF an image of an intermediate wavelength of the data cube. Observations of type 1 active galactic nuclei (AGNs) can also be used to obtain real PSFs: the broad-line region (BLR), which is the source of the broad components in permitted lines, can be regarded as a point-like source at the distance of typical AGNs; therefore, an image of a broad wing of one of the permitted lines can be used as PSF. If it is not possible to use a real image as PSF, one can also construct a synthetic PSF, based on equation (13), taking into account the fact that the PSF may not be circular. In certain cases, it may be difficult to obtain any estimate of the PSF of the observation from the analysed data cube. In these cases, one possibility is to obtain this estimate from the data cube of the standard star used in the data reduction for telluric absorption removal and flux calibration. This strategy, however, is potentially problematic because there may be differences between the effects of the AO applied to the science data cube and to the standard star data cube. There may be also differences between the seeing of these observations. Nevertheless, in many cases, these differences are of second order and the PSF obtained from the standard star data cube allows one to perform a reliable Richardson–Lucy deconvolution. In any case, one should be cautious and test if the process is really effective. Finally, for the cases in which it is absolutely impossible to obtain any estimate of the PSF (from the science data cube or from the standard star data cube), the Richardson–Lucy deconvolution should not be applied.

One important point to be mentioned here is that the PSF of an NIFS observation may be unstable along the FOV, revealing a series of asymmetries. These irregularities (dependent on the position of the objects along the FOV) are probably caused by NIFS spatial pixels, which (as mentioned in Section 1) are not square, but rectangular and comparable to or larger than the FWHM of the Airy function. This variation of the PSF along the FOV can affect the Richardson–Lucy deconvolution. When more than one observation, with an appropriate dithering, is available, the irregularities of the PSF may be eliminated, or significantly reduced, by simply combining the data cubes in the form of a median. However, when only one observation is available and the instabilities of the PSF along the FOV are considerable, the Richardson–Lucy deconvolution should not be applied.

Our previous experiences revealed that, in order to optimize the results obtained with the Richardson–Lucy deconvolution, a number of iterations between 6 and 10 is recommended. A Richardson–Lucy deconvolution with more than 10 iterations will not improve significantly the results obtained with 10 iterations, and will amplify the low-frequency noise in the images. A Richardson–Lucy deconvolution with less than six iterations will not improve sufficiently the spatial resolution of the observation to justify the effort.

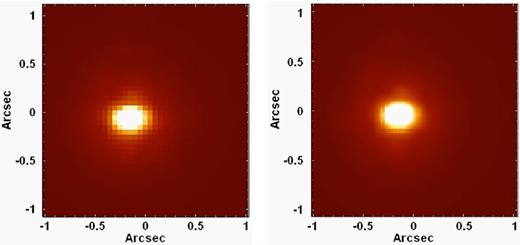

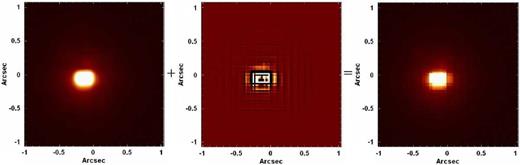

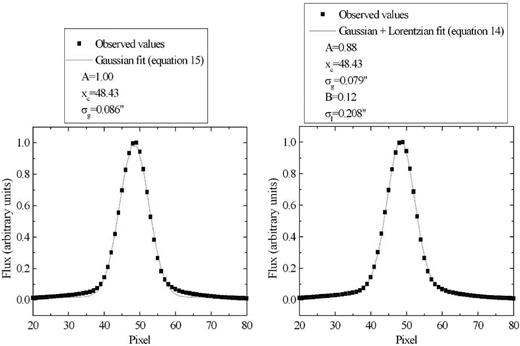

Fig. 19 shows images of a collapsed intermediate-wavelength interval of the data cube of GQ Lup, before and after the Richardson–Lucy deconvolution, using a real PSF (corresponding to an image of the data cube in an intermediate wavelength) and 10 iterations. The measured Strehl ratios improved from Strehl = 0.08 to Strehl = 0.21. This shows that the Richardson–Lucy deconvolution resulted in a significant improvement in the spatial resolution of the data cube of GQ Lup.

Left: image of a collapsed intermediate-wavelength interval of the data cube of GQ Lup, before the Richardson–Lucy deconvolution. Right: image of the same collapsed intermediate-wavelength interval of the data cube of GQ Lup, after the Richardson–Lucy deconvolution.

One relevant topic that is worth mentioning here is the fact that, even under excellent seeing conditions, the observed Strehl ratios in NIFS data cubes are normally not higher than 0.2. We believe that these considerably low values of the Strehl ratio are not only the result of limitations in the AO, but also a consequence of the size of NIFS spatial pixels. In order to evaluate this hypothesis, we sampled Airy functions, in the J, H and K bands, in spatial pixels of 0.103 arcsec × 0.043 arcsec (the same size of NIFS spatial pixels) and calculated the Strehl ratio of the resulting images. We obtained values of ∼0.18, ∼0.30 and ∼0.54, in the J, H and K bands, respectively. This result indicates that, even if the AO technique was perfect, we could not obtain Strehl ratios higher than ∼0.18, ∼0.30 and ∼0.54 in NIFS data cubes observed in the J, H and K bands, respectively.

A SCIENTIFIC EXAMPLE: NGC 4151

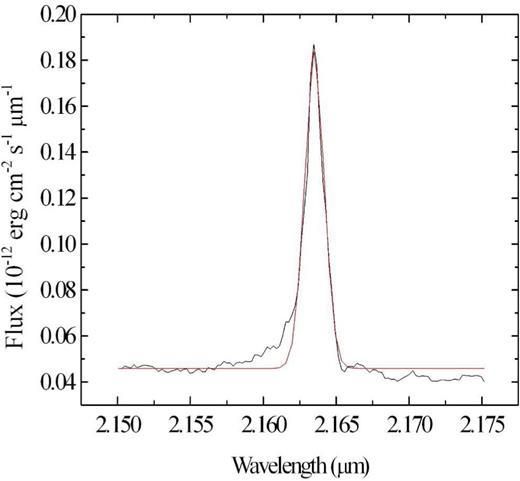

In order to show the benefits obtained with our data treatment procedure, we performed an analysis of the Brγ and H2λ21218 emission lines of the data cube of NGC 4151, in the K band, which was treated with all the data treatment techniques described in the previous sections. These data were already published in two papers by Storchi-Bergmann et al. (2009, 2010). These papers used standard methodologies and we are reanalyzing these data with our data treatment methodology, showing new relevant scientific aspects.

NGC 4151 is a SAB(rs)ab galaxy at a distance of 13.3 Mpc. Since it is the closest Seyfert 1 galaxy, this object is also one of the most studied AGNs. Osterbrock & Koski (1976) classified this galaxy as a Seyfert 1.5, as it has both broad permitted lines and strong narrow forbidden lines. NGC 4151 was in the list of objects analysed by Seyfert (1943); however, the first detailed study of the Seyfert nucleus of this galaxy was made by Ulrich (1973), who demonstrated the existence of, at least, four distinct clouds in the central 300 pc.

Evans et al. (1993) analysed [O iii] λ5007 and Hα + [N ii] λλ6548, 6583 images of the nuclear region of NGC 4151, obtained with the Hubble Space Telescope (HST). The authors concluded that the Hα + [N ii] λλ6548, 6583 emission comes from an unresolved nuclear point-like source, probably associated with the BLR of the AGN. On the other hand, the [O iii] λ5007 emission, associated with the narrow-line region (NLR), comes from clouds distributed under the form of a bicone. The authors also verified that the bicone has a projected opening angle of 75° ± 5° and extends along a position angle of 60° ± 5°.

By analysing images and spectra from the HST, Hutchings et al. (1998) determined velocities of individual clouds in the NLR of NGC 4151 and concluded that the model of outflows along the bicone is the most appropriate to describe the observed behaviour. The authors also concluded that the edge of the ionization cone must be close to the line of sight. Crenshaw et al. (2000) and Das et al. (2005) also analysed data from the HST of the nuclear region of NGC 4151 and elaborated models of biconical outflows. They concluded that the main aspects of the ionized-gas kinematics in the NLR of this galaxy could be reproduced by these models.

Knop et al. (1996) analysed spectroscopic data of NGC 4151 in the near-infrared (1.24-1.30 μm) and identified individual velocity components for the Paβ, [Fe ii] λ12567, and [SI X] λ12 524 emission lines, which is consistent with the presence of individual clouds.

Storchi-Bergmann et al. (2009) verified that the intensity distributions of the H, He, [S iii], [P ii], [Fe ii] and [S iii] emission lines have a morphology of a bicone, as expected from previous studies. The intensity distribution of H2, on the other hand, is completely different, as almost no emission of H2 was detected along the bicone, possibly due to the destruction of the H2 molecules in this area. The authors proposed that the H2 emission is probably a tracer of a large molecular gas reservoir, which feeds the AGN. Storchi-Bergmann et al. (2010) identified three kinematical components for the ionized gas: an extended emission, with a systemic velocity, in a circular region around the nucleus; an outflow component along the bicone; a component due to the interaction of the radio jet with the disc of the galaxy.

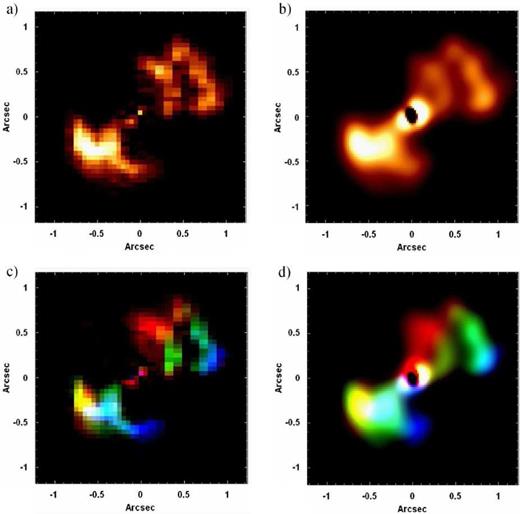

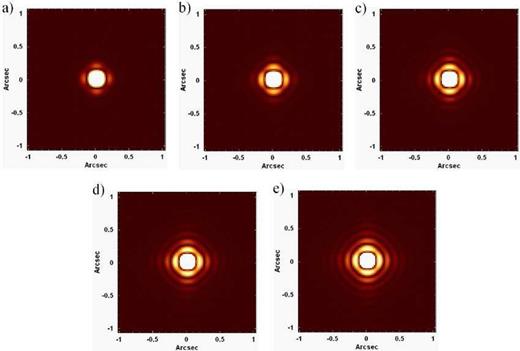

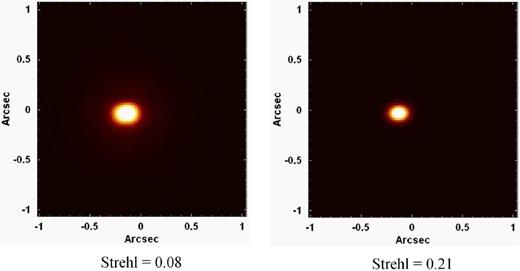

In order to evaluate the effects of our data treatment procedure in the analysis of the data cube of NGC 4151, first of all, we constructed images of the Brγ emission line (with the adjacent spectral continuum subtracted) from the data cubes of NGC 4151 with and without the use of our data treatment procedure. We also elaborated RGB compositions (based on the radial velocity values) for the images obtained. The results are shown in Fig. 20. All the images reveal a biconical morphology for the NLR of this galaxy, as observed by many previous studies (Evans et al. 1993; Hutchings et al. 1998; Crenshaw et al. 2000; Das et al. 2005; Storchi-Bergmann et al. 2009, 2010). However, the visualization of this morphology is more difficult in the Brγ image from the non-treated data cube. Our data treatment procedure not only improved the visualization of the bicone, but also made it easier to detect even some individual ionized-gas clouds, which are much harder to identify (some of them are almost undetectable) in the non-treated data cube.

(a) Image of the Brγ emission line from the non-treated data cube of NGC 4151. (b) Image of the Brγ emission line from the treated data cube of NGC 4151. (c) RGB composition for the image shown in (a), with the colours blue, green and red representing the velocity ranges −482 km s−1 ≤ Vr ≤ −158 km s−1, −128 km s−1 ≤ Vr ≤ 167 km s−1 and 196 km s−1 ≤ Vr ≤ 520 km s−1, respectively.

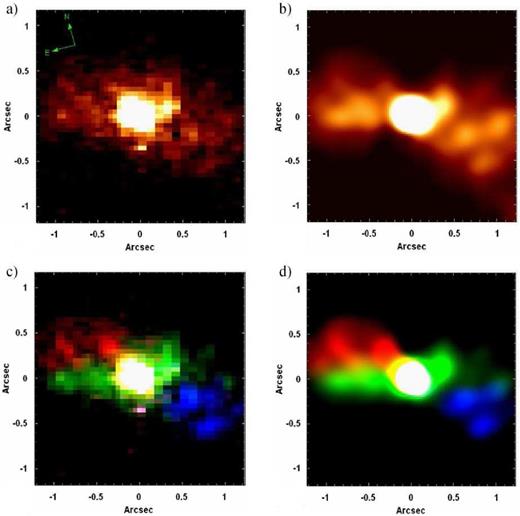

We retrieved an [O iii] image of the nuclear region of NGC 4151, obtained with Wide-Field Planetary Camera 2 (WFPC2) of the HST (this image was analysed by Hutchings et al. 1998). Fig. 21 shows a comparison between this [O iii] image and the Brγ image obtained from the treated data cube of NGC 4151. We can see that, although our spatial resolution is not as high as the spatial resolution obtained with the HST (as expected), the improvement provided by our data treatment procedure is so high that many of the ionized-gas clouds visible in the [O iii] image are also very clearly detected in the Brγ image from the treated data cube. Some pairs of clouds (15 + 17, 6 + 11 and 7 + 8), however, remained blended in the Brγ image. Table 1 shows a comparison between the ionized-gas clouds identified by Hutchings et al. (1998) and the ones identified in the Brγ image from the treated data cube.

Left: [O iii] image of the nuclear region of NGC 4151, with the same FOV of NIFS, obtained with WFPC2 of the HST. The identification numbers of the clouds are the same ones used by Hutchings et al. (1998). Right: image of the Brγ emission line from the treated data cube of NGC 4151, with the detected ionized-gas clouds identified.

Comparison between the ionized-gas clouds in the nuclear region of NGC 4151 identified using NIFS data and HST data (Hutchings et al. 1998, using HST data).

| Identification of the cloud . | Identification of the cloud . | Radial velocity obtained . | Radial velocity obtained by . |

|---|---|---|---|

| in the image of Brγ . | in the [O iii] image, made . | using NIFS data (km s−1) . | Hutchings et al. (1998), using . |

| . | by Hutchings et al. (1998) . | . | HST data (km s−1) . |

| A | 22 | −238 ± 2 | −260 ± 40 |

| B | 23 | −222 ± 2 | −213 ± 3 |

| C | 19 | −173 ± 5 | −126 ± 32 |

| D | 15 + 17 | −18 ± 7 | −210 ± 12 (cloud 15) |

| −210 ± 11 (cloud 17) | |||

| E | 26 | −200 ± 9 | −340 ± 20 |

| F | 20 | 17 ± 5 | 37 ± 8 |

| G | 1 | 222 ± 17 | 223 ± 9 |

| H | 6 + 11 | 36 ± 2 | 12 ± 21 (cloud 6) |

| 20 ± 18 (cloud 11) | |||

| I | 9 | 24 ± 2 | 60 ± 18 |

| J | 12 | 122 ± 11 | 100 ± 85 |

| K | 7 + 8 | 213 ± 8 | 235 ± 70 (cloud 7) |

| 311 ± 47 (cloud 8) |

| Identification of the cloud . | Identification of the cloud . | Radial velocity obtained . | Radial velocity obtained by . |

|---|---|---|---|

| in the image of Brγ . | in the [O iii] image, made . | using NIFS data (km s−1) . | Hutchings et al. (1998), using . |

| . | by Hutchings et al. (1998) . | . | HST data (km s−1) . |

| A | 22 | −238 ± 2 | −260 ± 40 |

| B | 23 | −222 ± 2 | −213 ± 3 |

| C | 19 | −173 ± 5 | −126 ± 32 |

| D | 15 + 17 | −18 ± 7 | −210 ± 12 (cloud 15) |

| −210 ± 11 (cloud 17) | |||

| E | 26 | −200 ± 9 | −340 ± 20 |

| F | 20 | 17 ± 5 | 37 ± 8 |

| G | 1 | 222 ± 17 | 223 ± 9 |

| H | 6 + 11 | 36 ± 2 | 12 ± 21 (cloud 6) |

| 20 ± 18 (cloud 11) | |||

| I | 9 | 24 ± 2 | 60 ± 18 |

| J | 12 | 122 ± 11 | 100 ± 85 |

| K | 7 + 8 | 213 ± 8 | 235 ± 70 (cloud 7) |

| 311 ± 47 (cloud 8) |

Comparison between the ionized-gas clouds in the nuclear region of NGC 4151 identified using NIFS data and HST data (Hutchings et al. 1998, using HST data).

| Identification of the cloud . | Identification of the cloud . | Radial velocity obtained . | Radial velocity obtained by . |

|---|---|---|---|

| in the image of Brγ . | in the [O iii] image, made . | using NIFS data (km s−1) . | Hutchings et al. (1998), using . |

| . | by Hutchings et al. (1998) . | . | HST data (km s−1) . |

| A | 22 | −238 ± 2 | −260 ± 40 |

| B | 23 | −222 ± 2 | −213 ± 3 |

| C | 19 | −173 ± 5 | −126 ± 32 |

| D | 15 + 17 | −18 ± 7 | −210 ± 12 (cloud 15) |

| −210 ± 11 (cloud 17) | |||

| E | 26 | −200 ± 9 | −340 ± 20 |

| F | 20 | 17 ± 5 | 37 ± 8 |

| G | 1 | 222 ± 17 | 223 ± 9 |

| H | 6 + 11 | 36 ± 2 | 12 ± 21 (cloud 6) |

| 20 ± 18 (cloud 11) | |||

| I | 9 | 24 ± 2 | 60 ± 18 |

| J | 12 | 122 ± 11 | 100 ± 85 |

| K | 7 + 8 | 213 ± 8 | 235 ± 70 (cloud 7) |

| 311 ± 47 (cloud 8) |

| Identification of the cloud . | Identification of the cloud . | Radial velocity obtained . | Radial velocity obtained by . |

|---|---|---|---|

| in the image of Brγ . | in the [O iii] image, made . | using NIFS data (km s−1) . | Hutchings et al. (1998), using . |

| . | by Hutchings et al. (1998) . | . | HST data (km s−1) . |

| A | 22 | −238 ± 2 | −260 ± 40 |

| B | 23 | −222 ± 2 | −213 ± 3 |

| C | 19 | −173 ± 5 | −126 ± 32 |

| D | 15 + 17 | −18 ± 7 | −210 ± 12 (cloud 15) |

| −210 ± 11 (cloud 17) | |||

| E | 26 | −200 ± 9 | −340 ± 20 |

| F | 20 | 17 ± 5 | 37 ± 8 |

| G | 1 | 222 ± 17 | 223 ± 9 |

| H | 6 + 11 | 36 ± 2 | 12 ± 21 (cloud 6) |

| 20 ± 18 (cloud 11) | |||

| I | 9 | 24 ± 2 | 60 ± 18 |

| J | 12 | 122 ± 11 | 100 ± 85 |

| K | 7 + 8 | 213 ± 8 | 235 ± 70 (cloud 7) |

| 311 ± 47 (cloud 8) |