Abstract

This work approaches the Michaelis-Menten model for enzymatic reactions at the nanoscale, focusing on the quasi-stationary state of the process. The entropy and the kinetics of the stochastic fluctuations are studied to gain new understanding of the catalytic reaction. The treatment begins with a state space describing an initial amount of substrate and enzyme-substrate complex molecules. Using the van Kampen expansion, this state space is split into a deterministic one for the mean concentrations involved and a stochastic one for the fluctuations of these concentrations. The probability density in the fluctuation space displays a behavior that can be described as a rotation, which can be better understood using the formalism of stochastic velocities. The key idea is to consider an ensemble of physical systems that can be handled as if they were a purely conceptual gas in the fluctuation space. The entropy of the system increases when the reaction starts and slightly diminishes once it is over, suggesting: 1. The existence of a rearrangement process during the reaction. 2. According to the second law of thermodynamics, the presence of an external energy source that causes the structure of the enzyme to vibrate, helping the catalytic process. For the sake of completeness and of a uniform notation throughout this work and the ones referenced, the initial sections are dedicated to a short examination of the master equation and of the van Kampen method for separating the problem into deterministic and stochastic parts. A Fokker-Planck equation (FPE) is obtained for the latter part, which is then used as grounds to discuss the formalism of stochastic velocities and the entropy of the system. The results are discussed based on the references cited in this work.


Introduction

The Michaelis-Menten catalysis model is routinely used in biochemistry, as it describes the reaction velocity, defined as the time derivative of the product. The equation for this velocity was obtained by Leonor Michaelis and Maud Menten in 1913 [1] and was preceded by the work of Victor Henri [2], who published in 1903 an article proposing that the basis for explaining the phenomenon of catalysis could be a reversible reaction between an enzyme (E) and a substrate (S), to produce an enzyme-substrate complex (ES), from which an irreversible reaction could yield a product (P) and a free enzyme.

The conditions of validity of the algebraic equation resulting from this model have been widely studied [3, 4], and a variety of methods have been used to study the dynamics of the reaction [5–7]. From the point of view of stochastic processes, it is worth mentioning A. F. Bartholomay [8], who formulated the problem of chemical reactions in terms of a probability density that is a function of time and of the concentrations of substrate and enzyme-substrate complex. He obtained the corresponding master equation and demonstrated that the time evolution of the mean concentrations matches the non-linear differential equations found in textbooks of chemical kinetics. Sandra Hasstedt [9] studied the same problem with two-valued variables {0, 1} that indicate the presence or absence of a single enzyme molecule. Arányi and Thöt [10] developed a similar approach for states with zero or one enzyme molecule but an unlimited number of substrate molecules. After 1990, advances in technology and in measurement techniques using Raman spectroscopy and methods of photophysics and photochemistry [11–13] made the study of random fluctuations a necessity. In the twenty-first century the study of stochastic systems has proliferated. The authors of [14–16] drew attention to the fact that, at the small scales found inside the cell, enzymes are subject to random displacements due to Brownian motion, which change the reaction rates. After noting that the number of proteins is also very small, they questioned the description of reactions based on the continuous flow of matter and proposed a formulation in terms of discrete stochastic equations. Puchaka and Kierzek [17] suggested a method named the 'maximal time step method', aimed at the stochastic simulation of systems composed of metabolic reactions and regulatory processes involving small quantities of molecules. Turner et al [18] reviewed the efforts to include the effects of fluctuations in the structural organization of the cytoplasm and the limited diffusion of molecules due to molecular aggregation, and discussed the advantages of these for the modelling of intracellular reactions. In 2008 Valdus Saks et al [19] showed that cells have a highly compartmentalized inner structure, so they should not be considered simple bags of protein where enzymes diffuse as a gas. This type of work has also inspired drug-design specialists, who have initiated studies of the compartment sizes required for better substrate processing. One of these studies analyzed pairs of compartments in cyanobacteria, named α-carboxysome and β-carboxysome, with dimensions of the order of nanometers [20]. These elements lead us to maintain our position that the analysis of random fluctuations of substance concentrations is relevant in biochemical systems.

While there are many enzyme reactions that can be described by the Michaelis-Menten model, it is better suited for cases that follow two conditions:

- 1. The number of substrate species that can bind to an enzyme is one.

- 2. The system does not exhibit cooperativity, so the curve of the reaction velocity as a function of substrate concentration has a hyperbolic shape.

Random phenomena are expected to take on greater importance when experiments are carried out at the nanoscale; it is therefore convenient to thoroughly understand the role of random behavior in cases where the number of reactants is of the order of a few hundreds or thousands. When the van Kampen method is applicable, the system under study can be split into two spaces: one for the deterministic solutions of the means of the physical variables, and another for the random fluctuations that occur around these mean values. Among the results found so far is the fact that the probability density of the random fluctuations can be described adequately by a Gaussian distribution. In summary, each physical system has a state space associated with it, such that a point in the state space corresponds to a specific set of values for all the system variables.

The objective of this work is to calculate the entropy of the fluctuations and to present kinematics that describe the behavior of these fluctuations from the point of view of their state space, which we call the fluctuation space. We introduce various stochastic velocities and take advantage of two of these to describe the regions of higher and lower probability. It will be shown that, during the course of the chemical reaction, an entropy of fluctuation arises that presents two characteristics:

- 1. The initial increment is positive, which guarantees the spontaneous nature of the reaction.

- 2. There is a subsequent decrease in entropy, which in turn reveals two aspects:

- a. There exists a source of energy in the process.

- b. This decrease in entropy implies that the heat capacity at constant pressure, $C_p$, is negative during catalysis. This result has been confirmed in previous literature.

The stochastic velocities emerged as part of the efforts to understand quantum phenomena as a probabilistic problem [21–24]. However, the mathematical formalism is valid for any stochastic phenomenon that can be described by diffusion equations. These velocities have been useful to one of the authors of this work in studying active Brownian motion [25], and they are now applied to the field discussed in this section.

This article is organized as follows:

We begin by defining the physical system and presenting the results of a simulated reaction using the Gillespie algorithm. In the following section, based on the graphs obtained from it, we review the theory developed by Bartholomay, which is used to split the state space in two: one for the average concentrations and another for their fluctuations (the latter receiving the name of fluctuation space). In this same section we clarify what is understood by a state of equilibrium in this work, and how it differs from the stationary state under study.

In the next section we analyze the behavior of the state points in the fluctuation space obtained previously, briefly review the stochastic velocities formalism, and obtain the expression for the entropy of this system. We pay special attention to the form of these velocities in time-dependent Ornstein-Uhlenbeck processes. We examine what it means to reach a state of equilibrium in the simulated reaction, and the behavior of the probability density of the state points in the fluctuation space during the quasi-stationary state.

We then return to the discussion of the entropy, estimating its value at different points in time of the reaction. We find that, during the evolution of the reaction, the Michaelis-Menten model is capable of describing an expected decrease of the entropy of the system.

We close this article by discussing the results obtained and our conclusions.

Physical system

The physical system under consideration is the chemical reaction posed in the introduction. Before the reaction starts, enzyme and substrate molecules exist without interacting within a fluid that serves as a medium in thermodynamic equilibrium. At the initial time the system undergoes a change that starts the reaction, an event that could be, for example, the stirring of the fluid containing the enzyme and substrate molecules. From then on, the system undergoes the catalytic reaction until all substrate molecules have been depleted.

We started by simulating a reaction using the Gillespie algorithm [26]. The numbers of enzyme (E), substrate (S), enzyme-substrate complex (ES) and product (P) molecules are under observation. One hundred realizations are carried out with the following initial conditions: E = 100, S = 4900, ES = 0, P = 0. The reaction rates were taken from the work by Weilandt et al [27], selecting the special case where the second reaction step is irreversible. The notation used in this work for the reaction rates is presented in table 1.

Table 1. Reaction rates used in the simulation.

| Parameter | Value | Units |
|---|---|---|
| k1 | 1.52 × 10^5 | |
| k2 | 10 | |
| k3 | 22 | |
| r | 6.5579 × 10^−5 | |
| kM | 2.105 × 10^−4 | M |

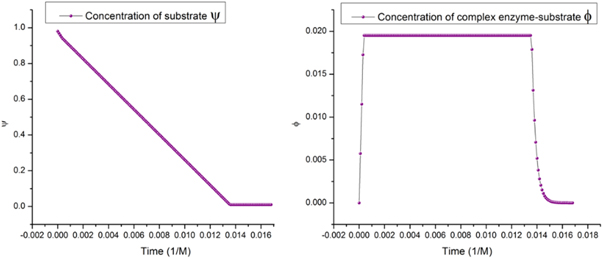
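The simulation described above can be sketched with a direct-method Gillespie loop. This is a minimal illustration and not the authors' code: the function name `gillespie_mm` is hypothetical, and the per-molecule binding constant `c1 = 1.0` is an assumed value, since converting the tabulated bimolecular rate into a stochastic rate requires a system size that is not stated here. The unbinding and catalytic constants use the values 10 and 22 listed in table 1.

```python
import random


def gillespie_mm(e0=100, s0=4900, c1=1.0, c2=10.0, c3=22.0, seed=0):
    """One realization of E + S <-> ES -> E + P (direct method).

    c1 is an ASSUMED per-molecule binding constant (illustrative only);
    c2 and c3 are the unbinding and catalytic constants.
    Returns a list of (t, E, S, ES, P) tuples, one per reaction event.
    """
    rng = random.Random(seed)
    t, e, s, es, p = 0.0, e0, s0, 0, 0
    history = [(t, e, s, es, p)]
    while True:
        a1 = c1 * e * s   # propensity of binding: E + S -> ES
        a2 = c2 * es      # propensity of unbinding: ES -> E + S
        a3 = c3 * es      # propensity of catalysis: ES -> E + P
        a0 = a1 + a2 + a3
        if a0 == 0.0:     # no substrate and no complex left
            break
        t += rng.expovariate(a0)   # exponentially distributed waiting time
        u = rng.random() * a0      # selects which reaction fires
        if u < a1 or es == 0:
            e, s, es = e - 1, s - 1, es + 1
        elif u < a1 + a2:
            e, s, es = e + 1, s + 1, es - 1
        else:
            e, es, p = e + 1, es - 1, p + 1
        history.append((t, e, s, es, p))
    return history
```

Averaging the ES count over many seeds, for example one hundred as in the text, would give curves comparable in spirit to the averaged profile discussed for the quasi-stationary state.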

The simulation results were data structures recording the number of molecules of each species together with the time between reactions. An example of the time evolution of E and ES as the system progresses towards a stationary state is given in figure 1.

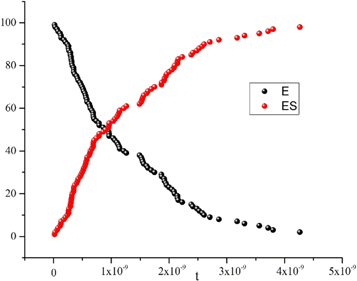

Figure 1. Time evolution of the number of enzyme molecules (black) and ES complex (red) reaching stationary states. One realization with the Gillespie algorithm. Initial conditions: E = 100, S = 4900, ES = 0, P = 0.

We found that as the reaction progresses, the number of free enzymes decreases to almost zero because they have transitioned to the enzyme-substrate complex state. Once in this state, the quantity of ES complexes remains almost constant in time. The amount of S decreases as it is consumed to form P, and when the process nears depletion of S the same occurs to ES, returning the amount of free enzyme E to its initial value. The evolution of E and ES is shown in figure 2.

Figure 2. Time evolution of the number of enzyme molecules (black) and ES complexes (red). The number of ES complexes goes to zero. One realization with the Gillespie algorithm. Initial conditions: E = 100, S = 4900, ES = 0, P = 0.

Between the initial and final stages there is a stage called the quasi-stationary state of the chemical reaction. This is presented in figure 3 and is the regime that will be addressed in greater detail in this work.

Figure 3. The number of ES complexes is almost constant. This is the state considered as stationary in this work. Average over 100 realizations with the Gillespie algorithm. Initial conditions: E = 100, S = 4900, ES = 0, P = 0.

Figures 1 and 2 show the stochastic nature of this process, and these random fluctuations are the focal point of our work. An adequate treatment for this kind of problem was developed in the past; it is presented in the section below.

One of the results obtained predicts that the profile of the mean of the ES complex is a decreasing curve. This occurs when the product is reaching its constant value and the amount of substrate is nearly depleted. We will also demonstrate that this is what is called the state of equilibrium.

Master equation and the Van Kampen omega expansion

The usual mathematical treatment starts from a process in which a given number of enzyme molecules and a given number of substrate molecules react, first to form an enzyme-substrate complex in a reversible reaction, followed by an irreversible reaction that forms a product plus a free enzyme. We model this system through four quantities: the numbers of enzyme particles, substrate particles, enzyme-substrate complexes, and product particles present at a given time $t$.

Two laws of conservation are followed:

- 1. The number of enzyme molecules, free or bound in a complex, is conserved at any given time and can be described by:

- 2. The number of substrate molecules, whether free, bound in a complex, or converted into product, is conserved at any given time and can be described by:
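As a hedged illustration, the two conservation laws can be written in a common notation. The symbols $N_E$, $N_S$, $N_{ES}$, $N_P$ for the molecule numbers and $E_0$, $S_0$ for the initial amounts are assumptions introduced here and may differ from the notation of the original equations.

```latex
% Assumed notation: N_X(t) is the number of molecules of species X at time t.
\begin{align}
  N_E(t) + N_{ES}(t) &= E_0, \\
  N_S(t) + N_{ES}(t) + N_P(t) &= S_0 .
\end{align}
```

With four quantities and two constraints, only two variables remain independent, which is why the substrate and complex numbers suffice as state variables.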

From above follows that there are only two independent variables, therefore  are taken as the state variables that evolve with time, and to introduce

are taken as the state variables that evolve with time, and to introduce  as the probability that there are

as the probability that there are  substrate particles and

substrate particles and  enzyme-substrate complexes at time

enzyme-substrate complexes at time  From now on these will be called

From now on these will be called  to ease reading and to connect with the notation used in [28]. The time evolution equation of

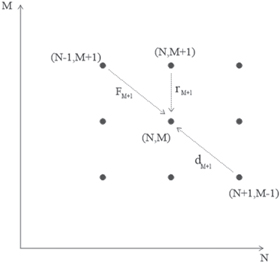

to ease reading and to connect with the notation used in [28]. The time evolution equation of  is obtained on the basis of three transitions that can occur in the state space representable in a two-dimensional plane, as shown in figure 4.

is obtained on the basis of three transitions that can occur in the state space representable in a two-dimensional plane, as shown in figure 4.

Figure 4. State space. The number of complexes M versus the number of substrates N. The arrows show the transitions needed to build the master equation.

The probability of the state $(N, M)$ at a slightly later time can be calculated from the conservation of probability. Each term consists of two factors, each being the probability of an independent event. For instance, the term for a transition from a neighboring state to $(N, M)$ during a short time interval is the product of the probability that the system is in the neighboring state at time $t$ and the probability that the transition leads to $(N, M)$. A similar argument applies to each of the three transitions drawn in figure 4. There also exists the passive transition, in which the system is already in the state $(N, M)$ at time $t$ and stays in that state during the time interval. The general formulation can be consulted in [28]. The resulting master equation is

where

with step operators

The van Kampen omega expansion allows one to separate the state space represented in figure 4 into two distinct spaces, one for the deterministic side of the problem and another for the random fluctuations. For this purpose, the following intensive variables are defined:

It is important to note that, while it is common practice to define concentration as the number of molecules divided by the volume, in this work it is defined with respect to the total number of reactants in the system. The two ratios differ by a constant factor.

One pair of these variables represents the deterministic behavior (also called the macroscopic description) of the particle densities (concentrations), and the other pair represents their respective random fluctuations.

The step operator acts as shown in equation (6) below:

The action of this step operator thus produces a shift in its argument, and there is an analogous expression for the other step operator. For an arbitrary function whose second derivatives exist, the Taylor expansion gives the following approximation for the operators:

These will be used in expression (4), along with transition rates

If one seeks a probabilistic treatment with a well-defined probability density, in the sense that it is non-negative for every time $t$ throughout the fluctuation space, the only option is to truncate (7) at second order. Higher-order expansions allow working with statistical moments or cumulants, but the pseudoprobability being used is not well defined; non-negativity is only recovered if all the terms in the series are used, in other words, if the step operator is of the form shown in the expression below:

The other three operators involved would also need analogous expressions. In other words, according to the Pawula theorem, the expansion should stop after the first term or after the second term. Otherwise, it must contain an infinite number of terms for the solution of the equation to be interpretable as a probability density function [29].

The transition rates are usually introduced by means of the law of mass action, resulting in

with the reaction rates given by the Arrhenius law. The description in the state space is recovered from

Substituting (5) in (8) and rescaling results in

Also, the probability now has the following functional dependence for every pair of state variables:

Substituting (7) and (9) to (11) in (4), the right-hand side of the master equation (3) takes the following form:

The left-hand side is also rewritten as:

Substituting (13) and (14) in the master equation, and comparing terms with like coefficients it is possible to obtain:

For

For

The equations for the deterministic part of the problem, also called the macroscopic part, can be obtained from the first of these expressions, and the partial differential equation that allows the study of the random fluctuations from the second. These procedures are widely covered in the literature [24]. From here onwards we use them with the notation initially proposed in this work, as was done in [28].

Deterministic equations

The equations for the mean substrate concentration and the mean concentration of enzyme-substrate complex that describe the macroscopic part are:

This approach is versatile enough to be of use at any enzyme-substrate ratio, since these differential equations can be solved numerically for different initial conditions; but it will be used with the usual proportions of reactants found in in-vitro experiments, where the initial substrate concentration is much greater than the initial enzyme concentration. The logic behind this is that smaller quantities of enzyme can catalyze much larger quantities of substrate, thus it is a topic of efficiency; however, this is not the only situation that can be studied in the laboratories. Albe et al [30] report the progress of a chemical reaction with different enzyme-substrate ratios, including cases where these ratios are inverted, so that the amount of enzyme exceeds that of the substrate.

Equations (17) take a very practical form after multiplication by a suitable factor and the definition of a rescaled evolution parameter. The resulting expressions are:

where $r$ is an efficiency parameter that measures the rate of dissociation of the ES complex that is not transformed into product.

Quasi-stationary state

The quasi-stationary state of interest in in-vitro experiments is obtained through the condition that the density of enzyme-substrate complex changes very little over time, which is expressed mathematically as a vanishing time derivative of that density. This is the topic of discussion in this subsection.

In terms of the densities, the conservation laws take the form of

where the two additional quantities are the densities of the enzyme and the product, respectively. It is possible to demonstrate that the time evolution equations can be obtained through the following expressions:

Introducing the quasi-stationary condition in the time evolution equation of the enzyme-substrate complex density in (17) results in

The conservation laws yield a relation such that

where $k_M = (k_2 + k_3)/k_1$ receives the well-known name of the Michaelis-Menten constant.

Denoting the rate of growth of the product density as the reaction velocity, one obtains

In-vitro experiments are performed with amounts on the order of micromoles. In such cases the observed behavior is a slow decrease of the two mean concentrations. It is then that the quasi-stationary condition is applicable.

From the corresponding deterministic equation in (18) results

One can obtain the following expression for the mean concentration of enzyme-substrate complex:

Using the definition of the reaction velocity and the time evolution of the product density in (20), the demonstration of the following expression is straightforward.

The usual notation is recovered by simply rewriting the mean substrate concentration as $[S]$.
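The quasi-stationary approximation can be checked numerically. The sketch below integrates standard mass-action equations for the substrate and complex concentrations with a fourth-order Runge-Kutta step and compares the complex concentration with the Michaelis-Menten estimate. The function names, the unscaled form of the equations, and the concentrations `s0 = 4.9e-5` and `e0 = 1e-6` (in M) are assumptions chosen for illustration; the rate values are those of table 1, with 1.52 × 10^5 taken as the binding rate.

```python
K1 = 1.52e5            # binding rate (assumed to be the first entry of table 1)
K2 = 10.0              # unbinding rate
K3 = 22.0              # catalytic rate
KM = (K2 + K3) / K1    # Michaelis-Menten constant, about 2.105e-4 M


def rhs(s, c, e0):
    """Mass-action rates of change of substrate s and complex c."""
    bind = K1 * s * (e0 - c)   # free enzyme is e0 - c by conservation
    return -bind + K2 * c, bind - (K2 + K3) * c


def integrate(s0, e0, t_end, dt=1e-3):
    """Classical RK4 integration starting from s = s0, c = 0."""
    s, c = s0, 0.0
    for _ in range(int(round(t_end / dt))):
        a_s, a_c = rhs(s, c, e0)
        b_s, b_c = rhs(s + 0.5 * dt * a_s, c + 0.5 * dt * a_c, e0)
        g_s, g_c = rhs(s + 0.5 * dt * b_s, c + 0.5 * dt * b_c, e0)
        d_s, d_c = rhs(s + dt * g_s, c + dt * g_c, e0)
        s += dt * (a_s + 2 * b_s + 2 * g_s + d_s) / 6
        c += dt * (a_c + 2 * b_c + 2 * g_c + d_c) / 6
    return s, c


def qssa_complex(s, e0):
    """Quasi-stationary estimate of the complex concentration."""
    return e0 * s / (KM + s)


s, c = integrate(s0=4.9e-5, e0=1e-6, t_end=1.0)
print(c, qssa_complex(s, 1e-6))
```

With substrate in large excess over enzyme, the two printed values should agree closely once the initial transient has passed, which is the regime the quasi-stationary condition describes.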

Equilibrium state

It is common practice to use the terms 'stationary' and 'equilibrium' interchangeably, but it is imperative to establish a clear distinction between them. This section is dedicated to this purpose, showing the difference between a stationary state achieved in in-vitro experiments and the equilibrium state. The latter corresponds to the mathematical condition:

such that the following equations hold:

The algebraic solution is the vanishing of both concentrations; it corresponds to the complete consumption of the substrate and enzyme-substrate complex. It is for this reason that the state of equilibrium is only attainable when all the substrate has been consumed, such that only the initial amounts of free enzymes and the product remain in the system.

It is possible to study the stability of the equilibrium state. Linearizing the differential equations around this state results in:

To ease reading, we introduce a shorter notation:

Thus, the eigenvalues of the matrix above are given as:

Since the transition rates and initial conditions are positive by definition, both eigenvalues are negative. The fundamental solutions generate the most general solution:

which will always converge towards the equilibrium state. This mathematical expression explains the profile of the curve for the ES complex seen in figure 1 and the decreasing stage shown in figure 14.
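The stability argument can be illustrated with unscaled mass-action equations. The symbols $\delta s$ and $\delta c$ for small deviations of the substrate and complex densities, $e_0$ for the total enzyme density, and the abbreviations $a$ and $b$ are assumptions introduced here; the authors' scaled variables and shorthand may differ.

```latex
% Linearization about the state with no substrate and no complex (assumed notation).
\begin{align}
  \frac{d}{dt}
  \begin{pmatrix} \delta s \\ \delta c \end{pmatrix}
  &=
  \begin{pmatrix} -a & k_2 \\ a & -b \end{pmatrix}
  \begin{pmatrix} \delta s \\ \delta c \end{pmatrix},
  \qquad a = k_1 e_0, \quad b = k_2 + k_3, \\
  \lambda_{\pm} &= \frac{-(a+b) \pm \sqrt{(a+b)^2 - 4 a k_3}}{2}, \\
  (a+b)^2 - 4 a k_3 &= (a-b)^2 + 4 a k_2 > 0 .
\end{align}
```

The trace $-(a+b)$ is negative and the determinant $a k_3$ is positive, so in this sketch both eigenvalues are real and negative, and every solution decays towards the equilibrium state.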

Analysis of the random fluctuations

General solution

The following equation is called the Fokker-Planck equation (FPE):

The expression above describes the probability that the fluctuation of substrate concentration and the fluctuation of enzyme-substrate complex concentration take given values at time $t$. The systematic term can be written as

where the quantity introduced is the flux of random concentration fluctuations of S and ES.

The general solution of the FPE [31] is a Gaussian function of the form:

with $\Xi$ as the self-correlation matrix, together with its inverse. This is the matrix that contains the variances of the random concentration fluctuations of S and ES, as well as their correlations.

In the case under study, the elements of the two coefficient matrices of the FPE are given by:

Given a physical quantity that is relevant to the system, its mean can be calculated by:

The time evolution equations of the mean of the fluctuations of the concentrations of S and ES take the form of:

and the time evolution equations for the self-correlation functions are:

Defining $\vec{\Xi}(t) = [\Xi_{11}(t), \Xi_{12}(t), \Xi_{22}(t)]$ and $\vec{D}(t) = [D_{11}(t), D_{12}(t), D_{22}(t)]$, the equation above can be rewritten as follows:

where

Defining the entropy of fluctuation of the concentrations of S and ES as

Substituting the general solution in the expression for the entropy (35) results in expression (36) seen below:

Given two points in time, the change in entropy between them is

so the condition on the entropy change translates into the following condition:

Expression (38) will later be utilized to confirm that the entropy of fluctuation decreases when the reaction is taking place. Expression (27) provides the mathematical form of the time dependent probability density for the fluctuation variables which, as will be shown in the numerical example in a later section, performs a clockwise turning motion as the reaction progresses. This result showcases the importance of fluctuations in the dynamics of catalysis; therefore, in-depth study of this topic is relevant.
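For a Gaussian density, the entropy of fluctuation depends on the self-correlation matrix only through its determinant. The sketch below assumes the Gibbs-Shannon form of the entropy with the Boltzmann constant set to one, which may differ from the normalization used in expressions (35) to (38); the function names are hypothetical.

```python
import math


def gaussian_entropy_2d(x11, x12, x22):
    """Differential entropy of a two-dimensional Gaussian density.

    Assumes S = -integral(P ln P) with k_B = 1, which for covariance
    matrix [[x11, x12], [x12, x22]] gives 1 + ln(2*pi) + 0.5*ln(det).
    """
    det = x11 * x22 - x12 * x12
    if det <= 0.0:
        raise ValueError("covariance matrix must be positive definite")
    return 1.0 + math.log(2.0 * math.pi) + 0.5 * math.log(det)


def entropy_change(xi_early, xi_late):
    """Entropy difference between two times; each argument is (x11, x12, x22)."""
    return gaussian_entropy_2d(*xi_late) - gaussian_entropy_2d(*xi_early)


# The sign of the change follows the ratio of the two determinants.
print(entropy_change((1.0, 0.0, 1.0), (4.0, 0.0, 4.0)))
```

Under these assumptions the entropy grows when the determinant of the self-correlation matrix grows and decreases when it shrinks, so a condition on the entropy change becomes a condition on the determinants at the two times.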

If the phenomenon is analyzed using the dynamics of stochastic velocities, the behavior observed can be better understood. Thus, the section below is dedicated to the introduction of this formalism that may prove useful to the uninitiated reader.

Stochastic velocities

The time evolution of the fluctuations can be described by the motion of a state point. It is common to assume that its study is completed once its behavior has been formulated, as we did in the previous section. We will see that it is possible to add new knowledge to better understand the behavior of the state point.

In this section we present an intuitive approach to stochastic velocities. We begin with an analysis of the difficulty found when attempting to define velocities in stochastic processes. The typical example of a stochastic process is Brownian motion, which describes the behavior of a micrometric mass floating inside a liquid at a given temperature. Seen through a modern video camera, its movement takes place in two dimensions, but for ease of writing mathematical expressions we consider only one dimension. The model treats the Brownian particle as a point mass and, because the movement is random, each position has a time dependent probability associated with it. This probability is described by a probability density function such that, for a given interval on the line of accessible positions, its integral over the interval yields the probability of the particle being found within that interval at a given time.

It can be demonstrated that this probability density follows an equation of the form shown below:

where the constant coefficient is named the diffusion coefficient. With an initial condition concentrated at a single point, the solution obtained for (39) is

In this case, the probability density evolves with time. From (40), the mean and the variance of the position can be obtained. It is important to note that the mean displacement is zero and that the standard deviation grows with time as the square root of the elapsed time. In the approach presented by Paul Langevin in 1908, Newton's second law is applied to a Brownian particle of a given mass with an external force acting upon it, plus a friction force proportional to the velocity, plus a stochastic force with the following properties:

where the strength of the noise is a constant. For technical reasons, a random quantity that displays these properties is called white noise. The fundamental dynamic law resulting from this is called the Langevin equation, and is written as shown below:

Using the method of moments, one obtains similar results for the Brownian motion. If the trajectories were plotted as functions of time, the resulting graphs would show very sharp peaks and valleys; one can therefore see intuitively that problems arise when attempting to define the displacement velocity as the time derivative of the position. Next, we examine this difficulty closely.
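The statistical properties quoted above can be checked with a short simulation. The sketch below is only an illustration under stated assumptions: it adopts the convention in which the variance of the position grows as twice the diffusion coefficient times the elapsed time, and the parameter values (`D`, `dt`, ensemble size) are arbitrary choices, not values from the paper.

```python
import numpy as np

rng = np.random.default_rng(0)
D = 0.5          # assumed diffusion coefficient (arbitrary units)
dt = 0.01        # time step
n_steps = 200
n_paths = 5000   # number of independent realizations

# Each increment is Gaussian with zero mean and variance 2*D*dt
increments = rng.normal(0.0, np.sqrt(2.0 * D * dt), size=(n_paths, n_steps))
x = np.cumsum(increments, axis=1)   # column k holds the position at time (k+1)*dt

t_final = n_steps * dt
mean_final = x[:, -1].mean()
var_final = x[:, -1].var()

# Standard deviation at t = 0.5 and t = 2.0: quadrupling the time should double it
std_ratio = x[:, 199].std() / x[:, 49].std()

print(f"mean = {mean_final:.3f}, variance = {var_final:.3f}, 2*D*t = {2 * D * t_final:.3f}")
print(f"std(t=2.0) / std(t=0.5) = {std_ratio:.3f}")
```

Up to sampling error, the mean stays near zero while the standard deviation scales with the square root of time, which is the behavior that makes the trajectories so rough.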

In mathematical terms, the Wiener process has been defined to formalize Brownian motion. Taking a particular case of the diffusion problem as a starting point, the postulated properties of the process are:

- 1. The process starts from zero at the initial time.

- 2. The process is continuous.

- 3. The process has independent increments; that is to say, the differences of the process over non-overlapping time intervals are independent random variables.

- 4. The increment of the process over any time interval is a random variable with normal distribution, with mean zero and variance proportional to the length of the interval.

In rigorous terms, equation (42) presents a difficulty that is analyzed next. Following the traditional way of defining the rate of change along a trajectory, we take the quotient of finite increments:

but from the fourth postulate the typical size of the increment grows only as the square root of the time interval, from which it follows that the quotient grows as the inverse of that square root. Therefore, the quotient diverges in the limit of a vanishing time interval; consequently, it is impossible to define the rate of change through traditional methods.
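The divergence can be made concrete numerically. The following sketch assumes the standard normalization of the Wiener process, in which the variance of an increment equals the length of the time interval; the sample size and the list of time steps are arbitrary illustrative choices.

```python
import numpy as np

rng = np.random.default_rng(1)
n_samples = 100_000

def rms_quotient(dt):
    """Root-mean-square of the finite-difference quotient dW/dt for one time step dt."""
    dW = rng.normal(0.0, np.sqrt(dt), size=n_samples)  # increment variance equals dt
    return np.sqrt(np.mean((dW / dt) ** 2))

time_steps = [1e-1, 1e-2, 1e-3, 1e-4]
quotients = [rms_quotient(dt) for dt in time_steps]

for dt, q in zip(time_steps, quotients):
    print(f"dt = {dt:.0e}   rms(dW/dt) = {q:9.2f}   1/sqrt(dt) = {1 / np.sqrt(dt):9.2f}")
```

Each tenfold reduction of the time step increases the typical quotient by roughly the square root of ten, so no finite limit exists as the step shrinks.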

To deal with the issue encountered above, instead of using the Langevin equation as it was originally formulated, it is better to consider an approach based on finite differences that avoids the use of derivatives and translates all calculations into integrals, giving rise to two types of integral calculus: that of Kiyoshi Ito and that of Ruslan Stratonovich [32]. A less known option is the one developed by Edward Nelson, which is based on a system of averages over the realizations of the stochastic processes and gives rise to the concept of stochastic velocities [22, 33]. These have been used to describe quantum phenomena from a stochastic perspective, giving rise to a line of work called stochastic mechanics. In this work we take advantage of the mathematical tools developed there and use them in our topic of interest. The condition is that the stochastic process must be describable by means of Fokker-Planck equations (FPE) [25].

When an ensemble is associated with a stochastic process, a state space of the appropriate dimension is available in which, at each time, a point corresponds to each member of the ensemble. A large number of members of the ensemble produces a cloud of points that move at random as time progresses; this idea allows the introduction of an analogy with a gas, so that the description can include some properties typical of fluids. One of these is vorticity, which tells us whether the cloud of state points tends to rotate or behaves like a fluid whose velocity is irrotational. This concept lets us analyze the manner in which the agitation of the cloud of state points occurs and, with it, establish a difference between a stationary process and a process at equilibrium. The former obeys the condition that the statistical moment of any given order must be time invariant:

while the latter also obeys the condition that each possible transition must be balanced by the transition that occurs in the opposite direction. This condition is called detailed balance. If detailed balance does not hold, the cloud of state points tends to rotate; therefore, the concept of vorticity contributes to the understanding of the dynamics of the gas cloud. Vorticity is defined as the curl of the velocity, so it is necessary to review how the velocity itself is defined.

Smoothening the trajectory using a moving average

The mean over the realizations, as originally developed by Nelson, can be understood with the concept of moving averages used in the study of time series. In this section we introduce these concepts.

Consider a stochastic process that takes place inside a state space, and a set of points that are reached by some realizations of the process at successive times. The increments between these points are shown in figure 5.

Figure 5. The state space. The neighborhood of the point under consideration is the region of interest, where we determine the state points going in or out. One arrives at the definition of the access and exit velocities if the times are taken into account. The stochastic process can jump to neighboring points, and the velocities are defined depending on the jump. Each point is reached at its own time, and the increments considered in the definitions are the displacements between these points.

Next, we explain the relations that must hold between the time intervals involved in the description; for that purpose, we continue using Brownian motion as a case study. Suppose a camera capable of registering the random movements of a state point; owing to the difference in mass, the collision of a single molecule with the Brownian particle does not produce an effect that the laboratory instrument can register. What moves the Brownian particle is the imbalance in the number of collisions that it receives because of the random fluctuations in the density of the particles that make up the surrounding medium. In this manner, the path traced by the Brownian particle, as observed by a camera recording through an optical microscope, is polygonal. However, for ease of language, we say that a collision occurs each time there is a change in the path taken by the particle of interest.

There are three time scales that are relevant in this approach:

- 1. The time required to accumulate enough collisions to cause a registerable random change in the path of the particle.

- 2. The time that passes between two successive frames captured by the camera. If this time is too short, the registered displacements may be proportional to the elapsed time, as is the case in classical mechanics; but it is more common to find displacements proportional to the square root of the elapsed time, as in the Brownian case. For the latter behavior, the time between frames must be long compared with the collision time of item 1.

- 3. The time necessary to smooth the trajectories by the moving averages method. To study the movement of the state points, the stochastic trajectories are smoothed by introducing moving averages; these are calculated over time intervals that must be long enough to include several spikes of the trajectory, as can be seen in figure 7, but also short enough that the camera registering the data cannot tell that the trajectory has been smoothed.

Therefore, a definite ordering among these three times must hold. This regime is called the coarse grain time scale, as shown in figure 6 below.

Figure 6. The three time scales involved: the time required to accumulate enough collisions to achieve a random motion; the time between two successive images, or measurements, taken by a camera or other measuring device; and the time required to calculate a moving average.

The moving average of a discrete signal is defined as the arithmetic mean of a fixed number of consecutive points, where the position of the window takes values such that the sum does not run out of the bounds of the interval. For continuous signals, the moving average is defined as the integral of the signal over the averaging window divided by the width of the window. We now set out to study the derivative of a function of time. For this purpose, we establish a set of times expressed in (45) below:

and considering finite increments of the function

with  one can write:

Rearranging and multiplying by  gives

This expression is called the coarse grain time derivative. Notice that it incorporates the sum of finite differences inside a moving average taken over the averaging interval. Once the smoothing process has been applied, the resulting trajectories can be studied using the usual tools of calculus. An illustrative example is given in figure 7.
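A minimal sketch of this smoothing procedure follows. It assumes that the coarse grain time derivative can be computed as the moving average of the finite-difference quotients, as the description above suggests; the test signal (constant drift plus Brownian noise), the window length and all names are hypothetical choices made for illustration.

```python
import numpy as np

def moving_average(signal, window):
    """Moving average; only windows that fit entirely inside the signal are kept."""
    kernel = np.ones(window) / window
    return np.convolve(signal, kernel, mode="valid")

rng = np.random.default_rng(2)
dt = 1e-3
n = 20_000
drift = 1.5                                    # assumed constant drift of the test signal
t = np.arange(n) * dt
noise = np.cumsum(rng.normal(0.0, np.sqrt(dt), size=n))
x = drift * t + noise                          # rough trajectory: drift plus Brownian noise

window = 2_000                                 # averaging interval = window * dt
raw_quotients = np.diff(x) / dt                # spiky finite-difference quotients
coarse_derivative = moving_average(raw_quotients, window)

print("spread of raw quotients:    ", raw_quotients.std())
print("spread of coarse derivative:", coarse_derivative.std())
```

Averaging the quotients over a window is equivalent to dividing the net displacement across the window by the window duration, which is why the result stays finite even though the individual quotients are very large.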

Figure 7. The result of the smoothening process is a curve without the spikes that are characteristic of the Brownian motion.

The study of smoothened stochastic functions

Points in the state space and a statistical description of their movement

Suppose a statistical ensemble of equally prepared experiments at a macro scale. Each of these carries out a realization of the stochastic process. At a given point in time, we have a cloud of points whose number is theoretically infinite. If one lets the clock run, the static image (like a photograph) is replaced by a series of images of points moving at random, similar to a swarm of mosquitoes swirling in summer. These points enter and exit the previously marked region, as seen in figure 5. The question that follows is: how many points enter or exit this region in a second? This problem is similar to counting the number of smoke particles in a given region of space.

The concept of systematic velocity, which has already been presented, measures the net number of state points that cross this region per second.

Systematic derivative and systematic velocity

We define the systematic derivative as the mean over the ensemble of all the realizations

To have an analytical representation of the previous definition, we introduce various hypotheses that lead to the Taylor series expansion. The hypotheses are:

- The stochasticity of the physical phenomenon is introduced by a source that is stationary, isotropic and homogeneous.

- The second statistical moments of the two increments shown in figure 5 are such that they follow:

The diffusion matrix can be defined as

or also as

Notice that the increments grow as the square root of the time interval, so that the average of their products, which appears in the definition above, grows linearly with the time interval. It must also be noted that the products of increments appear as coefficients of the second order derivatives of any function expanded in a Taylor series.
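To illustrate how a diffusion matrix of this kind could be estimated from data, the sketch below generates Gaussian increments with a known covariance and recovers the matrix from the ensemble average of their products. The normalization used here, in which the average product of increments equals twice the diffusion matrix times the time interval, is an assumption, as are the numerical values and variable names.

```python
import numpy as np

rng = np.random.default_rng(3)
dt = 1e-3
n_samples = 200_000

# Hypothetical diffusion matrix used to generate the increments (illustrative values)
D_true = np.array([[0.8, 0.2],
                   [0.2, 0.5]])

# Increments with zero mean and covariance 2*D*dt (assumed convention)
chol = np.linalg.cholesky(2.0 * D_true * dt)
increments = rng.standard_normal((n_samples, 2)) @ chol.T

# Ensemble average of the products of increments, divided by 2*dt
D_est = increments.T @ increments / (n_samples * 2.0 * dt)
print(D_est)
```

The typical increment here is of the order of the square root of the time step, yet the averaged products divided by the time step converge to a finite matrix, which is the scaling noted in the text.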

Working at higher orders would involve Taylor expansions with derivatives of order higher than two. Although this is mathematically possible, a restriction arises when the problem is translated into determining the probability density by means of a partial differential equation: a function that satisfies an equation of order higher than 2 ceases to be nonnegative for all positions and times, and therefore it cannot be interpreted as a probability density function.

In vector calculus, a function of position and time has a total time derivative of the form:

where the vector that multiplies the gradient is the velocity. The expression above is called the convective derivative.

This concept can be adapted to the case where the function depends on a stochastic process. This is the systematic derivative:

such that

Access, exit and diffusion velocities

In fluid dynamics there appears the phenomenon of swirls, or eddies, which cannot be understood with the systematic velocity alone; an illustrative example is that of cigar smoke rising through the air. To approach this topic, let us consider a point in the state space and the two displacements associated with it, as shown in figure 5. We have the following relations:

If a state point is contained in the vicinity under consideration, it is understood that its displacement away from that vicinity during a time interval is related to the exit of state points. Likewise, the displacement towards the vicinity, also during a time interval, is related to the entry of state points into the vicinity.

Let us suppose we track a state point whose route is as follows:

- At instant  the state point is located at

- At instant  the state point is located at

Separating by components and working them individually, one can obtain

We now consider a physical quantity of the system, expressed as a function of the state point. To treat the displacement associated with the exit of state points, the function is expanded in a Taylor series as shown below:

where repeated indices indicate a sum.

In the same fashion, one can study the displacement associated with the entry of state points, resulting in:

The difference between the two expansions is:

One finds that the following relations hold:

so that (59) can be written as:

Multiplying by  and calculating the mean results in

From (64), the coefficient of the first derivative with respect to position is the $i$th component of the systematic velocity, which has been found previously. We also find the following:

Also, the resulting expression is the systematic derivative of the function; thus, we have:

From (64) it is easy to identify the systematic derivative operator as:

If one takes the identity function as a special case, the expression reduces to the systematic velocity in the vicinity.

Adding (57) and (58) results in:

Rearranging, multiplying by  and averaging, one obtains:

The term that accompanies the first derivative with respect to the positions is used to define the diffusion velocity in the vicinity:

which is useful for measuring the stirring that the state point undergoes in each subset of the fluctuation space. This definition is supported by adding the systematic velocity and the diffusion velocity presented above, resulting in:

which can be interpreted as a total velocity measuring the mean path of state points between two positions during the time interval. In that case

so that the cigar smoke phenomenon can be described in terms of two velocities, one of translation and another of swirling, for each of the vicinities in the fluctuation space.

With these conceptual tools, one can identify the operators that allow the calculation of the velocities mentioned in this work. Combining results, we find the following expression:

where

With the elements considered so far, we identify the left-hand side as the stochastic derivative, or diffusion derivative, of the function and identify the operator of the stochastic derivative as

such that

To study the exit velocity, we consider the displacement of the state point out of the vicinity during a time interval. For this purpose, we reuse the Taylor expansion given in (57) and rearrange it as:

Multiplying by  and calculating the mean

Defining the $i$-th component of the exit velocity as follows

and identifying that

thus, we have

Defining the left-hand side as the forward derivative of the function, we introduce the forward operator as:

so that the forward derivative is written as this operator acting on the function. In the case of the identity function, one obtains the $i$-th component of the exit velocity in the vicinity.

Finally, one can relate the entry velocity to the displacement of the state point into the vicinity during a time interval (see figure 5). Now consider the Taylor series expansion:

Rearranging, multiplying by  and calculating the mean:

Defining the $i$th component of the entry velocity in the vicinity as:

and identifying

It is possible to rewrite (81) as:

Defining the backward derivative operator as

and rewriting (84) as

Once again, for the identity function one obtains

which is the $i$th component of the entry velocity in the vicinity.

Combining operators

Combining the operators algebraically, one obtains the following expressions:

If we were to add (88) and (89), then multiply by

Defining

we have

Now, calculating the difference between (88) and (89) and multiplying by

such that it is convenient to define:

So (93) can be rewritten as:

The stochastic velocities provide a description at the level of vicinities such that it is possible to calculate a variety of means previously mentioned. Table 2 summarizes the previous results:

Table 2. Stochastic velocities. Notation and associated operator.

| Velocity | Notation | Operator |
|---|---|---|
| Access (entry) | | |
| Exit | | |
| Systematic | | |
| Diffusion | | |

In their current form, the usefulness of these velocities is not very clear. In the following section we will see a version of them that allows us to study the quasi-local behavior of the state points in the fluctuation space. Figures 5 and 7 lead to a description in which the idea of instantaneous velocities, used regularly in classical mechanics, has to be abandoned. In the topic under study, the concept of velocity must be associated with vicinities that are small enough, but without reducing their size to an infinitesimal area, as is standard in differential calculus.
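The meaning of these ensemble averages can be illustrated with a simulation. The sketch below is a hypothetical example, not the system studied in this work: it uses a one-dimensional stationary Ornstein-Uhlenbeck process with assumed parameters `gamma` and `D`, and estimates the exit (forward) and entry (backward) mean velocities for the members of the ensemble found inside a small vicinity. The systematic and diffusion velocities are taken here as the half sum and half difference of the two, which is an assumption about the sign convention.

```python
import numpy as np

rng = np.random.default_rng(4)
gamma, D = 1.0, 0.5          # assumed relaxation rate and diffusion coefficient
dt = 0.05                    # coarse-grained time interval
n = 400_000                  # ensemble size

sigma2 = D / gamma           # stationary variance of the process
a = np.exp(-gamma * dt)      # exact one-step decay factor
step_std = np.sqrt(sigma2 * (1.0 - a**2))

# Three consecutive positions of every ensemble member: before, now, after
x_prev = rng.normal(0.0, np.sqrt(sigma2), n)
x_now = a * x_prev + step_std * rng.standard_normal(n)
x_next = a * x_now + step_std * rng.standard_normal(n)

# Keep only the members found inside a small vicinity of x0 at the middle time
x0, half_width = 1.0, 0.1
inside = np.abs(x_now - x0) < half_width

exit_velocity = np.mean((x_next[inside] - x_now[inside]) / dt)    # forward mean
entry_velocity = np.mean((x_now[inside] - x_prev[inside]) / dt)   # backward mean
systematic_velocity = 0.5 * (exit_velocity + entry_velocity)
diffusion_velocity = 0.5 * (exit_velocity - entry_velocity)

print(exit_velocity, entry_velocity, systematic_velocity, diffusion_velocity)
```

For this stationary example the exit and entry velocities are nearly equal and opposite, so the systematic velocity is close to zero while the diffusion velocity is not; the individual trajectories never need to be differentiated.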

Stochastic velocities in a time dependent Ornstein-Uhlenbeck process

The stochastic velocities have a practical application in time dependent Ornstein-Uhlenbeck stochastic processes because they can be written in terms of the convection, diffusion and self-correlation matrices of these processes. To make the understanding of the conduct of the state point in the fluctuation space more accessible, in this section we obtain the form of these velocities for the system under consideration.

Time dependent Ornstein-Uhlenbeck processes are normally distributed, with means and self-correlation functions that change with time. The forward Fokker-Planck equation (FPE) satisfied by their probability density is written as follows:

The factor of $\tfrac{1}{2}$ frequently used when writing an FPE has been absorbed into the diffusion matrix, and the repeated indices run over the degrees of freedom of the system. The flux term is linear in the noise, as shown below:

where the matrix of coefficients is called the convection matrix.

The probability distribution that satisfies the forward FPE is given in (98)

We can identify the exit velocity with the flux term; thus we have:

so, we can rewrite the forward FPE as

On the other hand, the backward FPE that corresponds to this process takes the following form:

In a similar fashion, the backward FPE is related to the backward derivative operator and to the entry velocity, resulting in

There is an analytical solution when the diffusion velocity has zero divergence. Calculating the difference between (100) and (102) and multiplying by

The term between brackets is a magnitude with divergence of zero:

thus, it can be interpreted as a magnitude that is conserved:

where the conserved quantity is a constant that can be taken as equal to zero; the diffusion velocity is then given in terms of the diffusion matrix and the probability density:

It follows that the diffusion velocity can be obtained if the probability density is known. This is the case for time dependent Ornstein-Uhlenbeck processes.

Substituting (98) in (106) and using the short notation $G(t)=\tfrac{1}{2}y_{\alpha}(t)\left[\Xi^{-1}(t)\right]_{\alpha\beta}y_{\beta}(t)$, one obtains

where an abbreviated notation has been used. Working (107) further results in:

Calculating the derivative and using the symmetry of the self-correlation matrix, it results that

From previous results we have the next relations for the velocities:

Relation (111) leads to

Substituting this result in (110) gives:

so, the components of the entry velocity have the form:

and the components of the systematic velocity are:

With this, the set of stochastic velocities in terms of the probability density is now complete. The results above can be written in matrix notation. Table 3 displays the four stochastic velocities for time dependent Ornstein-Uhlenbeck processes:

Table 3. Stochastic velocity operators for Ornstein-Uhlenbeck processes.

| Velocity | Notation | Operator |
|---|---|---|
| Entry | | |
| Exit | | |
| Systematic | | |
| Diffusion | | |

Table 4. Values of the mass and number of molecules for enzyme and substrate.


The diffusion velocity and the systematic velocity will be used below to describe the behavior of a state point in each vicinity of the fluctuation space.
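The following sketch shows one way the four velocities could be evaluated from the convection, diffusion and self-correlation matrices of a two-dimensional process. It rests on several assumptions that are not confirmed by the text: the density is a zero-mean Gaussian, the factor of one half is absorbed into the diffusion matrix, the diffusion velocity is the diffusion matrix applied to the gradient of the logarithm of the density, and the exit velocity is the sum of the systematic and diffusion velocities. The matrices and function names are hypothetical.

```python
import numpy as np

def ou_velocities(A, D, Xi, y):
    """Stochastic velocities at point y for a zero-mean Gaussian density with covariance Xi.

    Assumed convention: dp/dt = -d_i(A_ij y_j p) + D_ij d_i d_j p.
    """
    grad_log_p = -np.linalg.solve(Xi, y)   # gradient of ln p for a Gaussian density
    exit_v = A @ y                         # flux (drift) term of the forward FPE
    diffusion_v = D @ grad_log_p           # diffusion matrix times grad(ln p)
    systematic_v = exit_v - diffusion_v    # exit = systematic + diffusion
    entry_v = exit_v - 2.0 * diffusion_v
    return entry_v, exit_v, systematic_v, diffusion_v

def vorticity(A, D, Xi):
    """Curl (out-of-plane component) of the linear systematic velocity field in 2D."""
    M = A + D @ np.linalg.inv(Xi)          # systematic velocity is M @ y
    return M[1, 0] - M[0, 1]

D = 0.5 * np.eye(2)
Xi = 0.5 * np.eye(2)                       # stationary covariance for both examples below
y = np.array([1.0, 0.0])

# Case 1: symmetric convection matrix, detailed balance holds
A_eq = -np.eye(2)
_, _, v_eq, _ = ou_velocities(A_eq, D, Xi, y)

# Case 2: antisymmetric part added to the convection matrix, detailed balance broken
A_rot = np.array([[-1.0, 0.5],
                  [-0.5, -1.0]])
_, _, v_rot, _ = ou_velocities(A_rot, D, Xi, y)

print("equilibrium:", v_eq, "vorticity =", vorticity(A_eq, D, Xi))
print("rotating:   ", v_rot, "vorticity =", vorticity(A_rot, D, Xi))
```

In the first case the systematic velocity and the vorticity vanish; in the second the systematic velocity circulates around the origin and the negative vorticity corresponds to a clockwise rotation of the cloud of state points.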

Reaching the state of thermodynamic equilibrium

The analysis of the simulated catalysis reaction allows us to determine when thermodynamic equilibrium has been reached. From expression (37), it is evident that the determinant can be used for that purpose: equilibrium is reached when the determinant, and with it the entropy, no longer changes between successive times. In numerical calculations it is possible to define a tolerance for this condition:

This is the regime called equilibrium state, and it corresponds to the moment when substrate and enzyme-substrate complex have been depleted. It is achieved at

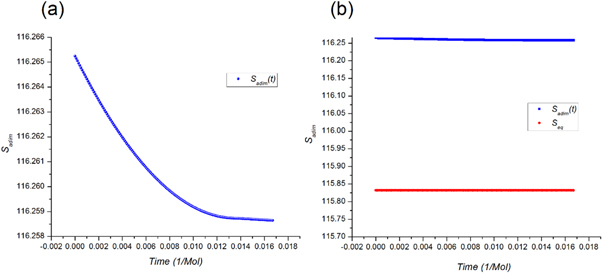

The quasi-stationary process is studied through the numerical solution of equations (32). The expressions for the time evolution of the substrate and enzyme-substrate complex presented in table 6 in section Entropy of Fluctuations are used to calculate the autocorrelation functions shown in figure 8 below.

Figure 8. At the end of the quasi-stationary state, all correlations tend to a constant value. (a) Variance of the fluctuations of the substrate concentration, which increases as the reaction progresses. (b) Variance of the fluctuations of the enzyme-substrate complex concentration, which diminishes as the reaction progresses. (c) Correlation between the fluctuations of the substrate and of the enzyme-substrate complex, which increases.

Figure 8(a) exhibits the autocorrelation of the fluctuation of the substrate, figure 8(b) is the graph of the autocorrelation of the fluctuation of the enzyme-substrate complex, and figure 8(c) the correlation of both. The numerical analysis could be extended to later times, but the description would no longer correspond to the state under study, the quasi-stationary state; instead, it would describe a state with only the remnants of the noise of the substrate and complex concentrations, which is of little practical interest in this work.

Probability density

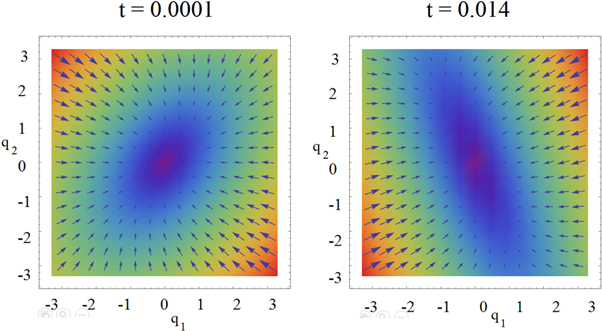

The probability density can be calculated with expression (27). Figure 9 shows its initial form at  and its final form at  once it reaches the end of the quasi-stationary state. At first glance the differences between the Gaussian distributions at their initial and final states are not apparent. But a more careful observation reveals that there has been a clockwise rotation of approximately  as shown in figure 10.
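One way to quantify such a rotation, sketched here in Python with NumPy, is to take the eigenvector of the covariance matrix with the largest eigenvalue as the longest axis of symmetry of a zero-mean bivariate Gaussian, and to compare its orientation at two times. The function names and the helper `rotated_cov` are illustrative assumptions and are not taken from this work; angles are measured counterclockwise from the horizontal axis, so a clockwise rotation is negative.

```python
import numpy as np


def principal_axis_angle(cov):
    """Orientation (radians, in (-pi/2, pi/2]) of the longest axis of a 2x2 covariance."""
    cov = np.asarray(cov, dtype=float)
    # eigh returns eigenvalues in ascending order, so the last column
    # is the eigenvector associated with the largest variance.
    _, eigvecs = np.linalg.eigh(cov)
    major = eigvecs[:, -1]
    angle = np.arctan2(major[1], major[0])
    # An axis has no direction, so fold the angle into (-pi/2, pi/2].
    if angle > np.pi / 2:
        angle -= np.pi
    elif angle <= -np.pi / 2:
        angle += np.pi
    return angle


def rotation_between(cov_start, cov_end):
    """Signed change of orientation between two covariances; negative is clockwise."""
    delta = principal_axis_angle(cov_end) - principal_axis_angle(cov_start)
    # Fold the difference into [-pi/2, pi/2).
    return (delta + np.pi / 2) % np.pi - np.pi / 2


def rotated_cov(theta, var_major, var_minor):
    """Hypothetical helper: covariance with its longest axis at angle theta."""
    c, s = np.cos(theta), np.sin(theta)
    rot = np.array([[c, -s], [s, c]])
    return rot @ np.diag([var_major, var_minor]) @ rot.T
```

For instance, comparing `rotated_cov(np.deg2rad(30), 4, 1)` with `rotated_cov(np.deg2rad(20), 4, 1)` through `rotation_between` gives a negative angle of 10 degrees, that is, a clockwise rotation. These covariance values are invented for the illustration only.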

Figure 9. Comparison of the probability density at the start and at the end of the quasi-stationary state.

Figure 10. The longest axis of symmetry of the probability density rotates clockwise during the quasi-stationary state.

An analytical way of detecting a change is by calculating the difference between probability densities at  and  The result can be seen in figure 11, where there is a region at the center with higher probability, while above and below there is a region with lower probability. What this means is that, during the quasi-stationary state of the catalytic process, the probability density shifts towards the center.
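The comparison described above can be sketched numerically as follows. This is a minimal illustration that assumes zero-mean bivariate Gaussian densities fully specified by their covariance matrices; the function names, the grid size and the covariances used in the usage note are assumptions made for the example and do not come from the simulation reported in this work.

```python
import numpy as np


def gaussian_density(x, y, cov):
    """Zero-mean bivariate Gaussian density evaluated at the points (x, y)."""
    cov = np.asarray(cov, dtype=float)
    inv = np.linalg.inv(cov)
    det = np.linalg.det(cov)
    # Quadratic form (x, y) cov^{-1} (x, y)^T written out for array inputs.
    quad = inv[0, 0] * x**2 + 2.0 * inv[0, 1] * x * y + inv[1, 1] * y**2
    return np.exp(-0.5 * quad) / (2.0 * np.pi * np.sqrt(det))


def density_difference(cov_start, cov_end, half_width=3.0, n=201):
    """Final density minus initial density on a square grid centered at the origin."""
    axis = np.linspace(-half_width, half_width, n)
    x, y = np.meshgrid(axis, axis)  # x varies along columns, y along rows
    diff = gaussian_density(x, y, cov_end) - gaussian_density(x, y, cov_start)
    return x, y, diff
```

With an initial covariance `[[1, 0], [0, 1]]` and a final covariance `[[1, 0], [0, 0.5]]`, the difference is positive at the center of the grid and negative at the upper and lower edges, which is the qualitative pattern described for figure 11.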

Figure 11. The difference between the last probability density and the initial probability density during the quasi-stationary state. It displays the transition of the probability from the upper and lower regions towards the center. It becomes narrower due to the decrease of magnitude of

This is an expected outcome once proper attention is paid to the graphs of  and  where the former increases as the latter decreases with time. Further details on the information contained within the quasi-stationary state that is relevant to the understanding of the biochemical process will be addressed in a later section titled Entropy of Fluctuations.

Before going to the next section, where we address the topic of the entropy in this model, let us focus on the total stochastic velocity, which results from the sum of the systematic velocity and the diffusion velocity. Figure 12 shows a comparison of the initial state, at  and a state close to equilibrium, at  of the total stochastic velocity.

Figure 12. The total stochastic velocity at the start and the end of the quasi-stationary state. The state points  farthest from the center move at a greater velocity, while the points in the center are comparatively static. The velocities in the  regions indicate the increase in probability at the central region.

The total stochastic velocity, shown in figure 12, is a two-dimensional field that plots the tendency of the state points  to move towards a region in the center, which intuitively coincides with the plot of the probability density. At the start of the reaction ( ) it is mainly the points from the second and fourth quadrants that move at a greater velocity, contributing the most to keeping the saturation at the center. In contrast, when very close to the equilibrium ( ) the roles have reversed, and it is now the first and third quadrants that are responsible for keeping the saturation at the center.

We calculate the curl of the total stochastic velocity to further explore this behavior, as shown in figure 13. From a geometrical viewpoint, the tendency of the field to rotate suggests that the averaged transitions performed by the state points,  within each region  occur due to the lack of balance between the displacements  which is something that is also reflected by the averaged  This behavior causes the semi-axes of symmetry of the probability distribution not to remain static. The magnitude of this phenomenon diminishes with time, corresponding to the end of the quasi-stationary stage.
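As an illustration of how the curl of a two-dimensional velocity field can be evaluated on a grid, the following sketch uses finite differences from NumPy. The function name `curl_2d` and the array layout are assumptions made for this example; the field used in the usage note is a rigid clockwise rotation chosen for clarity, not the total stochastic velocity computed in this work.

```python
import numpy as np


def curl_2d(vx, vy, x_axis, y_axis):
    """Scalar curl d(vy)/dx - d(vx)/dy of a planar field sampled on a grid.

    The arrays vx and vy are indexed as [row, column], where rows follow
    y_axis and columns follow x_axis (the default layout of np.meshgrid).
    """
    dvy_dx = np.gradient(vy, x_axis, axis=1)  # derivative along columns (x)
    dvx_dy = np.gradient(vx, y_axis, axis=0)  # derivative along rows (y)
    return dvy_dx - dvx_dy
```

For the rigid clockwise rotation with components `vx = y` and `vy = -x`, the function returns the value -2 at every grid point. A negative curl therefore corresponds to a clockwise sense of rotation, consistent with the interpretation given for figure 13.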

Figure 13. The curl of the total stochastic velocities is negative. We associate it with the tendency of the probability distribution to rotate clockwise during the quasi-stationary state.

About the different terms of the entropy of the Michaelis-Menten model

Now we get back to the discussion about the entropy of the system that was left pending in expressions (36)–(38). The system under study is a laboratory experiment that progresses through time:

- At an initial stage, during a time interval we label as  the enzyme and substrate molecules exist separately from one another.
- At an instant  both substances come into contact with each other within a fluid that serves as a medium; at this point in time the reaction has not started. While the system is at  where the reaction is yet to begin, the system can be considered to be in a state of thermodynamic equilibrium. For this reason its entropy can be calculated using the standard methods of statistical physics.
- Let us suppose that the physical system is stirred to aid the start of the reaction. Once it starts, the time interval is  which corresponds to the random process that has been discussed in previous sections. It is then that the entropy of fluctuations given in expression (26) makes itself apparent.

This section is dedicated to the study of the entropy of the substrate and the enzyme-substrate complex. The working hypothesis is that the substrate and enzyme molecules are diluted in an aqueous medium, where they perform irregular motions. The physical system can be illustrated by the antibiotic penicillin playing the role of the substrate, and beta-lactamase acting as the enzyme. The latter is used by bacteria to protect themselves against the antibiotic.

At instant  when the reaction is about to start, we consider the substrate and enzyme molecules as if they were two ideal gases with their respective entropies, which originate from their degrees of freedom: translational, rotational and electronic. At instant  the entropy of equilibrium, denoted as  contains the terms shown in (114):

where  is the translational entropy,  is the entropy of mixing,  is the vibrational entropy,  is the rotational entropy, and  is the electronic entropy. Save for  we will calculate estimates for the values of the entropy of each contribution mentioned with the purpose of knowing an estimated value of

In this work we also add the fluctuation entropy,  for substrate and enzyme, which arises due to the dynamics of the Michaelis-Menten model. The resulting total entropy is given by (115):

It is necessary to note the non-uniqueness of the definition of entropy in non-equilibrium systems. The definition of  is based on the one used in the theory of stochastic processes, but it should be made clear that there is no generally accepted expression for it. In 2019, de Decker [34] demonstrated that, in the case of non-equilibrium systems, there are at least two definitions of entropy that, while both physically sound, differ in the time evolution of the production of entropy, even if both reproduce the same equilibrium state. However, even though the uniqueness of the time evolution is under contention, we consider it appropriate to study the special case of entropy in the processes that can be described by the Michaelis-Menten model.

Requirements for the decrease of entropy

The second law of thermodynamics establishes that, in the absence of external work being done on a system, entropy follows the inequality shown in (116):

such that the equality holds once the state of equilibrium is reached.

Entropy can diminish, however, if energy is supplied to a system through appropriate processes. We see that this is the case in the enzymatic catalysis reactions that are studied with the Michaelis-Menten model.

From the definition of Markov processes [34], it is clear that, in mathematical terms, the decrease of entropy through time is not forbidden. For a physical system with microscopic states numbered with  and whose probabilities are denoted by  the entropy can be expressed as seen in (117):

Its rate of change is given by (118):

From  it follows that  Therefore, the inequality  can be fulfilled if either of the conditions (119) or (120) is satisfied.
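For orientation, the standard Gibbs form of the entropy of a discrete set of states and its time derivative can be written as in the sketch below. The symbols $p_i$ for the state probabilities and $k_B$ for the Boltzmann constant are assumptions made here; they may differ from the notation of expressions (117) and (118), and the sketch does not attempt to reproduce conditions (119) and (120).

```latex
\begin{align}
S(t) &= -k_B \sum_i p_i(t) \ln p_i(t), \\
\frac{dS}{dt} &= -k_B \sum_i \frac{dp_i}{dt} \ln p_i \;-\; k_B \sum_i \frac{dp_i}{dt}, \\
\sum_i p_i = 1 \;&\Rightarrow\; \sum_i \frac{dp_i}{dt} = 0
\;\Rightarrow\; \frac{dS}{dt} = -k_B \sum_i \frac{dp_i}{dt} \ln p_i .
\end{align}
```

In this form, $dS/dt < 0$ requires $\sum_i (dp_i/dt) \ln p_i > 0$, which nothing in the normalization of the probabilities rules out.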

Calculating an estimation of the entropy of equilibrium