Abstract

Explaining the origins of cumulative culture, and how it is maintained over long timescales, constitutes a challenge for theories of cultural evolution. Previous theoretical work has emphasized two fundamental causal processes: cultural adaptation (where technologies are refined towards a functional objective) and cultural exaptation (the repurposing of existing technologies towards a new functional goal). Yet, despite the prominence of cultural exaptation in theoretical explanations, this process is often absent from models and experiments of cumulative culture. Using an agent-based model, where agents attempt to solve problems in a high-dimensional problem space, the current paper investigates the relationship between cultural adaptation and cultural exaptation and produces three major findings. First, cultural dynamics often end up in optimization traps: here, the process of optimization causes the dynamics of change to cease, with populations entering a state of equilibrium. Second, escaping these optimization traps requires cultural dynamics to explore the problem space rapidly enough to create a moving target for optimization. This results in a positive feedback loop of open-ended growth in both the diversity and complexity of cultural solutions. Finally, the results help delineate the roles played by social and asocial mechanisms: asocial mechanisms of innovation drive the emergence of cumulative culture, and social mechanisms of within-group transmission help maintain these dynamics over long timescales.

Introduction

Humans are prodigious problem solvers. Unlike many non-human animals, humans seem particularly adept at incrementally discovering and exploiting solutions with higher payoffs (Acerbi et al., 2016; Tennie et al., 2009), as evident in the bewildering growth of technological sophistication over the last several thousand years. Access to increasingly complex solutions allowed human groups to enter into and thrive across a variety of niches via cultural as opposed to biological adaptations (Henrich, 2015; Laland, 2018; Richerson and Boyd, 2005). This is nowhere better illustrated than in our use of clothing and weapons as technological solutions for environmental challenges. While polar bears evolved thick layers of blubber, large paws, and specialized carnassials to survive and hunt in Arctic environments, the Inuit achieved comparable outcomes by modifying and extending existing technologies to invent parkas, mukluks, and harpoons.

These distinct advantages are often attributed to the ability of cultural evolutionary dynamics to facilitate a cumulative process: here, solutions with higher payoffs need not be independently rediscovered, but can instead be transmitted from individual-to-individual via social learning mechanisms (Boyd and Richerson, 1985; Dean et al., 2014; Mesoudi and Thornton, 2018). Whilst non-human animals have culture (Hunt and Gray, 2004; Kawamura, 1959; Krützen et al., 2005; Whiten et al., 1999), and exhibit a high level of cognitive and behavioral sophistication (Emery and Clayton, 2004; Penn and Povinelli, 2007; Piantadosi and Cantlon, 2017), humans are alone in their capacity to modify cultural traditions in a direction of open-ended complexity (Boyd and Richerson, 1996; Heyes, 1993; Mesoudi and Thornton, 2018; Tomasello et al., 1993). As with biological evolution, this cumulative process provides a powerful explanation for the fit between technological solutions and ecological problems via cultural adaptation: by generating different solutions for a given problem, a population can gradually select from this pool of variation and move closer to an optimal solution (Boyd and Richerson, 1985, 1995; Enquist et al., 2007; Henrich, 2015; Laland, 2018; Richerson and Boyd, 2005).

Less attention has been paid to another prominent process in the evolution of technology: cultural exaptation (Andriani and Cohen, 2013; Arthur, 2009; Johnson, 2011; Kauffman, 1993; Mokyr, 1998; Solé et al., 2013). The term was coined by Gould and Vrba (1982), although the concept itself dates back to Darwin (1859) in biology (see preadaptation) and to Schumpeter (1939) in economics: exaptation occurs when a biological or cultural trait originally adapted for one functional role is co-opted and repurposed towards a new function. Whereas cultural adaptation follows from a search over the pool of possible solutions, cultural exaptation inverts the causality of this process: it searches the space of possible problems in an effort to find a novel problem for a given solution. Viagra, for instance, was originally developed as a potential treatment for angina, but clinical trials revealed it to be a far more effective remedy for erectile dysfunction (Andriani et al., 2015). The history of technology is replete with similar instances: from Gutenberg co-opting the screw-driven wine press in the creation of the printing press (Solé et al., 2013), to the repurposing of iron door hinges as ship rudders (Boyd et al., 2013), to the discovery that radioactive or biological hazards can be safely disposed of via the high temperatures required for vitrification (Dew et al., 2004).

How do these two processes of cultural adaptation and cultural exaptation interact to shape and constrain cultural evolutionary dynamics? In biological evolution, Darwin envisaged adaptation and exaptation as part of a cycle of open-ended novelty and refinement: it is a process of taking an already adapted trait, repurposing it for a new function, and then adaptively tuning this trait for its new functional role via natural selection. An oft-cited example of exaptation is the evolution of feathers, which originally served thermoregulation and were later co-opted to facilitate flight (Gould and Vrba, 1982). Theoretical frameworks in cultural evolution have also recognized the importance of exaptation in the domains of technology (Boyd et al., 2013) and language (Lass, 1990). Yet, despite the prominence of cultural exaptation in these frameworks, this process is curiously absent from models and experiments on cumulative culture (Mesoudi and Thornton, 2018).

One reason for this omission is the focus on specific types of cumulative culture. Debates over what constitutes cumulative culture, as well as questions over its presence in non-human animals, are the source of much consternation (for recent reviews, see Mesoudi and Thornton (2018); Miton and Charbonneau (2018)). Social transmission experiments, for instance, tend to investigate processes of functional refinement. In these cases, the dynamics of change are more akin to a cumulative optimization process, whereby an experimental population moves closer to an optimal solution within the bounds of its input problem(s) and the available resources. An example of this is found in a recent experimental study of pigeon flight path optimization (Sasaki and Biro, 2017): here, pigeons are only able to discover the quickest route when information about flight routes is socially transmitted. Similar experiments with human participants, such as transmission chains of paper aeroplanes, spaghetti towers, and artificial languages, all reach comparable outcomes (Caldwell et al., 2016; Caldwell and Millen, 2008; Kirby et al., 2008).

As others have suggested (Mesoudi and Thornton, 2018), cumulative optimization in this sense qualifies as an instance of cumulative culture, but it is insufficient to produce the open-ended dynamics inherent to human cultural domains (such as technological evolution). Pigeons do not apply insights from the optimization of flight paths to other domains and the cumulative dynamics only persist until an optimal flight path is reached (a fact recognized by Sasaki and Biro (2017)). The only available options in such instances are to remain in the current state or transition to a less-optimal configuration. In essence, populations become stuck in an optimization trap where the dynamics of change cease and enter a state of equilibrium. By contrast, inventions such as writing or the steam engine are capable of changing the bounds within which cultural dynamics operate, introducing populations to novel problems, as well as allowing them to more readily generate novel solutions.

Much of the emphasis in computational models is on the open-endedness of cumulative culture. In recent models, this often corresponds to cumulative growth in either the diversity (Creanza et al., 2017; Kolodny et al., 2015; Mesoudi, 2011) or complexity (Derex et al., 2018; Enquist et al., 2011; Lewis and Laland, 2012) of cultural traits. In many ways, these models suffer from the opposite problem to that found in experiments: the absence of any overt functional target. Functional constraints, such as the task requirements for gathering termites, are often not explicitly modeled. In cases where function is modeled, it forms a single fitness proxy and is assumed to be an intrinsic feature of a cultural trait (e.g., via a utility function; see Lewis and Laland (2012)). As such, there is no notion of how different functional constraints shape the evolution of these traits. Even though this simplifying assumption proves useful in many circumstances, it overlooks the role played by functional constraints and marginalizes the contributions of cultural exaptation in driving open-ended cumulative culture.

Computationally inspired theories offer a promising avenue for addressing such issues by modeling cultural dynamics as search processes over solution and problem spaces. In the next section, a computational framework of cultural evolution is outlined, and used to demonstrate that high-dimensional problem spaces introduce constraints rarely considered in standard cultural evolutionary approaches. Then, using an agent-based model where agents attempt to solve problems in this high-dimensional problem space, the current paper investigates the relationship between cultural adaptation and cultural exaptation in shaping cultural evolutionary dynamics. Two general parameters, corresponding to the extent to which solutions undergo optimization (\(\lambda\)) and the rate with which agents explore the problem space (\(\Theta\)), are manipulated. Four important findings follow from the inclusion of a high-dimensional problem space:

- (i) Strong optimization pushes populations into optimization traps with low complexity solutions;
- (ii) Escaping these optimization traps requires increasingly high rates of exploration-driven exaptation relative to the strength of optimization;
- (iii) The emergence of open-ended cumulative dynamics relies on high rates of innovation relative to within-group transmission;
- (iv) As solutions grow in complexity and problems become more difficult to solve, the selection of socially transmitted solutions plays an increasingly prominent role in maintaining this open-ended cumulative dynamic.

Model

Approaching cultural evolutionary dynamics in this model starts from the premise that cultural information is algorithmic. The term algorithm is used in a general sense to mean a procedure or recipe that consists of an input problem (which may be empty) and an organized series of steps that results in a solution (Christian and Griffiths, 2016; Mayfield, 2013). Computer programs are algorithmic because they provide a set of specific instructions for transforming a given input into an output that can then be stored. Sorting algorithms, for instance, take a list of randomly ordered elements and transform this into a new sequence based on some predefined order (such as numerical or lexicographic). Similarly, cultural information stored in recipes, grammars, and motor sequences is algorithmic; a set of mental instructions can be used to cook tomato soup, learn a language, and produce Oldowan flakes (Arthur, 2009; Charbonneau, 2015).

In this sense, cultural information exists as generative procedures inside the minds of individuals, and is manifest in populations as observable behaviors or tangible artifacts. Through a repeated process of production and learning, individual minds are causally linked across space and time to form traditions (Ferdinand, 2015; Kirby and Hurford, 2002; Morin, 2016; Sperber, 1996). Thinking of cultural information as algorithmic allows us to formulate constraints on cultural evolutionary dynamics in terms of solutions and input problems. Solutions exist as the physical manifestations of culture and input problems are the specific functional challenges.

Cultural evolutionary dynamics can therefore be modeled as a process of searching and sampling the space of both solutions and problems. If a search process is biased to find solutions that better approximate a given input problem, then we can think of this optimization process as cultural adaptation. Alternatively, if the search process seeks out novel input problems for a specific solution, then this process of repurposing solutions is a form of cultural exaptation. To capture these processes, a model is constructed in which solutions and problems are represented as binary strings of length \(N\). Modeling solutions and problems in this way affords (potentially) unbounded searches over solution and problem spaces.

Cultural adaptation

Input problems are a key constraint on cultural evolutionary dynamics via cultural adaptation: a search optimization process over the space of solutions that results in an improved fit between a solution and an input problem. Limitations on the design of a solution exist in the form of functional constraints, i.e., how well adapted a solution is at solving a problem. This refers to the specification of the input problem (building a solution for cutting meat) and the ways in which it constrains the possible outcomes by creating an adaptive target (useful meat cutting solutions need to induce a certain level of shear stress).

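Cultural adaptation is conceptualized here as a process of improving the fit between an input problem and a solution string. The Levenshtein distance (LD) allows us to formally measure this fit:

\[
{\mathrm{LD}}_{s,p}(i,j)=\begin{cases}\max (i,j) & \text{if}\ \min (i,j)=0,\\ \min \left\{\begin{array}{l}{\mathrm{LD}}_{s,p}(i-1,j)+1\\ {\mathrm{LD}}_{s,p}(i,j-1)+1\\ {\mathrm{LD}}_{s,p}(i-1,j-1)+{1}_{({s}_{i}\ne {p}_{j})}\end{array}\right. & \text{otherwise,}\end{cases}
\]
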
where \({\mathrm{LD}}_{s,p}(i,j)\) is the distance between the first \(i\) elements of solution \(s\) and the first \(j\) elements of problem \(p\), so that the distance between the two full strings is \({\mathrm{LD}}(s,p)={\mathrm{LD}}_{s,p}(\ell (s),\ell (p))\). The Levenshtein distance between two strings therefore tells us the minimum number of single-element edits (insertions, deletions or substitutions) required to transform one string into the other. Fewer transformations between a problem and a solution act as a proxy for higher levels of optimization: solution-problem mappings needing more transformations are less optimized than those with lower values. A fully optimized solution therefore corresponds to \({\mathrm{LD}}(s,p)=0\), as the solution and its input problem are identical.
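To make the distance measure concrete, the following is a minimal Python sketch of the standard dynamic-programming computation of the Levenshtein distance. The function name `levenshtein` is hypothetical and is not taken from the model's own code; it fills a table whose cell `d[i][j]` holds the distance between the first \(i\) elements of a solution and the first \(j\) elements of a problem.

```python
def levenshtein(s: str, p: str) -> int:
    """Minimum number of single-element insertions, deletions or
    substitutions needed to turn solution s into problem p."""
    # d[i][j]: distance between the first i elements of s and the first j of p
    d = [[0] * (len(p) + 1) for _ in range(len(s) + 1)]
    for i in range(len(s) + 1):
        d[i][0] = i  # delete all i elements of s
    for j in range(len(p) + 1):
        d[0][j] = j  # insert all j elements of p
    for i in range(1, len(s) + 1):
        for j in range(1, len(p) + 1):
            cost = 0 if s[i - 1] == p[j - 1] else 1
            d[i][j] = min(d[i - 1][j] + 1,         # deletion
                          d[i][j - 1] + 1,         # insertion
                          d[i - 1][j - 1] + cost)  # substitution or match
    return d[len(s)][len(p)]


print(levenshtein("0110", "0110"))  # 0: a fully optimized mapping
print(levenshtein("011", "0100"))   # 2: one substitution plus one insertion
```

A perfectly fitted solution scores 0, and every additional edit needed to reach the problem raises the score by one.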

Cultural exaptation

Cultural exaptation was defined earlier as a process where solutions used for one problem are repurposed to solve a novel problem. It was also argued that this can be framed as a search process over the space of possible problems. One recurrent observation is that exaptation normally occurs in domains where there are functional overlaps between the original and novel input problems (Arthur, 2009; Mastrogiorgio and Gilsing, 2016). Co-opting technology in such a way also implies that repurposed solutions are to some extent optimized for solving their original input problem. In some senses, Viagra was well-designed for the purpose of inducing vasodilation; it just happened to be better suited for encouraging blood flow to certain regions as opposed to others.

Introducing a high-dimensional problem space is required if we are to simulate the process of cultural exaptation as a search process over the space of problems. The problem space here forms a connected graph consisting of all possible permutations of an \(N\)-length binary string. Connected nodes represent problems that differ from one another by a Levenshtein distance of one (e.g., \(001\) is a neighbor of \(00\), \(011\), and \(0010\), but not \(111\)). Movement through this space is therefore restricted: agents only have the option of moving to a single neighboring problem at a given time-step (see Fig. 1).

An illustration of movement through a small portion of the problem space. Here, an agent starts at input problem \(10\), and moves to other problems within the space (black directional lines). Gray lines and problems represent possible problems an agent could move to given their current input problem.
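The neighborhood structure described above can be sketched in a few lines of Python. The function name `neighbors` is hypothetical; the sketch simply enumerates every binary string reachable by one insertion, deletion, or substitution, and does not model any cost on moving to longer problems.

```python
def neighbors(problem: str) -> set[str]:
    """All binary strings at Levenshtein distance exactly one from `problem`."""
    result = set()
    for i, bit in enumerate(problem):
        flipped = "1" if bit == "0" else "0"
        result.add(problem[:i] + flipped + problem[i + 1:])  # substitution
        result.add(problem[:i] + problem[i + 1:])            # deletion
    for i in range(len(problem) + 1):
        for new_bit in "01":
            result.add(problem[:i] + new_bit + problem[i:])  # insertion
    return result


n = neighbors("001")
print("00" in n, "011" in n, "0010" in n, "111" in n)  # True True True False
```

This reproduces the example in the text: \(001\) neighbors \(00\), \(011\), and \(0010\), but not \(111\), which is two substitutions away.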

Structure of model

All model runs took place over \(100\) generations, and each generation comprised \(10\) time steps. A single run was initialized with a fixed population of agents (\(N=100\)) who were randomly assigned to input problems of length \(\ell (p)=2\) and provided with randomly generated starting algorithms that produced solutions in the range \(\ell (s)=[2,4]\). The agents in the model attempt to solve their current input problem by searching for possible solutions. As previously described, the success of any given fit between a solution and a problem is operationalized as the Levenshtein distance.

At each time step, an agent generates a pool of possible solutions from asocial and social sources (see Fig. 2). On the basis of the optimization strength (\(\lambda\)), as well as the current input problem, one of these solutions is then adopted and assigned to an agent’s memory as their stored solution. The exploration threshold (\(\Theta\)) interacts with the current fit between a solution and an input problem to determine whether or not an agent moves to a new problem. If the solution is well-fitted to the problem, then an agent will remain with the current input problem. Otherwise, if the solution is a poor fit for the problem, then an agent will relocate and attempt to solve a new problem. Following 10 time steps, all agents in a population die and their currently stored solution is inherited by newly created offspring agents (i.e., a 1:1 replacement rate). Crucially, this reflects the intergenerational transfer of cultural information, as inherited solutions also undergo transmission from parent-to-child, which additionally means these solutions are subject to simplicity constraints during reconstruction (see section Social transmission mechanisms).

The process an individual agent performs within a single time-step. Step 1: Agents use a series of mechanisms to indirectly alter a solution via the underlying algorithm. The stored algorithm refers to the graph that currently occupies an agent’s memory (as determined by the previous time step). This stored algorithm is acted upon by three asocial mechanisms: modification, invention, and subtraction. Asocial mechanisms can only make a single modification per time-step. Transmission occurs when an agent receives an algorithm from another agent within the population. Step 2: Each of these mechanisms generates a pool of solutions by translating the algorithm into a bit string (i.e., a solution). Step 3: Which of these solutions is adopted depends on whether optimization is biased or stochastic (as determined by \(\lambda\)). If optimization is biased, an agent compares each solution to their input problem and chooses the one with the best fit; if the choice is stochastic, a solution is randomly chosen from the pool. Step 4: An agent uses their current solution-problem mapping to determine whether or not they consider a novel problem (as determined by the exploration threshold \(\Theta\)). Step 5: This movement is restricted to local problems (i.e., those that differ from the current input problem by a single edit).

Topology of the problem space

In the model, input problems are procedurally generated and stored on the basis of the movements by individual agents. The topology of this problem space is decomposable into three general properties: the difficulty of specific input problems, the size of the problem space, and the interconnectedness between problems.

Differences in difficulty reflect a general observation that not all problems are equal in terms of tractability. Getting from the Earth to the Moon requires solutions that are orders of magnitude more complex than fishing for termites with a stick (unless the termites happen to be on the Moon). Difficulty, in this sense, indirectly reflects constraints on the search process over solutions, i.e., termite fishing is easier to learn and more readily innovated than a Moon-capable rocket. For this model, longer input problems \(\ell (p)\) increase the number of permutations in the space of possible solutions, which translates into a more computationally intensive search process for finding an optimal solution. Furthermore, even in instances where there are two problems of the same length, one problem can be more predictable than the other. By containing computable regularities, predictable problems are more amenable to concise descriptions than less predictable counterparts (for fuller formal treatments, see: Cover and Thomas (2012); Li and Vitányi (2008)).

Computational constraints are also relevant for our second topological property: the size of the problem space grows as a function of \(\ell (p)\). Enumerating all possible permutations for \(\ell (p)=4\) results in a smaller space (\(16\) problems) than when \(\ell (p)=10\) (\(1024\) problems). Whereas input problem difficulty acts as a computational constraint on the search process over solutions, the size of the problem space is a computational constraint on searching across problems: exhaustively traversing a problem space becomes less tractable as its size increases. To illustrate, two maximally distant problems in \(\ell (p)=4\) (e.g., \(0000\) and \(1111\)) are less distant from each other than two maximally distant problems in \(\ell (p)=8\) (e.g., \(00000000\) and \(11111111\)).
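The size and distance claims above can be checked with a short worked calculation. Here \(\mathcal{P}_{\ell}\) is hypothetical notation for the set of all binary problems of a fixed length \(\ell (p)\), and the distances assume that equal-length strings differing in every position require one substitution per position:

```latex
\begin{aligned}
|\mathcal{P}_{\ell}| &= 2^{\ell (p)}, \qquad |\mathcal{P}_{4}| = 2^{4} = 16, \qquad |\mathcal{P}_{10}| = 2^{10} = 1024,\\
\mathrm{LD}(0000,\,1111) &= 4 \;<\; \mathrm{LD}(00000000,\,11111111) = 8 .
\end{aligned}
```

Each additional bit therefore doubles the number of problems of that length, while the maximal distance between them grows only linearly.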

Finally, the third topological property recognizes that the relatedness between input problems introduces a source of path-dependency. As movement through this space is restricted to single edit jumps, the current input problem limits what problems will be considered in the immediate future. For instance, a problem of \(0100\) is nearer to \(0101\) than \(0111\) in terms of the minimal number of substitutions required to transform one problem into another. Movement between input problems of different lengths is additionally constrained by a fixed probability. In particular, movement to a longer problem has a fixed probability of \(P({\mathrm{Longer}})\,=\,0.3\), which can be thought of as a cost on unconstrained movement towards increasingly longer input problems. Without this cost, movement through the problem space would be heavily biased towards longer input problems (because there are generally more longer input problems than problems of the same or shorter length).
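A minimal Python sketch of this movement cost is given below. The text fixes \(P({\mathrm{Longer}})=0.3\) but does not spell out the exact sampling rule, so the rule used here is an assumption: with probability `P_LONGER` the agent steps to a uniformly chosen longer neighbor, and otherwise to a uniformly chosen neighbor of the same or shorter length. The names `neighbors` and `move` are hypothetical.

```python
import random

P_LONGER = 0.3  # fixed probability of moving to a longer problem (value from the text)


def neighbors(problem: str) -> set[str]:
    """All binary strings one insertion, deletion or substitution away."""
    result = set()
    for i, bit in enumerate(problem):
        flipped = "1" if bit == "0" else "0"
        result.add(problem[:i] + flipped + problem[i + 1:])  # substitution
        result.add(problem[:i] + problem[i + 1:])            # deletion
    for i in range(len(problem) + 1):
        for new_bit in "01":
            result.add(problem[:i] + new_bit + problem[i:])  # insertion
    return result


def move(problem: str, rng: random.Random) -> str:
    """Assumed rule: with probability P_LONGER step to a longer neighbor,
    otherwise step to a neighbor of the same or shorter length."""
    options = neighbors(problem)
    longer = sorted(q for q in options if len(q) > len(problem))  # never empty
    other = sorted(q for q in options if len(q) <= len(problem))
    if not other or rng.random() < P_LONGER:
        return rng.choice(longer)
    return rng.choice(other)


rng = random.Random(42)
path = ["10"]  # starting problem used in Fig. 1
for _ in range(5):
    path.append(move(path[-1], rng))
print(path)
```

Because insertion always yields more candidate strings than deletion, an unweighted uniform choice over all neighbors would drift towards longer problems; the fixed probability caps that drift.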

Representing solutions

Solutions in this model represent technological artifacts and are generated using directed graphs (for a similar approach, see Enquist et al. (2011)). This approximates two features of technological solutions: the cultural artifact (a bit string) and the underlying algorithm (a graph). Graphs were initially constrained so that agents start with solutions of lengths \(\ell (s)=[2,3,4]\). Formally, a graph \(G\) consists of a triple \((V,E,\Omega )\) where \(V\) is the set of nodes \(v\in V\), \(E\) is the set of edges \(e\in E\), and \(\Omega\) is a function mapping every edge to an ordinal position \(\Omega :E\to {\mathbb{N}}\). Each node holds a value in the interval \([0,1]\) and each edge is assigned a bit of either \(0\) or \(1\): the bit is derived from the average of the values of the two nodes connected by that edge, rounded to the nearest integer. As edges are directed, any node can connect to any other node within \(V\), with two exceptions: no loops (i.e., nodes that connect to themselves) and no duplicate edges (i.e., a directed connection can only exist once). \(\Omega\) arranges the set of edges to produce the bit string (the solution) and is determined by an ordinal value (Fig. 3).
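The translation from graph to bit string can be sketched as follows. This is a minimal Python illustration, not the model's own implementation: the dictionary-based representation and the name `decode` are hypothetical, and resolving an exact average of \(0.5\) upwards to \(1\) is an assumption, since the text only states that averages are rounded to the nearest integer.

```python
def decode(nodes: dict[int, float], edges: dict[tuple[int, int], int]) -> str:
    """Translate a cultural algorithm (graph) into its solution bit string.

    nodes: node id -> value in [0, 1]
    edges: directed edge (src, dst) -> ordinal position assigned by Omega
    """
    bits = []
    # Omega: read the edges in ascending ordinal order
    for (src, dst), _position in sorted(edges.items(), key=lambda item: item[1]):
        mean = (nodes[src] + nodes[dst]) / 2
        # round the average to the nearest integer (ties at 0.5 go to 1: an assumption)
        bits.append("1" if mean >= 0.5 else "0")
    return "".join(bits)


example_nodes = {0: 0.9, 1: 0.7, 2: 0.2}
example_edges = {(0, 1): 0, (1, 2): 1, (2, 0): 2}
print(decode(example_nodes, example_edges))  # 101
```

Here the three edge averages are \(0.8\), \(0.45\), and \(0.55\), which round to the bits \(1\), \(0\), and \(1\) in the order fixed by \(\Omega\).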

Agents have access to two general processes for generating solutions: asocially (via mechanisms of innovation) and socially (via within group transmission).

Asocial generative mechanisms

Generating solutions refers to the introduction of novelty and diversity into a population via asocial mechanisms. In this model, changes to a solution are done indirectly via the graph-based procedure, with agents having access to three general mechanisms for innovating (see Fig. 2):

Invention introduces a new bit by creating and then connecting a new node to an existing one. New nodes are assigned a randomly generated value in the range \([0,1]\).

Modification changes a pre-existing solution by connecting two existing nodes to form a new edge.

Subtraction shortens a bit string by randomly removing an existing edge and ensures that innovation is not unidirectional.

A general assumption is that these generative mechanisms are restricted: Agents can only create or remove a single bit. Imposing such limitations approximates the idea that innovations are often introduced via limited experimentation within a restricted search space. Some solutions are easier to discover than others because they require less time and resources to produce (given a starting state). Similar notions are present in Tennie and colleagues’ Zone of Latent Solutions (Tennie et al., 2009): here, solutions that are reachable via asocial means have a high probability of being independently (re-)invented.
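The three asocial mechanisms can be sketched as single-bit edits on the same graph representation. This is a hypothetical Python illustration under several assumptions not stated in the text: invention connects an existing node to the new node (rather than the reverse), modification picks uniformly among absent non-loop node pairs, and every new edge is appended at the end of the ordinal order \(\Omega\).

```python
import random

# Hypothetical representation: nodes maps node id -> value in [0, 1];
# edges maps a directed edge (src, dst) -> its ordinal position (one bit per edge).
Nodes = dict[int, float]
Edges = dict[tuple[int, int], int]


def _next_position(edges: Edges) -> int:
    """Place a new bit at the end of the solution (an assumption)."""
    return max(edges.values(), default=-1) + 1


def invention(nodes: Nodes, edges: Edges, rng: random.Random) -> None:
    """Create a node with a random value and connect an existing node to it (+1 bit)."""
    new_id = max(nodes, default=-1) + 1
    anchor = rng.choice(sorted(nodes))  # assumes at least one node exists
    nodes[new_id] = rng.random()
    edges[(anchor, new_id)] = _next_position(edges)


def modification(nodes: Nodes, edges: Edges, rng: random.Random) -> bool:
    """Connect two existing nodes with a new directed edge (+1 bit), if a pair is free."""
    free = [(a, b) for a in sorted(nodes) for b in sorted(nodes)
            if a != b and (a, b) not in edges]  # no loops, no duplicate edges
    if not free:
        return False
    edges[rng.choice(free)] = _next_position(edges)
    return True


def subtraction(edges: Edges, rng: random.Random) -> bool:
    """Remove one randomly chosen edge (-1 bit), so innovation can also shorten solutions."""
    if not edges:
        return False
    del edges[rng.choice(sorted(edges))]
    return True
```

Each call changes the solution length by exactly one bit, mirroring the restriction that agents can only create or remove a single bit per innovation.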

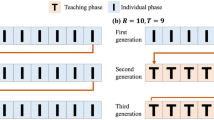

Social transmission mechanisms

Transmission is the movement of information between individuals and corresponds to how individuals learn from others via observation and teaching (Gergely and Csibra, 2006). Two types of social transmission are present in this model: a vertical transmission process of inheritance and a within-group process of horizontal transmission. Vertical transmission happens at each generation (every \(10\) time-steps) and takes place between a parent agent (who dies) and a newly created child agent. The choice of \(10\) time-steps is arbitrary, but it does capture the finite lifetimes of individuals and recognizes that the contributions of a single individual in a given generation are generally circumscribed (especially when considering long timescales). As the name suggests, within-group transmission takes place between individuals at a given generation, and involves one agent learning a cultural algorithm from another randomly selected agent within the population.

Both forms of transmission are indirect (agents transmit algorithms, not solutions), reconstructive (solutions are generated using the underlying algorithm), and biased (reconstructions are biased towards efficient representations). This aligns with the general idea that transmission is an inductive process guided by both the input data and the prior cognitive biases of learners (Chater and Vitányi, 2003; Culbertson and Kirby, 2016; Griffiths and Kalish, 2007; Kirby et al., 2007). Transmitting an algorithm is thus analogous to learning a recipe or procedure and is instantiated here as a process of reconstructing the shortest path between nodes. Dijkstra’s algorithm is used to construct a graph distance matrix \(({d}_{ij})\) that stores the shortest-path distance from each node \({v}_{i}\) to each node \({v}_{j}\). The shortest path is one that visits all connected nodes in the fewest steps. This assumes graphs are directed with equally weighted edges and that the starting point is the first node in the graph (as determined by \(\Omega\), see section Representing solutions).
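The distance-matrix step can be sketched in Python by running Dijkstra's algorithm from every node of a directed graph with unit edge weights (with equal weights this visits nodes in the same order as breadth-first search). The function name `distance_matrix` is hypothetical, and the sketch covers only the construction of \(({d}_{ij})\), not the subsequent rule for reconstructing a solution from it.

```python
import heapq
import math


def distance_matrix(nodes: list[int], edges: set[tuple[int, int]]) -> dict[int, dict[int, float]]:
    """All-pairs shortest-path lengths d[i][j] on a directed graph with unit
    edge weights, computed by running Dijkstra's algorithm from every node."""
    adjacency = {v: [] for v in nodes}
    for src, dst in edges:
        adjacency[src].append(dst)
    d = {}
    for source in nodes:
        dist = {v: math.inf for v in nodes}  # unreachable nodes stay at infinity
        dist[source] = 0
        heap = [(0, source)]
        while heap:
            du, u = heapq.heappop(heap)
            if du > dist[u]:
                continue  # stale heap entry
            for w in adjacency[u]:
                if du + 1 < dist[w]:  # every edge has weight 1
                    dist[w] = du + 1
                    heapq.heappush(heap, (du + 1, w))
        d[source] = dist
    return d


d = distance_matrix([0, 1, 2, 3], {(0, 1), (1, 2), (2, 0)})
print(d[0][2], d[2][1], d[0][3])  # 2 2 inf
```

Node \(3\) has no edges, so its distances remain infinite, which flags it as disconnected from the rest of the algorithm.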

Strength of optimization (\(\lambda\))

Optimization is modeled as an individual-level decision making process over the pool of solutions derived from social and asocial sources. The goal for agents is to find a solution that improves the fit with the current input problem. One advantage of the approach used here is that it explicitly builds a bridge between individual-level processes and population-level outcomes (Derex et al., 2018). This presents a notable departure from some recent cultural evolutionary models of cumulative culture in which individual-level processes are ignored in favor of solely focusing on the population-level distribution of cultural traits (Enquist et al., 2011; Lewis and Laland, 2012).

Manipulating the strength of optimization (\(\lambda\)) allows us to directly investigate the extent to which this decision-making process is biased or stochastic. The current model examined the following parameter values for \(\lambda\): \([0.0,0.2,0.5,0.8,1.0]\). When the strength of optimization is at maximum (\(\lambda =1.0\)), agents choose a solution based solely on its ability to optimally solve the current input problem. The pool from which these solutions are chosen is restricted to the currently stored solution and variants generated via asocial or social means. A maximally biased choice is one where an agent compares the Levenshtein distance between the input problem (\(p\)) and each solution (\(s\)) in the pool \(X\), and selects the most optimized one:

\[
{s}^{*}=\mathop{\arg \min }\limits_{s\in X}{\mathrm{LD}}(s,p)
\]

As the strength of optimization is decreased, stochastic factors play an increasingly prominent role in determining which solution is or is not adopted. If the strength of optimization is \(\lambda =0.0\), the process of choosing solutions is purely stochastic, i.e., there is no preference for solutions based on the Levenshtein distance, whereas \(\lambda =0.8\) means that, on average, \(80 \%\) of an agent’s productions will be biased and \(20 \%\) will be stochastic.
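One way to read this mixture is as an independent coin flip per choice, which the following Python sketch assumes: with probability \(\lambda\) the agent takes the solution that minimizes the Levenshtein distance, and otherwise draws uniformly from the pool. The names `choose_solution` and `levenshtein` are hypothetical, and breaking ties by pool order is an additional assumption.

```python
import random


def levenshtein(s: str, p: str) -> int:
    """Edit distance using a single rolling row of the dynamic-programming table."""
    prev = list(range(len(p) + 1))
    for i, a in enumerate(s, start=1):
        cur = [i]
        for j, b in enumerate(p, start=1):
            cur.append(min(prev[j] + 1,              # deletion
                           cur[j - 1] + 1,           # insertion
                           prev[j - 1] + (a != b)))  # substitution or match
        prev = cur
    return prev[-1]


def choose_solution(pool: list[str], problem: str, lam: float,
                    rng: random.Random) -> str:
    """With probability lam take the argmin-LD solution (ties go to the
    earliest pool entry); otherwise draw uniformly from the pool."""
    if rng.random() < lam:
        return min(pool, key=lambda s: levenshtein(s, problem))
    return rng.choice(pool)


rng = random.Random(7)
pool = ["0110", "010", "0100"]  # stored solution plus two variants
print(choose_solution(pool, "0100", lam=1.0, rng=rng))  # 0100
```

With \(\lambda =1.0\) the draw `rng.random() < lam` always succeeds, so the exact match is chosen; with \(\lambda =0.0\) it never succeeds and every pool member is equally likely.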

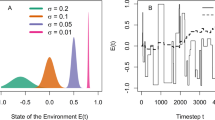

Exploration threshold (\(\Theta\))

An exploration threshold (\(\Theta\)) is introduced to capture how the level of optimization limits exploration of the problem space. This aims to model situations where solutions resist repurposing due to pressures on maintaining existing functionalities. Agents consider alternative problems if the (normalized) Levenshtein distance between a solution and the current input problem is above this threshold:

\[
{P}_{\text{next}}=\begin{cases}{p}^{\prime}\in {P}_{\text{pos}} & \text{if}\ {}_{{\mathrm{norm}}}{\mathrm{LD}}(S,P)>\Theta ,\\ P & \text{otherwise,}\end{cases}
\]

where \({P}_{\text{pos}}\) is the set of possible problems an agent can explore in a localized region of the problem space. Possible problems are those at a Levenshtein distance of one from the current input problem. Exploration of alternative problems takes place when the \({}_{{\mathrm{norm}}}{\mathrm{LD}}\) of the current solution \(S\) and input problem \(P\) is greater than the exploration threshold \(\Theta\). The following parameter values were examined: \(\Theta =[0.2,0.4,0.6,0.8,1.0]\).
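The exploration rule can be sketched in Python as below. The text does not state how the Levenshtein distance is normalized, so dividing by the length of the longer string is an assumption (it keeps \({}_{{\mathrm{norm}}}{\mathrm{LD}}\) within \([0,1]\)). The names `normalized_ld` and `next_problem` are hypothetical, and the length-dependent movement cost \(P({\mathrm{Longer}})\) is deliberately left out to keep the sketch short.

```python
import random


def levenshtein(s: str, p: str) -> int:
    """Edit distance using a single rolling row of the dynamic-programming table."""
    prev = list(range(len(p) + 1))
    for i, a in enumerate(s, start=1):
        cur = [i]
        for j, b in enumerate(p, start=1):
            cur.append(min(prev[j] + 1, cur[j - 1] + 1, prev[j - 1] + (a != b)))
        prev = cur
    return prev[-1]


def neighbors(problem: str) -> set[str]:
    """P_pos: all binary strings one insertion, deletion or substitution away."""
    result = set()
    for i, bit in enumerate(problem):
        result.add(problem[:i] + ("1" if bit == "0" else "0") + problem[i + 1:])
        result.add(problem[:i] + problem[i + 1:])
    for i in range(len(problem) + 1):
        for new_bit in "01":
            result.add(problem[:i] + new_bit + problem[i:])
    return result


def normalized_ld(solution: str, problem: str) -> float:
    """LD divided by the longer string's length (an assumed normalization)."""
    longest = max(len(solution), len(problem))
    return levenshtein(solution, problem) / longest if longest else 0.0


def next_problem(solution: str, problem: str, theta: float,
                 rng: random.Random) -> str:
    """Move to a random neighboring problem only when the fit is worse than theta."""
    if normalized_ld(solution, problem) > theta:
        return rng.choice(sorted(neighbors(problem)))
    return problem


rng = random.Random(0)
print(next_problem("0100", "0100", theta=0.2, rng=rng))  # 0100: perfect fit, no exploration
print(next_problem("1111", "0000", theta=0.8, rng=rng))  # a neighbor of 0000
```

Under this normalization the distance can never exceed \(1\), so \(\Theta =1.0\) would suppress exploration entirely, while \(\Theta =0.2\) triggers it for all but closely fitted mappings.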

Considering a range of parameter values allows us to investigate how the strength of optimization interacts with these different thresholds. When the threshold is high (e.g., \(\Theta =0.8\)), exploration of the problem space is restricted to a narrow range of poorly optimized solutions, as agents only repurpose solutions for solution-problem mappings with an \({}_{{\mathrm{norm}}}{\mathrm{LD}}\ >\ 0.8\). Having a high \(\Theta\) makes it relatively easy for optimization dynamics to inhibit the rate of exploration: a minimal amount of optimization is required to maintain the current function of a solution. Conversely, lower thresholds (e.g., \(\Theta =0.2\)) encompass a wider range of possible fits, as agents now repurpose solutions for mappings with a \({}_{{\mathrm{norm}}}{\mathrm{LD}}\ >\ 0.2\). Due to the demands for more optimized solutions, and the increased rates of exploration, low thresholds make it difficult to maintain an existing function.

Solution complexity \({H}_{L}(S)\)

Solution complexity is measured as the product of Shannon Entropy (Cover and Thomas, 2012; Shannon and Weaver, 1949) and the length of a solution:

\[
{H}_{L}(S)=-\ell (S)\sum _{i\in \{0,1\}}P({S}_{i}){\log }_{2}P({S}_{i})
\]

where \({S}_{i}\) is a binary value found within a solution, \(P({S}_{i})\) is the probability of value \(i\) given a solution string \(S\), and \(\ell (S)\) is the length of the solution. \({H}_{L}(S)\) is therefore the average amount of information per bit (the Shannon entropy) scaled by the length of the solution string, i.e., the total information it carries. In this sense, \({H}_{L}(S)\) acts as a proxy for solution complexity: strings with a lower \({H}_{L}(S)\) are less complex than ones with a higher \({H}_{L}(S)\).

This assumes that complex solutions are longer strings in which the distribution of bits is close to uniform (i.e., an entropy near \(1\) bit per symbol). It also provides a relatively simple way of capturing simple solutions (i.e., those closer to \(0\) bits). However, a well-recognized limitation of this approach is that it fails to discriminate between strings of equal length and composition, where one forms a highly ordered sequence (e.g., \(01010101\) or \(00001111\)) and the other approximates an algorithmically irregular sequence (e.g., \(01101001\)).
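As a worked check of this limitation, the following minimal Python sketch computes \({H}_{L}(S)\) as the Shannon entropy of the bit frequencies (in bits) multiplied by the string length. The function name `h_l` is hypothetical. The sketch assumes that \(P({S}_{i})\) is estimated from the relative frequency of 0s and 1s within the string itself.

```python
import math
from collections import Counter


def h_l(solution: str) -> float:
    """Length-scaled Shannon entropy of a bit string, in bits."""
    n = len(solution)
    if n == 0:
        return 0.0
    counts = Counter(solution)
    # sum of p * log2(1/p) over observed symbols (avoids a negative zero for p = 1)
    entropy = sum((k / n) * math.log2(n / k) for k in counts.values())
    return n * entropy


for s in ("01010101", "00001111", "01101001"):
    print(s, h_l(s))  # each prints 8.0: four 0s and four 1s give 1 bit per symbol
```

All three strings receive the same score, even though only the last is irregular. The measure depends on bit frequencies and length, not on the order of the bits.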

An example of a cultural algorithm. Black circles represent nodes and the arrows connecting nodes represent directed edges. Each edge has a pair of numbers: the first denotes the order of that edge in the set and the second corresponds to the value of that edge. An edge value is the average of the values of its two nodes. This value is rounded to the nearest integer (either \(0\) or \(1\)) to produce a single bit. Edge order is an ordinal value that represents the position of a bit in a solution string.
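The decoding step described in this caption can be sketched in Python. The sketch rests on assumptions the caption does not state: node values are taken to be numbers in \([0,1]\), exact ties at 0.5 round up to 1, and the names `Edge` and `decode` are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Edge:
    order: int   # position of the resulting bit in the solution string
    source: str  # node the edge leaves
    target: str  # node the edge enters


def decode(nodes: Dict[str, float], edges: List[Edge]) -> str:
    """Turn a directed graph into a bit string, one bit per edge, in edge order."""
    bits = []
    for edge in sorted(edges, key=lambda e: e.order):
        value = (nodes[edge.source] + nodes[edge.target]) / 2  # edge value = node average
        bits.append(str(math.floor(value + 0.5)))              # round half up to 0 or 1
    return "".join(bits)


nodes = {"a": 1.0, "b": 0.0, "c": 0.2}
edges = [Edge(2, "b", "c"), Edge(1, "a", "b"), Edge(3, "c", "a")]
print(decode(nodes, edges))  # prints 101
```

The edges are listed out of order on purpose. The edge order, not the listing order, fixes each bit's position in the string.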

Results

Strong optimization leads to optimization traps

One challenge facing cultural evolutionary dynamics is to avoid optimization traps. An optimization trap occurs when the exploration process is inhibited due to an efficient fit between a solution and a problem. What constitutes an efficient fit is modulated by the exploration parameter (\(\Theta\)). Optimization traps are most frequent when the strength of optimization is at maximum (\(\lambda =1.0\)): here, the dynamics of change rapidly reach a stable equilibrium, where exploration halts and populations converge on highly optimized solutions (Fig. 4). One consequence is that these are also the regions of the parameter space where open-ended cumulative culture never emerges—solutions remain forever stuck in states of low complexity and minimal diversity.

Top: Heatmap showing the average level of optimization at Generation \(=100\). Cells represent a specific parameter combination of \(\lambda\) and \(\Theta\) and colors correspond to the (normalized) Levenshtein distance. Lighter colors denote solution-problem mappings with a lower Levenshtein distance. The x-axis is the strength of optimization (\(\lambda\)) and the y-axis is the range of exploration thresholds (\(\Theta\)). Bottom: The average (normalized) Levenshtein distance of solution-problem mappings for a maximum optimization strength (\(\lambda =1.0\)) and an exploration threshold of \(\Theta =0.4\). Colored lines correspond to single runs over \(100\) generations (\(1000\) time-steps).

Two factors sufficiently slow the exploration process to trap agents in these optimization cul-de-sacs. First, the topology of the problem space means that agents start out solving simpler input problems, which makes it easier for populations to converge on efficient fits. Second, if the exploration threshold is high (e.g., \(\Theta =0.8\)) and optimization is strong (e.g., \(\lambda =1.0\)), then the slow exploration of the problem space provides a stable enough target for optimization. Both the simplicity and stability of the input problems increase the probability of agents finding solutions below the exploration threshold: simpler input problems are computationally more tractable and stable problems provide more opportunities for agents to discover optimized solutions.

A key question concerns the extent to which collective dynamics amplify this optimization process. One possibility is that within-group transmission simply accelerates the rate of convergence in a population. If so, we should expect populations lacking within-group transmission to eventually converge on solutions as optimized as those reached by populations utilizing within-group transmission. As Fig. 5 shows, when within-group transmission is removed, the optimizing potential of cultural evolution is diminished: populations asymptotically converge on sub-optimal solutions. It seems that having access to the pool of collective solutions not only accelerates convergence, but also leverages the collective computational potential of a population to discover and disseminate more optimized solutions.

Open-ended cumulative dynamics require increasingly high rates of exploration relative to the strength of optimization

Escaping these optimization traps requires increasingly high rates of exploration relative to the strength of optimization. An example of this is shown in Fig. 6, where the strength of optimization is at \(\lambda =0.6\) and the exploration threshold corresponds to \(\Theta =0.2\). Having a low exploration threshold facilitates rapid exploration of the problem space and allows agents to avoid optimization traps. Whereas optimization processes increase the fit between a solution and its input problem, exploration creates a moving target for optimization by repeatedly seeking out novel problems. The result is a positive feedback loop where there is a concomitant growth in the diversity and complexity of solutions.

Time series of the average solution complexity (top row) and the average solution diversity (bottom row) for \(100\) generations. Solution complexity refers to \({H}_{L}(S)\) and solution diversity is the number of unique solutions in a population \(s\in S\). As the population is fixed (\(N=100\)), there exists an upper bound on the total number of unique solutions a population can entertain. Each graph corresponds to a batch of 30 runs (colored lines) for a specific parameter combination of \(\lambda\) and \(\Theta\). Middle: Outcomes where the strength of optimization is at \(\lambda =0.6\) and the exploration threshold is at \(\Theta =0.2\) result in open-ended cumulative culture. Left: If the strength of optimization is increased (\(\lambda =1.0\)), then the exploration threshold (\(\Theta =0.2\)) is insufficient for open-ended cumulative culture to emerge. Right: Keeping the strength of optimization at \(\lambda =0.6\) and increasing the exploration threshold (\(\Theta =0.6\)) results in slower growth for both solution complexity and diversity.

Increases in solution complexity reflect a general tendency towards solution strings that are longer and more entropic, whereas increases in diversity tell us that successive generations host more unique solution strings. Considering both measures together, the results suggest that populations settle into a division of labor where growth is driven by agents exploring a wider range of increasingly difficult input problems. Harder input problems tend to be longer and less predictable, and the probability of encountering such problems grows with \(\ell (p)\). The intractability of harder input problems places a hard (computational) constraint on the extent to which populations can optimize and prevents individual agents from dipping below low exploration thresholds.

Optimization must therefore be strong enough to keep pace with the difficulty of the input problem, but not so strong that populations end up in an optimization trap. As Fig. 6 shows, when optimization strength is increased (\(\lambda =1.0\)) relative to exploration (\(\Theta =0.2\)), the process of optimization dominates the amplification dynamics and is sufficient to stop open-ended cumulative culture from emerging. Relaxing the strength of optimization (\(\lambda =0.6\)) allows stochastic factors to play a role. With the rate of optimization slowed, amplification dynamics lead to an initial growth in diversity and complexity, which facilitates open-ended cumulative dynamics. However, if the exploration threshold is too high (\(\Theta =0.6\)), then growth in diversity is slowed and the complexity of solutions eventually stagnates in the long run.

Social transmission plays an increasingly prominent role in maintaining open-ended cumulative dynamics

Optimization alone is not sufficient to fully explain why we observe open-ended cumulative dynamics in some parameter combinations and not others. To understand why, we must also consider the contributions of different individual-level mechanisms. Previous theoretical and empirical studies have argued that open-ended cumulative culture requires asocial mechanisms for generating variation and social mechanisms for transmitting variation (Tomasello, 2009). The results here build on this by specifically delineating the contributions of these mechanisms in the origin of cumulative dynamics and how such processes are maintained over long timescales.

Fig. 7 shows the etiology of solutions for the same three parameter combinations as in the previous section. Runs where open-ended cumulative culture (\(\lambda =0.6\); \(\Theta =0.2\)) emerges show dynamics distinct from those where solutions are either highly optimized (\(\lambda =1.0\); \(\Theta =0.2\)) or bounded at a certain level of complexity (\(\lambda =0.6\); \(\Theta =0.6\)). Initially, asocial mechanisms are necessary and sufficient to bridge the gap between problem difficulty and solution complexity, with invention and modification providing the main contributions to the emergence of open-ended cumulative culture.

Average selection probability of solutions from social and asocial sources over \(100\) generations. Colored lines represent the specific social or asocial source. Middle: Proportion of chosen solutions when strength of optimization is at \(\lambda =0.6\) and the exploration threshold corresponds to \(\Theta =0.2\) (resulting in open-ended cumulative culture). Left: Proportion of chosen solutions when the strength of optimization is at \(\lambda =1.0\) and the exploration threshold is \(\Theta =0.2\) (resulting in highly optimized cultures). Right: Proportion of chosen solutions when the strength of optimization is at \(\lambda =0.6\) and the exploration threshold is \(\Theta =0.6\) (resulting in cultures where complexity stagnates).

However, following this initial phase of growth, optimization increasingly relies on within-group transmission dynamics. Within-group social transmission allows a population to leverage its collective computational potential and bypass individual limitations in searching the space of possible solutions. Innovations by one individual can be disseminated to others in a population far more quickly than an individual could independently arrive at an equivalent solution. This benefit is particularly apparent when optimization needs to track increasingly difficult input problems: unbounded growth in complexity is only maintained when a significant proportion of solutions are transmitted between agents.

To test this claim, with fixed optimization (\(\lambda =0.6\)) and exploration (\(\Theta =0.2\)) parameters, an additional simulation was run in which within-group transmission was removed as a mechanism. Compared with simulation runs where within-group transmission is present (see Fig. 8), levels of complexity are far lower in runs where agents rely solely on asocial generative mechanisms. This indicates that the potential of asocial mechanisms is fundamentally capped at a certain level of solution complexity. Overcoming this upper bound on complexity requires within-group social transmission.

Discussion

Two general processes are usually invoked as explanatory concepts of technological evolution: the first is an optimizing process where technologies are refined towards a functional objective (cultural adaptation) and the second is a repurposing of existing technologies towards a new functional goal (cultural exaptation). The current paper modeled cultural adaptation as an optimizing search over a solution space and cultural exaptation as a local search over the space of possible problems. By manipulating two general parameters, corresponding to the strength of optimization (\(\lambda\)) and an exploration threshold (\(\Theta\)), this model helps delineate the contributions of these processes to cultural evolutionary dynamics. In particular, this paper showed that:

- (i) Cultural dynamics often lead to optimization traps when the strength of optimization is strong relative to the rate of exploration;
- (ii) Escaping these optimization traps relies on a feedback loop between exploration and optimization that results in a concomitant growth in the complexity and diversity of solutions;
- (iii) This initial emergence of open-ended cumulative culture is reliant on asocial generative mechanisms of innovation;
- (iv) But maintaining these open-ended dynamics increasingly requires social transmission.

Optimization traps and the strength of optimization (\(\lambda\))

Cultural evolutionary dynamics are often envisaged as an optimization process (Kirby et al., 2014; Lewandowsky et al., 2009; Reali and Griffiths, 2009). The results here build on the existing literature by clearly delineating where cumulative dynamics lead to open-ended growth in complexity and where populations end up in optimization traps. Generally, when the strength of optimization is at maximum (\(\lambda =1.0\)), and the collection of input problems form a stable target, populations are able to reach highly optimized solutions. Having stable input problems increases the probability of populations dipping below the exploration threshold and limits any further exploration of the problem space.

Optimization traps might help explain why open-ended cumulative culture is rare in nature. If populations are restricted to a limited set of stable input problems, and populations have high levels of within-group transmission, then strong optimization pressures will inhibit exploration of novel problems and trap cultures in local regions of the solution space. Such insights complement existing explanations for periods of relative stasis (Powell et al., 2009) or reversals (Henrich, 2004) in the technological complexity of toolkits during human pre-history. It also mirrors, in some respect, what we observe in social transmission experiments: a stable target and a cultural evolutionary process will tend to converge on highly optimized outcomes.

Within-group transmission acts as a strong amplifier on optimization: optimal solutions disseminate more rapidly between individuals, drastically reducing the search load on asocial mechanisms (and inheritance). In the absence of within-group transmission, the optimizing potential of cultural evolution is greatly diminished, with populations tending to stabilize around sub-optimal solutions. High levels of within-group transmission seem to act as a barrier to the emergence of open-ended cumulative culture. Further work is required to establish whether this finding generalizes empirically. However, if accurate, we are left with a curious conundrum: within-group transmission needs to be inhibited for open-ended cumulative culture to emerge, but it is central to maintaining these dynamics over long timescales.

Open-ended cumulative culture and the exploration threshold (\(\Theta\))

Relaxing the strength of optimization allows populations to escape optimization traps by facilitating growth in both the diversity and complexity of solutions. Reaching open-ended cumulative culture also requires increasingly high rates of exploration. Motivating this search over the problem space is the extent to which solutions are already optimized. Lower thresholds generally correspond to increased rates of exploration as agents are continually seeking out new opportunities for repurposing. Importantly, for open-ended cumulative culture to emerge, optimization dynamics must be able to chase this movement through the problem space.

Maintaining this dynamic requires that the exploration threshold be low enough for agents to discover harder input problems and create a pressure for more complex solutions. The topology of the problem space plays an outsized role in this process. In particular, harder input problems place greater constraints on the potential for optimization. Having a sufficiently low exploration threshold, as well as an increased probability of encountering harder input problems, results in an exploration rate that is quick enough to slow down the optimization process and stop populations from becoming trapped in certain regions of the problem space. Crucially, these findings come from separating out the search processes over solution and problem spaces. Doing so also allows us to classify problems more readily by their difficulty and to recognize that finding an optimal solution is intractable for certain classes of problem. Characterizing the dynamics of culture in terms of computation also raises an important yet rarely appreciated point: the properties of a given input problem can act as a constraint independently of specific cognitive, ecological, or culturally endogenous factors.

One general hypothesis that follows from this is that cultural exaptation is driven by differences in the level at which solutions are optimized. This predicts that highly optimized solutions resist repurposing and lead to optimization traps. Why might highly optimized solutions have a lower repurposing potential than suboptimal ones? One possibility, which is considered here, is that optimization can be likened to overfitting in statistical models. Relative to optimized solutions, which are finely tailored to their input problem, suboptimal solutions have a latent potential for finding a range of more appropriate fits. Optimized solutions, by contrast, are less generalizable to novel problems due to a high degree of specialization: any movement to a new problem has a high probability of significantly reducing the fit.

Case studies of technological exaptation note that repurposing is sometimes correlated with limitations in a solution’s existing functional role (Arthur, 2009). Today, the main use of cavity magnetrons is in microwave ovens, but originally they were a vital component of radar technology. Yet, even in their heyday, a well-documented limitation of radar-driven magnetrons was an inability to easily remove clutter on displays (Brookner, 2010). Eventually, this led to magnetrons being replaced in radar by more suitable alternatives. Still, it remains an open question as to whether or not some technologies differ in their underlying capacity to be repurposed, and more empirical work is needed to establish if the potential of cultural exaptation is linked to the degree of optimization.

Asocial and social mechanisms in the emergence and maintenance of open-ended cumulative culture

Considerable debate exists over the underlying capacities for cumulative culture and the respective roles played by individual creativity and social transmission (Charbonneau, 2015; Enquist et al., 2011; Lewis and Laland, 2012; Tomasello, 2009; Zwirner and Thornton, 2015). The findings here suggest that asocial mechanisms of innovation (specifically invention and modification) are generally more important than within-group transmission in the emergence of cumulative culture. However, asocial mechanisms are insufficient to maintain open-ended growth past a certain level of solution complexity. Once individuals reach this complexity ceiling, differences between the input problem and the current solution are large enough that most single-edit changes fail to improve the fit, and agents will instead choose to remain with their current solution.

By distinguishing between the emergence and maintenance of open-ended cumulative culture, the model here presents a more nuanced role for both within-group transmission and asocial mechanisms of innovation. Initially, within-group transmission plays a marginal role in the emergence of open-ended cumulative culture, with solutions from asocial sources providing the principal gains in diversity and complexity. Besides the inherent advantages of asocial sources, socially transmitted solutions are disadvantaged at this early stage for two reasons. First, at the individual level, the reconstructive nature of learning means that solutions are prone to information loss, which was modeled here as a bias for efficient representations. Second, the adoption of socially transmitted solutions generally promotes convergence at a population level, resulting in a homogenizing process where diversity is lost and searches are confined to local regions of the solution space.

Only when populations are faced with diverse and increasingly difficult problems are the benefits of within-group social transmission unmasked. By rapidly spreading solutions between individuals, transmission stops individual agents from becoming trapped in local regions of the solution space, and provides populations with the opportunity to breach the complexity ceiling faced by solutions from asocial sources. Such benefits cannot be overstated: populations become increasingly reliant on within-group transmission even though the process is biased against complexity. Part of the rationale for this is that the upside of convergence is enough to mitigate the simplifying effects of information loss. All that is required is for transmission to raise the average level of complexity enough for asocial mechanisms and optimization to keep pace with the high rates of exploration. Moreover, the fact that transmission is distinctly non-faithful runs contrary to claims that cumulative culture requires preservative mechanisms, such as imitation (Richerson and Boyd, 2005; Tennie et al., 2009; for similar arguments against high-fidelity transmission, see Claidière et al., 2018; Morin, 2016).

Assumptions

Examining the assumptions of this model is important for future work to critically assess and empirically test the robustness and generalizability of these claims.

Optimization is conceptualized here as an individual-level decision-making process. One advantage of this approach is that it provides us with a means of directly comparing the contributions of social and asocial mechanisms. A key assumption is that making a decision is solely focused on discovering a locally optimal solution. However, as documented in behavioral economics (Gigerenzer and Gaissmaier, 2011), there are many other factors at work in shaping the choices of individuals. Furthermore, these biasing factors can act antagonistically to functional pressures. For instance, in the now almost-forgotten format war over the de facto video cassette standard, the success of VHS over the technically superior Betamax was driven by economic and social factors.

A second assumption concerns the constraints on innovation and transmission. Nearly all models of cognition introduce some means of transformation on the way agents produce and process information. Innovation mechanisms were assumed to be incremental and derivative: individual agents can only perform single-edit changes to existing solutions. The goal here was to approximate limitations on innovation by restricting trial and error tinkering to local regions of the solution space. Of course, it is often the case that innovations are not constrained to incremental searches, and instead make large jumps via revelatory insights (Villani et al., 2007) or recombination (Charbonneau, 2016). Social transmission, on the other hand, was biased towards parsimonious representations. This links with prominent and strongly supported models both in cognitive science (Chater and Vitányi, 2003) and cultural evolution (Culbertson and Kirby, 2016), but there are numerous other factors that bias the reconstructive process (Acerbi et al., 2019; Sperber, 1996).
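The incremental innovation constraint can be illustrated with a mutation operator that applies exactly one random insertion, deletion, or substitution to an existing solution. This is a hypothetical sketch, and `single_edit` is not taken from the model's code. It assumes that the three edit types are equally likely and that only insertion is possible on an empty string.

```python
import random


def single_edit(solution: str, rng: random.Random, alphabet: str = "01") -> str:
    """Return a copy of `solution` changed by exactly one random edit."""
    ops = ["insert"]
    if solution:
        ops += ["delete", "substitute"]
    op = rng.choice(ops)
    if op == "insert":
        i = rng.randrange(len(solution) + 1)
        return solution[:i] + rng.choice(alphabet) + solution[i:]
    i = rng.randrange(len(solution))
    if op == "delete":
        return solution[:i] + solution[i + 1:]
    # substitute: pick a symbol different from the one currently at position i
    new = rng.choice([c for c in alphabet if c != solution[i]])
    return solution[:i] + new + solution[i + 1:]


rng = random.Random(0)
print([single_edit("0110", rng) for _ in range(5)])  # five one-edit variants of 0110
```

Because each call moves a solution by a single edit, repeated calls can only perform a local, trial-and-error walk through the solution space. Large jumps by insight or recombination are excluded by construction.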

The third major assumption is that the population dynamics were relatively impoverished. The process of within-group transmission was determined by randomly sampling a single solution of another agent from the population. Restricting the pool of solutions meant that a single agent only samples a small proportion of the total culture at any given time step. Furthermore, there was no consideration of population growth, and agents did not take into account non-functional information when sampling from individuals (e.g., social status). Yet we know that human populations are not randomly connected networks (Albert and Barabási, 2002), that population size is not a static feature (Creanza et al., 2017), and that the sampling of individuals and solutions is often socially biased (e.g., conformity; Richerson and Boyd, 2005). Alternative models of network structure, which incorporate growth (see the Barabási-Albert model; Barabási and Albert, 1999) and rewiring (see the Watts-Strogatz model; Watts and Strogatz, 1998), offer potential avenues for future work to build a richer link between individual-level processes and population-level dynamics.
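The transmission step just described, in which an agent samples a single solution from another randomly chosen agent, might look like the following sketch. The function name and the list-of-strings population are assumptions for illustration. The sketch covers only the sampling and does not model the parsimony bias applied during reconstruction.

```python
import random
from typing import List


def sample_social_solution(population: List[str], learner: int, rng: random.Random) -> str:
    """Copy the current solution of one other agent chosen uniformly at random."""
    if len(population) < 2:
        raise ValueError("within-group transmission needs at least two agents")
    others = [i for i in range(len(population)) if i != learner]
    return population[rng.choice(others)]


population = ["0110", "1", "010", "1111"]
rng = random.Random(3)
print(sample_social_solution(population, learner=0, rng=rng))  # one of the other three solutions
```

Uniform sampling treats the population as fully mixed. A network-structured alternative would replace `others` with the learner's neighbours in a graph.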

Finally, several assumptions were made with respect to the exploration threshold, how the problem space was structured, and the way in which agents move between different input problems. For instance, there are several alternative ways the exploration threshold could have been approached. The simplest formulation is one where a parameter determines a fixed rate of movement. One issue with this specific formulation is that agents would move irrespective of whether a solution was perfectly or poorly optimized. Crucially, it precludes the possibility that populations can become trapped in regions of the space. Coupling the exploration process to optimization allows us to observe how different strengths of optimization interact with exploration in influencing cultural evolutionary dynamics. Nevertheless, it is likely that changes to the exploration threshold, as well as manipulations to the ability with which agents can traverse the problem space, are necessary steps for future work.
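The contrast between a fixed-rate rule and the threshold-coupled rule can be made concrete with two small decision functions. Both are hypothetical sketches: `fixed_rate_move` and `coupled_move` are illustrative names, and the normalized Levenshtein distance is passed in as an already computed number.

```python
import random


def fixed_rate_move(rng: random.Random, rate: float) -> bool:
    """Move to a new problem with a constant probability, ignoring solution fit."""
    return rng.random() < rate


def coupled_move(norm_ld: float, theta: float) -> bool:
    """Move only while the current solution fits its problem worse than theta."""
    return norm_ld > theta


rng = random.Random(0)
perfect_fit = 0.0  # a fully optimized solution: normalized distance of zero
moves = sum(fixed_rate_move(rng, rate=0.3) for _ in range(10_000))
print("fixed-rate moves:", moves)                       # about 30% of trials despite the perfect fit
print("coupled move?", coupled_move(perfect_fit, 0.2))  # False: the agent stays on its problem
```

Under the fixed-rate rule, a perfectly optimized solution keeps moving, so no trap can form. Under the coupled rule, reaching a good enough fit switches exploration off.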

Conclusion

Explaining the origins of cumulative culture, and how it is maintained over long timescales, constitutes a fundamental challenge for theories of cultural evolution. Previous theoretical work has emphasized two fundamental causal processes: cultural adaptation (where technologies are refined towards a functional objective) and cultural exaptation (the repurposing of existing technologies towards a new functional goal). Yet, despite the prominence of cultural exaptation in these theoretical explanations, this process is often absent from models and experiments investigating cumulative culture. Using an agent-based model, where agents attempt to solve problems in a high-dimensional problem space, this paper found that open-ended cumulative culture only emerges under a restricted set of parameters.

In many cases, cultural dynamics push populations into optimization traps: here, excessive optimization of solutions causes the dynamics of change to cease, with populations entering a state of equilibrium. Escaping these optimization traps requires cultural dynamics to explore the problem space rapidly enough to create a moving target for selection. This sets in motion a positive feedback loop where there is open-ended growth in the complexity of cultural solutions. Finally, the results helped delineate the roles played by social and asocial mechanisms, with asocial mechanisms of innovation driving the emergence of cumulative cultural evolution and social mechanisms of within-group transmission helping maintain these dynamics over long timescales.

Data availability

All code and data are available at the following GitHub repository under a Creative Commons Attribution 4.0 license: https://github.com/j-winters/cumulative.

Change history

17 December 2019

An amendment to this paper has been published and can be accessed via a link at the top of the paper.

References

Acerbi A, Charbonneau M, Miton H, Scott-Phillips T (2019) Cultural stability without copying. OSF Preprints https://doi.org/10.31219/osf.io/vjcq3

Acerbi A, Tennie C, Mesoudi A (2016) Social learning solves the problem of narrow-peaked search landscapes: experimental evidence in humans. R Soc Open Sci 3(9):160215

Albert R, Barabási A-L (2002) Statistical mechanics of complex networks. Rev Mod Phys 74(1):47

Andriani P, Ali AH, Mastrogiorgio M (2015) Measuring exaptation in the pharmaceutical industry. Acad Manag Proc 2015:17085

Andriani P, Cohen J (2013) From exaptation to radical niche construction in biological and technological complex systems. Complexity 18(5):7–14

Arthur B (2009) The nature of technology: what it is and how it evolves. Simon and Schuster

Barabási A-L, Albert R (1999) Emergence of scaling in random networks. Science 286(5439):509–512

Boyd R, Richerson PJ (1985) Culture and the evolutionary process. University of Chicago Press

Boyd R, Richerson PJ (1995) Why does culture increase human adaptability? Ethol Sociobiol 16(2):125–143

Boyd R, Richerson PJ (1996) Why culture is common, but cultural evolution is rare. Proc Br Acad 88:77–93

Boyd R, Richerson PJ, Henrich J (2013) The cultural evolution of technology: facts and theories. Cult Evol 12:119–142

Brookner E (2010) From $10,000 magee to $7 magee and $10 transmitter and receiver (T/R) on single chip. In: 2010 International Conference on the Origins and Evolution of the Cavity Magnetron. IEEE, pp 1–2

Caldwell CA, Atkinson M, Renner E (2016) Experimental approaches to studying cumulative cultural evolution. Curr Directions Psychol Sci 25(3):191–195

Caldwell CA, Millen AE (2008) Experimental models for testing hypotheses about cumulative cultural evolution. Evol Hum Behav 29(3):165–171

Charbonneau M (2015) All Innovations are equal, but some more than others: (re)integrating modification processes to the origins of cumulative culture. Biol Theory 10(4):322–335

Charbonneau M (2016) Modularity and recombination in technological evolution. Philos Technol 29(4):373–392

Chater N, Vitányi P (2003) Simplicity: a unifying principle in cognitive science? Trends Cogn Sci 7(1):19–22

Christian B, Griffiths T (2016) Algorithms to live by: the computer science of human decisions. Macmillan

Claidière N, Amedon GK-k, André J-B, Kirby S, Smith K, Sperber D, Fagot J (2018) Convergent transformation and selection in cultural evolution. Evol Hum Behav 39(2):191–202

Cover TM, Thomas JA (2012) Elements of information theory. John Wiley & Sons

Creanza N, Kolodny O, Feldman MW (2017) Greater than the sum of its parts? Modelling population contact and interaction of cultural repertoires. J R Soc Interface 14(130):20170171

Culbertson J, Kirby S (2016) Simplicity and specificity in language: domain-general biases have domain-specific effects. Front Psychol 6:1964

Darwin C (1859) On the origin of species. John Murray

Dean LG, Vale GL, Laland KN, Flynn E, Kendal RL (2014) Human cumulative culture: a comparative perspective. Biol Rev 89(2):284–301

Derex M, Perreault C, Boyd R (2018) Divide and conquer: intermediate levels of population fragmentation maximize cultural accumulation. Philos Trans R Soc B 373(1743):20170062

Dew N, Sarasvathy SD, Venkataraman S (2004) The economic implications of exaptation. J Evol Econ 14(1):69–84

Emery NJ, Clayton NS (2004) The mentality of crows: convergent evolution of intelligence in corvids and apes. Science 306(5703):1903–1907

Enquist M, Eriksson K, Ghirlanda S (2007) Critical social learning: a solution to Rogers's paradox of nonadaptive culture. Am Anthropol 109(4):727–734

Enquist M, Ghirlanda S, Eriksson K (2011) Modelling the evolution and diversity of cumulative culture. Philos Trans R Soc B 366(1563):412–423

Ferdinand VA (2015) Inductive evolution: cognition, culture, and regularity in language. Unpublished Doctoral Dissertation

Gergely G, Csibra G (2006) Sylvia’s recipe: the role of imitation and pedagogy in the transmission of cultural knowledge. In: Roots of human sociality: culture, cognition, and human interaction. pp 229–255

Gigerenzer G, Gaissmaier W (2011) Heuristic decision making. Annu Rev Psychol 62:451–482

Gould SJ, Vrba ES (1982) Exaptation: a missing term in the science of form. Paleobiology 8(1):4–15

Griffiths TL, Kalish ML (2007) Language evolution by iterated learning with bayesian agents. Cogn Sci 31(3):441–480

Henrich J (2004) Demography and cultural evolution: how adaptive cultural processes can produce maladaptive losses: the Tasmanian case. Am Antiquity 69(2):197–214

Henrich J (2015) The secret of our success: how culture is driving human evolution, domesticating our species, and making us smarter. Princeton University Press

Heyes CM (1993) Imitation, culture and cognition. Anim Behav 46(5):999–1010

Hunt GR, Gray RD (2004) Direct observations of pandanus-tool manufacture and use by a New Caledonian crow (Corvus moneduloides). Anim Cogn 7(2):114–120

Johnson S (2011) Where good ideas come from: the natural history of innovation. Penguin

Kauffman SA (1993) The origins of order: self-organization and selection in evolution. Oxford University Press

Kawamura S (1959) The process of sub-culture propagation among Japanese macaques. Primates 2(1):43–60

Kirby S, Cornish H, Smith K (2008) Cumulative cultural evolution in the laboratory: an experimental approach to the origins of structure in human language. Proc Natl Acad Sci 105(31):10681–10686

Kirby S, Dowman M, Griffiths TL (2007) Innateness and culture in the evolution of language. Proc Natl Acad Sci 104(12):5241–5245

Kirby S, Griffiths T, Smith K (2014) Iterated learning and the evolution of language. Curr Opin Neurobiol 28:108–114

Kirby S, Hurford JR (2002) The emergence of linguistic structure: an overview of the iterated learning model. In: Simulating the evolution of language. Springer, pp 121–147

Kolodny O, Creanza N, Feldman MW (2015) Evolution in leaps: the punctuated accumulation and loss of cultural innovations. Proc Natl Acad Sci 112(49):E6762–E6769

Krützen M, Mann J, Heithaus MR, Connor RC, Bejder L, Sherwin WB (2005) Cultural transmission of tool use in bottlenose dolphins. Proc Natl Acad Sci 102(25):8939–8943

Laland KN (2018) Darwin’s unfinished symphony: how culture made the human mind. Princeton University Press

Lass R (1990) How to do things with junk: exaptation in language evolution. J Linguist 26(1):79–102

Lewandowsky S, Griffiths TL, Kalish ML (2009) The wisdom of individuals: exploring people’s knowledge about everyday events using iterated learning. Cogn Sci 33(6):969–998

Lewis HM, Laland KN (2012) Transmission fidelity is the key to the build-up of cumulative culture. Philos Trans R Soc B 367(1599):2171–2180

Li M, Vitányi PMB (2008) An introduction to Kolmogorov complexity and its applications, 3rd edn. Springer-Verlag, New York

Mastrogiorgio M, Gilsing V (2016) Innovation through exaptation and its determinants: the role of technological complexity, analogy making and patent scope. Res Policy 45(7):1419–1435

Mayfield JE (2013) The engine of complexity: evolution as computation. Columbia University Press

Mesoudi A (2011) Variable cultural acquisition costs constrain cumulative cultural evolution. PLoS ONE 6(3):e18239

Mesoudi A, Thornton A (2018) What is cumulative cultural evolution? Proc R Soc B 285(1880):20180712

Miton H, Charbonneau M (2018) Cumulative culture in the laboratory: methodological and theoretical challenges. Proc R Soc B 285(1879):20180677

Mokyr J (1998) Induced technical innovation and medical history: an evolutionary approach. J Evol Econ 8(2):119–137

Morin O (2016) How traditions live and die. Oxford University Press

Penn DC, Povinelli DJ (2007) Causal cognition in human and nonhuman animals: a comparative, critical review. Annu Rev Psychol 58:97–118

Piantadosi ST, Cantlon JF (2017) True numerical cognition in the wild. Psychol Sci 28(4):462–469

Powell A, Shennan S, Thomas MG (2009) Late Pleistocene demography and the appearance of modern human behavior. Science 324(5932):1298–1301

Reali F, Griffiths TL (2009) The evolution of frequency distributions: relating regularization to inductive biases through iterated learning. Cognition 111(3):317–328

Richerson PJ, Boyd R (2005) Not by genes alone: how culture transformed human evolution. University of Chicago Press

Sasaki T, Biro D (2017) Cumulative culture can emerge from collective intelligence in animal groups. Nat Commun 8:15049

Schumpeter JA (1939) Business cycles: a theoretical, historical, and statistical analysis of the capitalist process (Vol. 2). McGraw-Hill, New York

Shannon CE, Weaver W (1949) The mathematical theory of communication. University of Illinois Press

Solé RV, Valverde S, Casals MR, Kauffman SA, Farmer D, Eldredge N (2013) The evolutionary ecology of technological innovations. Complexity 18(4):15–27

Sperber D (1996) Explaining culture: a naturalistic approach. Blackwell, Oxford

Tennie C, Call J, Tomasello M (2009) Ratcheting up the ratchet: on the evolution of cumulative culture. Philos Trans R Soc B 364(1528):2405–2415

Tomasello M (2009) The cultural origins of human cognition. Harvard University Press

Tomasello M, Kruger AC, Ratner HH (1993) Cultural learning. Behav Brain Sci 16(3):495–511

Villani M, Bonacini S, Ferrari D, Serra R, Lane D (2007) An agent based model of exaptive processes. Eur Manag Rev 4(3):141–151

Watts DJ, Strogatz SH (1998) Collective dynamics of ‘small-world’ networks. Nature 393(6684):440–442

Whiten A, Goodall J, McGrew WC, Nishida T, Reynolds V, Sugiyama Y, Boesch C (1999) Cultures in chimpanzees. Nature 399(6737):682–685

Zwirner E, Thornton A (2015) Cognitive requirements of cumulative culture: teaching is useful but not essential. Sci Rep 5:16781

Acknowledgements

The author would like to thank Maria Brackin, Olivier Morin, and Oleg Sobchuk for helpful and insightful comments on earlier versions of this paper.

Ethics declarations

Competing interests

The author declares no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Winters, J. Escaping optimization traps: the role of cultural adaptation and cultural exaptation in facilitating open-ended cumulative dynamics. Palgrave Commun 5, 149 (2019). https://doi.org/10.1057/s41599-019-0361-3

DOI: https://doi.org/10.1057/s41599-019-0361-3
