Abstract

Photonic neural networks (PNNs) are remarkable analogue artificial intelligence accelerators that compute with photons instead of electrons, offering low latency, high energy efficiency and high parallelism. However, existing training approaches cannot address the extensive accumulation of systematic errors in large-scale PNNs, which considerably degrades model performance in physical systems. Here we propose dual adaptive training (DAT), which allows a PNN model to adapt to substantial systematic errors and preserve its performance during deployment. By introducing systematic error prediction networks (SEPNs) with task-similarity joint optimization, DAT achieves high-similarity mapping between the PNN numerical models and the physical systems, as well as highly accurate gradient calculations during dual backpropagation training. We validated the effectiveness of DAT using diffractive and interference-based PNNs on image classification tasks. Dual adaptive training successfully trained large-scale PNNs under major systematic errors and achieved high classification accuracies. The numerical and experimental results further demonstrated its superior performance over state-of-the-art in situ training approaches. Dual adaptive training provides critical support for constructing large-scale PNNs with advanced architectures and can be generalized to other types of artificial intelligence systems with analogue computing errors.
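The abstract's core idea, a numerical model corrected by a learned error predictor and trained jointly for similarity to the hardware and for the task, can be illustrated with a deliberately tiny toy. The sketch below is not the authors' DAT algorithm. It assumes a single tanh layer, treats a hidden matrix `E` as the systematic error of a simulated "physical" system, and uses a linear correction `S` as a hypothetical stand-in for an SEPN. Each step computes a similarity gradient that pulls the numerical model towards the measured output and a task gradient that backpropagates the measured residual through the corrected numerical model.

```python
import numpy as np


def toy_dual_training(steps=1000, lr=0.2, seed=0):
    """Toy illustration only: hypothetical linear error predictor S, tanh 'hardware'."""
    rng = np.random.default_rng(seed)
    n_in, n_out, batch = 4, 3, 64
    x = rng.normal(size=(batch, n_in))
    T = rng.normal(size=(n_out, n_in))          # teacher weights defining the task
    t = np.tanh(x @ T.T)                        # task targets
    E = 0.3 * rng.normal(size=(n_out, n_in))    # hidden systematic error (unknown to training)
    W = np.zeros((n_out, n_in))                 # trainable physical parameters
    S = np.zeros((n_out, n_in))                 # hypothetical error-predictor parameters

    def physical(W):
        # Stand-in for a hardware measurement: the programmed W is perturbed by E.
        return np.tanh(x @ (W + E).T)

    def numerical(W, S):
        # Numerical model corrected by the learned error prediction S.
        return np.tanh(x @ (W + S).T)

    def losses(W, S):
        p, m = physical(W), numerical(W, S)
        task = 0.5 * np.mean(np.sum((p - t) ** 2, axis=1))
        sim = 0.5 * np.mean(np.sum((m - p) ** 2, axis=1))
        return task, sim

    initial = losses(W, S)
    for _ in range(steps):
        p = physical(W)            # measured output
        m = numerical(W, S)        # simulated output
        dtanh = 1.0 - m ** 2       # local derivative taken from the numerical model
        # Similarity gradient: move S so the model reproduces the measurement.
        grad_S = ((m - p) * dtanh).T @ x / batch
        # Task gradient: measured residual backpropagated through the model.
        grad_W = ((p - t) * dtanh).T @ x / batch
        S -= lr * grad_S
        W -= lr * grad_W
    return initial, losses(W, S)
```

Calling `toy_dual_training()` returns the (task, similarity) losses before and after training. Both should fall, because `S` learns to track `E`, which keeps the model-side derivative used for the task update close to that of the simulated hardware.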

Data availability

The MNIST dataset is available at http://yann.lecun.com/exdb/mnist/, and the Fashion-MNIST (FMNIST) dataset is available at https://github.com/zalandoresearch/fashion-mnist. Other data needed to evaluate the conclusions are present in the main text or the Supplementary Information. Source Data are provided with this paper.

Code availability

The code for training the MPNN with DAT and the pre-trained models for replication are available at https://github.com/THPCILab/DAT_MPNN (ref. 42; https://doi.org/10.5281/zenodo.8257385).

References

LeCun, Y., Bengio, Y. & Hinton, G. Deep learning. Nature 521, 436–444 (2015).

Bahdanau, D., Cho, K. & Bengio, Y. Neural machine translation by jointly learning to align and translate. In 3rd International Conference on Learning Representations (2015).

Rawat, W. & Wang, Z. Deep convolutional neural networks for image classification: a comprehensive review. Neural Comput. 29, 2352–2449 (2017).

Capper, D. et al. DNA methylation-based classification of central nervous system tumours. Nature 555, 469–474 (2018).

Torlai, G. et al. Neural-network quantum state tomography. Nat. Phys. 14, 447–450 (2018).

Xu, X. et al. Self-calibrating programmable photonic integrated circuits. Nat. Photon. 16, 595–602 (2022).

Patterson, D. et al. Carbon emissions and large neural network training. Preprint at https://arxiv.org/abs/2104.10350 (2021).

Shainline, J. M., Buckley, S. M., Mirin, R. P. & Nam, S. W. Superconducting optoelectronic circuits for neuromorphic computing. Phys. Rev. Appl. 7, 034013 (2017).

Lin, X. et al. All-optical machine learning using diffractive deep neural networks. Science 361, 1004–1008 (2018).

Yan, T. et al. Fourier-space diffractive deep neural network. Phys. Rev. Lett. 123, 023901 (2019).

Zhou, T. et al. Large-scale neuromorphic optoelectronic computing with a reconfigurable diffractive processing unit. Nat. Photon. 15, 367–373 (2021).

Shen, Y. et al. Deep learning with coherent nanophotonic circuits. Nat. Photon. 11, 441–446 (2017).

Hughes, T. W., England, R. J. & Fan, S. Reconfigurable photonic circuit for controlled power delivery to laser-driven accelerators on a chip. Phys. Rev. Appl. 11, 064014 (2019).

Williamson, I. A. et al. Reprogrammable electro-optic nonlinear activation functions for optical neural networks. IEEE J. Sel. Top. Quantum Electron. 26, 1–12 (2019).

Xu, X. et al. 11 TOPS photonic convolutional accelerator for optical neural networks. Nature 589, 44–51 (2021).

Feldmann, J. et al. Parallel convolutional processing using an integrated photonic tensor core. Nature 589, 52–58 (2021).

Chang, J., Sitzmann, V., Dun, X., Heidrich, W. & Wetzstein, G. Hybrid optical-electronic convolutional neural networks with optimized diffractive optics for image classification. Sci. Rep. 8, 1–10 (2018).

Miscuglio, M. et al. Massively parallel amplitude-only Fourier neural network. Optica 7, 1812–1819 (2020).

Feldmann, J., Youngblood, N., Wright, C. D., Bhaskaran, H. & Pernice, W. H. All-optical spiking neurosynaptic networks with self-learning capabilities. Nature 569, 208–214 (2019).

Hamerly, R., Bernstein, L., Sludds, A., Soljačić, M. & Englund, D. Large-scale optical neural networks based on photoelectric multiplication. Phys. Rev. X 9, 021032 (2019).

Chakraborty, I., Saha, G. & Roy, K. Photonic in-memory computing primitive for spiking neural networks using phase-change materials. Phys. Rev. Appl. 11, 014063 (2019).

Hughes, T. W., Williamson, I. A., Minkov, M. & Fan, S. Wave physics as an analog recurrent neural network. Sci. Adv. 5, 6946 (2019).

Bueno, J. et al. Reinforcement learning in a large-scale photonic recurrent neural network. Optica 5, 756–760 (2018).

Van der Sande, G., Brunner, D. & Soriano, M. C. Advances in photonic reservoir computing. Nanophotonics 6, 561–576 (2017).

Larger, L. et al. High-speed photonic reservoir computing using a time-delay-based architecture: million words per second classification. Phys. Rev. X 7, 011015 (2017).

Brunner, D. et al. Tutorial: Photonic neural networks in delay systems. J. Appl. Phys. 124, 152004 (2018).

LeCun, Y., Bottou, L., Bengio, Y. & Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 86, 2278–2324 (1998).

Zuo, Y. et al. All-optical neural network with nonlinear activation functions. Optica 6, 1132–1137 (2019).

Wright, L. G. et al. Deep physical neural networks trained with backpropagation. Nature 601, 549–555 (2022).

Hughes, T. W., Minkov, M., Shi, Y. & Fan, S. Training of photonic neural networks through in situ backpropagation and gradient measurement. Optica 5, 864–871 (2018).

Zhou, T. et al. In situ optical backpropagation training of diffractive optical neural networks. Photon. Res. 8, 940–953 (2020).

Filipovich, M. J. et al. Silicon photonic architecture for training deep neural networks with direct feedback alignment. Optica 9, 1323–1332 (2022).

Gu, J. et al. L2ight: enabling on-chip learning for optical neural networks via efficient in-situ subspace optimization. In Advances in Neural Information Processing Systems Vol. 34, 8649–8661 (NeurIPS, 2021).

Spall, J., Guo, X. & Lvovsky, A. I. Hybrid training of optical neural networks. Optica 9, 803–811 (2022).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. In Proc. IEEE Conference on Computer Vision and Pattern Recognition 770–778 (IEEE, 2016).

Ronneberger, O., Fischer, P. & Brox, T. U-Net: convolutional networks for biomedical image segmentation. In International Conference on Medical Image Computing and Computer-assisted Intervention 234–241 (Springer, 2015).

Xiao, H., Rasul, K. & Vollgraf, R. Fashion-MNIST: a novel image dataset for benchmarking machine learning algorithms. Preprint at https://arxiv.org/abs/1708.07747 (2017).

Pai, S., Bartlett, B., Solgaard, O. & Miller, D. A. Matrix optimization on universal unitary photonic devices. Phys. Rev. Appl. 11, 064044 (2019).

Pai, S. et al. Parallel programming of an arbitrary feedforward photonic network. IEEE J. Sel. Top. Quantum Electron. 26, 1–13 (2020).

Trabelsi, C. et al. Deep complex networks. In 6th International Conference on Learning Representations (2018).

Kingma, D. P. & Ba, J. Adam: a method for stochastic optimization. In 3rd International Conference on Learning Representations (2015).

Zheng, Z. et al. Dual adaptive training of photonic neural networks. Zenodo https://doi.org/10.5281/zenodo.8257385 (2023).

Clements, W. R., Humphreys, P. C., Metcalf, B. J., Kolthammer, W. S. & Walmsley, I. A. Optimal design for universal multiport interferometers. Optica 3, 1460–1465 (2016).

Acknowledgements

This work is supported by the National Key Research and Development Program of China through grant no. 2021ZD0109902 (to X.L.), and the National Natural Science Foundation of China through grant nos. 62275139 (to X.L.), 61932022 and 62250055 (to H.X.).

Author information

Contributions

X.L. and H.X. initiated and supervised the project. X.L., Z.Z. and Z.D. conceived the research. X.L. designed the methods. Z.Z., Z.D. and H.C. implemented the algorithm and conducted experiments. Z.Z., Z.D., H.C., R.Y., S.G. and H.Z. processed the data. X.L., Z.Z., Z.D., H.C. and R.Y. analysed and interpreted the results. All authors prepared the manuscript and discussed the research.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Machine Intelligence thanks the anonymous reviewers for their contribution to the peer review of this work. Primary Handling Editor: Mirko Pieropan, in collaboration with the Nature Machine Intelligence team.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data

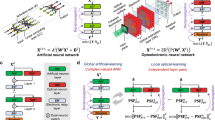

Extended Data Fig. 1 Procedure of DAT with internal states for the DPNN in the nth PNN block.

The flow charts with blue and yellow backgrounds denote the forward inferences in the physical system and the numerical model, respectively. The four steps of DAT with internal states, labelled by dotted arrows in four different colours, are repeated over all training samples to minimize the loss functions until convergence, yielding the numerical model and the physical parameters of the system, that is, the phase modulation matrices \(\mathrm{M}_{ni}\) for \(i = 1, 2, 3\) and \(1 \le n \le N\). See Supplementary Section 2 for a detailed description.

Extended Data Fig. 2 Procedure of DAT without internal states for the MPNN.

The flow charts with blue and yellow backgrounds denote the forward inferences in the physical system and the numerical model, respectively. The four steps of DAT without internal states, labelled by dotted arrows in four different colours, are repeated over all training samples to minimize the loss functions and optimize the physical model, that is, the phase coefficients \(\Theta_n\) and \(\Phi_n\) of the nth photonic mesh for \(1 \le n \le N\). See Supplementary Section 10 for a detailed description.
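The MPNN is parametrized by the phase coefficients of its photonic meshes. As an illustration only, the sketch below assumes the Mach–Zehnder interferometer (MZI) transfer matrix convention of Clements et al. (cited in the reference list) and a rectangular layout in which columns alternate between even and odd mode pairs. The paper's exact parametrization of \(\Theta_n\) and \(\Phi_n\) is not given in this section, the output phase screen needed for a fully universal mesh is omitted, and `mzi` and `rectangular_mesh` are hypothetical helper names.

```python
import numpy as np


def mzi(theta, phi):
    """2x2 MZI transfer matrix in the convention of Clements et al. (2016)."""
    return np.array([[np.exp(1j * phi) * np.cos(theta), -np.sin(theta)],
                     [np.exp(1j * phi) * np.sin(theta), np.cos(theta)]])


def rectangular_mesh(thetas, phis, n):
    """Compose n(n-1)/2 MZIs into an n x n unitary, column by column.

    Even columns couple modes (0,1), (2,3), ...; odd columns couple (1,2), (3,4), ...
    The phases are consumed in that order from the flat arrays thetas and phis.
    """
    U = np.eye(n, dtype=complex)
    k = 0
    for col in range(n):
        for m in range(col % 2, n - 1, 2):
            T = np.eye(n, dtype=complex)
            T[m:m + 2, m:m + 2] = mzi(thetas[k], phis[k])  # embed the 2x2 block
            U = T @ U                                       # later columns act after earlier ones
            k += 1
    return U


rng = np.random.default_rng(1)
n = 4
k = n * (n - 1) // 2                       # number of MZIs in the rectangular mesh
U = rectangular_mesh(rng.uniform(0, np.pi / 2, k), rng.uniform(0, 2 * np.pi, k), n)
```

Because every MZI block is unitary, the composed mesh is unitary for any choice of phases, which is what makes phase coefficients a convenient set of trainable physical parameters.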

Extended Data Fig. 3 Architecture of the SEPN constructed with a complex-valued mini-UNet.

See Methods for a detailed description.
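The SEPN is built as a complex-valued mini-UNet. One standard way to build complex-valued layers from real-valued operations, following the deep complex networks of Trabelsi et al. (cited in the reference list), is to split a complex convolution into four real convolutions. The sketch below shows only that building block for a single channel with 'valid' padding; the mini-UNet layout, channel counts and activations are not reproduced, and `conv2d_valid` is a hypothetical helper written for clarity rather than speed.

```python
import numpy as np


def conv2d_valid(x, k):
    """Plain 2D cross-correlation with 'valid' padding (real or complex inputs)."""
    kh, kw = k.shape
    oh, ow = x.shape[0] - kh + 1, x.shape[1] - kw + 1
    out = np.zeros((oh, ow), dtype=np.result_type(x, k))
    for i in range(oh):
        for j in range(ow):
            out[i, j] = np.sum(x[i:i + kh, j:j + kw] * k)
    return out


def complex_conv2d(x, k):
    """Complex convolution from four real ones:
    (A + iB) * (x_r + i x_i) = (A*x_r - B*x_i) + i(A*x_i + B*x_r).
    """
    a, b = k.real, k.imag
    xr, xi = x.real, x.imag
    real = conv2d_valid(xr, a) - conv2d_valid(xi, b)
    imag = conv2d_valid(xi, a) + conv2d_valid(xr, b)
    return real + 1j * imag


rng = np.random.default_rng(0)
x = rng.normal(size=(8, 8)) + 1j * rng.normal(size=(8, 8))   # complex optical field
k = rng.normal(size=(3, 3)) + 1j * rng.normal(size=(3, 3))   # complex kernel
y = complex_conv2d(x, k)
```

Splitting the operation this way lets a complex layer run on real-valued deep learning primitives while still representing the amplitude and phase of an optical field.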

Extended Data Fig. 4 Training DPNN under joint systematic errors for the MNIST and FMNIST classification.

The performance of DAT is evaluated on the DPNN-S and DPNN-M architectures and compared with physics-aware training (PAT) and direct deployment of the in silico-trained model under the joint systematic error configurations listed in c. The first DPNN-M configuration listed in c was selected to visualize the internal states for the example digit ‘7’ (a), the phase modulation layers (b) and the confusion matrices (d) for MNIST classification. See Supplementary Section 6 for a detailed description.

Extended Data Fig. 5 Training DPNNs under three types of systematic errors for the FMNIST classification.

The performance of DAT for DPNN-S (a) and DPNN-M (b) is compared with PAT and direct deployment of in silico-trained models under different amounts of systematic errors. DPNN-S is trained only without internal states (DAT w/o IS), whereas DPNN-M is trained both with (DAT w/ IS) and without internal states.

Extended Data Fig. 6 Convergence plots of DPNN-M evaluated with the blind test accuracy on the MNIST dataset.

a and b show the training results under mild individual errors; c, d and e show the results under severe individual errors; and f shows the result under joint errors. The error configurations are given above each subfigure. Because the two methods differ in convergence speed and stability, the numerical comparisons for DPNN-M report the test accuracy at the tenth epoch for DAT and the highest test accuracy over fifty epochs for PAT. DAT outperforms PAT, with a more robust and stable training process for optimizing DPNN-M under both mild and severe errors.

Extended Data Fig. 7 Illustration of constantly changing systematic errors.

a, Experimental DPNN prototypes, where L1, L2 and L3 are relay lenses, POL1 and POL2 are polarizers, and NPBS1 and NPBS2 are non-polarizing beamsplitters. b, Measurement variation of three points on the CCD sensor over 30 successive frames for the physical DPNN-S. c, Variation over six days of the test accuracy of the in silico-trained DPNN-C when directly deployed to the physical system with geometric calibration. The fluctuations are caused by recording errors and XY-plane shift errors at the CCD sensor. The event ‘Calib.’ indicates that the CCD sensor was geometrically calibrated with affine transformations. See Supplementary Section 7 for a detailed description.
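The caption states that the CCD sensor was geometrically calibrated with affine transformations; the actual procedure is described in Supplementary Section 7, not here. As a generic sketch, assuming matched pairs of designed and measured spot positions are available, a 2D affine map can be fitted by linear least squares in homogeneous coordinates. `fit_affine` and `apply_affine` are hypothetical helper names.

```python
import numpy as np


def fit_affine(src, dst):
    """Least-squares 2D affine map A (2x3) such that dst ≈ src @ A[:, :2].T + A[:, 2]."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    X = np.hstack([src, np.ones((len(src), 1))])    # (n, 3) homogeneous coordinates
    sol, *_ = np.linalg.lstsq(X, dst, rcond=None)   # (3, 2) solution of X @ sol ≈ dst
    return sol.T                                     # (2, 3) affine matrix


def apply_affine(A, pts):
    """Map (n, 2) points through the affine matrix A."""
    pts = np.asarray(pts, dtype=float)
    return pts @ A[:, :2].T + A[:, 2]


# Hypothetical example: small rotation/scale plus an XY-plane shift of the sensor.
A_true = np.array([[1.02, -0.03, 4.0],
                   [0.025, 0.98, -2.5]])
src = np.array([[0, 0], [100, 0], [0, 100], [100, 100], [50, 30]], dtype=float)
dst = apply_affine(A_true, src)
A_fit = fit_affine(src, dst)
```

At least three non-collinear point pairs are needed to determine the six affine parameters; using more pairs, as here, averages out measurement noise in the spot positions.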

Extended Data Fig. 8 Performance evaluation of SEPNs for a 3-block DPNN-C constructed by the physical system.

a, Internal states and final output for the input digit ‘5’ from the MNIST test set (left), and pixel-level statistics of the output differences between the numerical model and the physical system for blocks 1, 2 and 3 with and without SEPNs (right). b, Output differences averaged over all samples in the MNIST test set with and without SEPNs. See Supplementary Section 8 for a detailed description.

Extended Data Fig. 9 Comparison of DAT performance with all, partial and no internal states for the 3-layer MPNN on MNIST classification.

DAT is implemented with each SEPN containing 9,648 parameters in the unitary mode. The performance of DAT is evaluated with all internal states P1 and P2 (2 IS), with one internal state P2 (1 IS) and without internal states. The classification accuracy improves as more internal states are measured, especially under severe systematic errors.

Extended Data Fig. 10 Computational complexities of different training methods.

The computational complexities, evaluated with FLOPs, of AT, PAT, and DAT with internal states (IS) for training DPNNs and MPNNs are compared under different input sizes (a) and PNN block numbers (b). The legend ‘AT’ represents adaptive training, and the postfix ‘(A)’ indicates the use of the angular spectrum method to implement diffractive weighted interconnections. The configurations used in the numerical or physical experiments are indicated by dotted vertical lines. The curves are plotted based on Supplementary Tables 1 and 2. See Supplementary Section 14 for a detailed description.
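The postfix ‘(A)’ refers to implementing diffractive weighted interconnections with the angular spectrum method. A minimal NumPy sketch of that method is shown below, assuming a uniformly sampled complex field, free-space propagation and evanescent components simply discarded. It is paired with a rough FLOP estimate based on the common rule of thumb of about 5M log2 M real FLOPs per M-point complex FFT plus 6 FLOPs per complex multiplication. The helper names are hypothetical, and the estimate is not the accounting used in Supplementary Tables 1 and 2.

```python
import numpy as np


def angular_spectrum(u0, wavelength, dx, z):
    """Propagate a sampled complex field u0 (pitch dx) by distance z:
    U_z = IFFT2(FFT2(u0) * H), with H = exp(i kz z) for propagating waves.
    """
    fx = np.fft.fftfreq(u0.shape[0], d=dx)          # spatial frequencies along axis 0
    fy = np.fft.fftfreq(u0.shape[1], d=dx)          # spatial frequencies along axis 1
    FX, FY = np.meshgrid(fx, fy, indexing="ij")
    arg = 1.0 - (wavelength * FX) ** 2 - (wavelength * FY) ** 2
    kz = 2 * np.pi / wavelength * np.sqrt(np.maximum(arg, 0.0))
    H = np.where(arg > 0, np.exp(1j * kz * z), 0.0)  # evanescent components discarded
    return np.fft.ifft2(np.fft.fft2(u0) * H)


def approx_fft_propagation_flops(shape):
    """Rough FLOP count: forward + inverse FFT (5 M log2 M each) + complex multiply by H."""
    m = shape[0] * shape[1]
    fft_flops = 5 * m * np.log2(m)
    return int(2 * fft_flops + 6 * m)


rng = np.random.default_rng(0)
u0 = rng.normal(size=(64, 64)) + 1j * rng.normal(size=(64, 64))
u1 = angular_spectrum(u0, wavelength=532e-9, dx=8e-6, z=0.05)
```

Because the cost is dominated by the two FFTs, it grows roughly as M log M in the number of sampled pixels M, which is one reason the input size appears as an axis in the complexity comparison.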

Supplementary information

Supplementary Information

Supplementary Sections 1–16, Algorithms 1 and 2, Figs. 1–4 and Tables 1 and 2.

Supplementary Data 1

Raw data obtained from the CCD detector in physical experiments for illustrating Fig. 3, Extended Data Fig. 8 and Supplementary Figs. 1 and 3.

Source data

Source Data Fig. 2

Statistical source data.

Source Data Fig. 3

Statistical source data.

Source Data Fig. 4

Statistical source data.

Source Data Extended Data Fig. 5

Statistical source data.

Source Data Extended Data Fig. 6

Statistical source data.

Source Data Extended Data Fig. 7

Statistical source data.

Source Data Extended Data Fig. 8

Statistical source data.

Source Data Extended Data Fig. 9

Statistical source data.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Zheng, Z., Duan, Z., Chen, H. et al. Dual adaptive training of photonic neural networks. Nat Mach Intell 5, 1119–1129 (2023). https://doi.org/10.1038/s42256-023-00723-4
