Abstract

The study primarily aimed to understand whether individual factors could predict how people perceive and evaluate artworks that are perceived to be produced by AI. Additionally, the study attempted to investigate and confirm the existence of a negative bias toward AI-generated artworks and to reveal possible individual factors predicting such negative bias. A total of 201 participants completed a survey, rating images on liking, perceived positive emotion, and believed human or AI origin. The findings of the study showed that some individual characteristics as creative personal identity and openness to experience personality influence how people perceive the presented artworks in function of their believed source. Participants were unable to consistently distinguish between human and AI-created images. Furthermore, despite generally preferring the AI-generated artworks over human-made ones, the participants displayed a negative bias against AI-generated artworks when subjective perception of source attribution was considered, thus rating as less preferable the artworks perceived more as AI-generated, independently on their true source. Our findings hold potential value for comprehending the acceptability of products generated by AI technology.

Similar content being viewed by others

Introduction

The rapid advancement of artificial intelligence (AI) has sparked, especially in recent years, a significant debate about its impact on various industries and the future of work and society1,2. The significant rise of artificial intelligence systems in recent technological breakthroughs has led to our era being dubbed the “Age of AI”3 and the “Fourth Industrial Revolution”4. While AI has already made its presence felt in fields such as education5,6, healthcare7, banking and finance8, retail9, and transportation10, its use in other fields such as communication and art 11,12 has also been on the rise particularly with the release of powerful and user friendly generative AI tools like ChatGPT, developed by OpenAI or the Bard model developed by Google, as well as other tools specialized in text-to-images creation as for example DALL-E or MidJourney13,14.

The ongoing debate on the relationship between humans, machines, and art is only a recent development of a discussion that emerged during the first half of the 20th century. Already in 1935 Walter Benjamin examined the impact of technical reproducibility on art in his influential essay “The Work of Art in the Age of Mechanical Reproduction”15. Benjamin argued that mechanical reproduction techniques, such as photography and film, fundamentally altered the nature of art by diminishing the unique presence or “aura” of the original artwork. However, differently from the technically produced artworks mentioned by Benjamin, generative AI systems have evolved beyond merely copying existing artworks (e.g., art prints) or reproducing images with human assistance (photography), and they currently possess the capability to create seemingly “unique” (at least from a statistical point of view16,17) art pieces.

The concept of machines generating original creative and informative content, including text and artwork, raises concerns regarding the relationship between humans and machines, as well as the future of creativity18. Although AI technology has automated certain aspects of the creative process, the resulting output has been often reported to lack true originality19. Such limitation can be attributed to AI systems' reliance on input data, which constrains their capacity for innovation and novel expression20. Despite this, recent research has shown that AI performs excellently tasks commonly employed to assess human creativity21. Furthermore, emerging research demonstrates that AI can engage in authentic artistic creation that transcends the mere imitation of existing styles22,23,24.

AI has not only produced paintings reminiscent of famous artists3 but also developed original artistic styles4 and art pieces of remarkable quality, that have been purchased for significant prices at auctions25. AI has participated in a variety of artistic activities, such as composing original songs26 and poetry27. Previous research has shown that AI-generated works can be indistinguishable from human-made art27,28, and blind tests have revealed that people assign high artistic value to them29. Consequently, nowadays individuals increasingly face scenarios akin to the Turing test30, where they may struggle to differentiate between AI-generated and human-generated art or music.

Advancement in AI technology raises concerns about unemployment, the extinction of humankind31,32,33, and the erosion of humans' privileged and unique position in the world34. A widespread dissemination of AI-generated art has the potential to challenge people's beliefs about human nature and artistic creativity, with potential ethical and social consequences35. These perceived threats are likely due to AI's ability to shake humans rooted anthropocentric views. The term Anthropocentrism refers to the perceived precedence of the human beings over other species36, and it is a widespread bias often evident even in children, although it is more likely to be culturally shaped than innate37. This bias, also referred to as human supremacy or human exceptionalism, influences many aspects of life, such as biological thinking38, ecology39, animal rights40, and in general how humans interact with the environment41. Schmitt42 suggested that a negative bias towards machines could hinder widespread AI acceptance, particularly in AI-generated activities that have been historically believed to be exclusive domain of humans, and AI art may challenge anthropocentric beliefs even more than other AI activities, which often involve analytical or computational skills. People may be less inclined to accept AI involvement in more human-like tasks43 or in products with higher symbolic value44. Artistic creativity is often considered a core human feature22,45,46, so the emergence of AI art could threaten people's anthropocentric worldview. Speciesism, an idea strongly associated with anthropocentrism47, has also been individuated in the literature as a possible obstacle for the acceptance of AI.

As a result of such possible threat to their worldview, people may tend to defend their anthropocentric beliefs by downgrading the artistic value of AI-generated art, following a pattern of behavior observed in the evaluation of other moral contexts48,49. Individuals frequently remember, create, and assess information in a manner consistent with their present motivations50. Considering that AI art can pose a significant psychological challenge, impacting people's ontological security, individuals are likely to be biased in processing information in this context. In addition, the perception of AI-generated content may be influenced by the biases and expectations of human evaluators, who may attribute more value to human-created works due to their assumptions about the inherent qualities of human creativity, or because humans tend to value objects and product more when they believe that they somehow connect them with other humans via their manual work51. In ambiguous situations where stimuli can be interpreted in multiple ways, biased information processing driven by motivation is more likely52, and artistic production provides a high degree of such interpretational flexibility. Art experiences are highly subjective, making them susceptible to motivation-driven biases and less measurable attribute, enabling the individuals to exhibit biased emotional responses to AI-generated art without directly violating explicit objectivity and measurable rules. In a recent study53 it was found that humans have a strong bias against AI-made artworks, which are perceived as less creative and induce less sense of awe compared to human-made art and that the bias is stronger among individuals with stronger anthropocentric beliefs. The authors proposed that the systematic devaluation of AI-made art is a response to a threatened anthropocentric view that reserves creativity exclusively for humans.

In a likely future where people will encounter more and more AI-generated contents, it is increasingly important to examine how individuals perceive and evaluate these contents and identifying the factors that shape their judgments, including their beliefs about the role of AI in creative processes54. Despite the recent development and the large debate generated around AI-generated content, there is still a significant gap in our understanding of how individuals evaluate and perceive such content. It is essential to explore whether individuals can accurately differentiate between content created by humans and those generated by AI technologies and how attribution knowledge (i.e., information about the creators of the content) affects their evaluation and reception of the work. Moreover, despite recent evidences that individual factors may mediate how much an individual likes and trust AI systems55, it has not been studied how individual differences, such as personality, relationship with technology, level of education, and cultural background, may mediate the way that people perceive and analyze information that perceived as AI generated.

In the present study, the following primary, pre-registered research question was tested:

RQ1: Do individual factors predict how people positively/negatively perceive and evaluate images that are believed to be produced using artificial intelligence?

In the present study, we focus on factors that relate to personality and personal attitudes that are well established in psychological research or that have shown relationship with how individuals interact with art and technology. These variables that selected a-priori before data collection were the Big-Five personality traits56, empathy (empathy quotient)57, creative identity (creative self-efficacy and creative personal identity)58, relationship with art (art interest)59, and relationship with technology (attitudes toward media and technology)60.

H1

Higher levels of empathy (H1a), a stronger creative identity (H1b), and a deeper relationship with art (H1c) will predict a negative bias towards the stimuli that are believed to be AI-generated art. This hypothesis is grounded in the assumption that individuals who highly value human interactions (such as empathetic people) and those with a creative identity may perceive AI-generated art as less authentic or expressive compared to human-created art, leading to a negative bias. Moreover, those with a profound connection to art might view AI art as a challenge to the conventional artistic process and human creativity, further contributing to the negative bias.

H2

A positive attitude towards technology will predict a relatively more favorable view towards stimuli that are believed to be AI-generated. As people become increasingly accustomed to technology, they may be less skeptical of AI's capabilities and less apprehensive about embracing the technology.

H3

Openness, as one of the Big-Five personality traits, will have a negative correlation with a positive attitude towards art believed to be AI generated. This is because individuals with high openness tend to exhibit a greater interest in art and possess stronger creative identities, potentially fostering skepticism towards AI-generated art.

Regarding the other four personality traits (conscientiousness, extraversion, agreeableness, and emotional stability), we did not formulate specific predictions but will incorporate them as exploratory (explicitly mentioned as exploratory in the pre-registration) predictors in our analyses.

In addition, both the preliminary and the follow-up data analyses, as outlined in the pre-registered data analysis plan, enabled us to investigate the following exploratory research questions:

RQ2: Are participants able to distinguish human-made from AI-generated images? Are individual factors predicting who is better in distinguishing them?

Previous research has shown that humans may not be able any longer to distinguish human products from the products of AI27,28. For the case that this hypothesis would be supported (preliminary analyses), an adjustment to our data analysis strategy was included in the pre-registered data analysis plan, as detailed in the Method section. We did not have any initial assumptions regarding whether individual factors might influence the ability to accurately distinguish between human-made and AI-generated images.

RQ3: Do humans have a negative bias toward AI-generated art?

Recent published literature has shown that a negative bias is commonly reported against AI artworks53, therefore it is possible that the same bias will be observed in our study. Please note that the pre-registered research question was developed expecting that such bias toward AI-generated outputs exist and that may be mediated by individual characteristics. However, the present research question was not explicitly mentioned in the pre-registration of the study but considered in the data-analysis plan. Conducting the pre-registered analyses enabled us to assess whether such negative bias is evident in our study. These analyses served to examine the general perception of artworks generated by both humans and AI, and to analyze how the perceived source of the artworks influenced the scores for liking and positive emotions.

Results

Table 1 presents the descriptive statistics for the Big-Five traits and empathy and Table 2 for the TIPI and the EQ scales. The intercorrelations between the variables are reported in the Supplementary Materials file.

Paired-samples t-tests examined the difference in the dependent variables between human-generated and AI-generated images. The AI-generated images (M = 59.2, SD = 12.7) were liked more than human-generated images (M = 47.3, SD = 13.1), t(200) = 21.03, p < 0.001, d = 1.48. Similarly, The AI-generated images (M = 52.2, SD = 13.4) evoked positive emotions more than human-generated images (M = 42.3, SD = 13.7), t(200) = 20.08, p < 0.001, d = 1.42. However, the participants could not discriminate whether the images were generated by humans or AI (0 = most likely human, 100 = most likely AI). The AI-generated images (M = 41.7, SD = 11.6) were rated with small effect size as more likely to be products of humans than the human-generated images (M = 43.3, SE = 11.8), t(200) = 2.07, p = 0.040, d = 0.15. Please note that these results should be interpreted as specific for the set of images used in this study. They should not be broadly generalized to suggest that AI-generated images are universally preferred or consistently evoke more positive emotions compared to human-made artworks.

Because the participants could not discriminate between human- and AI-generated images, we did not perform separate analyses on the data from human- and AI-generated images (see also RQ2 as reported in the pre-registration). In Supplementary Materials we present also exploratory analyses which show that the above results concerning liking, positive emotions, and the participants inability to discriminate the source of the images generalizes across the included art styles (cubism, expressionism, impressionism, Japanese art, and abstract art).

Background Variables

Gender did not have any statistically significant effects on liking the images, positive emotions, or on evaluating whether the images were generated by humans or AI. However, age interacted with beliefs about human vs AI -generated nature of the images, suggesting that the older the participants were and the more likely the images were rated as generated by AI, the less it was liked, B = − 0.003, 95% CI [− 0.004, − 0.002], p < 0.001, and the less positive emotions it evoked, B = − 0.004, 95% CI [− 0.005, − 0.003], p < 0.001. Age and category interacted in showing that, the older the participants were, the more likely they were to believe that the AI-generated images were made by humans, B = 0.173, 95% CI [0.100, 0.247], p < 0.001.

Personality Variables

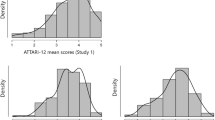

The models on liking and the origin of the images with personality traits, empathy, and beliefs about whether the images were generated by humans or AI as predictors (Table 3) showed that conscientiousness interacted with beliefs about the origin of the images such that the less conscientious the participants were, the larger the difference in liking and in positive emotions between images that were believed to be generated by humans as compared to images believed to be made by AI (Fig. 1a,d). The negative main effect showed for the variable “generated” (Table 3), indicates that the more strongly the images are believed to be generated by AI, the less they are liked and the less positive are the emotions toward them, point towards a general negative bias toward AI-generated artworks. In addition, the results for the trait emotional stability showed that the more emotionally stable the participants were, the more they liked and the more positive emotions were evoked when the images were believed to be generated by humans, compared to images believed to be produced by AI (Fig. 1b,e). Openness was related to liking the images and positivity of emotions: highly open persons liked the images and experienced positive emotions in general more than individuals on average. In addition, for highly open participants the difference in liking between images they believed were made by humans and those believed to be made by AI was larger than in participants on average (Fig. 1c).

Next, we studied the effects of personality, empathy, and the real category of the images (human vs. AI -generated) on the beliefs about whether the images were generated by humans or AI (Table 4). The intercept was at 41.7, 95% CI [37.12, 46.20], on the scale from 0 (most likely human) to 100 (most likely AI), meaning that on average the AI-generated images (= the reference category) were rated as having been generated by humans. Agreeableness interacted with category, indicating that highly agreeable persons tended to rate human-made images as more likely to be made by AI than AI-generated images, whereas for individuals low in agreeableness the pattern was the opposite (Fig. 2a). In addition, empathy interacted with category, showing that the more empathic the persons, the better they could discriminate between AI- and human-generated (Fig. 2b), although even highly empathic persons could not discriminate between AI-made and human-made images in such way that their score would have been above 50 for AI-generated images (scores > 50 indicate that the image was attributed to AI, whereas scores < 50 indicate attribution to humans).

Top: Participants’ beliefs about whether the images were generated by humans or AI (0 = most likely human, 100 = most likely AI) as a Function of Agreeableness, Empathy, Positive Attitude Towards Media and Technology Usage, and the real category of the images (AI vs. Human). Bottom: liking and positive emotions elicited by the images as a function of Creative Self-efficacy and Personal Identity, Attitudes Towards Media and Technology Usage, and beliefs about whether the images were generated by humans or AI (0 = most likely human, 100 = most likely AI).

The next models examined liking and positive emotions as a function creative self-efficacy and personal identity, attitudes and usage of media and technology, interest and exposure to art, and beliefs about the source of the images (Table 5). As detected in previous analyses (see Table 3), also the current analysis reveals a significant trend for the variable "generated" (Table 5). Specifically, the results indicate that the stronger the belief that images are produced by AI, the less favorably they are perceived, and the more negative the emotional response towards them becomes, suggesting again a negative bias towards artworks generated by AI. Participants with high creative identity liked more than people on average the images they believed to be produced by humans, compared with those believed to be generated by AI (Fig. 2d). Participants with high positive attitude (Fig. 2e,h) towards media and technology liked and experienced positive emotions for images believed to be made by AI more than people on average, whereas participants with low negative attitude (Fig. 2f,i) showed the opposite pattern. However, participants with a highly positive attitude towards media and technology also generally liked images more than people on average. In addition, the higher the multitasking attitude in media and technology usage, the more the images were liked when they were believed to be human products, compared with images thought be produced by AI (Fig. 2g).

Finally, we studied the participants’ believes on the generator of the images with the real category, creative self-efficacy and personal identity, the attitudes and usage of media and technology, and the real category (human- vs. AI-generated) of the images as predictors (Table 6). The only statistically significant effect was the category x MTUAS-A-P interaction Fig. 2c). This finding suggests that the more positive the attitude was towards media and technology, the less human-made images were believed to be AI-generated.

Discussion

The present study primarily aimed to investigate how individual factors might influence the way people perceive and evaluate artworks, particularly based on their beliefs about the origins of these images—whether they are human-made or AI-generated (RQ1). Exploratorily, drawing from the pre-registered data analysis plan, the study aimed to evaluate the participants' ability to effectively differentiate between the two image categories (Human-made or AI-generated) and identify which individual characteristics might predict their success in such differentiation (RQ2). Furthermore the study attempted to determine if participants had a negative bias toward AI-generated artworks (RQ2).

In agreement with our hypothesis (H1b), participants with a high creative identity demonstrated a stronger liking for images they believed to be produced by humans, as opposed to those they thought were generated by AI. This inclination may stem from the perception that AI-generated art lacks the authenticity or expressiveness typically associated with human-created art, resulting in a negative bias towards these creations54. Furthermore, individuals with a strong attachment to art may view AI-generated art as a threat to the traditional artistic process, which is deeply rooted in human creativity and expression61. The emergence of AI-generated art challenges the conventional understanding of art as an exclusively human endeavor, possibly provoking feelings of skepticism or disapproval from those with a high creative identity. This reaction could further exacerbate the negative bias towards AI-generated images, as these individuals may perceive AI art as a lesser form of creativity, lacking the emotional depth and unique perspective offered by human artists in an anthropocentric perspective53. Additionally, this negative bias might be influenced by concerns about the potential implications of AI-generated art on the art world, such as the devaluation of human-created art and the loss of artistic jobs as AI technology becomes more cost-effective, fast, and accessible62. Furthermore, some people may think AI-art is unethical due to the ongoing dispute about the use of copyrighted art images in the AI algorithm trainings63,64,65. As a result, individuals with a high creative identity may not only appreciate human-generated art more but also actively resist the adoption of AI-generated art due to their emotional investment in the traditional artistic process. However, contrary to our hypothesis, creative self-efficacy did not show an effect on liking the images depending on their source attribution. Against our hypothesis (H1a,c), empathy and art exposure/interest did not influence how the participants perceived the images in relation to how much they believed to be human-made or AI-generated.

In line with our hypothesis (H2), individual relationship with technology predicts a more positive bias towards AI-generated art, with people displaying higher scores of positive attitudes toward technology liking more and perceiving more positive emotions for images that are perceived more likely to be AI generated rather than human-generated, while the opposite effect was shown for negative attitude toward technology. Also, the higher the task-switching/multitasking technology attitude, the smaller was the difference between liking the images perceived as AI-generated, compared with those perceived as human-generated. This latter finding can be related to the fact that users who prefer multitasking are more used to technology and previous research show preference for task switching and positive attitude toward technology to be positively related66.

Contrary to our hypothesis (H3), we found that openness, a personality trait generally associated with an interest in art and creativity67,68,69, positively predicted liking of images that were perceived as AI-generated. Furthermore, participants scoring high in open to experience liked the images more and experienced more positive emotions in general compared to individuals on average. This finding points towards an alternative hypothesis compared to our pre-registered one. Open to experience individuals may be more accepting towards the products of new technologies than people on average and may be more likely to embrace the potential of new technologies70,86,72. These results are consistent with some previous studies that have found a positive association between openness and appreciation of various forms of innovative art73, or attitude to experience art with the support of modern technologies74,75.

In our exploratory analysis of the other big-five personality traits, we found that conscientiousness and emotional stability influenced the perception of the origin of the images. Less conscientious participants showed a larger difference in liking and positive emotions between images believed to be generated by humans than those believed to be made by AI. In addition, more emotionally stable participants liked images they believed were human-generated more and experienced more positive emotions in response to them compared to images they believed to be AI-generated. These results suggest that personality traits beyond openness may also play a role in the perception and evaluation of AI-generated art, reflecting the multifaceted nature of individual differences in aesthetic experiences76.

Initial analyses showed that the study participants could not reliably discriminate between human and AI-generated images, which suggests that AI-generated art has become highly human-like, consistent with the increasing sophistication of AI technologies29,77,78. Follow-up exploratory analyses found that highly empathic people may be more sensitive than people on average in discriminating between AI- and human-generated art. This result may be related to previous findings showing a relationship between empathy and the appreciation of emotional content in art79. It also raises the possibility that individuals may be more attuned to the social traces of human creativity in the images51. However, our results shows that these cues are not sufficient to enable accurate attribution of the images' origin even in highly empathetic individuals, as even these individuals were unable to discriminate human-made from AI-generated images above chance level.

The exploratory analyses performed to understand a possible negative bias toward AI-generated art, revealed firstly that participants liked AI-generated images more than our selection of human-made images and experienced more positive emotions with AI-generated images (considering the true sources of the images). These findings confirm other evidence that has shown that AI-generated art is perceived as evoking emotional reactions and that AI art has technologically reached a high level when it comes to quality and ability to communicate emotions80,81. Nevertheless, the observation that our participants rated the AI-generated artworks more positively compared to the human-made ones, yet rated artworks they subjectively perceived as AI-generated more negatively, effectively determined the presence of a negative bias in the evaluation of artworks believed to be AI-generated. Although the artworks created by AI were liked more and evoked more positive emotions compared to the selection of human-made artworks in our study, the opposite trend emerged when considering the participants' subjective ratings. When participants perceived images as AI-generated, they tended to rate them as being of lower quality and eliciting lower level of positive emotions. These findings support the notion, in line with previous literature28 and with our expectations, that humans exhibit a negative bias towards artworks generated by AI. Such bias may extend behind artworks into other AI-generated outputs. Future studies should investigate if such bias is universal for AI-generated products, and what can be done to mitigate such bias, especially in the context of AI applications where AI could critically assist humans and increase human performance.

Several limitations should be considered when interpreting our findings. First, our sample consisted mostly of highly educated participants, which may limit the generalizability of our results in the other age groups. Second, the experimental design relied on a relatively small set of images representing five art styles, which does not capture the full diversity of human- and AI-generated art. Future research could address these limitations by employing a more diverse sample and examining a broader range of art styles.

Using a different set of stimuli potentially influences the reported finding wherein humans were unable to accurately identify the true source of the images. It is plausible that certain types of artworks exhibit characteristics widely recognized as indicative of human creativity, thereby enabling discrimination between human-made and AI-generated artworks. Nevertheless, the observation that our participants rated the AI-generated artworks more positively compared to the human-made ones, yet rated artworks they subjectively perceived as AI-generated more negatively, effectively pinpointed the presence of an negative bias in the evaluation of artworks believed to be AI-generated.

The study's sample was gathered from individuals volunteering for research tasks through the online platform Prolific, which may result in a sample primarily consisting of individuals who possess greater IT proficiency than the average population. Although Prolific users are generally known to provide high-quality data82, online study participants may exhibit less motivation than, for instance, students completing surveys on campus. Nonetheless, the implementation of attention-check control questions should have restricted or eliminated participants who answered randomly or without carefully reading each item of the online survey and experiment.

Further research is necessary to examine the intricate relationship between individual factors and the perception and evaluation of AI-generated art in various contexts and across a diverse range of art styles. The inability of participants to consistently differentiate between human- and AI-generated images indicates that AI has achieved a level of refinement capable of creating art resembling human-produced works. This raises questions about the intrinsic value and distinctiveness of human-generated art and the potential for AI-generated art to impact and even transform the human perception of creative work.

Moreover, we recommend conducting a study repeating the current experimental design in a few years. This would enable us to test whether attitudes towards AI-generated art evolved over time, potentially due to increased exposure and familiarity with AI-generated artworks or shifts in cultural or aesthetic norms. It could also provide valuable insights into whether the traits that influence the perception and evaluation of AI-generated art today continue to play a role in the future, or whether these traits play a role only during the early contact to the new technology.

Conclusions

In conclusion, our research offers novel perspectives on the perception and assessment of AI-generated art, enriching the overall understanding of machine-created artwork. Our findings emphasize the importance of individual factors, such as personality traits and attitudes towards technology, in the perception and evaluation of stimuli subjectively perceived as human-made or AI-generated. We believe that such findings may be generalizable to other AI-generated contents.

Our results support the idea that humans may devaluate AI-generated artworks. This is possibly due to a cognitive bias (as e.g., proposed by Anthropocentrism theory53). In fact, the participants of our study perceived AI-generated artworks as generally more likeable and eliciting a higher degree of positive emotions compared to human-made ones. However, when the subjective belief of the source of the artworks was considered, the participants preferred what they believed to be a human-made artwork and not an AI-generated one.

Method

The study hypotheses, methodology, and data analysis were developed before the data collection, and pre-registered using OSF. The pre-registration can be found at this link: https://osf.io/8n3bv?view_only=16b900f1c0fe440b82a58c6a6b5498f7. Deviations from the pre-registration are directly mentioned in the article text or defined as exploratory.

Participants

A group consisting of 206 adults from the UK population was recruited online through the website Prolific to participate in the study. This convenience sample was balanced for gender and included individuals who self-reported having normal or corrected-to-normal vision, and who used a PC or laptop with a physical keyboard. Participants completed an experiment and a battery of questionnaires, implemented using Psyktoolkit83,84. The battery contained four attention check questions, such as selecting the highest or lowest value for a given item. Data from those who failed to answer one or more attention checks correctly was excluded from the final sample. In total, data from five participants was excluded due to incorrect responses to the attention check questions.

The experiment was performed in accordance with relevant national and international guidelines and regulations for research ethics and data privacy. All participants confirmed their willingness to participate in the experiment and provided informed consent after reading and accepting the terms prior to the start of the survey. All experimental protocols were approved by the University of Bergen and were accomplished according to the guidelines of Norwegian Agency for Shared Services in Education and Research regarding data privacy and processing.

Generally, 10 to 20 participants per predictor are considered an appropriate number for regression-based analyses. On this basis, we estimated that around 200 participants will be an appropriate number for analyses having more than 10 but less than 20 predictors (i.e., fixed effects in linear mixed-effect analyses). The final sample consisted of 201 participants (99 females, 100 males, and 2 other). Their mean age was 41.0 years (SD = 14.6, min = 18, max = 76). One-hundred and twenty-one of them (62%) had a university degree, 77 had a high school degree (38%), 1 had a middle school and 2 had an elementary school degree.

Materials

In this study, a total of 40 images were utilized, with 20 generated using generative AI text-to-image prompts and 20 sourced from the internet as human-created art pieces. The AI-generated images were produced by Midjourney v. 4 AI text-to image tool, using prompts related to five distinct art styles that were chosen according to our discretion. The selected styles were Expressionism, Impressionism, Cubism, Abstract, and Traditional Japanese art (also prompted as “Edo” art). The prompt was repeated until acceptable quality of the images was obtained (e.g., avoiding produced images with distorted figures or parts written in them). The prompts used were of the format, e.g., "Impressionism landscape, painting." To select human-made art pieces, the study authors chose copyright-free (CC) artworks (both paintings for historical art styles, and digital art for abstract art styles) using queries in Google Images and using research words like those provided as prompt to the AI. This approach was intended to ensure that the human-made stimuli exhibited comparable general content to their AI-generated counterparts. Please note that the human-made images were selected solely according to the discretion of the study authors, without considering aesthetic characteristics, therefore they should not be meant as a direct and fair comparison to the AI-generated images used in the study. Human-made art pieces were subsequently cropped to maintain consistency with the square format generated by Midjourney v. 4. During this process, the primary goal was to preserve the main scene of each artwork. As the digital quality of the human-made images, which were obtained through photography or scanning processes, varied and was generally lower compared to the AI-generated images, random white noise was introduced to attempt to match the graphic quality across all images (or at least reduce the difference). This was done as the AI-generated art, being optimized to be displayed on screen, looks in general sharper and brighter in colors compared to human-made art, that is instead generally painted on canvas and digitalized using photos. This manipulation was designed to equalize the digital quality of both AI-generated and human-made images. By doing so, it aimed to facilitate a more accurate and reliable comparison between these two distinct types of visual stimuli.

Scales

The following list reports the scales that were used in the study. The scales are presented in order as they were presented in the original online survey.

The Ten-Item Personality Inventory (TIPI)85 is a brief self-report measure designed to assess the Big-Five personality dimensions: extraversion, agreeableness, conscientiousness, emotional stability (the reverse of neuroticism), and openness to experience. The TIPI has been proposed as a time-efficient alternative to longer Big-Five personality assessments. The TIPI consists of 10 statements, with each dimension represented by two items—one positively worded and the other reverse-coded. Participants rate themselves on a 7-point Likert scale ranging from “Strongly Disagree” to “Strongly Agree.” Despite its brevity, the TIPI demonstrates acceptable levels of reliability and validity when compared to more extensive personality measures86,87. For its brevity, the scale is particularly adequate for long surveys and online studies.

The Empathy Quotient (EQ): self-report measure designed to assess empathy levels in adults. The EQ scale consists of 40-item88 as a comprehensive tool to measure cognitive and affective empathy across three domains: cognitive empathy, emotional reactivity, and social skills. Participants rate themselves on a 4-point Likert scale, ranging from “Strongly Disagree” to “Strongly Agree.” The EQ has demonstrated satisfactory reliability and validity89,90 and in the present data its Cronbach’s α was 0.91. It has been widely used in research on social cognition91, and related areas such as autism spectrum conditions92.

The Short Scale of Creative Self (SSCS): this scale93,94 is a self-report measure developed to assess trait-like creative self-efficacy (CSE) and creative personal identity (CPI). CPI refers to the belief that creativity is a crucial aspect of an individual's self-description. The SSCS consists of 11 items, with six items measuring CSE and five items measuring CPI. Participants rate their agreement with each statement on a 5-point Likert scale. While CSE and CPI are often studied together, the SSCS subscales for CSE and CPI can also be used independently95. Cronbach’s α was 0.84 for CSE and 0.94 for CPI in the present data.

The Media and Technology Usage and Attitudes Scale (MTUAS): The MTUAS scale96 is a self-report measure developed to assess individuals' usage patterns and attitudes towards various forms of media and technology. The MTUAS examines multiple dimensions of media and technology use, including daily usage time, multitasking, and anxiety or negative feelings related to media and technology. The full MTUAS is composed of 44 items covering 14 subscales, such as smartphone usage, social networking, texting, email, video watching, and video gaming, among others. However, in the present study only 16 items related to attitudes towards technology (positive, negative, anxiety, and task-switching) were used. Participants rated their agreement with each of the proposed statements on a 5-point Likert scale (strongly agree, agree, neither agree nor disagree, disagree, strongly disagree). The Cronbach’s α in the present data was 0.80 for positive attitude, 0.77 for negative attitude, 0.85 for anxiety, and 0.89 for task-switching. The scale has been extensively used in the field of human computer interaction97.

The Vienna Art Interest and Art Knowledge Questionnaire (VAIAK). The questionnaire98 is a self-report measure designed to assess both art interest and art knowledge in individuals. The VAIAK was developed to provide a unified and validated instrument for exploring people's engagement with and understanding of visual art across various research contexts, such as psychology, art history, and education. The VAIAK consists of 24 items divided into two subscales: Art Interest (11 items) and Art Knowledge (10 items). However, only the items regarding art interest were used in the present study. Participants rated their agreement with the Art Interest statements (7 items) on a 7-point Likert scale (from not at all to completely), or stating how often they engaged in activities related to art (e.g., visiting museums and art galleries) (4 items), using a 7-point scale (less than once a year, once per year, once per half-year, once every three months, once per month, once every fortnight, once a week or more often). In our analyses these two groups of questions of the VAIAK Art Interest were treated as two different sub-scales (labeled in our study as art interest and art exposure). In the present data, the Cronbach’s α for Art interest was 0.94 and for Art exposure 0.76.

Procedure

Upon obtaining informed consent and gathering background details such as age, gender, and level of education, the participants started the image-presentation stage of the study, which was then succeeded by the questionnaire phase. In the image-presentation phase of the study, the participants were presented with the images in random sequence (in total 40 images, 20 AI-generated and 20 human-made). Please note that during the image presentation, the participants were not aware of the true origin of the images. The participants were instructed to answer four questions relative to the image that was shown, using a sliding bar from 0 to 100. The proposed questions were: How much do you like the image? (from 0: not at all, to 100: very much), Does the image evoke positive emotions? (from 0: not at all, to 100: very much); do you think that the image was made by a human or generated by artificial intelligence? (from 0: most likely made by a human to 100: most likely generated by artificial intelligence); Have you seen this image before? (online, in a book, in a museum etc.), from (0: I am sure I have never seen it, to 100: I am sure I have seen it before). There was no time limit for the participants to give the responses. After all the images were shown and response collected, the study proceeded to the questionnaire phase. In this phase, the participants had to complete a series of questionnaires about their personality, attitudes, relationship with art, and relationship with technology. The questionnaires used were (in this order): TIPI, EQ, SSCS, MTUAS (only attitude sub-scale), and the VAIAK (only art interest sub-scale).

Statistical analyses

Initial analyses found that our participants were not able to discriminate between human-made and AI-generated images. Therefore—following one of the planned options in the analysis plan in the pre-registration—we decided to not to analyze the individual predictors separately for (objective source) AI-generated and Human-made images, and to analyze all the images together in the same model. Consequently, the research question number 2 reported in the pre-registration was not directly assessed. Recognizing from these analyses that the source of the images was in fact “blinded” to the participants, further exploratory analyses were performed. These exploratory analyses were aimed to understand the level of appreciation of the image categories in general (objective sources) and then to assess the level of appreciation for the images in function of the subjective perception of the participants perceiving them as human-made or AI-generated. These analyses were performed to assess a possible negative bias toward AI-generated artworks. Further exploratory analyses were performed to understand if some individual factors predicted the participant’s ability to effectively discriminate human-made from AI-generated images.

The statistical analyses were conducted with jamovi99 for descriptive statistics and correlations and the linear mixed-effect analyses were performed with R. First, we computed descriptive statistics and Pearson’s correlations, examining intercorrelations between the predictor variables and between the predictor variables and the dependent variables. Then paired samples t-tests were used to explored whether AI- or human-generated images were perceived as more likeable, whether AI- or human-generated images evoked more positive emotions, and whether the participants can discriminate between AI- and human-generated images. The likeability variables were normally distributed (Shapiro–Wilk test, p > 0.05), while the other variables were slightly skewed to the left (Shapiro–Wilk test, p < 0.05). We used t-test as it can be relatively robust to small violations of normality assumptions, especially when the skewness is in the same direction and the sample size is relatively large.

The linear mixed-effect models examined how individual factors predict the ratings were performed with linear mixed effect analyses on item level data. Note that in the linear mixed effect analyses that we report the coefficient B refers to unstandardized coefficients. Because the t-tests indicated that the participants could not discriminate between human- and AI-generated images, the following analyses were performed on all images without separating human-generated and AI-generated images, as preregistered. Separate models were performed for each dependent variable (1) liking of the images, (2) positivity of emotions, and (3) perception of image source: human- vs. AI-generated. These analyses were performed for three sets of predictor variables, the first involving age and gender, the second involving personality (TIPI Big-Five and EQ), and the third one involving MTUAS, VAIAK, and SSCS.

In the first set of linear mixed-effect model, we examined the effects of background variables on liking the images and on positive emotions towards them. Most of the participants (98%) had a high school or university degree so that they were mostly relatively highly educated and therefore education level was not included as a predictor in the statistical models. Thus, the models included age, gender (female, male; n = 199 as two participants did not identify as male or female) and their interactions with beliefs about whether the images were generated by humans or AI as fixed effects. The random effects were the random intercepts for the participants and for the items (i.e., each specific image) here and in all the models that follow.

After examining the background variables, linear mixed effect models were performed on the personality variables by entering the Big-Five traits and empathy as the fixed effects as well as their interactions with the predictor variable concerning participants' beliefs on the image source (human- vs. AI-generated). The liking and positivity of emotions were dependent variables in separate models, and the random intercepts for the participants and for the items (i.e., images) served as the random effects. The model including the subjective beliefs on the image source (human- vs. AI-generated) as the dependent variable involved the personality variables and the objective category (human- vs AI-generated) with its interactions with the personality variables as the fixed effects.

The final set of linear mixed effect models involved as fixed effects the scores from the other than personality scales (MTUAS, VAIAK, SSCS) and their interactions with the predictor variable concerning participants' beliefs on the image source (human- vs. AI-generated). Deviating from the preregistered analysis plan, we included in these analyses all the measured sub-scale scores from the MTUAS (attitude component), VAIAK, and SSCS inventories, because unexpectedly there was no problems with collinearity (VIF-values < 5), despite the strong correlations between scales. Otherwise, the structure of the models was identical to the models performed on the personality variables. We did not have explicit hypotheses for the sub-scales of MTUAS (attitude component) regarding technology anxiety and multitasking, however as we collect this data in the context of MTUAS-attitude, we included these sub-scales in the statistical models, and we report the results for these scale in the article for completeness.

Data availability

The dataset used for the analyses reported in the study is available in osf.io at the following link: https://osf.io/ugfps/?view_only=0d78aefec02549f4afc2b14fa635cb0a.

References

Jaiswal, A., Arun, C. J. & Varma, A. Rebooting employees: Upskilling for artificial intelligence in multinational corporations. Int. J. Hum. Resour. Manag. 33, 1179–1208 (2022).

Safavi, K. & O’Neal, M. The Future of Work: A Human and Machine Mindset (Nurse Leader, 2023).

Iansiti, M. & Lakhani, K. R. Competing in the age of AI: Strategy and leadership when algorithms and networks run the world (Harvard Business Press, Boston, 2020).

Schwab, K. The fourth industrial revolution (Currency, 2017).

Chen, L., Chen, P. & Lin, Z. Artificial intelligence in education: A review. IEEE Access 8, 75264–75278 (2020).

Pataranutaporn, P. et al. AI-generated characters for supporting personalized learning and well-being. Nat. Mach. Intell. 3, 1013–1022 (2021).

Secinaro, S., Calandra, D., Secinaro, A., Muthurangu, V. & Biancone, P. The role of artificial intelligence in healthcare: A structured literature review. BMC Med. Inform. Decis. Mak. 21, 1–23 (2021).

Hentzen, J. K., Hoffmann, A., Dolan, R. & Pala, E. Artificial intelligence in customer-facing financial services: A systematic literature review and agenda for future research. Int. J. Bank Mark. 40, 1299–1336 (2022).

Kaur, V., Khullar, V. & Verma, N. Review of artificial intelligence with retailing sector. J. Comput. Sci. Res. 2, 1–7 (2020).

Abduljabbar, R., Dia, H., Liyanage, S. & Bagloee, S. A. Applications of artificial intelligence in transport: An overview. Sustainability 11, 189 (2019).

Lund, B. D. & Wang, T. Chatting about ChatGPT: How may AI and GPT impact academia and libraries? (Library Hi Tech News, 2023).

Cetinic, E. & She, J. Understanding and creating art with AI: Review and outlook. ACM Trans. Multimed. Comput. Commun. Appl. (TOMM) 18, 1–22 (2022).

Mathew, A. Is artificial intelligence a world changer? A case study of OpenAI’s chat GPT. Recent Prog. Sci. Technol. 5, 35–42 (2023).

Vartiainen, H. & Tedre, M. in Digital Creativity 1–21 (2023).

Benjamin, W. The Work of Art in the Age of Mechanical Reproduction (1935).

Brown, T. B. et al. Language models are few-shot learners. In Advances in Neural Information Processing Systems, vol. 33 (2020).

Radford, A., Narasimhan, K., Salimans, T. & Sutskever, I. Improving language understanding by generative pre-training. OpenAI (2018).

Still, A. & d'Inverno, M. Can machines be artists? A Deweyan response in theory and practice. Arts 8(1), 36. https://doi.org/10.3390/arts8010036 (2019).

Lee, E. Digital originality. Vand. J. Entain. Technol. L 14, 919 (2011).

Biswas, S. ChatGPT and the future of medical writing. Radiology https://doi.org/10.1148/radiol.223312 (2023).

Koivisto, M. & Grassini, S. Best humans still outperform artificial intelligence in a creative divergent thinking task. Sci. Rep. 13, 13601 (2023).

Arriagada, L. CG-Art: Demystifying the anthropocentric bias of artistic creativity. Connect. Sci. 32, 398–405 (2020).

Carnovalini, F. & Rodà, A. Computational creativity and music generation systems: An introduction to the state of the art. Front. Artif. Intell. 3, 14 (2020).

Toivanen, J. M. et al. Towards transformational creation of novel songs. Connect. Sci. 31, 4–32 (2019).

Jones, J. A portrait created by AI just sold for $432,000. (The Guardian, 2018).

Rubinstein, Y. Uneasy listening: Towards a Hauntology of AI-generated music. Reson. J. Sound Cult. 1, 77–93 (2020).

Köbis, N. & Mossink, L. D. Artificial intelligence versus Maya Angelou: Experimental evidence that people cannot differentiate AI-generated from human-written poetry. Comput. Hum. Behav 114, 106553 (2021).

Gangadharbatla, H. The role of AI attribution knowledge in the evaluation of artwork. Empir. Stud. Arts. 40(2), 125–142 (2022).

Elgammal, A., Liu, B., Elhoseiny, M. & Mazzone, M. Can: Creative adversarial networks, generating “art” by learning about styles and deviating from style norms. arXiv preprint arXiv:1706.07068 (2017).

Turing, A. M. Mind. Mind 59, 433–460 (1950).

Granulo, A., Fuchs, C. & Puntoni, S. Psychological reactions to human versus robotic job replacement. Nat. Hum. Behav. 3, 1062–1069 (2019).

Huang, M. H. & Rust, R. T. Artificial intelligence in service. J. Serv. Res. 21, 155–172 (2018).

Grassini, S. & Ree, A. S. in Proceedings of the European Conference on Cognitive Ergonomics 2023. 1–7.

Złotowski, J., Yogeeswaran, K. & Bartneck, C. Can we control it? Autonomous robots threaten human identity, uniqueness, safety, and resources. Int. J Hum.-Comput. Stud. 100, 48–54 (2017).

Partadiredja, R. A., Serrano, C. E. & Ljubenkov, D. in 2020 13th CMI Conference on Cybersecurity and Privacy (CMI)-Digital Transformation-Potentials and Challenges (51275). 1–6 (IEEE).

Fortuna, P., Wróblewski, Z. & Gorbaniuk, O. The structure and correlates of anthropocentrism as a psychological construct. Curr. Psychol. 1–13 (2021).

Herrmann, P., Waxman, S. R. & Medin, D. L. Anthropocentrism is not the first step in children’s reasoning about the natural world. Proc. Natl. Acad. Sci. 107, 9979–9984 (2010).

Coley, J. D. & Tanner, K. D. Common origins of diverse misconceptions: Cognitive principles and the development of biology thinking. CBE Life Sci. Educ. 11, 209–215 (2012).

Kortenkamp, K. V. & Moore, C. F. Ecocentrism and anthropocentrism: Moral reasoning about ecological commons dilemmas. J. Environ. Psychol. 21, 261–272 (2001).

Batavia, C. Is anthropocentrism really the problem?. Anim. Sentience 4, 20 (2020).

Anthropocentrism: Humans, animals, environments. (Brill, 2011).

Schmitt, B. Speciesism: An obstacle to AI and robot adoption. Mark. Lett. 31, 3–6 (2020).

Castelo, N. Blurring the Line Between Human and Machine: Marketing Artificial Intelligence (Columbia University, New York, 2019).

Granulo, A., Fuchs, C. & Puntoni, S. Liking for human (vs. robotic) labor is stronger in symbolic consumption contexts. J. Consum. Psychol. 31, 72–80 (2021).

Chamberlain, R., Mullin, C., Scheerlinck, B. & Wagemans, J. Putting the art in artificial: Aesthetic responses to computer-generated art. Psychol. Aesthet. Creat. Arts 12, 177 (2018).

Hertzmann, A. Can computers create art?. Arts 7, 18 (2018).

Caviola, L., Everett, J. A. & Faber, N. S. The moral standing of animals: Towards a psychology of speciesism. J. Personal. Soc. Psychol. 116, 1011 (2019).

Bastian, B. & Loughnan, S. Resolving the meat-paradox: A motivational account of morally troublesome behavior and its maintenance. Personal. Soc. Psychol. Rev. 21, 278–299 (2017).

Piazza, J. & Loughnan, S. When meat gets personal, animals’ minds matter less: Motivated use of intelligence information in judgments of moral standing. Soc. Psychol. Personal. Sci. 7, 867–874 (2016).

Epley, N. & Gilovich, T. The mechanics of motivated reasoning. J. Econ. Perspect. 30, 133–140 (2016).

Job, V., Nikitin, J., Zhang, S. X., Carr, P. B. & Walton, G. M. Social traces of generic humans increase the value of everyday objects. Personal. Soc. Psychol. Bull. 43, 785–792. https://doi.org/10.1177/0146167217697694 (2017).

Ditto, P. H. in Delusion and self-deception: Affective and motivational influences on belief formation. Macquarie monographs in cognitive science. 23–53 (Psychology Press, Hove 2009).

Millet, K., Buehler, F., Du, G. & Kokkoris, M. D. Defending humankind: Anthropocentric bias in the appreciation of AI art. Comput. Hum. Behav. 143, 107707 (2023).

Ragot, M., Martin, N. & Cojean, S. Ai-generated vs. human artworks. a perception bias towards artificial intelligence? In Extended abstracts of the 2020 CHI conference on human factors in computing systems, pp. 1–10 (2020).

Park, J. & Woo, S. E. Who likes artificial intelligence? Personality predictors of attitudes toward artificial intelligence. J. Psychol. 156, 68–94 (2022).

McCrae, R. R. & Costa Jr, P. T. in The SAGE handbook of personality theory and assessment, Vol 1: Personality theories and models. 273–294 (Sage Publications, Inc, Thousand Oaks, 2008).

Davis, M. H. Measuring individual differences in empathy: Evidence for a multidimensional approach. J. Personal. Soc. Psychol. 44, 113 (1983).

Dollinger, S. J., Clancy Dollinger, S. M. & Centeno, L. Identity and Creativity. Identity 5, 315–339. https://doi.org/10.1207/s1532706xid0504_2 (2005).

Winner, E. How Art Works: A Psychological Exploration (Oxford University Press, Oxford, 2019).

Davis, F. D. Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q. 13, 319–340 (1989).

Davies, S. The artful species: Aesthetics, art, and evolution (OUP Oxford, 2012).

Zhou, K.-Q. & Nabus, H. The Ethical Implications of DALL-E: Opportunities and Challenges. Mesop. J. Comput. Sci. 2023, 17–23 (2023).

Carballo-Calero, P. F. 25 things you should know about Artificial Intelligence Art and Copyright (ARANZADI/CIVITAS, 2023).

Kalpokiene, J. & Kalpokas, I. Creative encounters of a posthuman kind–anthropocentric law, artificial intelligence, and art. Technol. Soc. 72, 102197 (2023).

Shan, S. et al. Glaze: Protecting artists from style mimicry by text-to-image models. arXiv preprint arXiv:2302.04222 (2023).

Terry, C. A., Mishra, P. & Roseth, C. J. Preference for multitasking, technological dependency, student metacognition, & pervasive technology use: An experimental intervention. Comput. Hum. Behav. 65, 241–251 (2016).

McCrae, R. R. Creativity, divergent thinking, and openness to experience. J. Pers. Soc. Psychol. 52, 1258 (1987).

King, L. A., Walker, L. M. & Broyles, S. J. Creativity and the five-factor model. J. Res. Pers. 30, 189–203 (1996).

Tan, C. S., Lau, X. S., Kung, Y. T. & Kailsan, R. A. L. Openness to experience enhances creativity: The mediating role of intrinsic motivation and the creative process engagement. J. Creat. Behav. 53, 109–119 (2019).

Nov, O. & Ye, C. In Proceedings of the 41st annual Hawaii international conference on system sciences (HICSS 2008). 448–448 (IEEE, 2008).

Svendsen, G. B., Johnsen, J.-A.K., Almås-Sørensen, L. & Vittersø, J. Personality and technology acceptance: The influence of personality factors on the core constructs of the Technology Acceptance Model. Behav. Inf. Technol. 32, 323–334 (2013).

Behrenbruch, K., Söllner, M., Leimeister, J. M. & Schmidt, L. In Human-Computer Interaction–INTERACT 2013: 14th IFIP TC 13 International Conference, Cape Town, South Africa, September 2-6, 2013, Proceedings, Part IV 14. 306–313 (Springer, 2013).

Mastandrea, S., Bartoli, G. & Bove, G. Preferences for ancient and modern art museums: Visitor experiences and personality characteristics. Psychol. Aesthet. Creat. Arts 3, 164 (2009).

Pratisto, E. H., Thompson, N. & Potdar, V. Virtual reality at a prehistoric museum: Exploring the influence of system quality and personality on user intentions. In ACM Journal on Computing and Cultural Heritage (2023).

Rodriguez-Boerwinkle, R. M. & Silvia, P. J. Visiting virtual museums: How personality and art-related individual differences shape visitor behavior in an online virtual gallery. Empir. Stud. Arts. https://doi.org/10.1177/02762374231196491 (2023).

Chamorro-Premuzic, T., Burke, C., Hsu, A. & Swami, V. Personality predictors of artistic preferences as a function of the emotional valence and perceived complexity of paintings. Psychol. Aesthet. Creat. Arts 4, 196 (2010).

Dehouche, N. & Dehouche, K. What’s in a text-to-image prompt? The potential of stable diffusion in visual arts education. Heliyon 9(6), e16757 (2023).

Wenjing, X. & Cai, Z. Assessing the best art design based on artificial intelligence and machine learning using GTMA. Soft Comput. 27, 149–156 (2023).

Freedberg, D. & Gallese, V. Motion, emotion and empathy in esthetic experience. Trends Cogn. Sci. 11, 197–203 (2007).

Mazzone, M. & Elgammal, A. Art, creativity, and the potential of artificial intelligence. Arts 8(1), 26. https://doi.org/10.3390/arts8010026 (2019).

Roose, K. An AI-generated picture won an art prize. Artists aren’t happy. The New York Times (2022).

Peer, E., Brandimarte, L., Samat, S. & Acquisti, A. Beyond the Turk: Alternative platforms for crowdsourcing behavioral research. J. Exp. Soc. Psychol. 70, 153–163 (2017).

Stoet, G. PsyToolkit: A novel web-based method for running online questionnaires and reaction-time experiments. Teach. Psychol. 44, 24–31 (2017).

Stoet, G. PsyToolkit—A software package for programming psychological experiments using Linux. Behav. Res Methods 42, 1096–1104 (2010).

Gosling, S. D., Rentfrow, P. J. & Swann, W. B. A very brief measure of the Big-Five personality domains. J. Res. Personal. 37, 504–528. https://doi.org/10.1016/S0092-6566(03)00046-1 (2003).

Renau, V., Oberst, U., Gosling, S. D., Rusiñol, J. & Chamarro, A. Translation and validation of the Ten-Item Personality Inventory into Spanish and Catalan. Rev. Psicol. Cièn. Educ. Esport 31, 85–97 (2013).

Muck, P. M., Hell, B. & Gosling, S. D. Construct validation of a short five-factor model instrument. Eur. J. Psychol. Assess 23, 166–175 (2007).

Baron-Cohen, S. & Wheelwright, S. The empathy quotient: An investigation of adults with Asperger syndrome or high functioning autism, and normal sex differences. J. Autism Dev. Disord. 34, 163–175 (2004).

Muncer, S. J. & Ling, J. Psychometric analysis of the empathy quotient (EQ) scale. Personal. Individ. Differ. 40, 1111–1119 (2006).

Zhao, Q. et al. Validation of the empathy quotient in Mainland China. J. Personal. Assess. 100, 333–342 (2018).

Bora, E. Social cognition and empathy in adults with obsessive compulsive disorder: a meta-analysis. Psychiatry Res. 316, 114752 (2022).

Wheelwright, S. et al. Predicting autism spectrum quotient (AQ) from the systemizing quotient-revised (SQ-R) and empathy quotient (EQ). Brain Res. 1079, 47–56 (2006).

Karwowski, M., Lebuda, I. & Wiśniewska, E. Measuring creative self-efficacy and creative personal identity. Int. J. Creat. Probl. Solving 28, 45–57 (2018).

Karwowski, M. Did curiosity kill the cat? Relationship between trait curiosity, creative self-efficacy and creative personal identity. Eur. J. Psychol. 8, 547–558 (2012).

Beghetto, R. A. & Karwowski, M. in The Creative Self 3–22 (Academic Press, San Diego, 2017).

Rosen, L. D., Whaling, K., Carrier, L. M., Cheever, N. A. & Rokkum, J. The media and technology usage and attitudes scale: An empirical investigation. Comput. Hum. Behav. 29, 2501–2511 (2013).

Ellis, D. A., Davidson, B. I., Shaw, H. & Geyer, K. Do smartphone usage scales predict behavior?. Int. J. Hum. Comput. Stud. 130, 86–92 (2019).

Specker, E. et al. The Vienna Art Interest and Art Knowledge Questionnaire (VAIAK): A unified and validated measure of art interest and art knowledge. Psychol. Aesthet. Creat. Arts 14, 172 (2020).

The jamovi project (jamovi version 2.3.21).

Funding

Open access funding provided by University of Bergen.

Author information

Authors and Affiliations

Contributions

S.G. was responsible for funding acquisition, conceptualization, development, methodology, writing—original draft, review & editing. M.K. was responsible for the development, method, data analysis, writing—data analysis & supplementary material, writing—review & editing.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Grassini, S., Koivisto, M. Understanding how personality traits, experiences, and attitudes shape negative bias toward AI-generated artworks. Sci Rep 14, 4113 (2024). https://doi.org/10.1038/s41598-024-54294-4

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-024-54294-4

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.