Abstract

Clustering is an important tool for data mining since it can determine key patterns without any prior supervisory information. The initial selection of cluster centers plays a key role in the ultimate effect of clustering. More often researchers adopt the random approach for this purpose in an urge to get the centers in no time for speeding up their model. However, by doing this they sacrifice the true essence of subgroup formation and in numerous occasions ends up in achieving malicious clustering. Due to this reason we were inclined towards suggesting a qualitative approach for obtaining the initial cluster centers and also focused on attaining the well-separated clusters. Our initial contributions were an alteration to the classical K-Means algorithm in an attempt to obtain the near-optimal cluster centers. Few fresh approaches were earlier suggested by us namely, far efficient K-means (FEKM), modified center K-means (MCKM) and modified FEKM using Quickhull (MFQ) which resulted in producing the factual centers leading to excellent clusters formation. K-means, which randomly selects the centers, seem to meet its convergence slightly earlier than these methods, which is the latter’s only weakness. An incessant study was continued in this regard to minimize the computational efficiency of our methods and we came up with farthest leap center selection (FLCS). All these methods were thoroughly analyzed by considering the clustering effectiveness, correctness, homogeneity, completeness, complexity and their actual execution time of convergence. For this reason performance indices like Dunn’s Index, Davies–Bouldin’s Index, and silhouette coefficient were used, for correctness Rand measure was used, for homogeneity and completeness V-measure was used. Experimental results on versatile real world datasets, taken from UCI repository, suggested that both FEKM and FLCS obtain well-separated centers while the later converges earlier.

Similar content being viewed by others

Introduction

In every aspect of our day to day requirements it is often necessary to sensibly organize data into their relevant groups. This not only gives clarity about their whereabouts but also helps us to pick them from their respective assemblage much faster. So, the most important thing that needs to be considered is the correct grouping among them. Consequently, in order to expedite the retrieval of relevant information from a group or sub-group, numerous consistent practices have been developed, one of which is data clustering (Odell and Duran 1). The main goal is to create divisions for the whole data set into reasonably smaller homogenous subdivisions so that the objects present in a subgroup will be having similar characteristics with each other and reasonably differ from those present in other subgroups.

For any clustering technique to come to practice, the first and foremost step is the selection of initial cluster centers which defines the number of clusters to be created. There are various ways in which the initial cluster centers are initialized. This initialization plays a crucial role for the end result of clustering. Random selections of the initial centroids are preferred by many clustering algorithms. This adds to the simplicity of the approach and also to the computation time of the algorithm. When the initial centroids are chosen randomly, roughly no time is spent on their selection step, which lessens the overall execution time as well as the time complexity of the algorithm. However, with random initialization of centroids, different runs will produce different clustering results. Sometimes, the result will show excellent subgroups formation while in most cases the resulting clusters are often poor. It is possible to obtain an optimal clustering when the two randomly selected initial cluster centers fall somewhere in a pair of clusters, because the cluster centers will reorganize among themselves, one to each cluster. Unfortunately, in some cases if the number of clusters is more, it is more and more likely that in any case one pair of clusters will have merely one initial cluster center. In this case, since the pairs of clusters are farther apart than clusters within a pair, the clustering algorithm will fail to reallocate the centroids among the pairs of clusters, and simply local minima will result. In other words, empty clusters may be achieved if no points are allocated to a cluster during the assignment phase of clustering. “Because of the problems with using randomly selected initial centroids, which even repeated runs may not overcome, there is an need to develop some ways for better initialization of initial center of clusters.”

In most cases it is found the clustering model designed for the purpose faces difficulties in identifying the “natural clusters”. These cases arise when the clusters have widely different shapes, sizes and compactness. For example, while considering a dataset to be grouped into three clusters, if one of the clusters formed is relatively bigger than the other two, the bigger cluster is broken down and one of the smaller clusters is combined with a section of the bigger one. In another instance, a clustering model may fail to create well separated subgroups when the two smaller clusters are much compact than the bigger one and obviously lead to inaccurate conclusions about the structure in the data. “There is a need to design a clustering model which will be efficient enough to perform the required formation of subgroups with improved reliability and accuracy and more importantly achieving the result much faster with varying datasets”.

These are the two motivational factors which gave us a direction to continue our work in this aspect.

Although, we as individuals are exceptional cluster seekers, but there is a necessity of excellent clustering algorithms which can operate on versatile data sets and provide us with effective cluster formation. A bunch of clustering ensemble methods proposed by eminent researchers has been projected over the last few years to present a solution for selection of initial cluster centers as well as obtaining good cluster formation. A few of those are discussed here.

Na et al.2 conducted an examination of the constraining facets of the K-Means algorithm, proposing an alternative approach for assigning data points to distinct clusters. Their method mitigates the computational time required for K-Means. In a comprehensive survey, Xu et al.3 scrutinized a diverse array of clustering methodologies along with their practical applications. Additionally, they deliberated on various proximity criteria and validity metrics that influence the resultant cluster configuration. Cheung4 introduced a tailored K-Means variant capable of achieving precise clustering without the need for initial cluster assignments. This technique demonstrates notable efficacy in clustering elliptically-shaped data, a pivotal aspect of the research. In an innovative endeavor, Li5 advocated for the adoption of the nearest neighbor pair concept in determining the initial centroids for the K-Means algorithm. This method identifies two closely neighboring pairs that exhibit significant dissimilarity and reside in separate clusters. This represents one among several approaches aimed at advancing the determination of initial cluster centroids. Nazeer et al. 6 proposed a further progression towards ascertaining nearly accurate initial centroids and subsequently assigning objects to clusters, albeit with the stipulation that the initial number of clusters must be specified as input. In order to mitigate the stochastic selection of initial cluster centers in the K-Means algorithm, Cao et al.7 introduced a model wherein the cohesion degree within a data point's neighborhood and the coupling degree among neighborhoods are defined. This model is complemented by a novel initialization technique for center selection.

Kumar et al.8 suggested a kernel density-based technique to determine the initial centers for K-Means. The plan is to pick an initial data from the denser part of the data set since it actually reflects the characteristics of the data set. By doing this the presence of outliers are avoided. The performance of their method was tested on different data sets using various validity indices. The result showed that the given method has superior clustering performance over the traditional K-Means and K-Means++ algorithm. Kushwaha et al.9 proposed a clustering technique based on magnetic strength with an objective to locate the best location of centroids in their respective clusters. Data generate force directly to magnetic force and the best possible position for centroids is when the force by all data draws nearer to zero. Results from experiments imply that the suggested method getaway from local optima. However, it’s only limitation is, it needs to have prior information of the number of clusters to be created. Mohammed et al.10 introduced WFA selection, a modified weight-based firefly selection algorithm designed to attain optimal clusters. This algorithm amalgamates a selection of clusters to generate clusters of superior quality. The results demonstrate that this algorithm yields newly condensed clusters when compared to a subset of alternative approaches.

In a recent study, Fahim11 conducted a comprehensive review of the classical Density-based spatial clustering of application with noise (DBSCAN) algorithm, scrutinizing its inherent limitations and proposing a method to mitigate them. The suggested technique identifies the maximum allowable density level within each cluster, enabling DBSCAN to evaluate clusters with varying densities. Comparative analysis confirmed the effectiveness of the proposed method in accurately determining the actual clusters. Fahim12 suggested a technique to discover an optimal value for ‘k’ and initial centers of K-means algorithm. A pre-processing step is used for this purpose before K-means is applied. Density-based method is used for this purpose as it does not need to initially mention the number of clusters and also it calculates the mean of data in each cluster. The suggested method also uses the DBSCAN algorithm as a pre-processing step. Experimental data suggested that, this method ultimately improves the final result of clustering and also reduces the number of iterations of K-means. Khandare et al.13 presented a modification to the K-Means and DBSCAN clustering algorithms. Their proposed approach enhances clustering quality and establishes well-organized clusters through the incorporation of spectral analysis and split-merge-refine methods. Notably, their algorithm addresses the minimization of empty cluster formation. Experimental assessments were conducted, taking into account parameters such as cluster indices, computation time, and accuracy, on datasets of diverse dimensions.

Yao et al.14 grouped the non-numeric attributes present in the dataset according to their properties and discovered the analogous similarity metrics in that order. They proposed a method for finding the initial centroids based on the dissimilarities and compactness of data. Once the centers were obtained, clustering was performed on modified inter-cluster entropy for miscellaneous data. Results concluded that since the initial centers determined were optimal so it resulted in good clustering accuracy rate (CAR). Ren et al.15 suggested a two-step structure for scalable clustering in which the initial step determines the frame structure of data and the final step does the actual clustering. Data objects are initially placed across a 2-D grid and are clustered using different algorithms, each giving a set of partial core points. These points correspond to the dense parts of data, which form centers for center-based, modes for density-based or means for probability-based types of clustering. This method can speed-up the computation and produces robust clustering. Results have shown the usefulness of the method.

Franti et al. 16 suggested a better initialization technique that improves the clustering efficiency of K-means. When there are overlapping clusters, using farthest point heuristic, malicious clusters may be reduced from 15 to 6% and when the method was repeated for 100 times, a further reduction to 1% was noted. However, they remarked that dataset with well separated clusters depends mostly on proper initialization of centers.

Mehta, et al.17 discussed and analyzed several proximity measures and suggested a way for choosing a proximity measure that can be used in hierarchical and partitioned clustering. They concluded that the average performance of clustering changes when diverse proximity measures were implemented. Mehta et al.18 further researched in document clustering in text mining and proposed a method by hybridizing the statistical and semantic features. The technique uses a fewer number of features but this hybridization improves the textual clustering and provides better precision within acceptable time limit.

Shuai19 proposed an improved feature selection and clustering framework. This model initially does the data processing and then uses the feature selection to obtain important features from the dataset. This was followed by hybridizing K-means and SOM neural network to perform the actual clustering. Finally, collaborative filtering was used to cluster datasets which constituted missing data to make sure that all samples can acquire results. Results obtained showed high accuracy in clustering and interpretability. Nie et al.20 proposed a clustering technique where there is no need to calculate the cluster centers in each iteration. In addition, the proposed technique provides an efficient iterative re-weighted approach to solve the optimization problem and shows a faster convergence rate.

Ikotun et al.21 broadly presented a summary and taxonomy of the widely used K-means clustering algorithm and its different variants created by various researchers. The record of K-means, recent developments, different issues and challenges, and suggested potential research viewpoint are discussed. They have found most of the research work has been carried out on solving the initialization issues of K-means however, very little focus has been given on addressing the problem of mixed data type. This survey will help practitioners to work on this aspect.

Methods

One of the major drawbacks of traditional K-Means approach is the initial center selection which is done randomly. Random selection of centers may perhaps result in incorrect creation of clusters. Due to this issue, there are few suggestions mentioned in this work with an aim to minimise this limitation. In addition to this, there are suggestions for reducing the time complexity, actual computation time and the convergence criteria of the projected methods. Subsequently, all the methods are evaluated to verify how good each of them creates the subgroups. The methods for choosing the initial cluster centers are discussed below:

Method-1: K-means

This is an unsupervised learning algorithm (Mac Queen22) to find K sub-groups from a given set of data, where the value of K is defined by the user. Initially, random K data points are selected as cluster centers. Each data point is assigned to its nearest cluster center to form disjoint sub-groups. Then the cluster centers are overwritten with the values obtained by taking mean of the data points present inside it. This process of reassigning data points to the nearest center and updating the cluster centers is repeated until there are no changes to the centers. The steps followed in K-means are:

-

Take random K data points as initial centers

-

Assign each data to nearest cluster center

-

Update cluster centers by taking mean

-

Repeat steps 2–3 until convergence

K-Means is the simplest way for getting different sub-groups of a given input. However, taking K random centers in step-1 makes it unpredictable. Every time this algorithm is executed it produces different results. So there is no precision in the clustering outcome. Even there is a possibility that at times it may result in creation of empty clusters.

It has an indefinite time complexity, since it is computationally a NP-hard problem. Step-2 of the procedure may repeat for an uncertain amount of time. However, if we constraint the clustering loop to a fixed value i, such that i < n, then the complexity of the algorithm will become O (i * K * n * d), where K and n are the number of clusters and data points respectively and d is the dimension of each data. Practically, the values of K and dare fixed and significantly smaller than n, hence the complexity is O (n).

Method-2: far efficient K-means (FEKM)

To overcome the limitations of selecting the initial cluster centers randomly, Mishra et al.23 suggested an innovative approach. The central idea of their approach is to obtain distant points as initial center which will result in disjoint, tight and precise cluster formation. The pseudo code representation of algorithm is as follows:

The algorithm works as follows. Distance of each data point to all other points present in the data set are computed and the data pair which lies farthest from each other are considered as first two initial centers in step 1 and step 2. Step 4 assigns data points to their nearest center until a pre-defined threshold value is reached. In step 5 and 6 centers are updated by taking mean of the partial clusters formed in previous step. The remaining K−2 centers are computed in step 8. The process of obtaining the centers is illustrated in Fig. 1.

Due to brute force comparisons between all the data points to find the farthest pair in Step 1, the loop has to run n2 times making its complexity Ɵ (n2). Hence, the overall complexity of FEKM is Ɵ (n2). The major drawback of FEKM is its worst case running time complexity which is Ɵ (n2). Methods like FEKM with quadratic time complexity are feasible for small data sets.

Method-3: modified center K-means (MCKM)

Considering the quadratic time complexity of FEKM, (Mishra et al. IJISA24) suggested MCKM for selecting the initial cluster centers with complexity less than Ɵ (n2). The idea is to sort the data points with respect to a fixed point of reference, which is the last data as present in the data set matrix. Then divide the data set into K equal subgroups and compute the mean of each group to find initial centers. The procedure is presented as a pseudo code which is as follows:

Step 2 sets the very last element present in the data set matrix as the first center which is the point of reference. In step-4, distance of each element is determined from the point of which makes its complexity O (n). Step 5 sorts all data using a sorting technique of complexity O (n* log (n)). In step 6, the sorted list is splitted into K equal subgroups which will cause the loop to run n times making its complexity as Ɵ (n). The centers are updated and mean of each group gives the rest k-1 center in step 7 whose complexity is Ɵ (n). Thus, overall complexity of MCKM is O (n* log (n)). This method is able to provide a systematic and efficient procedure to obtain initial centers. Unlike FEKM it has a better running time complexity. However, FEKM has an upper hand over MCKM in obtaining distant initial centers which results in better cluster formation.

Hastening FEKM by constructing convex hull

FEKM uses brute force technique to find the farthest data pair and considers them as the first two initial centers. This results in quadratic time complexity of the algorithm. So, in order to reduce the complexity of FEKM, the concept of convex hull (Cormen25) is used. Convex hull is the smallest convex polygon that encloses all the data points of a given data set. These points are selected in such a way that, there exist no other points which remains outside the hull. By computing the convex hull, it is only required to compare the data points which form the vertices of the hull instead of considering every data points to obtain the farthest pair. There are two approaches considered in this research to achieve the farthest centers using convex hull. They are as follows:

Farthest centers using graham scan (FCGS)

Graham scan approach (Graham26) maintains a stack which contains only the vertices of the hull. The procedure pushes all the data points into the stack and ultimately pops the point that is not a vertex. The distance between each vertex is determined to obtain the farthest pair to be considered as first two centers. Hence, it is not required to compare the distance between all other points. This reduces the complexity of the algorithm. The algorithm for FCGS is as follows:

Step 1 of the algorithm chooses a point p0 with smallest y-coordinate value or the leftmost x-coordinate value if two or more points have equal y-coordinates. For this step 1 need to traverse to reach every data points which make its complexity Ɵ (n). In step 2 data points excluding p0 are sorted as per the polar angle around p0 in anti-clockwise order using a sorting algorithm of complexity O (n * log (n)). First three points of the sorted data set i.e. p0, p1, p2 are pushed into a stack in step 4. In each iteration of step 5, a data point is pushed into the stack and the orientation (Cormen25) formed by top three elements of the stack is checked. If the orientation is clock wise or non-left then pop( ) operation is performed. As it traverses n−3 points, step 5 has a complexity of O (n). After obtaining the stack of hull vertices, each vertex is distance-wise compared to find the farthest pair in step 7. Assuming number of elements in stack is m, the complexity of step 7 is Ɵ (m2). The remaining k−2 centers are computed in step 9 following FEKM. As m will be always less than n, overall complexity of FCGS is O (n * log(n)). Therefore, this method is able to obtain initial centers which will boost clustering performance with a reduced complexity of O (n * log(n)).

However, Graham scan is designed only for data sets with two attributes. If the dataset consists of more than two attributes then the algorithm fails because there are multiple values of polar angles. Dimensionality reduction is a way but may increase the running time of the algorithm drastically. Now, one of the solutions of FCGS is by using a method which can construct the convex hull in multiple dimensions. Modified FEKM using Quick hull is a solution to this aspect.

Modified FEKM using Quickhull (MFQ)

Quickhull proposed by (Bradford Barber27) may be used to construct convex hull for n-dimensional data. It computes the convex hull in a divide and conquer approach recursively. The pseudo code of algorithm is as follows:

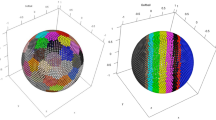

First seven steps of the algorithm are used to construct a convex hull. In this method, a list named hull is declared which store the vertices of the convex hull. The points with maximum and minimum x coordinate values are assigned to variables max_x and min_x respectively. A line L is constructed by joining min_x and max_x. This line will divide the data sets into two parts. A point p is found which is farthest from L and is added to hull. A triangle is formed by joining p and two end points of L. The points lying inside the triangle are not considered for further construction of the hull. For the remaining points present outside the triangle, each side of the triangle is assigned as L and steps5& 6of the algorithm are repeated recursively until there are no points left outside the last triangle formed. After obtaining the convex hull, the vertices lying on it are compared with each other to find the farthest pair, which is considered as first two initial cluster centers. Finally, step8 of FEKM is repeated to obtain K−2 remaining centers. The above procedure is illustrated in Fig. 2.

Step 2 of the method costs O (n) for traversing the data set to find minimum and maximum x-coordinate values. Step 5 computes a point farthest from line L making its complexity O (n). Step 5 and 6 is repeated recursively until no points are left outside the new triangle formed. The worst case complexity of Quickhull is O (n * log (n)) if dimension of the data is less than or equal to three. When the number of attributes is more than three then the complexity of this method increases. Next step8 of FEKM is called to determine the remaining K-2 initial centers which make its complexity O(n). So, unlike FCGS this method is able effectively operate on datasets with more number of attributes with a worst case of O (n * log (n)).However, the worst case complexity of this algorithm may exceed O (n2) when we have large number of attributes in data sets. In these cases, this method will prove to be inefficient than FEKM. For this reason, we suggest a method called Farthest Leap Center Selection (FLCS) to compute the farthest data pair in less than quadratic time complexity.

Proposed method: farthest leap center selection (FLCS)

Due to the limitations of Graham Scan and Quick hull mentioned above, we came up with a new approach called FLCS, to solve the farthest pair problem. FLCS takes a greedy approach to solve this problem. Instead of traversing every data point, it only leaps to the next point which is at a maximum distance from the current point. The method stops leaping when the next farthest point is the same previous point. The pseudo code of algorithm is as follows:

Initially, the mean of all data points is calculated and is assigned as data1 and prev. The farthest point from data1 is computed by comparing it with other data points and assigned to data2. Then, data2 is assigned to data1 and data1 is assigned to prev. Again the farthest point from data1 is determined and assigned to data2. These steps are repeated until data2and prev refers to the same data point. These steps are illustrated in Fig. 3. The farthest pairs data1 and data2 obtained are assigned as first two initial centers. To obtain remaining K−2 centers step-8 of FEKM is repeated.

Selection of first two initial centers by discovering the farthest pair (a) Mean of data set is assigned as data1 and prev (b) Farthest point from data1 is data2 (c) Farthest point from data2 found and new farthest point is labeled as data2 and original data2 is labeled as data1 (d) data1 is labeled as prev(e) Step (c) and (d) are repeated until prev and data2 refers to the same data point (f) data1 and data2 are the farthest pair and two initial centers.

Step 1 of the algorithm traverses all data points once to find their mean making its complexity Ɵ (n). Step 5 is used to leap from the current data point to the farthest point in order to skip redundant comparisons needed to compute the farthest pair. If we assumes number of leaps is i, then step 5 iterates i * n times. However, i is much smaller than n. So, the complexity of step5 is O(n).Then step 8 of the FEKM is used to compute remaining K−2 centers making complexity of step7 of FLCS O (n). Therefore, overall complexity of FLCS is O (n).

Lemma:

The number of leaps in FLCS will always be less than the total number of data points.

Given: A set of data points S, and number of leaps I.

To prove: I <|S|

Proof:

Let us construct a convex hull on data set S with a set of vertices V. So, there exist no data points which will be present outside the hull (Cormen25).

Now, there are exactly two spaces from which the method can leap.

Case I: Leaping from within the convex hull:

The farthest point from any point inside the convex hull is a vertex of the hull, as there exist no other points beyond the convex hull.

Case II: Leaping from any vertex of the convex hull:

The farthest point from a vertex of the hull will be another vertex.

By considering the above two cases it can be concluded that, once the procedure starts leaping from the first point present inside the convex hull (the first point is obtained by taking the mean of all data points), then it is not possible to leap to any other points present inside the hull. The only possibility is a leaping between the points which belongs to V.

Therefore, number of leaps, I ≤|V|≤ <\({|\mathrm{S}|}^\frac{2}{3}\)|S| (Hence, proved).

Parameters for evaluation

The quality of clusters formed after the clustering process is evaluated by using few validity indices such as DI, DBI and SC. These indices measure the goodness of clusters on basis of their inter-cluster, intra-cluster distance and similarity of instances present in their created clusters. The data sets contain the class label which is used as ground truth label to compute the clustering accuracy through Rand Index. The clustering outcomes are assessed to verify if they generate perfectly homogenous and complete subgroups. For this V measure was chosen as a parameter for evaluation. The execution time of all considered methods is recorded for each of the input data sets to practically verify their efficiency.

Validity indices

Cluster validation techniques are used to measure the quality of a cluster appropriately. Few validity indices used for this purpose are given as follows:

Dunn’s index (DI)

DI measure (Dunn29) is used to minimize the intra-cluster and maximize the inter-cluster distances. It can be defined as follows-

d is any given distance function used for this purpose, and Aj is a set consisting of the data points which are assigned to the ith cluster. Usually, any method which produces a larger value of DI determines that the cluster formed is compact and well-separated from other clusters.

Davies–Bouldin’s index (DBI)

DBI (Davies et al.30) is the ratio of the sum of data currently present within a cluster to those data remaining outside it. The data present within ith cluster distribution is given by:

The data which are between ith and jth partition is given by:

where \(\underline {v}_{i}\) is denoted as the ith cluster center, and both q & t are numerical values that can be chosen independently of each other and (q, t) ≥ 1. \(\left| {A_{i} } \right|\) is the number of elements that are present in Ai.

Subsequently, \({\text{R}}_{{{\rm i,qt}}}\) is determined which is given by the equation:

Finally, Davies–Bouldin’s index is obtained which is specified as follows:

The objective of the clustering methods should focus on obtaining a minimum value of DBI for achieving proper clustering.

Silhouette coefficient (SC)

In SC (Rousseeuw and Silhouettes31), for any data point di , initially the average distance from di to all other data points belonging to its own cluster is determined, which is denoted as a. Then, the minimum average distance from di to all other data points present in other clusters are determined, which is b. Then the silhouette coefficient is calculated as follows:

The silhouette value s ranges between 0 and 1. If s is close to 1, it indicates that the sample is well-clustered, if silhouette value is almost equal to zero, it indicates that the sample lies equally far away from both the clusters, and if silhouette value is equal to -1, it indicates that the sample is somewhere in between the clusters. The number of cluster with maximum average silhouette width is taken as the optimal number of the clusters.

Clustering accuracy

The accuracy of a cluster can be measured by using Rand Index (Rand32). Given N data points in a set D = {D1, D2, …, DN} and two clustering of them to compare C = {C1, C2, …, CK1} and C′ = {C′1, C′2, …, C′K1}, we define Rand index as:

where a is number of data pairs in D that are in the same subsets of both C and C′, b is number of data pairs in D that are in different subsets of both C and C′, c is number of data pairs in D that are in same subset of C and different subsets in C′, d is number of data pairs in D that are in different subset of C and same subsets in C′. The value for R is between 0 and 1, with 0 representing two clustering do not agree on any pair of data points and 1 representing the clustering are precisely identical. Liu33, Guo et al.34, and Zou et al.35 investigated data collection in wireless powered underground sensor networks assisted by machine intelligence, matrix algebra in directed networks, and limited sensing and deep data mining, respectively. Shen et al.36, Cao et al.37, and Sheng et al.38 respectively examined the modeling of relation paths for knowledge graph completion, optimization based on mobile data, and dataset for semantic segmentation of urban scenes. Lu et al.39, Li et al.40, and Xie et al.41 considered multiscale feature extraction and fusion of images, patterns across mobile app usage, and a simple Monte Carlo method for estimating the chance of a cyclone impact. Recently, Liu et al.42, Li et al.43, and Fan et al.44 developed a multi-labeled corpus of Twitter short texts, studied the long-term evolution of mobile app usage, and proposed axial data modeling via hierarchical Bayesian nonparametric models.

Homogeneity, completeness and V-measure

A cluster is said to be perfectly homogenous when it contains data points belonging to a single class label. Homogeneity reduces as data points belonging to different class labels are present in the same cluster. Similarly, a cluster is said to be perfectly complete if all the data points belonging to a class label are present in the same cluster. When the number of data points of a particular class label are distributed in different clusters, the completeness of the cluster decreases.

If a data set contains N number of data, cl different class labels, separated into K clusters and d data points belonging class c and cluster i then,

where F(cl, K)=\(-\sum_{\mathrm{i}=1}^{\mathrm{K}}\sum_{\mathrm{c}=1}^{\mathrm{cl}}\frac{\mathrm{d}}{\mathrm{N}}\mathrm{log}\left(\frac{\mathrm{d}}{\sum_{\mathrm{c}=1}^{\mathrm{cl}}\mathrm{d}}\right)\) and F(cl) = \(-\sum_{\mathrm{c}=1}^{\mathrm{cl}}\frac{\sum_{\mathrm{i}=1}^{\mathrm{K}}\mathrm{d}}{\mathrm{cl}}\mathrm{log}(\frac{\sum_{\mathrm{i}=1}^{\mathrm{K}}\mathrm{d}}{\mathrm{cl}} )\)

where F(K, cl) =\(-\sum_{\mathrm{c}=1}^{\mathrm{cl}}\sum_{\mathrm{i}=1}^{\mathrm{K}}\frac{\mathrm{d}}{\mathrm{N}}\mathrm{log}\left(\frac{\mathrm{d}}{\sum_{\mathrm{i}=1}^{\mathrm{K}}\mathrm{d}}\right)\) and F(K) = \(-\sum_{\mathrm{i}=1}^{\mathrm{K}}\frac{\sum_{\mathrm{c}=1}^{\mathrm{cl}}\mathrm{d}}{\mathrm{cl}}\mathrm{log}(\frac{\sum_{\mathrm{c}=1}^{\mathrm{cl}}\mathrm{d}}{\mathrm{cl}} )\)

where β can be set to favour homogeneity or completeness. If β is 1, then it favours both homogeneity and completeness equally. If β is greater than 1 then completeness is favoured and β less than 1 favours homogeneity.

Results and discussion

In order to analyze the practical performance of K-Means, FEKM, MCKM, MFQ and FLCS methods, we evaluated their results on various real world data sets [UCI Repository]. The characteristics of the data set are given as follows:

A diverse range of datasets are considered to evaluate the performance of the discussed methods. These data sets in Table 1 vary in dimensions, properties and classifications. Iris data set consists of three flower classes–setosa, virginica and versicolor. It has fifty instances in each class and a total of a hundred fifty instances. Each instance has four attributes- petal length & width and sepal length & width. Similarly, seed data set consists of two hundred ten instances, seven attributes and it contains wheat kernel of three classes- Kama, Rosa and Canadian. Adult data set consists of fourteen attributes- age, work class, final weight, education, marital-status, occupation, relationship, race, sex, capital gain, capital loss, hours per week and native-country. The data set is classified into two classes- person earning more than 50 k and those with less than or equal to 50 k per year. Balance data set contains six hundred twenty-five instances of a psychological experiment. Each instance has four features which include left weight, left distance, right weight and right distance. All instances divided into three classes- scale tip to right, tip to left and balanced. Haberman is the record from study of patients who survived from surgery of breast cancer which contains a record of three hundred six patients. Patients are classified into two categories- who died within five years and who survived five years or longer. It has three attributes- age of the patient at time of operation, year of operation and number of positive auxiliary nodes detected. TAE contains teaching performance of one hundred fifty-one teaching assistances categorized into- low, medium and high as per their performances. Wine data set is the result of chemical analysis of wine growth in Italy from three different cultivators with one hundred and seventy eight samples. Each instance has thirteen features- alcohol, malic acid, ash, alkalinity of ash, magnesium, total phenols, flavonoids, no flavonoid phenols, proanthocyanins color intensity, hue, OD315 of dilute wines and proline. Mushroom data set is record of eight thousand one hundred and twenty four mushroom samples with their twenty two characteristics. The data set is classified into two categories of mushroom- edible and poisonous.

It was discussed earlier in Section “Parameters for evaluation”(A) that, a greater value of DI or SC and a smaller value of DBI suggests better quality of cluster formation. Tables 2, 3 and 4 contains the DI, DBI and SC scores respectively where clustering loop is restricted to twenty iterations and K = 3. From these tables it can be observed that methods FEKM, MCKM, MCQ and FLCS perform better and form quality clusters than K-Means. MCQ and FLCS shows promising performance, which can be seen from the tables. In each table the best performing method is highlighted. Overall, it can be observed that for majority of the data sets for MCQ and FLCS performed better than other methods.

The accuracy of cluster formed is the next parameter for experimental evaluation. Rand index score which was discussed in Section “Parameters for evaluation”(b), was considered for this aspect. Normally, the value of Rand Index lies between 0 and 1 and value nearer to 1 signifies better accuracy. From Table Table 6 Homogeneity score of K-Means, FEKM, MCKM, MFQ and FLCS.Data setsK-meansFEKMMCKMMFQFLCSIris0.73640.75020.73640.75140.7514Balance0.08450.15890.07770.34510.3451Abalone0.06750.05880.12090.09160.0916Seed0.66450.70750.69340.70750.7075TAE0.01950.02650.02520.01860.0186Wine0.35610.37840.42880.39870.3987Glass0.11560.12460.12130.11760.1176Mushroom0.14550.17590.16540.19350.1935Adult0.00020.000310.000280.000310.00031Haberman0.00060.00080.00080.00120.00125, the accuracy of clustering of each method can be observed on the referred data sets. MFQ and FLCS have scored better than other methods in majority of the data sets as their Rand index values are closer to 1. Only in few cases like seed, wine, glass and mushroom, FEKM and MCKM have a better Rand index value than FLCS and MCQ. Figure 4 shows the accuracy of different clustering methods performed on different datasets.

Next, an analysis was made to verify whether the random selection of centers as performed by K-Means will generate stable and accurate clusters or those using an approach where the centers are initially chosen by any innovative way. For better visualization, four datasets were preferred for this purpose viz, iris, seed, TAE and Haberman whose accuracy scores varies significantly. Each method including K-Means, FEKM, MCKM, MFQ and FLCS were executed seven times taking these four datasets as inputs. From Fig. 5a the unpredictability of K-Means can be clearly observed. For every execution there is a variable accuracy score Fig. 5b. Due to random initial centers, there is unpredictability in the formation of clusters due to which varied accuracy scores are generated Fig. 5c, d. This confirms that, in some cases when the random centers are chosen accurately, they produce well-organized clusters whereas in other cases, when the random centers are not precise they create malicious subgroups. Conversely, methods like FEKM, MCKM, MFQ and FLCS gives a stable and predictable output on each execution.

The following parameter for evaluation was based on determining the homogeneity and completeness of clusters using V-measure. Tables 6, 7 and 8 below illustrates these facts. For most of the data sets V-measure is closer to 1 or is on higher side for MFQ and FLCS. This implies that the clusters obtained for different data sets are precise. However, those using K-Means are not effective since the centers randomly chosen may not be the near optimal ones. Figure 6 presents an analysis of FLCS vs. K-Means, FEKM and MCKM respectively based on V-measure score for different datasets. The graph indicates V-measure score of FLCS is comparatively higher than K-Means, FEKM and MCKM which suggests that the clusters obtained with FLCS are homogenous and complete.

Further experiments were conducted for calculating the time taken by all the methods in determining the initial cluster centers and their convergence of clustering loop. All algorithms were executed on a machine with 5th Gen Intel® i3 processor, 1.9 Ghz. clock speed and 4 GB RAM. Table 9 represents the actual execution time of all the algorithms for determining the initial centroids. From this table it can be seen that K-Means obtain the initial centers earlier than the others as this selection is done randomly. The average time taken for selection of cluster centers of each method is plotted in Fig. 7. The horizontal line in the graph represents the average time taken to select the initial centers of all the methods. From the graph it can be observed that FEKM and MFQ take slightly more time for finding the centers than the rest. MFQ by far takes the maximum out of them as its complexity increases with the increase in dimension of the data. MCKM and FLCS show similar performance, yet FLCS takes less time to compute the initial centers than MCKM due to its linear time complexity.

The clustering loop convergence time i.e. time taken for the cluster formation by each method on the given datasets is noted in Table 10. The best performing method on particular data set is highlighted. It can be observed from the results that, FLCS has least convergence time in five out of ten data sets. MFQ performs similarly because their initial centers are same. FEKM also have smaller convergence time in few datasets. The average clustering loop convergence time of each method is plotted in Fig. 8. The horizontal line indicates the average clustering loop convergence time of all methods. The plot shows that K-Means takes more time for formation of clusters due to bad random initial centers. K-Means is the only method above the average line. Other methods decide the subgroup formation below the average line. FLCS perform faster than other methods due to its significantly distinct initial clusters which are obtained in less time.

Finally, overall execution time of each method employed on the given datasets is recorded. K-Means is the fastest in center selection but its random initial centers results in large convergence time. From Table 11 and Fig. 9 it can be seen that, FEKM and MFQ have overall larger execution time than others since much of their computation is spent on selecting the near-optimal centers. On the other hand, MCKM and FLCS show promising results.

The performance and accuracy scores of MFQ and FLCS are more or less equal because the outcomes of the methods are always same. This is due to the fact that, both methods obtain exactly same K initial centers. However, the process of computing initial centers is totally different. From Fig. 9 difference can be clearly observed, FLCS is more efficient than MFQ in execution time.

Conclusion

The initial centre of a cluster is a decisive factor in its final formation as erroneous centroids may results in malevolent clustering. In this research, few approaches were suggested to decide the near optimal cluster centers. FEKM was proposed with an idea to obtain well separated clusters. It was quite effective with most of the datasets, only with a complexity issue which is in the higher side. In order to get a solution to this, MCKM was suggested. It improved the computational time to some extend but still was higher than K-Means which randomly selects its centers. For these reason FCGS and MFQ were used which selects the centers present only on the convex hull thereby, trims down the chance of considering all data points to be a candidate to form the centers. However, it was found that the clustering process was effective for datasets with two or three attributes only. Due to this reason, FLCS was suggested which is simple and effective in deciding the centers with lesser complexity. All these methods were thoroughly analyzed by considering the clustering effectiveness, correctness, homogeneity, completeness, complexity and their actual execution time of convergence. For this reason performance indices like DI, DBI, and SC index were used, for correctness Rand measure was used, for homogeneity and completeness V-measure was used. All these factors considered for testing the quality of cluster formation showed excellent results for all proposed algorithms as compared to K-Means clustering. This signifies the emergence of individual groups in which data present within the group remain at a closer proximity and the separation of data of one group to that of another is very far. Zhou et al.45, Cheng et al.46 and Lu et al.47 investigated water depth bias correction of bathymetric LiDAR point cloud data, situation-aware IoT service coordination and IoT service coordination using the event-driven SOA paradigm respectively. Quantifiable privacy preservation for destination prediction in LBSs, spatio-temporal analysis of trajectory data and energy-efficient framework for internet of things underlaying heterogeneous small cell networks respectively examined by Refs.48,49,50. Peng et al.51, Bao et al.52, Liu et al.53 recently examined the community structure in evolution of opinion formation, limited real-world training data and robust online tensor completion for IoT streaming data recovery respectively. Liu et al.54 worked on federated neural architecture search for medical sciences. Refs.55,56,57 exhibits the recent and newly development in the IoT field like next-generation wireless data center network, fusion network for transportation detection and wireless sensor networks with energy harvesting relay.

This work guided us to quite a few exciting and innovative research opportunities which can be further explored viz, both the time complexity and computation time of the suggested methods can be further reduced, the concept of convex hull can still be properly used to obtain better cluster centers on versatile data sets.

Data availability

The data will be made available on a reasonable request to the corresponding author.

References

Odell, P. L. & Duran, B. S. Cluster Analysis; A Survey. Lecture Notes in Economics and Mathematical Systems Vol. 100 (LNE, 1974).

Na, S., Xumin, L. and Yong, G. Research on K-means clustering algorithm—an improved K-means clustering algorithm. In IEEE 3rd Int. Symposium on Intelligent Info. Technology and Security Informatics, pp. 63–67 (2010).

Xu, R. & Wunsch, D. Survey of clustering algorithms. IEEE Trans. Neural Netw. 16, 645–678 (2005).

Cheung, Y. M. A new generalized K-means clustering algorithm. Pattern Recogn. Lett. 24, 2883–2893 (2003).

Li, S. Cluster center initialization method for K-means algorithm over data sets with two clusters. Int. Conf. Adv. Eng. 24, 324–328 (2011).

Nazeer, K. A. & Sebastian, M. P. Improving the accuracy and efficiency of the K-means clustering algorithm. Proc. World Congr. Eng. 1, 1–5 (2009).

Fuyuan Cao, F., Liang, J. & Jiang, G. An initialization method for the -means algorithm using neighborhood model. Comput. Math. Appl. 58, 474–483 (2009).

Kumar, A. & Kumar, S. Density based initialization method for K-means clustering algorithm. Int. J. Intell. Syst. Appl. 10, 40–48 (2017).

Kushwaha, N., Pant, M., Kant, S. & Jain, V. K. Magnetic optimization algorithm for data clustering. Pattern Recogn. Lett. 115, 59–65 (2018).

Mohammed, A. J., Yusof, Y. & Husni, H. Discovering optimal clusters using firefly algorithm. Int. J. Data Min. Model. Manag. 8, 330–347 (2016).

Fahim, A. Homogeneous densities clustering algorithm. Int. J. Inf. Technol. Comput. Sci. 10, 1–10 (2018).

Fahim, A. K and starting means for k-means algorithm. J. Comput. Sci. 55, 101445 (2021).

Khandare, A. & Alvi, A. Efficient clustering algorithm with enhanced cohesive quality clusters. Int. J. Intell. Syst. Appl. 7, 48–57 (2018).

Yao, X., Wang, J., Shen, M., Kong, H. & Ning, H. An improved clustering algorithm and its application in IoT data analysis. Comput. Netw. 159, 63–72 (2019).

Ren, Y., Kamath, U., Domeniconi, C. & Xu, Z. Parallel boosted clustering. Neurocomputing 351, 87–100 (2019).

Franti, P. & Sieranoja, S. How much can k-means be improved by using better initialization and repeats?. Pattern Recognit. Lett. 93, 95–112 (2019).

Mehta, V., Bawa, S. & Singh, J. Analytical review of clustering techniques and proximity measures. Artif. Intell. Rev. 53, 5995–6023 (2020).

Mehta, V., Bawa, S. & Singh, J. Stamantic clustering: Combining statistical and semantic features for clustering of large text datasets. Expert Syst. Appl. 174, 114710 (2021).

Shuai, Y. A Full-sample clustering model considering whole process optimization of data. Big Data Res. 28, 100301 (2022).

Nie, F., Li, Z., Wang, R. & Li, X. An effective and efficient algorithm for K-means clustering with new formulation. IEEE Trans. Knowl. Data Eng. 35, 3433–3443 (2022).

Ikotun, M., Ezugwu, A. E., Abualigah, L., Abuhaija, B. & Heming, J. K-means clustering algorithms: A comprehensive review, variants analysis, and advances in the era of big data. Inf. Sci. 622, 178–210 (2023).

Queen, J. M. Some methods for classification and analysis of multivariate observations. In Fifth Berkeley Symposium on Mathematics, Statistics and Probability, pp. 281–297 (University of California Press, 1967).

Mishra, K., Nayak, N. R., Rath, A. K. & Swain, S. Far efficient K-means clustering algorithm. In Proceedings of the International Conference on Advances in Computing, Communications and Informatics, pp. 106–110 (ACM, 2012).

Mishra, K., Rath, A. K., Nanda, S. K. & Baidyanath, R. R. Efficient intelligent framework for selection of initial cluster centers. Int. J. Intell. Syst. Appl. 11, 44–55 (2019).

Cormen, T. H., Leiserson, C. E., Rivest, R. L. & Stein, C. Introduction to Algorithms (MIT Press, 2009).

Graham, R. L. An efficient algorithm for determining the convex hull of a finite planar set. Inf. Process. Lett. 1, 132–133 (1972).

Barber, B., Dobkin, D. P. & Huhdanpaa, H. The Quickhull algorithm for convex hulls. ACM Trans. Math. Softw. 22, 469–483 (1996).

Jarník, V. Uber die Gitterpunkte auf konvexen Kurven. Math. Z. 24, 500–518 (1926).

Dunn, J. C. A fuzzy relative of the ISODATA process and its use in detecting compact well-separated clusters. J. Cybern. 3, 32–57 (1973).

Davies, L. & Bouldin, D. W. A cluster separation measure. IEEE Trans. Pattern Anal. Mach. Intell. 1, 224–227 (1979).

Rousseeuw, P. & Silhouettes, J. A graphical aid to the interpretation and validation of cluster analysis. J. Comput. Appl. Math. 20, 53–65 (1987).

Rand, W. M. Objective criteria for the evaluation of clustering methods. J. Am. Stat. Assoc. 66, 846–850 (1971).

Liu, G. Data collection in MI-assisted wireless powered underground sensor networks: directions, recent advances, and challenges. IEEE Commun. Mag. 59, 132–138 (2021).

Guo, F., Zhou, W., Lu, Q. & Zhang, C. Path extension similarity link prediction method based on matrix algebra in directed networks. Comput. Commun. 187, 83–92 (2022).

Zou, W. et al. Limited sensing and deep data mining: A new exploration of developing city-wide parking guidance systems. IEEE Intell. Transp. Syst. Mag. 14, 198–215 (2022).

Shen, Y., Ding, N., Zheng, H. T., Li, Y. & Yang, M. Modeling relation paths for knowledge graph completion. IEEE Trans. Knowl. Data Eng. 33, 3607–3617 (2021).

Cao, B., Zhao, J., Lv, Z. & Yang, P. Diversified personalized recommendation optimization based on mobile data. IEEE Trans. Intell. Transp. Syst. 22, 2133–2139 (2021).

Sheng, H. et al. UrbanLF: A comprehensive light field dataset for semantic segmentation of urban scenes. IEEE Trans. Circuits Syst. Video Technol. 32, 7880–7893 (2022).

Lu, S. et al. Multiscale feature extraction and fusion of image and text in VQA. Int. J. Comput. Intell. Syst. 16, 54 (2023).

Li, T. et al. To what extent we repeat ourselves? Discovering daily activity patterns across mobile app usage. IEEE Trans. Mobile Comput. 21, 1492–1507 (2022).

Xie, X., Xie, B., Cheng, J., Chu, Q. & Dooling, T. A simple Monte Carlo method for estimating the chance of a cyclone impact. Nat. Hazards 107, 2573–2582 (2021).

Liu, X. et al. Developing multi-labelled corpus of Twitter short texts: A semi-automatic method. Systems 11, 390 (2023).

Li, T., Fan, Y., Li, Y., Tarkoma, S. & Hui, P. Understanding the long-term evolution of mobile app usage. IEEE Trans. Mobile Comput. 22, 1213–1230 (2023).

Fan, W., Yang, L. & Bouguila, N. Unsupervised grouped axial data modeling via hierarchical Bayesian nonparametric models with watson distributions. IEEE Trans. Pattern Anal. Mach. Intell. 44, 9654–9668 (2022).

Zhou, G. et al. Adaptive model for the water depth bias correction of bathymetric LiDAR point cloud data. Int. J. Appl. Earth Observ. Geoinform. 118, 103253 (2023).

Cheng, B., Zhu, D., Zhao, S. & Chen, J. Situation-aware IoT service coordination using the event-driven SOA paradigm. IEEE Trans. Netw. Serv. Manag. 13, 349–361 (2016).

Lu, S. et al. The multi-modal fusion in visual question answering: A review of attention mechanisms. PeerJ Comput. Sci. 9, e1400 (2023).

Jiang, H., Wang, M., Zhao, P., Xiao, Z. & Dustdar, S. A utility-aware general framework with quantifiable privacy preservation for destination prediction in LBSs. IEEE/ACM Trans. Netw. 29, 2228–2241 (2021).

Xiao, Z. et al. Understanding private car aggregation effect via spatio-temporal analysis of trajectory data. IEEE Trans. Cybern. 53(4), 2346–2357 (2023).

Jiang, H. et al. An energy-efficient framework for internet of things underlaying heterogeneous small cell networks. IEEE Trans. Mobile Comput. 21, 31–43 (2022).

Peng, Y., Zhao, Y. & Hu, J. On the role of community structure in evolution of opinion formation: A new bounded confidence opinion dynamics. Inf. Sci. 621, 672–690 (2023).

Bao, N. et al. A deep transfer learning network for structural condition identification with limited real-world training data. Struct. Control Health Monit. 2023, 8899806 (2023).

Liu, C., Wu, T., Li, Z., Ma, T. & Huang, J. Robust online tensor completion for IoT streaming data recovery. IEEE Trans. Neural Netw. Learn. Syst. https://doi.org/10.1109/TNNLS.2022.3165076 (2022).

Liu, X., Zhao, J., Li, J., Cao, B. & Lv, Z. Federated neural architecture search for medical data security. IEEE Trans. Ind. Inform. 18, 5628–5636 (2022).

Cao, B. et al. Multiobjective 3-D topology optimization of next-generation wireless data center network. IEEE Trans. Ind. Inform. 16, 3597–3605 (2020).

Chen, J. et al. Disparity-based multiscale fusion network for transportation detection. IEEE Trans. Intell. Transp. Syst. 23, 18855–18863 (2022).

Ma, K. et al. Reliability-constrained throughput optimization of industrial wireless sensor networks with energy harvesting relay. IEEE Internet Things J. 8, 13343–13354 (2021).

Acknowledgements

Researchers Supporting Project number (RSPD2023R1060), King Saud University, Riyadh, Saudi Arabia.

Funding

This research received funding from King Saud University through Researchers Supporting Project Number (RSPD2023R1060), King Saud University, Riyadh, Saudi Arabia.

Author information

Authors and Affiliations

Contributions

All authors are equally contributed in the research work.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Mishra, B.K., Mohanty, S., Baidyanath, R.R. et al. An efficient framework for obtaining the initial cluster centers. Sci Rep 13, 20821 (2023). https://doi.org/10.1038/s41598-023-48220-3

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-023-48220-3

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.