Abstract

In nature and society, problems that arise when different interests are difficult to reconcile are modeled in game theory. While most applications assume that the players make decisions based only on the payoff matrix, a more detailed modeling is necessary if we also want to consider the influence of correlations on the decisions of the players. We therefore extend here the existing framework of correlated strategies by giving the players the freedom to respond to the instructions of the correlation device by probabilistically following or not following its suggestions. This creates a new type of game that we call “correlated games”. The associated response strategies that can solve these games turn out to have a rich structure of Nash equilibria that goes beyond the correlated equilibrium and pure or mixed-strategy solutions and also gives better payoffs in certain cases. Here we determine these Nash equilibria for all possible correlated Snowdrift games and find that these solutions can be described by Ising models in thermal equilibrium. We believe that our approach paves the way to a study of correlations in games that uncovers the existence of interesting underlying interaction mechanisms, without compromising the independence of the players.

Introduction

Game theory [1] has been used as a powerful tool to model problems from diverse research areas. In particular, evolutionary game theory [2] aims to explain why some strategies are selected in the presence of many players [3]. For a system of only two players who are informed solely by a payoff matrix, the solutions are very well understood, but when several players are involved it nevertheless becomes hard to predict the final equilibrium from the pair-wise interactions alone [4]. Game theory has found many applications in biology [5], economics [6,7,8], politics [9], and the social sciences [10,11]. In this respect, a longstanding challenge is to understand when individuals in groups end up cooperating, while their individual, rational strategy would be to defect [12], as occurs in the Prisoner’s Dilemma game [13]. This situation is relevant in evolutionary game theory because a number of biological systems can be modeled by this famous game [14], and as a consequence this issue has received much attention [5,15,16]. Also relevant is the increased understanding of complex networks [17], which has proved essential for studying the multiplayer situation because it gives more insight into the effect of the interaction structure on the cooperation of the players on the network [18,19]. Additionally, methods from statistical physics have proved fruitful for studying the phase-transition aspects of group behavior. Examples of such methods are Monte Carlo simulations and mean-field techniques, which for instance use Fermi-Dirac statistics to obtain the decisions of the players based on the decisions of their neighbors in the previous round [11,20]. As a natural development, these physics-based techniques have also been applied to players on different kinds of networks [13,18]. For games where the best individual strategy is to defect, this research has shown that cooperation depends strongly on, among other factors, specific game-theoretical parameter values and the network structure [4,20].

If the players play a Snowdrift game instead, the best individual response can sometimes be to cooperate. This explains the occurrence of cooperation as part of a mixed-strategy solution [2], but explaining how it becomes an emergent behavior for a large number of players is still complicated [21]. This game is of particular interest because it has been shown to model biological conflict [22,23]. Interestingly, the optimal payoff for this game is only achieved with communication between the players, and so it is worth exploring whether, in certain situations, third-party information might weigh on their decisions.

Games that incorporate extra information in this way are known in the literature as “expanded games”. In addition to the payoff matrix, they include a so-called correlation device, which is an external party that draws, with a fixed probability, one of the final configurations of the game and informs each of the players separately of what they should play to achieve that configuration [1]. The correlations can either be agreed upon beforehand by the players as a correlated strategy to solve the game or they can be externally imposed. If neither player has an incentive to deviate from the recommendations of the correlation device while the opponent follows them, the players are said to be in correlated equilibrium. Keeping both possible interpretations of the game in mind, we are interested in exploring the following questions. First, can each player obtain a better payoff than in correlated equilibrium by adopting a strategy over the correlations that differs from always complying with the instructions of the correlation device and that is still a Nash equilibrium? Second, if the initially agreed upon or externally imposed correlations do not allow for a correlated equilibrium, can the players still use the correlation device to find a better solution than returning to the case without correlations, that is, ignoring the correlation device and using the pure or mixed-strategy solutions of the game? To answer these questions, we introduce a new kind of strategy, which consists of a probability distribution over following or not following the correlation device.

With the ultimate goal of advancing the application of games to real-life situations and explaining group behavior from the pair-wise interactions, we focus in this paper on two-player games that are described by a payoff matrix and a correlation device, both of which are specified beforehand and remain unchanged during the game. In particular, the correlations can also be out of correlated equilibrium. From now on we call these games “correlated games”, and they can be solved by finding the Nash equilibria in the response probabilities to follow the instructions of the correlation device. By giving an added degree of freedom to players that are already subject to correlations, our results represent a considerable improvement on the analysis of this kind of game. For instance, our approach shows that a correlated equilibrium is always locally stable, but does not have to be stable globally, that is, there can exist different Nash equilibria that have a higher payoff. For practical applications, our treatment is promising because we expect that better outcomes can be achieved if we do not assume from the start that the players act solely based on their individual payoffs, but consider as well that their decisions can be informed by underlying correlations in the system. While the treatment is generalizable to any game, we study its effects on the Snowdrift game for two players. Because we are now dealing with direct interactions between the players, we can readily express our results also in terms of probabilities given by a Boltzmann distribution.

Correlated Games

We consider symmetric, two by two games. The players have access to the payoff matrix in Fig. 1, which specifies how much reward each player receives given the actions of all the players. Player 1 receives the payoff on the left side of the comma, while player 2 receives the payoff on the right side of the comma, dependent on the two strategies chosen. The different games are defined by the range of the parameters: the Harmony game has 0 < s < 1 and 0 < t < 1; the Stag-Hunt game has −1 < s < 0 and 0 < t < 1; the Prisoner’s Dilemma has −1 < s < 0 and 1 < t < 2; and the Snowdrift game, also known as the Chicken or Hawk-Dove game, has 0 < s < 1 and 1 < t < 2. Depending on the game being played, the players decide on the best strategy based on how much they will win given all the possible strategies of their adversaries. For the uncorrelated case, the objective is to maximize the expected payoff by assigning a probability \({P}_{C}\) to playing C (to cooperate), so that D (to defect) is played with the probability \(1-{P}_{C}\). A Nash equilibrium [24] is reached for a strategy, i.e., a value of \({P}_{C}\), that none of the players wants to deviate from. If \({P}_{C}\) is 0 or 1, it is a pure Nash equilibrium, otherwise it is a mixed-strategy equilibrium. The mixed-strategy solution is of particular importance in the Snowdrift game. This game has two pure Nash equilibria in which the players adopt opposite pure strategies, but these cannot be reached without introducing correlations between the players. Therefore, the best solution is a mixed-strategy Nash equilibrium, where the probability of playing C for each player is \({P}_{C}^{\ast }=s/(t+s-1)\).
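The mixed-strategy value can be checked numerically. The sketch below assumes the usual parametrization of the payoff matrix (a player receives 1 for CC, s for cooperating against a defector, t for defecting against a cooperator, and 0 for DD). This choice is an assumption about Fig. 1, made because it reproduces \({P}_{C}^{\ast }=s/(t+s-1)\); the function names are illustrative only.

```python
def mixed_strategy_pc(s, t):
    """Probability of cooperating in the uncorrelated mixed-strategy equilibrium."""
    return s / (t + s - 1.0)


def payoff_against(action, p_c, s, t):
    """Expected payoff of a pure action against an opponent who plays C with p_c.

    Assumed payoffs of the acting player: CC -> 1, CD -> s, DC -> t, DD -> 0.
    """
    if action == "C":
        return p_c * 1.0 + (1.0 - p_c) * s
    return p_c * t  # defecting against a defector pays 0


s, t = 0.5, 1.2  # Snowdrift parameters used in Fig. 2
p_star = mixed_strategy_pc(s, t)

# At the mixed equilibrium a player is indifferent between C and D.
payoff_c = payoff_against("C", p_star, s, t)
payoff_d = payoff_against("D", p_star, s, t)
print(p_star, payoff_c, payoff_d)
```

Indifference between the two pure actions is what makes any mixture a best reply, which is why neither player gains by changing \({P}_{C}\) at this point.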

This solution for the Snowdrift game is the best that the players can do without communicating, but, for most parameters, better results are obtained if they can systematically play opposite strategies. This, however, requires the introduction of correlations between the players. To illustrate this, we consider the extreme example of a simple traffic light at a crossroads. The crossroads can also be described as a Snowdrift game, where the best payoffs are obtained when one of the drivers decides to stop (to cooperate) and the other decides to go (to defect). Since the players cannot communicate, a traffic light is needed to achieve the optimal situation. The traffic light can be seen as the correlation device, which assigns a publicly known probability \({p}_{\mu \nu }\) to a certain state μν, with Greek indices taking the values C and D. In this particular example, the device assigns equal probabilities to the states CD and DC, while assigning zero probability to CC and DD. Since the correlation is very strong and there is a big penalty if both players defect simultaneously, the players always want to follow the correlation device, and the game is thus in a “correlated equilibrium”. A general correlation device, however, assigns non-zero probabilities to all the possible outcomes of a game. If this is the case for the states with low payoff, the question arises whether always following is the best strategy for the players. According to the existing theory [25], they should always follow the correlation device if they are in correlated equilibrium, and they should fall back to the uncorrelated mixed-strategy solution otherwise. This ensures that the probabilities in correlated equilibrium coincide with the final distribution of outcomes, such that they represent the actual statistics of the game. Thus, if the players always follow the instructions from the correlation device, this distribution perfectly describes the actions of the players.

To show that this is not the complete picture, we now allow the players to deviate from the instructions of the correlation device in a controlled manner. This forms a new game, a “correlated game”, where the players now play against the correlation device, having an option to follow or not follow the received instructions.

Response Strategy

To solve the correlated game, we introduce a “response strategy” built on the decisions to follow or not to follow the instructions. The probabilities to act in either way become the new actions that the players can take, while they are still not able to communicate. To implement this, each player i can follow with probability \({P}_{F\mu }^{i}\), and thus not follow with probability \({P}_{NF\mu }^{i}=1-{P}_{F\mu }^{i}\), the instruction μ that they receive. We call these probabilities the “response probabilities”. The renormalized probability \({p}_{\mu \nu }^{R}\) that a certain final state μν is reached is given by the sum over the initial probability distribution weighted by the probability that the initial state μ′ν′ gets converted to the specific final state μν through the players’ responses. Hence [26,27,28,29]

\[{p}_{\mu \nu }^{R}=\sum _{\mu ^{\prime} ,\nu ^{\prime} }{P}_{\mu \leftarrow \mu ^{\prime} }^{1}\,{P}_{\nu \leftarrow \nu ^{\prime} }^{2}\,{p}_{\mu ^{\prime} \nu ^{\prime} },\tag{1}\]

with \({P}_{\mu \leftarrow \mu ^{\prime} }^{i}\) the probability that player i is told to play μ′ but plays μ, so that \({P}_{\mu \leftarrow \mu }^{i}={P}_{F\mu }^{i}\) and, for μ ≠ μ′, \({P}_{\mu \leftarrow \mu ^{\prime} }^{i}={P}_{NF\mu ^{\prime} }^{i}\). As an example, the probability that the final state is CC is now

\[{p}_{CC}^{R}={P}_{FC}^{1}{P}_{FC}^{2}\,{p}_{CC}+{P}_{FC}^{1}{P}_{NFD}^{2}\,{p}_{CD}+{P}_{NFD}^{1}{P}_{FC}^{2}\,{p}_{DC}+{P}_{NFD}^{1}{P}_{NFD}^{2}\,{p}_{DD}.\tag{2}\]

The expected payoff of a player is given by the payoffs averaged over the renormalized probabilities, which depends linearly on the response probabilities of that player as

\[\langle {u}^{i}\rangle =\sum _{\mu ,\nu }{u}_{\mu \nu }^{i}\,{p}_{\mu \nu }^{R}={C}_{C}\,{P}_{FC}^{i}+{C}_{D}\,{P}_{FD}^{i}+{C}_{E},\tag{3}\]

with \({u}_{\mu \nu }^{i}\) the payoff of player i in the state μν. The coefficients \({C}_{C}\), \({C}_{D}\) and \({C}_{E}\) arise when we group the terms in the sum according to their dependence on the probabilities of player i. They depend linearly on the initial correlation probabilities and on the response probabilities of the opponent. In essence, \({C}_{C}\) and \({C}_{D}\) tell us how the average payoff of one player changes when this player changes their response probabilities.
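The renormalization of the device probabilities by the players' responses can be sketched in a few lines. The code below is an illustration under stated assumptions, not the authors' implementation: states are written as two-letter strings with player 1's instruction first, each response strategy is a dictionary of follow probabilities keyed by the instruction received, and player 1's payoffs use the assumed parametrization CC → 1, CD → s, DC → t, DD → 0.

```python
from itertools import product

ACTIONS = ["C", "D"]


def response(p_follow, played, told):
    """Probability that a player who is told `told` ends up playing `played`."""
    pf = p_follow[told]
    return pf if played == told else 1.0 - pf


def renormalize(p, follow1, follow2):
    """Distribution over final states, given the device distribution p."""
    return {
        mu + nu: sum(
            response(follow1, mu, mu0) * response(follow2, nu, nu0) * p[mu0 + nu0]
            for mu0, nu0 in product(ACTIONS, ACTIONS)
        )
        for mu, nu in product(ACTIONS, ACTIONS)
    }


def expected_payoff_1(p, follow1, follow2, s, t):
    """Payoff of player 1 averaged over the renormalized probabilities."""
    u1 = {"CC": 1.0, "CD": s, "DC": t, "DD": 0.0}  # assumed payoff matrix
    p_r = renormalize(p, follow1, follow2)
    return sum(u1[state] * p_r[state] for state in p_r)


p = {"CC": 0.2, "CD": 0.3, "DC": 0.3, "DD": 0.2}
always = {"C": 1.0, "D": 1.0}
never = {"C": 0.0, "D": 0.0}
print(renormalize(p, always, always))  # reproduces p
print(renormalize(p, never, never))    # CC and DD are exchanged
```

Because each response probability enters the sum at most once per term, the expected payoff is linear in a player's own response probabilities, which is what makes the slope analysis of the next paragraphs possible.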

A Nash equilibrium in the response strategy is achieved if there is no incentive for player i to change the probabilities \({P}_{F\mu }^{i}\). This is achieved by imposing that the slope of the expected payoff with respect to each response probability, either \({C}_{C}\) or \({C}_{D}\) in the evaluation of the player’s total payoff, is zero, unless the equilibrium response probability is 0 or 1, in which case the slope should be negative or positive, respectively. The intuition is that equilibrium is reached when the payoff of the players cannot be improved anymore by changing their own response probabilities while keeping those of the other players fixed at the equilibrium values. Carrying out this analysis separately for each response probability represents the Bayes rationality [25] of the players towards the final states given the initial information that they receive.

As a result, there are three possible Nash equilibria for each response probability: \({P}_{F\mu }=0\), \({P}_{F\mu }=1\) and \(0 < {P}_{F\mu } < 1\). In our two by two games, this amounts to nine possible types of equilibrium response strategies, but which ones are actually realized depends on the payoff parameters. We find that the conditions under which “always follow”, i.e., \({P}_{F\mu }=1\), is a stable solution correspond to the Bayes rational conditions of the correlated equilibrium, indicating that this is only one possible response equilibrium. However, each renormalized set of probabilities generates a new correlated game for which the response equilibrium exactly matches a correlated equilibrium, from which the players by definition indeed do not want to deviate. Using the slopes \({C}_{C}\) and \({C}_{D}\) to evaluate the Nash equilibria, each of the response probabilities has to satisfy one of the three above-mentioned conditions simultaneously. To guarantee that none of the players wants to deviate, each slope is calculated assuming that the response probabilities of the other players are in equilibrium, such that a self-consistent solution is obtained (see Supplementary Material).
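The sign conditions on the slopes can be turned into a simple numerical test. The sketch below is a hypothetical helper, not the procedure of the Supplementary Material: it evaluates each slope exactly as the payoff difference between response probability 1 and 0 (valid because the payoff is linear), assumes the payoff parametrization CC → 1, CD → s, DC → t, DD → 0 for player 1, and checks a symmetric profile in which both players use the same response probabilities against a symmetric device.

```python
from itertools import product


def expected_payoff_1(p, f1, f2, s, t):
    """Expected payoff of player 1 for device p and response strategies f1, f2."""
    u1 = {"CC": 1.0, "CD": s, "DC": t, "DD": 0.0}  # assumed payoff matrix
    total = 0.0
    for told1, told2, play1, play2 in product("CD", repeat=4):
        w1 = f1[told1] if play1 == told1 else 1.0 - f1[told1]
        w2 = f2[told2] if play2 == told2 else 1.0 - f2[told2]
        total += p[told1 + told2] * w1 * w2 * u1[play1 + play2]
    return total


def slope(p, f2, told, s, t):
    """Slope of player 1's payoff in the probability of following `told`."""
    other = "D" if told == "C" else "C"
    high = expected_payoff_1(p, {told: 1.0, other: 0.5}, f2, s, t)
    low = expected_payoff_1(p, {told: 0.0, other: 0.5}, f2, s, t)
    return high - low


def is_response_equilibrium(p, f, s, t, tol=1e-9):
    """True if the symmetric profile f satisfies the slope conditions."""
    for told in "CD":
        c = slope(p, f, told, s, t)
        if f[told] <= tol:            # never follow: slope must not be positive
            ok = c <= tol
        elif f[told] >= 1.0 - tol:    # always follow: slope must not be negative
            ok = c >= -tol
        else:                         # interior value: slope must vanish
            ok = abs(c) <= tol
        if not ok:
            return False
    return True


s, t = 0.5, 1.2
traffic_light = {"CC": 0.0, "CD": 0.5, "DC": 0.5, "DD": 0.0}
crowded = {"CC": 0.1, "CD": 0.2, "DC": 0.2, "DD": 0.5}
always = {"C": 1.0, "D": 1.0}
mixed = {"C": s / (t + s - 1.0), "D": (t - 1.0) / (t + s - 1.0)}

print(is_response_equilibrium(traffic_light, always, s, t))  # True
print(is_response_equilibrium(crowded, always, s, t))        # False
print(is_response_equilibrium(crowded, mixed, s, t))         # True
```

The last call illustrates the statement that the uncorrelated mixed strategy, written as a response strategy, remains an equilibrium even for a device that is out of correlated equilibrium.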

Ising Model

Another way of representing any symmetric, and thus possibly correlated, probability distribution over the four possible states is through a Boltzmann distribution of an Ising system. Let each player be represented by a particle with spin, and let C and D correspond to the spin states “up” and “down”, such that μ, ν ∈ {↑, ↓}. We can rewrite the probabilities as

\[{p}_{\mu \nu }=\frac{{e}^{-\beta {H}_{\mu \nu }}}{Z},\tag{4}\]

with β the inverse of a generalized thermal energy, \({H}_{\mu \nu }\) the Hamiltonian of the Ising system, decomposable into the sum of a constant term that can be absorbed into the partition function, an Ising term and a Zeeman term [30], and \(Z={\sum }_{\mu ,\nu }{e}^{-\beta {H}_{\mu \nu }}\) the partition function.
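To make the Ising representation concrete, the following sketch maps a symmetric distribution with strictly positive entries onto a dimensionless coupling and field and back. The sign convention is an assumption chosen for illustration: C corresponds to spin +1, D to spin −1, and the Hamiltonian is taken as \(H=-J{\sigma }_{1}{\sigma }_{2}-h({\sigma }_{1}+{\sigma }_{2})\) up to an additive constant; the names `beta_j` and `beta_h` are not from the text.

```python
import math


def ising_couplings(p_cc, p_dd):
    """Return (beta*J, beta*h) for a symmetric distribution with all entries > 0."""
    p_cd = (1.0 - p_cc - p_dd) / 2.0
    beta_j = 0.25 * math.log(p_cc * p_dd / p_cd**2)  # Ising (interaction) term
    beta_h = 0.25 * math.log(p_cc / p_dd)            # Zeeman (field) term
    return beta_j, beta_h


def boltzmann(beta_j, beta_h):
    """Boltzmann probabilities of the four states of the two-spin system."""
    spin = {"C": 1, "D": -1}
    weights = {
        a + b: math.exp(beta_j * spin[a] * spin[b] + beta_h * (spin[a] + spin[b]))
        for a in "CD"
        for b in "CD"
    }
    z = sum(weights.values())  # partition function
    return {state: w / z for state, w in weights.items()}


beta_j, beta_h = ising_couplings(0.2, 0.1)
print(beta_j, beta_h)
print(boltzmann(beta_j, beta_h))
```

In this convention an uncorrelated (product) distribution has a vanishing coupling, since then \({p}_{CC}\,{p}_{DD}={p}_{CD}^{2}\); a negative coupling corresponds to devices that favor the opposite-play states CD and DC.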

Having found the optimal response probabilities, we can now incorporate them in the Ising model that effectively describes the final statistics of the game. Using the language of statistical physics, the response probabilities in equilibrium can be written as

with the partition function given by

where \({B}_{\mu \leftarrow \mu ^{\prime} }^{i}\) are the appropriate energies that are explicitly given in the Methods section. The renormalized correlated probabilities, by eq. 4, are written in closed form as

Using eqs 4, 5 and 7, we are able to describe the renormalized Ising Hamiltonian as

The minus sign indicates the opposite play, i.e., −C = D and −D = C, or the corresponding interchange between spin states “up” and “down”. The renormalized Hamiltonian is obtained by the players choosing the energy parameters \({B}_{\mu \leftarrow \mu ^{\prime} }^{i}\) independently, which in this formalism is what allows the players to have complete control over the final probabilities. How eqs 5 and 8 are obtained is explicitly explained in the Methods section. Note that if eq. 8 is interpreted as a renormalization-group transformation, the Nash equilibria correspond to the fixed points of this transformation [30].

Results

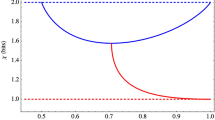

We studied the response strategies of the Snowdrift game for all initial (symmetric) correlation probabilities and show the results in Fig. 2. The lines that separate the regions in Fig. 2a are obtained by imposing a particular sign on a slope of a response probability and using the associated value for that probability. Between each straight line and the curved line, given by \({p}_{DD}=1+{p}_{CC}-2\sqrt{{p}_{CC}}\), there exists a solution with one of the slopes strictly greater or smaller than zero and the other one equal to zero, such that one of the response probabilities is 0 or 1 and we can find a value for the other probability that lies between these values. Each response probability associated with a zero slope can have values that range between the value of the other probability in the extreme and its associated mixed-strategy solution (due to the limiting condition that \({p}_{DD}\le 1-{p}_{CC}\)), represented in Fig. 2b by the dotted line. Below the lines, both slopes have the same sign and both probabilities are in the same extreme of the interval. The arrows in Fig. 2b depict how the values of the probabilities change as we move from the straight lines towards the curved line, which when reached sends the probabilities to the uncorrelated mixed-strategy solution that is always a solution in all regions. The upper line that delimits regions I and II and the rightmost line that delimits region II are the lines that arise when both slopes are positive. They correspond to the correlated equilibrium conditions. The intersection of these two lines is the mixed-strategy solution when written as a response strategy. Thus in these two regions the correlated equilibrium is a solution, although it is not unique or always optimal in payoff. Moreover, there are regions where the correlated equilibrium is unstable but other solutions exist that make use of the correlations to increase the payoff.

For s < t−1 the results are similar, but some of the lines swap: the rightmost boundary of region I becomes the leftmost, and the top boundary of region III becomes the bottom one, which changes the equilibria in regions II, III and VI correspondingly. When s = t−1 these boundaries overlap and these regions disappear. In addition to the expected existence of a correlated equilibrium region, where interestingly also other Nash equilibria exist, we see other regions with other types of equilibria that use correlations to optimize the payoff. The mixed strategy of the uncorrelated Snowdrift game is always an equilibrium solution in every region.
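As a worked illustration, the two correlated-equilibrium lines can be written out for the parameters of Fig. 2, s = 0.5 and t = 1.2. The derivation is a sketch that assumes the payoff parametrization CC → 1, CD → s, DC → t, DD → 0 for player 1 (consistent with \({P}_{C}^{\ast }=s/(t+s-1)\)), a symmetric device with \({p}_{CD}={p}_{DC}=(1-{p}_{CC}-{p}_{DD})/2\), and non-strict inequalities at the boundaries.

```latex
\begin{align}
C_C(p_{CC},p_{DD},1,1) &= p_{CC}\,(1-t) + p_{CD}\,s \ge 0
  &&\Rightarrow\quad
  p_{DD} \le 1 - \Bigl(1 + \tfrac{2(t-1)}{s}\Bigr)\,p_{CC} = 1 - 1.8\,p_{CC}, \\
C_D(p_{CC},p_{DD},1,1) &= p_{DC}\,(t-1) - p_{DD}\,s \ge 0
  &&\Rightarrow\quad
  p_{DD} \le \frac{t-1}{2s+t-1}\,(1-p_{CC}) = \tfrac{1}{6}\,(1-p_{CC}).
\end{align}
% The two lines intersect at p_CC = 25/49 and p_DD = 4/49,
% i.e. at (P_C^*)^2 and (P_D^*)^2 with P_C^* = 5/7 and P_D^* = 2/7.
```

The intersection point is the product distribution generated by the mixed strategy, and it also lies on the curved line, since \(\sqrt{25/49}+\sqrt{4/49}=1\).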

Figure 2. Symmetric correlations and associated equilibrium response strategies for the Snowdrift game with s = 0.5 and t = 1.2. (a) Illustration of all correlated Nash equilibria of the Snowdrift game in the PCC − PDD plane, for parameters representative of s > t − 1. (b) Schematic representation of the equilibrium value of the response probabilities in the PFD − PFC plane that can be found in each region enumerated in (a).

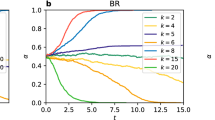

To choose the best response for each region, we compare in Fig. 3 the payoffs of all the possible response equilibria. As the parameters vary in the figures, the blue solution in region II and the orange solution in region III either increase or decrease in area. In Fig. 3b we see that the mixed strategy can be the best solution for a game with strong off-diagonal correlations, but this is not generally the case for all parameters. In the regions where the correlated equilibrium exists, there is, for certain initial probabilities, a better alternative solution. All the renormalized games correspond to final probabilities for which the correlated equilibrium is the best solution, such that the players no longer want to deviate from their chosen strategy. Because the mixed-strategy payoff is a constant, we see that correlations always provide a payoff that is at least as good.

Figure 3. Equilibrium response strategies with highest payoff per region, for different parameters. (a) Equilibria corresponding to highest payoff by region, for s = 0.5 and t = 1.2 (s > t − 1), without the mixed-strategy solution. (b) Equilibria corresponding to highest payoff, for the same parameters as (a), including the mixed-strategy solution. (c) Equilibria corresponding to highest payoff by region, for s = 0.23 and t = 1.5 (s < t − 1), including the mixed-strategy solution. The existing equilibrium solutions are compared within a region, according to the description in Fig. 2. The darkening of the colors represents a higher absolute value of the payoff when compared to the best payoffs of the neighboring regions, but the actual value changes within the region, except for the mixed-strategy solution, of which the payoff is constant. All the payoffs corresponding to the best solution are continuous to one another.

Discussion

The introduction of the possibility to follow or not follow the instructions from a correlation device, which we called a response strategy, opens up several new features in correlated games. We showed that the correlated equilibrium is only a particular response equilibrium, and that other Nash equilibria exist. These new equilibria renormalize to a correlated equilibrium even if the initial game is out of correlated equilibrium, showing that the players can even then use the correlations to achieve a better payoff. The extra information in the correlations is two-fold: either the final distribution of outcomes informs us about an underlying correlation structure, or the players can independently improve on externally imposed initial correlations, motivated by stability and payoff maximization. Regarding evolutionary game theory, the correlated evolutionarily stable strategy [27,31] is, similarly, only one equilibrium where the agents always follow the correlations, which suggests that the evolutionarily stable solution is not unique. The fact that the correlations might not completely determine the actions of the players but merely inform them is an essential feature of our model that allows the encoding of the choice of the players.

While extensive research has been done to study how humans behave when they play games on networks, particularly through simulations [10,32,33] and experiments [32,34,35], we hope to provide analytical insight into these systems, since for the two-player game the renormalized probabilities of the optimal Nash equilibrium are equivalent to a two-site Ising model in a magnetic field. To this effect, we show how the players can introduce an energy parameter to change the Hamiltonian to effectively obtain the renormalized probabilities. This provides a new way in which statistical physics methodology can be used to model a simple network with interactions, adding to the existing research that applies physics to phenomena that involve several agents [4,11,36,37], with particular emphasis on the applications in game theory [13,37,38]. Bridging the gap between games with and without correlations will also prove useful to better model decision-making, since the response probabilities allow us to include interactions between agents that influence their decisions. It is also worth mentioning other efforts to include the possibility of additional information and voluntary participation, such as coevolutionary games [39], in particular where the players have a third strategy that represents the option to abstain from playing [40], spatial games where the players are assigned a probability to abstain from playing [41], and multilayer networks [42]. How well our particular model describes non-local network effects remains an interesting open question for future research.

Methods

Slope analysis for the Snowdrift Game

In eq. 3 we have an expression for the expected payoff of player 1, with the coefficients CC and CD. To make the various dependencies clearer, we now denote these explicitly as \({C}_{C}({p}_{CC},\,{p}_{DD},\,{P}_{FC}^{2},\,{P}_{FD}^{2})\) and \({C}_{D}({p}_{CC},\,{p}_{DD},\,{P}_{FC}^{2},\,{P}_{FD}^{2})\), respectively.
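For orientation, the two coefficients can be written out explicitly by collecting, for each instruction that player 1 receives, the payoff difference between following and not following. The expressions below are a sketch derived under the assumption that player 1's payoffs are CC → 1, CD → s, DC → t and DD → 0 (the parametrization consistent with \({P}_{C}^{\ast }=s/(t+s-1)\)); they are not quoted from the original equations.

```latex
\begin{align}
C_C &= \bigl[p_{CC}\,P_{FC}^{2} + p_{CD}\,(1-P_{FD}^{2})\bigr](1-t)
     + \bigl[p_{CC}\,(1-P_{FC}^{2}) + p_{CD}\,P_{FD}^{2}\bigr]\,s, \\
C_D &= \bigl[p_{DC}\,P_{FC}^{2} + p_{DD}\,(1-P_{FD}^{2})\bigr](t-1)
     - \bigl[p_{DC}\,(1-P_{FC}^{2}) + p_{DD}\,P_{FD}^{2}\bigr]\,s.
\end{align}
% First bracket in each line: weight with which the opponent ends up playing C;
% second bracket: weight with which the opponent ends up playing D.
```

In each line the first bracket is the joint weight of player 1 receiving the given instruction while player 2 ends up cooperating, and the second bracket is the corresponding weight for player 2 defecting. Both coefficients vanish when player 2 cooperates with probability \({P}_{C}^{\ast }\) irrespective of the instruction, i.e., for \({P}_{FC}^{2}={P}_{C}^{\ast }\) and \({P}_{FD}^{2}={P}_{D}^{\ast }\).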

For the Snowdrift game, which is a symmetric game, it is natural to assume also a symmetric probability distribution, so pCD = pDC = (1 − pCC − pDD)/2. When both slopes are positive, the equilibrium will be at \({P}_{FC}^{1}=1\) and \({P}_{FD}^{1}=1\), and due to the symmetry of the game, player 1 will not want to change these probabilities when player 2 has the same equilibrium probabilities. To reach equilibrium, the conditions thus become

These conditions are equivalent to the correlated equilibrium conditions. Similarly, a second kind of equilibrium is reached when the slopes are negative and the response probabilities are zero, i.e.,

A third type of equilibrium exists when both slopes equate to zero

for which the solution coincides with the mixed-strategy equilibrium solution, with \({P}_{FC}^{1\ast }={P}_{FC}^{2\ast }={P}_{C}^{\ast }\) and \({P}_{FD}^{1\ast }={P}_{FD}^{2\ast }={P}_{D}^{\ast }\). The last type of equilibria comes in four possible guises, consisting of one of the conditions being zero, while the other is strictly positive or negative. For instance, we can have

If we calculate the specific value of the response probability using the first line of eq. 12 and substitute this value in the second line, a new condition arises for symmetric games, namely

Under this condition, and \({p}_{DD}\le 1-{p}_{CC}\), each equilibrium response probability that has a zero slope in the expected payoff has a limited range of possible values, which, depending on the sign of the associated inequality, goes from 1 or 0 to \({P}_{C}^{\ast }\) or \({P}_{D}^{\ast }\). Each of the four possible combinations corresponds to one of the four response equilibria of this kind. The solution of the example given above is \({P}_{FC}^{1\ast }=1\) and \({P}_{D}^{\ast } < {P}_{FD}^{1\ast } < 1\), with the specific value of \({P}_{FD}^{1\ast }\) depending on the initial correlated probabilities.
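The example equilibrium can be checked numerically. The sketch below assumes the payoff parametrization CC → 1, CD → s, DC → t, DD → 0 for player 1 and a symmetric profile in which both players use \({P}_{FC}=1\) and the same \({P}_{FD}\). Under these assumptions, setting the slope \({C}_{D}\) to zero gives \({P}_{FD}^{\ast }={P}_{D}^{\ast }({p}_{CD}+{p}_{DD})/{p}_{DD}\), and substituting this value gives \({C}_{C}=(t-1)({p}_{CD}^{2}/{p}_{DD}-{p}_{CC})\), which is positive exactly below the curved line of Fig. 2a. The function names and the sample device are illustrative only.

```python
def partially_mixed_follow_d(p_cc, p_dd, s, t):
    """P_FD for the symmetric equilibrium with P_FC = 1 and vanishing slope C_D."""
    p_cd = (1.0 - p_cc - p_dd) / 2.0
    p_d_star = (t - 1.0) / (t + s - 1.0)  # mixed-strategy probability of defecting
    return p_d_star * (p_cd + p_dd) / p_dd


def slopes_at(p_cc, p_dd, x, s, t):
    """Slopes (C_C, C_D) when the opponent uses P_FC = 1 and P_FD = x."""
    p_cd = (1.0 - p_cc - p_dd) / 2.0
    c_c = (p_cc + p_cd * (1.0 - x)) * (1.0 - t) + p_cd * x * s
    c_d = (p_cd + p_dd * (1.0 - x)) * (t - 1.0) - p_dd * x * s
    return c_c, c_d


s, t = 0.5, 1.2
p_cc, p_dd = 0.05, 0.5  # sample device below the curved line, too much weight on DD
x = partially_mixed_follow_d(p_cc, p_dd, s, t)
c_c, c_d = slopes_at(p_cc, p_dd, x, s, t)
print(x, c_c, c_d)
```

For this device always following is not an equilibrium, because a player who is told D gains by cooperating; lowering \({P}_{FD}\) to the value computed here restores the balance while \({P}_{FC}=1\) remains a best response.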

Due to the value of the Snowdrift game’s payoff parameters, the two other conditions that would arise from having the two conditions with opposite strict inequalities do not have solutions, so only seven equilibria exist in total for this game.

Note that in a game-theoretical notation, each of the above equilibrium conditions can be summarized as

This comprises two conditions, one for every value of μ′. Summing over these shows that the expected payoff of player 1 cannot be improved by deviating from the equilibrium strategy, which is the requirement for a Nash equilibrium. The stronger statement that both conditions are satisfied separately expresses the Bayes rationality of player 1.

Renormalized Ising Model

To insert the response probabilities in the Ising model, each reaction from the players will have a Zeeman-like energy: either \(-{B}_{\mu }^{i}\) if they follow μ, or \(+\,{B}_{\mu }^{i}\) if they do not follow:

We can then rewrite the response probabilities as

with \({Z}_{\mu ^{\prime} }^{i}\) as given in eq. 6. Using eq. 1, we calculate the renormalized Ising energies:

Introducing eq. 16 and the initial correlation probabilities with energies Hμ′ν′, with associated partition function Z, we get a simplified version of the renormalized energies:

The first term in eq. 17 drops out because we have that

Hence, we can rewrite \({H}_{\mu \nu }^{R}\) as in eq. 8. The fact that the renormalized partition function is a product of the various partition functions expresses that the actions of the players enter the renormalized Hamiltonian in an independent manner.
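The link between a response probability and a Zeeman-like energy can be illustrated with a two-level Boltzmann weight. The sketch below assumes, following the sign convention stated above, that following carries the energy −B and not following the energy +B, so that the follow probability is \({e}^{\beta B}/({e}^{\beta B}+{e}^{-\beta B})\). The function names are hypothetical and β = 1 is used as a default for convenience.

```python
import math


def zeeman_energy(p_follow, beta=1.0):
    """Energy B that reproduces a follow probability strictly between 0 and 1."""
    return math.log(p_follow / (1.0 - p_follow)) / (2.0 * beta)


def follow_probability(b, beta=1.0):
    """Two-level Boltzmann probability of following for a Zeeman-like energy b."""
    z = math.exp(beta * b) + math.exp(-beta * b)  # single-player partition function
    return math.exp(beta * b) / z


b = zeeman_energy(0.8)
print(b, follow_probability(b))
```

In this picture the pure responses “always follow” and “never follow” correspond to the limits B → +∞ and B → −∞, while B = 0 describes a player who ignores the instruction and follows it half of the time.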

References

1. Fudenberg, D. & Tirole, J. Game Theory (MIT Press, 1991).

2. Smith, J. M. & Price, G. R. The logic of animal conflict. Nature 246, 15 (1973).

3. Smith, J. M. Evolution and the Theory of Games (Cambridge University Press, 1982).

4. Perc, M., Gómez-Gardeñes, J., Szolnoki, A., Floría, L. M. & Moreno, Y. Evolutionary dynamics of group interactions on structured populations: a review. J. R. Soc. Interface 10, 20120997 (2013).

5. Nowak, M. A. & Sigmund, K. Evolutionary dynamics of biological games. Science 303, 793–799 (2004).

6. Kreps, D. M. Game Theory and Economic Modelling (Oxford University Press, 1990).

7. Friedman, D. On economic applications of evolutionary game theory. J. Evol. Econ. 8, 15–43 (1998).

8. Van der Ploeg, F. & de Zeeuw, A. Non-cooperative and cooperative responses to climate catastrophes in the global economy: a north–south perspective. Environ. Resour. Econ. 65, 519–540 (2016).

9. Morrow, J. D. Game Theory for Political Scientists (Princeton University Press, 1994).

10. Buskens, V. & Snijders, C. Effects of network characteristics on reaching the payoff-dominant equilibrium in coordination games: a simulation study. Dyn. Games Appl. 6, 477–494 (2016).

11. Perc, M. Phase transitions in models of human cooperation. Phys. Lett. A 380, 2803–2808 (2016).

12. Nowak, M. A. Five rules for the evolution of cooperation. Science 314, 1560–1563 (2006).

13. Hauert, C. & Szabó, G. Game theory and physics. Am. J. Phys. 73, 405–414 (2005).

14. Turner, P. E. & Chao, L. Prisoner’s dilemma in an RNA virus. Nature 398, 441 (1999).

15. MacLean, R. C. & Gudelj, I. Resource competition and social conflict in experimental populations of yeast. Nature 441, 498 (2006).

16. Szolnoki, A. et al. Cyclic dominance in evolutionary games: a review. J. R. Soc. Interface 11, 20140735 (2014).

17. Van Der Hofstad, R. Random graphs and complex networks. Available at http://www.win.tue.nl/rhofstad/NotesRGCN.pdf (2009).

18. Szabó, G. & Fath, G. Evolutionary games on graphs. Phys. Rep. 446, 97–216 (2007).

19. Allen, B. & Nowak, M. A. Games on graphs. EMS Surv. Math. Sci. 1, 113–151 (2014).

20. Szabó, G. & Tőke, C. Evolutionary prisoner’s dilemma game on a square lattice. Phys. Rev. E 58, 69 (1998).

21. Hauert, C. & Doebeli, M. Spatial structure often inhibits the evolution of cooperation in the snowdrift game. Nature 428, 643 (2004).

22. Gore, J., Youk, H. & Van Oudenaarden, A. Snowdrift game dynamics and facultative cheating in yeast. Nature 459, 253 (2009).

23. Zomorrodi, A. R. & Segrè, D. Genome-driven evolutionary game theory helps understand the rise of metabolic interdependencies in microbial communities. Nat. Commun. 8, 1563 (2017).

24. Nash, J. F. Equilibrium points in n-person games. Proc. Natl. Acad. Sci. 36, 48–49 (1950).

25. Aumann, R. J. Correlated equilibrium as an expression of Bayesian rationality. Econometrica 55, 1–18 (1987).

26. Mailath, G. J., Samuelson, L. & Shaked, A. Correlated equilibria and local interactions. Econ. Theory 9, 551–556 (1997).

27. Wong, K.-C., Kim, C. et al. Evolutionarily stable correlation. In Econometric Society 2004 Far Eastern Meetings, 495 (Econometric Society, 2004).

28. Lenzo, J. & Sarver, T. Correlated equilibrium in evolutionary models with subpopulations. Games Econ. Behav. 56, 271–284 (2006).

29. Metzger, L. P. Evolution and correlated equilibrium. J. Evol. Econ. 28, 333–346 (2018).

30. Stoof, H. T., Gubbels, K. B. & Dickerscheid, D. Ultracold Quantum Fields (Springer, 2009).

31. Cripps, M. Correlated equilibria and evolutionary stability. J. Econ. Theory 55, 428–434 (1991).

32. Rand, D. G., Arbesman, S. & Christakis, N. A. Dynamic social networks promote cooperation in experiments with humans. Proc. Natl. Acad. Sci. 108, 19193–19198 (2011).

33. Broere, J., Buskens, V., Weesie, J. & Stoof, H. Network effects on coordination in asymmetric games. Sci. Rep. 7, 17016 (2017).

34. Gracia-Lázaro, C. et al. Heterogeneous networks do not promote cooperation when humans play a prisoner’s dilemma. Proc. Natl. Acad. Sci. 109, 12922–12926 (2012).

35. Cassar, A. Coordination and cooperation in local, random and small world networks: experimental evidence. Games Econ. Behav. 58, 209–230 (2007).

36. Wang, Z. et al. Statistical physics of vaccination. Phys. Rep. 664, 1–113 (2016).

37. Adami, C. & Hintze, A. Thermodynamics of evolutionary games. Phys. Rev. E 97, 062136 (2018).

38. Perc, M. et al. Statistical physics of human cooperation. Phys. Rep. 687, 1–51 (2017).

39. Perc, M. & Szolnoki, A. Coevolutionary games—a mini review. BioSystems 99, 109–125 (2010).

40. Cardinot, M., Griffith, J. & O’Riordan, C. A further analysis of the role of heterogeneity in coevolutionary spatial games. Physica A 493, 116–124 (2018).

41. Cardinot, M., Griffith, J., O’Riordan, C. & Perc, M. Cooperation in the spatial prisoner’s dilemma game with probabilistic abstention. Sci. Rep. 8 (2018).

42. Wang, Z., Wang, L., Szolnoki, A. & Perc, M. Evolutionary games on multilayer networks: a colloquium. Eur. Phys. J. B 88, 124 (2015).

Acknowledgements

We thank Joris Broere and Vincent Buskens for discussions during the research. This work is supported by the Complex Systems Fund, with special thanks to Peter Koeze.

Author information

Contributions

A.D.C. implemented and formalized the response strategy and analyzed its consequences. H.T.C.S. contributed to the insight into and the interpretation of the response strategy. Both authors contributed to the writing of the manuscript.

Ethics declarations

Competing Interests

The authors declare no competing interests.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Correia, A.D., Stoof, H.T.C. Nash Equilibria in the Response Strategy of Correlated Games. Sci Rep 9, 2352 (2019). https://doi.org/10.1038/s41598-018-36562-2

DOI: https://doi.org/10.1038/s41598-018-36562-2
