Abstract

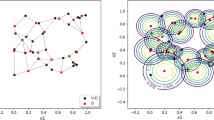

Complexity control of dynamic neural models has recently gained significant attention. The performance of such a process depends strongly on the definition of ‘model complexity’ that is applied. Learning theory, in turn, provides a framework for assessing the learning properties of models, including the required size of the training sample and the statistical confidence in the model. In this Letter, we use the learning properties of two families of Radial Basis Function Networks (RBFNs) to introduce new complexity measures that reflect the learning properties of such neural models. Based on these complexity terms, we then define cost functions that provide a balance between the training and testing performance of the model.

Cite this article

Najarian, K. Learning-Based Complexity Evaluation of Radial Basis Function Networks. Neural Processing Letters 16, 137–150 (2002). https://doi.org/10.1023/A:1019999408474

DOI: https://doi.org/10.1023/A:1019999408474