Abstract

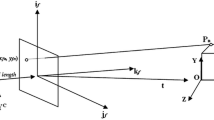

This paper examines the inherent difficulties in observing 3D rigid motion from image sequences. Rather than analyzing a particular estimator, it presents a statistical analysis of all possible computational models that can be used to estimate 3D motion from an image sequence. These models are classified according to the mathematical constraints they employ and the characteristics of the imaging sensor (restricted or full field of view). Two principles relate the images in a sequence taken by a moving camera: the “epipolar constraint,” applied to motion fields, and the “positive depth” constraint, applied to normal flow fields. Estimating 3D motion amounts to optimizing these constraints over the image. Statistical modeling of the constraints leads to functions whose topographic structure is then studied, in particular the errors in the 3D motion parameters at the locations of the functions' minima.

For conventional video cameras with a restricted field of view, the analysis shows that for algorithms in both classes that estimate all motion parameters simultaneously, the solution obtained has an error such that the projections of the translational and rotational errors on the image plane are perpendicular to each other. Furthermore, the estimated projection of the translation on the image lies on a line through the origin and the projection of the true translation.

The situation is different for a camera with a full (360 degree) field of view, achieved by a panoramic sensor or by a system of conventional cameras. In this case, at the locations of the minima of the two functions, either the translational or the rotational error becomes zero, whereas with a restricted field of view both errors are non-zero.
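To make the two constraints concrete, here is a minimal Python sketch. It is not the paper's own formulation or cost function. It assumes the standard instantaneous rigid-motion model (as in Longuet-Higgins and Prazdny, 1980) with unit focal length, translation (U, V, W) with W > 0, rotation (α, β, γ), and the focus of expansion (FOE) at (U/W, V/W); all function names and numeric values are hypothetical. The epipolar residual vanishes when the derotated flow at a point is parallel to the line from the FOE through that point; the positive-depth check flags a normal-flow measurement whose derotated value would imply negative depth.

```python
# Minimal sketch of the two constraints. Assumptions (not taken from the
# paper): unit focal length, camera-centred coordinates, the instantaneous
# rigid-motion model, and forward translation (W > 0).


def rotational_flow(x, y, rot):
    """Rotational part of the motion field at image point (x, y)."""
    a, b, c = rot
    return (a * x * y - b * (x * x + 1.0) + c * y,
            a * (y * y + 1.0) - b * x * y - c * x)


def motion_field(x, y, depth, trans, rot):
    """Flow (u, v): translational part scaled by 1/Z plus rotational part."""
    U, V, W = trans
    ur, vr = rotational_flow(x, y, rot)
    return (x * W - U) / depth + ur, (y * W - V) / depth + vr


def epipolar_residual(x, y, u, v, foe, rot):
    """Zero when the derotated flow is parallel to the ray from the FOE to (x, y)."""
    x0, y0 = foe
    ur, vr = rotational_flow(x, y, rot)
    return (u - ur) * (y - y0) - (v - vr) * (x - x0)


def violates_positive_depth(x, y, n, un, foe, rot):
    """Assuming W > 0: True if normal flow un along unit vector n implies Z < 0."""
    x0, y0 = foe
    ur, vr = rotational_flow(x, y, rot)
    derotated = un - (ur * n[0] + vr * n[1])
    radial = (x - x0) * n[0] + (y - y0) * n[1]
    return derotated * radial < 0.0


# Example with hypothetical values: the true motion satisfies both constraints.
trans, rot = (0.2, -0.1, 1.0), (0.01, -0.02, 0.005)
foe = (trans[0] / trans[2], trans[1] / trans[2])
x, y = 0.3, 0.4
u, v = motion_field(x, y, 5.0, trans, rot)
n = (0.6, 0.8)
un = u * n[0] + v * n[1]
print(epipolar_residual(x, y, u, v, foe, rot))         # ~0 for the true FOE
print(epipolar_residual(x, y, u, v, (0.0, 0.0), rot))  # nonzero for a wrong FOE
print(violates_positive_depth(x, y, n, un, foe, rot))  # False
```

In this sketch, summing squared epipolar residuals, or counting positive-depth violations, over all image points yields a function of the candidate motion whose minima can then be examined.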
Although some ambiguities remain in the full-field-of-view case, the implication is that visual navigation tasks involving 3D motion estimation, such as visual servoing, are easier to solve with panoramic vision. The analysis also makes it possible to compare the properties of three kinds of algorithms: those that first estimate the translation and then, on the basis of that result, estimate the rotation; those that do the opposite; and those that estimate all motion parameters simultaneously. It thus provides a sound framework for studying the observability of 3D motion. Finally, the framework points to new avenues for studying the stability of image-based servoing schemes.
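As an illustration of the third class of algorithms, which estimate all motion parameters simultaneously, the sketch below minimizes the sum of squared epipolar residuals by brute force. It is a hypothetical example, not an algorithm from the paper. For each candidate FOE on a grid, the residual is linear in the rotation (α, β, γ), so the best rotation follows from 3×3 normal equations, and the candidate with the lowest cost is kept. It assumes unit focal length, W > 0, the standard instantaneous motion-field sign conventions, and noise-free synthetic data; all names and values are illustrative.

```python
import random


def rotational_flow(x, y, rot):
    """Rotational part of the motion field at (x, y), unit focal length."""
    a, b, c = rot
    return (a * x * y - b * (x * x + 1.0) + c * y,
            a * (y * y + 1.0) - b * x * y - c * x)


def solve3(m, r):
    """Solve the 3x3 system m w = r with partial pivoting; None if singular."""
    aug = [list(row) + [rhs] for row, rhs in zip(m, r)]
    for col in range(3):
        piv = max(range(col, 3), key=lambda i: abs(aug[i][col]))
        if abs(aug[piv][col]) < 1e-14:
            return None
        aug[col], aug[piv] = aug[piv], aug[col]
        for i in range(col + 1, 3):
            f = aug[i][col] / aug[col][col]
            for j in range(col, 4):
                aug[i][j] -= f * aug[col][j]
    w = [0.0, 0.0, 0.0]
    for i in (2, 1, 0):
        tail = sum(aug[i][j] * w[j] for j in range(i + 1, 3))
        w[i] = (aug[i][3] - tail) / aug[i][i]
    return w


def fit_rotation(points, flows, foe):
    """For a fixed FOE the epipolar residual is r = s - g . w, linear in w."""
    x0, y0 = foe
    ata = [[0.0] * 3 for _ in range(3)]
    atb = [0.0] * 3
    rows = []
    for (x, y), (u, v) in zip(points, flows):
        dx, dy = x - x0, y - y0
        g = (x * y * dy - (y * y + 1.0) * dx,
             x * y * dx - (x * x + 1.0) * dy,
             x * dx + y * dy)
        s = u * dy - v * dx
        rows.append((g, s))
        for i in range(3):
            atb[i] += g[i] * s
            for j in range(3):
                ata[i][j] += g[i] * g[j]
    w = solve3(ata, atb)
    if w is None:
        return None, float("inf")
    cost = sum((s - sum(gi * wi for gi, wi in zip(g, w))) ** 2 for g, s in rows)
    return w, cost


def estimate_motion(points, flows, grid):
    """Joint estimate: best FOE on the grid, with its least-squares rotation."""
    best_cost, best_foe, best_rot = float("inf"), None, None
    for foe in grid:
        w, cost = fit_rotation(points, flows, foe)
        if cost < best_cost:
            best_cost, best_foe, best_rot = cost, foe, w
    return best_foe, best_rot


# Noise-free synthetic flow for a known motion (hypothetical test values).
rng = random.Random(0)
trans, rot = (0.2, -0.1, 1.0), (0.01, -0.02, 0.005)
points, flows = [], []
for _ in range(200):
    x, y = rng.uniform(-0.5, 0.5), rng.uniform(-0.5, 0.5)
    z = rng.uniform(2.0, 10.0)  # positive depth
    ur, vr = rotational_flow(x, y, rot)
    points.append((x, y))
    flows.append(((x * trans[2] - trans[0]) / z + ur,
                  (y * trans[2] - trans[1]) / z + vr))

grid = [(i / 10, j / 10) for i in range(-5, 6) for j in range(-5, 6)]
foe, w = estimate_motion(points, flows, grid)
print(foe, w)  # FOE should match (0.2, -0.1) and w the true rotation
```

Adding noise to the synthetic flows and evaluating the cost over the FOE grid is one way to explore the topography of such a function near its minimum.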

About this article

Cite this article

Fermüller, C., Aloimonos, Y. Observability of 3D Motion. International Journal of Computer Vision 37, 43–63 (2000). https://doi.org/10.1023/A:1008177429387

DOI: https://doi.org/10.1023/A:1008177429387