Impact Statement

High-resolution and multiplexed imaging techniques are providing increasingly detailed observations of biological systems. However, sharing, exploring, and customizing the visualization of large multidimensional images without a graphical user interface is a major challenge. Here, we introduce Samui Browser, a performant and interactive image visualization tool that runs completely in a web browser. To the best of our knowledge, we are the first to propose a web-based solution to share, explore, and annotate large multidimensional images. This is a significant advance, as we are not aware of any other web-based visualization tool, commercial or noncommercial, that can share large images. For example, Samui Browser can be used to share images with collaborators who want to visualize the spatial location of gene expression or proteins in multiplex images. Samui Browser can also be used to generate publicly available data resources for publication, making datasets easily accessible to the broader scientific community.

1. Background

Recent technological advances have led to the generation of increasingly large and multi-dimensional images that can be used for spatially resolved transcriptomics (SRT)(Reference Marx1). This enables researchers to map transcriptome-wide gene expression data to spatial coordinates within intact tissue at near- or subcellular resolution. By combining two distinct data modalities, this technology can yield unprecedented insight into the spatial regulation of gene expression(Reference Way, Spitzer and Burnham2). Yet, this combination poses a unique computational and visualization challenge—we now have high-resolution images with tens of thousands of dimensions of features, each corresponding to a gene from the transcriptome.

Existing open-source image processing tools, such as ImageJ/Fiji(Reference Schroeder, Dobson, Rueden, Tomancak, Jug and Eliceiri3) and napari(Reference Sofroniew, Evans and Nunez-Iglesias4), are often used for image visualization, but are not designed to view more than a few dimensions of data. Large images are commonly distributed using the tag image file format (TIFF). To browse and interact with these datasets, users need to load the entire dataset into memory, which limits the use of these datasets to specialized workstations with large amounts of memory (e.g., tens to hundreds of gigabytes)(Reference Levet, Carpenter, Eliceiri, Kreshuk, Bankhead and Haase5). This computational requirement prevents much of the broader scientific community from accessing many datasets. Access is further limited by the need to download datasets prior to visualization.

An alternative solution is web-based visualization tools, which allow users to view images without downloading them. For example, neuroglancer(6), a volumetric data viewer, allows users to view high-dimensional datasets with a hyperlink. However, its original design goal as a connectomics viewer means that it cannot view high-dimensional annotations. Interactive web-based applications in R, such as Shiny apps(Reference Chang, Cheng and Allaire7), are a common vehicle, but they rely on a server instance of R, which limits scalability and unavoidably introduces latency.

Here, we developed a web-based open-source application, Samui. Built on top of a GPU-accelerated geospatial analysis package, OpenLayers(8), Samui can responsively display large, multi-channel images (>20,000 × 20,000 pixels) and their annotations in the web browser. In addition to local files, Samui can directly open processed images from a cloud storage link, such as Amazon Web Services (AWS) S3 storage, without the need to download the entire dataset.

To achieve this level of performance, Samui utilizes the cloud-optimized GeoTIFF (COG) file format(9), which was designed by the geographic information systems (GIS) community to display raster maps. This format organizes the full image into tiles of varying resolution, allowing Samui to selectively load only the sections of the image that the user is viewing. Feature data, such as gene expression or cell segmentation, are similarly chunked and loaded on demand. This “lazy” technique drastically reduces the download requirements and memory consumption of Samui.
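The selective loading described above can be illustrated with a minimal sketch. It assumes square tiles of 512 pixels and a pyramid in which each level is described by a downsampling factor. The function name `tiles_in_view` and its parameters are hypothetical and are not taken from Samui's code.

```python
import math

def tiles_in_view(view, downsample, tile_size=512):
    """Return (col, row) indices of the tiles that intersect a viewport.

    view: (x_min, y_min, x_max, y_max) in full-resolution pixel coordinates.
    downsample: factor of the pyramid level on display (1 = full resolution).
    tile_size: tile edge in pixels (assumed value, not specified by the text).
    """
    x_min, y_min, x_max, y_max = view
    # One tile at this level covers tile_size * downsample full-resolution pixels.
    span = tile_size * downsample
    first_col, first_row = int(x_min // span), int(y_min // span)
    # The viewport maximum is treated as exclusive.
    last_col = math.ceil(x_max / span) - 1
    last_row = math.ceil(y_max / span) - 1
    return [(c, r)
            for r in range(first_row, last_row + 1)
            for c in range(first_col, last_col + 1)]

# Whole 20,000 x 20,000 image viewed at a coarse level: a single tile suffices.
overview = tiles_in_view((0, 0, 20000, 20000), downsample=64)
# Small region viewed at full resolution: only the tiles under the viewport.
detail = tiles_in_view((1000, 1000, 2000, 1800), downsample=1)
print(len(overview), len(detail))
```

In this sketch the zoomed-out view needs one tile and the zoomed-in view needs nine, whereas the full-resolution image would span 40 × 40 = 1,600 tiles of this size.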

2. Results and Discussion

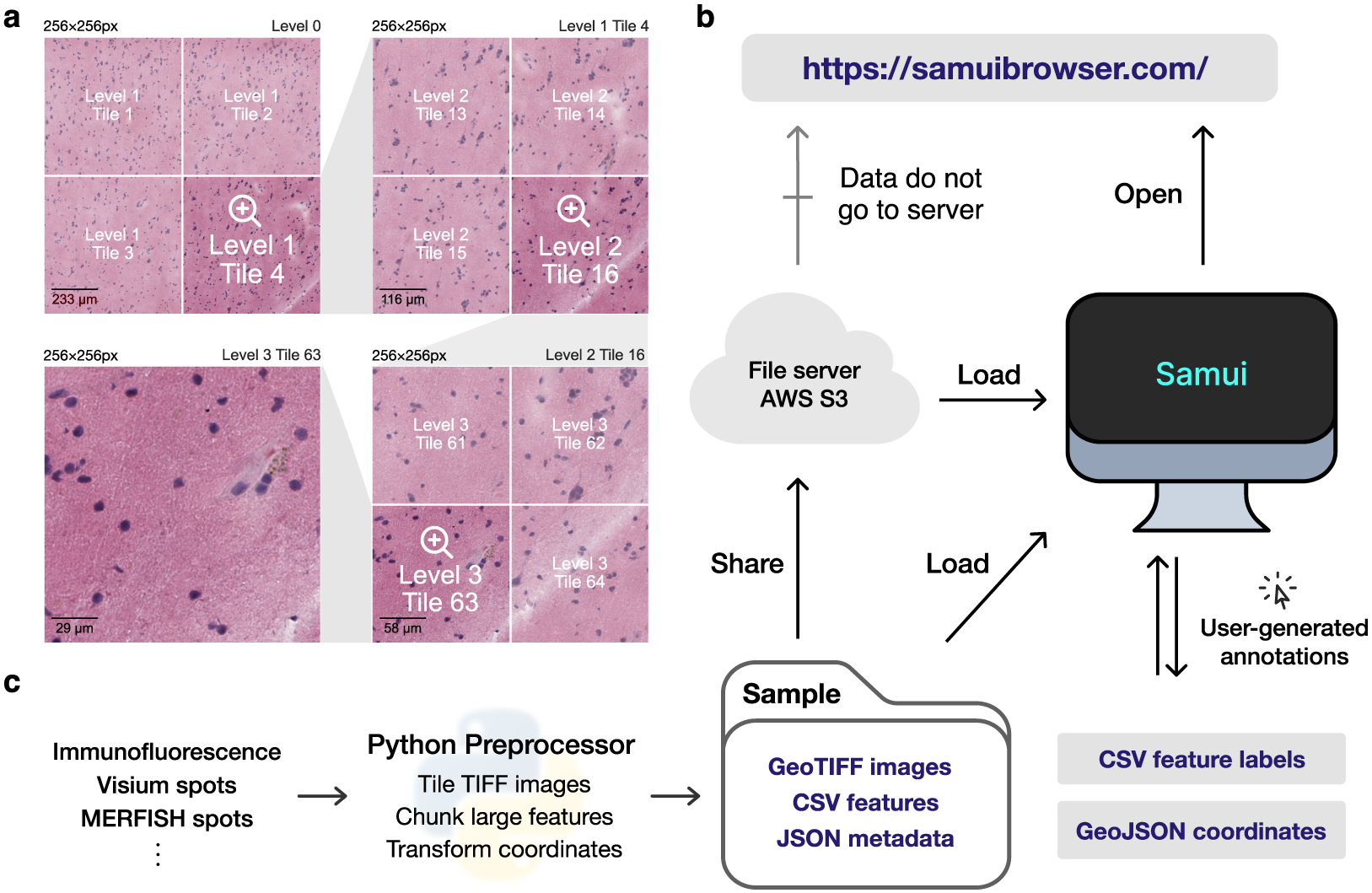

Samui enables interactive and responsive visualization of large, multidimensional images that can easily be shared with the larger scientific community (Figure 1). Here, we provide an overview of Samui and describe its key innovations compared to currently available open-source image visualization tools, with examples from SRT including spatial barcoding followed by sequencing(Reference Ståhl, Salmén and Vickovic10–Reference Stickels, Murray and Kumar13) or fluorescence imaging-based transcriptomics(Reference Eng, Lawson and Zhu14, Reference Xia, Fan, Emanuel, Hao and Zhuang15).

Figure 1. Overview of Samui. (a) Input images are stored in a tiled GeoTIFF file format. When a user opens the browser, at the lowest zoom setting, only tiles from level 0 are downloaded. As the zoom setting increases, tiles from higher levels within the field of view are downloaded as needed. (b) Samui is statically served and relies completely on the client machine for all functionalities. It can load files from a cloud storage link or from the local machine. (c) Samui takes in a Sample folder that contains chunked images, CSV features, and JSON metadata. These are generated with the Python preprocessor, and the browser also supports user-generated annotations.

The browser primarily takes as input (a) a JSON (JavaScript object notation) file that describes the metadata of the dataset and (b) a COG file containing n-dimensional tiled images as pixel intensity (Figure 1a). Feature information (e.g., gene expression data) can be represented using standard comma-separated value (CSV) files, but can also be represented by a set of sparse and chunked data frames stored in a binary format for fast readout. COG was chosen over alternative chunked file formats, such as HDF5(16) and Zarr(17), because the byte-indexable structure of COGs allows simple HTTP GET(18) range requests to retrieve fine-grained portions of the file. It is also specialized for image data and is rigorously specified by the OGC GeoTIFF Standard(19).
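As a hedged illustration of such a range request, the following sketch builds (but does not send) an HTTP GET request for a byte range using only the Python standard library. The URL, offset, and length are placeholders. In a real TIFF, the offset and byte count of each tile are listed in the file's TileOffsets and TileByteCounts tags. The helper name `range_request` is hypothetical.

```python
import urllib.request

def range_request(url, offset, length):
    """Build a GET request for `length` bytes starting at byte `offset`."""
    last_byte = offset + length - 1  # the end of an HTTP byte range is inclusive
    req = urllib.request.Request(url, method="GET")
    req.add_header("Range", f"bytes={offset}-{last_byte}")
    return req

# Placeholder URL and byte positions, for illustration only.
req = range_request("https://example.com/sample/image.tif",
                    offset=16384, length=65536)
print(req.get_header("Range"))
```

A server that supports range requests answers with status 206 (Partial Content) and only the requested bytes, so a client can fetch a single tile without downloading the rest of the file.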

Samui is statically served and relies completely on the client machine for all functionalities. Users can open these files directly from their local file system. In addition, as a web application, Samui can directly open the links to the hosted images in cloud storage without the need to fully download them first (Figure 1b). This approach of web-based visualization has been successfully applied for other data types including genomics(Reference Nusrat, Harbig and Gehlenborg20), geospatial(21), and astronomy(Reference Diblen, Hendriks, Stienen, Caron, Bakhshi and Attema22).

The input files can be generated using a Python-based command line tool and graphical user interface (GUI) provided along with Samui. To support SRT data, Samui offers functionality to convert standard output from quantification tools, such as 10x Genomics Space Ranger, to these cloud-optimized file formats (Figure 1c).

As noted above, a particularly important data analysis step of SRT bio-images is annotation. Samui can be used to rapidly annotate features, such as Visium spots (Figure 2a) or individual cell types (Figure 2b), that can be exported as CSVs and GeoJSON files. It can also import existing annotations from other software, such as from napari or ImageJ/Fiji. For example, using data from the 10x Genomics Visium Spatial Gene Expression platform, a Samui user can annotate at the cell (segmented cells/points on the image), spot (55 μm circles), or spatial domain (polygons) level (Figure 2a). Annotation can also be done using data acquired from the 10x Genomics Visium Spatial Proteogenomics (Visium-SPG) platform, which generates multiplex images of fluorescently labeled proteins paired with gene expression(Reference Huuki-Myers, Spangler and Eagles23).
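To make the GeoJSON export format concrete, the sketch below wraps a polygon annotation as a GeoJSON Feature following the general GeoJSON structure. The exact schema that Samui writes is not described here, so the `label` property name, the helper `polygon_annotation`, and the coordinates are assumptions for illustration.

```python
import json

def polygon_annotation(label, ring):
    """Wrap a polygon ring and its label as a GeoJSON Feature."""
    if ring[0] != ring[-1]:
        ring = ring + [ring[0]]  # GeoJSON linear rings must be closed
    return {
        "type": "Feature",
        "properties": {"label": label},  # hypothetical property name
        "geometry": {"type": "Polygon", "coordinates": [ring]},
    }

# One spatial-domain annotation drawn as a rectangle in pixel coordinates.
collection = {
    "type": "FeatureCollection",
    "features": [
        polygon_annotation("Layer 1", [[0, 0], [100, 0], [100, 50], [0, 50]]),
    ],
}
text = json.dumps(collection)
```

Because GeoJSON is plain JSON, annotations stored this way can be read back with any JSON parser.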

Figure 2. Visualizing and annotating multi-dimensional images in Samui. A user can easily interact with and annotate spatial coordinates on top of multidimensional images with data such as from the (a) 10x Genomics Visium Spatial Gene Expression platform or (b) Vizgen MERFISH platform.

3. Conclusions

Here, we developed Samui, an open-source and web-based image visualization system. We demonstrated the broad utility of the browser with a rapid data exploration of images generated with Vizgen MERFISH and the 10x Genomics Visium Spatial Gene Expression or Proteogenomics platforms. The key strengths of Samui compared with existing open-source image tools(Reference Schroeder, Dobson, Rueden, Tomancak, Jug and Eliceiri3, Reference Sofroniew, Evans and Nunez-Iglesias4, Reference Dries, Zhu and Dong24, Reference Pardo, Spangler and Weber25) for visualization and annotation include (a) leveraging COG files to efficiently stream large scientific images, (b) leveraging the web browser to easily visualize and share images with the scientific community, and (c) the ability to easily annotate images, which is particularly important in SRT applications. Our visualization tool can be used to view multiple modalities at the same time, along with multiple images, each with individual n-dimensional information. Currently, only raster data (images), but not vector data (spots and features), are tiled, which limits the number of features that we can visualize with good performance to the order of hundreds of thousands. We plan to include tiling for vector data in a future release, which will further enhance Samui’s ability to visualize data of different modalities, including single-molecule RNA spots and cell segmentation traces. The features offered in Samui complement those of other visualization tools and provide researchers with a better understanding of these complex datasets.

4. Methods

4.1. Samui

Samui is written with SvelteKit v1.20 and TypeScript v4.6. The latest version of Samui is available at https://github.com/chaichontat/samui. A prepackaged GUI program to convert images and spaceranger outputs is available at https://github.com/chaichontat/samui/releases. Samui was tested in Google Chrome v116 and Safari 15. Performance is lower in Mozilla Firefox v114 due to its WebGL implementation.

The WebGL image renderer is adapted from OpenLayers v7.4, a geospatial visualization framework. Samui displays images in the COG format and stores other data in the CSV format. Each dataset is organized into a “Sample” folder that contains a master JSON (JavaScript object notation) file with the metadata of the dataset. This file provides links to the COGs and CSVs that describe different overlays and features.

As data in spatial transcriptomics tend to share a common coordinate system, we separated the notions of coordinates and features in Samui to reduce redundancy. An overlay can be thought of as a layer of points, each with a spatial coordinate. Features are the categorical or quantitative values associated with each point in an overlay. For example, in a Visium experiment, the position of a Visium spot would be stored as an overlay, whereas the expression of a gene at each spot would be a feature. For large datasets, CSVs can be sparsified, chunked, and compressed to allow lazy loading of feature data. This is done in the Visium and MERFISH examples described below.
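The separation of overlays from features, and the sparsification of features, can be sketched with plain Python dictionaries. This is only an illustration of the idea under simplified assumptions. The spot names, gene names, values, and the helpers `sparsify` and `densify` are hypothetical and do not reflect Samui's on-disk format.

```python
# Overlay: one spatial coordinate per point, stored once for all features.
overlay = {
    "spot_1": (120.0, 340.0),
    "spot_2": (175.0, 340.0),
    "spot_3": (230.0, 340.0),
}

# Dense features: one value per point per gene; most entries are zero.
dense = {
    "GENE_A": {"spot_1": 0.0, "spot_2": 2.3, "spot_3": 0.0},
    "GENE_B": {"spot_1": 0.0, "spot_2": 0.0, "spot_3": 0.0},
}

def sparsify(values):
    """Keep only non-zero entries; absent points are implicitly zero."""
    return {point: v for point, v in values.items() if v != 0}

def densify(sparse_values, points):
    """Rebuild the full per-point mapping from a sparse one."""
    return {p: sparse_values.get(p, 0.0) for p in points}

# Each gene becomes an independent chunk that could be loaded on demand.
sparse = {gene: sparsify(values) for gene, values in dense.items()}
restored = {gene: densify(chunk, overlay) for gene, chunk in sparse.items()}
```

Coordinates are stored once in the overlay, while each gene's chunk only lists the points with non-zero values, which is why sparse expression data shrink substantially under this layout.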

4.2. Input data for Samui

Samui supports image conversion from a TIFF file. All TIFF images were transformed into the COG format in Python 3.10 using GDAL v3.6.0 and rasterio v1.3.4. JPEG compression was performed using gdal_translate at quality 90. An example script to generate a COG from an example TIFF file is available at https://github.com/chaichontat/samui/blob/f4c886afe92400a651cbc59619b6060975b264c7/scripts/process_image.py. Overlays and feature data can be converted from a pandas DataFrame format using the provided Python API.
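For orientation, a conversion of this kind can be expressed as a `gdal_translate` call that uses GDAL's COG output driver with JPEG compression at quality 90. The sketch below only assembles the command. The file names are placeholders, and the authors' linked script may use different or additional options.

```python
import shlex

def cog_command(src, dst, quality=90):
    """Assemble a gdal_translate call that writes a JPEG-compressed COG."""
    return [
        "gdal_translate", src, dst,
        "-of", "COG",                 # GDAL's cloud-optimized GeoTIFF driver
        "-co", "COMPRESS=JPEG",       # creation option: JPEG compression
        "-co", f"QUALITY={quality}",  # creation option: JPEG quality
    ]

# Placeholder file names, for illustration only.
cmd = cog_command("input.tif", "output.tif")
print(shlex.join(cmd))
# With GDAL installed, the command could be run with:
#   subprocess.run(cmd, check=True)
```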

4.3. Visium spatial gene expression data

Full-resolution images, filtered spaceranger output, and annotations were obtained from Ref. (Reference Maynard, Collado-Torres and Weber26) (https://github.com/LieberInstitute/HumanPilot). The script to transform this data is available at https://github.com/chaichontat/samui/blob/f4c886afe92400a651cbc59619b6060975b264c7/scripts/process_humanpilot.py. Briefly, filtered outputs from 10x Genomics spaceranger v1.3.1 were opened using anndata v0.8 (https://anndata.readthedocs.io/en/latest) and log-transformed into a chunked CSV format. Images were Gaussian filtered with a radius of 4 to reduce artifacts, and processed into the Samui format as described above.

4.4. MERFISH data

Cell by gene data matrices were obtained from the Vizgen data release program(27, 28). Images from the first z-stack were used as the background. The overlay is the position of each cell and features are the counts of MERFISH spots in each cell. Images and overlays were aligned using the provided affine transformation matrix. The script to transform Vizgen-formatted data is available at https://github.com/chaichontat/samui/blob/f4c886afe92400a651cbc59619b6060975b264c7/scripts/process_merfish.py.
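Aligning overlays to images with an affine transformation matrix amounts to a matrix multiplication in homogeneous coordinates. The following minimal sketch assumes a 3 × 3 matrix that maps physical (micron) coordinates to image pixels. The matrix values, the cell positions, and the helper name `apply_affine` are made up for illustration.

```python
def apply_affine(matrix, points):
    """Map (x, y) points through a 3x3 affine matrix in homogeneous form."""
    (a, b, tx), (c, d, ty) = matrix[0], matrix[1]
    return [(a * x + b * y + tx, c * x + d * y + ty) for x, y in points]

# Hypothetical matrix: scale by 2, then shift by (10, -5).
micron_to_pixel = [
    [2.0, 0.0, 10.0],
    [0.0, 2.0, -5.0],
    [0.0, 0.0, 1.0],
]
cells_micron = [(0.0, 0.0), (50.0, 25.0)]
cells_pixel = apply_affine(micron_to_pixel, cells_micron)
print(cells_pixel)
```

Only the first two rows of the matrix are needed, since the last row of an affine matrix in homogeneous form is always (0, 0, 1).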

4.5. Visium-SPG data

Full-resolution IF images, filtered spaceranger output, and annotations were obtained from Ref. (Reference Huuki-Myers, Spangler and Eagles23) (https://github.com/LieberInstitute/spatialDLPFC). Primary antibodies targeted four brain cell-type marker proteins: NeuN for neurons, TMEM119 for microglia, GFAP for astrocytes, and OLIG2 for oligodendrocytes.

Abbreviations

- AWS: Amazon Web Services
- COG: cloud-optimized GeoTIFF
- CSV: comma-separated value
- GIS: geographic information systems
- GUI: graphical user interface
- HDF5: hierarchical data format version 5
- SRT: spatially resolved transcriptomics
- TIFF: tag image file format

Acknowledgments

We would like to thank Sang Ho Kwon, Madhavi Tippani, and Stephanie Cerceo Page for the generation and processing of spatial transcriptomics images and data that were utilized to demonstrate the utility of Samui as well as for helpful comments, feedback, and suggestions on Samui functionality.

Competing interest

The authors declare that they have no competing interests.

Authorship contribution

Conceptualization: C.S., S.C.H.; Data curation: C.S.; Formal analysis: C.S., S.C.H.; Funding acquisition: K.M., K.R.M., S.C.H., L.C.-T.; Investigation: C.S., A.N., N.J.E., K.M., K.R.M., S.C.H.; Project administration: K.M., K.R.M., S.C.H.; Software: C.S., A.N.; Supervision: K.M., K.R.M., S.C.H.; Writing—original draft: C.S., S.C.H.; Writing—review and editing: C.S., A.N., N.J.E., K.M., K.R.M., S.C.H. K.R.M. and S.C.H. contributed equally to this work.

Funding statement

This project was supported by the Lieber Institute for Brain Development, National Institute of Mental Health U01MH122849 and R01MH126393 (K.R.M., K.M., S.C.H., L.C.-T.), and CZF2019-002443 (S.C.H.) from the Chan Zuckerberg Initiative DAF, an advised fund of Silicon Valley Community Foundation. All funding bodies had no role in the design of the study and collection, analysis, and interpretation of data and in writing the manuscript.

Data availability statement

Samui is available at https://samuibrowser.com (v1.0.0-next.45). The 10x Genomics Visium data was obtained from Refs. (Reference Huuki-Myers, Spangler and Eagles23 and Reference Maynard, Collado-Torres and Weber26). The source MERFISH data is available from the Vizgen Data Release Program(27, 28). The code to reproduce the analyses and figures is available at https://github.com/chaichontat/samui/tree/f4c886afe92400a651cbc59619b6060975b264c7 and the software for the browser is available at https://github.com/chaichontat/samui.