Abstract

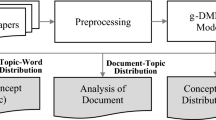

A vast number of research papers on numerous topics are published every year in different conferences and journals. It is therefore difficult for new researchers to manually identify the research problems and topics on which the research community is currently focusing. Since such problems and topics help researchers keep up with new directions in research, it is essential to know research trends based on topic significance over time. In this paper, we therefore propose a method to automatically identify trends in machine learning research based on significant topics over time. Specifically, we apply a topic coherence model with latent Dirichlet allocation (LDA) to determine the optimal number of topics and the significant topics for a dataset. The LDA model yields topic proportions over documents, where each topic has a probability (i.e., a topic weight) for each document. The topic weights are subsequently processed to compute average topic weights per year, trends using a rolling mean, topic prevalence per year, and topic proportions per journal title. To evaluate our method, we prepare a new dataset comprising 21,906 scientific research articles from the top six journals in the area of machine learning, published from 1988 to 2017. Extensive experimental results on the dataset demonstrate that our technique is efficient and can help upcoming researchers explore research trends and topics in different research areas, such as machine learning.
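The post-processing steps named in the abstract can be illustrated with a short plain-Python sketch. This is not the authors' implementation. It assumes the LDA document-topic weights and the per-model coherence scores have already been computed (for example with gensim's `LdaModel` and `CoherenceModel`). It also makes two interpretive assumptions of its own: "topic prevalence per year" is taken to mean the share of a year's documents whose highest-weight topic is k, and the rolling mean uses a trailing window. The function names and toy data are hypothetical.

```python
"""Hypothetical sketch of post-LDA trend computations (not the paper's code).

Assumptions: weights[d][k] is the LDA weight of topic k in document d;
"prevalence" = share of documents whose dominant topic is k; the rolling
mean is a trailing window that uses fewer points at the start of the series.
"""
from collections import defaultdict
from typing import Dict, Hashable, List, Sequence


def best_num_topics(coherence_by_k: Dict[int, float]) -> int:
    """Return the topic count whose LDA model had the highest coherence score."""
    return max(coherence_by_k, key=coherence_by_k.get)


def average_weight_by(weights: Sequence[Sequence[float]],
                      keys: Sequence[Hashable]) -> Dict[Hashable, List[float]]:
    """Mean topic weight per group key, e.g. a publication year or a journal title."""
    sums: Dict[Hashable, List[float]] = {}
    counts: Dict[Hashable, int] = defaultdict(int)
    for doc_weights, key in zip(weights, keys):
        acc = sums.setdefault(key, [0.0] * len(doc_weights))
        for k, w in enumerate(doc_weights):
            acc[k] += w
        counts[key] += 1
    # Groups are returned in sorted key order (chronological for years).
    return {key: [s / counts[key] for s in sums[key]] for key in sorted(sums)}


def prevalence_per_year(weights: Sequence[Sequence[float]],
                        years: Sequence[int]) -> Dict[int, List[float]]:
    """Share of each year's documents whose highest-weight topic is k."""
    counts: Dict[int, List[int]] = {}
    totals: Dict[int, int] = defaultdict(int)
    for doc_weights, year in zip(weights, years):
        row = counts.setdefault(year, [0] * len(doc_weights))
        dominant = max(range(len(doc_weights)), key=lambda k: doc_weights[k])
        row[dominant] += 1
        totals[year] += 1
    return {y: [c / totals[y] for c in counts[y]] for y in sorted(counts)}


def rolling_mean(series: Sequence[float], window: int) -> List[float]:
    """Trailing rolling mean; the first window-1 points average fewer values."""
    out: List[float] = []
    for i in range(len(series)):
        chunk = series[max(0, i - window + 1): i + 1]
        out.append(sum(chunk) / len(chunk))
    return out


if __name__ == "__main__":
    # Toy data: four documents, two topics, two years, two journals.
    weights = [[0.7, 0.3], [0.6, 0.4], [0.2, 0.8], [0.1, 0.9]]
    years = [2000, 2000, 2001, 2001]
    journals = ["journal_A", "journal_B", "journal_A", "journal_B"]

    print(best_num_topics({5: 0.41, 10: 0.48, 15: 0.45}))  # 10
    yearly = average_weight_by(weights, years)
    print(yearly)
    print(prevalence_per_year(weights, years))  # {2000: [1.0, 0.0], 2001: [0.0, 1.0]}
    print(average_weight_by(weights, journals))
    topic1_trend = [yearly[y][1] for y in sorted(yearly)]
    print(rolling_mean(topic1_trend, window=2))
```

Because each document's topic weights sum to 1, the per-journal averages also sum to 1, so they can be read directly as topic proportions per journal title. A library rolling mean (such as pandas `Series.rolling`) would by default return missing values for the first window-1 points rather than shorter averages.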

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Cite this article

Sharma, D., Kumar, B., Chand, S. et al. A Trend Analysis of Significant Topics Over Time in Machine Learning Research. SN COMPUT. SCI. 2, 469 (2021). https://doi.org/10.1007/s42979-021-00876-2