Abstract

Purpose

Robotic harvesting can make fruit picking more efficient and cost-effective. Accurate fruit detection algorithms are essential for vision-based robotic harvesting of apples. Although deep-learning techniques are widely used for apple detection, robust models that also provide information about the fruit’s occlusion condition are needed to plan a suitable strategy for end-effector manipulation. During robotic harvesting, apples on the tree can be occluded by leaves, stems (branches), trellis wire, or other fruits.

Methods

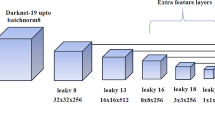

A novel two-stage deep-learning-based approach is proposed and demonstrated for detecting on-tree apples and identifying their occlusion condition. In the first stage, a YOLOv7 model trained on a custom Kashmiri apple orchard image dataset detects the apples. In the second stage, an EfficientNet-B0 model classifies the detected apples into four categories based on their occlusion condition: non-occluded, leaf-occluded, stem/wire-occluded, and apple-occluded apples.
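The two-stage flow described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the `detect` and `classify` callables stand in for the trained YOLOv7 and EfficientNet-B0 models, and the box format, the class ordering, and all function names (`crop`, `two_stage`, `fake_detect`, `fake_classify`) are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple

# The four occlusion categories named in the abstract; the index order is an assumption.
OCCLUSION_CLASSES = (
    "non-occluded",
    "leaf-occluded",
    "stem/wire-occluded",
    "apple-occluded",
)

# Assumed box format: (x_min, y_min, x_max, y_max) in pixels, max values exclusive.
Box = Tuple[int, int, int, int]
# A plain nested sequence stands in for a real image array.
Image = Sequence[Sequence[int]]


@dataclass
class LabelledApple:
    box: Box
    occlusion: str


def crop(image: Image, box: Box) -> List[List[int]]:
    """Cut the detected region out of the image."""
    x0, y0, x1, y1 = box
    return [list(row[x0:x1]) for row in image[y0:y1]]


def two_stage(
    image: Image,
    detect: Callable[[Image], List[Box]],
    classify: Callable[[List[List[int]]], int],
) -> List[LabelledApple]:
    """Stage 1 finds apple boxes; stage 2 labels each cropped apple."""
    results = []
    for box in detect(image):
        patch = crop(image, box)
        class_index = classify(patch)
        results.append(LabelledApple(box, OCCLUSION_CLASSES[class_index]))
    return results


# Hypothetical stand-ins for the trained models, used only to exercise the flow.
def fake_detect(image: Image) -> List[Box]:
    return [(0, 0, 2, 2), (2, 1, 4, 3)]


def fake_classify(patch: List[List[int]]) -> int:
    # Toy rule: an all-zero patch counts as non-occluded, anything else as leaf-occluded.
    return 0 if sum(sum(row) for row in patch) == 0 else 1


toy_image = [
    [0, 0, 5, 5],
    [0, 0, 5, 5],
    [0, 0, 5, 5],
]
apples = two_stage(toy_image, fake_detect, fake_classify)
```

Passing the two models in as callables keeps the stages independent, so either one could be swapped or evaluated separately without touching the other.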

Results

The YOLOv7 model achieved an average precision of 0.902 and an F1-score of 0.905 for apple detection on a test set. The trained weights occupied 284 MB, and detection took 0.128 s per image. The classification model produced an overall accuracy of 92.22% with F1-scores of 94.64%, 90.91%, 86.87%, and 90.25% for non-occluded, leaf-occluded, stem/wire-occluded, and apple-occluded apple classes, respectively.
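To put the reported figures in context, the short calculation below converts the detection time into a throughput and averages the per-class F1-scores. Both derived values are simple arithmetic on the numbers above and are not reported by the authors; the throughput covers the detection stage only, and the unweighted (macro) average is one assumed way of summarizing the four classes.

```python
# Reported detection time per image, in seconds.
detection_time_s = 0.128
# Detection-stage throughput only; classification time is not included.
images_per_second = 1.0 / detection_time_s  # about 7.8 images per second

# Reported per-class F1-scores (%), keyed by the abbreviations used in the article.
f1_scores = {"NOA": 94.64, "LOA": 90.91, "SOA": 86.87, "AOA": 90.25}

# Unweighted (macro) average; derived here, not a figure from the article.
macro_f1 = sum(f1_scores.values()) / len(f1_scores)  # about 90.67

# Class with the lowest F1-score.
hardest_class = min(f1_scores, key=f1_scores.get)
```

Among the four classes, stem/wire-occluded apples (SOA) have the lowest reported F1-score.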

Conclusion

This study proposes a novel two-stage model that detects on-tree apples and classifies them based on their occlusion condition, which could improve the effectiveness of autonomous apple harvesting and avoid potential damage to the end-effector from the objects causing the occlusion.

Abbreviations

- AOA: Apple-occluded apples
- CNN: Convolutional neural networks
- HSV: Hue saturation value
- LOA: Leaf-occluded apples
- NOA: Non-occluded apples
- RGB: Red green blue
- SGDM: Stochastic gradient descent with momentum
- SOA: Stem/wire-occluded apples

Funding

Research funding received from the Ministry of Electronics and Information Technology (Government of India) through the National Programme on Electronics and ICT Applications in Agriculture and Environment is gratefully acknowledged.

Ethics declarations

Conflict of Interest

The authors declare no competing interests.

About this article

Cite this article

Rathore, D., Divyanth, L.G., Reddy, K.L.S. et al. A Two-Stage Deep-Learning Model for Detection and Occlusion-Based Classification of Kashmiri Orchard Apples for Robotic Harvesting. J. Biosyst. Eng. 48, 242–256 (2023). https://doi.org/10.1007/s42853-023-00190-0

DOI: https://doi.org/10.1007/s42853-023-00190-0

Profiles

- Peeyush Soni