Abstract

Background

Valuation surveys of preference-based measures are typically conducted face-to-face or on web panels. In this study, we examined whether face-to-face and online surveys yield reliable responses in three tasks: composite time trade-off (cTTO), discrete choice experiment (DCE), and DCE with duration.

Methods

For both the face-to-face (target N = 1000) and web surveys, respondents aged 20–69 years were selected from general-population panels in Japan through quota sampling by sex and age. Respondents were allocated to one of the three tasks, giving six groups (two survey modes × three tasks; N = 334 per group). For the cTTO, respondents were asked to value ten health states described by the EQ-5D-5L. For the DCE and DCE with duration surveys, respondents were asked about 15 health-state pairs. For all participants, the same tasks were repeated two weeks after the first survey (second-stage survey). Reliability was evaluated by calculating the percentage of agreement and intraclass correlation coefficients.

Results

The cTTO scores of the face-to-face and web surveys were systematically different, and agreement on TTO scores between the two modes was poor. The intraclass correlation coefficient (ICC) was 0.37 for the face-to-face test-retest and 0.59 for the web test-retest. DCE and DCE with duration had similarly good agreement (more than 70%) in both face-to-face and web surveys. However, test-retest agreement for DCE and DCE with duration depended on whether the positions of the two cards (health states) were the same in the first and second surveys.

Conclusion

If the face-to-face cTTO score is the gold standard, a web-based survey of cTTO is not recommended regardless of the ICC. If a DCE survey is performed, positioning effects should be considered.

Key Points for Decision Makers

Agreement on TTO scores between face-to-face and web surveys was poor, although the test-retest survey showed that the two modes had similar reliability. Discrete choice experiment and DCE with duration had similarly good agreement (more than 70%), regardless of survey mode.

However, when the positions of the cards (health states) were the same in both DCE surveys, agreement between the two choices was approximately 90%; when the card positions were changed, agreement was approximately 60%. This suggests that about 30% of respondents chose based on the “position” of the card rather than the health state it described.

1 Introduction

Utility is typically measured using a preference-based measure (PBM), such as the EQ-5D [1], HUI [2], and SF-6D [3, 4]. Many other PBMs have also been developed, including generic PBMs [5, 6], disease-specific PBMs [7, 8], PBMs for children and adolescents [9, 10], and PBMs for social care [11]. Patients respond to a PBM, and their responses are converted to utility values using a value set, so that patients' health states are valued according to the preferences of the general population. Value sets are typically constructed using direct methods such as standard gamble (SG), time trade-off (TTO), discrete choice experiment (DCE), and best-worst scaling (BWS).

Valuation surveys using TTO are typically conducted face-to-face, which is expensive and time consuming. By contrast, valuation surveys that use DCE sometimes employ online surveys, in which respondents are sampled from a large online panel. Online surveys are cheaper than face-to-face surveys and accumulate responses quickly. However, online responses are sometimes unreliable (e.g., respondents take shortcuts to complete tasks quickly) [12]. Although the advantages and disadvantages of face-to-face and online surveys have been discussed, their characteristics in valuation surveys remain unclear.

Previous studies have examined the test-retest reliability of direct methods [13,14,15,16], and others have compared the characteristics of face-to-face and online surveys [17]. However, to our knowledge, no study has combined both comparisons. Therefore, we valued EQ-5D-5L (a self-assessed, health-related quality-of-life questionnaire) health states through face-to-face and online surveys using (a) composite TTO (cTTO), (b) DCE, and (c) DCE with duration. Following the test-retest method, the survey was repeated two weeks after the first survey was completed. By comparing these data, we clarified the characteristics of face-to-face and online surveys and derived practical guidance.

2 Methods

2.1 EQ-5D-5L

The EQ-5D-5L is one of the most widely used PBMs worldwide and comprises five items: “mobility,” “self-care,” “usual activities,” “pain/discomfort,” and “anxiety/depression.” Each item is assessed on five levels (no, mild, moderate, severe, and extreme problems or unable to do). Responses are converted to EQ-5D-5L index values using a predetermined algorithm based on the societal preferences of the general population. The EQ-5D-5L describes 5⁵ = 3125 health states. In Japan, the index value of the worst health state [55555] is −0.025 [18], which is higher than the corresponding value in other countries. Health states with negative values nonetheless exist.
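As a quick check on the size of the descriptive system, the Python sketch below enumerates every EQ-5D-5L profile as a five-digit code, with one digit per item running from level 1 (no problems) to level 5 (extreme problems or unable to do). It only enumerates profiles. Converting a profile to an index value requires the published country-specific value set (for Japan, [18]), and those coefficients are deliberately not reproduced here.

```python
from itertools import product

# The five EQ-5D-5L items, in the order used by profile codes such as "11211".
DIMENSIONS = ("mobility", "self-care", "usual activities",
              "pain/discomfort", "anxiety/depression")
LEVELS = range(1, 6)  # 1 = no problems ... 5 = extreme problems / unable to do


def all_health_states() -> list[str]:
    """Return every EQ-5D-5L profile as a five-digit code such as '11211'."""
    return ["".join(str(level) for level in combo)
            for combo in product(LEVELS, repeat=len(DIMENSIONS))]


states = all_health_states()
print(len(states))            # 3125 (= 5 ** 5)
print(states[0], states[-1])  # 11111 55555 (best and worst profiles)
```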

2.2 Composite TTO, DCE, and DCE with Duration

We valued EQ-5D-5L health states through face-to-face and online surveys using (a) composite TTO (cTTO) [19], (b) DCE, and (c) DCE with duration [20]. In the TTO survey, participants always started with a conventional TTO task: a choice between living for 10 years in a health state described by the EQ-5D-5L and living x years in full health. If a participant considered the presented EQ-5D-5L state to be better than immediate death (i.e., x > 0), the value of x was varied until indifference was reached, and the value of the EQ-5D-5L state was given by x/10. If a participant considered immediate death to be better than living for 10 years in the EQ-5D-5L state (i.e., x < 0), a lead-time TTO [21, 22] was used, which allowed negative values to be estimated. In the lead-time TTO, a series of choices was offered between “y years of life in full health” and a life of “10 years in full health followed by 10 years in the presented EQ-5D-5L state.” The value of y was varied until indifference was reached, and the value of the EQ-5D-5L state was given by (y − 10)/10.
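The two scoring rules above can be written as a small function. This is a minimal sketch, not the survey software. It assumes that the indifference point (x or y) has already been found, and it also assumes that both x and y are restricted to 0–10 years, which bounds values to the interval [−1, 1].

```python
def ctto_value(indifference_years: float, lead_time: bool) -> float:
    """Convert a cTTO indifference point to a health-state value.

    Conventional TTO (state better than dead):
        10 years in the state ~ x years in full health   -> value = x / 10
    Lead-time TTO (state worse than dead):
        10 years full health + 10 years in the state
        ~ y years in full health                         -> value = (y - 10) / 10
    Assumption of this sketch: both x and y lie in [0, 10].
    """
    if not 0 <= indifference_years <= 10:
        raise ValueError("indifference point must lie between 0 and 10 years")
    if lead_time:
        return (indifference_years - 10) / 10
    return indifference_years / 10


print(ctto_value(7.5, lead_time=False))  # 0.75
print(ctto_value(5, lead_time=True))     # -0.5
print(ctto_value(0, lead_time=True))     # -1.0, the lowest attainable value
```

Under these assumptions, the lowest attainable value is −1, reached when indifference occurs at y = 0.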

The DCE survey presented two health states (A and B) described using the EQ-5D-5L. In the DCE with duration, each EQ-5D-5L description was combined with an expected length of life (1, 4, 7, or 10 years). Participants chose the option they preferred.

2.3 Experiment Design

For the TTO, all respondents were asked to value the same set of ten EQ-5D-5L health states. These ten health states (shown in the tables in the Results section) were selected from those used in the EQ-5D-5L valuation survey, taking into account the distribution of misery scores (the sum of the levels across the five items). The order of the ten health states was randomized. For the DCE and DCE with duration surveys, all respondents were asked about the same set of 15 health-state pairs (shown in the tables in the Results section), which were also selected from those used in the EQ-5D-5L valuation survey. The order in which the pairs were presented was randomized for each respondent. To avoid positioning effects, the positions of the two health states (left or right) were randomized (1:1) in both the first and second phases. Each task (cTTO and the two DCEs) was administered twice. The second administration took place two weeks after the first and used the same mode (face-to-face or online), so that first and second responses could be compared.
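The design rules above can be sketched as follows: the misery score is the sum of the five levels, pairs are presented in random order, and left/right positions are assigned 1:1. The pairs listed are hypothetical placeholders, not the 15 pairs used in the study. The per-pair coin flip is also an assumption: it gives 1:1 allocation only in expectation, whereas an exactly balanced split would need blocked randomization.

```python
import random


def misery_score(state: str) -> int:
    """Sum of the five item levels; ranges from 5 ('11111') to 25 ('55555')."""
    return sum(int(level) for level in state)


def randomize_presentation(pairs, rng: random.Random):
    """Shuffle the order of health-state pairs and randomize left/right sides.

    Each pair is shown as (A, B) or (B, A) with probability 0.5 (a 1:1 coin flip).
    """
    order = list(pairs)
    rng.shuffle(order)
    presented = []
    for a, b in order:
        left, right = (a, b) if rng.random() < 0.5 else (b, a)
        presented.append((left, right))
    return presented


# Hypothetical pairs for illustration only (not the study's design).
example_pairs = [("11211", "21121"), ("35332", "24553"), ("51152", "55233")]
rng = random.Random(2021)  # fixed seed so the example is reproducible
print(misery_score("11211"))  # 6
for left, right in randomize_presentation(example_pairs, rng):
    print(f"left={left} (misery {misery_score(left)}), right={right}")
```

Calling the function again, independently, for the second stage means that a pair's left/right placement may differ between stages. This is the variation that the positioning analysis in the Results relies on.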

2.4 Face-to-Face Survey

Respondents (aged 20–69 years) were recruited through a panel owned by a research company based on non-random quota sampling by sex and age. The inclusion criteria were as follows: (1) age 20–69 years, (2) current Japanese residency, (3) ability to visit the survey room in Tokyo, (4) ability to provide informed consent, and (5) ability to complete tasks in Japanese. As it was challenging to recruit elderly people for this survey owing to the COVID-19 outbreak, respondents aged > 69 years were excluded. The survey was conducted between October and December 2021.

The target sample size was 334 each for TTO, DCE, and DCE with duration (approximately 1000 in total). That is, 334 respondents per task were expected to participate in both the first and second surveys. This target was not based on statistical considerations. Respondents were asked to visit a survey center in Tokyo, where computer-assisted personal interviewing (CAPI) was conducted one-on-one with interviewer support for 30–60 minutes. Before the actual TTO tasks, respondents completed three training TTO tasks: “in a wheelchair,” “much better than being in a wheelchair,” and “much worse than being in a wheelchair, so bad that one would prefer to die immediately.” Responses were collected automatically as electronic data.

We asked the individuals who responded to the first survey to participate in the second survey, in which they valued the same health states using the same valuation method. The second-stage survey followed the same process two weeks after the first survey.

2.5 Online Survey

A self-administered online survey was also conducted. Respondents (aged 20–69 years) were recruited from a Japanese web panel using quota sampling by sex and age; the age range matched that of the face-to-face survey. As in the face-to-face survey, the target sample size was 334 each for TTO, DCE, and DCE with duration (the number of respondents participating in both the first and second surveys). This sample size was not based on statistical analysis. Because we expected the dropout rate of the web survey to be much higher than that of the face-to-face survey, more respondents were recruited for the first stage so that 334 respondents per task would complete both stages. In the TTO survey, respondents were required to complete three training tasks. For the second-stage survey, an invitation email was sent asking the respondents to the first survey to take part again. They valued the same health states using the same valuation method.

2.6 Statistical Analysis

The numbers and percentages of respondents by background factor were calculated, as were the number of respondents who participated in the first survey and the percentage who dropped out. The median (interquartile range [IQR]) total time to complete all TTO, DCE, and DCE with duration tasks was also calculated. Because some web respondents interrupted the tasks, the distribution of total response time in the web survey was skewed. The median was therefore preferred over the mean.
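The reason for preferring the median can be seen with a few hypothetical completion times (in minutes), where one respondent left the survey open for four hours. The sketch uses Python's standard `statistics` module. Its `quantiles` function uses the "exclusive" method by default, and other software may compute quartiles slightly differently.

```python
from statistics import mean, median, quantiles


def median_iqr(minutes):
    """Return the median and (Q1, Q3) of completion times."""
    q1, _, q3 = quantiles(minutes, n=4)  # three cut points: Q1, Q2, Q3
    return median(minutes), (q1, q3)


# Hypothetical times; the last respondent interrupted the task.
times = [5.1, 6.0, 6.8, 7.3, 7.9, 9.4, 240.0]
med, (q1, q3) = median_iqr(times)
print(f"median {med} min (IQR {q1}-{q3}); mean {mean(times):.1f} min")
# median 7.3 min (IQR 6.0-9.4); mean 40.4 min
```

The single interrupted session inflates the mean more than fivefold but leaves the median and IQR almost unaffected.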

Mean TTO scores and the mean root square error (MRSE) for the ten health states were computed and compared between the face-to-face and web surveys and between the first and second surveys. The mean number of responses needed to complete a TTO task is also reported.

As described above, the retest was performed two weeks after the first-stage survey. Reliability was evaluated using the percentage of agreement and the intraclass correlation coefficient (ICC). To examine how the number of agreements related to background factors and response time, a negative binomial regression was performed. The covariates included background factors (sex, age, employment, marital status, educational history, and household income) and response time. Ethical approval was obtained from the Ethics Committee of the National Institute of Public Health.
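The two reliability measures can be sketched as follows. Percentage agreement is the share of respondents whose second response is identical to their first. The paper does not state which ICC form was used. As an assumption only, the sketch computes the two-way random-effects, absolute-agreement, single-measure ICC(2,1) of Shrout and Fleiss from the usual ANOVA mean squares, treating the two survey occasions as the "raters."

```python
def percent_agreement(first, second):
    """Percentage of respondents giving an identical response at both stages."""
    if len(first) != len(second) or not first:
        raise ValueError("need paired, non-empty response lists")
    same = sum(a == b for a, b in zip(first, second))
    return 100.0 * same / len(first)


def icc_2_1(first, second):
    """ICC(2,1): two-way random effects, absolute agreement, single measure (k = 2)."""
    n, k = len(first), 2
    if n != len(second) or n < 2:
        raise ValueError("need at least two paired observations")
    rows = list(zip(first, second))
    grand = (sum(first) + sum(second)) / (n * k)
    row_means = [(a + b) / k for a, b in rows]
    col_means = [sum(first) / n, sum(second) / n]
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)  # between respondents
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)  # between occasions
    ss_total = sum((x - grand) ** 2 for row in rows for x in row)
    ss_error = ss_total - ss_rows - ss_cols                 # residual
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n)


# Hypothetical scores: every respondent scores exactly 1 point higher at retest.
print(percent_agreement([1, 2, 3], [2, 3, 4]))  # 0.0
print(round(icc_2_1([1, 2, 3], [2, 3, 4]), 3))  # 0.667
```

The example shows why the two measures can diverge. A uniform shift between occasions gives zero exact agreement, and the absolute-agreement ICC is penalized for that shift, whereas a consistency-type ICC would equal 1 here.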

3 Results

The collected samples included 1047 respondents (349 for TTO, 348 for DCE, and 350 for DCE with duration) for the face-to-face survey and 1912 respondents (627 for TTO, 650 for DCE, and 635 for DCE with duration) for the web survey. The numbers of respondents who participated in the second survey were 1043 (349 for TTO, 347 for DCE, and 347 for DCE with duration) for the face-to-face survey and 1306 (433 for TTO, 432 for DCE, and 441 for DCE with duration) for the web survey. The percentage of respondents who completed both the first and second surveys was 100% for TTO, 99.7% for DCE, and 99.1% for DCE with duration in the face-to-face survey, and 69.1% for TTO, 66.5% for DCE, and 69.4% for DCE with duration in the web survey. Thus, the retention rate of the web survey was lower than that of the face-to-face survey.
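The completion percentages follow directly from the counts reported above: the number of second-survey respondents divided by the number of first-survey respondents. The short check below reproduces them using only those numbers.

```python
# (first-survey respondents, second-survey respondents), as reported in the text.
COUNTS = {
    ("face-to-face", "TTO"): (349, 349),
    ("face-to-face", "DCE"): (348, 347),
    ("face-to-face", "DCE with duration"): (350, 347),
    ("web", "TTO"): (627, 433),
    ("web", "DCE"): (650, 432),
    ("web", "DCE with duration"): (635, 441),
}


def retention_pct(first: int, second: int) -> float:
    """Percentage of first-survey respondents who also completed the second survey."""
    return round(100 * second / first, 1)


for (mode, task), (first, second) in COUNTS.items():
    print(f"{mode:<13}{task:<19}{retention_pct(first, second):>6.1f}%")
```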

Web respondents took considerably less time than face-to-face respondents, regardless of the valuation method. In the first phase, the median total time for the 10 TTO questions was 20.9 minutes (IQR 17.8–23.6 minutes) in the face-to-face survey and 7.3 minutes (IQR 5.2–10.3 minutes) in the web survey. For the 15 DCE questions, the median was 15.9 minutes (IQR 14.2–17.4 minutes) face-to-face and 4.4 minutes (IQR 2.9–6.6 minutes) on the web. For the 15 DCE with duration questions, it was 18.7 minutes (IQR 17.1–20.5 minutes) face-to-face and 4.6 minutes (IQR 3.0–6.8 minutes) on the web. Within each mode, respondents spent broadly similar times on DCE and DCE with duration, despite the additional expected-lifetime attribute.

3.1 Demographic Factors

Respondents’ background characteristics are presented in Table 1. Median household income ranged from Japanese Yen (JPY) 5 million to JPY 7 million, slightly higher than the household income of all Japanese households (JPY 4.4 million in 2019). Many other characteristics were comparable to those of the general population. According to the 2019 Labor Force Survey, full-time workers accounted for 31.6% of all workers and part-time workers for 13.7%. In 2017, 24.3% of Japanese individuals had graduated from university or graduate school, and in 2015, 61.3% of Japanese people were married and 31.6% were unmarried.

We also examined the background characteristics of respondents who dropped out. Their distribution did not differ from that of all respondents, suggesting that dropout occurred largely at random and is unlikely to have biased the results.

3.2 Comparison of TTO Between Face-to-Face and Web Survey

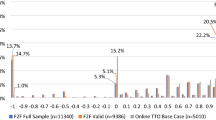

Figure 1 compares the TTO scores between the face-to-face and web surveys. The scores differed systematically across all health states, with face-to-face TTO scores higher than web TTO scores. The average number of responses before completion of a TTO task was 5.7 in the face-to-face survey and 5.4 in the web survey; this difference was not statistically significant. The distribution of the number of responses is shown in Fig. 2. Both very few responses (one or two) and many responses (nine) were more frequent in the web survey than in the face-to-face survey. In the web survey, a TTO score of −1 was frequently observed, because a respondent who kept selecting the same option ended at the lowest possible score of −1.

3.3 Comparison of TTO Between the First and Second Survey

Table 2 shows the percentage of agreement on TTO scores between the first and second surveys. Agreement was low in both the face-to-face and web surveys, indicating that individual TTO scores were not reproducible. Agreement was slightly higher in the web survey than in the face-to-face survey. The MRSE between the first and second face-to-face surveys ranged from 0.11 (11211) to 0.36 (51152), whereas that of the web survey ranged from 0.34 (11211) to 0.38 (35332). Although its percentage of agreement was lower, the face-to-face survey had a smaller MRSE than the web survey, which may imply that face-to-face responses were more homogeneous. The ICC was 0.37 for the face-to-face survey, indicating poor reliability, and 0.59 for the web survey. Despite the poor agreement, the mean TTO scores from the first and second surveys overlapped (Fig. 1), regardless of survey mode. Thus, although individual TTO scores agreed poorly, the mean scores were reliable.

3.4 Comparison of DCE and DCE with Duration Between Face-to-Face and Web Surveys and Between the First and Second Surveys

Table 3 shows the percentage of agreement on DCE and DCE with duration between the first and second surveys. Agreement was similar for DCE and DCE with duration, even though an expected-lifetime attribute was added to the health states in the DCE with duration survey. Both the face-to-face and web surveys showed more than 70% agreement, indicating that both are reliable. Agreement did not depend on the health-state pair.

Next, we considered positioning effects. Table 4 shows a 2 × 2 cross-tabulation of two factors. The first factor is whether the positions of the cards (health states) changed between the first and second surveys, and the second is whether the same choice was made in both surveys. When the card positions were the same, agreement between the two choices was approximately 90%, which was considered highly reliable. When the card positions were changed, agreement fell to approximately 60%. This suggests that about 30% (90% minus 60%) of respondents tended to choose the “position” of the card rather than the “health state” described on it. The percentage of respondents who chose the left-side card was 51.4% in the face-to-face survey and 50.3% in the web survey, so there was no overall preference for either the left or the right position.
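The 2 × 2 tabulation and the subtraction behind the 30% figure can be sketched as follows. Reading the difference as the share of position-driven choosers rests on a simplified model, which is an illustrative assumption. In that model, a share q of respondents always pick the same side and the rest choose consistently by health state. Agreement is then about 100% when positions are kept and about (100 − q)% when they are swapped, so the gap estimates q. Random inconsistency in real responses (agreement was about 90%, not 100%, with positions kept) means this is only approximate. The records below are synthetic.

```python
from collections import Counter


def agreement_by_position(records):
    """Cross-tabulate (position_changed, same_choice) records.

    Returns agreement (%) with positions kept, with positions swapped,
    and the difference in percentage points.
    """
    table = Counter(records)  # keys: (position_changed, same_choice)

    def pct(changed: bool) -> float:
        agree = table[(changed, True)]
        total = agree + table[(changed, False)]
        if total == 0:
            raise ValueError("no records for this position stratum")
        return 100.0 * agree / total

    kept, swapped = pct(False), pct(True)
    return kept, swapped, kept - swapped


# Synthetic data: 9/10 agree when positions are kept, 6/10 when swapped.
records = ([(False, True)] * 9 + [(False, False)] * 1
           + [(True, True)] * 6 + [(True, False)] * 4)
print(agreement_by_position(records))  # (90.0, 60.0, 30.0)
```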

3.5 Effect of Background Factors and Response Time for the Web Survey

Table 5 presents the relationships between the number of agreements, background factors, and response time. We could not identify meaningful factors associated with the number of agreements. Except in the DCE survey, longer response time was not significantly associated with a greater number of agreements. Therefore, a response-time cut-off for screening out low-quality responses could not be determined.

4 Discussion

We compared responses between face-to-face and web surveys, and between the first and second surveys, for TTO, DCE, and DCE with duration. This relatively large survey examined the test-retest reliability of these valuation methods. A previous study comparing face-to-face and web surveys [17] found that TTO scores from online surveys were higher than those from face-to-face surveys, which is the opposite of the pattern observed in this study. Nevertheless, as in that study, the two survey modes yielded significantly different results. Regarding the test-retest reliability of TTO scores, Robinson [13] reported ICCs ranging from 0.40 to 0.88, and a Korean study [14] reported an ICC of 0.827. An Indonesian study [15] showed that the MRSE between test and retest ranged from 0.00 (state 24553) to 0.23 (state 55233). In an SF-6Dv2 valuation study [16], the ICC for TTO was 0.958, with identical values in 59.3% of observations. The ICCs in our survey may be slightly lower than those in these studies; however, ICCs are influenced by the health states presented and the experimental design. For DCE, one study [15] found that 1147 (72.5%) of 1582 DCE responses in the first test were chosen identically in the second test. Another study reported that 76.4% of choices were identical for DCE with duration (DCETTO). These results are comparable to ours.

When TTO is used in valuation surveys, attention must be paid to the discrepancy between face-to-face and web surveys. We could not identify the reason for this difference. One possibility is that web respondents, more often than face-to-face respondents, tried to finish the TTO tasks quickly or repeatedly chose the same option to save time. We cannot determine which measurement is correct. However, if face-to-face surveys are regarded as the standard, web surveys are not recommended for TTO because of systematic error.

Discrete choice experiment and DCE with duration are occasionally used in valuation surveys, and their responses are collected via the internet; our results support this practice. However, in the DCE and DCE with duration surveys, about 30% of respondents appeared to choose only the “position” of the cards without considering the health states described on them. Randomizing card positions may therefore be needed to reduce this bias, regardless of survey mode. In both the TTO and DCE surveys, a response-time cut-off for controlling response quality could not be determined.

One limitation of this study is that approximately 30% of web survey respondents dropped out before the second survey, and we were unable to re-recruit them. The demographic characteristics of respondents who dropped out were similar to those of respondents who remained; however, respondents who participated in both surveys may have been more motivated. In addition, only 10 health states were valued in TTO and 15 health-state pairs in DCE and DCE with duration. Studies that create value sets evaluate many more health states. Although our health states covered a range of severities based on misery scores, their number was small, which may limit the generalizability of our results. Finally, the survey was conducted in Japan. We believe that this survey is reliable, but caution is needed when extrapolating our results to other countries, because response patterns may differ among countries.

5 Conclusion

In this study, we clarified the characteristics of TTO, DCE, and DCE with duration in both face-to-face and web surveys. First, we found a discrepancy in TTO scores between the face-to-face and web surveys. The test-retest ICC was poor for the face-to-face survey and appeared to be better for the web survey; however, the mean TTO scores were reliable. Both DCE and DCE with duration showed good agreement between the two surveys, so web-based DCE and DCE with duration surveys may be acceptable. However, positioning effects were not negligible. Because response time did not influence reliability, it was difficult to set a response-time cut-off.

References

1. Herdman M, Gudex C, Lloyd A, Janssen M, Kind P, Parkin D, et al. Development and preliminary testing of the new five-level version of EQ-5D (EQ-5D-5L). Qual Life Res. 2011;20(10):1727–36.

2. Feeny D, Furlong W, Torrance GW, Goldsmith CH, Zhu Z, DePauw S, et al. Multiattribute and single-attribute utility functions for the health utilities index mark 3 system. Med Care. 2002;40(2):113–28.

3. Brazier J, Usherwood T, Harper R, Thomas K. Deriving a preference-based single index from the UK SF-36 Health Survey. J Clin Epidemiol. 1998;51(11):1115–28.

4. Brazier JE, Mulhern BJ, Bjorner JB, Gandek B, Rowen D, Alonso J, et al. Developing a new version of the SF-6D health state classification system from the SF-36v2: SF-6Dv2. Med Care. 2020;58(6):557–65.

5. Richardson J, Iezzi A, Khan MA, Maxwell A. Validity and reliability of the Assessment of Quality of Life (AQoL)-8D multi-attribute utility instrument. Patient. 2014;7(1):85–96.

6. Kaplan RM, Anderson JP. A general health policy model: update and applications. Health Serv Res. 1988;23(2):203–35.

7. King MT, Costa DS, Aaronson NK, Brazier JE, Cella DF, Fayers PM, et al. QLU-C10D: a health state classification system for a multi-attribute utility measure based on the EORTC QLQ-C30. Qual Life Res. 2016;25(3):625–36.

8. King MT, Norman R, Mercieca-Bebber R, Costa DSJ, McTaggart-Cowan H, Peacock S, et al. The Functional Assessment of Cancer Therapy Eight Dimension (FACT-8D), a Multi-Attribute Utility Instrument Derived From the Cancer-Specific FACT-General (FACT-G) quality of life questionnaire: development and Australian value set. Value Health. 2021;24(6):862–73.

9. Wille N, Badia X, Bonsel G, Burström K, Cavrini G, Devlin N, et al. Development of the EQ-5D-Y: a child-friendly version of the EQ-5D. Qual Life Res. 2010;19(6):875–86.

10. Stevens KJ. Working with children to develop dimensions for a preference-based, generic, pediatric, health-related quality-of-life measure. Qual Health Res. 2010;20(3):340–51.

11. Netten A, Burge P, Malley J, Potoglou D, Towers AM, Brazier J, et al. Outcomes of social care for adults: developing a preference-weighted measure. Health Technol Assess. 2012;16(16):1–166.

12. Conrad FG, Couper MP, Tourangeau R, Zhang C. Reducing speeding in web surveys by providing immediate feedback. Surv Res Methods. 2017;11(1):45–61.

13. Robinson S. Test-retest reliability of health state valuation techniques: the time trade off and person trade off. Health Econ. 2011;20(11):1379–91.

14. Kim SH, Lee SI, Jo MW. Feasibility, comparability, and reliability of the standard gamble compared with the rating scale and time trade-off techniques in Korean population. Qual Life Res. 2017;26(12):3387–97.

15. Purba FD, Hunfeld JAM, Timman R, Iskandarsyah A, Fitriana TS, Sadarjoen SS, et al. Test-retest reliability of EQ-5D-5L valuation techniques: the composite time trade-off and discrete choice experiments. Value Health. 2018;21(10):1243–9.

16. Xie S, Wu J, Chen G. Discrete choice experiment with duration versus time trade-off: a comparison of test-retest reliability of health utility elicitation approaches in SF-6Dv2 valuation. Qual Life Res. 2022;31:2791–803.

17. Jiang R, Shaw J, Mühlbacher A, Lee TA, Walton S, Kohlmann T, et al. Comparison of online and face-to-face valuation of the EQ-5D-5L using composite time trade-off. Qual Life Res. 2021;30(5):1433–44.

18. Shiroiwa T, Ikeda S, Noto S, Igarashi A, Fukuda T, Saito S, et al. Comparison of value set based on DCE and/or TTO data: scoring for EQ-5D-5L health states in Japan. Value Health. 2016;19(5):648–54.

19. Janssen BM, Oppe M, Versteegh MM, Stolk EA. Introducing the composite time trade-off: a test of feasibility and face validity. Eur J Health Econ. 2013;14(Suppl 1):S5–13.

20. Bansback N, Brazier J, Tsuchiya A, Anis A. Using a discrete choice experiment to estimate health state utility values. J Health Econ. 2012;31(1):306–18.

21. Devlin NJ, Tsuchiya A, Buckingham K, Tilling C. A uniform time trade off method for states better and worse than dead: feasibility study of the “lead time” approach. Health Econ. 2011;20(3):348–61.

22. Devlin N, Buckingham K, Shah K, Tsuchiya A, Tilling C, Wilkinson G, et al. A comparison of alternative variants of the lead and lag time TTO. Health Econ. 2013;22(5):517–32.

Ethics declarations

Funding

National Institute of Public Health.

Competing Interests

The authors have no competing interests to declare relevant to the content of this article.

Ethics Approval

This study was conducted in accordance with the principles of the Declaration of Helsinki. This study was approved by the Ethics Committee of the National Institute of Public Health (NIPH-IBRA#12338).

Consent to Participate

Informed consent was obtained from all individual participants included in the study.

Consent for Publication

Respondents provided signed informed consent for the publication of their anonymized data.

Data Availability

The datasets generated and/or analyzed during the current study are not publicly available due to the lack of consent from participants but are available from the corresponding author upon reasonable request.

Code Availability

Not applicable.

Authors’ Contribution

All authors contributed to the study conception and design. Material preparation, data collection and analysis were performed by Takeru Shiroiwa. The first draft of the manuscript was written by Takeru Shiroiwa, and all authors commented on previous versions of the manuscript. All the authors have read and approved the final version of the manuscript.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License, which permits any non-commercial use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc/4.0/.

About this article

Cite this article

Shiroiwa, T., Fukuda, T. Are Web-Based Valuation Surveys for Preference-Based Measures as Reliable as Face-to-Face Surveys? TTO, DCE and DCE with Duration. Appl Health Econ Health Policy 22, 391–400 (2024). https://doi.org/10.1007/s40258-023-00865-x

DOI: https://doi.org/10.1007/s40258-023-00865-x