Abstract

Alzheimer’s disease is a neurological condition characterized by steady cognitive decline and eventual memory loss caused by the death of brain cells. It is one of the most common types of dementia, which underlines the urgent need for methods that can diagnose the disease early. This work proposes a novel approach that uses a reduced version of one of its datasets to achieve accurate predictions while also enabling faster training. It uses image segmentation to isolate the hippocampus region from brain MRI images and then compares models trained on the segmented regions with models trained on complete images. Two datasets are used: a four-class brain MRI dataset from Kaggle and the widely used OASIS-2 MRI and demographic dataset. A deep learning-based approach was applied to the Kaggle dataset to perform severity classification. The hippocampus region segmented from a reduced version of the OASIS dataset was used to train supervised and ensemble learning algorithms to detect Alzheimer’s disease. Model performance is assessed using classification accuracy. A comparative analysis with existing work was also performed, and the results indicate that the proposed approach is effective for the early diagnosis of Alzheimer’s disease.

1 Introduction

Alzheimer’s disease is the most common form of dementia. It begins with seemingly mild memory loss and gradually progresses until patients lose the ability to hold conversations and respond to their environment. The disease affects the parts of the brain that control language, thought, and memory, and it can severely impair a person’s ability to carry out daily activities. Statistics show that 1 in 9 people aged 65 and older has Alzheimer’s disease, roughly 11.4% of that age group. Deaths from Alzheimer’s disease and other dementias increased by 16% during the COVID-19 pandemic. One in three older adults dies with Alzheimer’s disease or another dementia, which is more deaths than breast cancer and prostate cancer combined [1]. Early diagnosis of the disease is therefore of vital importance.

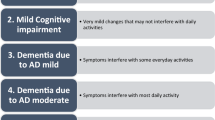

Alzheimer’s disease is divided into stages according to how extensively the brain is affected: mild, moderate, and severe. Patients with mild Alzheimer’s experience increasing memory loss. They often wander and get lost, have trouble handling money, and struggle with everyday tasks. Patients with moderate Alzheimer’s disease have trouble reasoning, have poor hearing, and lose their sense of smell. At this stage they may also experience delusions, hallucinations, and paranoia. Patients with severe Alzheimer’s are completely dependent on others for day-to-day tasks, their brain tissue shrinks significantly, and they are often bedbound for the rest of their lives [2].

The objective of this research is to use computer vision to classify and diagnose Alzheimer’s disease from brain MRI images in two datasets: the four-class Kaggle dataset and the OASIS dataset. Deep learning techniques are applied to the Kaggle dataset after the necessary pre-processing, to determine which model yields the highest accuracy. The brain MRI images in the OASIS dataset, on the other hand, are segmented to extract the hippocampus, a brain region whose shrinkage is closely associated with Alzheimer’s disease and dementia. Models are trained on these segmented images and, separately, on the complete images, and the best model is again selected based on accuracy. To reduce training time, the dataset is also cut to half its original size, and the results on this reduced dataset are compared with those on the complete dataset.

The main highlights of this paper are as follows:

-

This work considers a novel approach by utilizing a reduced version of the OASIS-2 dataset to speed up the training process without compromising on accuracy.

-

Image segmentation was used to isolate the hippocampus region from brain MRI images. Models trained on the segmented regions were then compared with models trained on complete images.

-

Deep learning models, including a CNN, multilayer networks, and ResNet50, were used to classify disease severity.

-

Machine learning and ensemble learning algorithms were applied to the hippocampus region segmented from brain MRI images to detect Alzheimer’s disease.

The main contribution of this work is an approach that obtains accurate results while training models on a reduced version of the OASIS-2 dataset. Conventional approaches use the entire .nifti volume for training. This work instead uses only one 2D slice from each .nifti file in the dataset. It also compares training on only the cropped hippocampus region with training on the full image, and training on only half the dataset with training on the entire dataset. This approach is especially useful for computationally heavy deep learning models, because it shortens training time while still yielding fairly accurate predictions.

The remainder of this paper is organized as follows:

- Section 2 surveys existing approaches to Alzheimer’s disease detection and classification.
- Section 3 presents the proposed approach and the methodology used in this work.
- Section 4 describes the experimental setup, including the software and datasets used.
- Section 5 presents and discusses the results.
- Section 6 concludes the paper.

2 Literature Survey

Several studies on the early detection of Alzheimer’s disease have been carried out. Some of these works used tabular data stored as comma-separated values (CSV) as input features. Others used brain MRI images and extracted features from them. Table 1 lists contemporary works related to Alzheimer’s disease detection.

Conventional approaches that use the OASIS dataset diagnose Alzheimer’s disease from the data provided in its CSV file. This brings challenges such as manual inconsistencies, susceptibility to errors, and the tedious work of populating the file. The proposed system avoids these challenges with an image-based approach, in which classification is performed solely by analyzing brain MRI scans. Other contemporary image-based approaches analyze the entire brain MRI image. The proposed approach, however, concentrates on the hippocampus, a region whose atrophy is closely linked to Alzheimer’s disease and cognitive decline. The proposed approach also shows that training on only 50% of the dataset, rather than the full dataset, saves memory and processing time.

3 Proposed Approach

The proposed approach was developed using two datasets: the Kaggle dataset, which consists of four classes of brain images, and the OASIS-2 dataset. The data-processing flows for these two datasets are shown in Figs. 1 and 2, respectively.

As shown in Fig. 1, the images in the Kaggle dataset are read from the directory that contains them. Once all the images are loaded, each image is resized to 45 × 45 pixels and then thresholded to separate dark and light pixels more clearly. The list of images is converted into a NumPy array, and a label is assigned to every image. The string labels are converted to integer labels ranging from 0 to 3. The image array and the labels are used to create the training and testing sets. The pixel values of both sets are normalized from the range 0–255 to 0–1, and the labels are one-hot encoded before model training. Finally, the training set is oversampled to balance the number of images in each class.
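The Kaggle pre-processing steps above can be sketched in NumPy as follows. This is a minimal sketch under stated assumptions, not the authors' code:

- The class-name strings and their integer order are hypothetical.
- A nearest-neighbour resize stands in for OpenCV's `cv2.resize`, which interpolates bilinearly by default, so pixel values will differ slightly from an OpenCV pipeline.
- The thresholding follows the THRESH_TOZERO rule described in the text.

```python
import numpy as np

# Hypothetical class names and integer order; the text maps four string
# labels to 0-3 but does not state which label gets which integer.
CLASS_TO_INT = {"NonDemented": 0, "VeryMildDemented": 1,
                "MildDemented": 2, "ModerateDemented": 3}
SIZE = 45        # target width and height from the text
THRESHOLD = 120  # threshold value from the text


def resize_nearest(img, size=SIZE):
    """Nearest-neighbour resize; a stand-in for cv2.resize(img, (size, size))."""
    h, w = img.shape[:2]
    rows = np.arange(size) * h // size
    cols = np.arange(size) * w // size
    return img[rows[:, None], cols[None, :]]


def threshold_to_zero(img, thresh=THRESHOLD):
    """Same rule as cv2.THRESH_TOZERO: keep pixels > thresh, zero the rest."""
    return np.where(img > thresh, img, 0)


def preprocess(images, label_names):
    X = np.array([threshold_to_zero(resize_nearest(im)) for im in images])
    X = X.astype(np.float32) / 255.0                      # scale 0-255 to 0-1
    y = np.array([CLASS_TO_INT[n] for n in label_names])  # strings -> 0..3
    y_onehot = np.eye(len(CLASS_TO_INT), dtype=np.float32)[y]  # one-hot labels
    return X, y, y_onehot


# Usage with random stand-in images of the stated 208 x 176 size (3 channels)
rng = np.random.default_rng(0)
images = rng.integers(0, 256, size=(4, 208, 176, 3), dtype=np.uint8)
names = ["NonDemented", "MildDemented", "VeryMildDemented", "ModerateDemented"]
X, y, y_onehot = preprocess(images, names)
print(X.shape, y.tolist(), y_onehot.shape)  # (4, 45, 45, 3) [0, 2, 1, 3] (4, 4)
```

Each processed image holds 45 × 45 × 3 = 6075 values, which matches the flattened input size used later for the deep learning models.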

As shown in Fig. 2, the images read from the OASIS-2 dataset are first rotated to a uniform orientation. A threshold is then applied to highlight dark and light areas. The hippocampus region is cropped out and stored in a list of segmented features, whereas the full image is appended to a list of full features. This process is termed hippocampus segmentation. For ease of training, both lists are converted into NumPy arrays. The labels required for training are extracted from a CSV file provided with the OASIS dataset and encoded as integers ranging from 0 to 2. Training and testing sets are then created for both the full features and the segmented features. Their pixel values are scaled from 0–255 to 0–1 and flattened into one-dimensional arrays. After the training set is oversampled, each machine learning algorithm is trained under four conditions:

- default parameters;
- best (tuned) parameters;
- default parameters on the oversampled dataset;
- best parameters on the oversampled dataset.

These four conditions are applied both to the entire 2D image (4 models) and to the segmented 2D image (4 models).
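The "default parameters" versus "best parameters" conditions can be sketched with scikit-learn's `GridSearchCV`, which the paper mentions using for tuning. This is a hedged illustration with several stand-ins:

- The data are synthetic rather than OASIS features.
- `GradientBoostingClassifier` stands in for the paper's classifiers.
- The parameter grid is hypothetical.

XGBoost's `XGBClassifier` follows the scikit-learn estimator interface and should plug into the same code. The two oversampled conditions would repeat both fits on a SMOTE-resampled training set.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import GridSearchCV, train_test_split

# Synthetic 3-class stand-in for flattened, 0-1 scaled image features
X, y = make_classification(n_samples=300, n_features=50, n_informative=10,
                           n_classes=3, random_state=0)
X = (X - X.min()) / (X.max() - X.min())
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0, stratify=y)

# Condition 1: default hyperparameters
default_model = GradientBoostingClassifier(random_state=0).fit(X_train, y_train)
default_acc = accuracy_score(y_test, default_model.predict(X_test))

# Condition 2: "best" hyperparameters from an exhaustive grid search (3-fold CV)
param_grid = {"n_estimators": [50, 100], "max_depth": [2, 3]}  # hypothetical grid
search = GridSearchCV(GradientBoostingClassifier(random_state=0), param_grid,
                      cv=3, scoring="accuracy")
search.fit(X_train, y_train)
tuned_acc = accuracy_score(y_test, search.best_estimator_.predict(X_test))

print(f"default: {default_acc:.3f}  tuned: {tuned_acc:.3f}  best: {search.best_params_}")
```

The grid search tunes only on cross-validation folds of the training set, so the held-out test set remains untouched until the final comparison.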

3.1 Architecture

Three models, each with a different architecture, were developed and tested on the two datasets: a simple multilayer architecture, a convolutional neural network (CNN), and ResNet50. The following subsections describe each architecture in detail.

3.1.1 Simple Multilayer Model

This model contains a total of 15 layers, of which 8 are dense layers and 7 are dropout layers. Each dropout layer sits between two dense layers to reduce overfitting and uses a dropout rate of 0.05. Each dense layer has a specified number of neurons and an activation function. Table 2 lists the specifications of each layer in this fully connected neural network. The model is compiled with categorical cross-entropy as the loss function and RMSprop as the optimizer, and the batch size is set to 32.
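The forward pass of this dense/dropout stack and its categorical cross-entropy loss can be sketched in NumPy. This is a hedged sketch, not the authors' model:

- Table 2 is not reproduced here, so the layer widths and the ReLU hidden activation are hypothetical.
- The RMSprop training loop is omitted.

In Keras, the same structure would be a `Sequential` stack of `Dense` and `Dropout(0.05)` layers compiled with `optimizer="rmsprop"` and `loss="categorical_crossentropy"`.

```python
import numpy as np

rng = np.random.default_rng(0)

INPUT_DIM = 45 * 45 * 3  # 6075 flattened pixel values per Kaggle image
# Hypothetical widths: eight dense layers, the last being the 4-class output.
WIDTHS = [512, 256, 256, 128, 128, 64, 32, 4]
DROPOUT_RATE = 0.05


def relu(z):
    return np.maximum(z, 0.0)


def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def init_params(input_dim=INPUT_DIM, widths=WIDTHS):
    params, fan_in = [], input_dim
    for width in widths:
        w = rng.normal(0.0, np.sqrt(2.0 / fan_in), size=(fan_in, width))  # He init
        params.append((w, np.zeros(width)))
        fan_in = width
    return params


def forward(x, params, training=False):
    """Dense -> Dropout -> ... -> Dense(softmax): 8 dense + 7 dropout layers."""
    h = x
    for i, (w, b) in enumerate(params):
        z = h @ w + b
        if i == len(params) - 1:
            return softmax(z)              # output layer: class probabilities
        h = relu(z)                        # hidden activation (assumed ReLU)
        if training:                       # inverted dropout between dense layers
            keep = rng.random(h.shape) >= DROPOUT_RATE
            h = h * keep / (1.0 - DROPOUT_RATE)
    raise ValueError("params must contain at least one layer")


def categorical_cross_entropy(y_onehot, probs, eps=1e-12):
    return float(-np.mean(np.sum(y_onehot * np.log(probs + eps), axis=1)))


# Usage: one batch of 32 random inputs (32 is the batch size given in the text)
params = init_params()
x = rng.random((32, INPUT_DIM))
y = np.eye(4)[rng.integers(0, 4, size=32)]
probs = forward(x, params, training=True)
print(probs.shape, round(categorical_cross_entropy(y, probs), 3))
```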

3.1.2 Convolutional Neural Network (CNN) Model

This model has a total of 24 layers:

- 6 Conv2D layers
- 3 MaxPooling2D layers
- 4 SeparableConv2D layers
- 4 Dropout layers
- 4 BatchNormalization layers
- 3 Dense layers

Table 3 lists the specifications of each layer in the CNN model. The model is compiled with categorical cross-entropy as the loss function and Adam as the optimizer.
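The text does not explain why SeparableConv2D layers are mixed with regular Conv2D layers. One commonly cited benefit is their much lower parameter count. The arithmetic below follows the Keras parameterization: a depthwise k × k kernel per input channel, a 1 × 1 pointwise kernel, and one bias per output channel. The channel sizes are hypothetical and are not taken from Table 3.

```python
def conv2d_params(k, c_in, c_out, bias=True):
    """Weights in a standard k x k Conv2D: one k*k*c_in kernel per output channel."""
    return k * k * c_in * c_out + (c_out if bias else 0)


def separable_conv2d_params(k, c_in, c_out, depth_multiplier=1, bias=True):
    """Weights in a SeparableConv2D: depthwise k x k filters, then a 1 x 1 pointwise conv."""
    depthwise = k * k * c_in * depth_multiplier
    pointwise = c_in * depth_multiplier * c_out
    return depthwise + pointwise + (c_out if bias else 0)


# Layer counts from the text; they add up to the stated 24 layers
LAYER_COUNTS = {"Conv2D": 6, "MaxPooling2D": 3, "SeparableConv2D": 4,
                "Dropout": 4, "BatchNormalization": 4, "Dense": 3}
print(sum(LAYER_COUNTS.values()))          # 24

# Hypothetical example: 3 x 3 kernel, 32 input channels, 64 output channels
print(conv2d_params(3, 32, 64))            # 18496
print(separable_conv2d_params(3, 32, 64))  # 2400
```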

3.1.3 ResNet50 Model

This model is a ResNet50 model with 18 additional layers:

- 6 dense layers
- 5 dropout layers
- 1 flatten layer
- 6 BatchNormalization layers

After the pre-trained ResNet50 base is loaded, these layers are added in the sequence specified in Table 4. ResNet’s residual (skip) connections mitigate the vanishing gradient problem. Without them, very deep networks are difficult to train, which can lead to inaccurate predictions.
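A toy scalar illustration of why residual connections help with vanishing gradients follows. With an identity shortcut y = x + F(x), each layer contributes a gradient factor of 1 + F′(x) instead of F′(x), so the product over many layers does not collapse toward zero. The "layers" here are hypothetical scalar functions, not ResNet50 blocks.

```python
import numpy as np

DEPTH = 50
W = 0.01  # hypothetical small weight shared by every toy layer


def f(x):
    """Toy scalar 'layer' F(x) = W * tanh(x)."""
    return W * np.tanh(x)


def f_prime(x):
    return W * (1.0 - np.tanh(x) ** 2)


def input_gradient(x0, residual):
    """d(output)/d(x0) through DEPTH stacked layers, via the chain rule."""
    x, grad = x0, 1.0
    for _ in range(DEPTH):
        if residual:
            grad *= 1.0 + f_prime(x)  # y = x + F(x)  =>  dy/dx = 1 + F'(x)
            x = x + f(x)
        else:
            grad *= f_prime(x)        # y = F(x)      =>  dy/dx = F'(x)
            x = f(x)
    return grad


plain = input_gradient(0.5, residual=False)
res = input_gradient(0.5, residual=True)
print(f"plain chain: {plain:.2e}   residual chain: {res:.3f}")
```

In the plain chain, every factor is at most 0.01, so the gradient falls below 1e-100 after 50 layers. In the residual chain, every factor lies between 1 and 1.01, so the gradient stays of order one.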

3.2 Pseudo-Logic When Experimented with Kaggle Dataset

The pseudo-logic for the Kaggle dataset consists of four steps: image reading, image pre-processing, train-test split, and oversampling.

1. Image reading: all the files in the dataset are loaded using the glob function.
2. Image pre-processing: the images are resized from 208 × 176 to 45 × 45 pixels to reduce the overall processing time, and thresholding is then applied. The threshold value is set to 120, and the technique used is THRESH_TOZERO, in which pixels above the threshold keep their value and all other pixels are set to 0. The processed images are stored in a list, which is later converted to a NumPy array, and the string labels are encoded as integers to facilitate model training.
3. Train-test split: the dataset is split using the train_test_split function, with 80% of the data used for training and 20% for testing. Each image is reshaped from 45 × 45 × 3 to a vector of 6075 values, so the deep learning models receive 6075 input features per image.
4. Oversampling: the training set is oversampled using SMOTE so that all four classes are represented by comparable numbers of images.
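The split and oversampling steps can be sketched without external dependencies, as below. This is a simplified stand-in, not the authors' code. The paper uses scikit-learn's `train_test_split` and imblearn's SMOTE, typically called as `SMOTE(random_state=...).fit_resample(X_train, y_train)`.

Two points differ from the paper:

- The split here is stratified per class, similar to passing `stratify=y` to `train_test_split`. The text does not say whether stratification was used.
- The `smote` function is a hypothetical helper that implements only the core SMOTE idea: interpolating between a sample and one of its k nearest same-class neighbours.

```python
import numpy as np

rng = np.random.default_rng(42)


def stratified_split(X, y, test_fraction=0.2):
    """Hold out test_fraction of each class (stand-in for sklearn's train_test_split)."""
    train_idx, test_idx = [], []
    for cls in np.unique(y):
        idx = rng.permutation(np.flatnonzero(y == cls))
        n_test = int(round(len(idx) * test_fraction))
        test_idx.extend(idx[:n_test])
        train_idx.extend(idx[n_test:])
    train_idx, test_idx = np.array(train_idx), np.array(test_idx)
    return X[train_idx], X[test_idx], y[train_idx], y[test_idx]


def smote(X, y, k=5):
    """Minimal SMOTE: add synthetic samples until every class matches the largest one."""
    classes, counts = np.unique(y, return_counts=True)
    X_parts, y_parts = [X], [y]
    for cls, count in zip(classes, counts):
        n_new = counts.max() - count
        if n_new == 0:
            continue
        Xc = X[y == cls]
        if len(Xc) < 2:
            raise ValueError("SMOTE needs at least 2 samples per class")
        sq = np.sum(Xc ** 2, axis=1)
        d2 = sq[:, None] + sq[None, :] - 2.0 * Xc @ Xc.T  # squared distances
        np.fill_diagonal(d2, np.inf)                       # not its own neighbour
        k_eff = min(k, len(Xc) - 1)
        neighbours = np.argsort(d2, axis=1)[:, :k_eff]     # k nearest same-class samples
        base = rng.integers(0, len(Xc), size=n_new)
        nbr = neighbours[base, rng.integers(0, k_eff, size=n_new)]
        gap = rng.random((n_new, 1))
        X_parts.append(Xc[base] + gap * (Xc[nbr] - Xc[base]))  # point on the segment
        y_parts.append(np.full(n_new, cls))
    return np.concatenate(X_parts), np.concatenate(y_parts)


# Usage: 400 random "images" with the Kaggle class proportions scaled down 16x
y = np.repeat(np.arange(4), [200, 140, 56, 4])
X = rng.random((len(y), 45, 45, 3)).reshape(len(y), -1)  # 45 x 45 x 3 -> 6075
X_train, X_test, y_train, y_test = stratified_split(X, y)
X_res, y_res = smote(X_train, y_train)
print(X_train.shape, X_test.shape, X_res.shape, np.bincount(y_res))
# (320, 6075) (80, 6075) (640, 6075) [160 160 160 160]
```

Oversampling is applied only to the training split, so no synthetic samples leak into the test set.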

3.3 Hippocampus Segmentation

Shrinkage of the hippocampus is one of the structural changes most strongly associated with Alzheimer’s disease. Segmenting the area around the hippocampus was therefore considered a promising way to improve accuracy. The coordinates of this area were determined by trial and error. After numerous attempts, they were fixed at (70, 70), (150, 70), (70, 170), and (150, 170), given as (row, column) pairs. The area was extracted by slicing the image array as image[70:150, 70:170], which yields an 80 × 100 pixel region. The implementation of this segmentation within the OASIS pipeline is described in the next section. Figure 3 shows a sample of the segmented hippocampus region.
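The fixed-window segmentation described above amounts to plain array slicing. In the sketch below, the 256 × 256 slice size is a hypothetical placeholder. Because the window is fixed, the method assumes that all slices share a similar orientation and alignment.

```python
import numpy as np

# Crop window reported in the text: rows 70-150, columns 70-170
ROW_START, ROW_END = 70, 150
COL_START, COL_END = 70, 170


def segment_hippocampus(slice_2d):
    """Return the fixed rectangular window around the hippocampus in a 2D slice."""
    if slice_2d.shape[0] < ROW_END or slice_2d.shape[1] < COL_END:
        raise ValueError("slice is smaller than the crop window")
    return slice_2d[ROW_START:ROW_END, COL_START:COL_END]


slice_2d = np.zeros((256, 256), dtype=np.uint8)  # hypothetical 256 x 256 slice
crop = segment_hippocampus(slice_2d)
print(crop.shape, crop.size)  # (80, 100) 8000
```

For a slice of this assumed size, the crop reduces each flattened sample from 65,536 to 8000 features.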

3.4 Pseudo-Logic When Experimented with OASIS 2 Dataset

The pseudo-logic for the OASIS-2 dataset consists of five steps: dataset loading, image processing, hippocampus segmentation, train-test split, and oversampling.

1. Dataset loading: the nifti images and their corresponding labels are loaded. The labels are stored in a CSV file, which is read using read_csv. The image files are located using the glob function, and the image data inside each nifti file are extracted using the get_fdata function.
2. Image processing: one 2D image is selected from each nifti file and thresholded. The middle slice is chosen for predicting the presence of Alzheimer’s disease because the shape of the hippocampus is most apparent in this slice. As with the Kaggle dataset, the threshold value is set to 120 and the THRESH_TOZERO technique is used, so pixels above the threshold keep their value and all other pixels are set to 0. The processed images are stored in a list, which is later converted to a NumPy array.
3. Hippocampus segmentation: the hippocampus region is cropped from each 2D image. This produces two datasets, one containing the entire 2D images and one containing the hippocampus-segmented images. Each is stored in its own list and later converted to a NumPy array.
4. Train-test split: separate training and testing sets are created for each dataset using train_test_split, so that results can be compared across models. The images are flattened into one-dimensional arrays using the reshape function so that they can be used to train the machine learning models.
5. Oversampling: the training sets are oversampled using SMOTE to balance the classes and improve the accuracy of predicting each class.
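The OASIS steps above can be sketched end to end with NumPy and the standard library. This is a hedged sketch with the following stand-ins:

- The CSV text is a hypothetical excerpt with placeholder session IDs. The column names "MRI ID" and "Group" are assumed to match the OASIS-2 demographic file.
- Random 256 × 256 × 128 volumes stand in for scans that would be loaded with nibabel, e.g. `nib.load(path).get_fdata()`.
- The slice axis and the rotation are assumptions.

The paper reads the CSV with pandas `read_csv`. Here `np.unique` assigns integer labels in alphabetical order, the same order scikit-learn's `LabelEncoder` uses. The paper's actual mapping is not stated.

```python
import csv
import io

import numpy as np

rng = np.random.default_rng(0)
THRESHOLD = 120

# Hypothetical CSV excerpt; IDs are placeholders, not real OASIS sessions.
CSV_TEXT = """MRI ID,Group
session_a,Nondemented
session_b,Demented
session_c,Converted
"""


def preprocess(volume, thresh=THRESHOLD):
    """Middle slice -> rotate -> THRESH_TOZERO rule (slice axis and rotation assumed)."""
    img = np.take(volume, volume.shape[2] // 2, axis=2)  # middle 2D slice
    img = np.rot90(img)                                  # uniform orientation
    return np.where(img > thresh, img, 0)                # keep pixels above thresh


rows = list(csv.DictReader(io.StringIO(CSV_TEXT)))
groups = np.array([r["Group"] for r in rows])
class_names, labels = np.unique(groups, return_inverse=True)  # strings -> 0..2

full_features, segmented_features = [], []
for row in rows:
    # Real code would load the scan for row["MRI ID"]; a random volume stands in.
    volume = rng.integers(0, 256, size=(256, 256, 128), dtype=np.uint8)
    img = preprocess(volume)
    full_features.append(img.reshape(-1) / 255.0)                       # whole slice
    segmented_features.append(img[70:150, 70:170].reshape(-1) / 255.0)  # hippocampus

X_full = np.array(full_features)
X_seg = np.array(segmented_features)
print(class_names.tolist(), labels.tolist(), X_full.shape, X_seg.shape)
# ['Converted', 'Demented', 'Nondemented'] [2, 1, 0] (3, 65536) (3, 8000)
```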

4 Experimental Setup

This work required a large amount of RAM and storage; the maximum RAM used was 35 GB. A high-end TPU was used, especially for the deep learning experiments. Most experiments were carried out in Jupyter Notebook and Google Colaboratory, with the datasets stored on a mounted Google Drive. Several packages were installed for specific tasks:

- TensorFlow was used to analyze the Kaggle dataset.
- imblearn was used to oversample existing images in the dataset, increasing the number of images available for training.
- OpenCV was used for image processing tasks such as reading, displaying, thresholding, and resizing the images.
- nibabel was used to read and extract the files with the .nifti extension in the OASIS dataset.

Other Python libraries, including NumPy, pandas, and scikit-learn (sklearn), were also used for each model.

As discussed earlier, two datasets were considered in this work: the Kaggle four-class dataset and the OASIS-2 dataset. The Kaggle dataset is a collection of MRI images of the back portion of the brain in JPG format. It contains four classes (non-demented, very mildly demented, mildly demented, and moderately demented) and comprises 6400 images in total. Of these, 5120 images are used for training the model and the remaining 1280 for testing. Overall, 3200 images are non-demented, 2240 are very mildly demented, 896 are mildly demented, and 64 are moderately demented. A few sample images from the Kaggle dataset are shown in Fig. 4.
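A quick calculation with the counts reported above shows how imbalanced the Kaggle dataset is. This imbalance is what the SMOTE oversampling step is meant to address. All numbers come from this section.

```python
# Class counts reported for the Kaggle dataset
counts = {
    "non-demented": 3200,
    "very mildly demented": 2240,
    "mildly demented": 896,
    "moderately demented": 64,
}
total = sum(counts.values())
for name, n in counts.items():
    print(f"{name:>21}: {n:5d} ({n / total:.1%})")

n_train = total * 4 // 5  # 80/20 split using integer arithmetic
print("total:", total, "train:", n_train, "test:", total - n_train)
print("largest/smallest class ratio:", max(counts.values()) // min(counts.values()))
```

The classes make up 50%, 35%, 14% and 1% of the data, and the largest class is 50 times the size of the smallest.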

The OASIS-2 dataset consists of 150 subjects, both men and women, aged 60 to 96, with a total of 373 MRI sessions. Over the course of the study, 72 subjects were characterized as non-demented and 64 as demented; 51 of these 64 demented subjects had mild to moderate Alzheimer’s disease. Another 14 subjects were characterized as non-demented at first but as demented at a later visit. The OASIS dataset therefore has three classes: demented, non-demented, and converted. The MRI scans are provided as .nifti.hdr files and are side views (sagittal scans) of the brain. A CSV file accompanying the dataset contains the following information:

- EDUC (years of education)
- SES (socioeconomic status)
- MMSE (Mini-Mental State Examination score)
- CDR (Clinical Dementia Rating)
- eTIV (estimated total intracranial volume)
- nWBV (normalized whole-brain volume)
- ASF (atlas scaling factor)
- MR delay

A few sample images from the OASIS dataset are shown in Fig. 5.

5 Results and Discussion

5.1 OASIS Dataset

Various models were evaluated on the OASIS-2 dataset, using both the complete set of images and a reduced dataset.

5.1.1 Full Features vs. Segmented Features

Figure 6 compares the full-feature and segmented-feature approaches on the OASIS dataset images. The x-axis shows each model and the y-axis the corresponding accuracy. The comparison is motivated by the link between dementia and the size of the hippocampus: clinically, the smaller the hippocampus, the more severe the dementia. The region containing the hippocampus was therefore segmented and tested separately.

To improve these results, discrete wavelet transforms were used as a pre-processing technique. The trends observed in the graphs indicate that, on average, training on the segmented hippocampus region of the brain MRI image gives higher accuracy than training on the entire image.
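The text does not specify which wavelet or decomposition level was used. As one possible reading, the sketch below applies a single level of the Haar wavelet, implemented directly in NumPy. With PyWavelets, the corresponding call would be `pywt.dwt2(img, "haar")`, which returns `(cA, (cH, cV, cD))`, although its sign and naming conventions may differ from the ones here. Keeping only the approximation band as features is also an assumption.

```python
import numpy as np


def haar_dwt2(img):
    """One level of an orthonormal 2D Haar wavelet transform.

    Returns the approximation band and three detail bands, each half the size
    of the input. Band naming and sign conventions vary between libraries.
    """
    x = np.asarray(img, dtype=float)
    x = x[: x.shape[0] // 2 * 2, : x.shape[1] // 2 * 2]  # drop an odd last row/column
    a = x[0::2, 0::2]  # top-left pixel of each 2 x 2 block
    b = x[0::2, 1::2]  # top-right
    c = x[1::2, 0::2]  # bottom-left
    d = x[1::2, 1::2]  # bottom-right
    approx = (a + b + c + d) / 2.0       # local average (low-pass in both directions)
    row_detail = (a + b - c - d) / 2.0   # top row minus bottom row
    col_detail = (a - b + c - d) / 2.0   # left column minus right column
    diag_detail = (a - b - c + d) / 2.0  # diagonal difference
    return approx, (row_detail, col_detail, diag_detail)


# Usage on a hypothetical 80 x 100 hippocampus crop scaled to 0-1
rng = np.random.default_rng(0)
crop = rng.integers(0, 256, size=(80, 100)).astype(float) / 255.0
approx, details = haar_dwt2(crop)
features = approx.reshape(-1)  # e.g. keep only the approximation band
print(approx.shape, features.size)  # (40, 50) 2000
```

Because the transform is orthonormal, the total energy (sum of squared values) of the four bands equals that of the input image.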

5.1.2 Complete Dataset vs. Reduced Dataset

This comparison was possible because the OASIS dataset is distributed in two parts, Part A and Part B. Part A contains about 209 MRI images, and this portion of the dataset was used for training. The trends in Fig. 7 show that, on average, the reduced dataset yields higher accuracy than the complete dataset. The highest overall accuracy, 94%, was obtained on the reduced dataset with the XGBoost model. This may be due to overfitting.

5.2 Kaggle Dataset

In addition to the multilayer and CNN models described in Sect. 3.1, several other multilayer models were tested. Their results are listed in Table 5.

After these model configurations were tested, the dataset was oversampled, and the same model reached a final accuracy of 94.45% after 28 epochs. The epoch vs. accuracy and epoch vs. loss plots are shown in Fig. 8, and Table 6 shows the classification report for the multilayer model.

The CNN model achieved an accuracy of 94.1%, and its accuracy and loss over increasing epochs are shown in Fig. 8. ResNet50 reached an accuracy of 75.64%. The proposed approach was also compared with an existing work [12] in terms of precision, recall, F1 score, and accuracy, since both works use the same Kaggle dataset for training and testing. Table 7 shows that the proposed system achieves higher precision, recall, and F1 score than the existing work [12].
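Precision, recall, and F1 score, as used in the comparison above, can be computed from a confusion matrix as sketched below. The counts are invented for illustration and are not results from this paper. scikit-learn's `classification_report` also reports macro and support-weighted averages of these per-class values.

```python
import numpy as np


def classification_metrics(cm):
    """Per-class precision, recall, F1 and overall accuracy from a confusion matrix.

    cm[i, j] counts samples whose true class is i and predicted class is j.
    """
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    predicted = cm.sum(axis=0)  # column sums: how often each class was predicted
    actual = cm.sum(axis=1)     # row sums: true samples per class
    precision = np.divide(tp, predicted, out=np.zeros_like(tp), where=predicted > 0)
    recall = np.divide(tp, actual, out=np.zeros_like(tp), where=actual > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=np.zeros_like(tp), where=denom > 0)
    accuracy = tp.sum() / cm.sum()
    return precision, recall, f1, accuracy


# Made-up two-class counts for illustration only
cm = [[8, 2],
      [1, 9]]
precision, recall, f1, accuracy = classification_metrics(cm)
print(precision.round(3), recall.round(3), f1.round(3), accuracy)
print("macro F1:", f1.mean().round(3))
```

For class 0 in this example, F1 = 2·TP / (2·TP + FP + FN) = 16 / 19.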

The proposed approach on the OASIS dataset was also compared with existing approaches. Table 8 shows that the proposed approach achieves higher accuracy than most other approaches. Entries marked NA (not available) indicate metrics that the existing works did not report. The existing approaches [10, 13, 15, 17] achieved marginally higher accuracy. However, the most notable aspect of the proposed approach is that it reached 94% accuracy using only half of the dataset for training. This indicates that training time can be reduced substantially with only a small loss in accuracy.

6 Conclusion

As part of this research, two datasets were analyzed: the four-class Kaggle image dataset and the OASIS-2 MRI and demographic dataset.

The Kaggle dataset classifies brain MRI images into four classes: non-demented, very mildly demented, mildly demented, and moderately demented. The focus for this dataset was deep learning. Several multilayer neural networks with varying numbers of epochs and batch sizes were tested, and the images were oversampled to expand the training set.

An experimental study was also carried out on the OASIS-2 dataset, using the images for training and analysis and the CSV demographic data as labels. The images were first extracted from the nifti files. During pre-processing, a single 2D slice was taken from each 3D volume and then resized to make it suitable for training. Training was conducted on both the complete dataset and the reduced dataset. The central idea of this study is to segment the hippocampus, a portion of the brain whose shrinkage is closely associated with Alzheimer’s disease. The images were oversampled to increase the volume of training data. Several machine learning models were then applied to the segmented images and the full images of each half of the dataset and of the complete dataset: SVM, KNN, XGBoost, Random Forest, Extra Trees, Gradient Boosting, AdaBoost, and Multilayer Perceptron. GridSearchCV was used to tune the models where appropriate.

On the Kaggle dataset, the highest accuracy achieved was 94.45%, obtained with the multilayer model trained on the oversampled data. On the OASIS dataset, the proposed method achieved 94% accuracy using only 50% of the available data for training. Segmenting the hippocampus region was also observed to yield higher classification accuracy than using entire images. The highest accuracy was obtained by applying the XGBoost algorithm to the hippocampus-segmented images of the reduced dataset.

References

National Institute on Aging: Alzheimer’s disease fact sheet. https://www.nia.nih.gov/health/alzheimers-disease-fact-sheet

Basheer, S.; Bhatia, S.; Sakri, S.B.: Computational modeling of dementia prediction using deep neural network: analysis on OASIS dataset. IEEE Access 9, 42449–42462 (2021)

Vinayak, S.S.; Shahina, E.A.; Nayeemulla Khan, A.: Dementia prediction on OASIS dataset using supervised and ensemble learning techniques. Int. J. Eng. Adv. Technol. 10(1), 244–254 (2020)

Islam, J.; Zhang, Y.: An ensemble of deep convolutional neural networks for Alzheimer's disease detection and classification. arXiv preprint arXiv:1712.01675 (2017)

Battineni, G.; Chintalapudi, N.; Amenta, F.; Traini, E.: Deep learning type convolution neural network architecture for multiclass classification of alzheimer's disease. In: BIOIMAGING (pp. 209–215) (2021)

Ullah, H.T.; Onik, Z.; Islam, R.; Nandi, D.: Alzheimer's disease and dementia detection from 3D brain MRI data using deep convolutional neural networks. In: 2018 3rd International Conference for Convergence in Technology (I2CT) (pp. 1–3). IEEE (2018)

Herrera, L.J.; Rojas, I.; Pomares, H.; Guillén, A.; Valenzuela, O.; Baños, O.: Classification of MRI images for Alzheimer's disease detection. In: 2013 International Conference on Social Computing (pp. 846–851). IEEE (2013)

Islam, J.; Zhang, Y.: Early diagnosis of Alzheimer's disease: A neuroimaging study with deep learning architectures. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops (pp. 1881–1883) (2018)

Al-Khuzaie, F.E.; Bayat, O.; Duru, A.D.: Diagnosis of Alzheimer’s disease using 2D MRI slices by convolutional neural network. Appl. Bionics Biomech. (2021)

Islam, J.; Zhang, Y.: Brain MRI analysis for Alzheimer’s disease diagnosis using an ensemble system of deep convolutional neural networks. Brain Informatics 5(2), 1–14 (2018)

Yildirim, M.; Cinar, A.C.: Classification of Alzheimer’s Disease MRI images with CNN based hybrid method. Ingénierie des Systèmes d Inf. 25(4), 413–418 (2020)

Khagi, B.; Lee, B.; Pyun, J.Y.; Kwon, G.R.: CNN Models Performance Analysis on MRI images of OASIS dataset for distinction between Healthy and Alzheimer's patient. In: 2019 International Conference on Electronics, Information, and Communication (ICEIC) (pp. 1–4). IEEE (2019)

Baglat, P.; Salehi, A.W.; Gupta, A.; Gupta, G.: Multiple machine learning models for detection of Alzheimer’s disease using OASIS dataset. In: International Working Conference on Transfer and Diffusion of IT (pp. 614–622). Springer, Cham (2020)

Hussain, E.; Hasan, M.; Hassan, S.Z.; Azmi, T.H.; Rahman, M.A.; Parvez, M.Z.: Deep learning based binary classification for alzheimer’s disease detection using brain mri images. In: 2020 15th IEEE Conference on Industrial Electronics and Applications (ICIEA) (pp. 1115–1120). IEEE (2020)

Yagis, E.; Citi, L.; Diciotti, S.; Marzi, C.; Atnafu, S.W.; De Herrera, A.G.S.: 3d Convolutional neural networks for diagnosis of alzheimer's disease via structural mri. In: 2020 IEEE 33rd International Symposium on Computer-Based Medical Systems (CBMS) (pp. 65–70). IEEE (2020)

Jabason, E.; Ahmad, M.O.; Swamy, M.N.S.: Classification of Alzheimer’s disease from MRI data using an ensemble of hybrid deep convolutional neural networks. In: 2019 IEEE 62nd International Midwest Symposium on Circuits and Systems (MWSCAS) (pp. 481–484). IEEE (2019)

Puente-Castro, A.; Fernandez-Blanco, E.; Pazos, A.; Munteanu, C.R.: Automatic assessment of Alzheimer’s disease diagnosis based on deep learning techniques. Comput. Biol. Med. 120, 103764 (2020)

Fong, J.X.; Shapiai, M.I.; Tiew, Y.Y.; Batool, U.; Fauzi, H.: Bypassing MRI Pre-processing in Alzheimer's Disease Diagnosis using Deep Learning Detection Network. In: 2020 16th IEEE International Colloquium on Signal Processing & Its Applications (CSPA) (pp. 219–224). IEEE (2020)

Venugopalan, J.; Tong, L.; Hassanzadeh, H.R.; Wang, M.D.: Multimodal deep learning models for early detection of Alzheimer’s disease stage. Sci. Rep. 11(1), 1–13 (2021)

Janghel, R.R.; Rathore, Y.K.: Deep convolution neural network based system for early diagnosis of Alzheimer’s disease. IRBM 42(4), 258–267 (2021)

Murugan, S.; Venkatesan, C.; Sumithra, M.G.; Gao, X.Z.; Elakkiya, B.; Akila, M.; Manoharan, S.: DEMNET: a deep learning model for early diagnosis of Alzheimer diseases and dementia from MR images. IEEE Access 9, 90319–90329 (2021)

Jha, D.; Alam, S.; Pyun, J.Y.; Lee, K.H.; Kwon, G.R.: Alzheimer’s disease detection using extreme learning machine, complex dual tree wavelet principal coefficients and linear discriminant analysis. J. Med. Imag. Health Inf. 8(5), 881–890 (2018)

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Balasundaram, A., Srinivasan, S., Prasad, A. et al. Hippocampus Segmentation-Based Alzheimer’s Disease Diagnosis and Classification of MRI Images. Arab J Sci Eng 48, 10249–10265 (2023). https://doi.org/10.1007/s13369-022-07538-2
