Abstract

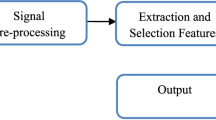

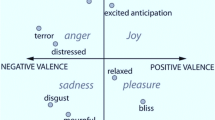

Speech emotion recognition continues to attract considerable research, especially in mixed-language scenarios. Here, we show that emotion is language dependent and that enhanced emotion recognition systems can be built when the language is known. We propose a two-stage emotion recognition system that first identifies the language and then applies a dedicated language-dependent recognition system to identify the type of emotion. The system accurately recognizes four main types of emotion, namely neutral, happy, angry, and sad, which are widely used in practical setups. To keep the computational complexity low, we identify the language using a feature vector consisting of energies from a basic wavelet decomposition. A hidden Markov model (HMM) then tracks the changes of this vector to identify the language, achieving recognition accuracy close to 100%. Once the language is identified, a set of speech processing features, including pitch and MFCCs, is used with a neural network (NN) architecture to identify the emotion type. The results show that identifying the language first can substantially improve the overall accuracy in identifying emotions. The overall accuracy achieved with the proposed system exceeded 93%. To test the robustness of the proposed methodology, we also used a Gaussian mixture model (GMM) for both language identification and emotion recognition. Our proposed HMM-NN approach performed better than the GMM-based approach. More importantly, we tested the proposed algorithm with six emotions; the results showed that its overall accuracy remains excellent, while the performance of the GMM-based approach deteriorates substantially. It is worth noting that the performance we achieved is close to that attained by single-language emotion recognition systems and far exceeds that of recognition systems without language identification (around 60%). The work shows the strong correlation between language and type of emotion, and can be further extended to other scenarios, including gender-based, facial expression-based, and age-based emotion recognition.
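The two-stage flow described in the abstract can be sketched roughly as follows. This is a minimal illustration, not the authors' implementation: the Haar wavelet, the number of decomposition levels, and the names `subband_energies`, `recognize_emotion`, `identify_language`, and `emotion_models` are all assumptions made for the example. The abstract only states that the language features are energies from a basic wavelet decomposition, that an HMM identifies the language, and that a language-dependent NN then classifies the emotion from features such as pitch and MFCCs. The HMM and NN are represented here by caller-supplied callables.

```python
import math
from typing import Callable, Dict, List, Sequence, Tuple


def haar_step(signal: Sequence[float]) -> Tuple[List[float], List[float]]:
    """One level of the orthonormal Haar transform: (approximation, detail)."""
    s = 1.0 / math.sqrt(2.0)
    pairs = range(0, len(signal) - 1, 2)
    approx = [(signal[i] + signal[i + 1]) * s for i in pairs]
    detail = [(signal[i] - signal[i + 1]) * s for i in pairs]
    return approx, detail


def subband_energies(frame: Sequence[float], levels: int = 3) -> List[float]:
    """Energy of each detail band, followed by the final approximation band.

    The wavelet family (Haar) and `levels` are assumptions for illustration.
    """
    energies: List[float] = []
    approx = list(frame)
    for _ in range(levels):
        approx, detail = haar_step(approx)
        energies.append(sum(d * d for d in detail))
    energies.append(sum(a * a for a in approx))
    return energies


def recognize_emotion(
    frames: Sequence[Sequence[float]],
    identify_language: Callable[[List[List[float]]], str],
    emotion_models: Dict[str, Callable[[Sequence[float]], str]],
    emotion_features: Sequence[float],
) -> Tuple[str, str]:
    """Stage 1: pick the language from the sequence of energy vectors.
    Stage 2: route the emotion features to that language's classifier.

    `identify_language` stands in for the HMM and each entry of
    `emotion_models` for a language-dependent NN (both hypothetical).
    """
    energy_sequence = [subband_energies(f) for f in frames]
    language = identify_language(energy_sequence)
    return language, emotion_models[language](emotion_features)
```

In use, `identify_language` would wrap a trained sequence model that scores the energy vectors over time, and `emotion_models` would hold one trained classifier per language. The point of the sketch is only the routing: the emotion classifier is chosen after, and conditioned on, the language decision.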

Acknowledgements

The work presented in this paper has been supported by KFUPM NSTIP Project no. 12-Bio2366-04.

Cite this article

Deriche, M., Abo absa, A.H. A Two-Stage Hierarchical Bilingual Emotion Recognition System Using a Hidden Markov Model and Neural Networks. Arab J Sci Eng 42, 5231–5249 (2017). https://doi.org/10.1007/s13369-017-2742-5

DOI: https://doi.org/10.1007/s13369-017-2742-5