Abstract

Categorisation models of metaphor interpretation are based on the premiss that categorisation statements (e.g., ‘Wilma is a nurse’) and comparison statements (e.g., ‘Betty is like a nurse’) are fundamentally different types of assertion. Against this assumption, we argue that the difference is merely a quantitative one: ‘x is a y’ unilaterally entails ‘x is like a y’, and therefore the latter is merely weaker than the former. Moreover, if ‘x is like a y’ licenses the inference that x is not a y, then that inference is a scalar implicature. We defend these claims partly on theoretical grounds and partly on the basis of experimental evidence. A suite of experiments indicates both that ‘x is a y’ unilaterally entails that x is like a y, and that in several respects the non-y inference behaves exactly as one should expect from a scalar implicature. We discuss the implications of our view of categorisation and comparison statements for categorisation models of metaphor interpretation.

Notes

Following this literature, we understand similes as figurative comparisons (e.g., ‘My love is like a rose’), which are different from literal comparisons (e.g., ‘My love is like her mother’). Seen this way, similes are the comparison counterpart of nominal metaphors (e.g., ‘My love is a rose’), which by definition involve a category violation.

It is worth noting that not only metaphors but also brand names can become polysemous in this way. For example, the name ‘Kleenex’ can be used to refer to a specific type of paper tissue or to paper tissues in general (see Glucksberg and Keysar 1990 and Sperber and Wilson 2008, for a discussion of this and other examples). It is the contention of categorisation models that this kind of language use is ubiquitous in everyday language (including sign languages) and therefore does not require a special interpretation mechanism that distinguishes metaphorical language from literal language.

A related argument has been made by Kennedy and Chiappe (1999), who argued that literal categorisation statements (e.g., ‘That is an apple’) are used when the two concepts share many common properties, while comparison statements (e.g., ‘That is like an apple’) are used when they share few common properties.

According to our reviewers, sentences like (i) are problematic for our account:

(i) Nixon was a Quaker, but he was not like a Quaker.

(For the benefit of any readers under 40 that may have wandered into this article: Richard Milhous Nixon was the 37th president of the United States, and he was in fact a Quaker.) Assuming that (i) is felicitous at all (and we are not entirely convinced that it is; cf. ‘Nixon was a Quaker, but he did not behave like a Quaker’), it may seem to defy our hypothesis that ‘Nixon was a Quaker’ entails ‘Nixon was like a Quaker’, because the entailment would render (i) contradictory. But rather than giving rise to a contradiction, (i) seems to imply that Nixon was not like an ordinary or ‘normal’ Quaker. This suggests that the two occurrences of ‘a Quaker’ denote slightly different concepts, which is not uncommon in contrastive environments (Geurts 1998), as (ii) illustrates:

(ii) That’s not a car, it’s a Ferrari.

This kind of contrast appears to be required in order to support the intended meaning of (i), which we take to be that Nixon was by definition a Quaker, but was unlike an archetypal Quaker.

Note that all these examples are marked in the linguistic sense of the word, and therefore would typically be used in somewhat special circumstances; for example, to correct another speaker. However, this does not affect our argument in any way (see Chiappe and Kennedy 2000 for a discussion of the use of metaphors to correct similes).

Materials were presented in a quasi-random order so that participants had to verify ‘This one is a tiger’ before the corresponding comparison statement. Thus we ensured that participants had correctly identified the animal in question, and did not agree to ‘This one is like a tiger’ because they thought it was a similar animal, though not a tiger.

As pointed out to us by one of the reviewers for this journal, the fact that agreement rates in the B-condition were quite high despite the negative belief bias is additional evidence for our analysis. See Geurts (2010: 157–158) for discussion.

Note that the preference for the stronger statement (if true) is a prerequisite for, but not the same thing as, the implicature that the stronger statement is false. Katsos and Bishop (2011) argue, correctly in our view, that some experimental studies have mixed these two things up, and show that they are dissociated in 5- and 6-year-old children. Relatedly, in order to explain why ‘x is like a y’ is infelicitous if x is known to be a y, we need not assume that a not-y implicature is derived.
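The distinction drawn in this note can be made explicit with the standard neo-Gricean reconstruction of a quantity implicature, given here as a sketch in the style of the quantity-implicature literature cited above (e.g., Geurts 2010). The labels S and W and the belief operator Bel_sp (what the speaker believes) are introduced only for this sketch. The preference for the stronger statement is what licenses step (2); the not-y implicature in step (4) only follows once the competence assumption in step (3) is added.

```latex
\begin{aligned}
&\text{Let } S = \text{``$x$ is a $y$''},\quad W = \text{``$x$ is like a $y$''},\quad S \models W,\quad W \not\models S.\\
&(1)\ \text{The speaker asserts } W.\\
&(2)\ S \text{ would have been more informative (and, by assumption, relevant), so } \neg\,\mathrm{Bel}_{sp}(S).\\
&(3)\ \text{Competence assumption: } \mathrm{Bel}_{sp}(S) \lor \mathrm{Bel}_{sp}(\neg S).\\
&(4)\ \text{From (2) and (3): } \mathrm{Bel}_{sp}(\neg S), \text{ i.e., the speaker takes $x$ not to be a $y$.}
\end{aligned}
```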

Contrary to the theoretical intuition that ‘An apple is like a fruit’ is anomalous, the results of various on-line experiments revealed that comparisons to a superordinate are verified equally often and significantly faster than felicitous comparisons such as ‘A pear is like an apple’.

References

Aristotle 1984. The complete works of Aristotle: The revised Oxford translation. J. Barnes (Ed.) Princeton: Princeton University Press.

Barnden, J.A. 2012. Metaphor and simile: fallacies concerning comparison, ellipsis, and inter-paraphrase. Metaphor and Symbol 27: 265–282.

Bowdle, B.F., and D. Gentner. 2005. The career of metaphor. Psychological Review 112: 193–216.

Buhrmester, M., T. Kwang, and S.D. Gosling. 2011. Amazon’s mechanical Turk: A new source of inexpensive, yet high-quality, data? Perspectives on Psychological Science 6: 3–5.

Carston, R. 2002. Thoughts and utterances: The pragmatics of explicit communication. Oxford: Blackwell Publishing.

Carston, R., and C. Wearing. 2011. Metaphor, hyperbole and simile: A pragmatic approach. Language and Cognition 3: 283–312.

Chiappe, D., and J. Kennedy. 1999. Aptness predicts preference for metaphors or similes, as well as recall bias. Psychonomic Bulletin and Review 6: 668–676.

Chiappe, D., and J. Kennedy. 2000. Are metaphors elliptical similes? Journal of Psycholinguistic Research 29: 371–398.

Chiappe, D.L., and J. Kennedy. 2001. Literal bases for metaphor and simile. Metaphor and Symbol 16: 249–276.

———. 2003a. Aptness is more important than comprehensibility in preference for metaphors and similes. Poetics 31: 51–68.

———. 2003b. Reversibility, aptness, and the conventionality of metaphors and similes. Metaphor and Symbol 18: 85–105.

Crump, M.J., J.V. McDonnell, and T.M. Gureckis. 2013. Evaluating Amazon’s mechanical Turk as a tool for experimental behavioral research. PloS One 8: e57410.

Evans, J.S., S.E. Newstead, and R.M. Byrne. 1993. Human Reasoning: the Psychology of Deduction. Hove, East Sussex: Lawrence Erlbaum.

Fogelin, R.J. 1988. Figuratively speaking. New Haven, CT: Yale University Press.

Gentner, D., B. Bowdle, P. Wolff, and C. Boronat. 2001. Metaphor is like analogy. In The analogical mind: Perspectives from cognitive science, eds. D. Gentner, K.J. Holyoak, and B.N. Kokinov. Cambridge, MA: MIT Press.

Geurts, B. 1998. The mechanisms of denial. Language 74: 274–307.

———. 2010. Quantity implicatures. Cambridge: Cambridge University Press.

Geurts, B., and N. Pouscoulous. 2009. No scalar inferences under embedding. In Presuppositions and implicatures, eds. P. Egré and G. Magri. MIT Working Papers in Linguistics.

Geurts, B., and B. van Tiel. 2013. Embedded scalars. Semantics and Pragmatics 6: 1–37.

Glucksberg, S. 2001. Understanding figurative language: From metaphors to idioms. New York, NY: Oxford University Press.

———. 2003. The psycholinguistics of metaphor. Trends in Cognitive Sciences 7: 92–96.

———. 2008. How metaphors create categories—quickly. In The Cambridge handbook of metaphor and thought, ed. R.W. Gibbs Jr., 67–83. Cambridge, UK: Cambridge University Press.

———. 2011. Understanding metaphors: the paradox of unlike things compared. In Affective computing and sentiment analysis: Emotion, metaphor and terminology, ed. K. Ahmad, 1–12. New York, NY: Springer.

Glucksberg, S., and C. Haught. 2006a. On the relation between metaphor and simile: when comparison fails. Mind & Language 21: 360–378.

———. 2006b. Can Florida become like the next Florida? when metaphoric comparisons fail. Psychological Science 17: 935–938.

Glucksberg, S., and B. Keysar. 1990. Understanding metaphorical comparisons: beyond similarity. Psychological Review 97: 3–18.

———. 1993. How metaphors work. In Metaphor and thought, 2nd edn, ed. A. Ortony, 401–424. Cambridge: Cambridge University Press.

Goodman, N.D., and A. Stuhlmüller. 2013. Knowledge and implicature: modeling language understanding as social cognition. Topics in Cognitive Science 5: 173–184.

Grice, H.P. 1975. Logic and conversation. In Syntax and semantics, vol 3, eds. P. Cole, and J. Morgan, 41–58. New York: Academic Press.

Haught, C. 2013a. A tale of two tropes: how metaphor and simile differ. Metaphor and Symbol 28: 254–274.

———. 2013b. Spain is not Greece: How metaphors are understood. Journal of Psycholinguistic Research. Advance online publication. doi:10.1007/s10936-013-9258-2

Horn, L.R. 1989. A natural history of negation. Chicago: University of Chicago Press.

Israel, M., J. Harding, and V. Tobin. 2004. On simile. In Language, Culture, and Mind, eds. M. Achard, and S. Kemmer, 123–135. Stanford: CSLI Publications.

Johnson, A.T. 1996. Comprehension of metaphors and similes: A reaction time study. Metaphor and Symbol 11: 145–159.

Katsos, N., and D.V. Bishop. 2011. Pragmatic tolerance: implications for the acquisition of informativeness and implicature. Cognition 120: 67–81.

Kennedy, J.M., and D.L. Chiappe. 1999. What makes a metaphor stronger than a simile? Metaphor and Symbol 14: 63–69.

Keysar, B. 1989. On the functional equivalence of literal and metaphorical interpretations in discourse. Journal of Memory and Language 28: 375–385.

Kintsch, W. 1974. The representation of meaning in memory. New York: Academic Press.

Matsumoto, Y. 1995. The conversational condition on Horn scales. Linguistics and Philosophy 18: 21–60.

Miller, G.A. 1979. Images and models: similes and metaphors. In Metaphor and thought, ed. A. Ortony, 202–250. New York, NY: Cambridge University Press.

O’Donoghue, J. 2009. Is a metaphor (like) a simile? differences in meaning, effects and processing. UCL Working Papers in Linguistics 21: 125–149.

Ortony, A. 1979. Beyond literal similarity. Psychological Review 86: 161–180.

Pierce, R.S., and D.L. Chiappe. 2008. The roles of aptness, conventionality, and working memory in the production of metaphors and similes. Metaphor and Symbol 24: 1–19.

Rubio-Fernández, P. 2007. Suppression in metaphor interpretation: differences between meaning selection and meaning construction. Journal of Semantics 24: 345–371.

Sauerland, U. 2004. Scalar implicatures in complex sentences. Linguistics and Philosophy 27: 367–391.

Schnoebelen, T., and V. Kuperman. 2010. Using Amazon mechanical Turk for linguistic research. Psihologija 43: 441–464.

Searle, J. 1979. Metaphor. In Metaphor and thought, ed. A. Ortony, 92–123. New York, NY: Cambridge University Press.

Soames, S. 1982. How presuppositions are inherited: a solution to the projection problem. Linguistic Inquiry 13: 483–545.

Sperber, D., and D. Wilson. 2008. A deflationary account of metaphor. In The Cambridge handbook of metaphor and thought, ed. R.W. Gibbs Jr., 84–105. Cambridge, UK: Cambridge University Press.

Sprouse, J. 2011. A validation of Amazon mechanical Turk for the collection of acceptability judgments in linguistic theory. Behavior Research Methods 43: 155–167.

Stern, J. 2000. Metaphor in context. Cambridge, MA and London, UK: Bradford Books, MIT Press.

Utsumi, A. 2007. Interpretive diversity explains metaphor-simile distinction. Metaphor and Symbol 22: 291–312.

van Rooij, R., and K. Schulz. 2004. Exhaustive interpretation of complex sentences. Journal of Logic, Language and Information 13: 491–519.

van Tiel, B. 2014a. Embedded scalars and typicality. Journal of Semantics 31: 147–177.

———. 2014b. Quantity matters: Implicatures, typicality and truth. Doctoral dissertation, University of Nijmegen.

Wearing, C. 2014. Interpreting novel metaphors. International Review of Pragmatics 6: 78–102.

Wilson, D., and R. Carston. 2007. A unitary approach to lexical pragmatics: relevance, inference and ad hoc concepts. In Advances in pragmatics, ed. N. Burton-Roberts, 230–260. Basingstoke: Palgrave Macmillan.

Zharikov, S., and D. Gentner. 2002. Why do metaphors seem deeper than similes? In Proceedings of the 24th annual meeting of the Cognitive Science Society, 976–981. Fairfax, VA: Cognitive Science Society.

Appendix: Experimental Report

1.1 Experiment 1a

1.1.1 Method

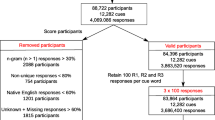

Participants

50 participants were recruited through Mechanical Turk.

Materials and Procedure

We constructed a total of 108 slides including either a comparison or a categorisation statement (e.g., ‘This one is like a moirk’). The statement referred to a figure that was presented on the same slide (e.g., a triangle) and included a made-up category that was also defined on the same slide. The definitions consisted of three properties of each figure: shape, colour and border type (e.g., ‘Moirk: circle, blue, thick border’). The figure on the slide could either share no property with the defined category (Similarity Level 0), one property (Similarity Level 1), two properties (Similarity Level 2) or all three properties (Similarity Level 3).

The critical items consisted of 40 pairs of slides, each pair including a comparison statement and a categorisation statement, with 10 pairs for each of the 4 possible degrees of similarity (i.e., Levels 0–3). An extra 20 filler items included a true categorisation statement (Level 3), and another 8 items, one of each type, were used as warm-up trials. The warm-up trials were presented in the same random order to all participants, while the critical and filler items were randomized individually.
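To make the Similarity Level manipulation concrete, the following Python sketch scores a figure against a made-up category definition by counting shared properties, and tallies the slide types described above (80 critical, 20 filler and 8 warm-up slides). The `Figure` class, the `similarity_level` helper and the attribute values are hypothetical illustrations, not the original materials.

```python
from dataclasses import dataclass
from itertools import product


# Hypothetical representation of a figure or a made-up category definition.
@dataclass(frozen=True)
class Figure:
    shape: str
    colour: str
    border: str


def similarity_level(figure: Figure, category: Figure) -> int:
    """Number of properties (0-3) that the figure shares with the category definition."""
    return sum(
        getattr(figure, attr) == getattr(category, attr)
        for attr in ("shape", "colour", "border")
    )


LEVELS = range(4)        # Similarity Levels 0-3
PAIRS_PER_LEVEL = 10     # each pair = one comparison slide + one categorisation slide

critical = [
    (stype, level)
    for level in LEVELS
    for _ in range(PAIRS_PER_LEVEL)
    for stype in ("comparison", "categorisation")
]
fillers = [("categorisation", 3)] * 20                              # true categorisation statements
warmups = list(product(("comparison", "categorisation"), LEVELS))   # one of each type

moirk = Figure("circle", "blue", "thick")   # 'Moirk: circle, blue, thick border'
print(similarity_level(Figure("triangle", "blue", "thick"), moirk))
print(len(critical), len(fillers), len(warmups))
```

With the example definition, a blue triangle with a thick border shares colour and border type with ‘moirk’ and so falls under Similarity Level 2.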

Participants were asked to verify the statement that appeared at the top of each slide in relation to the figure and the definition that were presented underneath the statement. Participants were given a true/false choice to respond.

1.1.2 Results

The mean proportions of true responses in each condition are plotted in Fig. 2. The overall pattern of results suggests that participants did not derive a non-y inference in interpreting the comparison statements.

We fitted a logistic mixed-effects model, positing fixed effects of Statement Type and Similarity Level, and random effects of Participant and Item, as well as a random slope of Statement Type by Participant. (Models with additional random slopes did not converge.) This disclosed significant main effects of Statement Type and Similarity Level, and a significant interaction (p < 0.001, model comparison).

Follow-up pairwise comparisons at different similarity levels were implemented using logistic mixed-effects models, positing a fixed effect of Statement Type and random effects of Participant and Item, as well as a random slope of Statement Type by Participant. There was a significant main effect of Statement Type in the Level 2 condition (β = 3.22, SE = 0.67, Z = 4.80, p < 0.001), but no significant main effect in the Level 1 condition (β = 0.70, SE = 3.34, Z = 0.21, p = 0.833) or the Level 3 condition (β = 13.3, SE = 14.3, Z = 0.93, p = 0.352). The Level 2 effect remains significant (p < 0.001) when corrected for multiple comparisons.
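The reported Z values appear to be Wald statistics (β divided by its standard error), as is usual in summaries of logistic mixed-effects models. Assuming that, the sketch below recomputes Z and the two-sided p-value from the rounded β and SE values reported above; small differences in the last digit are expected from rounding. The model fitting itself is not reproduced, and `wald_test` is a hypothetical helper name.

```python
import math


def wald_test(beta: float, se: float) -> tuple[float, float]:
    """Wald z statistic and two-sided p-value for a single model coefficient."""
    z = beta / se
    p = math.erfc(abs(z) / math.sqrt(2))   # equals 2 * (1 - Phi(|z|))
    return z, p


# Statement Type coefficients reported for Experiment 1a, by Similarity Level.
reported = {"Level 1": (0.70, 3.34), "Level 2": (3.22, 0.67), "Level 3": (13.3, 14.3)}
results = {level: wald_test(beta, se) for level, (beta, se) in reported.items()}
for level, (z, p) in results.items():
    print(f"{level}: z = {z:.2f}, p = {p:.4f}")
```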

Looking at individual performances, 25 of the 50 people who took part in the task adopted the categorisation strategy by default. That is, they responded true in all cases of maximal similarity and false in all Similarity Level 0–2 trials, regardless of the type of statement. Removing those 25 participants from the analyses did not greatly change the overall pattern of results (see Fig. 9 below). There was a 9 % increase in the proportion of true responses in the Level 1 condition and a 37 % increase in the Level 2 condition, but the mean proportion of true responses in the Level 3 condition was still .90 (decreasing only 5 % from the overall analyses). Indeed, only 2 of the remaining participants systematically responded false in the Comparison/Level 3 condition.

Fig. 9 Mean proportions of true responses to comparison statements from those participants in Experiment 1a who did not adopt the categorisation strategy by default and from Experiment 1b, which included only comparison statements (error bars: SE; asterisk: p < .03)
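The per-participant classification used in Experiment 1a (true on every maximal-similarity trial, false on every Level 0–2 trial, for both statement types) can be sketched as follows. The sketch assumes each participant's data is a list of (statement type, similarity level, response) tuples; the function names and the two participant records are hypothetical toy data, not the experimental data.

```python
from collections import defaultdict

Trial = tuple[str, int, bool]   # (statement type, similarity level 0-3, responded 'true')


def uses_categorisation_strategy(trials: list[Trial]) -> bool:
    """True if the participant answered 'true' exactly on the Level 3 trials,
    whatever the statement type."""
    return all(resp == (level == 3) for _stype, level, resp in trials)


def proportion_true_by_level(participants: dict[str, list[Trial]],
                             statement_type: str = "comparison") -> dict[int, float]:
    """Mean proportion of 'true' responses per similarity level for one statement type."""
    counts = defaultdict(lambda: [0, 0])   # level -> [true responses, trials]
    for trials in participants.values():
        for stype, level, resp in trials:
            if stype == statement_type:
                counts[level][0] += resp
                counts[level][1] += 1
    return {level: t / n for level, (t, n) in sorted(counts.items())}


# Hypothetical toy data for two participants.
participants = {
    "p1": [("comparison", 3, True), ("comparison", 2, False),
           ("categorisation", 3, True), ("categorisation", 1, False)],
    "p2": [("comparison", 3, True), ("comparison", 2, True),
           ("categorisation", 3, True), ("categorisation", 2, False)],
}
default = {pid for pid, trials in participants.items() if uses_categorisation_strategy(trials)}
remaining = {pid: trials for pid, trials in participants.items() if pid not in default}
print(default, proportion_true_by_level(remaining))
```

Excluding the default categorisers and recomputing the comparison proportions corresponds to the reanalysis reported for Experiment 1a.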

1.2 Experiment 1b

Given that half of the participants in Experiment 1a applied the same strategy in the Categorisation and Comparison conditions (i.e., responded true only in cases of maximal similarity), Experiment 1b tried to determine whether the results of the Comparison/Level 3 condition may have been skewed. More specifically, we wanted to determine whether participants may derive a non-y inference in interpreting comparison statements if they are not presented with categorisation statements in the same task.

1.2.1 Method

Participants

20 participants were recruited through Mechanical Turk.

Materials and procedure

The materials were those used in Experiment 1a for the Comparison condition. The procedure was the same as in the first experiment.

1.2.2 Results

One participant was eliminated because he had responded randomly. The mean proportions of true responses in each condition are plotted in Fig. 9. The overall pattern of results suggests that participants did not derive a non-y inference in interpreting the comparison statements in Experiment 1b, in line with what was observed in Experiment 1a.

Rather than increasing the proportion of true responses in the Similarity Level 1 and Level 2 conditions, presenting participants only with comparison statements resulted in comparable agreement rates in the Level 1 condition and significantly lower agreement rates in the Level 2 condition. More importantly, in the Similarity Level 3 condition, the results were comparable between the two experiments. In fact, participants were more prone to agreeing with the comparison statements when they were not presented with categorisation statements in the same task and none of the participants in Experiment 1b systematically rejected the comparison statements in the maximal-similarity condition (while 2 participants had done so in Experiment 1a). These results therefore suggest that the results of Experiment 1a were reliable and not an artefact of presenting participants with comparison and categorisation statements in the same task.

1.3 Experiment 2

1.3.1 Method

Participants

20 participants were recruited through Mechanical Turk.

Materials and Procedure

We constructed 80 slides including a picture of an animal and a description of the animal in a bubble (e.g., ‘This one is a wild animal’ referring to a tiger). The descriptions made up 40 pairs of categorisation and comparison statements about 10 well-known animals. We used 4 different types of categories: (1) superordinates (e.g., ‘wild animal’ for a tiger), (2) same category (e.g., ‘tiger’ for a tiger), (3) similar category (e.g., ‘lion’ for a tiger) and (4) dissimilar category (e.g., ‘bear’ for a tiger).

The materials were presented in the same quasi-random order to all participants. In order to avoid possible issues with the identification of the animals in the pictures, the categorisation version of each Same-Category item was presented before the comparison version (i.e., participants had to agree to ‘This one is a tiger’ before they had to decide on ‘This one is like a tiger’).
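One way to meet this ordering constraint (a single fixed order for all participants, with each Same-Category categorisation slide preceding its comparison counterpart) is a seeded shuffle followed by a repair step that swaps any Same-Category pair that came out in the wrong order. This is an assumed procedure, not necessarily the one the authors used. Only ‘tiger’ is named in the text, so the other nine animals and the function name `quasi_random_order` are hypothetical.

```python
import random

# Hypothetical animal list: only 'tiger' is named in the text.
ANIMALS = ["tiger", "elephant", "zebra", "giraffe", "penguin",
           "dolphin", "kangaroo", "owl", "horse", "crocodile"]
CATEGORY_TYPES = ["superordinate", "same", "similar", "dissimilar"]
STATEMENT_TYPES = ["categorisation", "comparison"]

# 10 animals x 4 category types x 2 statement types = 80 slides.
ITEMS = [(animal, ctype, stype)
         for animal in ANIMALS
         for ctype in CATEGORY_TYPES
         for stype in STATEMENT_TYPES]


def quasi_random_order(items, seed):
    """Shuffle with a fixed seed, then ensure that for every animal the
    Same-Category categorisation slide precedes the matching comparison slide."""
    rng = random.Random(seed)   # fixed seed -> identical order for every participant
    order = list(items)
    rng.shuffle(order)
    position = {item: i for i, item in enumerate(order)}
    for animal in sorted({animal for animal, _, _ in items}):
        cat = (animal, "same", "categorisation")
        comp = (animal, "same", "comparison")
        i, j = position[cat], position[comp]
        if i > j:   # comparison would come first: swap the two slides
            order[i], order[j] = order[j], order[i]
            position[cat], position[comp] = j, i
    return order


order = quasi_random_order(ITEMS, seed=2016)
print(order[:4])
```

Swapping only the two slides of an offending pair keeps the result a permutation of the 80 items and leaves every other slide where the shuffle put it.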

Participants were asked to verify a series of facts about 10 well-known animals that were presented in pictures. Participants were given a true/false choice to respond.

1.3.2 Results

The mean proportions of true responses for each condition are plotted in Fig. 4. The overall pattern of results suggests that participants did not derive a non-y inference in interpreting the comparison statements relative to what is observed for the categorisation statements.

We fitted a logistic mixed-effects model, positing fixed effects of Statement Type and Category Type, and random effects of Participant and Item, as well as a random slope of Statement Type by Participant. (Models with additional random slopes did not converge.) This disclosed significant main effects of Statement Type and Category Type, and a significant interaction (p < 0.001, model comparison).

Follow-up pairwise comparisons for different category types were implemented using logistic mixed-effects models, positing a fixed effect of Statement Type and random effects of Participant and Item, as well as a random slope of Statement Type by Participant. There was a significant main effect of Statement Type in the Similar condition (β = 3.73, SE = 1.04, Z = 3.59, p < 0.001), which remains significant when corrected for multiple comparisons (p < 0.001), but no significant main effect in the Dissimilar condition (β = 1.68, SE = 1.73, Z = 0.97, p = 0.33), Same condition (β = 4.67, SE = 4.90, Z = 0.95, p = 0.34) or Superordinate condition (β = 3.49, SE = 2.54, Z = 1.37, p = 0.171).

1.4 Experiment 3

1.4.1 Method

Participants

25 participants were recruited through Mechanical Turk.

Materials and Procedure

We constructed 42 items of the following form:

“John says: My mother is like a nurse.

Would you conclude from this that, according to John, his mother is not a nurse?”

The critical items consisted of 24 items evenly distributed across 4 conditions: true conclusion according to common knowledge (T/C); false conclusion according to common knowledge (F/C); true conclusion according to common knowledge if the conclusion is interpreted literally (T(lit.)/C); and possible conclusion according to the speaker’s private knowledge (P/SP). See Table 1 for an example of each condition.

The statements in the T(lit.)/C condition were similes and the conclusions negated metaphors. Taken literally, the conclusions were obviously true since the metaphors included a category violation (e.g., ‘His ideas are like diamonds’ > ‘His ideas are not diamonds’). The similes/metaphors were relatively conventional.

We used 12 filler items in the standard form, and 6 control items in which the statement was a relatively conventional metaphor and participants had to decide whether the corresponding simile followed from the statement (e.g., ‘My lawyer is a shark’ > ‘My lawyer is like a shark’).

The materials were presented in the same random order to all participants. Participants were asked to decide whether, according to the person making the statement, a certain conclusion followed from what they had said. Participants were given a YES/NO choice to respond.

1.4.2 Results

The mean proportions of YES responses in each condition are plotted in Fig. 7. The results show different agreement rates in the various conditions depending on the hearer’s knowledge.

We fitted a logistic mixed-effects model, positing fixed effects of Condition and random effects of Participant and Item, as well as a random slope of Condition by Participant. This disclosed a significant main effect of Condition (p < 0.01, model comparison).

Follow-up pairwise comparisons were implemented by using the same model over subsets of the data. These models disclosed significant differences between the T/C condition and each of the other three conditions (F/C: β = 5.17, SE = 1.33, Z = 3.88, p < 0.001; T(lit.)/C: β = 6.73, SE = 3.05, Z = 2.21, p < 0.05; P/SP: β = 5.28, SE = 1.69, Z = 3.13, p < 0.001). Corrected for multiple comparisons, the T/C to F/C comparison is significant with p < 0.001, the T/C to T(lit.)/C comparison is marginally significant (p = 0.082) and the T/C to P/SP comparison is significant with p < 0.01. None of the other pairwise comparisons showed significant differences (F/C vs. T(lit.)/C: β = 0.67, SE = 1.08, Z = 0.62, p = 0.535; F/C vs. P/SP: β = 0.356, SE = 0.907, Z = 0.39, p = 0.695; T(lit.)/C vs. P/SP: β = 0.072, SE = 0.616, Z = 0.12, p = 0.907).
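The text does not name the correction for multiple comparisons. A Bonferroni adjustment over the three T/C contrasts appears consistent with the corrected values (for example, an uncorrected p of about 0.027 for T/C vs. T(lit.)/C becomes about 0.082), so the sketch below assumes Bonferroni and Wald p-values computed from the reported β and SE. The helper names are hypothetical.

```python
import math


def two_sided_p(beta: float, se: float) -> float:
    """Two-sided p-value of the Wald statistic z = beta / se."""
    return math.erfc(abs(beta / se) / math.sqrt(2))


def bonferroni(p_values: list[float]) -> list[float]:
    """Bonferroni adjustment: multiply each p-value by the number of tests, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# T/C versus each of the other conditions in Experiment 3 (beta, SE as reported).
contrasts = {"F/C": (5.17, 1.33), "T(lit.)/C": (6.73, 3.05), "P/SP": (5.28, 1.69)}
raw = {name: two_sided_p(beta, se) for name, (beta, se) in contrasts.items()}
adjusted = dict(zip(raw, bonferroni(list(raw.values()))))
for name in contrasts:
    print(f"T/C vs {name}: raw p = {raw[name]:.4f}, adjusted p = {adjusted[name]:.4f}")
```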

1.5 Experiment 4

1.5.1 Method

Participants

25 participants were recruited through Mechanical Turk.

Materials and Procedure

We constructed 54 slides, each including a picture of an animal, fruit, or vegetable in the center. Underneath the picture was a short description, which could be in categorisation form (e.g., ‘Robins are birds’) or in comparison form (e.g., ‘Chickens are like farm animals’).

The critical items were 18 pairs of categorisation and comparison statements about 18 well-known animals. 3 types of categories were used in the statements: (1) basic level (e.g., ‘Labradors are dogs’), (2) superordinate (e.g., ‘Sharks are predators’) and (3) name repeated (i.e., the category was already mentioned in the name of the animal; e.g., ‘Grizzly bears are bears’).

18 comparison statements were used as fillers, 9 depicting animals and 9 depicting fruits or vegetables. Each filler compared two similar animals, fruits or vegetables (e.g., ‘Wild boars are like pigs’, ‘Shallots are like onions’). The two-animal fillers were used as a baseline for the critical comparison statements. The materials were presented in the same random order to all participants.

Participants were asked to rate a series of descriptions of animals, vegetables and fruits on a 1–7 scale ranging from 1 = Completely unacceptable to 7 = Perfectly acceptable.

1.5.2 Results

The mean appropriateness ratings for each condition are plotted in Fig. 6. The overall pattern of results suggests that categorisation statements were preferred over comparison statements in all conditions.

As participants were generally consistent across items within each condition, we consider their means for each condition (i.e., we consider each participant to give rise to one data point per condition). Paired t-tests reveal highly significant differences between categorisation and comparison in each condition (all p < 0.001) and between each comparison condition and the control (all p < .002). However, as the data are not normally distributed, we also report the results of a non-parametric statistical test, namely the sign test. There is a highly significant preference for categorisation in all three conditions (21/25 participants in the Basic-Level condition, 21/25 participants in the Repetition condition, and 22/25 participants in the Superordinate condition: all p < 0.001). There is also a highly significant preference for the control comparisons over the comparisons in each of the other conditions (19/25 participants in the Basic-Level condition, 19/25 participants in the Repetition condition, and 20/25 participants in the Superordinate condition: all p < 0.008).
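The sign test reduces to an exact binomial test with success probability 0.5 on the number of participants who favour one condition. The sketch below assumes no ties (n = 25 in every comparison). With a one-sided test, 19/25 gives p ≈ 0.0073, within the reported bound of 0.008, while the two-sided value is about 0.015; this suggests, though the text does not say so, that one-sided tests were used. `sign_test` is a hypothetical helper name.

```python
from math import comb


def sign_test(successes: int, n: int, alternative: str = "greater") -> float:
    """Exact sign test under H0: each participant is equally likely to favour either
    condition (binomial with p = 0.5). Ties are assumed to be absent."""
    upper = sum(comb(n, k) for k in range(successes, n + 1)) / 2 ** n
    if alternative == "greater":
        return upper
    if alternative == "two-sided":
        lower = sum(comb(n, k) for k in range(0, successes + 1)) / 2 ** n
        return min(1.0, 2 * min(upper, lower))
    raise ValueError(f"unknown alternative: {alternative!r}")


for label, k in [("21/25", 21), ("22/25", 22), ("19/25", 19), ("20/25", 20)]:
    print(label, f"one-sided p = {sign_test(k, 25):.5f}",
          f"two-sided p = {sign_test(k, 25, 'two-sided'):.5f}")
```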

1.6 Experiment 5

1.6.1 Method

Participants

200 participants were recruited through Mechanical Turk.

Materials and Procedure

Each participant was randomly assigned to one of 4 types of narrative: 2 control and 2 critical conditions. For the actual narrative, see the main text. At the end of the narrative, participants were given a 1–7 Likert scale to indicate their agreement with the final statement, with 1 meaning ‘Definitely disagree’ and 7 meaning ‘Definitely agree’.

1.6.2 Results

The mean ratings for each condition are plotted in Fig. 8. In the Critical/Partial knowledge condition, the mean rating was 4.94 (SD 1.64), with 10 participants giving the highest possible rating of 7. In the corresponding Full knowledge condition, the mean rating was 6.08 (SD 1.57), with 29 participants giving a maximum rating of 7. An unpaired t-test shows the difference in rating to be highly significant (t = 3.55, df = 98, p < 0.001). As the ratings are not normally distributed, we also consider the proportion of maximum ratings given in each condition: this exhibits a highly significant difference (Fisher’s exact test, p < 0.001).

By comparison, in the Control/Partial knowledge condition, the mean rating was 5.50 (SD 1.91), with 20 participants giving the rating of 7. In the corresponding Full knowledge condition, the mean rating was 6.32 (SD 1.32), with 31 participants giving the rating of 7. An unpaired t-test shows the difference in rating to be significant (t = 2.50, df = 98, p < 0.016), and the proportion of maximal ratings also differs significantly (Fisher’s exact test, p < 0.05).
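Both the t statistics and Fisher’s exact test can be recomputed from the summary figures above. The sketch assumes 50 participants per condition (200 participants over 4 conditions, consistent with df = 98) and a Student t-test with pooled variance (with equal group sizes this gives the same statistic as Welch’s test). Fisher’s test is implemented directly with `math.comb`, summing the probabilities of all tables no more likely than the observed one; the helper names are hypothetical.

```python
import math
from math import comb


def pooled_t(m1, sd1, n1, m2, sd2, n2):
    """Unpaired Student t statistic (pooled variance) and its degrees of freedom."""
    sp2 = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2)
    t = (m2 - m1) / math.sqrt(sp2 * (1 / n1 + 1 / n2))
    return t, n1 + n2 - 2


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]]."""
    row1, col1, n = a + b, a + c, a + b + c + d

    def prob(x):  # hypergeometric probability of x in the top-left cell
        return comb(col1, x) * comb(n - col1, row1 - x) / comb(n, row1)

    p_obs = prob(a)
    lo, hi = max(0, row1 + col1 - n), min(row1, col1)
    return sum(prob(x) for x in range(lo, hi + 1) if prob(x) <= p_obs * (1 + 1e-7))


# Critical and Control conditions: Partial vs. Full knowledge (mean, SD, n).
t_crit, df_crit = pooled_t(4.94, 1.64, 50, 6.08, 1.57, 50)
t_ctrl, df_ctrl = pooled_t(5.50, 1.91, 50, 6.32, 1.32, 50)
# Rows: Partial, Full; columns: rating of 7, any other rating.
p_crit = fisher_exact_two_sided(10, 40, 29, 21)
p_ctrl = fisher_exact_two_sided(20, 30, 31, 19)
print(f"Critical: t = {t_crit:.2f}, df = {df_crit}, Fisher p = {p_crit:.5f}")
print(f"Control:  t = {t_ctrl:.2f}, df = {df_ctrl}, Fisher p = {p_ctrl:.4f}")
```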

Cite this article

Rubio-Fernández, P., Geurts, B. & Cummins, C. Is an Apple Like a Fruit? A Study on Comparison and Categorisation Statements. Rev.Phil.Psych. 8, 367–390 (2017). https://doi.org/10.1007/s13164-016-0305-4

DOI: https://doi.org/10.1007/s13164-016-0305-4