Abstract

Purpose of Review

To summarize the advances achieved in the detection and characterization of myocardial ischemia and prediction of related outcomes through machine learning (ML)-based artificial intelligence (AI) workflows in both single-photon emission computed tomography (SPECT) and positron emission tomography (PET).

Recent Findings

In the field of cardiology, the implementation of ML algorithms has recently gravitated toward image processing for characterization, diagnostic, and prognostic purposes. Nuclear cardiology represents a particular niche for AI, as it deals with complex images of semi-quantitative and quantitative nature acquired with SPECT and PET.

Summary

AI is revolutionizing clinical research. Since the recent convergence of powerful ML algorithms and increasing computational power, the study of very large datasets has demonstrated that clinical classification and prediction can be optimized by exploring very high-dimensional non-linear patterns. In the evaluation of myocardial ischemia, ML is optimizing the recognition of perfusion abnormalities beyond traditional measures and refining prediction of adverse cardiovascular events at the individual-patient level.


Introduction

Artificial intelligence (AI) has revolutionized the way in which we interact with the massive stream of data generated by current process automation, connectivity, and data storage capabilities. The explosion in the implementation of AI can be traced, on the one hand, to novel machine learning (ML) algorithms (methods that can iteratively elucidate complex non-linear and high-dimensional patterns in order to optimize classification and prediction tasks) such as convolutional neural networks (i.e., Deep Learning, discussed below), and on the other hand, to the recent convergence of very large high-quality datasets, massive computational power, and a range of complex problems in all areas of knowledge [1].

Impressive strides have been made toward AI involvement in a wide array of areas, exemplified by Google’s search engine, Tesla’s driverless cars, and DeepMind’s mastery of the game of Go with AlphaGo Zero [2], as well as customer experience optimization at Netflix, Spotify, and Amazon. As expected, ML-based AI has begun to permeate the medical sciences. In fact, breakthrough medical applications of ML have been reported, such as distinguishing benign from malignant lesions in dermatology [3••] and detecting diabetic retinopathy through direct processing of retinal fundus images [4]. In cardiology, early ML implementations concentrated on ECG signal processing [5] for the detection of conduction system disturbances, while more recent approaches have been applied to the diagnosis of arrhythmias [6, 7]. It has now become clear that cardiovascular imaging, and in particular nuclear cardiology (i.e., single-photon emission computed tomography [SPECT] and positron emission tomography [PET] myocardial perfusion imaging), constitutes a particular niche for the implementation of AI.

The present review will summarize the principles behind AI and ML (with special emphasis on Deep Learning and image processing), the advances achieved in the detection and characterization of myocardial ischemia, and the evidence regarding prediction of ischemia-related outcomes through the incorporation of ML-based AI workflows in both SPECT and PET imaging.

Artificial Intelligence and Machine Learning

The concept of AI has accompanied computer science since its emergence. It stemmed from the idea that intelligence as a process could be described in mathematical terms so exhaustively that it could then be programmed as a system capable of solving problems or performing tasks that require “human-level” intellectual capabilities.

As such, early AI implementations relied on direct rule programming for a discrete number of tasks. This approach rapidly showed that AI tasks would be limited by the sheer number of built-in rules needed to process input data: an AI system would only function properly when encountering conditions directly recognizable within its arsenal of pre-defined action rules, and adaptation or refinement could not result from exposure to novel data. This rather static nature of early implementations eventually caused a “cooling” of the expectations placed on AI. It was not until the end of the twentieth century that modern ML algorithms revived interest in AI by introducing a dynamic and responsive character to the field.

While AI is a broad concept that normally refers to machines solving tasks that require a certain (human) level of intelligence, ML pertains to mathematical algorithms that use data to optimize a function in order to solve a specific task. ML algorithms are characterized by their capability to progressively improve their performance through exposure to large amounts of (training) data. In general, ML algorithms are initialized with random parameters and, in their first attempts to estimate a dependent variable (outcome), perform rather poorly. The goal of most algorithms is to optimize these parameters in order to reduce a pre-defined error metric. This is achieved by adjusting the model parameters to progressively minimize the estimation error after every iteration of data exposure.
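The learning loop described above (random initial parameters, a pre-defined error metric, and repeated adjustment to reduce it) can be sketched in a few lines. The example below is a minimal illustration, assuming a single-predictor logistic model, mean log-loss as the error metric, and plain batch gradient descent; the toy data are synthetic and the function name `train_logistic` is hypothetical.

```python
import math
import random

def train_logistic(xs, ys, lr=0.5, epochs=200, seed=0):
    """Fit p(y=1 | x) = sigmoid(w*x + b) by batch gradient descent on mean log-loss.

    xs: one predictor per sample (floats); ys: binary outcomes (0/1).
    Returns the learned (w, b) and the mean loss measured at each epoch.
    """
    rng = random.Random(seed)
    w, b = rng.uniform(-1, 1), rng.uniform(-1, 1)  # random starting parameters
    n = len(xs)
    history = []
    for _ in range(epochs):
        loss = grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            p = 1.0 / (1.0 + math.exp(-(w * x + b)))
            loss -= y * math.log(p) + (1 - y) * math.log(1 - p)
            grad_w += (p - y) * x  # d(log-loss)/dw for this sample
            grad_b += p - y        # d(log-loss)/db for this sample
        history.append(loss / n)   # error before this epoch's update
        w -= lr * grad_w / n       # step against the gradient to reduce the error
        b -= lr * grad_b / n
    return w, b, history

xs = [-2.0, -1.0, -0.5, 0.5, 1.0, 2.0]  # synthetic predictor values
ys = [0, 0, 0, 1, 1, 1]                 # synthetic outcomes
w, b, history = train_logistic(xs, ys)
print(round(history[0], 3), round(history[-1], 3))
```

The first recorded loss reflects the random starting parameters; the last one is lower because every epoch moved the parameters in the direction that reduces the error.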

This behavior describes the notion of learning embedded in ML. ML algorithms can identify, or even create, non-linear dependencies (i.e., patterns) between predictors (inputs) at very high-dimensional levels. Once such patterns are identified, they are used to optimize the performance of the model in the intended task. Thereafter, trained ML models are tested on a fraction of the dataset that was originally held out to evaluate whether the model generalizes properly, i.e., whether it performs consistently on new, unseen data. There are many examples of powerful ML algorithms, such as support vector machines [8], boosted ensembles [9], and random forests [10]. However, the most notable recent example of highly efficient ML algorithms is the convolutional neural network, which is discussed below under its newly adopted name: Deep Learning.
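The held-out evaluation described above can be sketched as follows. This is a minimal illustration, assuming a simple random holdout (20% of samples reserved for testing) and a deliberately trivial threshold model; the data are synthetic and the names `holdout_split`, `fit_threshold`, and `accuracy` are hypothetical.

```python
import random

def holdout_split(n_samples, test_fraction=0.2, seed=42):
    """Shuffle sample indices and reserve a fraction of them for final testing."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    n_test = max(1, round(n_samples * test_fraction))
    return indices[n_test:], indices[:n_test]  # (train indices, test indices)

def fit_threshold(xs, ys, train_idx):
    """Toy model fitted on the training indices only: cut halfway between class means."""
    pos = [xs[i] for i in train_idx if ys[i] == 1]
    neg = [xs[i] for i in train_idx if ys[i] == 0]
    cut = (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2
    return lambda x: int(x > cut)

def accuracy(model, xs, ys, idx):
    """Fraction of the indexed samples classified correctly."""
    return sum(model(xs[i]) == ys[i] for i in idx) / len(idx)

xs = [float(v) for v in range(20)]      # synthetic predictor
ys = [int(v >= 10) for v in range(20)]  # synthetic binary outcome
train_idx, test_idx = holdout_split(len(xs))
model = fit_threshold(xs, ys, train_idx)
print(accuracy(model, xs, ys, test_idx))
```

The key design point is that the test indices are never seen while fitting, so the reported accuracy estimates performance on unseen data rather than memorization of the training set.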

The current wave of AI expansion has been made possible by the convergence of four factors. The first has been the production of, and access to, sufficient amounts of good-quality data, as growing interconnection has facilitated the collection and availability of very large and dynamic datasets. The second has been sizeable computational power. Perpetual innovation in electronic technologies has delivered processing units with exponentially increasing computing capacity (e.g., modern graphics processing units [GPUs]), which currently allow massive computational tasks to be performed within feasible time frames (a feature especially advantageous for Deep Learning). The third is the constant development of novel methods and optimization techniques that improve the performance of such algorithms. The fourth is the accessibility of software frameworks that allow a larger number of researchers and users to experiment with this technology. Complex tasks that can benefit from elucidating high-dimensional patterns to identify discernible groups or data behaviors are good candidates for ML algorithms. Natural systems, and certainly their pathological states such as those found in cardiovascular disease, display this complexity because of the intricate, dynamic, and vastly numerous interrelations between their predictors and outcomes.

Deep Learning

Neural networks are a class of ML algorithms inspired by biological synaptic systems. In their most basic form, they consist of input, hidden, and output layers. The input layer can accommodate a large number of variables, such as the values of every pixel in an image. Hidden layers contain a variable number of units that integrate and process data from the preceding layer and transmit it to the subsequent layer until the processed information finally reaches the output layer. At the output layer, a cost function that quantifies the performance of the model is tailored to the specific objective of the task. This task can be, for instance, the classification of input samples into different groups (e.g., presence or severity of disease), the prediction of a continuous outcome (e.g., risk of events), or the identification of a specific object in an image. Among the numerous processing functions that can be assigned to hidden layers, convolutions have played a key role in shaping networks with exceptional fitness for image recognition. Convolutions sweep small filters (kernels) across the image, so the network can detect the same feature wherever it appears. Before training, these kernels have random parameters, but with each iteration the parameters are modified to reduce the cost defined at the output. This process, in which the error is propagated back from the output and all parameters are adjusted to reduce it, is called backpropagation. With each layer, the network combines the features created in previous layers and can produce more abstract features that capture the information relevant to the task. Therefore, a common feature of modern neural networks has been a growing number of hidden layers. Every added processing layer increases the depth of the network, and as a result, the term Deep Learning was swiftly coined for these ML algorithms.
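The kernel sweep described above can be illustrated with a plain-Python convolution. This is a minimal sketch, assuming a single-channel image, a "valid" convolution (no padding), and a hand-set 2×2 kernel standing in for one that a network would learn through backpropagation; `image_with_spot` and `spot_detector` are hypothetical names.

```python
def conv2d(image, kernel):
    """'Valid' 2-D convolution as implemented in most deep-learning libraries
    (technically a cross-correlation): the same kernel weights are applied at
    every position as the kernel is swept across the image."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(kernel[a][b] * image[i + a][j + b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def argmax2d(fmap):
    """(row, col) of the strongest response in a feature map."""
    return max(((i, j) for i in range(len(fmap)) for j in range(len(fmap[0]))),
               key=lambda ij: fmap[ij[0]][ij[1]])

def image_with_spot(row, col, size=6):
    """Hypothetical 6x6 image with a 2x2 bright spot whose top-left corner is (row, col)."""
    img = [[0.0] * size for _ in range(size)]
    for a in range(2):
        for b in range(2):
            img[row + a][col + b] = 1.0
    return img

spot_detector = [[1.0, 1.0], [1.0, 1.0]]  # hand-set kernel; in a CNN it would be learned
print(argmax2d(conv2d(image_with_spot(1, 1), spot_detector)))  # (1, 1)
print(argmax2d(conv2d(image_with_spot(3, 2), spot_detector)))  # (3, 2)
```

Because the same weights are reused at every position, the peak response follows the spot as it moves, which is what lets convolutional layers recognize a pattern regardless of its location.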
Figure 1 depicts the conceptual structure of the terms discussed. Figure 2 shows a schematic depiction of a deep convolutional neural network (architecture of a Deep Learning Model).

Schematic architecture of a convolutional neural network. From the input layer on the left, the values of every pixel in a given image are processed forward (toward the right) through the hidden layers (represented as narrower and thicker panels) and finally reach the fully connected output layer, which is tailored to the specific task at hand (e.g., diagnosis of myocardial ischemia or estimation of an individual subject’s risk of a major adverse cardiovascular event [MACE]). Standard architectures are formed by blocks that are in turn composed of different layers. For example, a block can be composed of a convolutional, a batch-normalization, and a non-linear activation layer. Certain blocks also include a max-pooling layer, which reduces the spatial dimensions of the input. The key shows examples of operators that can be selected for the hidden layers.
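The operators that follow a convolution in such a block can be sketched in plain Python. This is a minimal illustration, assuming a single channel, training-mode batch normalization with fixed scale (`gamma`) and shift (`beta`) instead of learned ones, ReLU as the non-linear activation, and non-overlapping 2×2 max pooling; the function name `conv_block_tail` is hypothetical.

```python
import math

def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Training-mode batch normalization for one channel: statistics are pooled
    over every value of every feature map in the batch, then rescaled by the
    (normally learnable) gamma and beta."""
    values = [v for fmap in batch for row in fmap for v in row]
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    scale = gamma / math.sqrt(var + eps)
    return [[[(v - mean) * scale + beta for v in row] for row in fmap] for fmap in batch]

def relu(fmap):
    """Non-linear activation: negative responses are set to zero."""
    return [[max(0.0, v) for v in row] for row in fmap]

def max_pool2d(fmap, size=2):
    """Keep the maximum of each non-overlapping size x size window."""
    return [
        [max(fmap[i + a][j + b] for a in range(size) for b in range(size))
         for j in range(0, len(fmap[0]) - size + 1, size)]
        for i in range(0, len(fmap) - size + 1, size)
    ]

def conv_block_tail(batch):
    """Batch normalization -> ReLU -> max pooling, applied to convolution outputs."""
    return [max_pool2d(relu(fmap)) for fmap in batch_norm(batch)]

batch = [  # two hypothetical 4x4 convolution outputs
    [[1.0, -2.0, 3.0, 0.5], [0.0, 4.0, -1.0, 2.0], [2.5, -0.5, 1.5, -3.0], [0.5, 1.0, -2.0, 3.5]],
    [[0.2, 0.1, -0.3, 1.2], [2.2, -1.1, 0.0, 0.4], [1.0, 1.0, 1.0, 1.0], [-2.0, 0.5, 0.7, 0.9]],
]
print([[len(f), len(f[0])] for f in conv_block_tail(batch)])  # [[2, 2], [2, 2]]
```

Pooling halves each spatial dimension, which is how stacked blocks progressively trade spatial detail for more abstract features.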

Latest Developments in Image Processing

The architecture of a neural network defines its properties, and many research groups have explored different architectures to improve the performance and capabilities of neural networks. One example is the U-Net [11], an architecture employed for segmentation, in which each pixel of the input image is classified into one of several categories. Several U-Net variants exist for segmentation. In general, a U-Net is formed by an encoder and a decoder with connections between them. In the encoder, the input image is processed by a series of convolutions and down-sampling operations, and the spatial dimensions of the output are reduced at each stage. The decoder takes the output of the encoder and progressively increases its spatial size until it recovers the resolution of the original image, producing an output for every pixel. The connections between encoder and decoder (skip connections) facilitate the work of the decoder because they provide information about the original image at different processing stages.
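The encoder/decoder flow can be made concrete by tracing feature-map sizes through a simplified U-Net. This sketch only tracks shapes, assuming square inputs, "same"-padded convolutions (the original U-Net [11] used unpadded convolutions and cropped its skip connections), 2×2 pooling, 2× up-sampling, and channel counts that double at each encoder level; `unet_shapes` is a hypothetical helper.

```python
def unet_shapes(size, depth=4, base_channels=64, n_classes=2):
    """Trace (spatial size, channels) through a simplified U-Net."""
    if size % (2 ** depth):
        raise ValueError("size must be divisible by 2**depth")
    encoder = []
    s, c = size, base_channels
    for _ in range(depth):
        encoder.append((s, c))   # also handed to the decoder as a skip connection
        s, c = s // 2, c * 2     # pooling halves height/width; next convs double channels
    bottleneck = (s, c)
    decoder = []
    for skip_s, skip_c in reversed(encoder):
        s, c = s * 2, c // 2     # up-convolution doubles height/width, halves channels
        decoder.append((s, c + skip_c))  # channels right after concatenating the skip
    output = (s, n_classes)      # 1x1 convolution: one score per class for every pixel
    return {"encoder": encoder, "bottleneck": bottleneck, "decoder": decoder, "output": output}

print(unet_shapes(64))
```

The decoder's sizes mirror the encoder's in reverse, which is what allows each skip connection to be concatenated with a decoder feature map of matching resolution.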

Deep neural networks have already proven their value by achieving state-of-the-art results in the aforementioned tasks. Moreover, scientists have found new applications for neural networks in tasks where no known algorithm could produce results close to those achieved with Deep Learning. One example is the images produced by modern generative adversarial networks (GANs). GANs originate from the idea of two networks competing against each other. The goal of one network (D, the discriminator) is to discriminate between real and fake images, just like a standard classifier. The goal of the second network (G, the generator) is to generate images from noise that look as close as possible to real images. Both networks compete to become better at their task until G is able to generate new images with the characteristics of a specific population (in the case of medical imaging) that differ considerably from the originals while remaining within the range of quality of real images. This opens a wide range of possibilities, because in medical imaging it can be challenging to obtain large datasets of images with specific characteristics due to cost, low disease prevalence, or patient consent. Consequently, this technique has been applied to MR images [12], lung nodules [13], and skin lesions [14], among others. GANs have also been explored for converting images from one modality to another (e.g., MR to CT) [15, 16] and have been used to improve image reconstruction through de-noising in techniques such as CT [17] and PET [18].
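The competition between D and G is usually expressed through two losses computed from the discriminator's outputs. The sketch below shows only those loss terms, assuming binary cross-entropy as in the original GAN formulation and the commonly used "non-saturating" generator loss; the networks themselves and their alternating training steps are omitted, and the function names are hypothetical.

```python
import math

def bce(p, target):
    """Binary cross-entropy for one predicted probability p and a 0/1 target."""
    p = min(max(p, 1e-12), 1 - 1e-12)  # guard against log(0)
    return -(target * math.log(p) + (1 - target) * math.log(1 - p))

def discriminator_loss(d_real, d_fake):
    """D is penalised unless it outputs ~1 for real images and ~0 for generated ones.
    d_real / d_fake: D's probabilities that each image in the batch is real."""
    return (sum(bce(p, 1) for p in d_real) / len(d_real)
            + sum(bce(p, 0) for p in d_fake) / len(d_fake))

def generator_loss(d_fake):
    """Non-saturating generator loss: G is penalised when D recognizes its images as fake."""
    return sum(bce(p, 1) for p in d_fake) / len(d_fake)

# A fully confused discriminator (0.5 everywhere) sits at 2 * ln(2).
print(round(discriminator_loss([0.5, 0.5], [0.5, 0.5]), 3))  # 1.386
```

In training, the two losses are minimized alternately: a lower discriminator loss means D separates real from generated images better, while a lower generator loss means G's images are more often accepted as real.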

Applicability of AI in Nuclear Cardiology

We have laid out the way in which modern ML-based AI is shaping a new frontier in data analysis and optimization of complex estimations. In particular, it is relevant to understand that the nature and amount of available data are pivotal for the selection of the ML algorithm to be implemented (e.g., random forests for numerous clinical variables or Deep Learning in direct image recognition).

In the discipline of cardiology, vast amounts of numerical data have emerged from electronic health records, blood biomarker measurements, and genetic analyses, while direct image data is being provided by invasive and non-invasive imaging techniques (see Fig. 3).

Nuclear cardiology techniques (i.e., SPECT and PET imaging) are based on the detection of radiation emitted from the heart after injection of dedicated radiotracers that couple with a specific physiologic process such as perfusion, glucose uptake, or beta-oxidation. PET imaging has a more advantageous performance profile owing to higher count rates, higher spatial resolution, and lower radiation burden. Although image quality is superior with PET, SPECT is widely accessible and accounts for most of the nuclear cardiology data collected today.

Although SPECT and PET results are complex images (defined spatially pixel- and color-channel-wise), for which Deep Learning is the most suitable tool, most nuclear cardiology data have been operationalized by converting image results into structured numerical datasets.

AI analysis of these datasets has been achieved through ML algorithms other than Deep Learning at a lower computational cost. However, full Deep Learning implementation is beginning to provide interesting results. The evidence on the application of ML-based AI into numerical and imaging data will be summarized in the next section.

Optimizing Detection and Characterization of Myocardial Ischemia

The diagnosis of coronary artery disease (CAD) through nuclear imaging has traditionally relied on the visual interpretation of clinicians, with wide variability according to the clinical experience of the reader. Automated and standardized analyses of semi-quantitative variables, such as total perfusion deficit (TPD) and the summed rest, stress, and difference scores (SRS, SSS, and SDS), can be computed to aid the visual readout. However, the integration of several other features fundamental to the detection of CAD (i.e., clinical or demographic data as well as functional variables) can clearly benefit from a novel, integrative approach to data analysis.
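As a reference point for the semi-quantitative variables mentioned above, the summed scores are simple sums of segmental visual scores. The sketch below assumes the standard 17-segment model scored on a 5-point scale (0 = normal uptake to 4 = absent uptake); the patient scores are hypothetical, and TPD is not reproduced because its computation depends on the quantitative software used.

```python
def summed_scores(stress, rest):
    """Summed stress, rest, and difference scores from 17-segment visual scores.

    Each list holds one score per segment of the 17-segment model on the usual
    5-point scale (0 = normal uptake ... 4 = absent uptake).
    """
    for scores in (stress, rest):
        if len(scores) != 17 or any(s not in (0, 1, 2, 3, 4) for s in scores):
            raise ValueError("expected 17 integer segment scores between 0 and 4")
    sss = sum(stress)  # summed stress score
    srs = sum(rest)    # summed rest score
    return {"SSS": sss, "SRS": srs, "SDS": sss - srs}  # SDS reflects reversible (ischemic) defects

# Hypothetical patient: stress defects in three segments, mostly normalizing at rest.
stress = [0] * 17
stress[0], stress[6], stress[12] = 3, 2, 2
rest = [0] * 17
rest[0] = 1
print(summed_scores(stress, rest))  # {'SSS': 7, 'SRS': 1, 'SDS': 6}
```

Each score condenses a whole image into a single number, which illustrates why integrating additional clinical and functional variables requires a different analytical approach.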

With the advent of ML, nuclear cardiology has begun to anticipate substantial benefits from improved capabilities in image processing and big-data classification from different sources. Pioneering studies fed data from SPECT myocardial perfusion imaging (MPI) to ML algorithms to improve the accuracy of automated CAD detection. Arsanjani and colleagues extracted quantitative and functional variables from MPI images and combined them through a support vector machine (SVM) algorithm to improve the diagnosis of coronary stenosis [19]. ML performance was compared with that of quantitative analysis, such as TPD, using coronary angiography as the gold standard. Remarkably, the SVM outperformed all the quantitative analyses as well as the visual interpretation of two readers. Notably, the introduction of different functional variables (motion and thickening changes, absolute stress end-diastolic or end-systolic volumes) did not affect the detection accuracy of the algorithm. This was the first study that successfully combined multiple features to improve the diagnostic accuracy of SPECT MPI.
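To illustrate how an SVM combines several features into one decision, the sketch below trains a linear SVM with a Pegasos-style stochastic sub-gradient method. It is not the configuration used in [19]; it assumes a linear kernel, a regularized bias term, labels coded as +1 (disease) and −1 (no disease), and two synthetic standardized features standing in for hypothetical perfusion and functional variables.

```python
import random

def train_linear_svm(X, y, lam=0.01, epochs=100, seed=0):
    """Pegasos-style stochastic sub-gradient descent for a linear SVM.

    Minimizes lam/2 * ||w||^2 + mean(max(0, 1 - y_i * w.x_i)), with labels
    y_i in {-1, +1}. A constant 1.0 is appended to every sample so the last
    weight acts as a (regularized) bias term.
    """
    data = [list(x) + [1.0] for x in X]
    w = [0.0] * len(data[0])
    order = list(range(len(data)))
    rng = random.Random(seed)
    t = 0
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            t += 1
            eta = 1.0 / (lam * t)                                # decreasing step size
            margin = y[i] * sum(wj * xj for wj, xj in zip(w, data[i]))
            w = [(1 - eta * lam) * wj for wj in w]               # shrink: L2 penalty
            if margin < 1:                                       # hinge loss is active
                w = [wj + eta * y[i] * xj for wj, xj in zip(w, data[i])]
    return w

def svm_predict(w, x):
    """Sign of the decision function w.x + bias."""
    return 1 if sum(wj * xj for wj, xj in zip(w, list(x) + [1.0])) >= 0 else -1

# Synthetic, standardized (feature 1, feature 2) pairs; hypothetical, not study data.
X = [(2, 2), (3, 1), (1, 3), (2.5, 2.5), (-2, -2), (-3, -1), (-1, -3), (-2.5, -2.5)]
y = [1, 1, 1, 1, -1, -1, -1, -1]
w = train_linear_svm(X, y)
print([svm_predict(w, x) for x in X])
```

The SVM looks for the separating boundary with the widest margin between classes, so every feature contributes to a single combined decision rather than being thresholded on its own.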

The natural follow-up to this study was the integration of additional data and a more refined analysis. Indeed, a subsequent study gathered clinical data, ranging from age and sex to the probability of obstructive CAD estimated with the ECG Diamond-Forrester criteria, and combined them with quantitative perfusion variables by means of an ML algorithm (LogitBoost) [20]. The authors reported improved accuracy of CAD detection compared not only with quantitative TPD but also with the ML model that did not use the clinical data. This was a crucial finding, as non-quantitative clinical variables were given significant weight in a stepwise, systematic fashion within the generated ML workflow.
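LogitBoost, the boosting algorithm of Friedman, Hastie, and Tibshirani [9], repeatedly fits simple learners to a weighted "working response" and adds them to a running logit. The sketch below is a minimal two-class version with regression stumps, not the implementation used in [20]; the clipping of the working response is a common numerical safeguard, and the data (a hypothetical clinical variable and a hypothetical perfusion variable per patient) are synthetic.

```python
import math

def fit_stump(X, z, w):
    """Weighted least-squares regression stump: one feature, one cut, two constants."""
    best = None
    for j in range(len(X[0])):
        for cut in sorted({row[j] for row in X})[:-1]:  # largest value would empty the right side
            left = [i for i, row in enumerate(X) if row[j] <= cut]
            right = [i for i, row in enumerate(X) if row[j] > cut]
            c_left = sum(w[i] * z[i] for i in left) / sum(w[i] for i in left)
            c_right = sum(w[i] * z[i] for i in right) / sum(w[i] for i in right)
            err = (sum(w[i] * (z[i] - c_left) ** 2 for i in left)
                   + sum(w[i] * (z[i] - c_right) ** 2 for i in right))
            if best is None or err < best[0]:
                best = (err, j, cut, c_left, c_right)
    _, j, cut, c_left, c_right = best
    return lambda row: c_left if row[j] <= cut else c_right

def logitboost(X, y, rounds=20, z_max=4.0):
    """Two-class LogitBoost with regression stumps; y holds 0/1 outcomes.
    Returns a function giving the estimated P(y = 1 | x)."""
    F = [0.0] * len(X)
    stumps = []
    for _ in range(rounds):
        p = [min(max(1.0 / (1.0 + math.exp(-2.0 * f)), 1e-6), 1 - 1e-6) for f in F]
        w = [pi * (1 - pi) for pi in p]  # working weights
        z = [max(-z_max, min(z_max, (yi - pi) / wi)) for yi, pi, wi in zip(y, p, w)]  # clipped working response
        stump = fit_stump(X, z, w)
        stumps.append(stump)
        F = [f + 0.5 * stump(row) for f, row in zip(F, X)]  # update the running half-logit
    def predict_proba(row):
        f = sum(0.5 * s(row) for s in stumps)
        return 1.0 / (1.0 + math.exp(-2.0 * f))
    return predict_proba

# Hypothetical patients: [age in years, stress perfusion deficit in %]; synthetic labels.
X = [[55, 1], [60, 2], [70, 3], [50, 8], [65, 10], [58, 12]]
y = [0, 0, 0, 1, 1, 1]
model = logitboost(X, y)
print([round(model(row), 2) for row in X])
```

Because each round chooses whichever variable best reduces the weighted error, clinical and imaging variables compete on equal terms, which mirrors the stepwise weighting of variables described in the study.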

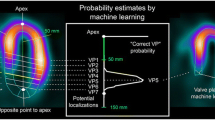

A further breakthrough was achieved a few years later, when a study developed a Deep Learning model capable of identifying abnormalities directly from SPECT images by mimicking the interpretation of experts [21•]. Specifically, the authors used stress images, rest images, and their difference to investigate probable stress defects, rest defects, and ischemia, respectively. The resulting software detected > 5000 candidate regions, which nuclear cardiologists judged as abnormal or normal. These final judgments of the candidate regions were used to train the neural network, together with other clinical variables. The model provided a value from 0 to 1 expressing the pseudo-probability of defects or ischemia in SPECT images, and it outperformed summed scores not only in ROC analysis but also when expert judgments were used as the gold standard. Along the same line, Betancur and colleagues used raw and quantitative polar maps from SPECT MPI images to train a Deep Learning model [22]. In a first stage, the model extracted features from the images, which were then passed to a second stage consisting of three fully connected layers. These layers produced a score for obstructive disease in each of the left anterior descending (LAD), left circumflex (LCx), and right coronary artery (RCA) territories. The study reported improved CAD diagnosis compared with standard quantitative TPD in both per-patient and per-vessel analyses.
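Many of the comparisons in this section rest on ROC analysis. The area under the ROC curve (AUC) equals the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative one, with ties counted as one half. The sketch below computes it directly from that definition; the scores and labels are hypothetical.

```python
def roc_auc(scores, labels):
    """AUC as the probability that a random positive outscores a random negative
    (ties count 1/2); labels are 1 for disease and 0 for no disease."""
    pos = [s for s, l in zip(scores, labels) if l == 1]
    neg = [s for s, l in zip(scores, labels) if l == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Hypothetical model scores for four patients, two of them with disease.
print(roc_auc([0.1, 0.4, 0.35, 0.8], [0, 0, 1, 1]))  # 0.75
```

This pairwise form costs O(positives × negatives) comparisons, which is fine for illustration; larger datasets usually use an equivalent rank-based computation.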

Overall, ML clearly has strong potential to help clinicians detect CAD-related myocardial ischemia. Standard quantitative methods and visual interpretation are limited by reliance on a single source of variables and on the experience of the reader, respectively. The integration of quantitative, clinical, and imaging data requires a more comprehensive and innovative approach, which is now available through ML algorithms, among which Deep Learning holds the largest potential for direct image processing and recognition.

Refining Prognostic Estimations in Myocardial Ischemia

Predicting myocardial ischemia-related major adverse cardiovascular events (MACE) is challenging, and it is increasingly clear that such prediction must take into account a vast set of information, ranging from demographic and clinical data to diagnostic tests (such as stress ECG or coronary CT angiography [CCTA]) and quantitative nuclear imaging data (SPECT and PET). Clinicians are expected to achieve the best possible prediction in order to avoid both unnecessary invasive procedures and underestimation of risk; thus, AI support systems that integrate variables and imaging data could be beneficial (see Fig. 3). In this context, the ESC guidelines suggest an approach based on traditional statistical analyses, the SCORE risk model, to predict the 10-year risk of fatal cardiovascular disease. However, with the recent explosion of AI implementations, the question has emerged whether ML algorithms can provide optimized patient-level risk estimations that improve the identification of subjects at risk who may benefit from specific therapeutic decisions. ML has proven to be a powerful tool for risk prediction based on the combination of numerous, and often easily accessible, data.

A first study combined clinical data with stress and rest TPD obtained from SPECT MPI to predict revascularization in patients with suspected CAD [23]. A LogitBoost algorithm using stress and rest TPD data predicted revascularization better than one of two visual experts and than standard quantitative ischemic TPD, and it performed better than the algorithm trained with stress data only.

Similarly, Betancur et al. integrated SPECT, clinical, and stress test data to assess the value of an ML algorithm for predicting 3-year MACE [24•]. As expected, the ML model that combined all the data achieved a higher AUC than stress or rest TPD and than ML with imaging data only, highlighting once again the importance of integrating clinical variables. More importantly, when the ML scores were divided into percentiles, the authors showed a very high correlation between ML-predicted and observed MACE across the range of ML scores. Focusing on the top 5 percentiles (that is, the highest risk scores), they also identified a significant number of patients who had previously been considered normal by visual and TPD assessment. Furthermore, reclassification across five MACE risk categories yielded a 30% improvement in predicting MACE and a 5% decrease for MACE-free patients.
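Reclassification results of this kind are usually summarized as a categorical net reclassification improvement (NRI), which has a separate component for patients with and without events. The 30% and 5% figures quoted above appear to correspond to these two components, although the exact computation in [24•] is not restated here. The sketch below implements the standard definition; the risk categories and outcomes are hypothetical.

```python
def categorical_nri(old_category, new_category, had_event):
    """Categorical net reclassification improvement (NRI).

    Categories are ordered integers (higher = higher predicted risk). Returns
    the event component, the non-event component, and their sum.
    """
    up_e = down_e = n_e = 0
    up_n = down_n = n_n = 0
    for old, new, event in zip(old_category, new_category, had_event):
        if event:
            n_e += 1
            up_e += new > old     # correctly moved to a higher-risk category
            down_e += new < old
        else:
            n_n += 1
            up_n += new > old
            down_n += new < old   # correctly moved to a lower-risk category
    nri_events = (up_e - down_e) / n_e
    nri_nonevents = (down_n - up_n) / n_n
    return nri_events, nri_nonevents, nri_events + nri_nonevents

# Hypothetical: four patients, risk categories under an old and a new model.
print(categorical_nri([0, 0, 1, 1], [1, 0, 1, 0], [1, 1, 0, 0]))  # (0.5, 0.5, 1.0)
```

A negative non-event component means that more event-free patients were moved up than down, i.e., the new model was slightly less specific in that group.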

In parallel, different ML algorithms were tested for the prediction of cardiac mortality and compared with traditional statistical approaches using MPI, clinical, and ECG variables [25]. Not only did all the ML algorithms outperform the traditional approaches, but a specific ML algorithm (LASSO), trained to shrink the input to a minimum number of variables (six), still performed better than a logistic regression fed with over 100 variables. This highlights the potential of ML to accurately select the most important variables. Notably, the contribution of each variable to the risk score of each patient was displayed visually, a tool that can help clinicians better understand the ML rationale.
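The variable-shrinking behavior of LASSO comes from its L1 penalty, which drives the coefficients of uninformative variables to exactly zero. The sketch below fits LASSO by cyclic coordinate descent with soft-thresholding. For brevity it uses a squared-error loss on centred data rather than the logistic form more typical of mortality prediction, and it is not the implementation used in [25]; the data are synthetic, with an outcome that depends on the first variable only.

```python
def soft_threshold(rho, lam):
    """Shrink rho toward zero by lam; values within [-lam, lam] become exactly 0."""
    if rho > lam:
        return rho - lam
    if rho < -lam:
        return rho + lam
    return 0.0

def lasso(X, y, lam, sweeps=100):
    """Cyclic coordinate descent for (1/2n)*||y - Xb||^2 + lam*||b||_1.
    Assumes centred columns and outcome (no intercept is fitted)."""
    n, d = len(X), len(X[0])
    b = [0.0] * d
    for _ in range(sweeps):
        for j in range(d):
            # residual that ignores feature j's current contribution
            partial = [y[i] - sum(X[i][k] * b[k] for k in range(d) if k != j) for i in range(n)]
            rho = sum(X[i][j] * partial[i] for i in range(n)) / n
            z = sum(X[i][j] ** 2 for i in range(n)) / n
            b[j] = soft_threshold(rho, lam) / z
    return b

# Synthetic centred data: the outcome depends only on the first variable.
X = [[1.0, 0.5], [-1.0, 0.5], [2.0, -0.5], [-2.0, -0.5]]
y = [3.0, -3.0, 6.0, -6.0]
print(lasso(X, y, lam=0.5))  # second coefficient is exactly zero
```

Raising `lam` removes more variables from the model, which is how a small subset (such as the six variables mentioned above) can be retained from a much larger candidate pool.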

A step further was taken by Juarez-Orozco and colleagues [26], who used a LogitBoost model trained with clinical and demographic variables to predict significant regional and global myocardial ischemia as assessed by PET. They compared their analysis directly with a traditional logistic regression approach using the variables included in the ESC guideline models. ML outperformed the variables of both the Genders and the SCORE models, whether these were analyzed through traditional logistic regression or through ML. Thus, this study highlighted the importance of employing ML to select features for new ML models, and of ML-based AI implementations to identify patients in whom an expensive technique such as PET could add value.

Taken together, these findings clearly support the implementation of ML-based AI in the important exercise of risk stratification and MACE prediction. This will likely be beneficial in clinical practice for a more accurate evaluation of patients with known or suspected ischemia through automation of ischemia detection for risk stratification and optimization of patient selection for invasive and non-invasive therapeutic interventions.

Future Perspectives

Deep Learning has arguably obtained the most remarkable results in the setting of diagnosis and prognosis optimization in nuclear cardiology. Nevertheless, some problems still pose challenges that require new sets of ideas. One of them is unsupervised learning. Many scientists believe that conquering unsupervised learning will radically change the applications of AI. The large majority of data at our disposal is unlabeled. Understanding the general and detailed structure of this type of data, without constant supervision that lets us correct our parameters, would allow computers to obtain knowledge from every source of information beyond the constraints of human interpretation. An intermediate step is semi-supervised learning, in which labeled samples and a larger number of unlabeled samples are employed together to solve a task, e.g., classification. There are different strategies for solving this problem but, in general, the unlabeled data are used to identify patterns and the general structure of the data, and the labeled data are used to learn the differences between classes. Current approaches to improving the performance of semi-supervised learning include graph-based networks [27] and perturbation models [28].
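The idea of letting unlabeled data reveal structure while a few labels supply the classes can be illustrated with classic label propagation on a similarity graph. This is a simpler relative of the graph convolutional networks cited in [27], not that method itself; it assumes a symmetric similarity matrix, labels coded as 1, 0, or `None` (unlabeled), and a toy five-node chain.

```python
def label_propagation(weights, labels, iters=100):
    """Graph-based semi-supervised sketch: unlabeled nodes repeatedly take the
    weighted average of their neighbours' scores; labeled nodes stay clamped.

    weights: symmetric n x n similarity matrix; labels: 1, 0 or None (unlabeled).
    Returns a score in [0, 1] per node (probability-like membership of class 1).
    """
    n = len(labels)
    f = [float(l) if l is not None else 0.5 for l in labels]
    for _ in range(iters):
        new = f[:]
        for i in range(n):
            if labels[i] is None:
                total = sum(weights[i])
                if total > 0:
                    new[i] = sum(weights[i][j] * f[j] for j in range(n)) / total
        f = new
    return f

# Toy chain of five samples: only the two ends are labeled.
chain = [[1.0 if abs(i - j) == 1 else 0.0 for j in range(5)] for i in range(5)]
print([round(v, 3) for v in label_propagation(chain, [0, None, None, None, 1])])
# [0.0, 0.25, 0.5, 0.75, 1.0]
```

The unlabeled nodes receive scores determined by their position in the graph, so the structure of the unlabeled data shapes the final classification even though only two samples carry labels.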

Every day, a large number of researchers push the boundaries of AI, ML, and Deep Learning with constant incremental improvements. Another upcoming domain is hybrid imaging, in which parallel or sequential acquisitions of PET or SPECT and CT or MR are expected to be integrated once ML can account for the structural relation between the results of the techniques (for further considerations on this topic, see the review by Juarez-Orozco et al. [1]). From our point of view, improvements can be expected in all domains of nuclear cardiology in the coming years. However, a radically new idea that enables computer systems to understand the structure of unlabeled data is still needed to achieve a genuine, robust AI.

Conclusion

ML-based AI is bound to profoundly change the way in which nuclear cardiology techniques, namely SPECT and PET imaging, can aid in the diagnosis of myocardial ischemia and in the prediction of adverse and potentially fatal cardiovascular events. ML encompasses a wide variety of algorithms that are able to discover and utilize complex data patterns to optimize these tasks through training on large datasets. Within ML, Deep Learning is of special interest for nuclear cardiology as it offers the possibility to directly process cardiac images to identify and characterize myocardial ischemia as well as the risk of its related outcomes.

References

Papers of particular interest, published recently, have been highlighted as: • Of importance •• Of major importance

Juarez-Orozco LE, Martinez-Manzanera O, Nesterov SV, Kajander S, Knuuti J. The machine learning horizon in cardiac hybrid imaging. Eur J Hybrid Imaging. 2018;2(1):15.

Silver D, Schrittwieser J, Simonyan K, Antonoglou I, Huang A, Guez A, et al. Mastering the game of Go without human knowledge. Nature. 2017;550(7676):354–9.

•• Esteva A, Kuprel B, Novoa RA, Ko J, Swetter SM, Blau HM, et al. Dermatologist-level classification of skin cancer with deep neural networks. Nature. 2017;542(7639):115–8. This landmark study proved the applicability and potential performance of deep learning in medical imaging.

Gulshan V, Peng L, Coram M, Stumpe MC, Wu D, Narayanaswamy A, et al. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA. 2016;316(22):2402–10.

Zhao Q, Zhang L. ECG feature extraction and classification using wavelet transform and support vector machines. Int Conf Neural Networks Brain. 2005;2:1089–92.

Salem ABM, Revett K, El-Dahshan ESA. Machine learning in electrocardiogram diagnosis. Proc Int Multiconference Comput Sci Inf Technol IMCSIT ‘09. 2009;4:429–33.

Rajpurkar P, Hannun AY, Haghpanahi M, Bourn C, Ng AY. Cardiologist-level arrhythmia detection with convolutional neural networks. Comput Vis Pattern Recognit. 2017;Submitted.

Smola AJ, Schölkopf B. A tutorial on support vector regression. Stat Comput. 2004 Aug;14(3):199–222.

Friedman J, Hastie T, Tibshirani R. Additive logistic regression: a statistical view of boosting (with discussion and a rejoinder by the authors). Ann Stat. 2000 Apr;28(2):337–407.

Breiman L. Random forests. Mach Learn. 2001;45(1):5–32.

Ronneberger O, Fischer P, Brox T. U-Net: convolutional networks for biomedical image segmentation. In: Navab N, Hornegger J, Wells WM, Frangi AF, editors. Medical image computing and computer-assisted intervention -- MICCAI 2015. Cham: Springer International Publishing; 2015. p. 234–41.

Han C, Hayashi H, Rundo L, Araki R, Shimoda W, Muramatsu S, et al. GAN-based synthetic brain MR image generation. 2018 IEEE 15th Int Symp Biomed Imaging (ISBI 2018). 2018;734–8.

Chuquicusma MJM, Hussein S, Burt J, Bagci U. How to fool radiologists with generative adversarial networks? A visual Turing test for lung cancer diagnosis. CoRR. 2017;abs/1710.09762.

Baur C, Albarqouni S, Navab N. MelanoGANs: high resolution skin lesion synthesis with GANs. CoRR. 2018;abs/1804.04338.

Nie D, Trullo R, Lian J, Petitjean C, Ruan S, Wang Q, et al. Medical image synthesis with context-aware generative adversarial networks. In: Medical Image Computing and Computer Assisted Intervention - MICCAI 2017 - 20th International Conference, Proceedings. Springer Verlag; 2017. p. 417–25. (Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); vol. 10435 LNCS).

Nie D, Trullo R, Lian J, Wang L, Petitjean C, Ruan S, et al. Medical image synthesis with deep convolutional adversarial networks. IEEE Trans Biomed Eng. IEEE Computer Society; 2018;

Wolterink JM, Leiner T, Viergever MA, Išgum I. Generative adversarial networks for noise reduction in low-dose CT. IEEE Trans Med Imaging. 2017;36(12):2536–45.

Armanious K, Yang C, Fischer M, Thomas K, Nikolaou K. MedGAN. 2015;14(8):1–17.

Arsanjani R, Xu Y, Dey D, Fish M, Dorbala S, Hayes S, et al. Improved accuracy of myocardial perfusion SPECT for the detection of coronary artery disease using a support vector machine algorithm. J Nucl Med. 2013;54(4):549–55.

Arsanjani R, Xu Y, Dey D, Vahistha V, Shalev A, Nakanishi R, et al. Improved accuracy of myocardial perfusion SPECT for detection of coronary artery disease by machine learning in a large population. J Nucl Cardiol. 2013;20(4):553–62.

• Nakajima K, Kudo T, Nakata T, Kiso K, Kasai T, Taniguchi Y, et al. Diagnostic accuracy of an artificial neural network compared with statistical quantitation of myocardial perfusion images: a Japanese multicenter study. Eur J Nucl Med Mol Imaging. 2017;44(13):2280–9. This study documented the first modern implementation of artificial neural networks directly in SPECT polar maps.

Betancur J, Commandeur F, Motlagh M, Sharir T, Einstein AJ, Bokhari S, et al. Deep learning for prediction of obstructive disease from fast myocardial perfusion SPECT. A multicenter study. JACC Cardiovasc Imaging. 2018:1–10.

Arsanjani R, Dey D, Khachatryan T, Shalev A, Hayes SW, Fish M, et al. Prediction of revascularization after myocardial perfusion SPECT by machine learning in a large population. J Nucl Cardiol. 2015;22(5):877–84.

• Betancur J, Otaki Y, Motwani M, Fish MB, Lemley M, Dey D, et al. Prognostic value of combined clinical and myocardial perfusion imaging data using machine learning. JACC Cardiovasc Imaging. 2018;11(7):1000–9. This study combined clinical and SPECT imaging data to demonstrate high predictive accuracy for 3-year risk of MACE. It was superior to existing visual or automated perfusion measures.

Haro Alonso D, Wernick MN, Yang Y, Germano G, Berman DS, Slomka P. Prediction of cardiac death after adenosine myocardial perfusion SPECT based on machine learning. J Nucl Cardiol. 2018:1–9.

Juarez-Orozco LE, Knol RJJ, Sanchez-Catasus CA, Martinez-Manzanera O, van der Zant FM, Knuuti J. Machine learning in the integration of simple variables for identifying patients with myocardial ischemia. J Nucl Cardiol. 2018 May 22.

Kipf TN, Welling M. Semi-supervised classification with graph convolutional networks. CoRR. 2016;abs/1609.02907.

Rasmus A, Valpola H, Honkala M, Berglund M, Raiko T. Semi-supervised learning with ladder networks. CoRR. 2015;abs/1507.02672.

Funding

Open access funding provided by University of Turku (UTU) including Turku University Central Hospital.


Ethics declarations

Conflict of Interest

Luis Eduardo Juarez-Orozco, Octavio Martinez-Manzanera, and Andrea Ennio Storti declare that they have no conflict of interest.

Juhani Knuuti reports personal fees from AstraZeneca.

Human and Animal Rights and Informed Consent

This article does not contain any studies with human or animal subjects performed by any of the authors.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This article is part of the Topical Collection on Cardiac Nuclear Imaging

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Juarez-Orozco, L.E., Martinez-Manzanera, O., Storti, A.E. et al. Machine Learning in the Evaluation of Myocardial Ischemia Through Nuclear Cardiology. Curr Cardiovasc Imaging Rep 12, 5 (2019). https://doi.org/10.1007/s12410-019-9480-x

DOI: https://doi.org/10.1007/s12410-019-9480-x