Abstract

A stochastic epidemic model with infectivity in the incubation period and homestead–isolation on the susceptible is developed to reveal the effect of white noise on the long-time behavior of the disease. Conditions for extinction and for strong persistence in the mean of the disease are obtained. We also derive sufficient criteria for the existence of a unique ergodic stationary distribution of the model. Our theoretical results show that suitably large noise can make the disease extinct, while relatively small noise favors persistence of the disease and the existence of a stationary distribution.


1 Introduction

Over the last several decades, infectious disease models have gained increasing recognition as a powerful tool for revealing how diseases spread. A large body of literature uses deterministic and stochastic models to investigate the transmission of diseases; see [1,2,3,4,5,6,7,8,9,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27] and the references therein. One of the classic epidemic models is the SEIR model, which subdivides a homogeneous host population into susceptible, exposed, infectious and recovered individuals, with population sizes denoted by S, E, I and R, respectively. Anderson and May [2] first used a system of ordinary differential equations (ODEs) to describe a classical SEIR epidemic model in 1991. Later, ODEs, IDEs, PDEs and SDEs were heavily employed to investigate SEIR epidemic models, and many good results were obtained; for details see [3, 22,23,24,25,26,27,28,29,30]. In particular, Zhao et al. [30] established and studied an SEIR model with non-communicability in the incubation period. For some diseases, however, such as COVID-19, the incubation period is infectious [31], and the control of the COVID-19 outbreak in China shows that both physical protection [32,33,34] and social isolation [35,36,37] play important roles in controlling the epidemic in the present absence of vaccines for the virus. Using the idea of infectivity in the incubation period, Jiao et al. [38] proposed a deterministic SEIR epidemic model with homestead–isolation on the susceptible

where \(\delta >\theta _2(\gamma +\alpha +\mu )\), and the biological meanings of the parameters of model (1.1) are shown in Table 1 below.

Since the variable R in the fourth equation is not involved in the first three equations of model (1.1), a reasonable idea is to consider the following model

It follows from [38] that the basic reproduction number is \(R_0=\frac{\Lambda \beta (1-\theta _1)[\delta +\theta _2(\gamma +\alpha +\mu )]}{\mu (\gamma +\delta +\mu )(\delta +\mu )}\), and the threshold dynamics results of model (1.2) are summarized as follows:

\(\bullet \) The positive equilibrium (denoted by \(P^*\)) is globally asymptotically stable if \(R_0>1\);

\(\bullet \) The disease-free equilibrium (denoted by \(P^0\)) is globally asymptotically stable provided that \(R_0<1\).
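The threshold above can be evaluated numerically. The following is a minimal sketch that transcribes the expression for \(R_0\) exactly as printed above and reports which equilibrium the quoted result declares globally asymptotically stable. The function names and the parameter values in the usage lines are hypothetical placeholders, not the values of Table 1 or Table 2.

```python
def basic_reproduction_number(Lambda, beta, theta1, theta2, delta, gamma, alpha, mu):
    """R_0 transcribed term by term from the expression quoted from [38]."""
    numerator = Lambda * beta * (1.0 - theta1) * (delta + theta2 * (gamma + alpha + mu))
    denominator = mu * (gamma + delta + mu) * (delta + mu)
    return numerator / denominator


def deterministic_regime(r0):
    """Map R_0 to the threshold result stated for model (1.2)."""
    if r0 > 1.0:
        return "positive equilibrium P* globally asymptotically stable"
    if r0 < 1.0:
        return "disease-free equilibrium P0 globally asymptotically stable"
    return "critical case R_0 = 1 (not covered by the stated result)"


# Hypothetical parameter values, chosen only to exercise the formula.
r0 = basic_reproduction_number(Lambda=1.0, beta=1.0, theta1=0.5, theta2=0.5,
                               delta=1.0, gamma=1.0, alpha=0.0, mu=1.0)
print(r0, deterministic_regime(r0))
```

Since \(R_0\) is proportional to \(1-\theta _1\), raising the homestead–isolation rate \(\theta _1\) lowers \(R_0\) linearly in this expression.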

Admittedly, deterministic models have significant advantages in simplifying complex systems and facilitating theoretical analysis. They nevertheless have limitations in describing population dynamics, especially when external interference is relatively large and the population decreases dramatically because of large-scale natural disasters such as hurricanes, tsunamis, volcanic eruptions and earthquakes. In such situations the law of large numbers no longer applies, and the dynamical behavior can differ radically from that of the corresponding deterministic models [39,40,41]. For example, Arnold et al. [39] found that real environmental white noise could destabilize the stable Lotka-Volterra system, to the point that a stationary solution no longer exists. Zhou et al. [40] revealed that random effects may lead the disease to extinction in scenarios where the deterministic model exhibits persistence. Fluctuations in a randomly varying environment, such as humidity, temperature, food supply and season, constantly affect biological population densities [42], which reflects the objectivity and universality of stochasticity. Accordingly, some researchers have argued that stochastic differential equations (SDEs) should be used to model epidemic systems because they are inevitably affected by environmental noise. Their stochastic dynamics have therefore been intensively studied, for example, persistence and extinction [12, 16,17,18,19,20, 23], asymptotic stability [24, 25], positive recurrence [20, 23], stationary distribution [6, 16,17,18,19, 43, 44], periodic solutions [43, 44], optimal vaccination strategy [14] and so on.

In the last few years, numerous works [23, 45,46,47,48] on stochastic SEIR epidemic models have appeared that focus on the effects of stochastic perturbations on transmission dynamics. In particular, Yang and Mao [23] observed that the dynamical behavior of perturbed SEIR models can be considerably different from that of the deterministic counterpart; namely, large perturbations can accelerate the extinction of epidemics regardless of the magnitude of the basic reproduction number \(R_0\). Applying the Lyapunov method, Zhang and Wang [45] obtained conditions for the stochastic stability, persistence and extinction of an SEIR model driven simultaneously by white and Lévy noises (termed jumps), which can capture the wide spread of infectious diseases caused by medical negligence. More recently, Boukanjime et al. [46] used a stochastic SEIR model to describe the COVID-19 transmission dynamics affected by a mixture of white and telegraph noises, and investigated the extinction and persistence in the mean of the COVID-19 epidemic in Indian states in terms of the stochastic threshold \(R_0\).

It should also be mentioned that several approaches for incorporating stochastic effects into epidemic models have been proposed and extensively studied. One well-known approach is to construct discrete-time or continuous-time Markov chain models [49,50,51], which are conducive to estimating parameters and exploring the implications of the models by statistical methods and simulation (see, e.g., [50, 51]). Artalejo and his coauthors [50] formulated a stochastic SEIR model described by a continuous-time Markov chain and developed efficient computational procedures for the distribution of the duration of an outbreak. Another frequently used approach is to incorporate noise (such as white, colored, Lévy or telegraph noise, or couplings between them) into certain deterministic epidemic models [20, 43,44,45,46]. This approach mainly involves the following forms of perturbation: the noise may perturb model parameters [23, 52,53,54,55,56], or be proportional to the distance between the state variables and the endemic equilibria of the deterministic models [24, 57, 58], or be proportional to the state variables measuring population densities [19, 25, 43, 47, 59]. Its advantage is that the long-time stochastic dynamics of these models can be understood well from the perspective of theoretical analysis. For instance, Liu et al. [25] analyzed the asymptotic behavior of the equilibria of a stochastic delayed nonlinear SEIR epidemic model via Lyapunov functions.

This paper investigates the stochastic dynamics of model (1.2) under random perturbations by thorough mathematical analysis. Following [25, 47, 59], we suppose that the random perturbations are white noises proportional to the variables S, E and I in model (1.2), and obtain the stochastic model

where \({\dot{B}}_i(t)\) are the white noises which are regarded as the derivative of mutually independent standard Brownian motions \(B_i(t)\ (i=1,2,3)\). \(\sigma _i^2>0\ (i=1,2,3)\) are the intensities of the noises and \(B_i(t)\) are defined on the complete probability space \((\Omega ,{\mathcal {F}},\{{\mathcal {F}}_t\}_{t\ge 0},{\mathbb {P}})\). Also, we assign \({\mathbb {R}}_+^n=\{x\in {\mathbb {R}}^n: x_j>0,1\le j\le n\}\).
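A sample path of such a model can be approximated with the Euler–Maruyama scheme. The sketch below is illustrative only. It ASSUMES the drift \(\Lambda -\beta (1-\theta _1)S(I+\theta _2E)-\mu S\), \(\beta (1-\theta _1)S(I+\theta _2E)-(\delta +\mu )E\), \(\delta E-(\gamma +\alpha +\mu )I\) for S, E and I, which is a guess based on the description of [38] and is not reproduced from the displayed model. The diffusion terms \(\sigma _1S\,dB_1\), \(\sigma _2E\,dB_2\), \(\sigma _3I\,dB_3\) follow the proportional-noise description above. The function name, the dictionary keys and all numerical values are hypothetical.

```python
import math
import random


def simulate_seir_sde(p, sigma, x0, t_end=100.0, dt=0.01, seed=0):
    """Euler-Maruyama path for an ASSUMED form of model (1.3).

    p     : dict with keys Lambda, beta, theta1, theta2, delta, gamma, alpha, mu
    sigma : (sigma1, sigma2, sigma3), noise coefficients for S, E, I
    x0    : (S(0), E(0), I(0))
    Returns a list of (S, E, I) tuples at times 0, dt, 2*dt, ...
    """
    rng = random.Random(seed)
    steps = int(round(t_end / dt))
    sqrt_dt = math.sqrt(dt)
    S, E, I = x0
    path = [(S, E, I)]
    for _ in range(steps):
        # Assumed incidence term: exposed individuals infect at relative rate theta2.
        incidence = p["beta"] * (1.0 - p["theta1"]) * S * (I + p["theta2"] * E)
        drift_S = p["Lambda"] - incidence - p["mu"] * S
        drift_E = incidence - (p["delta"] + p["mu"]) * E
        drift_I = p["delta"] * E - (p["gamma"] + p["alpha"] + p["mu"]) * I
        # Independent Brownian increments with variance dt.
        dB1, dB2, dB3 = (rng.gauss(0.0, sqrt_dt) for _ in range(3))
        S_new = S + drift_S * dt + sigma[0] * S * dB1
        E_new = E + drift_E * dt + sigma[1] * E * dB2
        I_new = I + drift_I * dt + sigma[2] * I * dB3
        # The discretisation can overshoot below zero; clip as a crude guard.
        S, E, I = max(S_new, 0.0), max(E_new, 0.0), max(I_new, 0.0)
        path.append((S, E, I))
    return path


# Hypothetical parameters, NOT the values of Table 2.
demo_params = {"Lambda": 1.0, "beta": 0.01, "theta1": 0.5, "theta2": 0.5,
               "delta": 0.2, "gamma": 0.1, "alpha": 0.05, "mu": 0.1}
demo_path = simulate_seir_sde(demo_params, sigma=(0.1, 0.08, 0.05),
                              x0=(20.0, 15.0, 10.0), t_end=10.0)
print(demo_path[-1])
```

The clipping step is a numerical convenience and is not part of the model. Setting all three noise coefficients to zero reduces the scheme to the explicit Euler method for the assumed deterministic drift.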

The main purpose of this work is to investigate the effects of environmental noise on the stochastic dynamics of model (1.3) and to reveal whether the noise can inhibit the disease, by comparison with the global dynamical results of model (1.2) established in [38]. The rest of the paper is organized as follows. Section 2 focuses on the extinction and strong persistence in the mean of the disease. Section 3 discusses the existence of a unique ergodic stationary distribution. Numerical simulations are conducted in Sect. 4 to substantiate our analytical results. A brief biological discussion is provided in the final section.

2 Survival results of the disease

This section focuses on the survival results of the disease. We first give the following two lemmas (Lemmas 2.1 and 2.2).

Lemma 2.1

For initial value \((S(0),E(0),I(0))\in {\mathbb {R}}_+^3\), there is a unique solution (S(t), E(t), I(t)) of model (1.3) on \(t\ge 0\) and the solution remains in \({\mathbb {R}}_+^3\) with probability one.

Assign \(W(S,E,I)=(S-a-a\ln \frac{S}{a})+(E-1-\ln E)+\frac{a\beta (1-\theta _1)}{\mu +\alpha +\gamma }(I-1-\ln I)\), where \(a=(\delta +\mu )/[\beta (1-\theta _1)(\theta _2+\frac{\delta }{\mu +\gamma +\alpha })]\). The rest of the proof is similar to that of Theorem 2.1 in [25], and we omit it here.

The following Lemma 2.2 is useful for the proofs of Theorems 2.1–2.2.

Lemma 2.2

Let (S(t), E(t), I(t)) be the solution of (1.3). Then \(\limsup _{t\rightarrow +\infty }(S(t)+E(t)+I(t))<+\infty \), \(\lim _{t\rightarrow +\infty }S(t)/t=0\), \(\lim _{t\rightarrow +\infty }E(t)/t=0\), \(\lim _{t\rightarrow +\infty }I(t)/t=0\), \(\lim _{t\rightarrow +\infty }\ln S(t)/t=0\), \(\lim _{t\rightarrow +\infty }\ln E(t)/t=0\), \(\lim _{t\rightarrow +\infty }\ln I(t)/t=0\) a.s., and \(\lim _{t\rightarrow +\infty }\int _0^t S(r)dB_1(r)/t=0\), \(\lim _{t\rightarrow +\infty }\int _0^t E(r)dB_2(r)/t=0\), \(\lim _{t\rightarrow +\infty }\int _0^t I(r)dB_3(r)/t=0\) a.s.

Proof

It follows from (1.3) that

and we obtain, by solving the above equation (2.1), that

Let

Clearly, M(t) is a continuous local martingale with \(M(0)=0\).

Define

where

It follows from (2.2) that \(S(t)+E(t)+I(t)\le X(t)\) for all \(t>0\). Obviously, H(t) and Y(t) are continuous adapted increasing processes on \(t\ge 0\) with \(H(0)=Y(0)=0\). Applying Theorem 3.9 in [60], we obtain \(\lim _{t\rightarrow +\infty }X(t)<+\infty \). Hence

Thus, one easily derives

Assign

It follows from the quadratic variations that

By the strong law of large numbers for martingales (see [60]) and (2.3), one has

Similarly, we have

The proof of the desired conclusions is finished. \(\square \)

Next, we consider the extinction of the disease.

Theorem 2.1

If \({\hat{R}}_{0}=\frac{2\Lambda \beta (1+\theta _2)(1-\theta _1)(\delta +\mu )^2}{\mu [(\delta +\mu )^{2} (\gamma +\alpha +\mu +\frac{1}{2}\sigma _3^2)\wedge \frac{1}{2}\delta ^{2}\sigma _2^2]}<1\), then the disease I(t) will be extinct.
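The extinction condition can be checked numerically for a given parameter set. The sketch below transcribes \({\hat{R}}_0\) from the statement of Theorem 2.1, with \(\wedge \) read as the minimum of its two arguments. The function name and the test values are hypothetical and are not taken from Table 2.

```python
def extinction_threshold(Lambda, beta, theta1, theta2, delta, gamma, alpha, mu,
                         sigma2, sigma3):
    """R_hat_0 as written in Theorem 2.1; a value below 1 is the extinction condition."""
    numerator = 2.0 * Lambda * beta * (1.0 + theta2) * (1.0 - theta1) * (delta + mu) ** 2
    # The wedge in the theorem denotes the minimum of these two terms.
    term_a = (delta + mu) ** 2 * (gamma + alpha + mu + 0.5 * sigma3 ** 2)
    term_b = 0.5 * delta ** 2 * sigma2 ** 2
    return numerator / (mu * min(term_a, term_b))


# Hypothetical values chosen so that the arithmetic is easy to follow by hand.
print(extinction_threshold(Lambda=1.0, beta=1.0, theta1=0.5, theta2=0.0,
                           delta=1.0, gamma=0.0, alpha=0.0, mu=1.0,
                           sigma2=2.0, sigma3=0.0))
```

Two features are visible directly from the expression: \(\sigma _1\) does not enter \({\hat{R}}_0\), and because of the minimum, enlarging only one of \(\sigma _2\), \(\sigma _3\) stops reducing \({\hat{R}}_0\) once the other term becomes the smaller one.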

Proof

Integrating both sides of (2.1) over the interval [0, t] and dividing by t lead to

from which we conclude that

We can know from Lemma 2.2 that

Let \(A(t)=\delta E(t)+(\delta +\mu )I(t)\). Applying Itô’s formula yields

Integrating both sides of (2.5) over the interval [0, t], dividing by t, and combining with (2.4) and Lemma 2.2, we have

which implies that

Thus, the disease I(t) will be extinct. \(\square \)

Assumption A

\(R_0^s =\frac{\beta (1-\theta _1)\Lambda \delta }{\left( \frac{1}{2}\sigma _1^{2}+\mu \right) \left( \frac{1}{2}\sigma _3^{2}+\mu +\gamma +\alpha \right) \left( \frac{1}{2}\sigma _2^{2} +\mu +\delta \right) }>1\).
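Assumption A can likewise be tested for concrete parameters. The following sketch evaluates \(R_0^s\) exactly as defined above. The function name and the sample values are hypothetical.

```python
def stochastic_threshold(Lambda, beta, theta1, delta, gamma, alpha, mu,
                         sigma1, sigma2, sigma3):
    """R_0^s from Assumption A; the assumption holds when the value exceeds 1."""
    numerator = beta * (1.0 - theta1) * Lambda * delta
    denominator = ((0.5 * sigma1 ** 2 + mu)
                   * (0.5 * sigma3 ** 2 + mu + gamma + alpha)
                   * (0.5 * sigma2 ** 2 + mu + delta))
    return numerator / denominator


# Hypothetical values: without noise the ratio is 2, and noise can only lower it.
no_noise = stochastic_threshold(8.0, 1.0, 0.5, 1.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0)
with_noise = stochastic_threshold(8.0, 1.0, 0.5, 1.0, 0.0, 0.0, 1.0, 1.0, 1.0, 1.0)
print(no_noise, with_noise)
```

Each noise coefficient appears only in the denominator, so \(R_0^s\) decreases as any \(\sigma _i\) grows. The parameter \(\theta _2\) does not appear in this expression.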

Finally, we give the persistence of the disease.

Theorem 2.2

If Assumption A holds, then the disease I(t) will be strongly persistent in the mean, and

Proof

Integrating on both sides of the last equation of (1.3) over the interval [0, t] and dividing by t lead to

We compute that

where

It follows from Lemma 2.2 that we have

Assign

where

Applying Itô’s formula to U, one obtains

where

Thus

Integrating (2.8) over the interval [0, t] and then dividing by t on both sides, we have

Taking the superior limit on both sides of (2.9) and combining with (2.6), (2.7) and Lemma 2.2, we have

The proof is complete. \(\square \)

3 Stationary distribution

In this section, we further study the stationary distribution for model (1.3) by using the theory of Hasminskii [61]. Let X(t) be a regular time-homogeneous Markov process in \({\mathbb {R}}^d\) described by

and the corresponding diffusion matrix is defined as follows

Lemma 3.1

[61] The Markov process X(t) has a unique ergodic stationary distribution \(\pi (\cdot )\) if there exists a bounded domain \(U\subset {\mathbb {R}}^d\) with regular boundary \(\Gamma \) such that

(i) there exists a positive number M such that \(\sum _{i,j=1}^{d}a_{ij}(x)\xi _i\xi _j\ge M|\xi |^2\) for all \(x\in U\), \(\xi \in {\mathbb {R}}^d \);

(ii) there exists a nonnegative \(C^2\)-function V such that LV is negative on \({\mathbb {R}}^d\setminus U\).

Moreover, if \(f(\cdot )\) is a function integrable with respect to the measure \(\pi \), then

$$\begin{aligned} {\mathbb {P}}_x\left\{ \lim _{T\rightarrow +\infty }\frac{1}{T}\int _0^T f(X(t))dt=\int _{{\mathbb {R}}^d} f(x)\pi (dx)\right\} =1 \end{aligned}$$

for all \(x\in {\mathbb {R}}^d\).

Theorem 3.1

If Assumption A holds, then model (1.3) has a unique ergodic stationary distribution \(\pi (\cdot )\).

Proof

We only need to verify the assumptions \((i){-}(ii)\) in Lemma 3.1. We first consider the diffusion matrix of model (1.3)

Assign \(M=\min _{(S,E,I)\in {\overline{U}}\subset {\mathbb {R}}_+^3}\{\sigma _1^2S^2,\sigma _2^2E^2,\sigma _3^2I^2\}\), then

which implies that (i) in Lemma 3.1 is satisfied.
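For orientation, the ellipticity estimate can be written out under the assumption that the diffusion matrix of model (1.3) is diagonal, as the proportional-noise construction with independent Brownian motions suggests. The display below is a reconstruction sketch under that assumption, using M as defined above:

$$\begin{aligned} A(S,E,I)&=\mathrm {diag}\left( \sigma _1^2S^2,\ \sigma _2^2E^2,\ \sigma _3^2I^2\right) ,\\ \sum _{i,j=1}^{3}a_{ij}\xi _i\xi _j&=\sigma _1^2S^2\xi _1^2+\sigma _2^2E^2\xi _2^2+\sigma _3^2I^2\xi _3^2\ge M|\xi |^2,\quad (S,E,I)\in {\overline{U}},\ \xi \in {\mathbb {R}}^3. \end{aligned}$$

The constant M is positive because \({\overline{U}}\) is a compact subset of \({\mathbb {R}}_+^3\), so each of S, E and I is bounded away from zero on it.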

Now, we continue to verify (ii). Consider a \(C^2\)-function \({\tilde{V}}: {\mathbb {R}}_+^3\rightarrow {\mathbb {R}}\) with

where \(a_1\), \(a_2\) are positive constants to be chosen later, \(0<q<1\) is a constant satisfying \(\kappa :=\mu -\frac{q}{2}(\sigma _1^2\vee \sigma _2^2\vee \sigma _3^2)>0\), and the constant \({\mathcal {M}}>0\) is sufficiently large such that \(g_1^u+g_2^u-\eta {\mathcal {M}}\le -2\), where \(\eta :=(\mu +\frac{1}{2}\sigma _1^2)(R_0^s-1)\),
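The requirement \(\kappa >0\) is equivalent to \(q<2\mu /(\sigma _1^2\vee \sigma _2^2\vee \sigma _3^2)\), so together with \(0<q<1\) it restricts q to an explicit interval. The sketch below computes \(\kappa \) and the upper end of that interval. The function names and the sample values are hypothetical.

```python
def kappa(mu, q, sigma1, sigma2, sigma3):
    """kappa = mu - (q / 2) * max(sigma_i^2), as defined in the text."""
    return mu - 0.5 * q * max(sigma1 ** 2, sigma2 ** 2, sigma3 ** 2)


def q_upper_bound(mu, sigma1, sigma2, sigma3):
    """Supremum of admissible q: q must lie strictly below this value and above 0."""
    largest_variance = max(sigma1 ** 2, sigma2 ** 2, sigma3 ** 2)
    return min(1.0, 2.0 * mu / largest_variance)


# Hypothetical values: mu = 0.5 and largest sigma_i^2 = 4 give the bound 0.25.
bound = q_upper_bound(0.5, 1.0, 2.0, 1.0)
print(bound, kappa(0.5, 0.5 * bound, 1.0, 2.0, 1.0))
```

Because \(\sigma _i^2>0\) and \(\mu >0\), the bound is always positive, so an admissible q exists for every noise level. Larger noise only forces q to be smaller.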

In addition, \({\tilde{V}}(S,E,I)\) is continuous and tends to \(+\infty \) as (S, E, I) approaches the boundary of \({\mathbb {R}}_+^3\). Therefore, it is bounded below and attains its minimum at a point \((S_0,E_0,I_0)\) in the interior of \({\mathbb {R}}_+^3\). Let us consider a nonnegative \(C^2\)-function \(V:{\mathbb {R}}_+^3\rightarrow {\mathbb {R}}_+\) with

where \((S,E,I)\in (\frac{1}{k},k)\times (\frac{1}{k},k)\times (\frac{1}{k},k)\) and \(k>1\) is a sufficiently large integer, \(V_1=-\ln S-a_1\ln E-a_2\ln I,V_2=-\frac{\beta (1-\theta _1)\theta _2}{\delta }I,V_3=\frac{1}{q+1}(S+E+I)^{q+1},V_4=-\ln S-\ln E-{\tilde{V}}(S_0,E_0,I_0)\), and

Applying Itô’s formula, one has

Similarly,

and

where

In addition, we can obtain

Combining with (3.1)–(3.4), we have

where

Assign a bounded closed set as follows

where \(\varepsilon >0\) is a suitably small constant satisfying the following inequalities

where

and we will determine the two positive constants \(C_1\), \(C_2\) later. For convenience, we first divide \({\mathbb {R}}_+^3\setminus U\) into six domains with the forms

Clearly, \({\mathbb {R}}_+^3\setminus U=U_1\cup U_2\cup U_3\cup U_4\cup U_5\cup U_6\). By verifying the above six cases, we will prove that \(LV(S,E,I)\le -1\) on \({\mathbb {R}}_+^3\setminus U\).

Case 1 When \((S,E,I)\in U_1\), then (3.5) and (3.6) imply that \(LV\le F+g_2^u+g_3^u-\frac{\Lambda }{\varepsilon }\le -1\).

Case 2 When \((S,E,I)\in U_2\), it follows from (3.5) and (3.7) that

Case 3 When \((S,E,I)\in U_3\), we obtain from (3.5) and (3.8) that \(LV\le g_1^u+g_2^u+g_3^u-\beta (1-\theta _1)\frac{1}{\varepsilon }\le -1\).

Case 4 When \((S,E,I)\in U_4\), (3.5) and (3.9) imply that \(LV\le F+g_2^u+g_3^u-\frac{\kappa }{2}\frac{1}{\varepsilon ^{q+1}}\le -1\).

Case 5 When \((S,E,I)\in U_5\), it follows from (3.5) and (3.10) that \(LV\le g_1^u+g_2^u+C_1-\frac{\kappa }{4}\frac{1}{\varepsilon ^{q+1}}\le -1\), where

Case 6 When \((S,E,I)\in U_6\), one obtains from (3.5) and (3.11) that \(LV\le g_1^u+g_3^u+C_2-\frac{\kappa }{4}\frac{1}{\varepsilon ^{3(q+1)}}\le -1\), where \(C_2=\sup _{E\in (0,+\infty )}\{-\frac{\kappa }{4}E^{q+1}+\beta (1-\theta _1)\theta _2E\}\).

From the above discussion, we obtain that \(LV\le -1\) on \({\mathbb {R}}_+^3\setminus U\), which implies that (ii) in Lemma 3.1 also holds. This completes the proof. \(\square \)

4 Numerical simulations

In this section, our analytical results will be verified by numerical simulations. Let us choose initial value \((S(0),E(0),I(0))\!=\!(20,15,10)\), and the parameter values are kept the same as in [38], see Table 2.

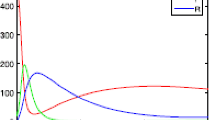

Fig. 1 (a) \(\theta _1=0.7\), \(R_0=1.7619>1\): the positive equilibrium \(P^*\) of deterministic model (1.2) is globally asymptotically stable. (b) \(\theta _1=0.9\), \(R_0=0.5873<1\): its disease-free equilibrium \(P^0\) is globally asymptotically stable

\(\bullet \) Jiao et al. [38] discussed the effect of \(\theta _1\) (see Fig. 1a and b): the disease I(t) of deterministic model (1.2) will die out if \(\theta _1\) is large, whereas it will be persistent if \(\theta _1\) is relatively small. This fact shows that the strategy of homestead–isolation on the susceptible is very important in epidemics of infectious diseases.

\(\bullet \) We choose \(\sigma _1=0.4\), \(\sigma _2=3.5\), \(\sigma _3=1.3\) and the same \(\theta _1=0.9\) as in Fig. 1b, so that \({\hat{R}}_0\approx 0.957823<1\). It follows from Theorem 2.1 that the disease goes to extinction (see Fig. 2). Compared with Fig. 1b, we can see that the noise accelerates the extinction of the disease, although the disease also tends to extinction in deterministic model (1.2).

Fig. 2 (a) The disease of stochastic model (1.3) is extinct. (b) Frequency histogram at time 100

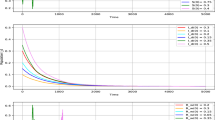

\(\bullet \) To illustrate the threshold of the disease and the effect of the environmental white noise, and to compare with Fig. 1a, we select the same \(\theta _1=0.7\) as in Fig. 1a and smaller noises with \(\sigma _1=0.1\), \(\sigma _2=0.08\), \(\sigma _3=0.05\). For the stochastic model (1.3), a calculation shows that \(R_0^s\approx 1.3952064>1\), so the conditions of Theorems 2.2 and 3.1 are satisfied. As observed in Fig. 3, the disease of model (1.3) is strongly persistent in the mean, and moreover model (1.3) has an ergodic stationary distribution, see Fig. 4. Recalling Fig. 1a, we know that the disease will persist if \(\theta _1=0.7\), and Figs. 3 and 4 show that, although it is affected by environmental noise, the disease will still persist if the noise intensity is relatively low.

Fig. 3 (a) The disease of stochastic model (1.3) will be strongly persistent in the mean. (b) Frequency histogram at time 100
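The two deterministic values reported for Fig. 1 can be cross-checked without knowing the remaining parameters of Table 2. Since the expression for \(R_0\) is proportional to \(1-\theta _1\) and only \(\theta _1\) changes between the two panels, the ratio of the two values should equal \((1-0.7)/(1-0.9)=3\). The variable names below are illustrative.

```python
# Values of R_0 reported for theta1 = 0.7 and theta1 = 0.9 (Fig. 1a and 1b).
r0_theta_07 = 1.7619
r0_theta_09 = 0.5873

# R_0 is proportional to (1 - theta1), so the ratio depends on theta1 only.
expected_ratio = (1.0 - 0.7) / (1.0 - 0.9)
reported_ratio = r0_theta_07 / r0_theta_09

print(round(expected_ratio, 4), round(reported_ratio, 4))
```

The two ratios agree to the four decimal places reported, which is consistent with the stated linear dependence of \(R_0\) on \(1-\theta _1\).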

5 Discussions

In this paper, based on the deterministic SEIR epidemic model with infectivity in the incubation period and homestead–isolation on the susceptible proposed by Jiao et al. [38], we incorporate white noise and establish a stochastic version of the model. We first discuss the extinction and strong persistence in the mean of the disease, and then investigate the stationary distribution by using Hasminskii's method and a Lyapunov function. Recalling Theorems 2.1, 2.2 and 3.1, we draw the following biological conclusions:

\(\bullet \) Theorem 2.1 shows that the disease will be extinct when \({\hat{R}}_{0}<1\). This implies that if the noise intensities \(\sigma _i^2\ (i=1,2,3)\) are suitably large and the homestead–isolation rate \(\theta _1\) is high enough, while the infective rate of the exposed in the incubation period \(\theta _2\) is relatively small, then the disease I(t) is extinct. That is, increasing the noise intensity and the homestead–isolation rate favors the extinction of the disease, while increasing the infective rate of the exposed in the incubation period works against it.

\(\bullet \) It follows from \(R_0^s>1\) (see Theorem 2.2) that if the noise intensities \(\sigma _i^2\ (i=1,2,3)\) are small and the homestead–isolation rate \(\theta _1\) is inadequate, then the disease I(t) will be strongly persistent in the mean.

\(\bullet \) When \(R_0^s>1\), Theorem 3.1 shows that model (1.3) has a unique stationary distribution \(\pi (\cdot )\), which is ergodic. This result indicates that decreasing the noise intensities \(\sigma _i^2\ (i=1,2,3)\) and the homestead–isolation rate \(\theta _1\) may result in a high prevalence level of the disease, and hence governments should strictly implement isolation measures and make every effort to curb the propagation of the disease.

Finally, it should be pointed out that, in order to compare with the global dynamical results of model (1.2) established in [38], this work is concerned only with the stochastic dynamics of the variables S, E, I in model (1.3) under the three noises \({\dot{B}}_i(t)\), \(i=1,2,3\). It would also be worthwhile to introduce a noise into the variable R in the fourth equation of model (1.1) and investigate the resulting four-dimensional stochastic model. In addition, we would like to develop more complicated models by incorporating other forms of stochastic perturbation, e.g., perturbed parameters [23, 52,53,54,55,56], Lévy jumps [14, 45] or regime switching [62]. We leave these interesting and challenging issues for future consideration.

References

Kermack, W.O., Mckendrick, A.G.: Combined effects of prevention and quarantine on a breakout in SIR model. Proc. R. Soc. Edin. A 115, 700–721 (1927)

Anderson, R.M., May, R.M.: Infectious Diseases of Humans: Dynamics and Control. Oxford University Press, New York (1991)

Cooke, K., Van Den Driessche, P.: Analysis of an SEIRS epidemic model with two delays. J. Math. Biol. 35, 240–260 (1996)

Ruan, S.G., Wang, W.D.: Dynamical behavior of an epidemic model with a nonlinear incidence rate. J. Differ. Equ. 188, 135–163 (2003)

Wang, W.D.: Epidemic models with nonlinear infection forces. Math. Biosci. Eng. 3, 267–279 (2006)

Cai, Y.L., Kang, Y., Banerjee, M., Wang, W.M.: A stochastic SIRS epidemic model with infectious force under intervention strategies. J. Differ. Equ. 259, 7463–7502 (2015)

Wang, J.L., Yang, J., Kuniya, T.: Dynamics of a PDE viral infection model incorporating cell-to-cell transmission. J. Math. Anal. Appl. 444, 1542–1564 (2016)

Wang, L.W., Liu, Z.J., Zhang, X.A.: Global dynamics for an age-structured epidemic model with media impact and incomplete vaccination. Nonlinear Anal. Real World Appl. 32, 136–158 (2016)

Li, S.P., Jin, Z.: Impacts of cluster on network topology structure and epidemic spreading. Discrete Contin. Dyn. Syst. Ser. B 22, 3749–3770 (2017)

Liu, Z.J., Hu, J., Wang, L.W.: Modelling and analysis of global resurgence of mumps: a multi-group epidemic model with asymptomatic infection, general vaccinated and exposed distributions. Nonlinear Anal. Real World Appl. 37, 137–161 (2017)

Li, J.Q., Wang, X.Q., Lin, X.L.: Impact of behavioral change on the epidemic characteristics of an epidemic model without vital dynamics. Math. Biosci. Eng. 15, 1425–1434 (2018)

Xu, C.Y., Li, X.Y.: The threshold of a stochastic delayed SIRS epidemic model with temporary immunity and vaccination. Chaos Solitons Fractals 111, 227–234 (2018)

Liu, Z.Z., Shen, Z.W., Wang, H., Jin, Z.: Analysis of a local diffusive SIR model with seasonality and nonlocal incidence of infection. SIAM J. Appl. Math. 79, 2218–2241 (2019)

Mu, X.J., Zhang, Q.M., Rong, L.B.: Optimal vaccination strategy for an SIRS model with imprecise parameters and Lévy noise. J. Frankl. Inst. 356, 11385–11413 (2019)

Liu, L.L., Xu, R., Jin, Z.: Global dynamics of a spatial heterogeneous viral infection model with intracellular delay and nonlocal diffusion. Appl. Math. Model. 82, 150–167 (2020)

Gray, A., Greenhalgh, D., Hu, L., Mao, X., Pan, J.: A stochastic differential equation SIS epidemic model. SIAM J. Appl. Math. 71, 876–902 (2011)

Liu, Q., Jiang, D.Q., Shi, N.Z., Hayat, T., Ahmad, B.: Stationary distribution and extinction of a stochastic SEIR epidemic model with standard incidence. Phys. A 476, 58–69 (2017)

Feng, T., Qiu, Z.P., Meng, X.Z.: Dynamics of a stochastic hepatitis C virus system with host immunity. Discrete Contin. Dyn. Syst. Ser. B 24, 6367–6385 (2019)

Wei, F.Y., Chen, L.H.: Extinction and stationary distribution of an epidemic model with partial vaccination and nonlinear incidence rate. Phys. A 545, 122852, 10pp (2020)

Cao, Z.W., Shi, Y., Wen, X.D., Liu, L.Y., Hu, J.W.: Analysis of a hybrid switching SVIR epidemic model with vaccination and Lévy noise. Phys. A 537, 122749, 17pp (2020)

Liu, X.N., Wang, Y., Zhao, X.Q.: Dynamics of a climate-based periodic Chikungunya model with incubation period. Appl. Math. Model. 80, 151–168 (2020)

Zhao, Z., Chen, L.S., Song, X.Y.: Impulsive vaccination of SEIR epidemic model with time delay and nonlinear incidence rate. Math. Comput. Simul. 79, 500–510 (2008)

Yang, Q.S., Mao, X.R.: Extinction and recurrence of multi-group SEIR epidemic models with stochastic perturbations. Nonlinear Anal. Real World Appl. 14, 1434–1456 (2013)

Liu, M., Bai, C.Z., Wang, K.: Asymptotic stability of a two-group stochastic SEIR model with infinite delays. Commun. Nonlinear Sci. Numer. Simul. 19, 3444–3453 (2014)

Liu, Q., Jiang, D.Q., Shi, N.Z., Hayat, T., Ahmad, A.: Asymptotic behavior of a stochastic delayed SEIR epidemic model with nonlinear incidence. Phys. A 462, 870–882 (2016)

Tian, B.C., Yuan, R.: Traveling waves for a diffusive SEIR epidemic model with non-local reaction. Appl. Math. Model. 50, 432–449 (2017)

Han, S.Y., Lei, C.X.: Global stability of equilibria of a diffusive SEIR epidemic model with nonlinear incidence. Appl. Math. Lett. 98, 114–120 (2019)

Abta, A., Kaddar, A., Alaoui, H.T.: Global stability for delay SIR and SEIR epidemic models with saturated incidence rates. Electron. J. Differ. Equ. 386, 956–965 (2012)

Lv, G.C., Lu, Z.Y.: Global asymptotic stability for the SEIRS models with varying total population size. Math. Biosci. 296, 17–25 (2018)

Zhao, D.L., Sun, J.B., Tan, Y.J., Wu, J.H., Dou, Y.J.: An extended SEIR model considering homepage effect for the information propagation of online social networks. Phys. A 512, 1019–1031 (2018)

National Health Commission of the People’s Republic of China. (2020). http://www.nhc.gov.cn/. Accessed 26 Jan 2020

Cheng, V.C.C., Wong, S.C., Chuang, V.W.M., So, S.Y.C., Chen, J.H.K., Sridhar, S., To, K.K.W., Chan, J.F.W., Hung, I.F.N., Ho, P.L., Yuen, K.Y.: The role of community-wide wearing of face mask for control of coronavirus disease 2019 (COVID-19) epidemic due to SARS-CoV-2. J. Infect. 81, 107–114 (2020)

Chu, D.K., Akl, E.A., Duda, S., et al.: Physical distancing, face masks, and eye protection to prevent person-to-person transmission of sars-cov-2 and covid-19: a systematic review and meta-analysis. Lancet 395, 1973–1987 (2020)

Feng, S., Shen, C., Xia, N., Song, W., Fan, M., Cowling, B.J.: Rational use of face masks in the COVID-19 pandemic. Lancet Respir. Med. 8, 434–436 (2020)

Maier, B.F., Brockmann, D.: Effective containment explains subexponential growth in recent confirmed COVID-19 cases in China. Science 368, 742–746 (2020)

Wilder-Smith, A., Freedman, D.O.: Isolation, quarantine, social distancing and community containment: pivotal role for old-style public health measures in the novel coronavirus (2019-nCoV) outbreak. J. Travel Med. 27, 1–4 (2020)

Wu, Z., McGoogan, J.M.: Characteristics of and important lessons from the coronavirus disease 2019 (COVID-19) outbreak in China: summary of a report of 72314 cases from the Chinese Center for Disease Control and Prevention. JAMA 323, 1239–1242 (2020)

Jiao, J.J., Liu, Z.Z., Cai, S.H.: Dynamics of an SEIR model with infectivity in incubation period and homestead-isolation on the susceptible. Appl. Math. Lett. 107, 106442, 7pp (2020)

Arnold, L., Horsthemke, W., Stucki, J.W.: The influence of external real and white noise on the Lotka–Volterra model. Biom. J. 21, 451–471 (1979)

Zhou, Y.L., Zhang, W.G., Yuan, S.L.: Survival and stationary distribution of a SIR epidemic model with stochastic perturbations. Appl. Math. Comput. 244, 118–131 (2014)

Zhang, X.B., Wang, X.D., Huo, H.F.: Extinction and stationary distribution of a stochastic SIRS epidemic model with standard incidence rate and partial immunity. Phys. A 531, 121548, 14pp (2019)

Lewontin, R.C., Cohen, D.: On population growth in a randomly varying environment. Proc. Nat. Acad. Sci. 62, 1056–1060 (1969)

Zhang, X.H., Jiang, D.Q., Hayat, T., Alsaedi, A.: Periodic solution and stationary distribution of stochastic S-DI-A epidemic models. Appl. Anal. 97, 179–193 (2018)

Qi, H.K., Leng, X.N., Meng, X.Z., Zhang, T.H.: Periodic solution and ergodic stationary distribution of SEIS dynamical systems with active and latent patients. Qual. Theory Dyn. Syst. 18, 347–369 (2019)

Zhang, X.H., Wang, K.: Stochastic SEIR model with jumps. Appl. Math. Comput. 239, 133–143 (2014)

Boukanjime, B., Caraballo, T., El Fatini, M., El Khalifi, M.: Dynamics of a stochastic coronavirus (COVID-19) epidemic model with Markovian switching. Chaos Solitons Fractals (2020). https://doi.org/10.1016/j.chaos.2020.110361

Li, F., Zhang, S.Q., Meng, X.Z.: Dynamics analysis and numerical simulations of a delayed stochastic epidemic model subject to a general response function. Comput. Appl. Math. 38, 95, 30pp (2019)

Xu, R., Guo, R.: Pontryagin’s maximum principle for optimal control of stochastic SEIR models. Complexity 2020, 1–5 (2020)

Allen, L.J.S., Burgin, A.M.: Comparison of deterministic and stochastic SIS and SIR models in discrete time. Math. Biosci. 163, 1–33 (2000)

Artalejo, J.R., Economou, A., Lopez-Herrero, M.J.: The stochastic SEIR model before extinction: computational approaches. Appl. Math. Comput. 265, 1026–1043 (2015)

Engbert, R., Rabe, M.M., Kliegl, R., Reich, S.: Sequential data assimilation of the stochastic SEIR epidemic model for regional COVID-19 dynamics. Bull. Math. Biol. (2021). https://doi.org/10.1101/2020.04.13.20063768

Mao, X.R., Marion, G., Renshaw, E.: Environmental Brownian noise suppresses explosions in population dynamics. Stoch. Process. Appl. 97, 95–110 (2002)

Dalal, N., Greenhalgh, D., Mao, X.R.: A stochastic model of AIDS and condom use. J. Math. Anal. Appl. 325, 36–53 (2007)

Zhao, Y.N., Jiang, D.Q.: The threshold of a stochastic SIRS epidemic model with saturated incidence. Appl. Math. Lett. 34, 90–93 (2014)

Cai, S., Cai, Y., Mao, X.: A stochastic differential equation SIS epidemic model with two correlated Brownian motions. Nonlinear Dyn. 97, 2175–2187 (2019)

Mummert, A., Otunuga, O.M.: Parameter identification for a stochastic SEIRS epidemic model: case study influenza. J. Math. Biol. 79, 705–729 (2019)

Beretta, E., Kolmanovskii, V., Shaikhet, L.: Stability of epidemic model with time delays influenced by stochastic perturbations. Math. Comput. Simul. 45, 269–277 (1998)

Hussain, G., Khan, A., Zahri, M., Zaman, G.: Stochastic permanence of an epidemic model with a saturated incidence rate. Chaos Solitons Fractals 139, 110005, 7pp (2020)

Jiang, D.Q., Yu, J.J., Ji, C.Y., Shi, N.Z.: Asymptotic behavior of global positive solution to a stochastic SIR model. Math. Comput. Modell. 54, 221–232 (2011)

Mao, X.R.: Stochastic Differential Equations and Applications, vol. 47. Horwood Publishing, Chichester (2007)

Hasminskii, R.Z.: Stochastic Stability of Differential Equations. Sijthoff Noordhoff, Alphen aan den Rijn, The Netherlands (1980)

Liu, Q., Jiang, D.Q., Shi, N.Z.: Threshold behavior in a stochastic SIQR epidemic model with standard incidence and regime switching. Appl. Math. Comput. 316, 310–325 (2018)

Acknowledgements

The work is supported by the NNSF of China (Nos. 11871201, 11961023), and the NSF of Hubei Province, China (No. 2019CFB241).


Cite this article

Shangguan, D., Liu, Z., Wang, L. et al. A stochastic epidemic model with infectivity in incubation period and homestead–isolation on the susceptible. J. Appl. Math. Comput. 67, 785–805 (2021). https://doi.org/10.1007/s12190-021-01504-1


DOI: https://doi.org/10.1007/s12190-021-01504-1

Keywords

- Stochastic epidemic model

- Homestead–isolation

- Infectivity in incubation period

- Survival

- Stationary distribution