Abstract

Due to resolving major technological challenges Additive Manufacturing (AM) is on the brink of industrialization. In order to operate capital-intensive AM equipment in an economically viable manner, service providers must configure their production environment in a way which enables high capacity utilization and short throughput times while minimizing work in process. The interrelation of those three mentioned production-related key performance indicators, also known as the scheduling dilemma, must be addressed with due consideration of the technology’s characteristics. Within the framework of this paper the authors describe the impact of a service provider’s facility configuration regarding machine pool, operator availability and distribution of work content on the production system’s utilization. The evaluations rely on a simulation model developed in Matlab®, which allows for modification and execution of production schedules within AM facilities of different configurations. The validation of the proposed model is based on empirical data gathered on the shopfloor of GKN Additive, a global AM service provider.

Similar content being viewed by others

1 Introduction

Today’s rapidly evolving competitive landscape, characterized by shortened innovation cycles and hence shortened, steeper value chains [1], exerts pressure on Additive Manufacturing (AM) job-shops and OEMs operating capital-intensive AM equipment. Despite offering a technology lead, AM job-shops may create a differentiator and thus competitive advantage by a clear commitment to operational excellence, positively contributing to decreased throughput times, reduced work in process (WIP) and increased machine utilization. The quantification of those Key Perfomance Indicators (KPIs) is influenced by input variables, i.e. shopfloor configuration, such as machine availability, operator presence, distribution of work content and production order release rate. As modifications of the latter parameters for the sake of quantifying their impact on the mentioned KPIs is not justifiable from an economic perspective in a real production environment, a suitable simulation is modeled, applied and validated against real production data gathered from four Laser Powder Bed Fusion (LPBF) machines installed at GKN Additive, a global AM service provider. The described simulation approach allows for sound decisions on both strategic and operational level: the results may be utilized for identification of the right time for a capacity increase, optimization of operator presence, quantification of production order release rate and guidance during the process of data preparation, e.g. when deciding whether or not to add an additional part to a buildjob.

In a first step the nomenclature for all relevant KPIs, input and auxiliary variables is elucidated in order to define and structure a model suitable for the specifics of LPBF technology. Subsequently, the assumptions and Design of Experiment (DoE) for the simulation runs are explained, followed by visualization and validation of the generated results. The paper concludes with a critical interpretation of the results and an overview regarding further research in the domain of AM-specific production simulations.

According to the author’s most recent study, LPBF production environments are characterized by work content distributions (see Sect. 2.1) which are rarely found in the context of conventional manufacturing [2]. Like a job-shop environment, e.g. producing complex molds and dies by means of high-precision 5-axis CNC machining, LPBF is also characterized by unmanned, autonomous production, high average work content per production order and challenges when it comes to scheduling operator presence and production runs. However, differences justifying a dedicated examination regarding the applicability of the state of the art still exist:

-

The work content’s coefficient of variation is significantly lower than in conventional manufacturing, i.e. standard deviation is low compared to average work content [2].

-

Work content does not follow a Normal distribution as assumed in models such as [3].

-

Lot sizing is fundamentally different compared to conventional manufacturing. While the situation described above typically applies to one-of-a kind manufacturing, LPBF manufacturing lots are comprised of a set of highly heterogenous part making the deduction of robust default production times a challenging task.

2 Modeling approach

2.1 Boundary conditions, nomenclature and definitions

The production KPIs mentioned in the previous sections are borrowed from the area of production logistics. This research area constitutes an integrated approach to describe, link and optimize the production-economical subdomains of production theory, program, capacity and process planning [4]. For further details regarding the fundamental interrelations between WIP, lead times and productivity see [3, 5, 6]. In order to create a basic understanding of the terms utilized during the remainder of this paper, they are described with due consideration of the characteristics of LPBF technology. As the way components are created from a digital model in 3D printing is fundamentally different compared to conventional manufacturing, the described framework is limited to the printing process and its interfaces to the previous step of data preparation. In order to generate a profound understanding of the fundamental interrelations that occur when AM and production systematics are combined, downstream activities along the process chain (i.e. heat treatment, base plate separation, milling, quality assurance etc.) are deliberately excluded from the considerations of this paper. This limitation seems reasonable if established methodologies may be applied when deducting production KPIs for the conventional manufacturing technologies.

Throughput (TP). A specific workstation’s average output in a given period of time. As parts populating a build plate and being manufactured on a LPBF system do not constitute a homogenous good, the throughput is measured in productive hours per hour.

Utilization (U). The Throughput TP of a specific workstation divided by its theoretical maximum production capability (hours per unit hour). In order to facilitate conversion in between throughput and utilization within the context of this paper, the maximum production capability is set to 1 h per unit hour.

Work in Process (WIP). The Work in Process is the cumulative sum of the work content waiting for processing or currently being processed on a workstation. A buildjob comprised of 1 to n parts becomes WIP once the process of data preparation is finished, i.e. it is being released for production. Once the job is being completed and removed from the machine it becomes WIP at the subsequent workstation.

Throughput Time (TT). The average time period between a buildjob’s arrival and departure at a specific workstation, i.e. the time it spends as WIP. The transformation of a digital WIP (buildjob populated with oriented, supported and nested parts) to physical WIP during this period is a unique characteristic of AM processes.

Work Content (WC). The total time required for manufacturing a specific buildjob, including mount and unmount time.

Work Content Distribution (WCD). Aggregation of various buildjobs with regard to their work content for a given period of time. A work content distribution is typically depicted in a histogram and can be approximated by a suitable probability distribution, characterized by a set of specific parameters.

Production Order Release Rate (PORR). The rate at which production orders, i.e. buildjobs are being released for manufacturing at a defined workstation. The release rate does not depend on the work content of the production orders being released. It is measured in production orders per unit time and may follow a deterministic or stochastic pattern when being modeled. As soon as a production order is released, it becomes WIP at the respective workstation.

Operator Availability (OA). The variables m,n and q describe the count of operators present during the 6am, 2 pm and 10 pm shift respectively. The operator availability OA is therefore defined as the set \( \left\{ {m,n,q\text{ } \in {\mathbb{N}} |m + n + q > 0} \right\} \). When an operator is busy mounting or unmounting a buildjob he may not be assigned other tasks.

Machine Configuration (MC). The shopfloor’s machine configuration is comprised of 1 to m machines, each characterized by a specific mount and unmount time and material acceptance. A machine may only run on one type of material and changes are not allowed.

2.2 Structure of the simulation model

Within the proposed model, the instances at the LPBF manufacturing systems are characterized by a dynamic, discrete and stochastic behavior:

-

The status of the production environment changes over time as production orders enter and leave the system (dynamic property)

-

Changes with regard to the status of the simulated buildjob are tracked on an hourly base (discrete property)

-

Arrival dates of production orders and their corresponding work content cannot be predicted as they depend on customer orders and decisions made during data preparation (stochastic property)

As the attributes of a buildjob currently being processed change during each period of length Δt even if no new job is being released for production, the system is modeled as time-driven rather than event-discrete.

The UML diagram depicted in Fig. 1 holds the activities and logical structure of the simulation model implemented in Matlab®. The definition of relevant input variables, execution of the simulation run and calculation of corresponding output variables is explained in the following subsections.

Input Variables. Before the simulation run is initiated, the production environment and a feasible set of buildjobs are created. The production environment is described by the number of available machines and the type of materials they are capable of processing. Each machine is furthermore characterized by a material-independent mount and unmount time. Operators are responsible for mounting and unmounting the machines and may be present during three different shifts. Hence, the parameterization of the production environment is complete once the number of operators present during the three respective shifts is defined. As LPBF machines operate autonomously once set up, operator presence is only mandatory for starting and finishing buildjobs.

In order to charge the production during the simulation run, a feasible set of buildjobs is another relevant input variable of the model. Due to the novelty of LPBF processes in the context of a full-scale industrial production, to the best of the author’s knowledge, there is no statistically relevant data available regarding work content distribution (WCD) for LPBF machines. Based on the author’s experience gathered in industry projects, Table 1 provides an overview of relevant production scenarios and a respective characterization regarding their average and standard deviation of work content. The scenarios are derived by selecting application-specific geometries and manually assigning them to a set of buildjobs, such that each job holds at least one part and a maximum of parts according to the available space on the build plate. In between the two extremes, the amount of parts per buildjob follows a Gaussian distribution with an average of four parts per buildjob. Once the set of buildjobs is populated with parts, the required manufacturing time is calculated by means of the approach suggested in [7].

All values are subject to verification with industrial end users. As the provided scenarios fall to the rather extreme regimes of what is typically observed in production, they span a large window of feasible combinations. Intuitively, all scenarios found in real LPBF production settings hence fall into that window, even if they are a mix of the defined scenarios.

To foster a better understanding and enable the creation of randomly distributed WCDs for simulation purposes, the historical production data of four LPBF machines installed at GKN Additive, a global AM full-service provider is analyzed.

Tables 2 and 3 hold an overview of the results of fitting a continuous, semi-infinite Gamma probability distribution to the given WCDs. The goodness of fit (GOF) for this distribution type is tested by applying an Anderson–Darling (AD) test with a significance level of 5%. This type of statistical test is used to determine how well the shape of the given production sample data follows a conjectured probability distribution. Within the context of this paper, the approach of Anderson–Darling is selected as it shows decent sensitivity to differences in the tail of the expected distribution [8]. Other tests, such as Kolmogorov–Smirnov, are particularly sensitive to differences in the regime of the sample data’s median, while Chi square tests come with the challenge of binning the sample data and hence cause a loss of information [9]. The \( H_{0} \) hypothesis is defined per following: the machine-specific WCD follows a Gamma probability distribution characterized by a scale factor α and a shape factor β. In case the formulated null hypothesis does not have to be rejected according to the result of the Anderson–Darling test, the conjectured Gamma probability distribution serves as a valid representation of the analyzed WCD and may be utilized to generate randomized data for simulation purposes. The metrics for calculating both critical and AD test statistics values are stated in [10].

In order to provide further statistical validation, a quantile–quantile plot is created for all four machines. The graphical exploration of the graph created by plotting the quantiles of the sampled data against those of the hypothesized Gamma distribution backs up the results of the AD test. Outliers only occur for buildjobs characterized by extensive WC. Even though the AD test is particularly sensitive when it comes to deviations in the long tail, the \( H_{0} \) hypothesis does not have to be rejected.

Despite its statistic feasibility, the hypothesized Gamma distribution must be capable of creating value-pairs of work content average and standard deviation which cover all scenarios stated in Table 1. Parametrization with \( \alpha \in \left[ {0.1, 25} \right] \) and \( \beta \in \left[ {1, 144} \right] \) yields the window of feasible value-pairs depicted in Fig. 2.

Following the results of the AD test, visual interpretation of the quantile–quantile plots and coverage of all value-pairs resulting from the defined scenarios, the Gamma distribution is accepted for creating WCDs as input for the subsequent simulation. Each WCD, i.e. production schedule, is characterized by WC’s average, median and standard deviation values, constituting implicit input variables to the simulation as they are a result of the Gamma distribution’s shape and scale parameter choice.

Simulation of Production Order Processing. Once all input variables below are set, the simulation run is initiated:

-

Operator presence

-

Machine characteristics (material type, mount time, unmount time)

-

Production order release rate

-

Gamma distribution’s shape and scale parameter (used for creation of WCD and production schedules)

Model-specific parameters include the simulation period’s time interval Δt, simulation time of N time intervals and amount of independent simulations for each WCD.

The simulation run is initiated by randomly selecting and queuing an unprocessed job from the production schedule. As arrival of new production orders is assumed to be a homogenous Poisson process for the remainder of this paper (seasonal effects are not taken into account), characterized by an expected value matching the production order release rate, the intermediate arrival times are exponentially distributed. Whenever the queue is empty, the algorithm skips the current interval and jumps to the next simulation period.

During each simulation period the algorithm then iterates through the current buildjob queue and simulates the execution of one of the following tasks (see UML diagram in Fig. 1 for simulation process details):

-

Mount job j on machine m

-

Reduce remaining mount time by Δt

-

Start processing job j

-

Reduce remaining job time by Δt

-

Unmount job j from machine m

-

Reduce remaining unmount time by Δt

-

Create statistics & log for job j

Output Variables. Once the elapsed simulation time reaches N, the throughput statistics for all completed buildjobs are calculated by means of the developed algorithm.

In order to statistically validate the results generated by applying the described algorithm (the simulation of a similar production schedule yields different results on every run due to the stochastic nature of production order arrival), each production schedule is simulated multiple times. While the statistical descriptors of the production schedule itself (average and standard deviation of work content) are obviously implicit input variables resulting from the hypothesized Gamma distribution, throughput is subject to random fluctuations for every simulation run. The corresponding quantiles, average and median values as well as outliers in the generated data are therefore quantified and interpreted when discussing the results in Sect. 4.2.

3 Literature review

The maturity of Additive Manufacturing technologies such as LPBF is increasing continuously [11, 12]. A sustainable integration however, eventually leading to competitive advantage for end-users, may not solely rely on technological differentiators. Resolving the specific challenges emerging from the perspective of Operations therefore serves as a sound basis for creating a unique selling proposition aside mastery of technology itself. Due to the relevance of operating AM production environments in an economically viable manner, numerous studies covering this topic have been published in recent years. While most papers are characterized by a clear focus on evaluation of profitability-based variables (e.g. production cost, profit per part, see [13,14,15,16]), consideration of time-related variables (e.g. due dates, adherence to delivery dates, cycle times) is also receiving increased attention [17, 18].

An extensive review of the above studies (including literature cited therein) is characterized by two major drawbacks judging from the perspective of production systematics:

-

Utilization of an AM machine is typically measured as the volumetric share of parts populating the build envelope [19, 20]. A definition of utilization and throughput following the perspective of production systematics is proposed by the authors in one of their recent studies [2]. A similar nomenclature was only found to be used as an auxiliary variable in the context of [21].

-

All papers tend to neglect the fundamental interrelations in between the production KPIs and focus on a hence isolated optimization target. A holistic approach has to take the dilemma of logistics into account as utilization, work-in-process and throughput times are tightly connected and therefore require an integrative analysis [3].

Combined with the above, the scarcity of published approaches on how to model and simulate a LPBF production environment (see [22]) makes it mandatory to pursue this topic by means of a holistic approach covering both the specifics of LPBF technology and production systematics.

4 Simulation of different production settings

The model described in Sect. 2 is henceforth applied to simulatively quantify the throughput (output variable) while varying the mentioned input variables. The subsequent analysis and graphical description of simulation results is limited to the output variable of average throughput being plotted against input parameters such as PORR, distribution parameters and operator availability.

4.1 Assumptions

In order to cover the specifics of a LPBF manufacturing process and initially enable a validation of the developed model on a fundamental level, the simulations rely on the following assumptions:

-

A maximum of one buildjob is being released during a simulation period of length Δt

-

Only one buildjob may be processed on a specific machine at a given point in time

-

Arrival rate of production orders is constant, i.e. the number of production orders received in a defined period obeys a Poisson distribution

-

Production orders may also be released when no operator is present

-

Only one type of alloy is being processed, i.e. material changes are neglected

-

Availability of machines is 100%, i.e. machine failures and maintenance are not considered

-

Based on the author’s experience and observations on various LPBF shopfloors, mount and unmount procedures are expected to last 1 h each

4.2 Validation

The validation of the proposed approach is carried out by modeling the input parameters according to the setting found at GKN Additive’s shopfloor (machine specification, production order release rate, operator availability and WCD) and then comparing the simulation results with the actual performance indicators derived from the provided datasets. Table 4 holds an overview of the actual shopfloor data, its modeled configuration and the results gathered from evaluating 100 simulation runs for each machine (see Fig. 4 for detailed simulation results with varying production order release rate). The mean average percentage error (MAPE) is a measure of prediction accuracy of a forecasting method and is utilized to evaluate the overall applicability of the simulation model.

The deviation when comparing the throughput’s medianFootnote 1 Q2 of the simulated data with the actual throughput \( TP_{real} \) is less than 6% for all four machines, while the overall MAPE amounts to 2.42%. The simulation runs for machine 1 and 2 tend to slightly overvalue throughput, while computing slightly undervalued throughput for machine 3 and 4. A comparison between the outputs of the real and the modeled system substantiates the validity of the proposed model for the given operating points. Nevertheless, further validation has to be carried out in order to also gain trust in the results when output variables, such as throughput, fall into more extreme regimes. The production scenarios defined in Fig. 2 serve as a reference framework for further validation.

4.3 Utilization and Interpretation of Simulation Results

Assuming that the described model is of sufficient validity for quantifying the performance of a LPBF production setting, the results created by running the simulation with varying input parameters may be utilized in domains such as production planning & scheduling, data preparation, shift planning and capacity dimensioning. Analysis of the simulation results depicted in Fig. 4 yields a machine-wise indication of reasonable production order release rates (PORR) while not changing work content distribution, operator presence and machine configuration. Once the PORR reaches a level where throughput is no longer increasing, the service provider might consider actions such as a capacity increase by investing in new equipment, proactively influencing work content distribution by adapting part nesting methodology or increasing operator presence.

In order to gain a understanding of the fundamental interrelations in between the input parameters and output variables, the following section provide an excerpt of feasible graphs (comprised of max. two input parameters and one output variable, as a set of more than three variables is difficult to visualize comprehensibly in \( {\mathbb{R}}^{2} \) or \( {\mathbb{R}}^{3} \)).

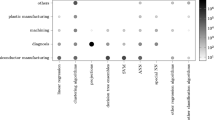

Throughput vs. average and standard deviation of work content. Fixing the production order release rate, operator availability and machine configuration allows for plotting a LPBF system’s throughput against average and standard deviation of work content, each of which combinations belongs to a specific Gamma distribution. Obviously, the statistical descriptors are merely a result of the shape and scale parameters selected for the hypothesized distribution [23]. As it seems more intuitive to deal with averages and standard deviations from a practicioner’s perspective, they are preferred over shape and scale parameters for creating the corresponding graphs. In Fig. 3 the average throughput of a LPBF system is plotted against the work content average (x-axis) and standard deviation (y-axis), while operator presence, machine configuration and production order release rate remain unchanged. Each colored point in the graph corresponds to a specific WCD and represents the average throughput for 50 simulation runs. The outer borders of the colored area resemble the boundary box introduced in Fig. 2, as the underlying Gamma distributions are parametrized identically. The black straights in Fig. 3 indicate constant coefficients of variation (CV). The CV is a standardized measure of the dispersion of a distribution, defined as the ratio of the standard deviation to the mean. Hence, the dispersion of the respective WCDs along the lines is constant. Interpretation of the graphs provides the following findings:

-

The production system achieves maximum throughput when work content averages to at least 75 h per buildjob

-

Average work content above 75 h per buildjob does not result in increased throughput

-

The work content standard deviation only has very little impact on throughput. i.e. fluctuations regarding buildjob duration cause no significant reduction of throughput

-

When running a LPBF production with comparably short buildjobs, i.e. WCDs characterized by low average and work content, the production order release rate has to be significantly higher to achieve reasonable throughput. Obviously, the latter is only feasible under the circumstances of sufficient customer demand and according order intake.

Throughput vs. production order release rate. The box plots (see [24] for statistical description) in Fig. 4 depict simulation results for the four different EOS LPBF machines installed on GKN Additive’s shopfloor. While the red crosses indicate the operating points found in reality and were used beforehand for validating the simulation model, the horizontal red lines quantify the throughput’s median with varying production order release rate. The blue boxes are delimited by lower and upper quartiles and hence contain 50% of the simulated output values. The comparably wide range of the whiskers (dashed black lines) and the occurrence of outliers (red plus symbols) underline the impact of the simulation’s stochastic nature and require the execution of a sufficient number of stochastically independent simulation runs. According to the statistical analysis proposed in [25], execution of 100 simulation runs while not changing the input parameters accounts for a throughput sample precision of ± 0.003 h per working hour at a 95% confidence interval. Interpretation of the graphs provides the following findings:

Simulation of throughput with changing production order release rate (one operator present during two out of three shifts, PORR and WCD according to Table 4, 1 h machine mount and unmount time)

-

While throughput initially grows linearly for the given settings it starts converging to 0.93 h per working hour in the transitional areas found for production order release rates in between 0.5 and 0.65 PO/day (even outliers systematically fall below the maximum).

-

On all four machines a production order release rate of at least 0.6 PO/day results in maximized throughput (please note that the WCDs of the four systems are very similar).

Connecting the throughput median values and linearly interpolating for the values in between (also see Fig. 5) yields a curve similar to the operating curves proposed in [3]. As the production order release rate is directly proportional to the work-in-process (WIP) of a production system, the resemblance of the curves substantiates the validity of the developed model.

Simulation of throughput with changing production order release rate and operator presence (WCD according to Machine 1/Table 4, 1 h machine mount and unmount time)

Throughput vs. production order release rate and operator presence. As LPBF equipment only operates autonomously from process start to end, the presence of operators is mandatory to carry out mounting and unmounting of the machines. According to the author’s experience, backed up with an inconclusive literature review, operators at least supervise the auxiliary process steps even on partially automated LPBF equipment. A typical challenge in production planning is imposed by ensuring a reasonable operator presence for a given production schedule and machine capacity. The proposed simulation model hence incorporates the presence of machine operators during different shifts as an input parameter. The curves in Fig. 5 depicts how operator presence (and production order release rate) is linked to maximum achievable throughput for a LPBF production system similar to Machine 1/Table 4. Interpretation of the graphs provides the following findings:

-

Up to a production order release rate of 0.5 PO/day, operator presence does not have any impact on the system’s throughput as long as at least one operator is present during at least one out of three shifts.

-

The presence of one operator during one shift results in a significant limitation of maximum feasible throughput (0.82 h per working hour, -15% compared to maximum throughput of 0.97 h per working hour for three shift production).

-

Even with the presence of one operator during all three shifts the throughput does not amount to the maximum theoretical value of 1 h per working hour. This model behavior is caused by the fact that operators do not start mounting/unmounting their assigned machine when their remaining work time is shorter than the required mount/unmount time.

-

Considering multiple shifts (and multiple operators) is only feasible when operating the LPBF equipment in the transitional areas of throughput.

5 Conclusion

The presented simulation model serves as a framework for understanding the fundamental interrelations between production parameters and corresponding KPIs considering the specifics of LPBF technology. It is designed in a practicioner-friendly way and may serve as a sound basis for decision making in production planning, control and optimization. The results create a basic and intuitive understanding of the interrelations between input parameters and output variables and hence provide guidance when taking production-related decisions.

5.1 Takeaways for end-users

With LPBF, the manufacturing time is significantly impacted by the way single components are assigned to a buildjob [26]. This upstream process of data preparation is a manual task and hence operator’s decisions wether or not to add an additional part to a buildjob influences work content distribution over time. For a fixed operator presence, end-users may choose from three options in order to increase utilization of their equipment:

-

Increasing average work content by adding more parts to a buildjob (which implies acceptance of longer throughput times) and keeping the production order release rate constant

-

Increasing production order release rate while keeping the work content distribution constant (which is only feasible if a sufficient backlog of customer orders exists)

-

A combination of the above two points

The simulation results furthermore indicate that the impact of releasing additional production orders is continuously decreasing for utilizations beyond 65% (see Fig. 4). As releasing further production orders is equivalent to increasing the work-in-process at a workstations this behavior fits the predictions of the operating curves model [3].

Regarding shift-planning, presence of operators during more than one shift is only increasing utilization beyond 82% for the given production order release rate and work content distribution. (see Fig. 5).

When it comes to utilization, unlike the average work content, the work content standard deviation plays a minor role for the given production order release rate of 0.35 PO/d (see Fig. 3). Hence, deviations are acceptable if average work content is being monitored.

All conclusions above are qualitatively valid regardless of the operating point of the production, whereas quantitative predictions require precise knowledge of the corresponding operation point.

5.2 Future developments

While the focus of this paper is on throughput as a major production system KPI, throughput times, adherence to delivery dates and work-in-process are equally relevant when evaluating production system and shall hence be covered by a holistic model. In order to create widespread adoption and eventually utilize the developed methodology in a LPBF production context, future work shall increase both plausibility and applicability of the simulation model and therefore focus on the following aspects:

-

Consideration of upstream activities, i.e. data preparation (part orientation, support placement and nesting [27])

-

Further validation based on the production scenarios mentioned in Table 1 by collection and evaluation of historical production data (combined with advanced filter algorithms ensuring data quality and soundness).

-

Detailed statistical analysis of the input parameter’s impact on output variables by means of Monte-Carlo simulation and subsequent sensitivity analysis.

-

Incorporation of part- and buildjob-perspective, i.e. analysis of the influence of individual part portfolios, lot-sizing strategies, scheduling rules etc. on the mentioned KPIs.

-

Consideration of multiple materials

-

Integration of metrics for production order release and scheduling in order to evaluate throughput times and adherence to delivery dates

Notes

Q2 is the simulated throughput’s median, Q1 corresponds to the 25th percentile, Q3 corresponds to the 75th percentile.

References

Rehnberg M, Ponte S (2018) From smiling to smirking? 3D printing, upgrading and the restructuring of global value chains. Glob Netw 18:57–80. https://doi.org/10.1111/glob.12166

Stittgen T, Schleifenbaum JH (2020) Towards operating curves of additive manufacturing equipment: production logistics and its contribution to increased productivity and reduced throughput time. Proc CIRP 93:14–19. https://doi.org/10.1016/j.procir.2020.06.006

Nyhuis P, Wiendahl H-P (2009) Fundamentals of production logistics. Springer, Berlin

Wiendahl H-P (2014) Betriebsorganisation für Ingenieure: Mit 3 Tabellen, 8, überarb edn. Hanser, München

Gross D, Harris CM (1985) Fundamentals of queueing theory, 2nd edn. Wiley, New York

Hopp WJ, Spearman ML (2000) Factory physics: Foundations of manufacturing management, 2. ed., internat. ed. Irwin/McGraw-Hill, Boston

Dirks S (2019) Adaption of Cost Calculation Methods for Modular Laser-Powder Bed Fusion (L-PBF) Machine Concepts. In: ASMET (ed) Proceedings of the Metal Additive Manufacturing Conference 2019, pp 79–87

Stephens MA (1974) EDF statistics for goodness of fit and some comparisons. J Am Stat Assoc 69:730. https://doi.org/10.2307/2286009

D’Agostino R, Stephens MA (1986) Goodness-of-Fit-Techniques, 1st ed. Statistics, v.68. CRC Press, New York

Singla N, Jain K, Sharma SK (2016) Goodness of fit tests and power comparisons for weighted gamma distribution. RevStat Stat J 14:29–48

Jiang R, Kleer R, Piller FT (2017) Predicting the future of additive manufacturing: a Delphi study on economic and societal implications of 3D printing for 2030. Technol Forecast Soc Chang 117:84–97. https://doi.org/10.1016/j.techfore.2017.01.006

Lezama-Nicolás R, Rodríguez-Salvador M, Río-Belver R, Bildosola I (2018) A bibliometric method for assessing technological maturity: the case of additive manufacturing. Scientometrics 117:1425–1452. https://doi.org/10.1007/s11192-018-2941-1

Li Q, Kucukkoc I, Zhang DZ (2017) Production planning in additive manufacturing and 3D printing. Comput Oper Res 83:157–172. https://doi.org/10.1016/j.cor.2017.01.013

Li Q, Zhang D, Wang S, Kucukkoc I (2019) A dynamic order acceptance and scheduling approach for additive manufacturing on-demand production. Int J Adv Manuf Technol 105:3711–3729. https://doi.org/10.1007/s00170-019-03796-x

Ruffo M, Tuck C, Hague R (2006) Cost estimation for rapid manufacturing - laser sintering production for low to medium volumes. Proc Inst Mech Eng Part B: J Eng Manuf 220:1417–1427. https://doi.org/10.1243/09544054JEM517

Thomas DS, Gilbert SW (2014) Costs and cost effectiveness of additive manufacturing. Accessed 31 May 2020

Chergui A, Hadj-Hamou K, Vignat F (2018) Production scheduling and nesting in additive manufacturing. Comput Ind Eng 126:292–301. https://doi.org/10.1016/j.cie.2018.09.048

Oh Y, Zhou C, Behdad S (2018) Production planning for mass customization in additive manufacturing: build orientation determination, 2D packing and scheduling. In: Proceedings of the ASME international design engineering technical conferences and computers and information in engineering conference - 2018: Presented at ASME 2018 International Design Engineering Technical Conferences and Computers and Information in Engineering Conference, August 26-29, 2018, Quebec City, Canada. The American Society of Mechanical Engineers, New York, N.Y

Kucukkoc I, Li Q, Zhang D (2016) Increasing the utilisation of additive manufacturing and 3D printing machines considering order delivery times. In: 19th International working seminar on production economics, vol 3, pp 195–201

Baumers M, Holweg M (2019) On the economics of additive manufacturing: experimental findings. J Ops Manag 30:343. https://doi.org/10.1002/joom.1053

Lindemann C, Jahnke U, Habdank M, Koch R (2012) Analyzing product lifecycle costs for a better understanding of cost drivers in additive manufacturing. 23rd Annual International Solid Freeform Fabrication Symposium - An Additive Manufacturing Conference, SFF 2012

Trancoso JPG, Piazza V, Frazzon E (2018) Simulation-based analysis of additive manufacturing systems for fuel nozzles. J Aerosp Technol Manag. https://doi.org/10.5028/jatm.v10.963

Walck C Hand-book on statistical distributions for experimentalists

Benjamini Y (1988) Opening the box of a boxplot. Am Stat 42:257. https://doi.org/10.2307/2685133

Garrido JM (2009) Object oriented simulation. Springer, Heidelberg

Fera M, Fruggiero F, Costabile G, Lambiase A, Pham DT (2017) A new mixed production cost allocation model for additive manufacturing (MiProCAMAM). Int J Adv Manuf Technol 92:4275–4291. https://doi.org/10.1007/s00170-017-0492-x

Oh Y, Witherell P, Lu Y, Sprock T (2020) Nesting and scheduling problems for additive manufacturing: a taxonomy and review. Additive Manuf 36:101492. https://doi.org/10.1016/j.addma.2020.101492

Acknowledgements

This work was carried out within the framework of the IDAM project (13N15084) funded by the German Federal Ministry for Education and Research (BMBF). The authors would like to thank GKN Additive for providing the presented datasets.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Stittgen, T., Schleifenbaum, J.H. Simulation of utilization for LPBF manufacturing systems. Prod. Eng. Res. Devel. 15, 45–56 (2021). https://doi.org/10.1007/s11740-020-00998-1

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11740-020-00998-1