Abstract

Background

Although many studies assess predictors of provider burnout, few analyses provide high-quality, consistent evidence on the impact of provider burnout on patient outcomes, particularly among behavioral health providers (BHPs).

Objective

To assess the impact of burnout among psychiatrists, psychologists, and social workers on access-related quality measures in the Veterans Health Administration (VHA).

Design

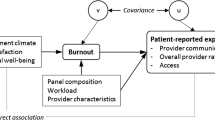

This study used burnout in VA All Employee Survey (AES) and Mental Health Provider Survey (MHPS) data to predict metrics assessed by the Strategic Analytics for Improvement and Learning Value, Mental Health Domain (MH-SAIL), VHA’s quality monitoring system. The study used prior year (2014–2018) facility-level burnout proportion among BHPs to predict subsequent year (2015–2019) facility-level MH-SAIL domain scores. Analyses used multiple regression models, adjusting for facility characteristics, including BHP staffing and productivity.

Participants

Psychologists, psychiatrists, and social workers who responded to the AES and MHPS at 127 VHA facilities.

Main Measures

Four composite outcomes included two objective measures (population coverage, continuity of care), one subjective measure (experience of care), and one composite measure of the former three measures (mental health domain quality).

Key Results

Adjusted analyses showed prior year burnout generally had no impact on population coverage, continuity of care, or patient experiences of care but had a negative impact on provider experiences of care consistently across 5 years (p < 0.001). Pooled across years, 5% higher facility-level burnout in AES and MHPS was associated with 0.05 and 0.09 standard deviation worse facility experiences of care relative to the prior year, respectively.

Conclusions

Burnout had a significant negative impact on provider-reported experiential outcome measures. This analysis showed that burnout had a negative effect on subjective but not on objective quality measures of Veteran access to care, which could inform future policies and interventions regarding provider burnout.

INTRODUCTION

Provider burnout has garnered increasing attention and exponential growth in published studies. The triple aim of enhancing patient experience, improving population health, and reducing costs now includes healthcare workforce well-being, known as the quadruple aim.1–3 Health system responses to the COVID-19 pandemic exacerbated provider burnout.4, 5

Many studies examine burnout predictors; few assess associated patient outcomes. Outcomes can include objective measures (e.g., proportion of diagnosed patients that receive treatment) and subjective assessments (e.g., provider perceptions of patient well-being).2, 6 Studies of subjective measures find that provider burnout leads to worse patient satisfaction,7, 8 worse self-perceived work performance,9 and provider-reported suboptimal patient care.10 A 2022 study found that, counterintuitively, physicians reporting burnout may create better outcomes for patients, noting a complex relationship between burnout and outcomes.11

Published data on objective outcomes appears limited. One study found burnout negatively affected patient-reported experiences of patient-provider communication, but not access or overall provider rating.12 A review found a moderate association between burnout and safety-related quality of care.13 Others found significant bivariate relationships between burnout and outcomes.14 A meta-analysis found high heterogeneity and low-to-moderate study quality in studies of the impact of burnout on patient outcomes.15 Additional limitations include lack of prospective studies, cross-sectional analyses, small samples, and single outcomes (e.g., patient safety, post-discharge recovery time).16, 17

Few high-quality studies document how provider burnout affects patient outcomes, particularly among behavioral health providers (BHPs). BHPs have the highest level of burnout after primary care physicians in the Veterans Health Administration (VHA).18 One study found therapist burnout negatively impacts patient depression and anxiety outcomes.19

To address this information gap, we examined the relationship between BHP burnout and objective and subjective measures of patient mental health outcomes collected quarterly within VHA through the Strategic Analytics for Improvement and Learning Value, Mental Health Domain (MH-SAIL). We hypothesized that BHP burnout negatively influences outcomes.

METHODS

Study Design

This study comprises one segment of a project assessing predictors and consequences of VHA BHP burnout.18, 20 We used facility-level quality metrics as outcomes and burnout as primary predictors. A “station” (STA3N) within VHA represents a parent facility and may have several subsidiary medical centers or community-based outpatient clinics assigned to it. Since participants provided anonymous responses, we cannot link data by respondent within any data sources or between surveys. VA Ann Arbor Healthcare System Institutional Review Board approved this study.

Data Sources

We used 2014–2019 data from Annual All Employee Survey (AES) and Mental Health Provider Survey (MHPS); 2015–2019 facility-level Mental Health Onboard Clinical (MHOC) staffing and productivity data; and 2015–2019 MH-SAIL data. After merging sources, the study included 127 out of 138 (92%) VHA parent facilities with available data.

AES

The National Center for Organizational Development (NCOD) administers AES to all VHA employees annually to assess workplace perceptions and satisfaction. Further information on AES creation, measures, and how it informs VHA appears elsewhere.21 Since 2001, AES has represented best practices among large organization surveys.22 All AES responses remain anonymous. We included BHPs: psychologists, psychiatrists, and social workers. During the study period, AES had response rates of 54% in psychiatrists, 66% in psychologists, and 67% in social workers.20

MHPS

Office of Mental Health and Suicide Prevention (OMHSP) invites all VHA BHPs to complete the MHPS annually to assess perceptions about access to and quality of mental health care, and job satisfaction.23 Analyses found MHPS data reliable, valid, and consistent.24 The MHPS response rate during the study period exceeded 50%.20

MHOC

OMHSP developed a staffing model that estimates full-time equivalent (FTE) mental health staff per 1000 Veterans treated in outpatient mental health settings, a population-based measure (staffing ratio).25 MHOC includes a measure of provider productivity calculated as the sum of work Relative Value Units (wRVUs) divided by time spent providing direct clinical care in outpatient mental health settings (productivity).26
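The two MHOC measures described above reduce to simple ratios. The following is a minimal sketch under stated assumptions, not the MHOC implementation: the function names, argument names, and the use of hours as the unit of clinical time are hypothetical, since the text does not specify them.

```python
from typing import Sequence


def staffing_ratio(fte_staff: float, veterans_treated: int) -> float:
    """FTE mental health staff per 1000 Veterans treated in outpatient MH settings."""
    if veterans_treated <= 0:
        raise ValueError("veterans_treated must be positive")
    return fte_staff * 1000 / veterans_treated


def productivity(wrvus: Sequence[float], clinical_time: float) -> float:
    """Sum of work RVUs divided by time spent in direct clinical care.

    The time unit (assumed here to be hours) is an illustrative assumption.
    """
    if clinical_time <= 0:
        raise ValueError("clinical_time must be positive")
    return sum(wrvus) / clinical_time


# Hypothetical facility totals, for illustration only.
example_ratio = staffing_ratio(fte_staff=50.0, veterans_treated=10000)
example_productivity = productivity([100.0, 250.0, 150.0], clinical_time=400.0)
```

Both values are facility-level summaries, which matches how the study uses them as covariates.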

CDW

We used VA Corporate Data Warehouse (CDW) to create a facility indicator of rural/urban location and a three-part facility complexity measure. Complexity levels include high (high-volume, high-risk patients, most complex clinical programs, large research and teaching programs), medium (medium volume, low-risk patients, few complex clinical programs, small or no research and teaching programs), and low (low volume, low-risk patients, with few or no complex clinical programs, small or no research and teaching programs).

MH-SAIL

In 2010, VHA implemented the SAIL monitoring system to provide VHA management with high-level indicators of health care quality.27 MH-SAIL incorporates a composite of three component measures, each of which represents a composite of constituent measures (see Appendix Table 1). The three components are population coverage, continuity of care, and experience of care. Experience of care includes four provider subcomponents (collaborative MH care; job satisfaction; quality of MH care; timely access to MH care) and two patient experience subcomponents (MH appointment access; patient-centered MH care). VHA developed components tailored to its intended coverage, available data, and candidate measures identified during selection and refinement. Each component represents measures with moderate to high internal consistency.24

Study Measures

Dependent Variables

We used four MH-SAIL metrics as outcomes.

Population Coverage

An objective measure representing access to care, which combines 16 individual metrics (constituent items) with denominators representing the number of Veterans experiencing specific diagnoses and numerators representing the number receiving targeted services, treatments, and/or visits.

Continuity of Care

An objective measure combining 11 individual metrics with denominators representing the number of Veterans with specific diagnoses and treatments and numerators representing continuity of care, such as number of follow-up visits within a specified period or amount of continuous medication coverage.

Experience of Care

A subjective measure that includes both provider and patient perspectives combining 32 individual survey responses including provider responses assessing collaborative MH care (6 items), job satisfaction (2 items), quality of MH care (5 items), timely access to MH care (6 items), and Veteran responses assessing MH appointment access (5 items) and patient-centered MH care (8 items).

We used the three domain scores (population coverage, continuity of care, experience of care) generated by VHA developers of the tracking system every quarter. Within the experience of care domain, we also used subdomain scores of provider satisfaction and patient satisfaction. Each domain score is a weighted average of standardized constituent items, where each item’s score represents the quarterly change from the score of the last quarter of the prior fiscal year within each facility, divided by the standard deviation of the prior year last quarter facility score; the standardized scores thus have a mean of 0 and a standard deviation of 1, as described elsewhere.24 The standardization combines constituent items with different denominators and statistical distributions into like units to generate each domain score. The domain scores indicate the overall direction of change in a facility’s performance for the specific domain within facilities.24
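The standardization described above can be sketched as a change score divided by a baseline standard deviation, followed by a weighted average across items. This is an illustrative reading of the text, not the VHA scoring code: the function names are hypothetical, and the baseline standard deviation and item weights are passed in as inputs because the text does not state how they are derived.

```python
from typing import Sequence


def standardized_change(current: float, baseline: float, baseline_sd: float) -> float:
    """Quarterly change from the prior fiscal year's last quarter, in SD units."""
    if baseline_sd <= 0:
        raise ValueError("baseline_sd must be positive")
    return (current - baseline) / baseline_sd


def domain_score(item_scores: Sequence[float], weights: Sequence[float]) -> float:
    """Weighted average of standardized constituent item scores."""
    if len(item_scores) != len(weights):
        raise ValueError("item_scores and weights must have the same length")
    total_weight = sum(weights)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive value")
    return sum(w * z for z, w in zip(item_scores, weights)) / total_weight


# Hypothetical item: the rate moved from 10.0 to 12.0, baseline SD of 4.0.
z_item = standardized_change(current=12.0, baseline=10.0, baseline_sd=4.0)
```

Under this reading, a score of 0 means no change from the baseline quarter, and positive values mean improvement in SD units.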

Overall Mental Health

This measure represents an overall measure calculated as the equally weighted averages of the two objective and one subjective domain scores.24

Key Independent Variable: Provider Burnout

For AES and MHPS, we defined employee burnout as a dichotomous variable using validated approaches to define burnout.20, 28 We obtained the facility burnout percentage for each survey by averaging dichotomous burnout data among facility survey responses. We analyzed burnout data from AES and MHPS separately because we cannot identify respondents in each survey or link participants who completed the two sets of measures.

AES

We classified whether respondents reported burnout according to methods used by other VHA researchers.28 The approach used two burnout questions: emotional exhaustion (“I feel burned out from my work”) and depersonalization (“I worry that this job is hardening me emotionally”). Each of these two burnout questions had a 7-point response scale (1 = never; 2 = a few times a year or less; 3 = once a month or less; 4 = a few times a month; 5 = once a week; 6 = a few times a week; 7 = every day). We generated a dichotomous variable such that if the respondent answered either question with 5 or higher (once a week or higher frequency), we classified the response as endorsing burnout; otherwise, we classified respondents as not endorsing burnout, as in our prior study.28
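The AES classification rule above can be written as a short function. This is a minimal sketch with hypothetical function and argument names; only the two items, the 7-point scale, and the cutoff of 5 come from the text.

```python
from typing import Iterable, Tuple

AES_CUTOFF = 5  # 5 = "once a week" on the 7-point frequency scale


def aes_burnout(emotional_exhaustion: int, depersonalization: int) -> bool:
    """Return True if either AES burnout item is answered 5 or higher."""
    for value in (emotional_exhaustion, depersonalization):
        if not 1 <= value <= 7:
            raise ValueError("AES burnout items use a 1-7 response scale")
    return emotional_exhaustion >= AES_CUTOFF or depersonalization >= AES_CUTOFF


def facility_burnout_percent(responses: Iterable[Tuple[int, int]]) -> float:
    """Percentage of a facility's respondents classified as endorsing burnout."""
    flags = [aes_burnout(ee, dp) for ee, dp in responses]
    if not flags:
        raise ValueError("at least one response is required")
    return 100 * sum(flags) / len(flags)


# Four hypothetical respondents: two meet the cutoff on at least one item.
example_percent = facility_burnout_percent([(5, 1), (4, 4), (2, 7), (1, 1)])
```

Averaging the dichotomous flags within a facility yields the facility burnout percentage used as the predictor.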

MHPS

We generated a dichotomous variable to classify respondent burnout using the sole burnout question of “Overall, based on your definition of burnout, how would you rate your level of burnout?” The response options from 1 to 5 appeared as follows: 1 = I enjoy my work. I have no symptoms of burnout; 2 = Occasionally I am under stress, and I don't always have as much energy as I once did, but I don't feel burned out; 3 = I am definitely burning out and have one or more symptoms of burnout, such as physical and emotional exhaustion; 4 = The symptoms of burnout that I’m experiencing won’t go away. I think about frustration at work a lot; 5 = I feel completely burned out and often wonder if I can go on. I am at the point where I may need some changes or may need to seek some sort of help. We generated the dichotomous burnout variable by response of ≥ 3. Our prior work indicated that facility-level MHPS burnout rate using ≥ 3 as the cutoff showed the highest correlation to facility-level burnout rate in AES across yearly data from 2015 to 2018.20
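The MHPS rule is a single threshold on the one-item rating. The sketch below uses a hypothetical function name; the 1 to 5 scale and the cutoff of 3 come from the text.

```python
MHPS_CUTOFF = 3  # 3 = "I am definitely burning out ..."


def mhps_burnout(rating: int) -> bool:
    """Return True if the single-item MHPS burnout rating is 3 or higher."""
    if rating not in (1, 2, 3, 4, 5):
        raise ValueError("MHPS burnout rating must be an integer from 1 to 5")
    return rating >= MHPS_CUTOFF


# Classification for each possible response option, 1 through 5.
flags_by_rating = [mhps_burnout(r) for r in range(1, 6)]
```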

MHOC

We used two facility-level variables (staffing ratio and productivity) as possible predictors of the relationship between self-reported work-environment characteristics and burnout. Details outlining the purpose, origins, and definitions of these metrics appear elsewhere.25, 26, 29

Statistical Analysis

We summarized facility-level characteristics (annually), burnout (annually), and MH-SAIL domain scores (annually by averaging four quarterly scores). As annual burnout percentages represent summary data for BHPs who responded to the burnout items and do not include non-responders to the burnout items, we summarized the yearly burnout percentages as (1) crude average of the facility burnout percentages among BHPs, (2) average weighted by the number of facility survey responders, and (3) average weighted by inverse of the facility response rate of burnout items, using this final approach in our adjusted models.
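The three yearly summaries described above differ only in the weights applied to each facility. The following sketch uses made-up numbers and hypothetical field names; it illustrates the weighting schemes and is not the authors' code.

```python
from typing import Sequence


def weighted_mean(values: Sequence[float], weights: Sequence[float]) -> float:
    """Weighted average of facility burnout percentages."""
    if len(values) != len(weights):
        raise ValueError("values and weights must have the same length")
    total_weight = sum(weights)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive value")
    return sum(v * w for v, w in zip(values, weights)) / total_weight


# Two hypothetical facilities in one survey year.
burnout_pct = [40.0, 20.0]     # facility burnout percentage
n_responders = [30, 10]        # responders to the burnout items
response_rate = [0.5, 0.25]    # response rate for the burnout items

# (1) Crude average: every facility counts equally.
crude = weighted_mean(burnout_pct, [1.0] * len(burnout_pct))
# (2) Weighted by the number of facility survey responders.
by_responders = weighted_mean(burnout_pct, n_responders)
# (3) Weighted by the inverse of the facility response rate.
by_inverse_rate = weighted_mean(burnout_pct, [1 / r for r in response_rate])
```

In this toy example the third scheme gives more weight to the facility with the lower response rate, which pulls the average toward that facility's burnout percentage.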

We assessed relationships between burnout and MH-SAIL outcomes using multiple regression analysis with facility-level prior year burnout percentages among BHPs as predictors and weighted by the number of facility survey responders. We examined impact of prior year burnout on subsequent year MH-SAIL outcomes to disentangle temporal ordering of provider burnout and outcomes. We repeated analyses using burnout percentages based on yearly AES and on MHPS separately to assess consistency. For each year, we estimated raw and covariate adjusted facility-level burnout effect on each of the four MH-SAIL composite outcomes. For meaningful interpretations of the burnout regression coefficient, we divided facility burnout percentage by 5. A one-unit increment in burnout corresponded to a 5% increment in burnout. In adjusted models, we included as covariates facility complexity, rurality, staffing ratio, and productivity. Finally, we pooled data across years and obtained a summary burnout effect on each MH-SAIL outcome (the four composite measures and six composite experience of care measures) using generalized linear models with generalized estimating equations (GEE) to account for repeated data over years within each facility, adjusting for covariates and year.
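To make the lagged design concrete, the sketch below pairs each facility's prior year burnout with its subsequent year outcome, rescales burnout to 5-point units, and computes a responder-weighted slope. It is a deliberately simplified, unadjusted single-predictor version with invented data and hypothetical names. The adjusted models and the pooled GEE analysis described above would need a statistical package and are not reproduced here.

```python
from typing import Dict, List, Tuple

Key = Tuple[str, int]  # (facility id, year); a hypothetical data layout
Row = Tuple[float, float, float]  # (burnout in 5-point units, outcome, weight)


def lagged_rows(burnout_pct: Dict[Key, float],
                outcome: Dict[Key, float],
                n_responders: Dict[Key, int]) -> List[Row]:
    """Pair prior year burnout with the same facility's next year outcome."""
    rows: List[Row] = []
    for (facility, year), pct in sorted(burnout_pct.items()):
        target = (facility, year + 1)
        if target in outcome:
            weight = float(n_responders[(facility, year)])
            rows.append((pct / 5.0, outcome[target], weight))
    return rows


def weighted_slope(rows: List[Row]) -> float:
    """Weighted least squares slope for a single predictor with an intercept."""
    total_w = sum(w for _, _, w in rows)
    x_bar = sum(w * x for x, _, w in rows) / total_w
    y_bar = sum(w * y for _, y, w in rows) / total_w
    s_xy = sum(w * (x - x_bar) * (y - y_bar) for x, y, w in rows)
    s_xx = sum(w * (x - x_bar) ** 2 for x, _, w in rows)
    return s_xy / s_xx


# Invented data: 2014 burnout percentages and 2015 domain scores.
burnout = {("A", 2014): 30.0, ("B", 2014): 40.0, ("C", 2014): 35.0}
scores = {("A", 2015): -0.1, ("B", 2015): -0.3, ("C", 2015): -0.2}
responders = {("A", 2014): 20, ("B", 2014): 50, ("C", 2014): 30}

rows = lagged_rows(burnout, scores, responders)
slope = weighted_slope(rows)  # change in outcome per 5-point higher burnout
```

Dividing the burnout percentage by 5 before fitting means the slope reads directly as the change in the outcome per 5-point difference in facility burnout.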

RESULTS

Table 1 summarizes facility productivity and staffing ratio, burnout levels in AES and in MHPS respondents, and MH-SAIL scores by year. Facilities were mostly urban (88%), and 66% had high complexity, 13% medium complexity, and 20% low complexity. Annual burnout levels in AES respondents fluctuated from 32% in 2018 to 38% in 2017; weighted yearly burnout levels did not differ notably from the unweighted levels. Yearly burnout levels in MHPS responders also fluctuated across years. Burnout levels between AES and MHPS surveys differed somewhat within the same year, with burnout levels reported in MHPS higher by over 4% in 2014, 2018, and 2019.

MH-SAIL scores of 0 reflect no change from last year, positive values represent improvement, and negative values represent worsening. Facility scores showed wider ranges for subjective experiences of care than the objective scores, suggesting that facility-level experiences of care showed greater fluctuations from quarter to quarter than objective measures.

Table 2 presents regression coefficients for burnout predicting each MH-SAIL score and the overall mental health score by year, adjusting for facility characteristics (unadjusted analyses appear in Appendix Table 2). For AES and MHPS, facility burnout had a significant negative relationship with facility experiences of care, and inconsistent, nonsignificant relationships across years for continuity of care and population coverage. During 2015–2019, coefficients of AES burnout predicting facility experiences of care ranged from −0.10 in year 2018 to −0.18 in year 2019, and the coefficients of MHPS burnout ranged from −0.11 in year 2015 to −0.20 in year 2017; negative coefficients indicated worsening facility experiences of care compared to the prior year with higher facility burnout among BHPs. The AES burnout coefficient of −0.10 in year 2018 indicated that facilities with 5% higher burnout among BHPs in the prior year had an estimated 0.10 standard deviation worse facility experience of care relative to the prior year last quarter of that facility. Burnout coefficients also remained significantly negative across all study years for the overall mental health score, which represents an average of all three domains.
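As a worked reading of these coefficients, the sketch below converts a coefficient expressed per 5-point burnout difference into an expected change in standard deviation units. It assumes that "5%" means 5 percentage points and that the relationship is linear over the range considered; both are assumptions, and the function name is hypothetical.

```python
def expected_sd_change(coef_per_5_points: float, burnout_difference_points: float) -> float:
    """Expected outcome change (SD units) for a given burnout difference.

    Assumes a linear relationship and a coefficient scaled per 5 percentage points.
    """
    return coef_per_5_points * burnout_difference_points / 5


# The 2018 AES coefficient from the text, applied to a 5-point difference.
change_for_5_points = expected_sd_change(-0.10, 5)
```

A negative result indicates worse facility experiences of care relative to that facility's prior year last quarter.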

Pooled across years, 5% higher facility-level burnout in AES and MHPS was associated with 0.05 and 0.09 standard deviation worse facility experiences of care relative to the prior year last quarter, respectively. The pooled AES and MHPS burnout coefficients were somewhat attenuated relative to the corresponding annual estimates but remained highly significant (Table 2 and Figs. 1 and 2). We found no significant relationship between AES burnout and continuity of care (coefficient = −0.0009; p = 0.91) or between AES burnout and population coverage (coefficient = −0.01; p = 0.11) in the pooled analysis. Similarly, we did not find relationships between MHPS burnout and continuity of care or between MHPS burnout and population coverage.

Pooled multivariable GEE models with facility clusters and control for facility characteristics: Experiences of care. X-axis: Burnout measure. Y-axis: Prior year burnout slope. Lines: green, AES burnout; orange, MHPS burnout. Abbreviations: AES, All Employee Survey; MHPS, Mental Health Provider Survey. Adjusted for complexity, rurality, staff ratio, productivity. Experiences of care is composed of (1) Veteran access experience, (2) Veteran MH care experience; (3) provider experience, quality care; (4) provider experience, collaboration; (5) provider experience, access; (6) provider job experience.

Figure 1 illustrates relationships between prior year burnout and MH-SAIL metrics based on the pooled analysis, where the negative coefficient appeared larger in magnitude for the subjective domain of experience of care than for the two objective domains, for both AES and MHPS burnout. Figure 2 demonstrates that burnout did not affect Veteran satisfaction but did negatively affect provider satisfaction within the experiences of care domain.

DISCUSSION

In a sizable study of burnout among BHPs over time in one of the largest mental health systems nationally, we found higher prior year facility-level burnout associated with lower scores on subjective but not objective measures of quality of mental health care. The finding corresponds to facilities with higher burnout showing a negative change in provider but not patient experiences of care relative to the prior year, with larger effects in the relationship between MHPS burnout and provider experience of care than in the similar relationship in the AES burnout analyses. Differences in magnitudes of results in the two surveys may reflect variation in the respondent pools in each measure. Further, since the analyses accounted for variation that could have occurred due to differences in facility complexity, staffing, rurality, and productivity, future work will need to identify other explanations for why providers who feel burned out perceive a decrease in the quality of care they provide and how health systems can respond effectively.

This work adds to the literature on the relationship between burnout and patient outcomes. One review focused on objectively measured outcomes, including quality of care and medical errors, found no significant relationship in six studies and negative relationships between burnout and patient outcomes in four studies (namely less effective provider-patient communication, more referrals, increased standardized mortality ratio, and increased hospitalizations for ambulatory care sensitive conditions).30 Other researchers suggested that when providers conceptualize negative outcomes, they include factors that coders consider to be process variables instead of outcome variables.31, 32

Intervention studies support the limited impact of burnout on objective patient outcomes. One mixed method, randomized, comparative effectiveness study tested two competing approaches to improve care, one addressing clinician burnout and the other addressing how clinicians interact with consumers, in community mental health centers. They found no difference in effectiveness between interventions on burnout, patient-centered processes, or other outcomes.33 Another study found that work-life interventions improving clinician satisfaction and well-being do not reduce errors or improve quality, suggesting a need for longer, more focused interventions to produce meaningful improvements in patient care.34

Our findings combined with the literature suggest an uncertain future for studies and science in this area. How should the field address the seemingly incongruent findings that provider burnout may not negatively affect patient outcomes, when accounting for staffing and productivity levels, even while it harms provider experiences? Others indicate that quality of care remains preserved at great personal cost to providers.11 Across 5 years of data, greater BHP burnout in a year had an association with poorer changes in experience of care in the following year. This suggests downstream effects of burnout not immediately apparent. Even if burnout does not have a consistent association with all patient outcomes, providers’ experiences of care matter.

Limitations

This study has limitations. We examined how facility-level provider burnout influenced facility-level provider and patient experiences. We did not have individual-level patient or provider data to assess the relationship between an individual provider’s burnout and a specific set of patients and their outcomes, so we cannot know if a particular provider treated a particular patient. We did not include data from after the onset of the COVID-19 pandemic, so we do not know how the relationships between BHP burnout and outcome measures changed in that context. We did not conduct an analysis of the individual MH-SAIL measures, which could provide a more granular view of the relationship between burnout and individual outcomes. Not every VHA facility provides all relevant data sources; missing facilities could have different provider and patient experiences and outcomes than the included facilities. Finally, objective measures capture receipt of service rather than quality of care; subjective measures may capture a different dimension of quality of services provided and received. Despite limitations, two similar but distinct surveys yielded nearly identical results, increasing robustness of identified associations.

CONCLUSION

BHP burnout negatively affected subjective provider outcomes. Our findings align with others suggesting providers may ensure high-quality patient care, even with a significant impact on provider well-being.11 That burnout may affect providers’ experiences of care remains a meaningful finding and consideration for future policies and interventions.

Data Availability

The datasets generated and/or analyzed during the current study are available from the corresponding author on reasonable request.

References

Bodenheimer T, Sinsky C. From triple to quadruple aim: care of the patient requires care of the provider. Ann Fam Med. 2014;12(6):573-6. doi:https://doi.org/10.1370/afm.1713

Rathert C, Williams ES, Linhart H. Evidence for the Quadruple Aim: A Systematic Review of the Literature on Physician Burnout and Patient Outcomes. Med Care. 2018;56(12):976-84. doi:https://doi.org/10.1097/Mlr.0000000000000999

Williams ES, Rathert C, Buttigieg SC. The Personal and Professional Consequences of Physician Burnout: A Systematic Review of the Literature. Med Care Res Rev. 2019. doi:https://doi.org/10.1177/1077558719856787

Ross J. The Exacerbation of Burnout During COVID-19: A Major Concern for Nurse Safety. J PeriAnesthesia Nurs. 2020;35(4):439-40. doi:https://doi.org/10.1016/j.jopan.2020.04.001

Kruse CS, Mileski M, Dray G, Johnson Z, Shaw C, Shirodkar H. Physician Burnout and the Electronic Health Record Leading Up to and During the First Year of COVID-19: Systematic Review. J Med Internet Res. 2022;24(3):e36200. doi:https://doi.org/10.2196/36200

Tawfik DS, Scheid A, Profit J, Shanafelt T, Trockel M, Adair KC, et al. Evidence Relating Health Care Provider Burnout and Quality of Care: A Systematic Review and Meta-analysis. Ann Intern Med. 2019;171(8):555-67. doi:https://doi.org/10.7326/M19-1152

Argentero P, Dell'Olivo B, Ferretti MS, Dialysis WGOB. Staff burnout and patient satisfaction with the quality of dialysis care. Am J Kidney Dis. 2008;51(1):80-92. doi:https://doi.org/10.1053/j.ajkd.2007.09.011

Leiter MP, Harvie P, Frizzell C. The correspondence of patient satisfaction and nurse burnout. Soc Sci Med. 1998;47(10):1611-7. doi:https://doi.org/10.1016/S0277-9536(98)00207-X

Bakker AB, Demerouti E, Sanz-Vergel AI. Burnout and work engagement: The JD–R approach. Ann Rev Organ Psychol Organ Behav. 2014;1(1):389-411.

Williams ES, Manwell LB, Konrad TR, Linzer M. The relationship of organizational culture, stress, satisfaction, and burnout with physician-reported error and suboptimal patient care: Results from the MEMO study. Health Care Manag Rev. 2007;32(3):203-12. doi:https://doi.org/10.1097/01.hmr.0000281626.28363.59

Casalino LP, Li J, Peterson LE, Rittenhouse DR, Zhang M, O’Donnell EM, et al. Relationship Between Physician Burnout And The Quality And Cost Of Care For Medicare Beneficiaries Is Complex: Study examines the relationship between physician burnout and the quality and cost of care for Medicare beneficiaries. Health Aff. 2022;41(4):549-56.

Chung S, Dillon EC, Meehan AE, Nordgren R, Frosch DL. The Relationship Between Primary Care Physician Burnout and Patient-Reported Care Experiences: a Cross-sectional Study. J Gen Intern Med. 2020;35(8):2357-64. doi:https://doi.org/10.1007/s11606-020-05770-w

Dewa CS, Loong D, Bonato S, Trojanowski L. The relationship between physician burnout and quality of healthcare in terms of safety and acceptability: a systematic review. BMJ Open. 2017;7(6):e015141. doi:https://doi.org/10.1136/bmjopen-2016-015141

Mohr DC, Swamy L, Wong ES, Mealer M, Moss M, Rinne ST. Critical Care Nurse Burnout in Veterans Health Administration: Relation to Clinician and Patient Outcomes. Am J Crit Care. 2021;30(6):435-42. doi:https://doi.org/10.4037/ajcc2021187

Panagioti M, Geraghty K, Johnson J, Zhou A, Panagopoulou E, Chew-Graham C, et al. Association Between Physician Burnout and Patient Safety, Professionalism, and Patient Satisfaction: A Systematic Review and Meta-analysis. JAMA Intern Med. 2018;178(10):1317-30. doi:https://doi.org/10.1001/jamainternmed.2018.3713

Hall LH, Johnson J, Watt I, Tsipa A, O'Connor DB. Healthcare Staff Wellbeing, Burnout, and Patient Safety: A Systematic Review. PLoS One. 2016;11(7):e0159015. doi:https://doi.org/10.1371/journal.pone.0159015

Halbesleben JRB, Rathert C. Linking physician burnout and patient outcomes: Exploring the dyadic relationship between physicians and patients. Health Care Manag Rev. 2008;33(1):29-39.

Zivin K, Kononowech J, Boden M, Abraham K, Harrod M, Sripada RK, et al. Predictors and Consequences of Veterans Affairs Mental Health Provider Burnout: Protocol for a Mixed Methods Study. JMIR Res Protocol. 2020;9(12):e18345. doi:https://doi.org/10.2196/18345

Delgadillo J, Saxon D, Barkham M. Associations between therapists' occupational burnout and their patients' depression and anxiety treatment outcomes. Depression Anxiety. 2018;35(9):844-50. doi:https://doi.org/10.1002/da.22766

Zivin K, Chang MM, Van T, Osatuke K, Boden M, Sripada RK, et al. Relationships between work-environment characteristics and behavioral health provider burnout in the Veterans Health Administration. Health Serv Res. 2022;57(Supplement 1):83-94. doi:https://doi.org/10.1111/1475-6773.13964

Osatuke K, Draime J, Moore SC, Ramsel D, Meyer A, Barnes S, et al. Organization development in the Department of Veterans Affairs. The Praeger Handbook of Veteran’s Health: History, Challenges, Issues, and Developments Volume IV: Future Directions in Veterans Healthcare 2012. p. 21–76. https://www.researchgate.net/publication/297270678_Organization_development_in_the_Department_of_Veterans_Affairs

Schmidt EM, Krahn DD, McGuire MH, Tavakoli S, Wright DM, Solares HE, et al. Using organizational and clinical performance data to increase the value of mental health care. Psychol Serv. 2017;14(1):13-22.

Lemke S, Boden MT, Kearney LK, Krahn DD, Neuman MJ, Schmidt EM, et al. Measurement-based management of mental health quality and access in VHA: SAIL Mental Health Domain. Psychol Serv. 2017;14(1):1-12.

Boden MT, Smith CA, Klocek JW, Trafton JA. Mental Health Treatment Quality, Access, and Satisfaction: Optimizing Staffing in an Era of Fiscal Accountability. Psychiatr Serv (Washington, D.C.). 2019;70(3):168-75. doi:https://doi.org/10.1176/appi.ps.201800229

Boden M, Smith CA, Trafton JA. Investigation of population-based mental health staffing and efficiency-based mental health productivity using an information-theoretic approach. PLoS One. 2021;16(8):e0256268. doi:https://doi.org/10.1371/journal.pone.0256268

National Academies of Sciences, Engineering, and Medicine. Evaluation of the Department of Veterans Affairs mental health services. 2018. Report No.: 0309466601.

Rinne ST, Mohr DC, Swamy L, Blok AC, Wong ES, Charns MP. National Burnout Trends Among Physicians Working in the Department of Veterans Affairs. J Gen Intern Med. 2020;35(5):1382-8.

Kearney LK, Smith C, Kivlahan DR, Gresen RC, Moran E, Schohn M, et al. Mental health productivity monitoring in the Veterans Health Administration: Challenges and lessons learned. Psychol Serv. 2018;15(4):486-95. doi:https://doi.org/10.1037/ser0000173

Mangory KY, Ali LY, Ro KI, Tyssen R. Effect of burnout among physicians on observed adverse patient outcomes: a literature review. BMC Health Serv Res. 2021;21(1):369. doi:https://doi.org/10.1186/s12913-021-06371-x

Salyers MP, Flanagan ME, Firmin R, Rollins AL. Clinicians’ perceptions of how burnout affects their work. Psychiatr Serv (Washington, D.C.). 2015;66(2):204-7. doi:https://doi.org/10.1176/appi.ps.201400138

Rabatin J, Williams E, Baier Manwell L, Schwartz MD, Brown RL, Linzer M. Predictors and Outcomes of Burnout in Primary Care Physicians. J Prim Care Community Health. 2016;7(1):41-3. doi:https://doi.org/10.1177/2150131915607799

Salyers MP, Garabrant JM, Luther L, Henry N, Fukui S, Shimp D, et al. A Comparative Effectiveness Trial to Reduce Burnout and Improve Quality of Care. Adm Policy Ment Hlth. 2019;46(2):238-54. doi:https://doi.org/10.1007/s10488-018-0908-4

Linzer M, Poplau S, Brown R, Grossman E, Varkey A, Yale S, et al. Do Work Condition Interventions Affect Quality and Errors in Primary Care? Results from the Healthy Work Place Study. J Gen Intern Med. 2017;32(1):56-61. doi:https://doi.org/10.1007/s11606-016-3856-2

Funding

The VA Health Services Research and Development Service provided funding for this study (VA IIR 17–262; VA RCS 21–138).

Ethics declarations

Ethics Approval

This study was approved by the VA Ann Arbor Healthcare System Institutional Review Board (IRBNet ID 15975568).

Conflict of Interest

The authors declare that they do not have a conflict of interest.

Additional information

Prior Presentations

VA Program on Access, Community Care, and Specialty Care Services (ProACCeSS): April 2022.

Cite this article

Zivin, K., Van, T., Osatuke, K. et al. Behavioral Health Provider Burnout and Mental Health Care in the Veterans Health Administration. J GEN INTERN MED 38, 2254–2261 (2023). https://doi.org/10.1007/s11606-023-08235-y
