Abstract

Purpose

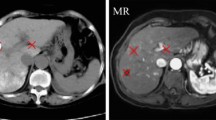

Cross-sequence magnetic resonance image (MRI) registration and segmentation are two essential steps in a variety of medical image analysis tasks and have attracted considerable research interest. However, they remain challenging because of the domain shift between different sequences. This study aims to propose a novel method based on disentangled representations, latent shape image learning (LSIL), for cross-sequence image registration and segmentation.

Methods

Images from different sequences were first decomposed into a shared, domain-invariant shape space and a domain-specific appearance space using an unsupervised image-to-image translation approach. A latent shape image learning model was then built on the disentangled shape representations to generate latent shape images. A series of cross-sequence image registration and segmentation experiments was performed to verify the validity of the method qualitatively and quantitatively. The Dice similarity coefficient (DSC) and the 95th percentile Hausdorff distance (HD95) were adopted as evaluation metrics.
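The abstract does not name the translation network. Assuming a MUNIT-style model (Huang et al. 2018, "Multimodal unsupervised image-to-image translation"), which uses exactly this split into a shared content space (here: shape) and domain-specific style spaces (here: appearance), the decomposition and its training objective can be sketched as follows. $E_i^{c}$, $E_i^{s}$, $G_i$ and $D_i$ denote the shape encoder, appearance encoder, decoder and discriminator for sequence $i \in \{1, 2\}$, and $\lambda_x, \lambda_c, \lambda_s$ are loss weights; this notation is MUNIT's and is an assumption, not necessarily the authors' formulation. The latent shape image learning step that LSIL adds on top is not covered by these equations.

```latex
\begin{aligned}
c_i &= E_i^{c}(x_i), \qquad x_{1\to 2} = G_2(c_1, s_2), \qquad s_2 \sim q(s_2) = \mathcal{N}(0, I) \\
\mathcal{L}_{\mathrm{recon}}^{x_1} &= \mathbb{E}_{x_1}\!\left[\lVert G_1\big(E_1^{c}(x_1), E_1^{s}(x_1)\big) - x_1 \rVert_1\right] \\
\mathcal{L}_{\mathrm{recon}}^{c_1} &= \mathbb{E}_{c_1, s_2}\!\left[\lVert E_2^{c}\big(G_2(c_1, s_2)\big) - c_1 \rVert_1\right] \\
\mathcal{L}_{\mathrm{recon}}^{s_2} &= \mathbb{E}_{c_1, s_2}\!\left[\lVert E_2^{s}\big(G_2(c_1, s_2)\big) - s_2 \rVert_1\right] \\
\mathcal{L}_{\mathrm{GAN}}^{x_2} &= \mathbb{E}_{c_1, s_2}\!\left[\log\big(1 - D_2(G_2(c_1, s_2))\big)\right] + \mathbb{E}_{x_2}\!\left[\log D_2(x_2)\right] \\
\min_{E_1, E_2, G_1, G_2}\; \max_{D_1, D_2}\;\; &\mathcal{L}_{\mathrm{GAN}}^{x_1} + \mathcal{L}_{\mathrm{GAN}}^{x_2}
  + \lambda_x\big(\mathcal{L}_{\mathrm{recon}}^{x_1} + \mathcal{L}_{\mathrm{recon}}^{x_2}\big)
  + \lambda_c\big(\mathcal{L}_{\mathrm{recon}}^{c_1} + \mathcal{L}_{\mathrm{recon}}^{c_2}\big)
  + \lambda_s\big(\mathcal{L}_{\mathrm{recon}}^{s_1} + \mathcal{L}_{\mathrm{recon}}^{s_2}\big)
\end{aligned}
```

The terms for the opposite direction ($\mathcal{L}_{\mathrm{recon}}^{x_2}$, $\mathcal{L}_{\mathrm{recon}}^{c_2}$, $\mathcal{L}_{\mathrm{recon}}^{s_1}$, $\mathcal{L}_{\mathrm{GAN}}^{x_1}$) are defined symmetrically by swapping indices 1 and 2. Because the shape codes $c_1$ and $c_2$ of both sequences live in one shared space, they give a sequence-independent representation on which registration and segmentation can operate.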

Results

The performance of the method was evaluated on two datasets comprising a total of 50 MRIs. The experimental results showed the superiority of the proposed framework over state-of-the-art cross-sequence registration and segmentation approaches, with mean DSCs of 0.711 in cross-sequence registration and 0.867 in cross-sequence segmentation.
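The abstract reports DSC and HD95 but not how they were computed. Below is a minimal NumPy sketch of one common way to compute both for binary masks, plus the per-structure average behind a "mean DSC". The function names (`dice`, `surface_voxels`, `hd95`, `mean_dice`), the empty-mask convention, the use of face-adjacent surface voxels, and pooling both directed surface-distance sets before taking the 95th percentile (a convention also used by MedPy's `hd95`) are assumptions, not the authors' evaluation code.

```python
import numpy as np


def dice(pred: np.ndarray, ref: np.ndarray) -> float:
    """Dice similarity coefficient 2|A ∩ B| / (|A| + |B|) for two binary masks."""
    pred, ref = pred.astype(bool), ref.astype(bool)
    denom = int(pred.sum()) + int(ref.sum())
    if denom == 0:
        return 1.0  # assumption: two empty masks count as perfect agreement
    return 2.0 * int(np.logical_and(pred, ref).sum()) / denom


def surface_voxels(mask: np.ndarray) -> np.ndarray:
    """Indices of foreground voxels with at least one face-adjacent background neighbour."""
    mask = mask.astype(bool)
    padded = np.pad(mask, 1, mode="constant", constant_values=False)
    inner = tuple(slice(1, -1) for _ in range(mask.ndim))
    interior = mask.copy()
    for axis in range(mask.ndim):
        for shift in (-1, 1):
            # wrapped-around values land in the padding border, which the crop discards
            interior &= np.roll(padded, shift, axis=axis)[inner]
    return np.argwhere(mask & ~interior)


def hd95(pred: np.ndarray, ref: np.ndarray, spacing=None) -> float:
    """95th percentile of the pooled symmetric surface distances (one common HD95 convention)."""
    p = surface_voxels(pred).astype(float)
    r = surface_voxels(ref).astype(float)
    if len(p) == 0 or len(r) == 0:
        raise ValueError("HD95 is undefined when either mask is empty")
    if spacing is not None:  # convert voxel indices to physical units, e.g. mm
        p *= np.asarray(spacing, dtype=float)
        r *= np.asarray(spacing, dtype=float)
    # brute-force pairwise distances: fine for small examples, memory-hungry for full 3D volumes
    d = np.linalg.norm(p[:, None, :] - r[None, :, :], axis=-1)
    pooled = np.concatenate([d.min(axis=1), d.min(axis=0)])
    return float(np.percentile(pooled, 95))


def mean_dice(pred_labels: np.ndarray, ref_labels: np.ndarray, labels) -> float:
    """Average the per-structure DSC over the given label values."""
    return float(np.mean([dice(pred_labels == k, ref_labels == k) for k in labels]))


if __name__ == "__main__":
    ref = np.zeros((10, 10), dtype=int)
    ref[2:6, 2:6] = 1  # structure 1: a 4x4 square
    ref[7:9, 7:9] = 2  # structure 2: a 2x2 square
    pred = ref.copy()
    pred[2:6, 2:6] = 0
    pred[3:7, 2:6] = 1  # structure 1 shifted down by one voxel
    print(dice(pred == 1, ref == 1))  # 0.75
    print(hd95(pred == 1, ref == 1))  # 1.0
    print(mean_dice(pred, ref, labels=[1, 2]))  # 0.875
```

Other HD95 variants take the larger of the two directed 95th percentiles instead of pooling them, so HD95 values are only directly comparable when computed under the same convention.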

Conclusion

We proposed a novel method based on representation disentangling to solve the cross-sequence registration and segmentation problem. Experimental results demonstrate the feasibility and generalizability of the generated latent shape images. The proposed method shows significant potential for use in clinical settings where some sequences are missing. The source code is available at https://github.com/wujiong-hub/LSIL.

Acknowledgements

This study was supported by the National Natural Science Foundation of China (62206093), the Natural Science Foundation of Hunan Province (2022JJ40290), the Youth Foundation of Hunan Province Department of Education (21B0619) and the Scientific Research Project of Hunan University of Arts and Science (20ZD01).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Informed consent

This article does not contain patient data.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Wu, J., Yang, Q. & Zhou, S. Latent shape image learning via disentangled representation for cross-sequence image registration and segmentation. Int J CARS 18, 621–628 (2023). https://doi.org/10.1007/s11548-022-02788-9

DOI: https://doi.org/10.1007/s11548-022-02788-9